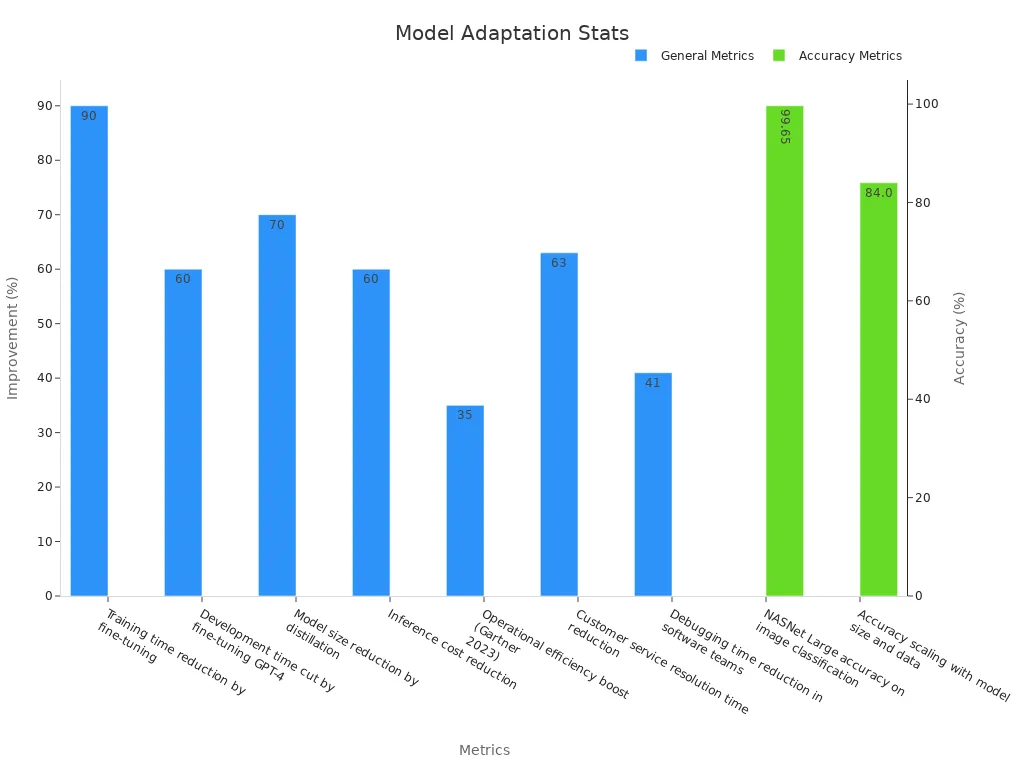

When you fine-tune a machine vision system, you start with a model that already understands how to interpret images and then adjust it for a specific task, such as image recognition tailored to your environment. Starting from a pre-trained model can make training up to 90% faster than building a model from scratch. It can also raise accuracy by 10-20% in applications such as object sorting or item detection in photos.

| Statistic Description | Value / Example |
|---|---|
| Training time reduction by fine-tuning | Up to 90% faster than training from scratch |
| Performance improvement on specific tasks | 10-20% or more increase in accuracy/performance |
| NASNet Large accuracy on image classification | 99.65% accuracy |
| Data requirement reduction | Fine-tuning with a few thousand images vs millions |

When you fine-tune a machine vision system, you can address real-world challenges like limited data and high labeling costs. For example, a fine-tuned machine vision system achieved a 75% success rate in picking up new objects, demonstrating the effectiveness of this method for tasks beyond controlled lab settings.

Key Takeaways

- Fine-tuning adapts a pre-trained machine vision model to your specific task, saving up to 90% training time and improving accuracy by 10-20%.

- Using fine-tuning helps your model handle real-world challenges like limited data, different lighting, and new object types, making it more reliable and effective.

- The fine-tuning process includes preparing quality data, adjusting the model, training with validation, and careful deployment with ongoing monitoring.

- Parameter-efficient learning and transfer learning methods let you improve models using less data and computing power, reducing costs and speeding up training.

- Choosing the right tools and frameworks, like PyTorch or TensorFlow, and following best practices ensures your fine-tuned model performs well and stays up to date.

Why Fine-Tune a Machine Vision System

Real-World Needs

You often face challenges when you use a machine vision system in real environments. The images in your dataset may look different from those used to train the original model. Lighting, camera angles, and object types can change. This is where vision fine-tuning becomes important. You can take a pre-trained model and adapt it to your own dataset, making it work better for your specific needs.

- In real-time object detection, vision fine-tuning helps models like YOLOv8 reach high precision and fast speeds. This is useful for tasks such as autonomous vehicles and security cameras.

- Medical imaging systems that use vision fine-tuning can achieve almost 99% precision and recall. This reduces the time doctors spend reading scans and improves how well they find health problems.

- Autonomous robots keep a steady 96% accuracy over long periods with vision fine-tuning, showing that these systems can work well without constant retraining.

- In industries like agriculture and manufacturing, vision fine-tuning lets you use smaller datasets. You do not need millions of images to get good results.

- If you skip vision fine-tuning, your model might fail in real-world tests. For example, an AI for diabetic retinopathy detection worked well in the lab but failed on 20% of real clinical scans because the dataset did not match real conditions.

You can see that validation and adaptation are key. Using advanced statistical methods, you can measure how well your model works on your dataset. This helps you avoid errors and improve quality.

Benefits

When you use fine-tuning, you make your machine vision system smarter and more efficient. You do not have to start learning from scratch. Instead, you use a model that already knows a lot and teach it to focus on your dataset. This saves time and resources.

Fine-tuning improves accuracy and makes your model more relevant to your task. You need less labeled data, which lowers costs. Your model becomes smaller and faster, so you get results quickly and at a lower cost. Vision fine-tuning also helps you customize your system for unique jobs, like sorting new types of products or recognizing rare objects.

Learning becomes more effective with fine-tuning. You can use semi-supervised learning to get good results even if your dataset is not fully labeled. Parameter-efficient learning methods help you keep the core knowledge of your model while boosting its performance for your dataset.

A real-world example shows that fine-tuning can improve keyword accuracy by 8% and increase user engagement. This means your system not only works better but also gives users a better experience. With vision fine-tuning, you can meet the demands of your industry and keep your machine vision system up to date.

The Process of Fine-Tuning a Computer Vision Model

Fine-tuning a computer vision model involves several important steps. You need to follow a clear process to get the best results from your deep neural network. Each step helps you adapt your model to your specific task and dataset. Let’s walk through the main stages.

Data Preparation

You start by collecting and preparing your dataset. The quality and variety of your dataset matter a lot. You want your dataset to match the real-world images your model will see. Many teams use public datasets like COCO and then add their own labels or instructions. You can also use large language models to create synthetic data or generate captions and bounding boxes. Some projects, like LLaVA-Instruct-150K, use GPT-4 to make multimodal instruction data from COCO. Others, such as StableLLaVA, combine image creation with text generation for even richer datasets.

Tip: Use data augmentation to make your dataset stronger. Data augmentation means you change your images in small ways, like flipping, rotating, or changing colors. This helps your model learn better and avoid overfitting.
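The flips and rotations mentioned in the tip can be illustrated without any imaging library. The sketch below treats an image as a nested list of pixel values; the function names (`hflip`, `rotate90`, `augment`) are made up for this example, and a real pipeline would more likely rely on an augmentation library.

```python
import random

def hflip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]

def rotate90(image):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image, rng):
    """Randomly flip and rotate; the label of the image stays the same."""
    if rng.random() < 0.5:
        image = hflip(image)
    for _ in range(rng.randrange(4)):  # 0 to 3 quarter turns
        image = rotate90(image)
    return image

image = [[1, 2],
         [3, 4]]
rng = random.Random(0)  # fixed seed so runs are repeatable
variants = [augment(image, rng) for _ in range(4)]
```

Each variant contains the same pixels in a different arrangement, which is the point: the model sees more viewpoints of the same labeled object without any new labeling work.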

You can also mix datasets or randomize the order of your data. This makes your model more robust and helps it learn to handle different situations. Accurate labeling is key. If your dataset has errors, your model will not learn the right things.

Model Adjustment

Once your dataset is ready, you adjust your model for fine-tuning. You usually start with a pre-trained deep neural network. You might replace or reset the last layer so your model can focus on your new task. For example, if you want your model to recognize new objects, you change the output layer to match your new classes.
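As a rough illustration of replacing the output layer, here is a minimal PyTorch sketch. The tiny network is only a stand-in for a real pre-trained model, and `num_new_classes = 5` is an assumed value; in practice you would load actual pre-trained weights and use your own class count.

```python
import torch
from torch import nn

# Stand-in for a pre-trained network: a feature extractor plus a 1000-class head.
# In practice you would load real pre-trained weights instead.
model = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(16, 1000),  # original output layer
)

# Freeze every existing parameter so the learned features are kept.
for param in model.parameters():
    param.requires_grad = False

# Replace the last layer with a fresh one sized for the new task.
num_new_classes = 5  # assumed number of classes in your dataset
model[-1] = nn.Linear(model[-1].in_features, num_new_classes)

# Only the new layer's weight and bias remain trainable.
trainable = [name for name, p in model.named_parameters() if p.requires_grad]
logits = model(torch.randn(2, 3, 32, 32))  # batch of two dummy RGB images
```

Freezing everything except the new layer is the most conservative choice; with more data you could unfreeze later layers as well.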

Research shows that model fine-tuning does not always improve performance just by adding more data. Sometimes, using too much data can make your model less accurate. You need to find the right balance. Try mixing different datasets and using data augmentation to help your model generalize better. You can also use parameter-efficient fine-tuning methods to save resources and speed up learning.

Note: Model fine-tuning works best when you use domain-specific data and adjust your model step by step. Always check your results and make changes as needed.

Training and Validation

Now you train your model using your prepared dataset. Training means you show your model many examples so it can learn to make better predictions. You use supervised learning, where your model learns from labeled data. You also use data augmentation during training to help your model learn from more examples.

You need to validate your model as you train. Validation means you test your model on a separate part of your dataset to see how well it is learning. You look at metrics like accuracy, precision, and recall. If your model starts to overfit, you can use techniques like early stopping or regularization.
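To make the validation metrics concrete, the following sketch computes accuracy, precision, and recall for a binary task from scratch. The labels and predictions are invented for illustration, and `binary_metrics` is a hypothetical helper rather than part of any library.

```python
def binary_metrics(y_true, y_pred):
    """Return accuracy, precision, and recall for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

# Made-up validation labels and model predictions.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
accuracy, precision, recall = binary_metrics(y_true, y_pred)
```

Here the three metrics come out differently (0.625, 0.6, and 0.75), which is why it helps to track all of them rather than accuracy alone.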

The table below shows how fine-tuning with the right recipe improved results on a robot-control benchmark and on real robots:

| Aspect | Metric / Result | Description / Impact |
|---|---|---|
| Success Rate Improvement | From 76.5% to 97.1% on LIBERO benchmark | Task performance jumps after fine-tuning with the right recipe. |
| Inference Speedup | Up to 26× throughput increase | Faster results using parallel decoding and action chunking. |
| Latency | 0.07 s (single-arm), 0.321 s (bimanual tasks) | Low latency allows high-frequency control in real robots. |
| Real-world Robot Success Rate | Up to 15% absolute improvement over baselines | Fine-tuned models outperform others in complex tasks. |
| Training Details | 50-150K gradient steps, batch size 32, 8 GPUs | Shows a practical setup for reproducible training. |

You can see that the right training and validation steps make a big difference. Fine-tuning helps your model work faster and more accurately in real-world tasks.

Deployment

After training and validation, you deploy your model. Deployment means you put your model into a real system where it can start making predictions. You need to watch key metrics like inference time (how fast your model gives answers), throughput (how many images it can process per second), and memory use.
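Inference time and throughput can be measured with a simple timing loop. The sketch below uses only the standard library; `fake_predict` is a hypothetical stand-in for your model's inference call, and the warm-up count is an arbitrary choice.

```python
import time

def benchmark(predict, images, warmup=2):
    """Return (mean latency in seconds, throughput in images per second)."""
    for image in images[:warmup]:
        predict(image)  # warm-up calls are not timed
    start = time.perf_counter()
    for image in images:
        predict(image)
    elapsed = time.perf_counter() - start
    return elapsed / len(images), len(images) / elapsed

def fake_predict(image):
    """Hypothetical stand-in for model inference."""
    return sum(image) % 10

images = [[i, i + 1, i + 2] for i in range(1000)]
latency, throughput = benchmark(fake_predict, images)
```

This measures one image at a time. If your deployment batches requests, time whole batches instead, because batching usually raises throughput at the cost of per-request latency.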

Tip: Use A/B testing or shadow mode to compare your new model with your old one. This helps you see if your fine-tuned model really works better.
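One way to picture shadow mode: the current model keeps serving answers while the candidate runs on the same inputs and is only logged. The sketch below is a simplified illustration; both "models" are hypothetical threshold functions, and real systems would log to a metrics store rather than a dictionary.

```python
def shadow_compare(current_model, candidate_model, inputs, labels):
    """Serve the current model's answers while scoring the candidate in the background."""
    stats = {"current_correct": 0, "candidate_correct": 0, "disagreements": 0}
    served = []
    for x, y in zip(inputs, labels):
        live = current_model(x)
        shadow = candidate_model(x)  # logged only, never returned to users
        served.append(live)
        stats["current_correct"] += int(live == y)
        stats["candidate_correct"] += int(shadow == y)
        stats["disagreements"] += int(live != shadow)
    return served, stats

# Hypothetical models: each flags an input as "defect" above a threshold.
current = lambda x: x > 5
candidate = lambda x: x > 3

inputs = [1, 4, 6, 8]
labels = [False, True, True, True]
served, stats = shadow_compare(current, candidate, inputs, labels)
```

In this toy run the candidate gets all four cases right while the current model misses one, and users still only ever saw the current model's answers.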

You should monitor your model after deployment. Set up dashboards to track accuracy, precision, recall, and latency. If your model’s performance drops, you can retrain it with new data. You can also use continuous learning to keep your model up to date as your dataset changes.
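A retraining trigger can be as simple as a rolling accuracy check. The following sketch assumes you eventually receive ground-truth labels for deployed predictions; the `AccuracyMonitor` class, window size, and threshold are all illustrative choices, not a standard API.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag drops."""

    def __init__(self, window=100, threshold=0.9):
        self.results = deque(maxlen=window)  # old results fall off automatically
        self.threshold = threshold

    def record(self, prediction, label):
        self.results.append(prediction == label)

    @property
    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def needs_retraining(self):
        # Wait until the window is full so a few early errors do not raise an alarm.
        full = len(self.results) == self.results.maxlen
        return full and self.accuracy < self.threshold

monitor = AccuracyMonitor(window=4, threshold=0.75)
for prediction, label in [("cat", "cat"), ("dog", "dog"), ("cat", "cat"), ("dog", "dog")]:
    monitor.record(prediction, label)
healthy = monitor.needs_retraining()   # all four recent predictions were right

for prediction, label in [("cat", "dog"), ("dog", "cat")]:
    monitor.record(prediction, label)
degraded = monitor.needs_retraining()  # only two of the last four were right
```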

Many teams use tools like MLflow or Weights & Biases to track experiments and manage versions. You can choose to deploy your model in the cloud or on edge devices, depending on your needs. Cloud deployment gives you flexibility and scalability, but you need to manage resources and costs carefully.

Note: Continuous monitoring and learning help your model stay accurate and useful over time. This keeps your system working well and gives you the best return on your investment.

Model Fine-Tuning Considerations

Data Quality

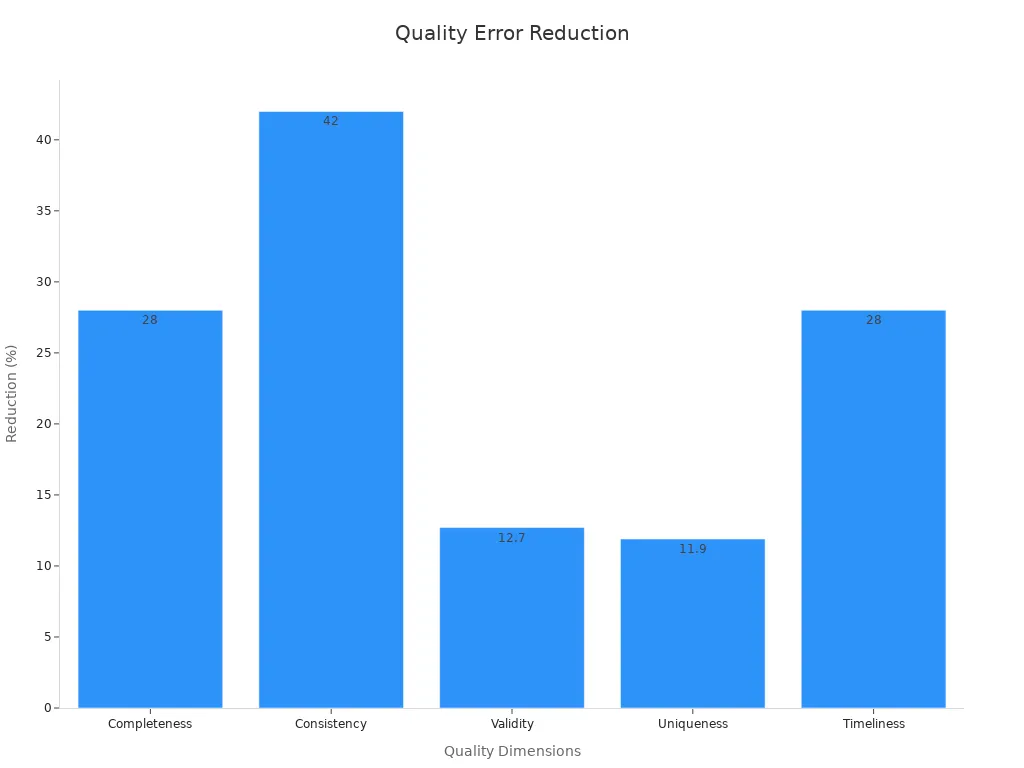

You need high-quality data for successful model fine-tuning. If your data has errors or missing information, your model may learn the wrong things. You should check your data for completeness, consistency, and accuracy. Teams often use tools to measure and clean data throughout the process. You can improve data quality by setting up rules for data entry, using automated checks, and training your team.

| Data Quality Dimension | Example Error Rate Reduction | Measurement Techniques | Best Practices |
|---|---|---|---|
| Completeness | 70% → 98% | % of filled fields | Mandatory fields, audits |
| Consistency | 42% fewer errors | Format checks | Standardization, validation |
| Validity | 13% → 0.3% | Rule validation | Automated rules, documentation |
| Uniqueness | 12% → 0.1% | Duplicate detection | Deduplication, real-time checks |
| Integrity | 34% better processing | Key validation | Database constraints, audits |
| Reliability | 99.97% reliability | Error rate trends | Monitoring, feedback loops |
| Timeliness | 28% better pricing accuracy | Update frequency | Automated refresh, delay checks |

Tip: Regular audits and automated validation help you keep error rates low and your model fine-tuning on track.
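An automated check for an image dataset might look like the sketch below, which covers three of the dimensions from the table: completeness, validity, and uniqueness. The record layout (`image` and `label` keys), the class names, and the `audit_labels` helper are assumptions made for this example.

```python
def audit_labels(records, required=("image", "label"), allowed_labels=None):
    """Report completeness, validity, and uniqueness for annotation records."""
    # Completeness: every required field is present and non-empty.
    complete = [r for r in records if all(r.get(k) not in (None, "") for k in required)]
    # Validity: the label belongs to the known set of classes.
    invalid = [r for r in complete if allowed_labels and r["label"] not in allowed_labels]
    # Uniqueness: the same image should not be listed twice.
    seen, duplicates = set(), []
    for r in complete:
        if r["image"] in seen:
            duplicates.append(r["image"])
        seen.add(r["image"])
    return {
        "completeness": len(complete) / len(records),
        "invalid_labels": len(invalid),
        "duplicate_images": duplicates,
    }

records = [
    {"image": "a.png", "label": "bolt"},
    {"image": "b.png", "label": "nut"},
    {"image": "b.png", "label": "nut"},    # duplicate entry
    {"image": "c.png", "label": ""},       # missing label
    {"image": "d.png", "label": "bollt"},  # misspelled class
]
report = audit_labels(records, allowed_labels={"bolt", "nut"})
```

Running a check like this before each training run catches labeling slips while they are still cheap to fix.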

Overfitting

Overfitting happens when your model learns details or noise from your training data that do not apply to new data. This makes your model less reliable in real-world situations. You can spot overfitting if your model performs well on training data but poorly on new data. To prevent this, use techniques like early stopping, regularization, and data augmentation. Lower learning rates also help during fine-tuning because they keep the pre-trained weights from drifting too far. Batch size affects generalization as well, so tune it against validation results rather than assuming that larger is always better.

- Overfitting increases the risk of errors and can cause your model to make bad predictions.

- Real-world problems include medical mistakes, financial losses, and unfair decisions.

- Monitoring training loss and validation loss helps you catch overfitting early.
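The pattern to watch for is training loss that keeps falling while validation loss turns upward. The sketch below applies a simple early-stopping rule to made-up loss curves; the `patience` value and the helper name are illustrative assumptions.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return (epoch where training stops, epoch with the best validation loss)."""
    best_loss, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0  # new best: reset the counter
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Invented curves: training loss keeps improving, validation loss does not.
train_losses = [0.90, 0.60, 0.45, 0.35, 0.28, 0.22, 0.18]
val_losses = [0.95, 0.70, 0.58, 0.55, 0.57, 0.61, 0.66]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=2)
```

With these numbers training stops at epoch 5 and you would keep the checkpoint from epoch 3, the last point where validation loss improved.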

Note: Parameter-efficient fine-tuning methods, such as using adapter layers, can help reduce overfitting by updating only a small part of the model.

Computational Resources

Model fine-tuning can use a lot of computing power. You need to plan for enough memory, processing speed, and storage. Benchmarks like MLPerf help you measure how well your system uses resources. You can improve efficiency by tuning hyperparameters, pruning your model, or using faster hardware.

- Use distributed computing to train your model on multiple GPUs or TPUs.

- Optimize data loading and memory use to avoid slowdowns.

- Monitor energy use and costs to keep your project affordable.

Tip: Regular benchmarking helps you find and fix bottlenecks, making your model fine-tuning faster and more efficient.

Domain Specificity

Your model works best when you fine-tune it for your specific field or task. Domain-specific fine-tuning uses data and tasks from your area, such as agriculture, healthcare, or law. You can use techniques like adapter layers or multi-task learning to help your model learn both general and specialized knowledge.

- Companies in agriculture, manufacturing, and law have improved accuracy and reduced errors by using domain-specific model fine-tuning.

- You can use continual learning to keep your model updated as your field changes.

- Parameter-efficient fine-tuning lets you adapt your model without needing huge amounts of data or computing power.

Note: Tailoring your model to your domain helps you get better results and stay ahead in your industry.

Learning Methods and Tools

Transfer Learning

You can use transfer learning to make your machine vision system smarter and faster. Transfer learning lets you start with a model that already knows how to see and understand images. You do not need to train everything from the beginning. Instead, you transfer what the model has learned from one task to another. This saves time and helps you get better results, especially when you have less data.

Transfer learning often works by freezing the early layers of a model and training only the last few layers. This way, you keep the general features and change only the parts that matter for your new task. If you have more data, you can fine-tune more layers for even better results. Studies show that deep fine-tuning helps your model adapt to new domains, while shallow fine-tuning is fast and works well with small datasets. You can also use top-tuning, which trains only a simple classifier on top of pre-trained features. This method gives you almost the same accuracy as full fine-tuning but is much faster.

- Deep fine-tuning improves adaptation to new tasks.

- Shallow fine-tuning is efficient for small datasets.

- Top-tuning reduces training time by up to 100 times.

Transfer learning reduces overfitting and boosts performance on real-world tasks. You can use transfer learning for many jobs, like medical imaging or object detection. Adjusting learning rates for each layer helps you balance speed and accuracy. Transfer learning is a key part of modern machine vision.
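One common way to adjust learning rates per layer is to give the newest layer the full rate and shrink it for each earlier layer, so general features change slowly. The sketch below shows the arithmetic only; the layer names, base rate, and decay factor are assumed values, and in a framework such as PyTorch the resulting rates would typically be passed to the optimizer as per-parameter groups.

```python
def layerwise_learning_rates(layer_names, base_lr=1e-3, decay=0.5):
    """Give the last layer base_lr and shrink the rate for each earlier layer."""
    depth = len(layer_names)
    return {
        name: base_lr * decay ** (depth - 1 - i)
        for i, name in enumerate(layer_names)
    }

layers = ["stem", "block1", "block2", "head"]  # hypothetical layer names, earliest first
rates = layerwise_learning_rates(layers)
```

With these settings the head trains at 1e-3 while the stem trains at one eighth of that rate.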

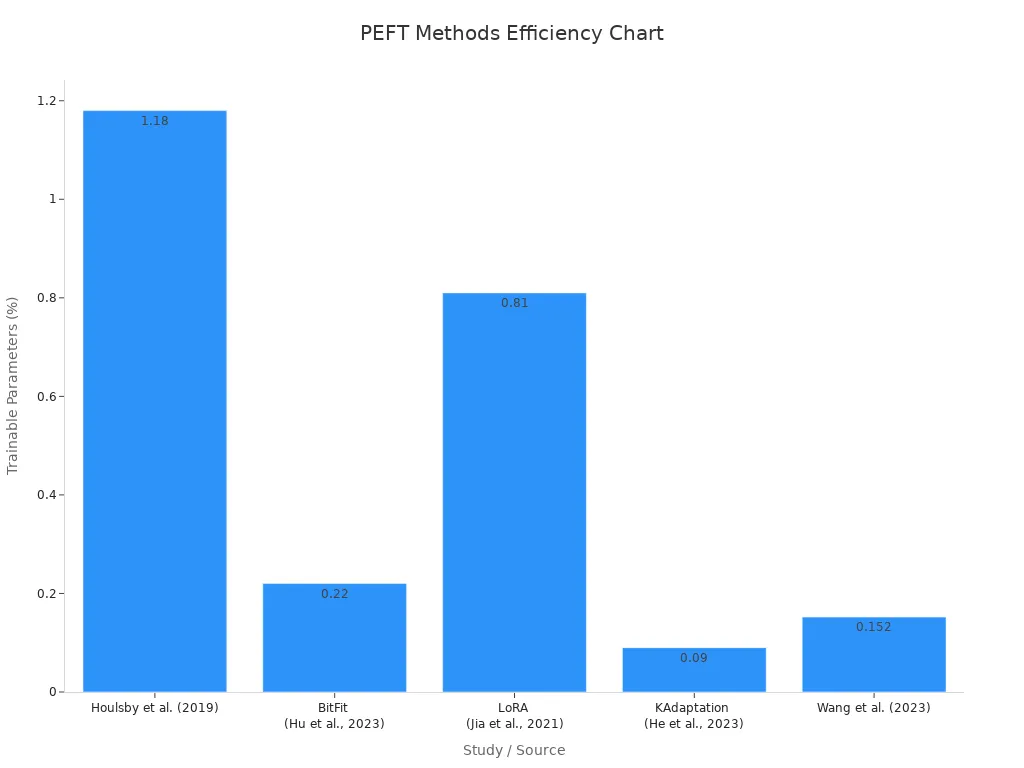

Parameter-Efficient Learning

Parameter-efficient learning helps you fine-tune large models without using too much memory or time. You only update a small part of the model, which saves resources and keeps your system fast. Many studies show that you can train less than 1% of the model’s parameters and still get great results.

| Study / Source | PEFT Method(s) | Percentage of Trainable Parameters | Performance Impact / Metrics | Application Domain |
|---|---|---|---|---|
| Houlsby et al. (2019) | Adapter | ~1.18% | Maintains accuracy close to full fine-tuning | General vision models |
| BitFit (Ben Zaken et al., 2022) | BitFit | 0.22% | Comparable performance to full fine-tuning | General vision models |
| LoRA (Hu et al., 2021) | LoRA | 0.81% | High effectiveness, often combined with BitFit for best results | General vision models |
| KAdaptation (He et al., 2023) | KAdaptation | 0.09% | Maintains high accuracy with minimal parameter updates | Vision Transformer (ViT) |
| Dutt et al. (2023) | Multiple PEFT methods | Varies | Up to 22% performance gain in medical imaging tasks | Medical image analysis |
| Wang et al. (2023) | Contrastive learning + PEFT | 0.152% | Comparable to GPT-4 on biomedical QA tasks | Biomedical QA |
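Percentages this small follow from simple arithmetic. As an illustration, LoRA leaves a weight matrix frozen and trains two thin matrices whose product has the same shape. The sketch below counts parameters for one layer; the 768-wide layer and rank 4 are assumed values, not figures from the studies in the table.

```python
def lora_trainable_fraction(d_in, d_out, rank):
    """Compare LoRA's trainable parameters with a full d_out x d_in weight matrix."""
    full = d_in * d_out            # parameters in the frozen weight matrix
    lora = rank * (d_in + d_out)   # parameters in the two low-rank factors
    return lora, full, lora / full

# Assumed sizes: a 768 x 768 projection adapted with rank 4.
lora_params, full_params, fraction = lora_trainable_fraction(768, 768, rank=4)
```

For this layer the low-rank factors hold 6,144 parameters against 589,824 in the full matrix, or roughly 1%.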

Prompt tuning and adapter tuning are two popular parameter-efficient learning methods. Prompt tuning changes the input to guide the model, while adapter tuning adds small modules inside the model. Both methods help you avoid overfitting and make your model work well with less data. You can use parameter-efficient learning for vision-language models and other tasks where you want to save time and resources.
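To show what "small modules inside the model" can look like, here is a minimal bottleneck adapter in PyTorch. It is a sketch in the spirit of adapter tuning, not the exact module from any paper; the 256-wide frozen layer and the bottleneck size of 8 are assumptions chosen to keep the example small.

```python
import torch
from torch import nn

class Adapter(nn.Module):
    """Bottleneck adapter: down-project, non-linearity, up-project, residual add."""

    def __init__(self, dim, bottleneck=8):
        super().__init__()
        self.down = nn.Linear(dim, bottleneck)
        self.up = nn.Linear(bottleneck, dim)
        # Zero the up-projection so the adapter starts as an identity mapping.
        nn.init.zeros_(self.up.weight)
        nn.init.zeros_(self.up.bias)

    def forward(self, x):
        return x + self.up(torch.relu(self.down(x)))

# Hypothetical frozen layer standing in for part of a pre-trained model.
frozen = nn.Linear(256, 256)
for p in frozen.parameters():
    p.requires_grad = False

block = nn.Sequential(frozen, Adapter(256))
total = sum(p.numel() for p in block.parameters())
trainable = sum(p.numel() for p in block.parameters() if p.requires_grad)

x = torch.randn(4, 256)
out = block(x)  # equals frozen(x) until the adapter is trained
```

Only the adapter's 4,360 parameters are trainable out of 70,152 in the block, and because the adapter starts as an identity mapping, adding it does not change the pre-trained model's behavior before training begins.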

Frameworks and Libraries

You need the right tools to make transfer learning and parameter-efficient learning easy. PyTorch is one of the most popular frameworks for transfer learning. Over half of research teams use PyTorch because it is fast and flexible. PyTorch builds its computation graph dynamically as your code runs, so you can change your model on the fly, which helps you experiment and debug quickly. You can use GPU acceleration and distributed training to handle large datasets.

| Metric | PyTorch | TensorFlow | Impact |
|---|---|---|---|
| Training Time | Faster (~31% faster execution) | Slower | PyTorch enables quicker model training, beneficial for fine-tuning tasks requiring rapid iteration. |
| RAM Usage | Higher (~3.5 GB) | Lower (~1.7 GB) | PyTorch trades off higher memory consumption for speed. |
| Validation Accuracy | Comparable (~78%) | Comparable (~78%) | Both frameworks achieve similar accuracy, indicating PyTorch’s speed advantage does not compromise performance. |

PyTorch works well with cloud platforms like Microsoft Azure. Many companies use PyTorch to build and deploy AI models at scale. You can also use TensorFlow, which is good for production systems. Both frameworks support transfer learning and parameter-efficient learning. You can find many libraries and fine-tuning API tools to help you automate your workflow. Multimodal models and automation tools make it easier to use transfer learning for images, text, and more.

Tip: Try different frameworks and libraries to find what works best for your learning tasks. Use automation tools to speed up your transfer learning projects.

When you fine-tune a machine vision model, you unlock higher accuracy and reliability for your specific tasks. Studies show that this approach can boost accuracy by up to 15% and even increase defect detection rates by 90%. Fine-tuning can improve vision capabilities in healthcare, finance, and more. If you want to get started, try these steps:

- Prepare clean, well-labeled data.

- Choose a pre-trained model that fits your needs.

- Use best practices like freezing layers and regular validation.

- Explore tutorials and courses for hands-on guidance.

Keep learning and experimenting to see how fine-tuning can help your projects succeed.

FAQ

What is the difference between fine-tuning and training from scratch?

Fine-tuning uses a model that already knows how to see images. You only teach it new details for your task. Training from scratch means you start with no knowledge and need much more data and time.

How much data do you need for fine-tuning?

You can fine-tune with a few thousand labeled images. You do not need millions. The more your data matches your real-world task, the better your results.

Can you fine-tune a model for more than one task?

Yes! You can fine-tune a model for several tasks by using multi-task learning. This helps your model learn different jobs at the same time.

Tip: Use separate output layers for each task to keep results clear.

What tools help you fine-tune machine vision models?

You can use tools like PyTorch, TensorFlow, and MLflow. These tools help you train, test, and track your models. Many teams use cloud platforms for faster results.
