Sensor pixel resolution in a machine vision system describes how many pixels a sensor uses to capture an image. Higher resolution lets the system resolve smaller details, which gives sharper images and more accurate measurements. For example, because resolving one line pair takes at least two pixels, a 768×576 pixel camera can resolve at most 384×288 line pairs, and that limit bounds how small a defect or feature the system can detect and measure. In 2025, rapid advances in high-resolution sensors and AI are driving demand for precise inspection, as the table below shows:

| Aspect | Details |
|---|---|
| Market Size (2025) | USD 13.52 billion |
| Key Growth Drivers | High-resolution, 3D vision, AI integration |
| Defect Detection Accuracy | Up to 99% with AI-driven systems |

Choosing the right sensor pixel resolution for a machine vision system depends on the level of detail each application needs.

Key Takeaways

- Higher sensor pixel resolution lets machine vision systems see smaller details, improving image sharpness and measurement accuracy.

- Choosing the right pixel size balances detail and sensitivity; larger pixels capture more light for clearer images, while smaller pixels increase resolution but may add noise.

- Sensor size and pixel arrangement affect image quality; larger sensors and monochrome pixels often provide better clarity and sensitivity.

- Good lighting, matching lenses, and proper calibration are essential to get the best results from a machine vision system.

- New technologies and AI integration in 2025 enhance defect detection and speed, but selecting the right sensor depends on the specific application needs and environment.

Sensor Pixel Resolution Basics

Definition

Sensor pixel resolution in machine vision systems describes the ability of a sensor to capture fine details. Technically, it refers to the spatial frequency limit set by the pixel size on the sensor. This limit is measured in line pairs per millimeter (lp/mm). The Nyquist frequency, which is the highest spatial frequency the sensor can resolve, equals one line pair for every two pixels. The formula for sensor resolution is:

sensor resolution (lp/mm) = 1000 / (2 × pixel size in µm)

Here, the pixel size is in microns, and the factor 1000 converts microns to millimeters. Pixels are small, light-sensitive elements, usually between 3 and 10 microns in size. Smaller pixels increase the number of pixels per millimeter, which raises the sensor’s ability to see tiny details. However, smaller pixels also collect less light each, so noise becomes a larger share of the signal.
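
The formula above can be evaluated for a few pixel sizes. This is a minimal sketch; the function name `nyquist_lp_per_mm` and the sample pixel sizes are chosen here for illustration and do not come from any particular sensor datasheet.

```python
def nyquist_lp_per_mm(pixel_size_um: float) -> float:
    """Sensor Nyquist limit in line pairs per millimeter."""
    if pixel_size_um <= 0:
        raise ValueError("pixel size must be positive")
    # One line pair spans two pixels; 1000 converts microns to millimeters.
    return 1000.0 / (2.0 * pixel_size_um)


# Illustrative pixel sizes within the 3 to 10 micron range mentioned above.
for size_um in (3.45, 5.0, 10.0):
    print(f"{size_um:5.2f} um pixel -> {nyquist_lp_per_mm(size_um):6.1f} lp/mm")
```

Halving the pixel size doubles the Nyquist limit, which is why small-pixel sensors place higher demands on the lens.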

Image Quality

Pixel resolution plays a key role in how sharp and clear an image appears. More pixels mean the sensor can capture more detail, making it easier to see small features. However, image quality depends on more than just pixel count. Sensor size and lens quality also matter. Larger sensors collect more light, which helps reduce noise and improve detail, especially in low-light settings. As pixel sizes shrink to boost resolution, challenges like increased noise and heat can appear. New sensor designs, better noise reduction, and improved lenses help keep images clear even as resolution increases.

Measurement Accuracy

Measurement accuracy in machine vision relies on sensor resolution. The number of pixels along the X and Y axes, together with the field of view, sets the smallest detail the system can measure. For example, a sensor with 1000 pixels across a 1-inch field of view means each pixel covers 0.001 inch of the scene. To get reliable measurements, the area covered by one pixel should be about one-tenth of the required tolerance. Higher resolution allows for more precise measurements, but it can also increase processing demands and require better lighting and optics. Sub-pixel algorithms can further improve accuracy by estimating positions between pixels.
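
The arithmetic in this paragraph can be sketched as follows. The function names and the 0.01-inch tolerance are hypothetical values picked for illustration; the one-tenth ratio is the rule of thumb stated above, exposed as a parameter so it can be changed.

```python
import math


def scene_size_per_pixel(fov: float, pixels: int) -> float:
    """Length of the scene covered by one pixel (same unit as fov)."""
    return fov / pixels


def pixels_for_tolerance(fov: float, tolerance: float, ratio: float = 10.0) -> int:
    """Pixels needed so that one pixel covers tolerance / ratio of the scene."""
    # round() guards against floating-point noise before taking the ceiling.
    return math.ceil(round(fov * ratio / tolerance, 9))


# The example from the text: 1000 pixels across a 1-inch field of view.
print(scene_size_per_pixel(1.0, 1000))   # inches covered by one pixel

# Hypothetical tolerance of 0.01 inch over the same 1-inch field of view.
print(pixels_for_tolerance(1.0, 0.01))   # pixels required along that axis
```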

Tip: Always match sensor resolution with lens quality and lighting to achieve the best measurement results.
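
Sub-pixel estimation, mentioned under Measurement Accuracy, can take many forms. The sketch below shows one simple variant, assumed here for illustration: it finds where a one-dimensional intensity profile crosses a threshold by interpolating linearly between the two neighboring pixels. The profile values and the threshold are made up, and production systems often use more robust methods.

```python
def subpixel_edge(profile, threshold):
    """Fractional pixel index where a rising profile first crosses threshold.

    Returns None if no rising crossing is found.
    """
    for i in range(len(profile) - 1):
        a, b = profile[i], profile[i + 1]
        if a < threshold <= b:
            # Linear interpolation between pixel i and pixel i + 1.
            return i + (threshold - a) / (b - a)
    return None


# Hypothetical gray values along a line crossing a dark-to-bright edge.
profile = [10, 10, 12, 60, 110, 112, 112]
threshold = (min(profile) + max(profile)) / 2   # halfway between dark and bright
edge = subpixel_edge(profile, threshold)
print(edge)
```

The result falls between two pixel indices, which is the sense in which the measurement is finer than one pixel.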

Key Factors in Machine Vision Sensors

Pixel Size

Pixel size plays a major role in how a machine vision sensor performs. Larger pixels can collect more light, which increases the sensor’s sensitivity and improves the signal-to-noise ratio (SNR). This means images appear brighter and clearer, especially in low-light conditions. Larger pixels also have a higher charge saturation capacity, which helps prevent image artifacts. However, sensors with larger pixels need more space, which can increase the size and cost of the sensor. Smaller pixels allow more pixels to fit on a sensor, raising the resolution and letting the system see finer details. Yet, smaller pixels may suffer from blooming and crosstalk, which can lower image contrast. Advances like back-illuminated sensor designs help smaller pixels capture more light, balancing resolution and sensitivity. Most machine vision sensors in 2025 use pixel sizes between 3 and 10 micrometers.

Note: Choosing the right pixel size means balancing resolution, sensitivity, noise, and cost for each application.

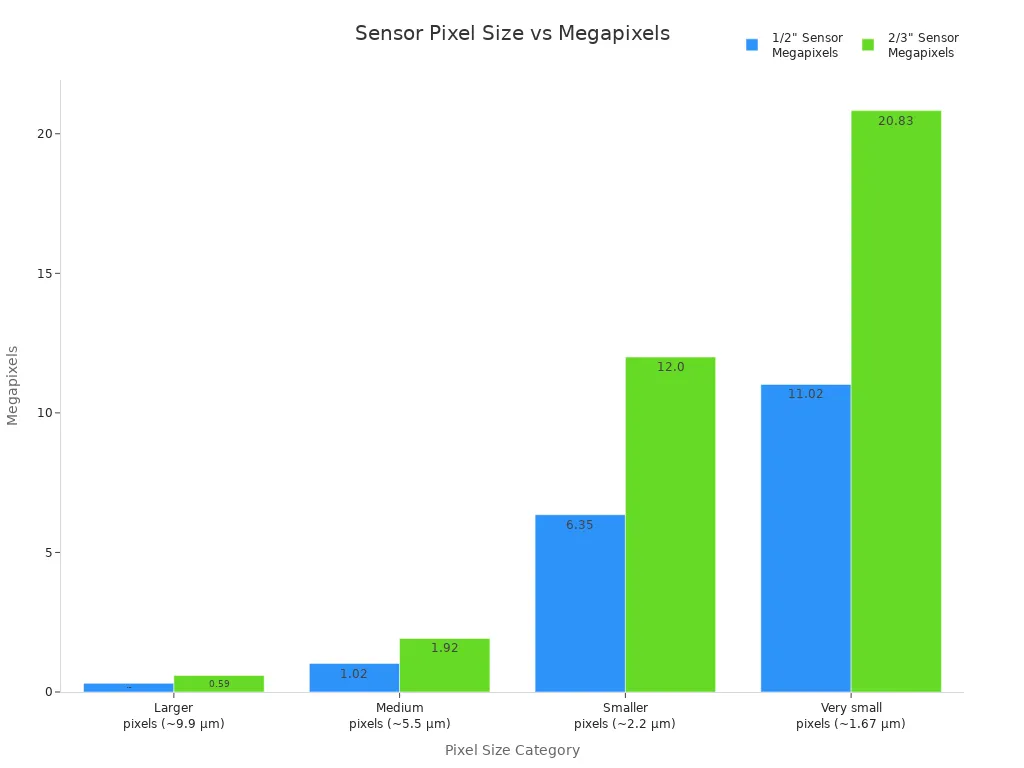

Sensor Size

Sensor size affects both the field of view and the overall image quality. Larger sensors can hold more pixels and larger pixel sizes, which improves light sensitivity and dynamic range. This leads to better images, especially in challenging lighting. Smaller sensors make cameras more compact and affordable but may reduce image quality. The table below shows common sensor sizes and their impact:

| Sensor Size | Pixel Size Range (µm) | Approximate Resolution (MP) | Impact on Image Quality |
|---|---|---|---|
| 1/2" | 3.45 – 9.9 | 0.31 – 11.02 | Balanced, common in machine vision |
| 2/3" | 3.45 – 9.9 | 0.59 – 20.83 | Higher resolution, better low-light |
| 1/3" | Smaller than 1/2" | Lower | Compact, lower image quality |
| 1/4" | Even smaller | Lower | Very compact, lowest image quality |
| 35 mm | Largest | Highest | Best quality, largest and most costly |

Pixel Arrangement

Pixel arrangement shapes how a sensor captures and processes images. Most color sensors use a Bayer pattern, where each pixel records only red, green, or blue light. The camera then uses interpolation, called debayering, to create a full-color image. This process can lower the native resolution by up to 50% and may introduce artifacts like false colors or blurry edges. Monochrome sensors do not use color filters, so each pixel captures all the light, resulting in higher sensitivity and sharper images. However, they cannot capture color directly. The choice between Bayer and monochrome sensors depends on whether the application needs color information or maximum detail and sensitivity.
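
To see why debayering has to interpolate, it helps to count how many pixels actually sample each color. The sketch below assumes an RGGB layout, which is one common Bayer arrangement; other layouts shift the pattern but keep the same proportions. The function names are illustrative.

```python
from collections import Counter


def bayer_rggb_color(row: int, col: int) -> str:
    """Color filter over a pixel in an assumed RGGB Bayer mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def channel_fractions(height: int, width: int) -> dict:
    """Fraction of pixels that sample each color channel."""
    counts = Counter(
        bayer_rggb_color(r, c) for r in range(height) for c in range(width)
    )
    total = height * width
    return {channel: counts[channel] / total for channel in "RGB"}


print(channel_fractions(4, 6))
```

Only half of the pixels sample green and a quarter each sample red and blue, so the missing values at every pixel must be estimated from neighbors.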

Tip: For tasks that require the highest detail, such as precise measurements or defect detection, monochrome sensors often provide better results. For applications where color is important, Bayer pattern sensors are the standard choice.

Sensor Pixel Resolution in Machine Vision Systems

Resolution and Sensitivity

Designing a machine vision system always involves a balance between resolution and sensitivity, and pixel size plays a key role in this trade-off. Larger pixels collect more light, which increases sensitivity and helps the sensor detect faint signals. Light collection scales with pixel area, so a 6.5 micrometer pixel gathers roughly twice as much light as a 4.5 micrometer pixel (6.5² / 4.5² ≈ 2.1). Larger pixels can therefore produce brighter images with less noise, especially in low-light conditions. Smaller pixels, on the other hand, allow more pixels to fit on the sensor, which increases resolution and lets the system see finer details. This higher resolution is important for tasks that require spotting tiny defects or measuring small features, but each small pixel collects less light, which can make the image noisier and reduce sensitivity. Some sensors use a technique called binning, which combines several small pixels into one larger pixel. This boosts sensitivity but lowers resolution. In 2025, most machine vision sensors use pixel sizes between 3 and 10 micrometers, balancing the need for both detail and sensitivity.

Tip: When choosing a sensor resolution for a machine vision system, always consider the lighting conditions and the smallest detail you need to detect.
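
The pixel-area comparison and the effect of binning can be sketched in a few lines. This example assumes square pixels and a simple 2×2 binning that sums neighboring values; real sensors may bin by summing charge or by averaging, and the tiny image below is made-up data.

```python
def light_ratio(large_um: float, small_um: float) -> float:
    """Ratio of light-collecting area between two square pixels."""
    return (large_um / small_um) ** 2


def bin_2x2(image):
    """Sum each 2x2 block of an image given as a list of equal-length rows.

    Assumes even height and width. Output has half the resolution per axis.
    """
    return [
        [
            image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]
            for c in range(0, len(image[0]), 2)
        ]
        for r in range(0, len(image), 2)
    ]


print(round(light_ratio(6.5, 4.5), 2))   # area ratio for the pixels in the text

tiny = [[1, 2, 3, 4],
        [5, 6, 7, 8]]                    # hypothetical 2 x 4 image
print(bin_2x2(tiny))                     # 1 x 2 image, each value sums 4 pixels
```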

Noise and Dynamic Range

Noise and dynamic range are two important factors that affect image quality in a machine vision system. Noise refers to random variations in the image that can make it harder to see small details. Dynamic range measures how well the sensor can capture both very bright and very dark parts of a scene at the same time. Larger pixels help reduce noise because they can hold more charge before saturating. This leads to a higher signal-to-noise ratio (SNR) and better dynamic range. For example, sensors with larger pixels can reach a dynamic range of about 69 dB and a maximum SNR of around 39 dB. These values mean the sensor can show both shadows and highlights clearly, which is important for accurate inspection. Smaller pixels, while increasing resolution, tend to have lower saturation capacity and higher noise. Sensor size also affects noise and dynamic range. Larger sensors can use bigger pixels and collect more light, which improves both SNR and dynamic range. The arrangement of pixels, such as using a Bayer pattern or a monochrome layout, also influences noise and image clarity.

| Measurement | Value (dB) | Description |
|---|---|---|
| Dynamic Range | ~69 dB | Range between minimum and maximum detectable signal levels |
| Maximum SNR | ~39 dB | Highest signal-to-noise ratio, linked to pixel saturation and noise floor |

Note: A sensor with high dynamic range and low noise produces clearer images, making it easier to spot defects and measure objects accurately.
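
The link between saturation capacity, noise floor, and the decibel figures above can be illustrated with the usual definitions: dynamic range as the ratio of saturation capacity to read noise, and maximum SNR as the shot-noise-limited value at saturation. The saturation capacity (8000 electrons) and read noise (2.8 electrons) below are hypothetical numbers chosen only because they land near the quoted values; they do not describe a specific sensor.

```python
import math


def dynamic_range_db(full_well_e: float, read_noise_e: float) -> float:
    """Dynamic range in dB: saturation capacity over the noise floor."""
    return 20.0 * math.log10(full_well_e / read_noise_e)


def max_snr_db(full_well_e: float) -> float:
    """Shot-noise-limited SNR at saturation: N / sqrt(N) = sqrt(N)."""
    return 20.0 * math.log10(math.sqrt(full_well_e))


full_well = 8000.0    # electrons, hypothetical
read_noise = 2.8      # electrons, hypothetical

print(f"dynamic range: {dynamic_range_db(full_well, read_noise):.1f} dB")
print(f"maximum SNR:   {max_snr_db(full_well):.1f} dB")
```

Because maximum SNR grows only with the square root of the saturation capacity, doubling the capacity adds about 3 dB of SNR.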

Defect Detection

Defect detection is one of the most important uses of machine vision. High pixel resolution allows the system to find very small defects, such as scratches or microfractures, that lower-resolution sensors might miss. For example, high-resolution cameras can detect defects as small as 1.5 micrometers. The ability to analyze images at the pixel level helps measure the size and shape of defects, which is critical for quality control in manufacturing. Some studies report that improving image quality by averaging multiple measurements can reduce false positives in defect detection from 30% down to 0%. However, higher resolution also means the system must handle larger amounts of data, which can slow down processing if not managed well. The choice of sensor type, such as CCD or CMOS, and the type of camera, like line scan or area scan, also affects how well the system detects defects. In 2025, most machine vision systems use pixel sizes between 3 and 10 micrometers and sensor sizes like 1/2", 2/3", or 1", which provide a good balance between resolution, sensitivity, and speed.

For best results, system designers must match the sensor's pixel resolution to the specific needs of the application, considering the smallest defect size, required speed, and available lighting.
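
The data-volume cost of higher resolution is easy to estimate for uncompressed video. The sketch below is a rough calculation that ignores interface overhead and compression; the second resolution, the bit depth, and the frame rate are illustrative assumptions.

```python
def data_rate_mb_per_s(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed image data rate in megabytes per second (1 MB = 1e6 bytes)."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return bytes_per_frame * fps / 1e6


# Same hypothetical bit depth (8) and frame rate (30 fps) at two resolutions.
for width, height in ((768, 576), (4096, 3072)):
    rate = data_rate_mb_per_s(width, height, 8, 30)
    print(f"{width}x{height}: {rate:.2f} MB/s")
```

Data rate scales with the pixel count, so a move from 0.4 MP to 12.6 MP multiplies the processing and bandwidth load by roughly 28 at the same frame rate.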

Trends and Selection for 2025

New Technologies

In 2025, sensor design has advanced quickly. Contact Image Sensor (CIS) technology now offers high speed, high resolution, and true metrology. These sensors have a compact form, making them fit well in tight spaces like battery or PCB production lines. CIS sensors combine the camera, lens, and lighting, which makes system setup faster and easier. New products such as Dragonfly S USB3 and Z-Trak2 high-speed 3D profile sensors show how far the technology has come.

| Advancement Aspect | Description |
|---|---|
| Sensor Technology | Contact Image Sensor (CIS) technology |
| Key Improvements | High speed, high resolution, high dynamic range, true metrology |
| Form Factor | Compact design with small size compared to conventional line scan cameras |
| Working Distance | Typically 10–20 mm vs. 250–500 mm for conventional line scan cameras |
| Integration | Combines camera, lens, and lighting components for faster and easier system design |
| Application Suitability | Ideal for spatially restricted environments such as battery, print, and PCB production lines |
| Use Case | Inline automatic optical inspection (AOI) in high-throughput production lines |
| New Products Mentioned | Dragonfly S USB3, Lince5M NIR high-speed 2D CMOS sensors, Z-Trak2 high-speed 3D profile sensors |

Artificial intelligence (AI) now works closely with high-resolution sensors. AI uses deep learning to analyze textures and shapes that older systems could not see. These smart systems use CPUs, GPUs, and FPGAs to process images in real time. Edge and cloud computing help manage large amounts of data. AI-powered vision systems now inspect products, guide robots, and make decisions faster and more accurately than before.

Application Needs

Different industries have unique needs for sensor pixel resolution. In healthcare, doctors use high-resolution sensors to spot tiny details in medical images. This helps them find problems early and plan better treatments. CMOS sensors are popular because they capture clear images with low noise. In manufacturing, factories need precise sensors for code reading, defect detection, and guiding robots. Global shutter sensors avoid motion distortion when objects move fast, while rolling shutter sensors can be a good fit for static or slow scenes and often perform well in low light. Robotics uses depth sensing and high dynamic range to help robots see and move safely.

| Application Area | Example Use Case | Sensor Pixel Resolution Role | Description |
|---|---|---|---|
| Manufacturing | Gear measurement | Subpixel level accuracy | High-resolution sensors enable subpixel accurate measurement of gears to ensure quality control in manufacturing processes. |
| Manufacturing | Weld seam trajectory recognition | Precise image resolution | Laser scanning displacement sensors combined with vision sensors guide robotic welding with high accuracy, improving weld quality and position. |
| Healthcare | Robotic surgical suturing | Subpixel resolution for strain measurement | Sensors with subpixel resolution provide high precision and safety in measuring strain during robotic suturing, critical for surgical accuracy. |
| Robotics | Object recognition and autonomous navigation | Matching sensor resolution with lens capabilities | Visual sensors with appropriate pixel resolution are essential for accurate object recognition, manipulation, and navigation in robotic systems. |
| Robotics | Medical surgery | High precision visual sensing | Visual sensors with matched resolution and lens parameters support precise control and perception in robotic medical procedures. |

Practical Factors

Selecting the right sensor pixel resolution for a machine vision system means looking at several practical factors. Lighting affects how much detail the sensor can capture. The lens must match the sensor’s pixel size to avoid losing resolution. Calibration keeps the lens and sensor aligned, which is important for sharp images. Smaller pixels need better lenses and careful calibration to get the best results. Working distance and object size also matter, as they affect which lens and sensor to choose.

- Good lighting helps the sensor see fine details.

- The lens must match the sensor’s resolution to avoid blurry images.

- Calibration corrects for small errors and keeps measurements accurate.

- Testing with real-world targets checks if the system meets the needs.
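
One way to put a number on lens matching is to compare the pixel size with the diffraction-limited spot of the lens, using the standard Airy disk diameter of 2.44 × wavelength × f-number. This is only a sketch of one check among several (lens aberrations and MTF data matter too); the 550 nm wavelength, the f-numbers, and the 3.45 micron pixel are illustrative assumptions.

```python
def airy_disk_diameter_um(wavelength_um: float, f_number: float) -> float:
    """Diameter of the diffraction-limited Airy disk in microns."""
    return 2.44 * wavelength_um * f_number


pixel_size_um = 3.45      # hypothetical small-pixel sensor
wavelength_um = 0.55      # green light, assumed for illustration

for f_number in (2.8, 4.0, 8.0):
    spot = airy_disk_diameter_um(wavelength_um, f_number)
    print(f"f/{f_number}: spot {spot:.2f} um, "
          f"{spot / pixel_size_um:.1f} pixels wide")
```

As the aperture is stopped down, the spot spreads over more pixels, so extra sensor resolution stops translating into extra detail.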

Tip: Always test the full system—sensor, lens, and lighting—together to make sure it works well for your application.

The choice of sensor pixel resolution shapes image quality, measurement accuracy, and defect detection in a machine vision system. Engineers should balance resolution, sensitivity, and system cost by considering feature size, lighting, and processing needs.

- Avoid over-specifying resolution to reduce unnecessary costs.

- Use pixels-per-feature rules of thumb: about 3 pixels across the smallest feature for traditional systems, 5 to 10 for AI-based systems.

- Remember, system cost includes hardware, lighting, and installation.
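
The pixels-per-feature rule can be turned into a minimum resolution along one axis. This is a sketch under simple assumptions: the field of view and feature size below are hypothetical, and the function ignores lens blur and contrast, which a real design would also check.

```python
import math


def required_pixels(fov_mm: float, smallest_feature_mm: float,
                    pixels_per_feature: int) -> int:
    """Minimum pixel count along one axis for a given field of view."""
    # round() guards against floating-point noise before taking the ceiling.
    return math.ceil(round(fov_mm / smallest_feature_mm * pixels_per_feature, 9))


fov_mm = 100.0      # hypothetical field of view along one axis
feature_mm = 0.1    # hypothetical smallest feature to detect

for rule in (3, 5, 10):
    print(f"{rule} px per feature -> {required_pixels(fov_mm, feature_mm, rule)} pixels")
```

Running the same calculation for both axes gives the minimum sensor resolution, which helps avoid over-specifying the camera.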

To choose the best sensor:

- Identify the smallest feature to detect.

- Match sensor and lens to scene size.

- Use calibration and regular software updates for accuracy.

Stay informed about new sensor technologies to keep systems effective.

FAQ

What does pixel resolution mean in a machine vision sensor?

Pixel resolution shows how many pixels a sensor uses to capture an image. More pixels mean the sensor can see smaller details. High resolution helps the system find tiny defects and measure objects more accurately.

How does pixel size affect image quality?

Larger pixels collect more light. This makes images brighter and reduces noise. Smaller pixels increase resolution but may add noise. Engineers choose pixel size based on the need for detail and lighting conditions.

Why do some applications use monochrome sensors instead of color sensors?

Monochrome sensors capture all light at each pixel. This gives higher sensitivity and sharper images. Many inspection tasks do not need color, so monochrome sensors work better for finding small defects or measuring tiny features.

How can someone choose the right sensor resolution for their project?

Engineers start by finding the smallest feature they need to see. They match the sensor and lens to the object size. They test the system with real samples. Good lighting and calibration help get the best results.
