A rectified linear unit, usually called ReLU, is a simple yet powerful activation function used in neural networks. ReLU maps negative values to zero and leaves positive values unchanged. This helps neural networks learn patterns in images more efficiently, and ReLU has become a default choice for training the deep networks used in machine vision. One reported comparison found that ReLU-based models outperformed traditional activation functions in 92.8% of classification tasks. The table below summarizes how broader families of activation functions compare in accuracy, convergence, and misclassification behavior:

| Activation Function Type | Impact on Accuracy | Convergence Speed | Misclassification Confidence |
|---|---|---|---|
| Bounded Functions | Greater stability | Faster convergence | Lower misclassification |
| Symmetric Functions | Improve suppression | Varies | Reduced false predictions |
| Non-monotonic Functions | Strong performance | Enhanced features | Better handling of negatives |

ReLU stands out in neural network applications, especially in machine vision systems, by improving both training speed and accuracy.

Key Takeaways

- ReLU is a simple activation function that turns negative inputs to zero and keeps positive inputs unchanged, helping neural networks learn image patterns faster.

- Using ReLU in machine vision improves training speed and accuracy, and it helps models focus on important image features by creating sparse activations.

- ReLU mitigates the vanishing gradient problem, allowing deep neural networks to learn better and avoid stalling during training.

- The ‘dying ReLU’ problem can stop some neurons from learning, but variants like Leaky ReLU keep neurons active and improve model performance.

- Beginners should start with ReLU in their models, try its variants if needed, and use simple experiments to build strong machine vision systems.

Rectified Linear Unit Basics

What Is ReLU

ReLU stands for rectified linear unit. This activation function has become a standard choice in many neural network designs. When a neural network uses ReLU, it helps the model learn patterns in data, especially in images. ReLU works by changing all negative values in the input to zero. It keeps positive values the same. This simple rule makes the activation easy to use and fast to compute. Many deep learning models use ReLU because it helps them train faster and find important features in data.

Tip: ReLU is often the first activation function that beginners learn when studying deep learning.

Mathematical Definition

The rectified linear activation function has a simple mathematical form. It is written as:

f(x) = max(0, x)

This means that if the input value is less than zero, the output is zero. If the input is greater than or equal to zero, the output is the same as the input. This creates a piecewise linear function with two parts:

- A flat segment at zero for all negative inputs.

- A straight line for all positive inputs.

The derivative of the rectified linear activation function is also simple. For negative inputs, the derivative is zero. For positive inputs, the derivative is one. At zero, the derivative does not exist, but most neural network software sets it to zero during training. These properties make ReLU very efficient for deep learning models. The function’s output is sparse, meaning many neurons in a neural network will have zero activation. This helps the model focus on the most important features.
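The definition and its derivative can be written in a few lines. The following is a minimal sketch in plain Python rather than any library's implementation; the function names `relu` and `relu_grad` are illustrative, and the derivative at zero is set to zero to match the convention described above.

```python
def relu(x: float) -> float:
    """Return max(0, x): zero for negative inputs, the input itself otherwise."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU; the value at x == 0 is set to 0 by convention."""
    return 1.0 if x > 0 else 0.0


inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(x) for x in inputs]
grads = [relu_grad(x) for x in inputs]

print(outputs)  # [0.0, 0.0, 0.0, 0.5, 2.0]
print(grads)    # [0.0, 0.0, 0.0, 1.0, 1.0]
```

Three of the five outputs are exactly zero, which is the sparsity property in miniature.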

Nonlinearity in Neural Networks

Neural networks need nonlinearity to solve complex problems. If a neural network used only linear activation functions, every layer would be a linear map, and a composition of linear maps is itself a single linear map. Such a network could not learn patterns that are not straight lines, no matter how many layers it had. The rectified linear activation function introduces nonlinearity by treating negative and positive values differently. This allows deep learning models to learn more complex shapes and patterns in data.
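A stack of purely linear layers is equivalent to one linear layer, which is why a nonlinearity is needed between them. The sketch below illustrates this with a tiny two-layer network in plain Python. The weights `W1` and `W2` and the helper names are made up for the example; with ReLU in between, this particular network computes the absolute difference of its two inputs, which no single linear map can represent.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Multiply matrix A by matrix B (both lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def relu_vec(v):
    """Apply ReLU to every element of a vector."""
    return [max(0.0, a) for a in v]


# Illustrative weights, chosen by hand for this example.
W1 = [[1.0, -1.0], [-1.0, 1.0]]
W2 = [[1.0, 1.0]]
x = [2.0, 0.5]

# Two linear layers give the same result as one layer with weights W2 @ W1.
two_linear = matvec(W2, matvec(W1, x))
collapsed = matvec(matmul(W2, W1), x)

# Inserting ReLU between the layers breaks the collapse.
with_relu = matvec(W2, relu_vec(matvec(W1, x)))

print(two_linear, collapsed)  # [0.0] [0.0]
print(with_relu)              # [1.5], i.e. |2.0 - 0.5|
```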

A recent benchmark study tested neural networks on datasets with non-linear relationships. These datasets included patterns that could not be separated by a straight line. The study showed that neural networks with non-linear activation functions, like ReLU, could find these patterns better than those with only linear activations. However, the study also found that neural networks sometimes struggle to detect the right features when there is a lot of noise. This shows that while ReLU and other non-linear activation functions are powerful, they need careful design and enough data to work well.

Note: Nonlinearity from activation functions like ReLU is what gives deep learning its power to solve real-world problems.

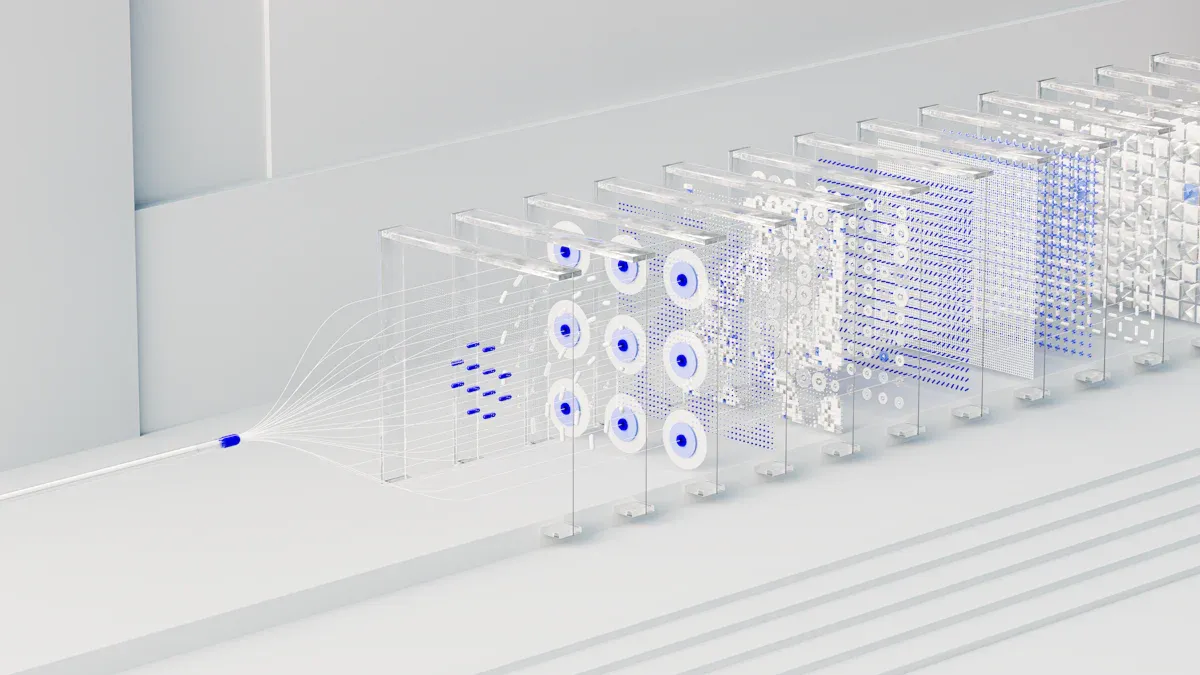

Rectified Linear Unit Machine Vision System

Applications in Vision

ReLU has changed how machine vision systems see and understand images. Many neural network models use ReLU for image recognition and classification. In computer vision applications, ReLU sits inside convolutional neural networks, which scan images for patterns that support tasks like object detection and tracking.

Well-known models such as AlexNet, VGGNet, GoogLeNet, and ResNet apply ReLU after their convolutional layers. This step helps the network learn important features in images. For example, in image classification, ReLU helps the model decide if a picture shows a cat, a dog, or a car. ReLU-family activations also appear in object detectors: R-CNN and SSD build on ReLU-based backbones, while the original YOLO models use the closely related Leaky ReLU. These detectors can spot stop signs, people, or animals in photos and videos, and single-shot designs such as YOLO and SSD are fast enough to run in real time.

ReLU has made deep learning models faster and more accurate for recognition tasks. This improvement helps many applications, from self-driving cars to security cameras.

Feature Extraction

Feature extraction is a key part of a ReLU-based machine vision system. Neural networks use ReLU to pick out the most important parts of an image. When a network looks at a picture, it tries to find shapes, edges, and colors that help with recognition. ReLU helps by zeroing the output of neurons whose filters respond negatively, so only positive feature responses pass to the next layer.

In deep learning, ReLU creates sparse activations: for any given input, only some neurons produce a non-zero output. This helps the network ignore noise and pay attention to clear patterns. For example, in image classification, sparse activations help the model find the features that tell a cat from a dog.
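To see how a filter response followed by ReLU produces sparse activations, consider the toy sketch below. It slides a hand-written two-tap edge filter over one row of pixel intensities, in plain Python. The signal, the kernel, and the helper `conv1d_valid` are all assumptions made for illustration; a real convolutional layer learns its kernels and works on 2D images.

```python
def conv1d_valid(signal, kernel):
    """Slide the kernel over the signal (no padding), as a convolutional layer does."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


# One row of pixel intensities: dark (0) and bright (1) regions.
signal = [0, 0, 1, 1, 1, 0, 0, 1, 1, 0]

# Hand-written filter that responds positively to dark-to-bright edges.
kernel = [-1, 1]

responses = conv1d_valid(signal, kernel)
activated = [max(0, r) for r in responses]  # ReLU

# Fraction of activations that are exactly zero.
sparsity = activated.count(0) / len(activated)

print(responses)  # [0, 1, 0, 0, -1, 0, 1, 0, -1]
print(activated)  # [0, 1, 0, 0, 0, 0, 1, 0, 0]
```

Only the two dark-to-bright edges survive. The bright-to-dark edges, which the filter scores negatively, are set to zero, leaving 7 of 9 activations inactive.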

Training Efficiency

ReLU also improves training efficiency. Training deep neural networks can take a long time, and ReLU speeds up this process. In the AlexNet paper, Krizhevsky and his colleagues reported that a four-layer convolutional network with ReLU reached a 25% training error rate on CIFAR-10 six times faster than an equivalent network with tanh units. This speed comes from ReLU’s simple, non-saturating form. Because the gradient does not shrink for large positive inputs, networks with ReLU are less prone to stalling during training. This helps the model learn better and faster.

ReLU also helps with the vanishing gradient problem. In deep learning, the vanishing gradient problem can stop a neural network from learning. ReLU’s design keeps gradients strong, so the neural network can keep learning even in very deep models. This benefit has made ReLU a top choice for training deep neural networks in recognition and classification tasks.

Many real-world applications use ReLU-based vision systems because of these benefits. For example, a stop sign detector used transfer learning with a neural network pretrained on 50,000 images. After pretraining, the model needed only 41 new images to learn to recognize stop signs. The small data requirement comes mainly from transfer learning, but it shows how the features learned by a ReLU-based network can be reused for a new task.

The impact of ReLU on deep learning is clear: faster training, better feature learning, and improved recognition make ReLU a key part of modern vision systems.

ReLU Benefits and Challenges

Computational Efficiency

ReLU offers several advantages for machine vision systems. The activation function is simple and fast to compute, since it needs only a comparison with zero. This simplicity helps models train quickly and use less computing power. Many researchers have found that ReLU leads to highly sparse activations. Reported figures vary by model, but often only about 15–30% of neurons are active for a given input. Sparse activations make the model more efficient and can make it easier to interpret.

- Sparsity-based acceleration frameworks can make inference over three times faster than models without sparse activations.

- Sparse activations help the model ignore noise and focus on important features.

- Models using ReLU show better generalization and lower error rates in tasks with noisy inputs.

- The ProSparse method achieves high sparsity without losing performance, which maximizes efficiency.

ReLU’s computational efficiency helps developers build larger and deeper models while keeping training and inference costs manageable.
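The idea behind sparsity-based acceleration can be sketched as follows: when an activation is exactly zero, every multiplication involving it can be skipped without changing the result. The plain-Python example below only counts multiplications to show the principle. It is not how ProSparse or any specific framework is implemented, and the weights, activations, and function names are invented for illustration.

```python
def dense_matvec(weights, activations):
    """Multiply every weight by every activation, zeros included."""
    outputs = [sum(w * a for w, a in zip(row, activations)) for row in weights]
    multiplications = len(weights) * len(activations)
    return outputs, multiplications


def sparse_matvec(weights, activations):
    """Skip columns whose activation is exactly zero."""
    active = [(j, a) for j, a in enumerate(activations) if a != 0.0]
    outputs = [sum(row[j] * a for j, a in active) for row in weights]
    multiplications = len(weights) * len(active)
    return outputs, multiplications


# Illustrative next-layer weights (2 neurons, 6 inputs).
weights = [
    [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    [6.0, 5.0, 4.0, 3.0, 2.0, 1.0],
]

# Activations after ReLU: 4 of the 6 values are zero.
activations = [0.0, 1.5, 0.0, 0.0, 2.0, 0.0]

dense_out, dense_mults = dense_matvec(weights, activations)
sparse_out, sparse_mults = sparse_matvec(weights, activations)

print(dense_out, dense_mults)    # [13.0, 11.5] 12
print(sparse_out, sparse_mults)  # [13.0, 11.5] 4
```

Real speedups depend on hardware and on kernels that can exploit the zeros efficiently, so the multiplication count here is only a rough proxy.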

Vanishing Gradient Solution

The vanishing gradient problem can stop a neural network from learning. This problem happens when gradients become too small as they move through many layers. Earlier activation functions like sigmoid and tanh often caused this issue. ReLU helps solve the vanishing gradient problem because it does not saturate for positive inputs. This means gradients stay strong during backpropagation, even in deep networks.

| Activation Function | Key Characteristics | Impact on Vanishing Gradient Problem |
|---|---|---|
| ReLU | Outputs zero for negative inputs; linear for positive inputs; computationally efficient; sparse activations | Does not saturate for large inputs, allowing better gradient flow and mitigating vanishing gradients |
| Sigmoid | Outputs between 0 and 1; saturates at extremes | Saturation causes gradients to vanish, hindering learning in deep networks |
| Tanh | Outputs between -1 and 1; zero-centered; saturates at extremes | Also suffers from saturation leading to vanishing gradients |

Research shows that ReLU improves training speed and helps gradients move through deep networks. This makes it easier to train large models for machine vision.
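A rough way to see the difference is to multiply the activation's local derivative across many layers, as backpropagation does. The sketch below makes two simplifying assumptions that are not from the original text: it ignores the weight matrices entirely, and it uses the same pre-activation value at every layer. The sigmoid case uses its most favorable input, zero, where its derivative peaks at 0.25.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)  # never larger than 0.25


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0


def chained_gradient(local_grad, pre_activation: float, depth: int) -> float:
    """Multiply the activation's local derivative across `depth` layers (weights ignored)."""
    g = 1.0
    for _ in range(depth):
        g *= local_grad(pre_activation)
    return g


depth = 20
sigmoid_chain = chained_gradient(sigmoid_grad, 0.0, depth)  # best case for sigmoid
relu_chain = chained_gradient(relu_grad, 1.0, depth)        # any positive input

print(sigmoid_chain)  # about 9.1e-13
print(relu_chain)     # 1.0
```

Even in its best case, the sigmoid factor shrinks the signal by a factor of four per layer, while an active ReLU unit passes it through unchanged.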

Dying ReLU and Variants

Despite its benefits, ReLU has some challenges. One common issue is the "dying ReLU" problem. It happens when a neuron's input to the activation is negative for every example, so the neuron always outputs zero. Because the gradient is also zero in that region, the neuron's weights stop updating and it may never activate again. When many neurons die this way, the model loses some of its ability to learn new patterns.

To address this, researchers created variants like Leaky ReLU and Parametric ReLU (PReLU). Leaky ReLU allows a small, non-zero output for negative inputs. PReLU lets the model learn the best slope for negative values. These variants help keep more neurons active and improve learning.

Tip: When building a neural network, try using Leaky ReLU or PReLU if you notice many neurons are not activating.
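The difference between ReLU and Leaky ReLU on a "dead" neuron can be sketched with a toy example. The code below is plain Python, not a library implementation. The single-parameter neuron, the fixed upstream gradient of -1, the learning rate, and the name `train_bias` are all hypothetical choices made to keep the example small.

```python
def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0


def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    """Pass positive inputs through; scale negative inputs by a small slope."""
    return x if x > 0 else negative_slope * x


def leaky_relu_grad(x: float, negative_slope: float = 0.01) -> float:
    return 1.0 if x > 0 else negative_slope


def train_bias(act_grad, bias: float, steps: int = 100, lr: float = 0.5) -> float:
    """Hypothetical neuron whose pre-activation is just `bias`.

    The upstream gradient is fixed at -1, meaning the loss always
    wants the neuron's output to increase.
    """
    for _ in range(steps):
        bias -= lr * (-1.0) * act_grad(bias)
    return bias


start = -0.3  # the neuron starts in the negative (inactive) region

relu_bias = train_bias(relu_grad, start)
leaky_bias = train_bias(leaky_relu_grad, start)

print(relu_bias)       # -0.3: zero gradient, so the parameter never moves
print(leaky_bias > 0)  # True: the small negative-side slope lets it recover
```

PReLU follows the same idea but treats `negative_slope` as a trainable parameter instead of a fixed constant.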

Getting Started with ReLU

Simple Code Example

Many beginners start with a simple neural network to see how ReLU works in practice. The following Python code uses TensorFlow and Keras to build two models. One model uses ReLU, and the other uses Leaky ReLU. Both are small fully connected binary classifiers that take a 100-feature input vector, so they show how the activation is specified rather than a complete vision pipeline.

- The first model uses ReLU as the activation function in its hidden layers.

- The second model uses Leaky ReLU, which helps prevent the dying ReLU problem by allowing a small output for negative values.

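```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, LeakyReLU

# Model 1: standard ReLU, passed as the `activation` argument of each hidden layer.
model_relu = tf.keras.models.Sequential([
    Dense(64, input_shape=(100,), activation='relu'),
    Dense(64, activation='relu'),
    Dense(1, activation='sigmoid')  # sigmoid output for binary classification
])

# Model 2: Leaky ReLU, added as a separate layer after each linear Dense layer.
# Note: newer Keras releases name this argument `negative_slope` instead of `alpha`.
model_leaky_relu = tf.keras.models.Sequential([
    Dense(64, input_shape=(100,)),
    LeakyReLU(alpha=0.01),
    Dense(64),
    LeakyReLU(alpha=0.01),
    Dense(1, activation='sigmoid')
])

# Both models use the same optimizer and loss, so only the activation differs.
model_relu.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
model_leaky_relu.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
```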

A simple ReLU-based model can achieve strong results. For example, a PyTorch model using ReLU reached a test accuracy of 89.92% on a vision task. The table below lists that accuracy alongside a confusion matrix:

| Metric | Value |
|---|---|
| Test Accuracy | 89.92% |
| Confusion Matrix | [[50, 2], [3, 45]] |

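These results suggest that a ReLU-based model can classify images with high accuracy, although a single run does not establish a general advantage. Note that the confusion matrix shown covers 100 samples with 95 classified correctly, so it does not describe the same evaluation set as the 89.92% figure.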
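Overall accuracy can be read directly off a confusion matrix: it is the sum of the diagonal divided by the total number of samples. The short sketch below applies this to the 2×2 matrix in the table. The per-class recall values assume that rows are true labels and columns are predictions, which the original does not state.

```python
# Confusion matrix from the table above.
confusion = [[50, 2], [3, 45]]

# Correct predictions lie on the diagonal.
correct = sum(confusion[i][i] for i in range(len(confusion)))
total = sum(sum(row) for row in confusion)
accuracy = correct / total

# Per-class recall, ASSUMING rows are true labels and columns are predictions.
per_class_recall = [confusion[i][i] / sum(confusion[i]) for i in range(len(confusion))]

print(correct, total)  # 95 100
print(accuracy)        # 0.95
```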

Beginner Tips

New learners can follow these tips to get the most out of ReLU in their projects:

- Start with ReLU as the default activation in hidden layers. It works well for most vision tasks.

- If many neurons stop working, try Leaky ReLU or PReLU to keep the network learning.

- Use simple models first. This helps you see how ReLU affects training and results.

- Check the model’s accuracy and confusion matrix to understand its strengths and weaknesses.

- Read the documentation for your deep learning library to learn more about ReLU and its variants.

Tip: ReLU makes training faster and helps models find important features in images. Beginners can build strong machine vision systems by starting with ReLU and learning from simple experiments.

ReLU plays a vital role in machine vision systems. It helps models learn complex patterns and speeds up training. Key benefits include:

- Faster convergence during training.

- Improved accuracy in image recognition.

- Stable gradients that prevent learning problems.

- Efficient feature selection for better results.

- Support for deep neural networks.

Beginners should try different activation functions and explore variants like Leaky ReLU. Experimenting with these tools can lead to better performance in real-world projects.

FAQ

What does ReLU stand for in neural networks?

ReLU stands for Rectified Linear Unit. It is a simple activation function that helps neural networks learn patterns in data, especially in images.

Why do machine vision systems use ReLU?

Machine vision systems use ReLU because it speeds up training and helps models find important features in images. ReLU also mitigates the vanishing gradient problem that affects sigmoid and tanh in deep networks.

Can ReLU cause any problems during training?

Sometimes, ReLU can cause the "dying ReLU" problem. This happens when some neurons stop working and always output zero. Using variants like Leaky ReLU can help fix this issue.

How does ReLU improve image recognition accuracy?

ReLU creates sparse activations. This means only important features get passed to the next layer. The model learns to focus on what matters most, which improves accuracy.

Is ReLU the only activation function used in deep learning?

No. Other activation functions exist, such as sigmoid, tanh, and softmax, the last of which is typically used in the output layer. ReLU is popular because it works well for many tasks, but sometimes other functions work better for specific problems.
