Image pixel resolution in machine vision systems describes the number of pixels that capture detail in an image. Higher pixel density increases sharpness and reveals smaller defects that lower resolutions may miss.

- High-resolution cameras can detect surface anomalies as small as 1.5 micrometers, which improves defect detection in automotive and electronics manufacturing.

- Pixel size and overall system resolution both influence image clarity, helping operators measure components accurately and maintain quality control.

Mastering these basics supports better performance and more reliable results in any machine vision system.

Key Takeaways

- Higher image resolution reveals smaller details and improves defect detection in machine vision systems.

- Choosing the right pixel size balances image clarity and sensitivity, depending on lighting and detail needs.

- Camera resolution, lens quality, and sensor size must work together to achieve accurate and clear images.

- Selecting resolution depends on the field of view, smallest feature size, and speed requirements of the task.

- Testing the full system, including lighting and lenses, ensures reliable performance and avoids common mistakes.

Key Concepts

Pixel

A pixel is the smallest addressable element in a digital image or display. In machine vision, each pixel acts as a sample of the original scene. Pixels form a grid, and each one records the intensity of light. In color imaging, a pixel often contains values for red, green, and blue. On a color camera sensor, however, a pixel usually refers to a single sensor element (photosite) behind a color filter, so it captures light for only one color channel; the camera interpolates the missing channels from neighboring pixels. The arrangement of pixels in a two-dimensional grid allows imaging systems to process and analyze visual information. Understanding how each pixel contributes to the overall image helps users interpret data and make accurate measurements.
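As a minimal illustration of the pixel grid, the sketch below stores an 8-bit grayscale image as a list of rows and shows which color each photosite records on a color sensor. It assumes an RGGB Bayer filter layout, which is common but not universal (BGGR, GRBG and GBRG also exist); `bayer_channel` is a hypothetical helper, not a library function.

```python
# A grayscale image as a 2D grid: image[row][col] holds one intensity sample.
width, height = 6, 4
image = [[0] * width for _ in range(height)]
image[1][2] = 255  # set the pixel at row 1, column 2 to full brightness (8-bit)

# A color image stores three values (R, G, B) per pixel instead of one.
rgb_pixel = (255, 128, 0)


def bayer_channel(row: int, col: int, pattern: str = "RGGB") -> str:
    """Return the color ('R', 'G' or 'B') recorded by the photosite at (row, col).

    `pattern` spells out the repeating 2x2 filter tile row by row, so the
    default "RGGB" means R G on even rows and G B on odd rows.
    """
    return pattern[(row % 2) * 2 + (col % 2)]


# Count how many photosites of each color a 4x4 patch of the sensor contains.
counts = {"R": 0, "G": 0, "B": 0}
for r in range(4):
    for c in range(4):
        counts[bayer_channel(r, c)] += 1
print(counts)  # {'R': 4, 'G': 8, 'B': 4}
```

Half of the photosites in an RGGB mosaic record green, which is why each color channel is sampled more coarsely than the full pixel count suggests.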

Resolution

Resolution describes the number of pixels in an image. It determines how much detail the imaging system can capture. Higher resolution means more pixels represent the scene, which leads to sharper images and better clarity. This is important for tasks like defect detection, object recognition, and quality control. Several factors influence resolution, such as pixel size, field of view, and sensor quality. For example, for the same field of view, a higher resolution camera places more pixels on each unit area of the scene, making it easier to spot small defects. However, increasing resolution can slow down processing and raise costs, so users must balance detail with speed and budget.

- The number of pixels in an image directly affects the level of detail.

- More pixels allow for finer measurements and improved defect detection.

- Pixel size, field of view, and resolution work together to define image quality.

- Lighting, lens quality, and sensor size also play important roles.
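To see why more pixels reveal smaller details, the sketch below models one row of idealized pixels, each reporting the average brightness over its footprint on the object. It assumes a bright background of 200, a dark 0.2 mm scratch of 50, and no noise or lens blur; the function `sample_line` and all values are illustrative.

```python
def sample_line(fov_mm, n_pixels, defect_start_mm, defect_width_mm,
                background=200.0, defect=50.0):
    """Simulate one row of pixels viewing a dark defect on a bright surface.

    Each pixel reports the area-weighted average brightness over its
    footprint (an idealized, noise-free pixel with no lens blur).
    """
    pitch = fov_mm / n_pixels  # object-space size of one pixel, in mm
    defect_end = defect_start_mm + defect_width_mm
    values = []
    for i in range(n_pixels):
        left, right = i * pitch, (i + 1) * pitch
        # Length of this pixel's footprint that falls on the defect.
        overlap = max(0.0, min(right, defect_end) - max(left, defect_start_mm))
        fraction = overlap / pitch
        values.append(background * (1 - fraction) + defect * fraction)
    return values


# A 0.2 mm dark scratch in a 50 mm field of view, seen with two pixel counts.
for n in (125, 1250):
    row = sample_line(50.0, n, defect_start_mm=25.0, defect_width_mm=0.2)
    contrast = 200.0 - min(row)
    print(f"{n} px: {50.0 / n:.2f} mm/pixel, contrast {contrast:.0f} of 150")
```

With 125 pixels across 50 mm (0.4 mm/pixel), the scratch fills half of one pixel and only about half of its contrast survives. With 1,250 pixels (0.04 mm/pixel), it spans five pixels at full contrast.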

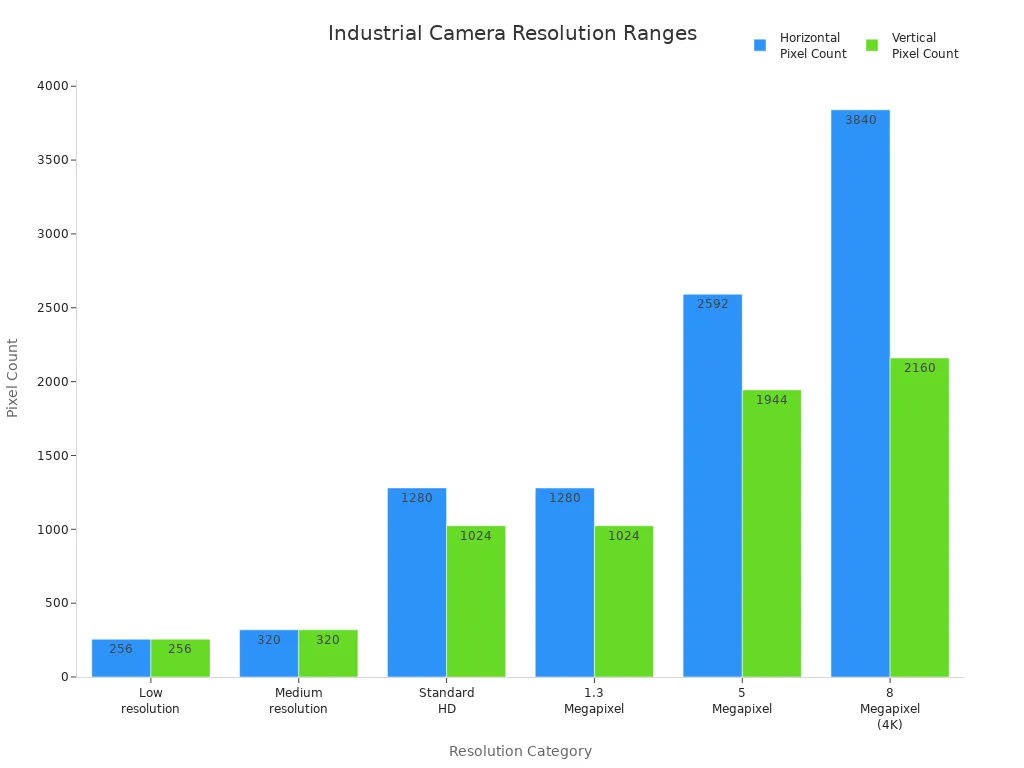

Camera Resolution

Camera resolution refers to the total number of pixels a camera sensor can capture. Industrial machine vision systems use a range of camera resolutions, depending on the application. The table below shows common camera resolution levels and their typical uses:

| Resolution Example | Pixel Dimensions | Typical Use/Application |
|---|---|---|
| Low resolution | 256 x 256 | High frame rate, fast motion tracking |
| Medium resolution | 320 x 320 | Detecting small defects in small fields of view |
| Standard HD (720p) | 1280 x 720 | General inspection tasks, balanced speed and detail |
| 1.3 Megapixel | 1280 x 1024 | Motion tracking, wider field of view |
| 5 Megapixel | 2592 x 1944 | High detail inspection, clear identification |
| 8 Megapixel (4K UHD) | 3840 x 2160 | Very high detail, detailed quality control |

Selecting the right camera resolution depends on the smallest feature to inspect, the field of view, and the speed required. Users should also consider sensor size, lighting, and environmental conditions. Balancing these factors ensures the imaging system meets the needs of the application.
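Selection by smallest feature and field of view can be sketched as a search over candidate sensor formats. This assumes the sensor's long axis is aligned with the FOV width, uses pixel dimensions from the table as hypothetical candidates, and ignores speed, which must be checked separately; `pick_camera` is an illustrative name, not an existing API.

```python
import math

# Candidate sensor formats (width x height in pixels), taken from rows of the
# resolution table; substitute the formats you are actually considering.
CANDIDATES = [(256, 256), (320, 320), (1280, 1024), (2592, 1944), (3840, 2160)]


def pick_camera(fov_w_mm, fov_h_mm, smallest_feature_mm, px_per_feature=4):
    """Return the candidate with the fewest pixels that meets the requirement.

    The object-space resolution must be smallest_feature_mm / px_per_feature
    or finer along both axes. Returns None if no candidate is sufficient.
    """
    max_mm_per_px = smallest_feature_mm / px_per_feature
    # A small tolerance stops floating-point noise (e.g. 2400.0000001)
    # from rounding the requirement up by one pixel.
    need_w = math.ceil(fov_w_mm / max_mm_per_px - 1e-9)
    need_h = math.ceil(fov_h_mm / max_mm_per_px - 1e-9)
    for w, h in sorted(CANDIDATES, key=lambda size: size[0] * size[1]):
        if w >= need_w and h >= need_h:
            return (w, h)
    return None


print(pick_camera(60, 45, 0.1))                     # 4 pixels per feature
print(pick_camera(60, 45, 0.1, px_per_feature=5))   # stricter 5-pixel rule
```

For a 60 mm x 45 mm FOV and a 0.1 mm feature at 4 pixels per feature, the search needs 2400 x 1800 pixels and returns the 5 MP format. Raising the rule to 5 pixels per feature requires 3000 x 2250, which no listed format provides, so the FOV would have to shrink or a larger sensor be considered.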

Image Pixel Resolution Machine Vision System

How Resolution Affects Detail

Resolution plays a key role in the ability of a machine vision system to capture and reveal small details. When a camera has more pixels, it can display finer features in the scene. This higher spatial resolution allows the system to spot tiny defects on surfaces, such as scratches on a smartphone screen or small cracks in automotive parts. For example, in quality control, a high-resolution camera can detect flaws that might be invisible to the human eye or to cameras with fewer pixels. Object recognition also improves because the system can separate closely spaced features, leading to better accuracy in sorting or identifying products.

Tip: Higher resolution helps in applications where accuracy and the detection of smaller details are critical, but it may slow down processing because larger images take more time to analyze.

Machine vision systems must balance resolution and speed. On fast-moving production lines, lower resolution may be chosen to keep up with the pace, even if some detail is lost. In contrast, for tasks that require careful inspection, such as checking printed circuit boards, higher resolution is preferred, even if it means slower processing. The choice depends on the specific needs of the application.
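The speed cost of resolution can be estimated from the raw data a camera produces each second. This sketch assumes uncompressed 8-bit monochrome frames and ignores interface and protocol overhead; `raw_data_rate_mb_s` is a hypothetical helper.

```python
def raw_data_rate_mb_s(width, height, fps, bits_per_pixel=8):
    """Uncompressed image data per second in megabytes (1 MB = 10**6 bytes)."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return bytes_per_frame * fps / 1e6


# Two formats from the resolution table at the same frame rate.
for w, h in [(1280, 1024), (2592, 1944)]:
    print(f"{w} x {h} @ 30 fps: {raw_data_rate_mb_s(w, h, 30):.1f} MB/s")
```

At 30 fps, 1280 x 1024 produces about 39 MB/s while 2592 x 1944 produces about 151 MB/s, so the interface and every processing stage must handle nearly four times as much data.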

Role of Pixel Size

Pixel size has a direct impact on image quality and on the performance of a machine vision system. The size of the pixel determines how much light each pixel can collect. Larger pixels gather more photons, which increases the sensitivity of the image sensor. This means the camera performs better in low-light conditions and produces images with less noise. Larger pixels also improve the signal-to-noise ratio, making the image clearer and more accurate.

However, larger pixels mean fewer pixels can fit on the sensor, which limits the system’s ability to resolve smaller details. Smaller pixels allow more pixels to be packed into the same sensor size, increasing spatial resolution and making it possible to see finer features. Yet, smaller pixels collect less light, which can lead to more noise and lower contrast, especially at high spatial frequencies. They are also more likely to experience blooming and crosstalk, which can reduce image quality.

The size of the pixel must be chosen based on the needs of the application. If the goal is to detect very small defects, smaller pixels and higher resolution are needed. If the system must work in low light or requires high sensitivity, larger pixels are better. The relationship between pixel size, sensor size, and image quality is a trade-off. For example, a larger sensor with large pixels can provide both a wide field of view and good sensitivity, but it may require special lenses to avoid vignetting.
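The light-gathering trade-off can be quantified under a simple shot-noise model: the signal grows with pixel area, while Poisson noise grows only with its square root. This sketch ignores read noise, dark current and quantum efficiency, and the photon density of 100 photons/µm² is an arbitrary illustrative value.

```python
import math


def shot_noise_snr(pixel_pitch_um, photons_per_um2):
    """Shot-noise-limited SNR of a square pixel under uniform illumination.

    Signal N = photon density x pixel area; Poisson shot noise is sqrt(N),
    so SNR = N / sqrt(N) = sqrt(N). Read noise, dark current and quantum
    efficiency are ignored in this idealized model.
    """
    photons = photons_per_um2 * pixel_pitch_um ** 2
    return math.sqrt(photons)


# Same light level, two pixel pitches (values chosen for illustration).
for pitch in (2.5, 5.0):
    print(f"{pitch} um pixel: SNR ~ {shot_noise_snr(pitch, 100):.0f}")
```

Doubling the pixel pitch from 2.5 µm to 5 µm quadruples the pixel area and doubles the SNR (from 25 to 50 here), at the cost of four times fewer pixels on the same sensor area.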

Sensor and Lens Factors

The image sensor and lens both affect the performance and accuracy of a machine vision system. The sensor size determines how many pixels can fit on the sensor and, for a given lens, how much of the scene the camera can see. A larger sensor can hold more pixels or larger pixels, which improves image quality and allows for a wider field of view. Sensor performance also depends on the type of technology used. CCD sensors have traditionally offered lower noise and higher dynamic range, which helps in capturing clear images, while CMOS sensors offer faster frame rates; many modern CMOS sensors have closed much of that noise gap.

Lens characteristics are just as important. The lens must match the sensor size to avoid problems like vignetting, where the edges of the image become dark. The focal length of the lens controls how much of the scene is captured and how large objects appear in the image. A lens with a larger aperture lets in more light, which is helpful in low-light situations. The quality of the lens affects image sharpness, distortion, and brightness. Precision in lens design ensures that the system can reproduce fine details with high accuracy.

- Focal length affects field of view and magnification, which changes how much detail the system can capture.

- Aperture size controls how much light reaches the sensor, impacting image brightness and clarity.

- The image circle must be large enough to cover the sensor, or the corners of the image may appear dark.

- Lens coatings and materials help maintain image quality in tough industrial environments.

- Compatibility between the lens and sensor ensures the system works efficiently and delivers reliable results.
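A first-pass lens check can be done with basic geometry: compute the sensor size from pixel count and pitch, derive the magnification from the FOV, estimate the focal length with the thin-lens equation, and confirm that the image circle covers the sensor diagonal. The 2.2 µm pitch, 60 mm FOV, 240 mm working distance and 8 mm image circle below are assumed values, and the thin-lens formula is only an approximation for real multi-element lenses.

```python
import math


def sensor_size_mm(width_px, height_px, pixel_pitch_um):
    """Active sensor width, height and diagonal in mm."""
    w = width_px * pixel_pitch_um / 1000
    h = height_px * pixel_pitch_um / 1000
    return w, h, math.hypot(w, h)


def thin_lens_focal_length_mm(working_distance_mm, magnification):
    """First-order focal length estimate from the thin-lens equation.

    With object distance u and image distance v = m * u, 1/f = 1/u + 1/v
    gives f = u * m / (1 + m). Real lenses have thickness, so treat the
    result as a starting point for choosing a standard focal length.
    """
    return working_distance_mm * magnification / (1 + magnification)


# Assumed example: 2592 x 1944 sensor, 2.2 um pixels, 60 mm wide FOV, 240 mm WD.
w, h, diag = sensor_size_mm(2592, 1944, 2.2)
m = w / 60.0                               # magnification = sensor width / FOV width
f = thin_lens_focal_length_mm(240.0, m)
image_circle_mm = 8.0                      # hypothetical lens specification
print(f"sensor {w:.2f} x {h:.2f} mm, diagonal {diag:.2f} mm")
print(f"magnification {m:.3f}, focal length ~{f:.1f} mm")
print("image circle covers sensor" if image_circle_mm >= diag else "expect dark corners")
```

Here the sensor diagonal is about 7.13 mm, so an 8 mm image circle covers it, and the estimated focal length of roughly 21 mm would point toward the nearest standard focal length.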

Note: Always match the lens to the sensor and application needs to achieve the best accuracy and performance in imaging.

The combination of sensor technology, pixel size, sensor size, and lens quality defines the overall accuracy and effectiveness of the machine vision system. Choosing the right components ensures the system can detect smaller details, maintain high accuracy, and deliver reliable performance in different environments.

Choosing Resolution

Field of View and Pixel Size

Selecting the right resolution for a machine vision system starts with understanding the field of view and pixel size. The field of view (FOV) is the area that the camera captures in one image. A wide FOV spreads the available pixels over a larger area, so each pixel covers more of the object and the object space resolution becomes coarser. A narrow FOV concentrates the pixels on a smaller area, so each pixel covers less of the object and the object space resolution becomes finer. The image space resolution, which is set by the sensor's pixel size, does not change. This means that the same camera can deliver different levels of detail depending on how much of the scene it covers.

Pixel size also plays a key role. Smaller pixels allow more detail to be captured in the same sensor area, which increases spatial accuracy. However, smaller pixels collect less light, which can lead to more noise in the image. Larger pixels improve sensitivity but may reduce spatial detail if the total number of pixels stays the same.
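The relationship between pixel size, magnification and FOV can be checked numerically. This sketch assumes a 2592-pixel-wide sensor with a 2.2 µm pitch (hypothetical values) and shows that the object-space pixel size computed as pitch / magnification equals FOV / pixel count, while the image-space pitch stays fixed.

```python
PITCH_UM = 2.2     # assumed sensor pixel pitch: the image-space pixel size
WIDTH_PX = 2592    # assumed number of pixels across the sensor width
SENSOR_W_MM = WIDTH_PX * PITCH_UM / 1000


def object_pixel_um(pixel_pitch_um, magnification):
    """Object-space pixel size: sensor pixel pitch divided by magnification."""
    return pixel_pitch_um / magnification


for fov_mm in (120.0, 60.0, 30.0):
    m = SENSOR_W_MM / fov_mm                 # magnification that maps FOV onto the sensor
    via_pitch = object_pixel_um(PITCH_UM, m)
    via_fov = fov_mm * 1000 / WIDTH_PX       # same quantity as FOV / pixel count
    print(f"FOV {fov_mm:.0f} mm: m = {m:.4f}, "
          f"{via_pitch:.1f} um/pixel (FOV / pixels: {via_fov:.1f})")
```

Halving the FOV from 120 mm to 60 mm doubles the magnification and halves the object-space pixel size (about 46.3 µm to 23.1 µm) with the same camera.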

Tip: To maintain high object space resolution across a wide FOV, choose a camera with higher camera resolution or smaller pixel size. Always match the lens quality to the sensor to avoid image blur.

Working distance, or the space between the camera and the object, affects lens selection. A good rule is to keep a working distance to FOV ratio of about 4:1. This helps balance cost, performance, and accuracy. If the working distance is too short, wide-angle lenses are needed, which can lower image quality. If the distance is too long, larger and more expensive lenses may be required.
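The 4:1 rule can be related to the angle of view the lens must cover, using simple pinhole geometry. This sketch ignores lens distortion and principal-plane offsets; the 60 mm FOV is an illustrative value and `full_angle_of_view_deg` is a hypothetical helper.

```python
import math


def full_angle_of_view_deg(fov_mm, working_distance_mm):
    """Full angle the lens must cover to see fov_mm at working_distance_mm.

    Pinhole geometry: half-angle = atan((fov / 2) / working distance).
    """
    return 2 * math.degrees(math.atan((fov_mm / 2) / working_distance_mm))


fov = 60.0
for ratio in (1, 2, 4):
    wd = ratio * fov
    angle = full_angle_of_view_deg(fov, wd)
    print(f"WD:FOV = {ratio}:1 -> WD {wd:.0f} mm, angle of view {angle:.1f} deg")
```

At 4:1 the lens needs a full angle of view of roughly 14°, while at 1:1 it needs about 53°, which is wide-angle territory with more distortion.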

Step-by-Step Guide

Choosing the best resolution for a machine vision task involves several steps. Each step ensures the system meets the accuracy and reliability needed for quality control.

1. Define Inspection Goals: Identify what the system must detect or measure. List the smallest defect or feature size and the required accuracy.

2. Analyze Product and Environment: Measure the size of the object and the area to inspect. Note the production speed and any environmental factors such as dust or vibration.

3. Calculate Required Resolution: Use the smallest feature size and the FOV to determine the required object space resolution. A common rule is that a defect should cover at least 4–5 pixels for reliable detection.

   - Formula: Object space resolution = Field of View (mm) / Number of Pixels (sensor width or height)

   - Example: If the FOV is 40 mm and the sensor has 800 pixels across, object space resolution = 40 mm / 800 pixels = 0.05 mm/pixel.

4. Select Camera and Sensor: Choose a camera with enough resolution to meet the calculated object space resolution. Consider whether a line-scan or area-scan camera fits the application.

5. Choose Lens and Working Distance: Pick a lens that matches the sensor size and FOV. Make sure the lens can focus at the required working distance and delivers enough spatial accuracy.

6. Select Lighting and Accessories: Choose lighting that highlights defects and suits the surface type. Decide whether color or monochrome imaging is needed.

7. Install and Test: Set up the hardware. Adjust camera settings such as exposure and gain. Test the system with sample products and fine-tune for best accuracy.
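The calculation in step 3 and the 4–5 pixel rule can be sketched together, using the 40 mm / 800 pixel example. The function names are illustrative.

```python
def object_space_resolution(fov_mm, pixels):
    """Millimetres of the object covered by one pixel (FOV / pixel count)."""
    return fov_mm / pixels


def smallest_reliable_feature(fov_mm, pixels, px_per_feature):
    """Smallest feature that still spans px_per_feature pixels."""
    return object_space_resolution(fov_mm, pixels) * px_per_feature


res = object_space_resolution(40, 800)          # the example from step 3
print(f"{res:.2f} mm/pixel")
for k in (4, 5):
    print(f"{k} px rule -> smallest feature {smallest_reliable_feature(40, 800, k):.2f} mm")
```

At 0.05 mm/pixel, the smallest feature that spans 4 pixels is 0.2 mm, or 0.25 mm under the stricter 5-pixel rule; if the inspection goal from step 1 is smaller than that, the FOV must shrink or the pixel count must grow.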

Note: Always validate the system by checking if it detects the smallest required defect with the needed accuracy.

Example Calculations

The following table shows how different machine vision tasks require different resolution settings and accuracy levels:

| Task Type | Typical Application | Field of View (FOV) | Smallest Feature to Detect | Required Object Space Resolution | Recommended Camera Resolution |
|---|---|---|---|---|---|
| Barcode Reading (1D) | Conveyor belt tracking | 100 mm | 0.5 mm | 0.1 mm/pixel | 1000 pixels (line scan) |
| Surface Inspection (2D) | Detecting scratches | 50 mm x 50 mm | 0.2 mm | 0.04 mm/pixel | 1250 x 1250 pixels |
| Dimensional Measurement (3D) | Automotive parts | 200 mm x 200 mm | 0.1 mm | 0.02 mm/pixel | 10,000 x 10,000 pixels |

For example, if a user needs to inspect a 50 mm wide part for defects as small as 0.2 mm, the calculation would be:

- Required object space resolution = 0.2 mm / 5 pixels = 0.04 mm/pixel

- Camera resolution needed = 50 mm / 0.04 mm/pixel = 1250 pixels

This ensures that each defect covers at least 5 pixels, improving detection accuracy.
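The rows of the example table can be reproduced with the same arithmetic. This sketch uses Python's `fractions.Fraction` with decimal strings so that values such as 0.2 / 5 are exact and the rounded-up pixel count is not disturbed by binary floating-point error; it assumes 5 pixels per feature, as in the worked example, and `required_pixels` is a hypothetical helper.

```python
import math
from fractions import Fraction


def required_pixels(fov_mm, feature_mm, px_per_feature=5):
    """Return (object-space resolution in mm/pixel, pixels needed across the FOV).

    Inputs are decimal strings so Fraction represents them exactly.
    """
    resolution = Fraction(feature_mm) / px_per_feature
    return resolution, math.ceil(Fraction(fov_mm) / resolution)


# FOV along one axis and smallest feature, copied from the example table.
rows = {
    "Barcode Reading (1D)": ("100", "0.5"),
    "Surface Inspection (2D)": ("50", "0.2"),
    "Dimensional Measurement (3D)": ("200", "0.1"),
}
for task, (fov, feature) in rows.items():
    resolution, pixels = required_pixels(fov, feature)
    print(f"{task}: {float(resolution)} mm/pixel, {pixels} pixels across")
```

The results match the table: 1000, 1250 and 10,000 pixels per axis. The last row shows how quickly a large FOV combined with a small feature pushes the requirement beyond a single common sensor.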

Remember: The lens must match the sensor and provide enough spatial detail. High-resolution cameras need high-quality lenses to avoid losing accuracy due to blur.

Selecting the right resolution, pixel size, and lens ensures the system achieves the required accuracy for each application. Always consider the balance between image space resolution, object space resolution, and spatial accuracy to optimize performance.

Common Misconceptions

Pixel Size vs. Resolution

Many beginners believe that larger pixels always create better images. This idea is not always true. Pixel size alone does not decide image quality. Other factors, such as pixel sensitivity, noise, and sensor design, play important roles. Larger pixels collect more light, which can help in low-light situations. However, smaller pixels can increase resolution and reveal finer details. Designers must balance pixel size with other needs, such as speed and application requirements.

Here is a table that explains some common misunderstandings:

| Misconception | Clarification | Additional Notes |
|---|---|---|
| Larger pixels always mean better image quality | Image quality depends on more than pixel size. Sensor design and noise matter too. | Larger pixels help in low light but do not always give better images. |
| Smaller pixels reduce image quality | Smaller pixels can improve resolution but may collect less light. | Good sensor design can reduce noise and improve performance. |
| Higher resolution always improves image quality | Higher resolution must match system needs. Too many pixels can slow down the system. | System goals and camera limits should guide choices. |

Note: Some sensors use both small and large pixels to handle bright and dark areas, improving dynamic range.

Higher Resolution Limits

People often think that higher resolution always leads to better results. In reality, higher resolution increases the number of pixels, which can slow down processing and require more storage. If the system does not need extra detail, higher resolution may lower performance. The camera, lens, and lighting must all work together. If one part cannot keep up, the whole system may suffer.

- Higher resolution can help find small defects.

- More pixels mean larger files and slower speeds.

- The lens and sensor size must match the camera resolution for best results.

Manufacturers often use regions of interest or faster processors to handle large images. Matching camera resolution to the smallest feature needed helps avoid wasted resources.
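A region of interest (ROI) limits processing to the part of the frame that matters. This sketch crops a placeholder 5 MP frame held as a list of `bytearray` rows; the ROI position and size are arbitrary, and `crop_roi` is a hypothetical helper. Many industrial cameras can also apply an ROI on the sensor itself, which can raise the frame rate as well.

```python
def crop_roi(image, top, left, height, width):
    """Return the rectangular sub-image starting at (top, left)."""
    return [row[left:left + width] for row in image[top:top + height]]


# Placeholder 2592 x 1944 8-bit frame: one bytearray per row, all zeros.
full = [bytearray(2592) for _ in range(1944)]
roi = crop_roi(full, top=800, left=1000, height=400, width=600)

full_px = len(full) * len(full[0])
roi_px = len(roi) * len(roi[0])
print(f"ROI keeps {roi_px:,} of {full_px:,} pixels ({100 * roi_px / full_px:.1f}%)")
```

A 600 x 400 ROI keeps under 5% of the pixels, so downstream analysis handles roughly twenty times less data while the full-resolution pixel size is preserved inside the region.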

Typical Mistakes

Beginners sometimes make mistakes when choosing cameras for machine vision:

- They focus only on pixel count and ignore lens quality.

- They forget to check if the lens matches the sensor size.

- They do not test the system with real samples before finalizing the setup.

- They overlook the need for proper lighting, which affects image clarity.

Tip: Always test the full system, not just the camera, to ensure reliable performance.

Understanding these misconceptions helps users make better choices and build more effective machine vision systems.

Understanding image pixel resolution, pixel size, and camera resolution helps users build better machine vision systems.

- Resolution defines the smallest detail the system can see.

- Object space resolution depends on sensor pixel size and lens magnification.

- Matching pixel size to magnification avoids blurry or missed details.

- The weakest part of the system limits overall performance.

Beginners can learn more through online tutorials, books, and community forums. Exploring both traditional and deep learning methods gives a strong foundation for future projects.

FAQ

What is the difference between pixel size and resolution?

Pixel size tells how big each pixel is on the sensor. Resolution shows how many pixels make up the image. Small pixels can capture more detail, but large pixels collect more light.

How does field of view affect image resolution?

A wide field of view spreads pixels over a larger area. This lowers the detail in each part of the image. A narrow field of view packs more pixels into a smaller space, which increases detail.

Why do machine vision systems need high resolution?

High resolution helps the system find small defects and measure objects more accurately. It improves quality control in factories. More pixels mean the camera can see finer details.

Can higher resolution slow down a machine vision system?

Yes. Higher resolution creates larger image files. The system needs more time and power to process these files. This can slow down inspection speed, especially on fast production lines.
