A graphics processing unit (GPU) is a specialized processor designed to handle complex graphics and image processing tasks. In a GPU-based machine vision system, it processes large amounts of visual data quickly. Many industries now use GPUs to power machine vision systems that require real-time decision-making. GPU acceleration allows fast and accurate analysis, which is essential in environments where speed and reliability matter most.

The table below highlights how different sectors benefit from GPU-based machine vision systems:

| Industry | Reported Benefits and Applications |
|---|---|
| Manufacturing | Improved productivity, defect detection, quality control, automation of inspection, cost reduction, remote monitoring |
| Healthcare | Enhanced diagnostic accuracy, medical imaging, diabetic retinopathy detection, remote patient monitoring, better care |
| Agriculture | Crop monitoring, improved productivity, automation of visual tasks |
| Transportation | Autonomous vehicle navigation, faster processes, improved safety |

With GPU acceleration, companies can reduce errors, improve operational efficiency, and enable smarter solutions across many fields.

Key Takeaways

- GPUs have thousands of small cores that work together to process many images and tasks at once, making them much faster than CPUs for machine vision.

- Parallel processing in GPUs enables real-time applications like object detection, facial recognition, and video analysis across industries such as manufacturing, healthcare, and transportation.

- Choosing the right GPU depends on factors like compute power, memory size, speed, power efficiency, and compatibility with AI software frameworks.

- GPUs outperform other hardware like FPGAs and VPUs in scalability and ease of programming but may use more power and cost more upfront.

- Integrating GPUs into machine vision systems improves accuracy, speed, and cost efficiency, helping industries automate inspections, enhance medical imaging, and enable autonomous vehicles.

What Is a GPU?

GPU Basics

A GPU, or graphics processing unit, is a specialized electronic circuit that speeds up graphics and image processing tasks. It performs rapid mathematical calculations, which helps computers display images and videos smoothly. Unlike a CPU, which manages many types of tasks, a GPU focuses on highly parallel work such as graphics and image processing.

GPUs come in two main types. Integrated GPUs are built into the same chip as the CPU and share system memory. Discrete GPUs are separate hardware units with their own memory and cooling systems. Both types help computers handle complex graphics rendering and image processing. The GPU converts 3D shapes into images that appear on screens. This process, called rendering, is essential for real-time 3D graphics applications.

Note: The term GPU refers to the processor itself, while a graphics card includes the GPU and other supporting parts.

The architecture of a GPU is different from a CPU. A CPU has a few powerful cores for handling single tasks. A GPU has thousands of smaller cores. These cores work together to process many tasks at once. This design makes the unit ideal for graphics rendering and image processing.

| Architectural Aspect | CPU Characteristics | GPU Characteristics |
|---|---|---|
| Core Design | Few, powerful | Thousands, smaller |
| Execution Model | Sequential | Parallel |
| Memory Hierarchy | Large caches | High bandwidth |
| Workload Suitability | General tasks | Graphics, parallel |

Graphics and Image Processing

GPUs play a key role in graphics rendering and image processing. The unit can process high-resolution images and videos much faster than a CPU. In graphics rendering, the GPU turns 3D models into 2D images for display. This process uses parallel processing, where many cores work on different parts of the image at the same time.

For image processing, the GPU handles tasks like filtering, color correction, and object detection. These tasks require the unit to process millions of pixels quickly. The GPU’s parallel design allows it to complete these jobs faster and more efficiently than a CPU. In some cases, a GPU can be up to 100 times faster than a CPU for graphics and image processing.
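To make the idea of per-pixel parallelism concrete, here is a minimal sketch in plain Python. It is not GPU code: the loop only stands in for what a GPU would do with one thread per pixel. The function names, the `gain` and `bias` parameters, and the sample image are illustrative assumptions.

```python
# Sketch: a brightness/contrast adjustment on a tiny grayscale image.
# Every output pixel depends only on its own input pixel, so a GPU could
# assign one thread per pixel; a plain loop stands in for that parallel map.

def adjust_pixel(value, gain=1.2, bias=10):
    """Scale and shift one 8-bit pixel, clamping to the valid 0..255 range."""
    return max(0, min(255, round(value * gain + bias)))

def adjust_image(image, gain=1.2, bias=10):
    """Apply adjust_pixel to every pixel; no pixel depends on any other."""
    return [[adjust_pixel(p, gain, bias) for p in row] for row in image]

# Hypothetical 2x3 grayscale image (values 0..255).
image = [
    [0, 50, 100],
    [150, 200, 250],
]
print(adjust_image(image))
```

Because no pixel needs the result of another, the work splits cleanly across as many cores as the hardware offers, which is why operations like color correction map so well to GPUs.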

GPUs also support real-time 3D graphics, which is important for video games, simulations, and machine vision. The unit’s ability to handle graphics rendering and image processing at high speed makes it essential for modern graphics applications.

GPU in Machine Vision

Parallel Processing Power

GPUs play a key role in machine vision systems by enabling parallel processing. Each GPU contains thousands of small cores that work together. This design allows the system to process many tasks at the same time. In machine vision, this means the GPU can process large volumes of image data quickly and efficiently.

The table below compares how CPUs and GPUs handle parallel tasks in machine vision:

| Feature | CPU Characteristics | GPU Characteristics |
|---|---|---|
| Core Architecture | Few powerful cores | Thousands of smaller cores optimized for parallelism |
| Processing Style | Sequential, suited for single-thread tasks | Parallel, suited for multi-threaded tasks |
| AI Workload Performance | Slower for large-scale deep learning | Optimized for high-speed AI computations |
| Memory Bandwidth | Limited, general-purpose | High bandwidth, efficient for large AI datasets |
| Power Efficiency | Higher power consumption per computation | More efficient due to parallel execution |
| Cost-Effectiveness for AI | Less cost-effective for large AI models | More cost-effective for AI training and inference |
| Scalability | Limited scalability | Easily scales with multi-GPU setups (NVLink, PCIe) |
| Software Optimization | General-purpose applications | Optimized for AI frameworks like TensorFlow, PyTorch |
| Precision Support | Primarily FP32 and FP64 | Supports FP16, INT8, and specialized Tensor Cores |

GPUs in a machine vision system use parallel processing to accelerate deep learning. For example, convolutional neural networks (CNNs) use many filters to extract features from images. The GPU can run these filters at the same time, which speeds up object recognition and facial recognition tasks. This parallel approach also helps with video analysis, where the GPU processes multiple frames at once.
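The filter idea can be sketched in a few lines of plain Python. This is only an illustration of why the work parallelizes, not how a GPU library implements it. The two edge filters and the 4x4 image are hand-written assumptions; a trained CNN learns its own filter values.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the sliding-window sum CNN layers use."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]

def apply_filters(image, filters):
    """Produce one feature map per filter; the maps do not depend on each other."""
    return {name: convolve2d(image, kernel) for name, kernel in filters.items()}

# Hypothetical hand-written filters (a real network would learn these).
FILTERS = {
    "vertical_edge": [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]],
    "horizontal_edge": [[-1, -1, -1], [0, 0, 0], [1, 1, 1]],
}

# A 4x4 image with a dark left half and a bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
feature_maps = apply_filters(image, FILTERS)
print(feature_maps)
```

Each filter, and each output position within a filter, is an independent sum. That independence is what lets a GPU compute all of them at once instead of looping.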

Tip: Parallel processing in GPUs allows real-time object detection and facial recognition, making them ideal for smart cameras and advanced image processing.

Some main advantages of using GPUs for parallel processing in machine vision include:

- Thousands of cores enable massive parallelism, which accelerates deep learning tasks such as object recognition.

- High memory bandwidth supports efficient handling of large datasets, reducing data transfer bottlenecks.

- Specialized AI accelerators like Tensor Cores optimize matrix multiplications, speeding up training and inference.

- Multi-GPU support allows scalable training of large models by distributing workloads.

- Support for mixed precision (FP16 and FP32) enables faster computation with minimal accuracy loss.

- Seamless integration with popular AI frameworks and libraries enhances development efficiency.

- Parallel execution of multiple data streams enables real-time processing for tasks such as object detection and video analysis.
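The mixed-precision point in the list above can be illustrated without a GPU. The sketch below uses Python's standard `struct` module to round numbers to half precision (FP16). It then compares two ways of summing: keeping the running total in FP16, and keeping FP16 inputs with a higher-precision accumulator. The helper names are assumptions for illustration, and Python's 64-bit float stands in for the FP32 accumulator a GPU would typically use.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def sum_fp16(values):
    """Accumulate entirely in FP16: the running total is rounded after every add."""
    total = 0.0
    for v in values:
        total = to_fp16(total + to_fp16(v))
    return total

def sum_mixed(values):
    """Mixed precision: FP16 inputs, but a higher-precision accumulator."""
    total = 0.0  # 64-bit here; stands in for an FP32 accumulator on a GPU
    for v in values:
        total += to_fp16(v)
    return total

values = [0.1] * 4096
print("FP16 value of 0.1:", to_fp16(0.1))
print("FP16 accumulator: ", sum_fp16(values))
print("Mixed accumulator:", sum_mixed(values))
```

The FP16-only total stops growing once each added value is smaller than half the gap between neighboring representable numbers, while the mixed version stays close to the true sum. This is the usual reason mixed-precision schemes keep accumulations in a wider format.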

Real-Time Vision Applications

A GPU-based machine vision system enables real-time vision applications across many industries. In manufacturing, smart cameras powered by GPUs inspect products on assembly lines. They detect defects and support quality assurance with high speed and accuracy. In healthcare, GPUs support medical imaging by reconstructing images quickly and reducing noise. This helps doctors make faster and more accurate diagnoses.

GPUs accelerate image and video processing by performing thousands of operations at once. This is critical for real-time machine vision tasks such as object detection, image classification, and video analysis. For example, in autonomous vehicles, the GPU processes data from multiple cameras to identify objects and people on the road. This allows the vehicle to react quickly to changes in its environment.

- Industrial automation uses GPUs for tasks like automotive weld inspection and EV battery foam analysis.

- Medical imaging benefits from real-time data handling from multiple cameras, improving diagnostic accuracy.

- Smart surveillance systems use GPUs for real-time video analysis, supporting facial recognition and object recognition in crowded places.

Note: GPU acceleration can improve operational efficiency by up to 30% in industrial settings by reducing latency and increasing throughput.

Many real-world applications rely on GPUs for advanced image processing. For example, NVIDIA’s GPU architecture enables real-time 4D imaging and dynamic visualization in healthcare. AI frameworks like MONAI and TensorRT help deploy advanced AI models for medical imaging, making clinical workflows more efficient. Cloud-based GPU infrastructure also supports rapid deployment of AI models across multiple locations.

Popular software frameworks such as PyTorch, TensorFlow, and OpenCV provide native GPU acceleration. These frameworks integrate with NVIDIA libraries like cuDNN and TensorRT, making it easier to build and deploy machine vision solutions. MATLAB also supports GPU acceleration, allowing users to generate high-performance code for vision models.
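As a small illustration of how a framework exposes GPU acceleration, the sketch below checks whether PyTorch can see a CUDA device and falls back to the CPU otherwise. It assumes PyTorch may or may not be installed, so the import is optional. `pick_device` and `describe` are hypothetical helper names.

```python
def pick_device():
    """Return "cuda" when PyTorch can see a CUDA GPU, otherwise "cpu"."""
    try:
        import torch  # optional dependency; assumed present in a real deployment
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"

def describe(device):
    """Human-readable summary of where vision models would run."""
    return f"Vision models will run on: {device}"

device = pick_device()
print(describe(device))
# With PyTorch installed, a model and its input tensors would then be moved
# with model.to(device) and tensor.to(device) before inference.
```

Keeping the device choice in one place lets the same script run on a development laptop without a GPU and on a GPU-equipped inspection PC.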

Comparing GPU Technology

GPU vs CPU

GPUs and CPUs both play important roles in machine vision, but they have different strengths. CPUs handle general tasks and manage the system. They use a few powerful cores to process instructions one after another. This design works well for tasks that need complex decision-making or low latency. GPUs, on the other hand, contain thousands of smaller cores. These cores work together to process many tasks at once. This parallel approach makes GPUs ideal for graphics rendering and image processing.

The table below shows how CPUs and GPUs compare in machine vision workloads:

| Aspect | CPU Characteristics | GPU Characteristics |
|---|---|---|
| Core Count | Few cores (2 to 64), optimized for low latency | Thousands of cores designed for massive parallelism |
| Processing Approach | Sequential, general-purpose computing | Parallel processing, specialized for repetitive tasks |
| Performance Strength | Handles complex decision-making and diverse tasks | Excels in data-intensive, parallelizable workloads |
| Typical Workloads | General computing, multi-threaded applications | Graphics rendering, machine learning, scientific computations |
| Suitability for Machine Vision | Suitable for tasks requiring sequential logic and low latency | Ideal for large-scale data throughput and parallel computations |
| Collaboration | Works with GPU to manage system and general tasks | Accelerates specialized parallel tasks |

CPUs and GPUs often work together, and machine vision systems benefit from this teamwork. The CPU manages the system and handles logic and control, while the GPU boosts throughput for graphics rendering and image processing. GPUs outperform CPUs in deep learning, graphics rendering, and repetitive image processing. CPUs remain important for tasks that cannot be split into many small parts.

GPU vs FPGA and VPU

Besides CPUs, other hardware options include FPGAs and VPUs. Each has unique features for machine vision. GPUs stand out for their high throughput and parallel processing. They handle graphics rendering, deep learning, and image processing with ease. FPGAs offer low latency and flexible hardware. They can be reprogrammed for specific tasks, making them useful for real-time image processing and custom pipelines. VPUs focus on embedded and mobile devices. They use less power and work well for lightweight vision inference tasks.

The table below compares these hardware types:

| Hardware | Processing Speed | Flexibility | Ease of Programming | Power Efficiency | Typical Use Case |
|---|---|---|---|---|---|
| GPU | Very high; thousands of cores enable fast deep learning inference and training. | Highly programmable with CUDA and popular frameworks. | Relatively easy; supported by mature tools, though at the cost of higher power draw and price. | High power consumption (e.g., 225W for RTX 2080). | Autonomous vehicles, high-performance AI tasks. |
| FPGA | Moderate to high with low and deterministic latency; suitable for real-time processing. | Highly flexible with hardware reconfiguration; supports parallel functions. | Programming is complex and specialized; tools can be expensive and proprietary. | Moderate power consumption (e.g., 60W for Intel Arria 10). | Machine vision cameras, frame grabbers, embedded systems requiring low latency. |
| VPU | Moderate; optimized for inference rather than training. | Less flexible; designed for embedded/mobile applications. | Easier programming with open ecosystems; supports various deep learning frameworks. | Very low power consumption (<1W for Intel Movidius Myriad 2). | Embedded/mobile devices, drones, handheld vision systems. |

- GPUs excel in graphics rendering and parallel processing. They are easy to program using popular frameworks.

- FPGAs provide low latency and flexible hardware for custom vision pipelines, but they require specialized skills.

- VPUs offer low power use and simple programming for vision tasks on edge devices.

Cost and scalability also matter when choosing hardware for machine vision. GPUs cost more but scale well with multi-GPU setups. FPGAs have lower power use and cost less to run, but they do not scale as easily. VPUs work best for small, embedded vision systems.

| Hardware | Cost Considerations | Scalability Considerations | Additional Notes |
|---|---|---|---|
| GPU | Higher power consumption; widely available; ease of programming reduces development cost | Good scalability via multi-GPU setups; specialized ML features improving efficiency | Increasingly specialized for ML; supports low-precision arithmetic and multi-GPU communication |
| CPU | Generally lower initial cost; familiar programming model | Limited scalability for ML compared to GPUs and accelerators | Not specialized for ML; less efficient for parallel ML workloads |
| FPGA | Cheaper than ASICs; programmable and adjustable designs reduce upfront cost; programming complexity can increase development cost | Highly parallel but typically slower; power efficient; suitable for smaller scale or specialized applications | Allows custom data flow; lower power usage; used in real-time AI with ultra-low latency |
| VPU | Emerging specialized hardware for edge vision; emphasizes low power consumption | Designed for edge devices, so scalability is limited compared to data center GPUs/TPUs | Focused on efficiency for vision tasks on edge; newer technology with growing ecosystem |

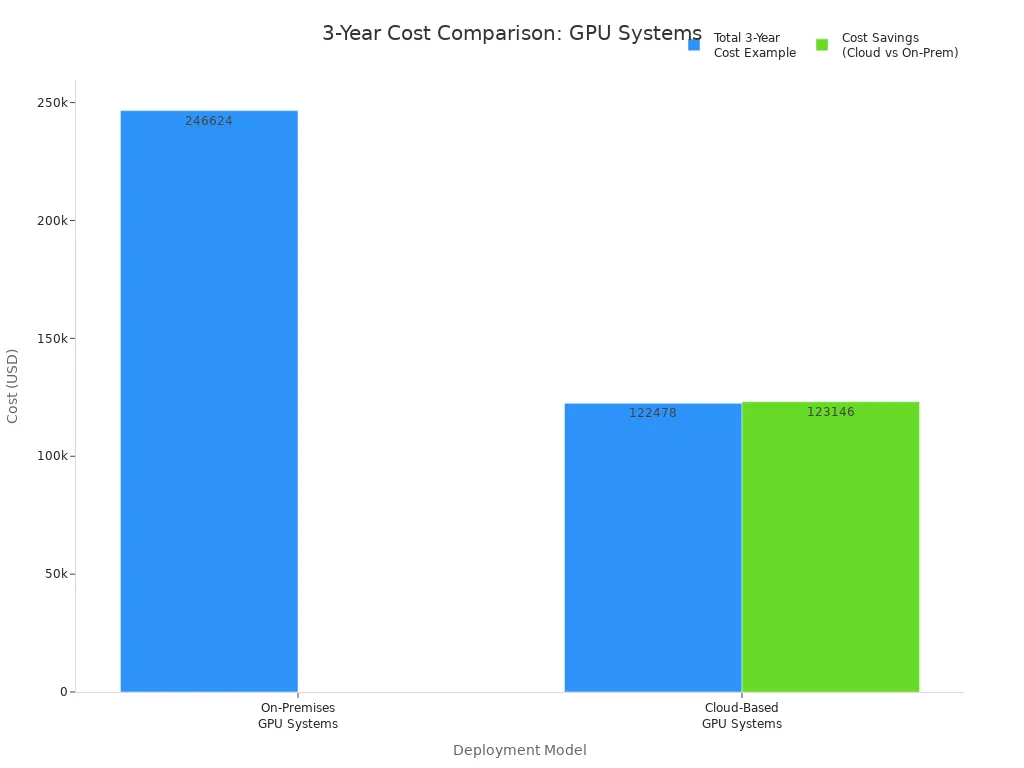

Cloud-based GPU technology can lower costs and improve scalability for graphics rendering and machine vision. The chart below shows how cloud GPU systems save money compared to on-premises solutions:

Cloud GPU systems reduce upfront costs, speed up deployment, and offer over 50% savings in three years.

Choosing a GPU for Machine Vision

Performance Factors

Selecting the right GPU for a machine vision system requires careful evaluation of several performance factors. Each factor affects how well the system can process images, run deep learning models, and handle real-time tasks. The table below summarizes the most important performance factors and their impact on machine vision applications:

| Performance Factor | Description | Impact on Machine Vision GPU Selection |
|---|---|---|
| Compute Power | Number of CUDA cores and Tensor cores enabling parallel processing and optimized matrix operations | Determines throughput and speed for deep learning inference and training |
| Memory Bandwidth | Rate of data transfer between GPU memory and cores | Affects speed of data access, critical for handling large datasets |
| Memory Capacity (VRAM) | Amount of onboard memory to store models and datasets | Enables processing of larger models without frequent data swapping |
| Power Efficiency | Performance per watt, influenced by memory type (e.g., HBM vs GDDR5) | Important for energy consumption and operational cost in deployments |
| Latency | Time delay between task initiation and response | Lower latency is crucial for real-time machine vision applications |
| Compatibility | Support for ML frameworks and software ecosystem | Ensures smooth integration and optimized performance |
| Thermal Design Power (TDP) | Power requirement and heat dissipation capability | Influences cooling needs and system stability |
| Cost vs Performance | Balance between GPU price and computational capabilities | Affects budget decisions and scalability |
| Scalability | Support for multi-GPU setups and future-proofing | Enables handling larger workloads and evolving model complexity |

When choosing a GPU, users should consider these factors:

- Compute power, such as CUDA and Tensor cores, drives parallel processing and speeds up deep learning tasks.

- Memory capacity (VRAM) determines the size of models and datasets the system can handle.

- Memory bandwidth affects how quickly the GPU can access and process data.

- Power efficiency impacts energy use and operational costs, especially in large or embedded systems.

- Compatibility with machine learning frameworks ensures smooth integration and optimized performance.

- Latency must remain low for real-time machine vision tasks.

- Cost versus performance helps balance budget with system needs.

- Scalability allows for future expansion with multi-GPU setups.

The most widely used GPU models in machine vision today include NVIDIA’s L4, GeForce RTX series, RTX 6000 Ada, L40S, A100, V100, H100, and the Blackwell family. These models offer a range of VRAM sizes, memory bandwidths, and support for different precision formats. Newer GPUs like the H100 deliver much faster training and inference speeds than older models, making them popular for demanding machine vision tasks.

Tip: Always match the GPU’s compute power and memory to the complexity of the vision models and the size of the datasets.
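A rough way to apply this tip is to estimate how much memory the model weights and one batch of input images need before picking a card. The sketch below is back-of-the-envelope arithmetic only. The 25-million-parameter model and the batch of 32 full-HD RGB frames are hypothetical, and real workloads also need room for intermediate activations and framework overhead, which this estimate ignores.

```python
BYTES_PER_VALUE = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_bytes(num_params, dtype="fp32"):
    """Memory needed to hold the model weights alone."""
    return num_params * BYTES_PER_VALUE[dtype]

def batch_bytes(batch, channels, height, width, dtype="fp32"):
    """Memory needed to hold one batch of input images."""
    return batch * channels * height * width * BYTES_PER_VALUE[dtype]

def to_mib(num_bytes):
    """Convert bytes to mebibytes for easier comparison with VRAM sizes."""
    return num_bytes / (1024 ** 2)

# Hypothetical workload: 25M-parameter model, 32 RGB frames at 1920x1080.
for dtype in ("fp32", "fp16"):
    w = weight_bytes(25_000_000, dtype)
    b = batch_bytes(32, 3, 1080, 1920, dtype)
    print(f"{dtype}: weights {to_mib(w):.1f} MiB, input batch {to_mib(b):.1f} MiB")
```

Halving the precision halves both figures, which is one reason FP16 and INT8 support matters when VRAM is the limiting factor.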

Power and Integration

Power consumption and integration play a major role in deploying GPUs for machine vision, especially in embedded and industrial environments. Energy-efficient GPUs enable high-performance vision processing while keeping power use low. For example, the NVIDIA Jetson Orin platform powers autonomous underwater vehicles and smart tractors. These systems dedicate a portion of their energy budget to vision tasks, allowing for longer operation and less heat buildup. Lower power draw also means less need for cooling, which is important in compact or battery-powered devices.

When integrating GPUs into machine vision systems, users often face several challenges:

- Processing speed must meet real-time requirements. Optimization is needed to reduce latency.

- Hardware limitations, such as low VRAM or limited compute resources, can slow down performance.

- Balancing accuracy and speed may require model simplification or quantization.

- High computational demands can increase costs and require advanced cooling solutions.

- Data variability and noise need robust preprocessing and adaptable models.

- Energy efficiency is crucial for battery-powered or embedded devices.

- Scalability becomes an issue as system complexity grows.
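Quantization, mentioned in the list above as one way to trade accuracy for speed, can be sketched as follows. This is a minimal symmetric INT8 scheme with a single scale for the whole list. The sample weights are hypothetical, and production toolchains use more elaborate calibration.

```python
def quantize_int8(values):
    """Symmetric quantization: map floats to integers in the range -127..127."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]

# Hypothetical model weights.
weights = [-1.27, -0.5, 0.0, 0.25, 1.27]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("quantized:", q)
print("largest round-trip error:", max_error)
```

Each weight now fits in one byte instead of four, and the round-trip error stays within half a quantization step. Whether that error is acceptable depends on the model and has to be checked against real inspection data.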

To address these challenges, users can:

- Use asynchronous data transfer to reduce delays.

- Adjust batch sizes to balance memory use and throughput.

- Apply mixed precision training to save memory and speed up computation.

- Monitor GPU usage with tools like the NVIDIA System Management Interface (`nvidia-smi`).
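The monitoring step can be scripted. The sketch below calls `nvidia-smi` with its CSV query mode and parses utilization and memory figures. It assumes an NVIDIA driver is installed and returns an empty list when the tool is not on the PATH. The function names and dictionary keys are illustrative choices.

```python
import shutil
import subprocess

QUERY = "utilization.gpu,memory.used,memory.total"

def parse_gpu_stats(csv_text):
    """Parse `--format=csv,noheader,nounits` output, one GPU per line."""
    stats = []
    for line in csv_text.strip().splitlines():
        fields = [f.strip() for f in line.split(",")]
        if len(fields) != 3:
            continue
        try:
            util, used, total = (int(f) for f in fields)
        except ValueError:
            continue  # skip lines where a field is not a plain number
        stats.append({"util_pct": util, "mem_used_mib": used, "mem_total_mib": total})
    return stats

def read_gpu_stats():
    """Return stats for each visible GPU, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    return parse_gpu_stats(result.stdout) if result.returncode == 0 else []

print(read_gpu_stats())
```

Logging these numbers while the vision pipeline runs shows whether the GPU is actually the bottleneck, or whether it sits idle waiting for data. That in turn points to which of the remedies above is worth trying first.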

Note: Integrating vision and motion control on the same platform with a real-time operating system can reduce latency and improve system reliability.

Matching GPU technology to specific machine vision needs requires understanding the application. For real-time inspection on a factory line, a GPU with high compute power and low latency works best. For embedded systems like drones or mobile robots, energy-efficient GPUs with moderate compute power and low heat output are ideal. In large-scale AI training or cloud-based deployments, high-end GPUs with large VRAM and multi-GPU support provide the best scalability.
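For the real-time inspection case, a simple latency budget helps decide whether a given GPU is fast enough. The sketch below compares the sum of per-frame stage times with the time available at a target frame rate. The stage names and timings are hypothetical and would come from profiling the actual pipeline.

```python
def frame_budget_ms(fps):
    """Time available to process one frame at the given frame rate."""
    return 1000.0 / fps

def fits_budget(stage_ms, fps):
    """Return (fits, total_ms) for a pipeline described as {stage: milliseconds}."""
    total = sum(stage_ms.values())
    return total <= frame_budget_ms(fps), total

# Hypothetical per-frame timings in milliseconds, measured by profiling.
stages = {
    "capture": 2.0,
    "host_to_gpu_copy": 1.5,
    "inference": 9.0,
    "postprocess": 2.5,
}

for fps in (60, 120):
    ok, total = fits_budget(stages, fps)
    print(f"{fps} fps: budget {frame_budget_ms(fps):.2f} ms, "
          f"pipeline {total:.1f} ms, fits={ok}")
```

If the pipeline does not fit, the options are the ones discussed above: a faster GPU, a smaller or quantized model, or overlapping data transfer with computation.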

Recent trends show that hybrid architectures, combining CPUs, GPUs, and neural processing units, are becoming more common. These systems bring AI processing closer to the edge, reducing latency and improving real-time performance. Major industry players continue to develop new GPU technology tailored for machine vision, expanding its use across industries like manufacturing, healthcare, and transportation.

Real-World GPU Machine Vision Systems

Industrial Automation

Factories now use industrial GPU-accelerated PCs and smart cameras to improve production. These systems use GPUs to inspect products and find defects. In automotive manufacturing, GPU-powered machine vision systems check welds and spot problems quickly. Reported results include a 30% increase in operational efficiency and faster defect identification. On electric vehicle battery lines, GPU-based analysis of foam helps reduce downtime by processing large datasets in real time. Multi-sensor floor panel analysis uses smart vision accelerators to boost output speed by two to three times.

| Application Area | Real-World Example | Reported Performance Improvement |
|---|---|---|
| Defect Detection & Quality Assurance | Automotive manufacturing weld inspection | 30% increase in operational efficiency; rapid defect identification |
| Assembly Line Monitoring | EV battery foam analysis | Faster processing of large datasets in real time, reducing downtime |
| Complex Inspection Tasks | Multi-sensor floor panel analysis | Output speed boosted by 2-3 times using smart vision accelerators |

Factories must also consider the environment. GPU-powered machine vision systems use a lot of electricity. Training a large model can use as much energy as 100 homes in a year. Data centers that run these systems need water for cooling and often rely on fossil fuels. Companies should plan for energy use and look for ways to lower their carbon footprint.

Medical Imaging

Hospitals and clinics rely on GPUs to process medical images. GPUs help doctors see 3D scans from MRI and CT machines. Their parallel design allows thousands of tasks to run at once, which speeds up 3D image rendering. Doctors can view results quickly and make faster decisions. GPUs also power AI tools that help with object detection and segmentation in scans. These tools find small problems that might be missed by the human eye.

- GPUs improve real-time visualization and support high-resolution imaging.

- AI hardware in GPUs makes diagnostics faster and more accurate.

- GPU-based segmentation and registration help doctors plan treatments.

- GPU acceleration leads to faster diagnoses and better patient care.

Medical imaging systems must follow strict rules. Developers must test GPU-powered AI tools with real patient data. They must show that the system works safely and accurately. These rules help protect patients but can slow down how fast new GPU technology reaches hospitals.

Autonomous Vehicles

Autonomous vehicles depend on GPUs to understand the world around them. GPUs handle data from cameras, LiDAR, and radar at the same time. This parallel processing lets the vehicle see objects, read road signs, and track traffic patterns in real time. GPU computing platforms, like NVIDIA’s CUDA, speed up deep learning algorithms for perception and decision-making. The vehicle can react quickly to changes and avoid obstacles.

GPUs allow the car to build a 3D map of its surroundings. The system uses them to process sensor data and make driving decisions. Fast GPU processing helps keep passengers safe and helps the vehicle follow traffic laws.

GPUs play a key role in making autonomous vehicles possible. They enable real-time perception, object detection, and navigation in complex environments.

GPUs bring major benefits to machine vision systems. The table below shows how GPU technology supports real-time, accurate, and cost-effective solutions:

| Advantage Category | Description |
|---|---|
| Parallel Architecture | GPUs with thousands of cores accelerate deep learning and high-performance tasks. |
| On-device Processing | GPUs enable real-time decisions without outside computing. |
| Integration with Cameras | High-speed cameras and GPUs work together for complex vision tasks. |
| Latency Reduction | GPUs process data locally, lowering response times. |
| Scalability and Flexibility | GPU systems adapt to drones, vehicles, and IoT devices. |
| Cost Efficiency | On-device GPUs reduce cloud use and lower costs. |
| Software Optimization | GPU-optimized algorithms improve accuracy and cut errors. |

Organizations can take these steps to get started with GPU-powered machine vision:

- Use cloud platforms with GPU acceleration for easy scaling.

- Choose GPU hardware that matches the workload size.

- Optimize GPU algorithms for energy savings and better performance.

GPUs will keep evolving. Smaller, smarter, and more efficient GPUs will power the next generation of smart cameras, vehicles, and edge devices.

FAQ

What makes a GPU better than a CPU for machine vision?

GPUs have thousands of small cores. These cores work together to process many images at once. CPUs have fewer cores and handle tasks one by one. This makes GPUs much faster for image and video tasks.

Can a GPU help with real-time video analysis?

Yes. A GPU can process many video frames at the same time. This helps cameras and computers spot objects or people quickly. Real-time analysis becomes possible in factories, hospitals, and cars.

How does GPU memory affect machine vision tasks?

More GPU memory lets the system handle bigger images and larger models. High memory helps avoid slowdowns when working with lots of data. It also supports advanced AI tasks.

Are GPUs hard to add to existing systems?

Most modern systems support GPUs. Many GPUs fit into standard slots on computers. Some software may need updates to use the GPU. Many popular machine vision tools already support GPU acceleration.
