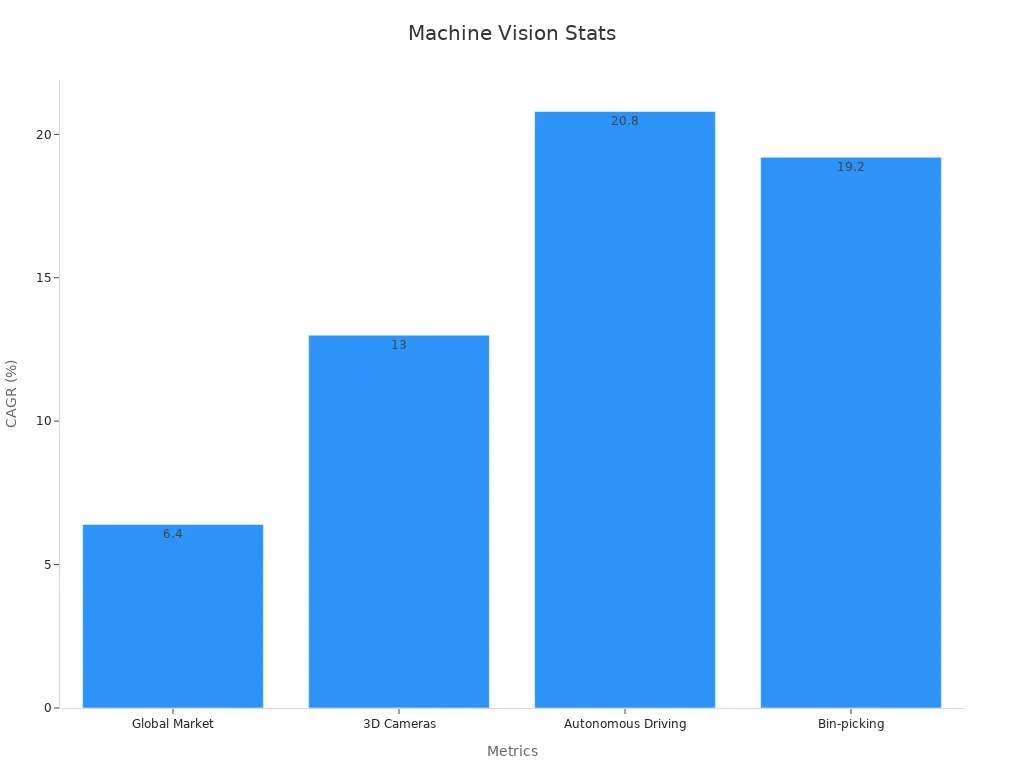

An ANN machine vision system uses artificial neural networks (ANNs) to help computers see and understand images. These systems play a big role in industry, making tasks like inspection and automation faster and more accurate. For example, neural networks have been reported to identify product defects with up to 99.7% accuracy and to work as much as 300 times faster than human inspectors.

Machine vision continues to grow, with new tools making it easier for anyone to start exploring this technology.

Key Takeaways

- ANN machine vision systems use neural networks to help computers see and understand images quickly and accurately, improving tasks like defect detection and quality control.

- These systems combine cameras, sensors, and AI software to automate inspections and processes in industries such as manufacturing, healthcare, and agriculture.

- Neural networks learn from many images to recognize patterns and objects, making machine vision faster and more reliable than older methods.

- Proper lighting and camera setup are essential for good machine vision results, and smart cameras can adapt to changing conditions to improve accuracy.

- Beginners can start learning machine vision using free tools, open-source datasets, and pretrained models, following simple steps to build and improve their own projects.

ANN Machine Vision System Overview

What Is Machine Vision?

Machine vision gives computers the ability to see and understand the world through images. In modern industry, a machine vision system uses cameras, sensors, and software to capture and process visual information. These systems help factories and businesses automate tasks that once needed human eyes. For example, in manufacturing, machine vision checks products for defects, counts items, and guides robots on assembly lines.

Machine vision is used in many fields, such as automotive, electronics, food processing, and biopharmaceuticals. It helps with inspection, quality control, and process automation. In the oil and gas industry, machine vision inspects pipelines and monitors equipment for safety.

A typical machine vision system works in several steps:

- Cameras or optical sensors capture images of objects or scenes.

- Software processes these images to extract useful information.

- The system makes decisions, such as sorting items or detecting errors.
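
The three steps above can be sketched in a few lines of plain Python. This is only a toy illustration: the 4x4 "image", the function names, and the thresholds are all made up for the example, and a real system would read frames from a camera and use far richer processing.

```python
def capture_image():
    """Step 1 (capture): return a hypothetical 4x4 grayscale frame (0-255)."""
    return [
        [200, 210, 205, 198],
        [202,  40,  35, 201],
        [199,  38, 207, 203],
        [204, 206, 200, 197],
    ]

def extract_dark_fraction(image, dark_threshold=100):
    """Step 2 (process): fraction of pixels darker than the threshold."""
    pixels = [p for row in image for p in row]
    dark = sum(1 for p in pixels if p < dark_threshold)
    return dark / len(pixels)

def decide(dark_fraction, max_allowed=0.1):
    """Step 3 (decide): reject the part if too much of it is dark."""
    return "reject" if dark_fraction > max_allowed else "accept"

frame = capture_image()
fraction = extract_dark_fraction(frame)
print(fraction, decide(fraction))
```

Here three of the sixteen pixels are dark, so the toy part is rejected. Neural networks replace the hand-written rule in step 2 with features learned from examples.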

Some advanced systems, like the Cytek® Amnis® ImageStream®X Mk II, can analyze millions of cells using up to 12 image channels and measure dozens of features per channel. These systems combine high-quality cameras, sensors, and powerful software to perform detailed image analysis. In agriculture, drones with cameras scan fields to spot crop problems early, helping farmers improve yields.

Machine vision applications have become more powerful with the rise of artificial intelligence (AI). Popular software libraries, such as OpenCV and TensorFlow, allow developers to build custom solutions for healthcare, retail, and security. Integration with IoT platforms enables real-time image processing, supporting smart cities and industrial automation.

Role of Artificial Neural Networks

An ANN machine vision system uses a neural network to help computers interpret images more like humans do. A neural network is a computer model inspired by the brain. It has many simple units, called neurons, connected together. Each neuron processes information and passes it to others, allowing the system to learn patterns and make decisions.

The structure of a neural network includes layers of neurons. Each connection has a weight, which changes as the system learns. Activation functions decide if a neuron should send its signal forward. This setup allows the network to recognize complex patterns, such as shapes, letters, or even human poses.
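
A single neuron is small enough to write out by hand. The sketch below assumes three made-up pixel values and hand-picked weights (in a real network, training would set them) and shows how the weighted sum passes through two common activation functions, ReLU and sigmoid.

```python
import math

def relu(z):
    """Pass positive signals through unchanged and block negative ones."""
    return max(0.0, z)

def sigmoid(z):
    """Squash any signal into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

pixels = [0.5, 0.2, 0.9]      # three normalized pixel values (made up)
weights = [0.4, -0.6, 0.3]    # illustrative values, normally learned
bias = -0.1

print(neuron(pixels, weights, bias, relu))
print(neuron(pixels, weights, bias, sigmoid))
```

The same inputs give different outputs depending on the activation, which is why the choice of activation function is part of the network design.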

- Markerless human motion tracking uses neural networks for pattern recognition in dynamic environments.

- Multi-view pictorial structures help estimate 3D human poses, showing how neural networks handle complex visual analysis.

- These methods are important in both industrial and biomedical fields, where accurate image interpretation is critical.

Machine vision technology collects images quickly, but understanding those images is the real challenge. Neural networks solve this by processing many parts of an image at once. This parallel processing makes them much faster than older, serial methods. For example, in medical imaging, neural networks can segment brain tumors from MRI scans, detect tuberculosis in chest X-rays, and find bone fractures with high accuracy. These tasks once took much longer and were less precise with traditional rule-based systems.

| Study | Key Findings |
|---|---|
| Rumelhart, Hinton, and Williams (1986) | Popularized backpropagation for training multilayer neural networks. |
| Hornik (1991) | Showed that multilayer feedforward networks are universal approximators. |
| Jain, Duin, and Mao (2000) | Reviewed statistical pattern recognition methods, including neural network approaches. |
| Recknagel (2006) | Proved machine learning can find visual patterns efficiently. |
| Zuur, Ieno, and Elphick (2010) | Demonstrated neural networks handle large visual datasets well. |

These studies show that integrating neural networks with machine vision systems leads to better accuracy and reliability. In manufacturing, this means faster and more precise quality checks. In healthcare, it means quicker and more accurate diagnoses. Performance metrics like accuracy, precision, and recall confirm these benefits. For example, neural networks often achieve higher accuracy than older methods, making them a key part of modern computer vision.

Machine vision and computer vision are closely linked. Machine vision focuses on industrial tasks, while computer vision covers a wider range of applications, including robotics and self-driving cars. Both fields rely on artificial intelligence (AI) to process and understand images.

An ANN machine vision system combines the strengths of machine vision hardware and neural network software. This combination allows industries to automate complex tasks, improve safety, and boost efficiency. As technology advances, these systems will become even more important in manufacturing and other fields.

Neural Network Architecture

Structure and Layers

A neural network in machine vision uses a layered structure to process images. Each layer has a special job. The input layer receives image data, such as pixel values. Hidden layers follow, where most of the machine learning happens. These layers use neurons, which are small computing units. Each neuron connects to others through weights and biases. The output layer gives the final result, such as a label for image classification.

| Component | Role in Neural Network Architecture |
|---|---|
| Input Layer | Initial layer that receives input data features. |
| Hidden Layers | Intermediate layers that transform input data through weighted connections and computations. |
| Neurons (Nodes) | Computing units that process inputs using weights, biases, and activation functions. |
| Weights and Biases | Parameters that adjust the strength and threshold of neuron activations during training. |
| Activation Functions | Introduce non-linearity allowing the network to learn complex patterns (e.g., ReLU, sigmoid). |
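
To see how these components fit together, here is a minimal sketch of a forward pass through one hidden layer and one output layer. Everything specific in it is an assumption for illustration: the four-pixel input, the hand-picked weights and biases, and the two labels "ok" and "defect".

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: each row of weights feeds one neuron."""
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    peak = max(values)                       # subtract for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def forward(pixels):
    # Hypothetical parameters; training would normally set these.
    hidden_w = [[0.5, -0.2, 0.1, 0.4],
                [-0.3, 0.8, 0.2, -0.5],
                [0.1, 0.1, -0.6, 0.3]]
    hidden_b = [0.0, 0.1, -0.1]
    output_w = [[0.7, -0.4, 0.2],
                [-0.6, 0.9, 0.3]]
    output_b = [0.05, -0.05]
    hidden = relu(dense(pixels, hidden_w, hidden_b))    # hidden layer
    return softmax(dense(hidden, output_w, output_b))   # output layer

probs = forward([0.9, 0.1, 0.4, 0.7])   # input layer: four pixel values
labels = ["ok", "defect"]
print(labels[probs.index(max(probs))], probs)
```

Real vision networks follow the same pattern with many more layers and millions of parameters.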

Modern deep learning models use special layers to improve performance. Convolutional layers help the neural network find patterns in images, like edges or shapes. Pooling layers reduce the size of the data, making the system faster. Dropout layers randomly turn off some neurons during training. This helps prevent overfitting and makes the model better at handling new images. Residual connections, like those in ResNet, allow very deep networks to learn without losing important information. Inception modules combine filters of different sizes, helping the network see both small and large details. U-Net and 3D CNNs help with tasks like medical image segmentation and video analysis.
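
Convolution and pooling are easier to picture on a tiny example. The sketch below uses plain Python lists, a made-up 5x5 image with a dark left side and a bright right side, and a hand-written edge kernel. In a trained network the kernel values would be learned rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [sum(image[r + i][c + j] * kernel[i][j]
             for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]

def max_pool2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 block."""
    return [
        [max(feature_map[r][c], feature_map[r][c + 1],
             feature_map[r + 1][c], feature_map[r + 1][c + 1])
         for c in range(0, len(feature_map[0]) - 1, 2)]
        for r in range(0, len(feature_map) - 1, 2)
    ]

# 5x5 image: two dark columns on the left, three bright columns on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Kernel that responds to a dark-to-bright vertical edge.
kernel = [[-1, 1],
          [-1, 1]]

edges = convolve2d(image, kernel)   # 4x4 feature map
pooled = max_pool2x2(edges)         # 2x2 summary
print(edges)
print(pooled)
```

The feature map lights up only where the edge is, and pooling keeps that signal while shrinking the data from 4x4 to 2x2.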

Learning and Training

Machine learning lets a neural network learn from data instead of following fixed rules. During training, the system shows the network many labeled images. The network makes predictions and compares them to the correct answers. It then adjusts its weights and biases to improve accuracy. This process repeats many times, helping the network get better at tasks like image classification.
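
The predict, compare, adjust loop can be shown with the smallest possible "network": a single sigmoid neuron trained by gradient descent. This is a sketch under simple assumptions. The six samples, their two features (imagine mean brightness and edge density), the learning rate, and the epoch count are all invented for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(features, weights, bias):
    return sigmoid(sum(x * w for x, w in zip(features, weights)) + bias)

def mean_loss(samples, labels, weights, bias):
    """Average binary cross-entropy over the training set."""
    total = 0.0
    for x, y in zip(samples, labels):
        p = predict(x, weights, bias)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(samples)

def train(samples, labels, learning_rate=1.0, epochs=2000):
    weights, bias = [0.0] * len(samples[0]), 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(weights), 0.0
        for x, y in zip(samples, labels):
            error = predict(x, weights, bias) - y   # prediction vs. correct answer
            grad_w = [g + error * xi for g, xi in zip(grad_w, x)]
            grad_b += error
        # Nudge the parameters against the gradient to reduce the error.
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias

# Hypothetical labeled data: 0 = good part, 1 = defective part.
samples = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.3), (0.8, 0.9), (0.9, 0.7), (0.7, 0.8)]
labels = [0, 0, 0, 1, 1, 1]

initial_loss = mean_loss(samples, labels, [0.0, 0.0], 0.0)
weights, bias = train(samples, labels)
final_loss = mean_loss(samples, labels, weights, bias)
print(initial_loss, final_loss)
```

Deep networks use the same idea, with backpropagation computing the gradients for every layer.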

Deep learning uses techniques like data augmentation to create more training examples. This helps the neural network learn to handle different lighting, angles, or noise. Regularization methods, such as dropout and weight decay, stop the network from memorizing the training data. Instead, the network learns general patterns that work on new images. Layer normalization keeps the learning process stable, while attention mechanisms help the network focus on important parts of the image.
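
Data augmentation can be as simple as flipping an image or changing its brightness. The sketch below works on a tiny grayscale image stored as nested lists. The function names and the brightness offsets are assumptions for illustration, and libraries such as TensorFlow provide their own, more complete augmentation tools.

```python
def flip_horizontal(image):
    """Mirror each row, as if the part were viewed from the other side."""
    return [row[::-1] for row in image]

def adjust_brightness(image, delta):
    """Add delta to every pixel and clamp to the valid 0-255 range."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]

def augment(image):
    """Return the original image plus three illustrative variants."""
    return [
        image,
        flip_horizontal(image),
        adjust_brightness(image, 40),    # brighter lighting
        adjust_brightness(image, -40),   # dimmer lighting
    ]

image = [[10, 120],
         [230, 250]]
for variant in augment(image):
    print(variant)
```

One labeled image becomes four training examples, all sharing the original label.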

Researchers test deep learning models using metrics like sensitivity, specificity, and AUC. They use cross-validation to check if the model works well on different sets of data. Studies show that training choices, such as optimizer type and learning rate, can change how well a neural network performs. Multilayer feed-forward networks are common in machine learning for pixel classification. These networks pass information forward through several layers, making them powerful tools for deep learning in machine vision.
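
The metrics named above are straightforward to compute by hand. The following sketch assumes binary labels (1 = defect present), a made-up list of model scores, and a 0.5 decision threshold. The strided fold split is one simple way to set up cross-validation, not the only one.

```python
def sensitivity_specificity(labels, predictions):
    """Sensitivity = share of positives found; specificity = share of negatives cleared."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

def auc(labels, scores):
    """Chance that a random positive outscores a random negative (ties count half)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))

def k_fold_indices(n_samples, k):
    """Yield (train, validation) index lists for k roughly equal folds."""
    indices = list(range(n_samples))
    for fold in range(k):
        validation = indices[fold::k]
        train = [i for i in indices if i not in validation]
        yield train, validation

labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.1, 0.2, 0.7]
predictions = [1 if s >= 0.5 else 0 for s in scores]

print(sensitivity_specificity(labels, predictions))
print(auc(labels, scores))
print(list(k_fold_indices(len(labels), 4)))
```

Sensitivity and specificity depend on the chosen threshold, while AUC summarizes how well the scores rank positives above negatives across all thresholds.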

Image Processing in Machine Vision

Pattern and Object Recognition

Machine vision systems use deep learning to recognize patterns and objects in images. These systems help factories and other industries find and sort items quickly. Artificial neural networks can learn to spot shapes, colors, and even small details that people might miss. Deep learning models, such as RetinaNet, are widely used for object recognition tasks. On the MS COCO dataset, RetinaNet is scored with mean Average Precision (mAP) and mean Average Recall (mAR), which are also reported separately for small (S), medium (M), and large (L) objects. These scores show how well the system finds and labels objects of different sizes.

| Model | mAP | mAR | AP_S | AP_M | AP_L | AR_S | AR_M | AR_L |
|---|---|---|---|---|---|---|---|---|
| RetinaNet ANN | 0.319 | 0.497 | 0.102 | 0.347 | 0.505 | 0.273 | 0.543 | 0.662 |
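
Precision and recall scores like these depend on deciding whether a predicted box matches a real object. That decision is usually based on intersection over union (IoU). The sketch below assumes boxes in `(x_min, y_min, x_max, y_max)` form and a 0.5 threshold. COCO-style evaluation typically averages results over several IoU thresholds, which this example does not attempt.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0

def is_true_positive(predicted, truth, threshold=0.5):
    """Count a detection as correct when it overlaps the real object enough."""
    return iou(predicted, truth) >= threshold

truth = (0, 0, 10, 10)        # hypothetical labeled object
predicted = (1, 1, 10, 10)    # hypothetical model output
print(iou(predicted, truth), is_true_positive(predicted, truth))
```

Small objects are harder to match because a shift of a few pixels removes a large share of the overlap, which is one reason the small-object scores in the table are lower.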

Machine vision helps with tasks like sorting packages, checking labels, and guiding robots. In manufacturing, neural networks support quality inspection by finding missing parts or wrong colors. These systems improve inspection reliability and reduce errors. Deep learning allows machine vision to handle complex scenes and many object types.

Machine vision with deep learning can process thousands of images every hour, making production quality checks faster and more accurate.

Defect Detection and Quality Control

Machine vision systems use machine learning for defect detection and quality control. These systems find problems like cracks, spots, or missing pieces in products. In one case, a camera-based machine vision system with machine learning detected porosity defects at a much higher rate than a traditional photodiode system.

| Porosity Threshold | Detection Rate (Camera + ML) | Detection Rate (Photodiode) |
|---|---|---|
| 0.5% | 43% | 20% |
| 5% | 100% | 85% |

This table shows that machine vision with machine learning detects more defects, even at low thresholds. Deep learning models also help with anomaly detection, spotting rare or unusual problems that older systems might miss. Machine vision supports quality control in food, electronics, and pharmaceuticals. These systems check bottles, inspect food, and monitor printer quality. Compared to rule-based systems, deep learning and machine learning adapt to new types of defects and changes in production. This flexibility improves quality and keeps products safe.

Industrial Applications and Challenges

Automation and Computer Vision

Industries use machine vision and computer vision to improve industrial automation. These technologies help factories inspect products, guide robots, and sort items quickly. In manufacturing, machine vision applications have led to a 21% increase in productivity and a 25% reduction in scrap rates. Electronics companies have seen a 30% decrease in missed defects and a 40% reduction in inspection cycle times. Semiconductor production now achieves 95% accuracy in defect detection. These results show how machine vision and deep learning boost quality and efficiency.

| Statistical Method | Purpose |
|---|---|
| Bland-Altman test | Measures agreement between machine vision and standard measurement systems to assess compliance differences. |
| 2-sample T-test | Compares average performance metrics between machine vision systems and traditional methods. |
| 2-sample equivalence test | Confirms statistical equivalence of machine vision system measurements to standard methods. |
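
Two of these checks can be sketched with Python's standard `statistics` module. The measurements below are invented part widths in millimetres. The t statistic uses Welch's form, which does not assume equal variances, and the equivalence test is left out to keep the example short.

```python
import math
import statistics

def bland_altman(vision, reference):
    """Mean difference (bias) and 95% limits of agreement."""
    diffs = [v - r for v, r in zip(vision, reference)]
    bias = statistics.mean(diffs)
    spread = 1.96 * statistics.stdev(diffs)
    return bias, bias - spread, bias + spread

def welch_t(sample_a, sample_b):
    """Two-sample t statistic without assuming equal variances."""
    var_a = statistics.variance(sample_a) / len(sample_a)
    var_b = statistics.variance(sample_b) / len(sample_b)
    mean_gap = statistics.mean(sample_a) - statistics.mean(sample_b)
    return mean_gap / math.sqrt(var_a + var_b)

# Hypothetical widths of the same six parts measured two ways.
vision = [10.02, 9.98, 10.05, 10.01, 9.97, 10.03]
caliper = [10.00, 9.99, 10.03, 10.02, 9.98, 10.01]

bias, lower, upper = bland_altman(vision, caliper)
print(bias, lower, upper)
print(welch_t(vision, caliper))
```

A bias close to zero with narrow limits of agreement suggests the vision system measures about the same as the reference method. Turning the t statistic into a p-value needs a t-distribution, which a statistics package can supply.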

Machine learning and AI allow these systems to learn from data and adapt to new tasks. Deep learning models can recognize patterns, detect defects, and classify objects with high accuracy. Computer vision supports smart factories by enabling real-time monitoring and decision-making. Artificial intelligence helps companies automate complex processes, reduce errors, and improve product quality.

Overcoming Real-World Obstacles

Machine vision systems face many challenges in real-world environments. Lighting changes, object orientation, and complex backgrounds can make image analysis difficult. Agricultural settings are especially tough because of unpredictable light, irregular terrain, and objects with unusual shapes. These factors make it hard for computer vision and machine learning systems to work reliably.

- Proper lighting is critical for machine vision performance. The right illuminator improves image quality and feature extraction.

- Different lights, such as LEDs or fluorescent bulbs, affect how cameras capture images. Diffuse lighting works well for 3D objects, while ring lights suit flat surfaces.

- Uniform lighting setups, like diffuse chambers, have achieved near 100% success in inspecting grains and oilseeds.

- Ambient light and shadows can introduce noise, making it harder for deep learning models to find features.

Smart cameras and advanced solutions help overcome these obstacles. Adaptive Neuro-Fuzzy Inference Systems (ANFIS) combine machine learning and fuzzy logic to handle uncertainty and complex patterns. Smart cameras use built-in AI and deep learning to adjust to changing conditions. These tools make machine vision applications more reliable in manufacturing and industrial automation.
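
Software can also reduce the effect of lighting changes before an image reaches the model. The sketch below shows one simple preprocessing step, contrast stretching, on made-up 2x2 images. It is an illustration of the idea only, not a description of what any particular smart camera does, and it does not replace a good lighting setup.

```python
def stretch_contrast(image):
    """Rescale pixels so the darkest becomes 0 and the brightest becomes 255."""
    pixels = [p for row in image for p in row]
    low, high = min(pixels), max(pixels)
    if high == low:                       # flat image: nothing to stretch
        return [[0 for _ in row] for row in image]
    scale = 255 / (high - low)
    return [[round((p - low) * scale) for p in row] for row in image]

# The same hypothetical scene captured under dim and bright light.
dim = [[20, 30], [40, 60]]
bright = [[120, 130], [140, 160]]

print(stretch_contrast(dim))
print(stretch_contrast(bright))
```

Both captures map to the same normalized image, so a model downstream sees consistent input even though overall brightness shifted.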

Tip: Choosing the right camera and lighting setup can greatly improve machine vision results. Testing different setups helps find the best solution for each environment.

Getting Started with Machine Learning

Tools and Resources

Many beginners start their journey in machine learning for machine vision by exploring accessible platforms and tools. TensorFlow and Keras offer open-source libraries that support deep learning projects. These platforms provide strong community support and many tutorials. MATLAB is another option, known for its user-friendly interface, though it is not free. SimpleCV and OpenVINO give users free and efficient ways to build machine vision applications. CUDA helps speed up processing by using GPUs, which is useful for large-scale machine learning tasks.

Pretrained models, such as those based on Mask R-CNN, help beginners save time and resources. Large hosted models such as GPT-4 can also analyze images, but they are accessed through an API rather than downloaded and fine-tuned locally. Fine-tuning a pretrained model can reportedly reduce development time by about 60%, and model distillation can shrink model size by around 70%, making machine learning more efficient. Open-source datasets like ImageNet, with more than 14 million images, allow users to train and test their machine vision systems. These resources make it easier for anyone to start building projects and experimenting with different machine learning techniques.

Tip: Beginners should use open-source datasets and pretrained models to practice and learn faster.

Beginner Steps

A simple checklist can help beginners get started with machine vision and machine learning:

- Define the problem, such as object detection or defect recognition.

- Collect or download a dataset, using sources like ImageNet.

- Choose a platform, such as TensorFlow or Keras.

- Select a pretrained model or build a simple neural network.

- Train the model using labeled data.

- Evaluate performance with metrics like accuracy, precision, and recall.

- Experiment with data augmentation and transfer learning to improve results.

- Track experiments using tools like Neptune for better monitoring.

Case studies show that even beginners can achieve strong results. For example, AlexNet reduced error rates in image classification, and transfer learning has helped in fields like medical imaging. Beginners can use ensemble techniques and synthetic data to boost model performance. Success metrics such as weighted F1-score and balanced accuracy help track progress. Machine learning platforms support rapid iteration, making it easier to learn from each experiment.
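
Weighted F1-score and balanced accuracy matter most when classes are uneven, for example when defects are rare. The sketch below computes both from scratch on eight made-up predictions. The helper names are assumptions for this example, and libraries such as scikit-learn offer ready-made versions.

```python
def per_class_stats(labels, predictions):
    """Return {class: (precision, recall, support)} for every class in labels."""
    stats = {}
    for cls in sorted(set(labels)):
        tp = sum(1 for y, p in zip(labels, predictions) if y == cls and p == cls)
        predicted = sum(1 for p in predictions if p == cls)
        support = sum(1 for y in labels if y == cls)
        precision = tp / predicted if predicted else 0.0
        stats[cls] = (precision, tp / support, support)
    return stats

def weighted_f1(labels, predictions):
    """Per-class F1 averaged with weights equal to each class's sample count."""
    total = 0.0
    for precision, recall, support in per_class_stats(labels, predictions).values():
        if precision + recall:
            total += support * 2 * precision * recall / (precision + recall)
    return total / len(labels)

def balanced_accuracy(labels, predictions):
    """Average recall across classes, so rare classes count as much as common ones."""
    recalls = [r for _, r, _ in per_class_stats(labels, predictions).values()]
    return sum(recalls) / len(recalls)

labels      = ["ok", "ok", "ok", "ok", "ok", "ok", "defect", "defect"]
predictions = ["ok", "ok", "ok", "ok", "ok", "defect", "defect", "ok"]

print(weighted_f1(labels, predictions))
print(balanced_accuracy(labels, predictions))
```

Plain accuracy here is 75%, but balanced accuracy is lower because the model catches only half of the rare defect class.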

Continuous learning and hands-on practice help beginners gain confidence and skill in machine vision and machine learning.

ANN machine vision systems help many industries improve quality and speed. These tools work in factories, hospitals, and farms. Anyone can start learning with free resources and simple projects.

Joining online communities or trying hands-on experiments helps build skills faster.

Exploring new ideas and practicing often leads to better understanding. The world of machine vision keeps growing. Every learner can find a place in this exciting field.

FAQ

What is the main benefit of using ANN in machine vision?

Artificial neural networks help computers recognize patterns in images. They improve accuracy and speed in tasks like defect detection. Many industries use ANN to make quality checks faster and more reliable.

Can beginners use machine vision tools without coding experience?

Many platforms offer user-friendly interfaces. Tools like MATLAB and drag-and-drop features in some software help beginners build simple projects. Tutorials and guides support learning without deep coding skills.

How do machine vision systems handle different lighting conditions?

Proper lighting setup improves image quality. Smart cameras and adaptive systems adjust to changes in light. Using the right lights, such as LEDs or diffuse chambers, helps the system see objects clearly.

Are machine vision systems expensive to start with?

Some tools and cameras cost a lot, but many free resources exist. Open-source software and public datasets let beginners experiment without spending much money. Many start with basic equipment and upgrade later.
