A false negative (FN) in a machine vision system occurs when a model fails to detect an object or defect that actually exists. This type of error directly reduces the accuracy and reliability of the system. In industries like automotive or aerospace, even a 2% false negative rate can result in missed defects, leading to recalls or safety risks.

- Missed defects lower accuracy, hurt brand trust, and can cause legal issues.

- A machine vision system with a high FN rate may not improve, as the model cannot learn from errors that go undetected.

Careful tuning of the model and improving data quality help boost accuracy and reduce false negatives.

Key Takeaways

- False negatives happen when a machine vision system misses real defects or objects, lowering accuracy and risking safety.

- Balancing false negatives and false positives is crucial; reducing one can increase the other, so models must fit each application’s needs.

- Precision, recall, and the F1 score are key metrics that help measure and improve how well a system detects true positives and avoids missed cases.

- Real-world examples in industry, medical imaging, and security show that reducing false negatives improves safety, quality, and trust.

- Teams can lower false negatives by improving data quality, tuning models, enhancing imaging, and continuously monitoring system performance.

FN in Machine Vision Systems

What Is FN?

A false negative (FN) is a specific type of mistake that a machine vision system can make: the system fails to detect a real defect or hazard during inspection. This error is also known as a Type II error in statistics. The system inspects an item that actually has a problem but labels it as defect-free. This mistake allows faulty products to reach customers or unsafe conditions to go unnoticed.

A false negative means the model misses an actual positive case. In machine vision, this can lead to serious consequences in manufacturing, healthcare, or security.

Several factors can cause false negatives in a machine vision system:

- Selection bias: The model trains on data that does not match real-world situations.

- Measurement bias: Differences in how data is collected for training and production.

- Poor model evaluation: The model is not tested thoroughly, so weak performance on new or unseen data goes unnoticed.

- Neglecting error analysis: Teams do not review mistakes, so the same errors keep happening.

- Lack of monitoring: The model is not checked after deployment, so errors increase over time.

FN vs. Other Errors

A machine vision system does not make only false negatives; it can also make false positives. A false negative happens when the system misses something that is actually there. A false positive occurs when the system detects something that is not present.

The trade-off between these errors is important. In some cases, like medical diagnosis or security, missing a real problem (false negative) is more dangerous than raising a false alarm (false positive). For example, in cancer screening, doctors want to catch every possible case, even if it means more false positives. In other cases, such as spam filtering, false positives are more costly because they block real emails.

- False negatives can be more dangerous because they miss real threats or defects.

- False positives lead to unnecessary actions, like extra tests or false alerts.

- Adjusting the model’s detection threshold can reduce one type of error but may increase the other.

- Cost-sensitive learning helps balance these errors by assigning different penalties based on the application.
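The idea of assigning different penalties to each error type can be sketched with a small cost comparison. This is a minimal illustration, not a training procedure: it compares two operating points of one model by total error cost rather than building the penalties into the loss function. The error counts, the cost values, and the names `expected_cost`, `strict`, and `lenient` are all hypothetical.

```python
def expected_cost(fn_count, fp_count, fn_cost, fp_cost):
    """Total cost of errors under application-specific penalties."""
    return fn_count * fn_cost + fp_count * fp_cost


# Hypothetical error counts for two operating points of the same model.
strict = {"fn": 2, "fp": 40}    # low threshold: few misses, many false alarms
lenient = {"fn": 10, "fp": 5}   # high threshold: more misses, few false alarms

# Hypothetical penalties: a missed defect is assumed to cost 50x a false alarm.
FN_COST, FP_COST = 50.0, 1.0

strict_cost = expected_cost(strict["fn"], strict["fp"], FN_COST, FP_COST)
lenient_cost = expected_cost(lenient["fn"], lenient["fp"], FN_COST, FP_COST)

print(f"strict:  {strict_cost}")
print(f"lenient: {lenient_cost}")
```

Under these assumed penalties the strict setting is cheaper even though it raises far more false alarms. With penalties reversed, as in the spam filtering case, the lenient setting would win.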

A machine vision system must find the right balance between these errors to ensure safety and reliability. Designers of computer vision models and machine vision systems often adjust their models to fit the needs of each application.

Confusion Matrix

FN’s Role

The confusion matrix is a key tool for evaluating a machine vision system. In binary classification, it shows how well the model predicts two classes, such as "defective" and "non-defective." The confusion matrix is a 2×2 table that compares the actual condition to the predicted result. Here is a typical layout:

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | True Positive (TP) | False Negative (FN) |
| Actual Negative | False Positive (FP) | True Negative (TN) |

False negatives (FN) appear in the cell where the actual class is positive, but the prediction is negative. In machine vision, this means the system missed a real defect or object. The confusion matrix helps count these missed cases. By looking at the confusion matrix, engineers can see how many times the model failed to detect a positive instance. This information is important for binary classification and multi-class classification tasks.

The confusion matrix also helps measure recall. Recall is the ratio of true positives to the sum of true positives and false negatives. A high number of false negatives lowers recall. This means the model misses more real cases. In critical applications, such as industrial inspection or medical imaging, reducing false negatives is very important. The confusion matrix allows teams to focus on improving detection and reducing missed cases.
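As a rough sketch of how the four cells are counted, the snippet below tallies TP, FN, FP, and TN from paired labels and then derives recall. The label encoding (1 = defective, 0 = non-defective), the sample data, and the function name `confusion_counts` are assumptions made for illustration.

```python
def confusion_counts(actual, predicted):
    """Return (tp, fn, fp, tn) for binary labels where 1 = defective."""
    tp = fn = fp = tn = 0
    for a, p in zip(actual, predicted):
        if a == 1 and p == 1:
            tp += 1          # defect present and detected
        elif a == 1 and p == 0:
            fn += 1          # defect present but missed
        elif a == 0 and p == 1:
            fp += 1          # no defect, but flagged anyway
        else:
            tn += 1          # no defect and none reported
    return tp, fn, fp, tn


# Hypothetical inspection results for eight parts.
actual = [1, 1, 1, 0, 0, 0, 0, 1]
predicted = [1, 0, 1, 0, 1, 0, 0, 0]

tp, fn, fp, tn = confusion_counts(actual, predicted)
recall = tp / (tp + fn)
print(tp, fn, fp, tn, recall)
```

Here two of the four defective parts are missed, so recall is 0.5 even though most individual predictions are correct.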

Key Terms

- False Negative (FN): The model predicts negative, but the actual case is positive. These are missed detections.

- True Positive (TP): The model correctly predicts a positive case.

- True Negative (TN): The model correctly predicts a negative case.

- False Positive (FP): The model predicts positive, but the actual case is negative.

- Recall (Sensitivity): The ratio of true positives to all actual positives. It shows how well the model finds positive cases.

- Precision: The ratio of true positives to all predicted positives. It shows how accurate the positive predictions are.

- F1 Score: The harmonic mean of precision and recall. It summarizes model performance in a single number.

- Confusion Matrix Components: TP, TN, FP, and FN are the main parts of the confusion matrix.

- Type II Error: Another name for a false negative in classification tasks.

The confusion matrix gives a clear picture of model performance in classification. It helps teams find and fix errors in a machine vision system.

Performance Metrics

Precision and Recall

Precision and recall are two of the most important evaluation metrics for any machine vision system. These performance metrics help teams understand how well a model detects defects or objects. Precision measures the accuracy of positive predictions. It shows how many of the items labeled as positive by the model are actually positive. The formula for precision is:

Precision = True Positives / (True Positives + False Positives)

A high precision means the model makes few mistakes when it predicts a positive case. False negatives do not directly affect precision. Instead, false positives have a direct impact on this metric. However, there is a trade-off between precision and recall. When a model tries to reduce false negatives to improve recall, it may increase false positives, which can lower precision.

Recall, also called sensitivity, measures the ability of the model to find all actual positive cases. The formula for recall is:

Recall = True Positives / (True Positives + False Negatives)

Recall tells how many real defects or objects the model finds out of all possible positives. False negatives play a big role here. If the model misses many positive cases, the recall drops. In industrial inspection or medical imaging, high recall is critical because missing a defect or disease can have serious consequences.

Precision and recall together give a complete picture of model evaluation. Precision focuses on the correctness of positive predictions, while recall focuses on finding all positives.

The confusion matrix provides the counts needed for both precision and recall. Teams use these performance metrics to guide model evaluation and performance improvement. In real-world machine vision system applications, balancing precision and recall ensures both high accuracy and reliability.

F1 Score

The F1 score combines precision and recall into a single performance metric. This score is the harmonic mean of precision and recall. The formula for the F1 score is:

F1 Score = 2 × (Precision × Recall) / (Precision + Recall)

The F1 score gives a balanced view of model performance, especially when the data has many more negatives than positives. A low F1 score means the model either misses too many positives (low recall) or makes too many false positive predictions (low precision). False negatives lower recall, which in turn lowers the F1 score. This makes the F1 score a sensitive measure for model evaluation in tasks where missing a positive case is costly.

The confusion matrix plays a key role in calculating the F1 score. It provides the true positives, false positives, and false negatives needed for the formula. Teams use the F1 score as a main evaluation metric in performance evaluation, especially when the cost of false negatives is high.

| Metric | Formula | What It Measures |
|---|---|---|
| Precision | TP / (TP + FP) | Accuracy of positive predictions |
| Recall | TP / (TP + FN) | Ability to find all actual positives |
| F1 Score | 2 × (Precision × Recall) / (Precision + Recall) | Balance between precision and recall |

The F1 score helps teams compare different models and choose the best one for their needs. It is especially useful in industrial and AI applications where both precision and recall matter.
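The three formulas can be computed directly from confusion matrix counts. The sketch below is one way to do it; the function name `precision_recall_f1`, the example counts, and the choice to return 0.0 when a denominator is zero are assumptions rather than a fixed convention.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from confusion matrix counts."""
    # Returning 0.0 for an empty denominator is an assumed convention.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: 90 defects found, 10 false alarms, 30 defects missed.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

In this example precision is 0.90 but the 30 missed defects pull recall down to 0.75, and the F1 score of about 0.82 sits between the two, closer to the lower value.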

Why These Metrics Matter in Machine Vision

Precision, recall, and F1 score are critical evaluation metrics for model evaluation in machine vision systems. These performance metrics help teams measure model performance beyond simple accuracy. In many industrial and AI applications, the data is imbalanced. There are far fewer defects than non-defects. In these cases, accuracy alone can be misleading. A model that always predicts "no defect" can have high accuracy but poor recall and F1 score.
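The imbalance problem is easy to reproduce with a few lines of arithmetic. The sketch below assumes a hypothetical batch of 1,000 parts with 10 defects and a model that always answers "no defect". F1 is computed with the equivalent count-based form 2TP / (2TP + FP + FN), which avoids the undefined precision that arises when no positives are predicted.

```python
# Hypothetical batch: 10 defective parts (1) and 990 good parts (0).
actual = [1] * 10 + [0] * 990
predicted = [0] * 1000  # a model that always predicts "no defect"

pairs = list(zip(actual, predicted))
tp = sum(1 for a, p in pairs if a == 1 and p == 1)
fn = sum(1 for a, p in pairs if a == 1 and p == 0)
fp = sum(1 for a, p in pairs if a == 0 and p == 1)
tn = sum(1 for a, p in pairs if a == 0 and p == 0)

accuracy = (tp + tn) / len(pairs)
recall = tp / (tp + fn)
f1 = 2 * tp / (2 * tp + fp + fn)  # count-based form of the F1 score

print(f"accuracy={accuracy:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Accuracy comes out at 0.99 while recall and F1 are both 0, because every one of the 10 defects is a false negative.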

Performance evaluation using these metrics ensures that the model does not miss important cases. High precision reduces the risk of rejecting good parts because of false alarms. High recall reduces the risk of accepting defective parts, because the model finds most of the defects that exist. The F1 score balances these needs, making it a key metric for model evaluation and performance improvement.

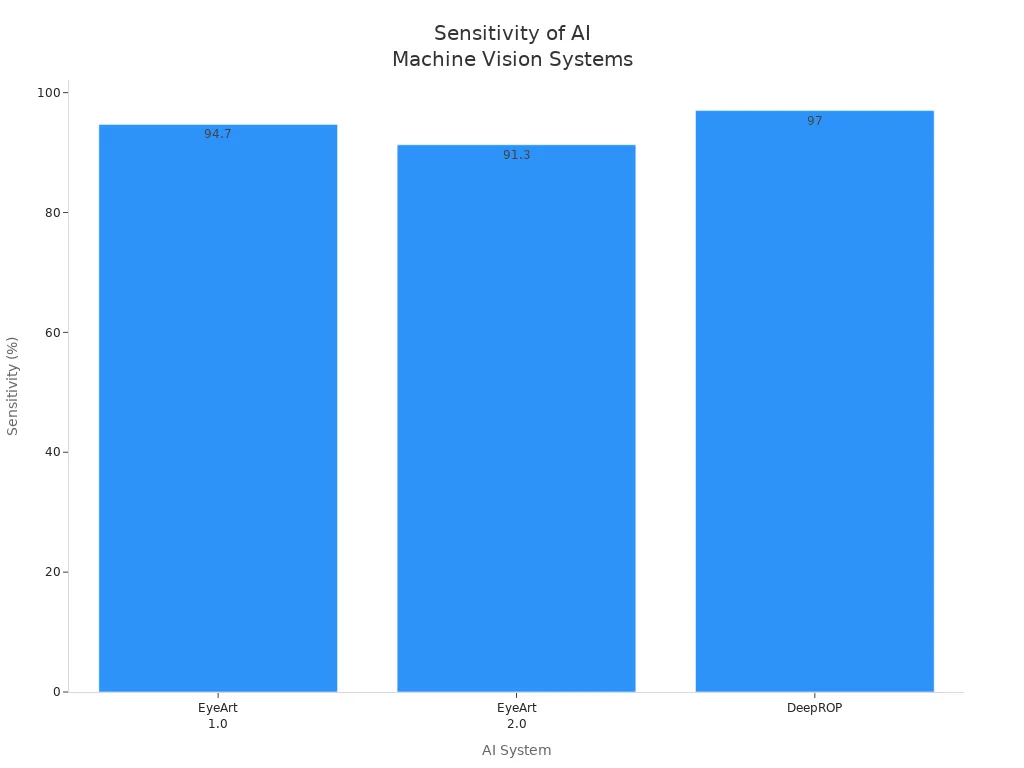

Advances in artificial intelligence and deep learning have improved the sensitivity of machine vision systems. These improvements have reduced false negatives and increased recall. The table below shows how different AI systems perform in medical imaging tasks:

| AI System | Application Area | Sensitivity (True Positive Rate) | Specificity | False Negative Impact | Notes on False Negatives and Challenges |
|---|---|---|---|---|---|
| EyeArt 1.0 | Retinopathy detection | 94.7% (any retinopathy) | 20% | Missed 6-15% of patients needing referral | Higher false positives but reduced false negatives compared to traditional methods |
| EyeArt 2.0 | Retinopathy detection | 91.3% | 91.1% | Lower false negatives and false positives than EyeArt 1.0 | Improved sensitivity and specificity, fewer ungradable images |
| DeepROP | Retinopathy of Prematurity (ROP) | 97% (test dataset) | 99% | High sensitivity reduces false negatives | Incorporates multiple image analysis, good performance on validation data |

AI-powered machine vision systems now use deep learning to detect subtle defects that humans might miss. These systems provide consistent and scalable inspection performance. They classify defects with high accuracy and minimize false negatives. Real-time analysis and advanced image processing allow AI to identify complex anomalies, improving overall defect detection sensitivity.

Teams rely on precision, recall, and F1 score as the main evaluation metrics for model evaluation and performance evaluation. These metrics help ensure that machine vision systems deliver reliable and accurate results in high-stakes environments.

FN Examples

Industrial Inspection

In industrial settings, machine vision systems play a key role in object detection and quality control. When a system fails to detect a defect on a production line, this is a false negative. For example, a camera may miss a small crack in a car part during inspection. This missed detection allows faulty products to move forward in the process. Over time, undetected defects can reach customers, leading to product recalls or safety hazards.

- Missed object detection in manufacturing can lower accuracy and damage brand reputation.

- Real-time object recognition helps catch defects quickly, but false negatives still occur when lighting or camera angles change.

- Engineers often review detection results to improve system accuracy and reduce future errors.

Medical Imaging

Medical imaging relies on object recognition and object detection to find signs of disease. False negatives in these systems can have serious consequences. For instance, if an AI tool fails to detect cancer in a mammogram, the patient may not receive timely treatment. Studies show that when radiologists receive false negative feedback from AI, their own detection accuracy drops. Only 21% of radiologists identified cancer when AI feedback was a false negative, compared to 46% without AI input. Missed detections can delay diagnosis or treatment, which may harm patients. The severity of the impact depends on the condition. Missing a serious disease can lead to severe outcomes, while missing a mild issue may have little effect.

False negatives in medical imaging not only reduce diagnostic accuracy but also influence doctors’ decisions, sometimes leading to worse patient outcomes.

Security Systems

Security systems use object detection to identify threats, such as intruders or unattended objects. A false negative occurs when the system fails to detect a person entering a restricted area. This missed detection can compromise safety and security. In critical applications like autonomous driving, false negatives mean the system does not recognize hazards on the road. These failures can lead to accidents because the system cannot respond to unseen dangers.

- False negatives in security reduce system reliability and increase safety risks.

- Real-time detection and regular system updates help lower the chance of missed threats.

In all these areas, reducing false negatives improves the reliability and safety of machine vision systems.

Reducing FN

Measurement Methods

Teams use several measurement methods to detect and quantify false negatives in a machine vision system. These methods help improve model evaluation and guide performance improvements. The table below shows some of the most effective metrics and validation processes:

| Metric / Method | Description | Role in Detecting False Negatives and Accuracy |
|---|---|---|
| Mean Average Precision (mAP) | Measures detection confidence and accuracy using Intersection over Union (IoU) thresholds. | Quantifies detection accuracy and helps identify false negatives and positives. |
| Average Precision (AP) | Combines precision and recall metrics. | Differentiates false negatives from other detection errors. |
| Mean Intersection over Union (mean-IoU) | Measures overall detection accuracy including missed objects (false negatives). | Includes zero IoU for missed objects, capturing false negatives impact. |
| Object IoU | IoU calculated for individual cropped objects, assessing size and location matching. | Provides object-specific accuracy, critical for compliance and false negative detection. |
| Delta Object IoU | Measures difference in IoU between reference and compressed frames. | Directly quantifies performance degradation, useful for tracking false negatives. |
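Several of the metrics above rest on IoU, so a small sketch may help show how a missed object turns into a counted false negative. The box format `(x_min, y_min, x_max, y_max)`, the 0.5 threshold, the sample boxes, and the function names are assumptions. The greedy one-to-one matching used here is a simplification; full mAP evaluation typically also ranks detections by confidence.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes give no intersection area.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_false_negatives(ground_truth, detections, iou_threshold=0.5):
    """Count ground-truth boxes left without a sufficiently overlapping detection."""
    unused = list(detections)
    missed = 0
    for gt in ground_truth:
        best = max(unused, key=lambda d: iou(gt, d), default=None)
        if best is not None and iou(gt, best) >= iou_threshold:
            unused.remove(best)  # each detection may match one object only
        else:
            missed += 1          # no adequate match: a false negative
    return missed


# Hypothetical frame: three labeled defects, two detections.
ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30), (50, 50, 60, 60)]
detections = [(1, 1, 10, 10), (40, 40, 45, 45)]

missed = count_false_negatives(ground_truth, detections)
print(f"false negatives: {missed}")
```

Only the first labeled defect has a detection that overlaps it well enough, so the other two count as false negatives.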

Validation phases such as Installation Qualification, Operational Qualification, and Performance Qualification ensure the system works correctly in real-world conditions. Measurement Systems Analysis checks accuracy and repeatability. Real-time evaluation and continuous monitoring help teams track recall, precision, and model evaluation over time.
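Continuous monitoring of recall can be approximated with a sliding window over recently confirmed positives. The sketch below assumes that ground truth for those samples comes from some later check, such as manual reinspection or an audit. The class name `RecallMonitor`, the window size, and the alert threshold are hypothetical choices.

```python
from collections import deque


class RecallMonitor:
    """Track recall over the most recent confirmed-positive samples."""

    def __init__(self, window=100, min_recall=0.95):
        # Each entry is True if the defect was detected, False if missed.
        self.outcomes = deque(maxlen=window)
        self.min_recall = min_recall

    def record(self, detected):
        """Record whether a confirmed defect was caught by the system."""
        self.outcomes.append(bool(detected))

    def recall(self):
        """Recall over the current window, or None if no data yet."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self):
        """True when windowed recall has fallen below the minimum."""
        current = self.recall()
        return current is not None and current < self.min_recall


monitor = RecallMonitor(window=5, min_recall=0.8)
for detected in [True, True, False, True, False, True]:
    monitor.record(detected)

print(monitor.recall(), monitor.needs_attention())
```

Because the window holds only the last five outcomes, the oldest result drops out and the monitor reports a recall of 0.6, which is below the assumed 0.8 minimum.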

Optimization Strategies

Teams use many strategies to reduce false negatives and improve model performance. Regular calibration and maintenance keep the system accurate. Calibration tunes the system for different environments and products, which lowers the chance of missing defects. Improved imaging, such as high-resolution cameras, better lighting, and advanced lenses, increases image quality and helps the model detect more features. Enhanced image quality leads to better recall and precision.

Real-time processing with higher frame rates and better sensors gives clearer images and faster detection. Embedded vision systems and software optimization allow real-time evaluation and quick response. Teams often retrain the model with new data and use synthetic data to handle rare cases. Deep learning models benefit from hyperparameter tuning and feature extraction, which boost recall and precision.

Environmental factors like lighting, shadows, and glare can cause false negatives. Teams address these by using adaptive lighting, color filtering, and lens improvements. Advanced technologies such as transformer-based frameworks, self-supervised learning, and IoT integration further improve real-time detection and model evaluation.

Teams must balance reducing false negatives with avoiding too many false positives. Lowering the detection threshold increases recall but may reduce precision. Raising the threshold improves precision but can increase false negatives. The best approach depends on the application. For example, in medical imaging, high recall is critical, while in spam filtering, high precision matters more. Teams use precision-recall curves and dynamic thresholding to find the right balance for each model.
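The threshold trade-off can be seen by evaluating the same scored predictions at two cut-offs. The scores, labels, and threshold values below are made up for illustration, and `precision_recall_at` is a hypothetical helper. Reporting precision as 1.0 when nothing is predicted positive is an assumed convention.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when scores >= threshold are predicted positive."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y == 1)
    fp = sum(1 for s, y in pairs if s >= threshold and y == 0)
    fn = sum(1 for s, y in pairs if s < threshold and y == 1)
    # Assumed convention: precision is 1.0 when no positives are predicted.
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical model confidence scores and true labels (1 = defect).
scores = [0.95, 0.80, 0.70, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

low = precision_recall_at(scores, labels, threshold=0.25)
high = precision_recall_at(scores, labels, threshold=0.75)

print(f"threshold 0.25: precision={low[0]:.2f} recall={low[1]:.2f}")
print(f"threshold 0.75: precision={high[0]:.2f} recall={high[1]:.2f}")
```

At the low threshold every defect is caught (recall 1.0) at the price of two false alarms, while the high threshold removes the false alarms but misses half of the defects. Sweeping the threshold over many values traces out the precision-recall curve.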

Ongoing best practices include regular audits, continuous model evaluation, and collecting diverse training data. Combining traditional algorithms with deep learning models also improves overall detection performance and reduces false negatives in real-time applications.

Effective management of false negatives in a machine vision system leads to better performance and higher product quality. High recall ensures most defects are detected, which reduces the risk of faulty products reaching customers. Regulatory standards require strict control of false negatives, especially in safety-critical industries. Teams that understand key metrics and apply best practices can maintain strong performance and meet legal requirements.

Ongoing evaluation and improvement help machine vision systems deliver reliable and accurate results in real-world applications.

FAQ

What is a false negative in a machine vision system?

A false negative happens when the system misses a real object or defect. The system says nothing is wrong, but a problem exists. This mistake can lead to missed defects or safety issues.

Why do false negatives matter in quality control?

False negatives allow faulty products to pass inspection. Customers may receive defective items. Companies risk recalls, extra costs, and damage to their reputation.

How can teams reduce false negatives in machine vision?

Teams can improve lighting, use better cameras, and retrain models with more data. Regular calibration and software updates also help lower the chance of missed detections.

What is the difference between a false negative and a false positive?

A false negative means the system misses a real problem. A false positive means the system finds a problem that does not exist. Both errors affect accuracy, but false negatives often have higher risks.
