Feed-forward neural networks show several features that make them valuable in computer vision and artificial intelligence. Key characteristics include:

- Unidirectional data flow: Each neural layer passes information forward without loops.

- Layered structure: Input, hidden, and output layers process visual data step by step.

- Strong pattern recognition: These neural networks excel at image classification, object detection, and calibration.

- Computational efficiency: Without feedback loops, feed-forward models often require less processing time than feedback (recurrent) networks in artificial intelligence applications.

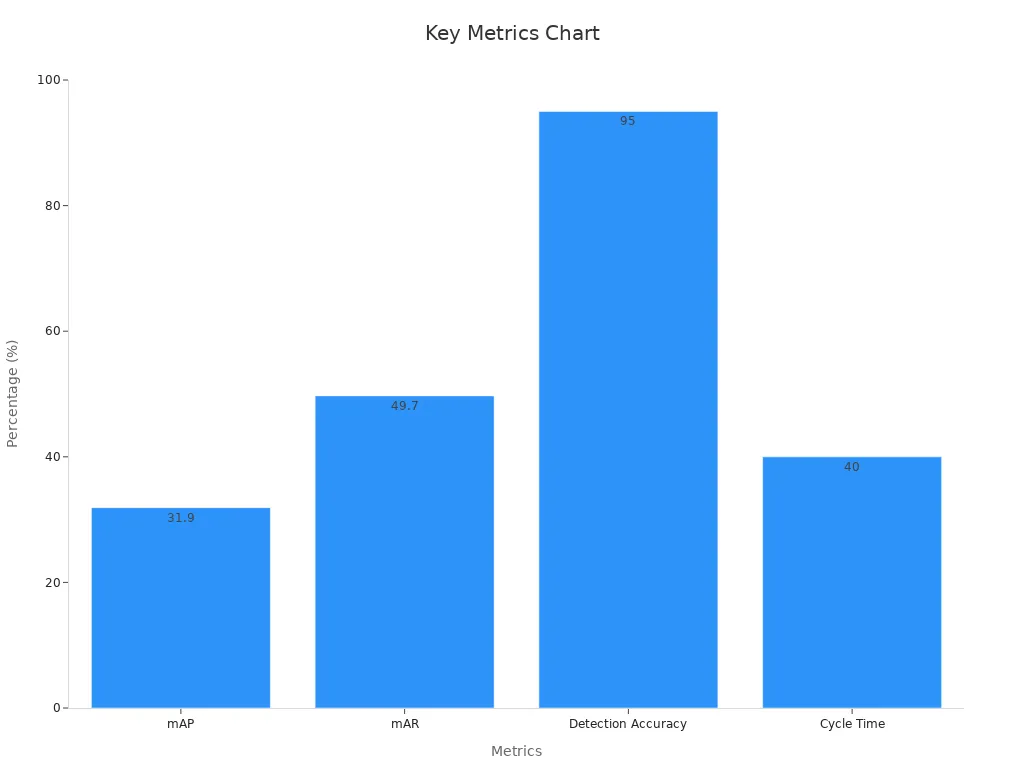

Feed-forward neural networks drive improvements in industrial productivity, defect detection, and inspection speed. The table below highlights evaluation metrics and performance results reported from real-world machine vision deployments.

| Metric / Application Area | Description / Result |
|---|---|
| Accuracy, Precision, Recall | Core metrics to evaluate pattern and object recognition performance in feed-forward neural networks. |
| Sensitivity, Specificity, AUC | Used to assess model detection capabilities and robustness. |
| Mean Average Precision (mAP) | RetinaNet ANN achieved an mAP of 0.319 on the MSCOCO dataset, indicating strong object recognition ability. |
| Mean Average Recall (mAR) | RetinaNet ANN achieved an mAR of 0.497, showing effective recall across object sizes. |
| Defect Detection Rates | A camera + ML system detected porosity defects at 43% (threshold 0.5%) vs. 20% for a traditional system. |
| Industrial Productivity Increase | Machine vision applications led to a 21% increase in productivity and a 25% reduction in scrap rates. |
| Defect Detection Accuracy | Semiconductor production achieved 95% accuracy in defect detection using machine vision. |
| Inspection Cycle Time Reduction | The electronics industry saw a 40% reduction in inspection cycle times with machine vision systems. |

Feed-forward neural networks form the foundation of many artificial intelligence solutions in computer vision. These neural models support accurate, fast, and reliable visual analysis.

Key Takeaways

- Feed-forward neural networks process data in one direction through input, hidden, and output layers, making them simple and fast for many vision tasks.

- These networks use activation functions like ReLU and sigmoid to learn complex patterns, enabling strong image classification and object detection.

- Feed-forward models excel in tasks that need quick training and a straightforward design, but they lack the spatial awareness needed for complex image analysis.

- They improve industrial productivity by detecting defects accurately and reducing inspection times in real-world machine vision applications.

- For simple image tasks, fully connected feed-forward networks offer a strong solution, while more specialized models such as CNNs work better for complex spatial problems.

What Is a Feed-Forward Neural Network?

Structure and Layers

A feed-forward neural network is a type of artificial neural network that processes information in one direction. This neural network architecture uses a series of layers to transform input data into useful outputs. Each feed-forward neural network contains three main types of layers: input, hidden, and output. The input layer receives raw data, such as pixel values from an image. Hidden layers sit between the input and output layers. These hidden layers use neurons to perform calculations and learn patterns in the data. The output layer produces the final result, like a classification label or a prediction.

Researchers describe the structure of a feed-forward neural network as fully connected: every neuron in one layer connects to every neuron in the next layer. This design allows the network to learn complex relationships in the data. Activation functions, such as ReLU or sigmoid, add non-linearity to the network, which lets it model patterns that do not follow straight lines. The table below shows the main architectural components:

| Architectural Component | Description |
|---|---|
| Input Layer | The first layer where input data is fed; each neuron represents a feature or attribute of the input data. |
| Hidden Layers | One or more intermediary layers where neurons perform computations and transformations; crucial for learning complex patterns. |
| Output Layer | The final layer producing predictions or outputs; the number of neurons depends on the problem type (classification, regression, etc.). |
| Connections (Weights) | Links between neurons of adjacent layers with associated weights learned during training; determine connection strength. |
| Activation Functions | Functions applied after weighted sums to introduce non-linearity; common types include ReLU, sigmoid, and tanh. |

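A feed-forward neural network can have one or more hidden layers. According to the universal approximation theorem, a network with even a single hidden layer and a non-linear activation function can approximate any continuous function on a bounded input domain to arbitrary accuracy, given enough hidden units. This property makes these networks powerful tools in machine vision and other artificial intelligence tasks.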
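To make the layer structure concrete, here is a minimal forward pass in plain Python. It assumes a hypothetical network with 4 inputs, 3 ReLU hidden neurons, and 2 softmax outputs. The weights are arbitrary illustrative values, not trained ones. Each row of a weight matrix holds one neuron's connections to every neuron in the previous layer, which is what "fully connected" means in practice.

```python
import math


def dense(x, weights, biases):
    """Fully connected layer: each output neuron takes a weighted sum of every input."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(v):
    return [max(0.0, z) for z in v]


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in v]
    total = sum(exps)
    return [e / total for e in exps]


def forward(x, params):
    """Input -> hidden (ReLU) -> output (softmax); data only moves forward."""
    (w1, b1), (w2, b2) = params
    hidden = relu(dense(x, w1, b1))
    return softmax(dense(hidden, w2, b2))


# Hypothetical toy network: 4 input features, 3 hidden neurons, 2 output classes.
params = [
    ([[0.2, -0.1, 0.4, 0.0],
      [0.5, 0.3, -0.2, 0.1],
      [-0.3, 0.8, 0.1, 0.2]], [0.0, 0.1, -0.1]),
    ([[1.0, -0.5, 0.3],
      [-0.7, 0.6, 0.9]], [0.05, -0.05]),
]

pixels = [0.9, 0.1, 0.4, 0.7]  # e.g. four normalized pixel intensities
probs = forward(pixels, params)
print(probs)  # two class probabilities that sum to 1
```

There are no loops back to earlier layers. Each call to `dense` depends only on the output of the layer before it.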

Data Flow Direction

Feed-forward neural networks move data in a single direction. Information starts at the input layer and flows through each hidden layer to the output layer. There are no feedback loops or cycles in this neural network architecture. This unidirectional data flow keeps the structure simple and easy to understand.

The absence of feedback connections means that each layer only receives information from the previous layer. This design reduces structural complexity and memory needs. As a result, feed-forward neural networks process data quickly and efficiently. Studies show that this approach leads to shorter operation times and higher application efficiency compared to feedback neural networks.

Note: The clear, one-way flow of data in a feed-forward neural network helps each layer focus on its specific role. This structure supports fast learning and adaptability in artificial intelligence systems.

Key Features of Feed-Forward Neural Networks

Layer Connectivity

A feed-forward neural network uses a simple and direct way to connect its layers. Each neuron in one layer links to every neuron in the next layer. This design is called fully connected. The information always moves forward, from the input layer through hidden layers to the output layer. There are no loops or backward paths.

Researchers have found that changing how layers are configured and trained can improve performance. For example, using different activation functions in different layers helps the network learn better. Some scientists use special functions, like a parametric sigmoid, to control how fast the network learns. Others use hybrid methods, such as combining genetic algorithms with gradient descent, to find good connection weights. These methods can make a feed-forward neural network more stable and faster to train.

The clear, one-way connections in a feed-forward neural network make it easy to understand and use. This structure supports fast data processing and helps avoid confusion in the flow of information.

Activation Functions

Activation functions play a key role in feed-forward neural networks. They help the network learn complex patterns by adding non-linearity. Without them, any stack of layers reduces to a single linear transformation. The network could then only model straight-line (linear) relationships, which would severely limit its power.
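To see why, take two stacked layers with weight matrices W1 and W2 and bias vectors b1 and b2, with no activation function between them. These symbols are introduced here for illustration. Substituting the first layer into the second gives:

```latex
y = W_2 (W_1 x + b_1) + b_2 = (W_2 W_1)\,x + (W_2 b_1 + b_2) = W' x + b'
```

The result is a single linear layer with W' = W2 W1 and b' = W2 b1 + b2, no matter how many such layers are stacked. Placing a non-linearity such as ReLU between the layers, as in y = W2 ReLU(W1 x + b1) + b2, prevents this collapse.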

Common activation functions include ReLU, sigmoid, and tanh. Each function changes the output of a neuron in a different way. For example, ReLU keeps positive values and sets negative values to zero. Sigmoid squashes values into the range between 0 and 1, and tanh squashes them into the range between -1 and 1. These functions allow the network to solve hard problems, like recognizing objects in images.
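The three functions are short enough to write directly. This is a minimal Python sketch using only the standard library. The sigmoid is written in a numerically stable form so that large negative inputs do not overflow `math.exp`.

```python
import math


def relu(z: float) -> float:
    """Keeps positive values and sets negative values to zero."""
    return max(0.0, z)


def sigmoid(z: float) -> float:
    """Squashes any real number into (0, 1); branches avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def tanh(z: float) -> float:
    """Squashes any real number into (-1, 1), centered at zero."""
    return math.tanh(z)


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  relu={relu(z):.3f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}")
```

ReLU is cheap to compute and does not saturate for positive inputs. Sigmoid and tanh flatten out at both ends, which can slow learning in deep stacks.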

Studies show that using the right activation functions in a feed-forward neural network leads to better results. Some networks use different functions in different layers to improve learning. This approach helps the artificial neural network handle many types of data and tasks in artificial intelligence and deep learning.

Training Process

The training process in a feed-forward neural network uses two main steps: forward propagation and backpropagation. In forward propagation, the network takes input data and passes it through each layer to get an output. The network then compares this output to the correct answer and calculates the error.

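Backpropagation is the next step. The network sends the error backward through the layers to compute how much each connection weight contributed to it (the gradient). An optimizer such as gradient descent then uses these gradients to adjust the weights. This process repeats many times, helping the network learn from its mistakes. The goal is to reduce the error as much as possible.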
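The two steps can be sketched in plain Python. This minimal example assumes a tiny hypothetical network: 2 inputs, 4 tanh hidden units, and 1 sigmoid output, trained on the XOR problem with binary cross-entropy and per-example gradient descent. Forward propagation computes a prediction, and backpropagation turns the error into weight updates. The learning rate, epoch count, and random seed are illustrative choices, not recommended settings.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(hidden=4, lr=0.5, epochs=5000, seed=0):
    """Train a 2-input, tanh-hidden, sigmoid-output network on XOR; return the mean loss per epoch."""
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]  # input -> hidden
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden -> output
    b2 = 0.0
    history = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            # Forward propagation: input -> tanh hidden layer -> sigmoid output.
            h = [math.tanh(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
            p = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            total += -(y * math.log(p + 1e-12) + (1 - y) * math.log(1 - p + 1e-12))
            # Backpropagation: with sigmoid + cross-entropy, dLoss/d(output logit) = p - y.
            d_out = p - y
            # Chain rule through w2 and tanh (derivative 1 - tanh^2), using w2 before it is updated.
            d_hid = [d_out * w2[j] * (1.0 - h[j] ** 2) for j in range(hidden)]
            # Gradient descent: move each weight against its gradient.
            for j in range(hidden):
                w2[j] -= lr * d_out * h[j]
                w1[j][0] -= lr * d_hid[j] * x[0]
                w1[j][1] -= lr * d_hid[j] * x[1]
                b1[j] -= lr * d_hid[j]
            b2 -= lr * d_out
        history.append(total / len(data))
    return history


losses = train_xor()
print(f"first epoch loss: {losses[0]:.3f}, last epoch loss: {losses[-1]:.3f}")
```

`train_xor()` returns the average loss for each epoch. Comparing the first and last entries shows whether repeated forward and backward passes reduced the error.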

Empirical studies show that these training methods work well for feed-forward neural networks. For example, researchers trained over 60,000 artificial neural networks to predict complex patterns. They found that using noise during training helped the networks generalize better. Regularization methods, like Bayesian backpropagation, also reduced errors and improved learning. These findings suggest that forward propagation and backpropagation make feed-forward neural networks strong tools for machine vision and deep learning.

Feed-forward neural networks stand out for their computational simplicity. Their straightforward design allows for fast training and easy use in artificial intelligence. At the same time, their layered structure and activation functions give them the power to model non-linear data, making them valuable in many deep learning applications.

Feed-Forward Neural Network Applications in Machine Vision

Image Classification

Feed-forward neural networks play a major role in image classification for machine vision systems. These systems help computers sort and label images by learning patterns in visual data. In computer vision, feed-forward models use their layered structure to extract features from images. Each layer finds new details, such as edges, colors, or shapes. This process helps the network recognize objects and scenes.

CNNs, a type of feed-forward network, have set new standards in image classification. For example, CNNs using the PReLU activation function reached a 4.94% top-5 error rate on the ImageNet dataset, surpassing the human expert top-5 error rate of 5.1%. Modified ResNet-50 models have also shown high accuracy in medical image classification, such as detecting COVID-19 from chest CT scans. In astrophysics, a ResNet V2 variant classified galaxy images with better accuracy than older models. These results show that feed-forward networks in machine vision systems can handle many image classification tasks.

Object Detection

Object detection is another important application of feed-forward neural networks in machine vision systems. These networks help computers find and identify objects in images or videos. Their role includes extracting features and recognizing patterns that point to specific objects.

- Fast R-CNN, Faster R-CNN, R-FCN, and YOLO9000 are top-performing feed-forward models for object detection.

- Deep feed-forward networks can match human vision in recognizing objects, even when objects change in size or position.

- Hierarchical feed-forward models can rival the visual cortex in primates for core object recognition.

- These networks excel at finding known objects but may struggle with new, unseen items.

Empirical research shows that feed-forward models work well for single-image recognition. However, recurrent models perform better in dynamic tasks, such as recognizing objects in a rapid sequence. This difference highlights both the strengths and the limits of feed-forward networks in machine vision.

Calibration and Optimization

Feed-forward neural networks also improve calibration and optimization in machine vision systems. Calibration helps the system make more reliable predictions. For example, temperature scaling divides the network's output scores (logits) by a single learned temperature before the softmax, which produces more trustworthy probability scores. This rescaling does not change which class scores highest, so classification accuracy stays the same while the system's confidence becomes more reliable.
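Here is a minimal sketch of temperature scaling in plain Python. It assumes a small hypothetical set of validation logits and labels, deliberately chosen so that the model is overconfident. The temperature T is chosen by grid search over the validation negative log-likelihood (NLL). This is a simple stand-in for the gradient-based fitting often used in practice. Dividing logits by any positive T leaves the predicted class unchanged.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nll(logit_batch, labels, temperature):
    """Average negative log-likelihood of the true labels at a given temperature."""
    loss = 0.0
    for logits, y in zip(logit_batch, labels):
        loss -= math.log(softmax(logits, temperature)[y])
    return loss / len(labels)


def fit_temperature(logit_batch, labels, candidates=None):
    """Grid-search T in [0.5, 5.0] to minimize validation NLL."""
    if candidates is None:
        candidates = [0.5 + 0.05 * k for k in range(91)]
    return min(candidates, key=lambda t: nll(logit_batch, labels, t))


# Hypothetical validation set: about 96% confidence on every example, but only 3 of 5 correct.
val_logits = [[4.0, 0.0, 0.0], [0.0, 4.0, 0.0], [4.0, 0.0, 0.0], [0.0, 0.0, 4.0], [0.0, 4.0, 0.0]]
val_labels = [0, 1, 1, 2, 0]

T = fit_temperature(val_logits, val_labels)
print(f"T = {T:.2f}, NLL before = {nll(val_logits, val_labels, 1.0):.3f}, "
      f"after = {nll(val_logits, val_labels, T):.3f}")
```

A fitted T greater than 1 softens overconfident probabilities. The argmax of every example, and therefore the accuracy, is unchanged.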

| Metric | Initial | After NN-Kalman Filter Optimization | After NN-Alpha-Beta Filter Optimization |
|---|---|---|---|
| Root Mean Square Error (RMSE) | ~5.21 | ~2.38 (reduced by 54.22%) | ~3.22 (reduced by 38.2%) |

This table shows how feed-forward neural networks in machine vision systems can lower calibration errors. The NN-Kalman filter cut RMSE by over 54%, while the NN-Alpha-Beta filter reduced it by 38.2%. These improvements help computer vision systems become more accurate and dependable.
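RMSE is the square root of the mean squared difference between predictions and ground-truth values. The minimal Python sketch below shows the metric and the percent-reduction arithmetic behind figures like those in the table. The function names are illustrative. Because the table's RMSE values are rounded, recomputed percentages can differ slightly from the reported ones.

```python
import math


def rmse(predictions, targets):
    """Root mean square error: sqrt(mean((prediction - target)^2))."""
    if not predictions or len(predictions) != len(targets):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return math.sqrt(sum(squared) / len(squared))


def percent_reduction(before, after):
    """Relative improvement, in percent, from an initial error to a final error."""
    return 100.0 * (before - after) / before


# Rounded RMSE values from the calibration table.
print(round(percent_reduction(5.21, 2.38), 1))  # 54.3
print(round(percent_reduction(5.21, 3.22), 1))  # 38.2
```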

Feed-forward networks support many computer vision tasks, including image classification, object detection, and system calibration. Their ability to extract features and recognize patterns makes them essential for modern image recognition.

Comparison with Other Neural Networks

Feed-Forward vs. CNNs

Standard feed-forward neural networks use a simple, fully connected structure: each neuron connects to every neuron in the next layer. This design works well for basic image tasks in computer vision, such as simple pattern recognition or basic image classification. However, fully connected models do not use the spatial information in images. They treat every pixel as a separate input, which can limit their accuracy on complex images.

Convolutional neural networks, or CNNs, use a different approach. CNNs use filters to scan across images. These filters help the network find shapes, edges, and textures. CNNs work better for deep learning tasks that need to understand spatial patterns, such as object detection or face recognition. In artificial intelligence, CNNs have become the top choice for most advanced computer vision systems.
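Part of the difference between these two approaches shows up in parameter counts. The sketch below compares a fully connected layer with a convolutional layer on the same input. The layer sizes are hypothetical values chosen for illustration: a 28 × 28 grayscale image, 128 hidden units, and 32 filters of size 3 × 3.

```python
def dense_params(in_features: int, out_features: int) -> int:
    """One weight per input-output pair, plus one bias per output neuron."""
    return in_features * out_features + out_features


def conv2d_params(in_channels: int, out_channels: int, kernel: int) -> int:
    """One kernel x kernel x in_channels filter per output channel, plus one bias each."""
    return kernel * kernel * in_channels * out_channels + out_channels


# Hypothetical layer sizes for a 28 x 28 grayscale image.
print(dense_params(28 * 28, 128))  # 100480
print(conv2d_params(1, 32, 3))     # 320
```

The convolutional count does not depend on image size, because each small filter is reused at every position in the image. The dense count grows with the number of pixels.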

Tip: Use feed-forward models for simple visual tasks or when fast training is important. Choose CNNs for tasks that need high accuracy and spatial awareness.

| Feature | Feed-Forward Neural Networks | Convolutional Neural Networks |
|---|---|---|
| Layer Connections | Fully connected | Convolutional and pooling |
| Spatial Awareness | Low | High |
| Best Use Case | Simple image tasks | Complex image analysis |
| Training Speed | Fast | Slower |

Feed-Forward vs. RNNs

Feed-forward neural networks process each input independently. They do not remember past data. This makes them fast and easy to train. However, they struggle with tasks that need memory, such as analyzing a sequence of images.

Recurrent neural networks, or RNNs, use feedback loops. These loops help the network remember previous inputs. RNNs work well for deep learning tasks that involve sequences, such as video analysis or time-series prediction in artificial intelligence.
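The difference in memory can be sketched with a single scalar unit. In this minimal Python illustration, the weights `w`, `u`, and `b` are arbitrary hypothetical values. The feed-forward unit's output depends only on the current input. The recurrent unit updates a hidden state h_t = tanh(w·x_t + u·h_{t-1} + b), so earlier inputs in the sequence affect later outputs.

```python
import math


def feedforward_step(x, w=0.8, b=0.0):
    """Output depends only on the current input."""
    return math.tanh(w * x + b)


def rnn_run(xs, w=0.8, u=0.5, b=0.0):
    """Hidden state h carries information forward: h_t = tanh(w*x_t + u*h_{t-1} + b)."""
    h = 0.0
    outputs = []
    for x in xs:
        h = math.tanh(w * x + u * h + b)
        outputs.append(h)
    return outputs


seq_a = [1.0, 0.0, 0.5]
seq_b = [-1.0, 0.0, 0.5]  # same last input, different history
print(feedforward_step(seq_a[-1]) == feedforward_step(seq_b[-1]))  # True
print(rnn_run(seq_a)[-1] == rnn_run(seq_b)[-1])                    # False
```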

Statistical studies show that RNNs perform closer to human levels in sequential tasks. For example, in artificial grammar learning, RNNs match human performance across most grammar types. Feed-forward models perform worse, especially as the sequence gets longer. RNNs need more training data and take longer to train, but they handle complex sequences better.

Note: Use feed-forward networks for single-image tasks. Choose RNNs for tasks that need to process sequences or remember past information.

Limitations of Feed-Forward Neural Networks in Vision

Scalability Challenges

Feed-forward neural networks face several challenges when scaled for large vision tasks. As the size of the network grows, the need for more computing power and memory increases. This can make it hard to deploy these models in real-world systems, especially when handling large amounts of image data or serving many users at once.

- Overfitting becomes more likely as networks get bigger and more complex.

- Training large models often requires advanced infrastructure, such as distributed computing or cloud-based solutions.

- Teams may use tools like Docker containers or Kubernetes to manage and scale these systems.

- Scaling up often means higher costs and the need for system redesign.

Teams must balance model performance with available resources to avoid bottlenecks and slowdowns.

Lack of Spatial Awareness

Feed-forward neural networks do not naturally understand the spatial relationships in images. They treat each pixel or feature as separate, missing how parts of an image connect or relate to each other. Studies show that the first layer of a simple feed-forward CNN has a high similarity to basic image features (r = 0.89 for pixel space), but a very low correlation with high-level spatial features (r = 0.15 for spatial location). Capsule networks, by comparison, show much better spatial understanding.

This means feed-forward models often struggle with tasks that require recognizing shapes, positions, or the arrangement of objects in an image. They focus on low-level details and miss the bigger picture.
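One way to see that a fully connected layer has no built-in notion of neighboring pixels is to scramble the pixel order. The minimal Python sketch below uses a hypothetical 4 × 4 image flattened to 16 inputs. It shuffles the pixels with a fixed permutation and reorders the weight columns the same way, and the layer's outputs come out identical. The layer treats the pixel order as arbitrary, so any spatial relationships must be learned from data rather than provided by the architecture.

```python
import random


def dense(x, weights):
    """Fully connected layer without bias: one weighted sum per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


rng = random.Random(42)
n_pixels, n_hidden = 16, 3  # hypothetical 4 x 4 image flattened to 16 inputs
image = [rng.random() for _ in range(n_pixels)]
weights = [[rng.uniform(-1, 1) for _ in range(n_pixels)] for _ in range(n_hidden)]

# Scramble the pixel order with a fixed permutation and reorder weight columns to match.
perm = list(range(n_pixels))
rng.shuffle(perm)
scrambled_image = [image[i] for i in perm]
scrambled_weights = [[row[i] for i in perm] for row in weights]

original = dense(image, weights)
scrambled = dense(scrambled_image, scrambled_weights)
print(all(abs(a - b) < 1e-12 for a, b in zip(original, scrambled)))  # True
```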

Overfitting Risks

Overfitting is a common problem in feed-forward neural networks, especially in vision tasks. When a model learns too much from the training data, it may start to memorize noise or irrelevant patterns. This leads to poor results on new, unseen images.

- Overfitting often happens with large networks or small datasets.

- Regularization methods like dropout and early stopping help reduce this risk.

- Reducing the number of features or using more data can also help.
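Two of these regularization methods can be sketched in a few lines of plain Python. This is a minimal illustration, not a framework implementation. `inverted_dropout` zeroes activations at random during training and rescales the survivors so their expected value is unchanged. `early_stopping_epoch` keeps the epoch with the lowest validation loss and stops after a hypothetical `patience` of 3 epochs without improvement. The validation-loss values are made up for illustration.

```python
import random


def inverted_dropout(activations, p, rng, training=True):
    """Zero each activation with probability p in training; scale survivors by 1/(1-p)."""
    if not training or p == 0.0:
        return list(activations)  # at inference time, dropout is a no-op
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def early_stopping_epoch(val_losses, patience=3):
    """Return the best epoch, stopping once validation loss fails to improve for `patience` epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


# Hypothetical validation curve: improves, then rises as the model starts to overfit.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
print(early_stopping_epoch(losses, patience=3))  # 3
```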

A study found that deep feed-forward networks with certain activation functions, like softplus, showed higher test losses and became less stable. Training for too long or using the wrong hyperparameters can make overfitting worse.

| Aspect | Description |
|---|---|
| Model Type | Deep feed-forward neural networks |
| Key Risks | Overfitting, unstable gradients, poor generalization |
| Mitigation Strategies | Regularization, hyperparameter tuning, early stopping, dimension reduction |

Careful tuning and regularization are key to building feed-forward neural networks that generalize well in vision tasks.

A feed-forward neural network uses input, hidden, and output layers with non-linear activation functions. This design helps the network model complex patterns in images. Recent research shows that a feed-forward neural network reached about 97.7% accuracy on the MNIST dataset. These networks work well for image classification and other machine vision tasks. However, they may not match the efficiency of specialized models like CNNs. For simple tasks, a feed-forward neural network is a strong choice. For complex vision problems, advanced architectures may give better results.

FAQ

What makes feed-forward neural networks different from other neural networks?

Feed-forward neural networks move data in one direction only. They do not use loops or memory. This design makes them simple and fast for many vision tasks.

Can feed-forward neural networks handle color images?

Yes, feed-forward neural networks can process color images. Each pixel’s color values enter the input layer as separate features. The network learns patterns from these values to classify or detect objects.
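Here is a minimal sketch of how a color image becomes input features. It assumes an image stored as rows of (R, G, B) tuples with 8-bit values, which is a hypothetical layout. Flattening turns an H × W color image into 3 × H × W input features. Scaling values to [0, 1] is a common but optional preprocessing choice.

```python
def flatten_rgb(image):
    """Turn an H x W image of (R, G, B) tuples into one flat list of 3*H*W features in [0, 1]."""
    return [channel / 255.0 for row in image for pixel in row for channel in pixel]


# Hypothetical 2 x 2 RGB image with 8-bit channel values.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
features = flatten_rgb(image)
print(len(features))  # 12
```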

Why do feed-forward neural networks need activation functions?

Activation functions help the network learn complex patterns. Without them, the network could only solve simple problems. Functions like ReLU or sigmoid add non-linearity, making the network more powerful.

When should someone use a feed-forward neural network in machine vision?

Feed-forward neural networks work best for simple image tasks. They suit projects that need fast results and do not require understanding spatial relationships. For complex images, other models like CNNs may work better.
