An embedded machine vision system combines a camera, processor, and software in one compact unit to analyze images or video in real time. It helps machines "see" and make decisions, often improving speed and accuracy. For example, smart surveillance cameras use embedded vision to spot faces or detect motion on the device itself. The global market for these systems is growing steadily, with its value forecast to exceed $22 billion by 2032.

| Year | Market Size (USD Billion) | CAGR (%) | Notes |
|---|---|---|---|
| 2023 | 10.75 | – | Base year market size |
| 2024 | 11.61 | 8.7 | Projected market size |
| 2032 | 22.59 | 8.7 | Forecasted growth |
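As a quick check on the figures in the table above, the compound annual growth rate can be recomputed from the start and end values. The sketch below uses the standard CAGR formula. The function name `cagr` is an illustrative choice, not something from the source.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.087 means 8.7%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes from the table, in USD billions.
forecast_cagr = cagr(11.61, 22.59, years=2032 - 2024)
first_year_growth = cagr(10.75, 11.61, years=1)

print(f"2024-2032 CAGR: {forecast_cagr:.1%}")
print(f"2023-2024 growth: {first_year_growth:.1%}")
```

Under these inputs the 2024 to 2032 forecast works out to roughly 8.7% per year, which matches the table. The single step from 2023 to 2024 is closer to 8.0%, so the 8.7% in the 2024 row is best read as the forecast-period rate rather than that year's growth.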

These systems play a key role in industries like manufacturing, healthcare, and automotive by making processes safer and more efficient.

Key Takeaways

- Embedded machine vision systems combine cameras, processors, and software into one small device that analyzes images quickly and efficiently.

- These systems work in real time without needing external computers, which makes them faster, smaller, and more energy-efficient than traditional machine vision setups.

- They help industries like manufacturing, healthcare, and automotive improve safety, quality control, and automation by detecting defects and guiding robots.

- Key hardware includes image sensors, lenses, processors, and protective housings, while software uses AI models to recognize objects and patterns instantly.

- Embedded vision systems are flexible and easy to integrate, making them ideal for mobile, remote, or space-limited environments and everyday smart devices.

Embedded Systems Machine Vision System

What It Is

An embedded machine vision system is a compact device that allows machines to see and understand their surroundings. These systems combine cameras, processors, and software into a single unit. They do not need an external computer to work. Instead, they use on-board hardware such as GPUs, FPGAs, or SoCs to process images and make decisions right on the device. This design helps the system analyze visual data quickly and efficiently.

Key features of embedded machine vision systems include:

- Integration of image capture and processing in one device

- Real-time analysis and decision-making

- Compact and flexible design for use in small spaces

- Low power consumption, making them suitable for portable or battery-powered devices

- Cost efficiency due to fewer components and less wiring

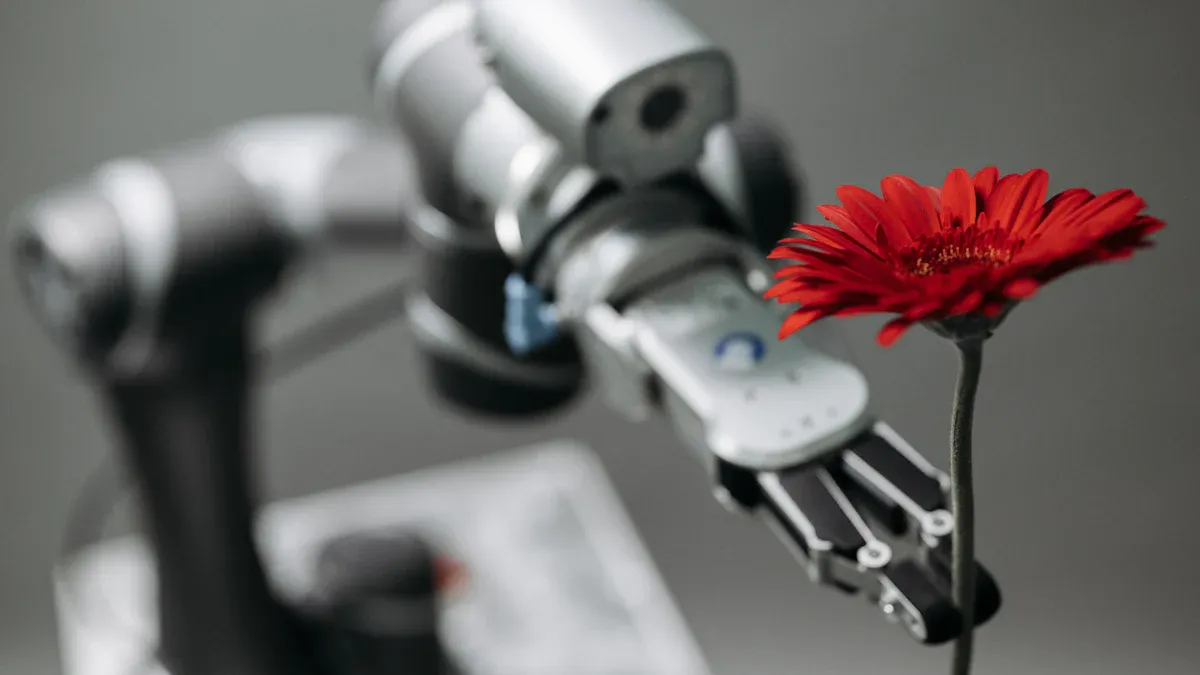

Embedded vision systems support many applications. They work in industrial automation, robotics, consumer electronics, and other fields where speed and accuracy matter. These systems help with tasks like object detection, pattern recognition, and motion tracking. They also provide real-time visual feedback, which is important for safety and quality control.

Note: Embedded machine vision systems often use specialized processors such as NVIDIA Jetson or NXP i.MX. These processors allow the system to run AI models and perform complex tasks on the device with low latency.

How It Works

Embedded machine vision systems work by combining several parts into a single, self-contained unit. The main components include:

- Imaging sensors (such as CMOS or CCD)

- Integrated processors (like ARM SoCs or vision processing units)

- Memory for storing data

- Optics and lighting for clear image capture

- Communication interfaces for connecting to other devices

The system starts by capturing images or video through its camera. The processor then analyzes this data in real time. It uses software and AI models to recognize objects, track movement, or check for defects. All of this happens inside the device, without sending data to an external computer.
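The capture, analyze, and decide flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a real camera pipeline. The synthetic 4x4 frames stand in for a sensor, and the names `capture_frames`, `analyze`, `run_pipeline`, and both thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterator, List

Frame = List[List[int]]  # grayscale image as rows of 0-255 pixel values


@dataclass
class Decision:
    frame_index: int
    dark_pixel_ratio: float
    reject: bool


def capture_frames() -> Iterator[Frame]:
    """Stand-in for a camera driver: yields two tiny synthetic 4x4 frames."""
    clean = [[200] * 4 for _ in range(4)]
    blemished = [row[:] for row in clean]
    blemished[1][1] = blemished[1][2] = blemished[2][1] = 20  # dark defect
    yield clean
    yield blemished


def analyze(frame: Frame, dark_threshold: int = 60) -> float:
    """Return the fraction of pixels darker than the threshold."""
    pixels = [p for row in frame for p in row]
    return sum(p < dark_threshold for p in pixels) / len(pixels)


def run_pipeline(max_dark_ratio: float = 0.1) -> List[Decision]:
    """Capture, analyze, and decide on each frame, all in one process."""
    decisions = []
    for i, frame in enumerate(capture_frames()):
        ratio = analyze(frame)
        decisions.append(Decision(i, ratio, reject=ratio > max_dark_ratio))
    return decisions


for d in run_pipeline():
    print(d)
```

The first frame passes and the second is flagged for rejection. On a real device the `analyze` step would typically be a trained model or an optimized image-processing routine, and the decision would drive an actuator or an output signal.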

| Feature | Traditional Machine Vision | Embedded Vision Systems |
|---|---|---|
| Image Capture and Processing | Processing on separate PC | Integrated in one device |
| Size and Power | Larger, higher power use | Compact, low power |
| Real-Time Analysis | Often delayed by data transfer | Immediate, on-device |
| Cost and Cabling | More expensive, more wiring | Lower cost, less wiring |

Embedded machine vision technology allows for real-time processing and immediate action. For example, in a factory, the system can spot a faulty product and trigger its removal from the line right away. In a smart camera, it can detect faces or movement as soon as they appear. This speed comes from the close integration of hardware and software, which reduces latency and boosts reliability.

Embedded vision technology also supports AI and machine learning. Models can be retrained on new data and redeployed, so the system can come to recognize new patterns or objects over time. This makes it useful for changing environments, like those faced by autonomous vehicles or drones. The low-power design means these systems can run on batteries, making them well suited to portable devices.

Embedded machine vision systems have changed how industries use visual data. They provide fast, accurate, and reliable results in real time. With embedded computing and advanced image processing, these systems continue to grow in importance across many fields.

Embedded vs. Traditional Machine Vision

Processing Differences

Machine vision systems help machines see and understand the world. Traditional machine vision systems use separate parts for image capture and processing. A camera or sensor collects the image, then sends it to a powerful computer for analysis. This setup often needs many cables and takes up more space.

Embedded machine vision systems work differently. They combine the camera, processor, and software into one small device. The system captures and processes images right where the camera is. This design allows for real-time analysis and decision-making. The device does not need to send data to an external computer, which reduces delays.

The table below shows the main differences in how these systems process data:

| Aspect | Traditional Machine Vision Systems | Embedded Machine Vision Systems |
|---|---|---|
| Integration | Separate image capture and processing | All-in-one device with camera, processor, and software |
| Processing Location | External, server-class computer | On-device, edge computing |
| Processing Capabilities | High-level, complex processing | Tailored, application-specific processing |
| Size and Footprint | Large, needs more space | Compact, fits in small spaces |
| Energy Consumption | High power use | Low power use |
| Operational Costs | Higher, due to more hardware and maintenance | Lower, with fewer components |
| Connectivity | Needs cables and network connections | Works without extra connections |
| Application Suitability | Best for large factories and controlled settings | Ideal for mobile, remote, or space-limited environments |

On-device processing in embedded machine vision systems leads to much lower latency. The system analyzes images directly inside the camera hardware. This means it can make decisions almost instantly. Traditional machine vision systems send data to an external computer, which takes more time. This delay makes traditional systems less suitable for real-time tasks, such as automated defect detection or fast-moving robotics.
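One contributor to that delay is simply moving each raw frame across a link before analysis can begin. The sketch below estimates the ideal transfer time for a single uncompressed frame. The resolution, bit depth, and 1 Gbit/s link speed are assumed example values, and the calculation ignores protocol overhead, buffering, and compression.

```python
def transfer_time_ms(width: int, height: int, bits_per_pixel: int,
                     link_bits_per_second: float) -> float:
    """Ideal time to move one uncompressed frame across a link, in ms."""
    frame_bits = width * height * bits_per_pixel
    return frame_bits / link_bits_per_second * 1000


# Assumed example: 1920x1080, 8-bit monochrome, 1 Gbit/s link.
per_frame = transfer_time_ms(1920, 1080, 8, 1e9)
print(f"{per_frame:.1f} ms per frame before any processing starts")
```

With these assumed numbers the transfer alone takes about 16.6 ms per frame. That is a large share of the budget for a system that must react within a few tens of milliseconds, and it is one reason that processing next to the sensor helps.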

Key Advantages

Embedded vision systems offer several important benefits over traditional machine vision systems. These advantages make them popular in many automated and real-time applications.

- Embedded machine vision systems are much smaller and lighter. Their compact design fits into tight spaces, such as drones or portable medical devices.

- These systems use less power. They can run on batteries, making them perfect for mobile or remote uses.

- The integration of camera and processor into one device reduces the need for extra hardware and cables.

- Embedded vision systems use processors designed for smartphones and other small electronics. This helps lower energy use and keeps the system cool.

- The system performs real-time processing near the sensor, which saves power and speeds up decision-making.

- Embedded machine vision systems are easier to install and maintain. Their simple design means fewer parts can break or need repair.

- These systems work well in places where traditional machine vision systems cannot fit or where power is limited.

Tip: Embedded vision systems are ideal for automated tasks that need quick responses, such as sorting products on a conveyor belt or guiding robots in real time.

Cost and responsiveness also set embedded machine vision apart. These systems analyze images locally, so they do not need to send data to an external computer. This local processing improves real-time performance and reduces the need for network connections. Traditional machine vision systems often cost more to operate because they need extra computers and support.

| Aspect | Embedded Machine Vision Systems | Traditional Machine Vision Systems |
|---|---|---|
| Processing Location | On-device, real-time analysis and decision-making | External computers, higher latency |
| Responsiveness | Fast, real-time processing with low delay | Slower, due to data transfer and external processing |
| Cost Factors | Lower operational costs, less need for extra hardware | Higher costs, more hardware and support needed |
| Form Factor | Compact, works in places with limited space or connectivity | Larger, needs stable network and more space |
| AI Integration | Efficient AI models run on-device, supporting real-time automated applications | Often needs powerful external systems for AI tasks |
| Application Examples | Robotics, healthcare, smart traffic, agriculture, automated vehicles | Large factories, controlled environments |

Model distillation helps embedded machine vision systems run advanced AI models with less memory and processing power. This makes the system faster and more efficient, while keeping accuracy high. As a result, embedded vision systems can deliver real-time results in automated environments, even with limited resources.
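To make the idea concrete, here is a minimal sketch of one common distillation objective. A small "student" model is trained to match the temperature-softened outputs of a larger "teacher" by minimizing the KL divergence between the two distributions. The logits and the temperature of 4.0 are made-up illustrative values. In practice this term is usually combined with an ordinary loss on the true labels.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher temperature gives softer outputs."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float],
                      student_logits: List[float],
                      temperature: float = 4.0) -> float:
    """KL(teacher || student) on softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    return kl * temperature ** 2


# Hypothetical logits for a three-class problem.
teacher = [8.0, 2.0, 0.5]
close_student = [7.5, 2.2, 0.4]
far_student = [0.5, 2.0, 8.0]

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

A student whose outputs resemble the teacher's gets a small loss, and one that disagrees gets a much larger loss. Training pushes the compact model toward the teacher's behavior, which is what lets it fit on resource-limited hardware.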

Components of Embedded Machine Vision Systems

Hardware Elements

Embedded vision systems rely on several key hardware components. These parts work together to capture, process, and analyze images in real-time. The table below shows the main hardware elements and their roles:

| Hardware Component | Description |
|---|---|
| Image Sensor | Converts light into electrical signals. Uses CCD or CMOS technology. Shutter type matters for moving scenes: a global shutter exposes all pixels at once, while a rolling shutter reads rows in sequence and can distort fast motion. |
| Lens | Focuses light onto the image sensor. Types include manual, autofocus, and liquid lenses. The lens must match the sensor’s resolution for clear images. |
| Cover (Housing) | Protects the camera from dust, water, and impacts. Industrial systems often use IP67-rated covers for safety. The housing also helps with heat dissipation. |
| Processor | Handles real-time image processing. Some systems use ARM SoCs for energy efficiency. Others use GPUs for deep learning and complex detection tasks. |

Cameras play a central role in embedded vision systems. Many use FLIR cameras with CMOS sensors, such as Sony Pregius, for high-quality image capture. These cameras support interfaces like USB3, GigE, and MIPI CSI-2, making them suitable for both industrial and consumer applications. Recent advancements in embedded vision technology have led to smaller, faster, and more energy-efficient cameras.

Software and Algorithms

Software drives the intelligence behind embedded vision systems. The process starts with data collection, where cameras capture images for analysis. Next, labeling and annotation mark important features, such as defects or objects. Algorithm training uses these labeled images to teach the system how to recognize patterns. Validation checks the accuracy of the trained model. Deployment puts the model into real-time use, with ongoing updates to improve performance.
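The validation step can be illustrated with a small sketch that compares a model's predictions against held-out labels. Precision and recall are one common choice of metrics for defect detection. The source does not name specific metrics, and the labels and predictions below are made-up values.

```python
from typing import Sequence, Tuple


def precision_recall(labels: Sequence[bool],
                     predictions: Sequence[bool]) -> Tuple[float, float]:
    """Precision and recall for the positive ("defect") class."""
    tp = sum(l and p for l, p in zip(labels, predictions))
    fp = sum((not l) and p for l, p in zip(labels, predictions))
    fn = sum(l and (not p) for l, p in zip(labels, predictions))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical held-out set: True means "defective".
labels      = [True, True, True, False, False, False, False, True]
predictions = [True, True, False, False, True, False, False, True]

precision, recall = precision_recall(labels, predictions)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Precision shows how many flagged parts were truly defective, and recall shows how many defective parts were caught. Which one matters more depends on whether false rejects or missed defects are costlier for the application.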

| Category | Examples / Details |
|---|---|
| Software Tools | OpenCV (fast, real-time capable), Scikit-image (easy to use, slower) |
| Programming Languages | Python (simple), C++ (fast, real-time) |
| Traditional Algorithms | Edge detection, feature detection, segmentation, object detection |
| Deep Learning Models | CNNs, YOLOv5, YOLOX (optimized for edge devices like NVIDIA Jetson Nano) |
| Embedded System Types | Fully embedded software for specific tasks, or PC-based for more complex needs |

Real-time detection often uses AI models like YOLO, SSD, and RetinaNet. These models balance speed and accuracy, making them ideal for embedded computer vision. Developers use frameworks such as TensorFlow Lite and PyTorch Mobile to deploy image recognition and deep learning models on embedded hardware.
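Detectors in this family typically emit many overlapping candidate boxes, which are then pruned by non-maximum suppression (NMS). The sketch below shows a plain-Python version of intersection over union (IoU) and greedy NMS. The boxes, scores, and 0.5 threshold are illustrative assumptions. Production systems normally rely on the optimized routine shipped with their framework.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corners


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with a kept one.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # → [0, 2]
```

The second box overlaps the first heavily and has a lower score, so it is dropped. The third box is far away and survives.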

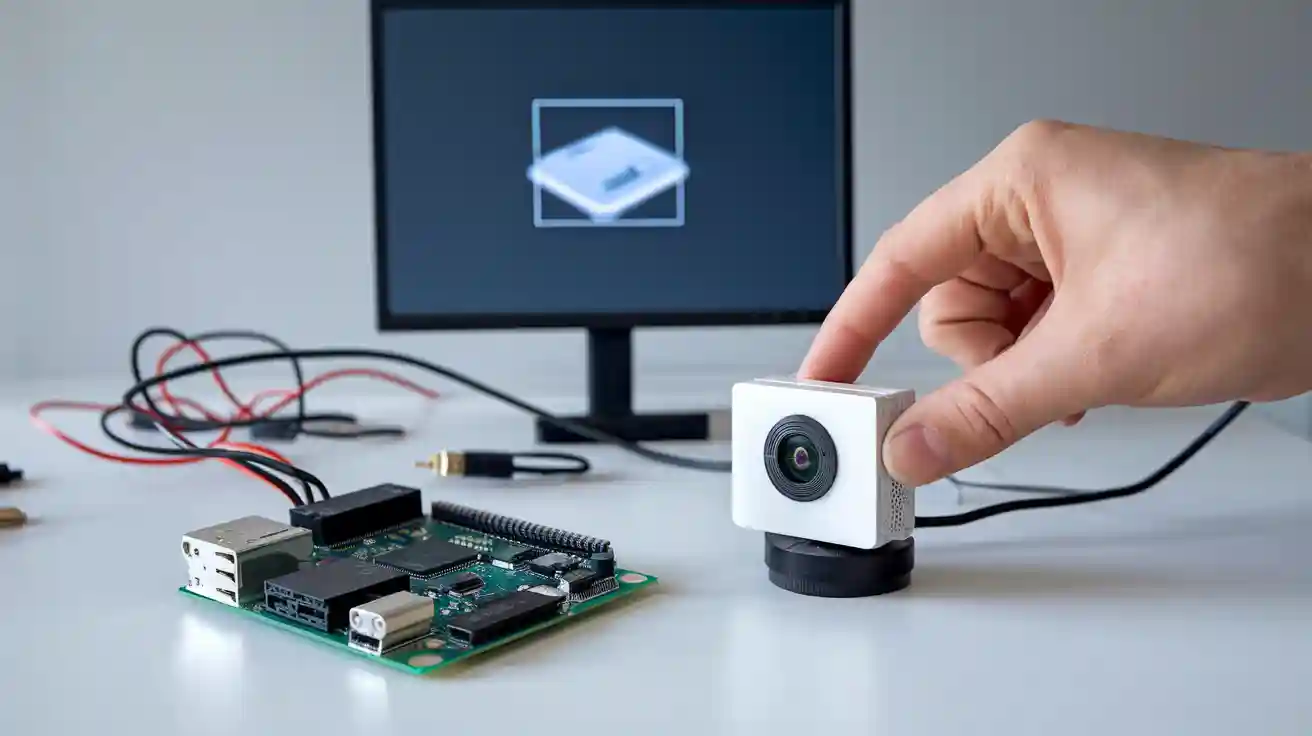

Integration Options

Integration options give embedded vision systems flexibility and modularity. Many systems use standard hardware interfaces like USB, GigE, and MIPI CSI-2 to connect cameras to processors. Single-board computers, such as Raspberry Pi or NVIDIA Jetson, provide a platform for real-time image processing. Systems on Module (SoMs) offer compact solutions with customizable features.

Modular designs let users mix and match cameras, sensors, and output boards. This approach supports easy upgrades and prototyping for different applications, such as robotics or smart surveillance.

Software development kits (SDKs) help connect embedded vision systems to other devices, like PLCs or lighting controllers. These SDKs support protocols such as Modbus and EtherNet/IP, making integration with industrial equipment easier. Some systems use FPGAs for specialized real-time image processing tasks.
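As an illustration of what such an integration involves at the protocol level, the sketch below builds a Modbus TCP "Write Single Coil" request. A vision system might send a request like this to tell a PLC to fire a reject actuator. The coil address, unit ID, and transaction ID are hypothetical, and sending the bytes over a socket is left out. A vendor SDK or an established Modbus library would normally handle this.

```python
import struct


def write_single_coil_request(transaction_id: int, unit_id: int,
                              coil_address: int, on: bool) -> bytes:
    """Build a Modbus TCP 'Write Single Coil' (function 0x05) request."""
    function_code = 0x05
    value = 0xFF00 if on else 0x0000  # ON and OFF encodings for a coil
    pdu = struct.pack(">BHH", function_code, coil_address, value)
    # MBAP header: transaction id, protocol id (0 = Modbus),
    # length of the bytes that follow (unit id + PDU), unit id.
    header = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return header + pdu


# Hypothetical mapping: coil 0x0010 drives the reject actuator.
frame = write_single_coil_request(transaction_id=1, unit_id=1,
                                  coil_address=0x0010, on=True)
print(frame.hex(" "))  # → 00 01 00 00 00 06 01 05 00 10 ff 00
```

All fields are big-endian. The length field counts the unit ID plus the five-byte PDU, which is why it reads 6 here.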

Embedded vision systems can adapt to many environments. Their modular design allows for quick changes in hardware or software, supporting new detection tasks or real-time requirements. This flexibility helps industries keep up with changing needs and technology.

Applications of Embedded Vision Systems

Industry Use Cases

Embedded vision systems have transformed manufacturing by making processes faster, safer, and more reliable. These systems handle many quality control tasks, such as visual inspection and defect detection, with high accuracy. In manufacturing, companies use automated inspection to check products on assembly lines. For example, Tesla uses over 75% automation, which speeds up production and reduces errors. Real-time visual inspection helps identify waste and improve efficiency. Manufacturers also use embedded vision for predictive maintenance, which detects early signs of equipment failure and reduces downtime.

Here are some common manufacturing applications:

- Product Assembly: Automated detection and placement of parts.

- Lean Manufacturing: Real-time data to spot inefficiencies.

- Quality Control: High-resolution cameras for defect detection.

- Predictive Maintenance: Monitoring equipment for early failure signs.

- Barcode and QR Code Reading: Fast, accurate product tracking.

- Safety: Ensuring workers follow safety protocols.

| Use Case Category | Description |
|---|---|
| Cutting and Sawing | Robots cut materials by assessing orientation and dimensions, improving safety and precision. |
| Material Handling, Picking, Packaging, and Placing | Robots use vision for object identification and obstacle detection in logistics. |
| Non-Destructive Testing (NDT) | Vision systems inspect product quality without damaging items. |
| Automated Dimensioning | Cameras measure freight dimensions for better warehouse management. |
| Machine Tending | Robotic arms use vision for precise loading and unloading. |
| Remote Warehouse Management | Telepresence robots use vision for navigation and inventory tasks. |

In the automotive sector, embedded vision holds about 40% of the market share. These systems support advanced driver-assistance, lane-keeping, and parking assistance. They play a key role in automotive component inspection and automated defect detection, making vehicles safer and more autonomous.

Everyday Devices

Embedded vision systems also improve daily life. In consumer electronics, these systems power automated inspection for smartphones and smart home devices. They enable real-time detection of defects and ensure high manufacturing quality control. AI-based vision applications help detect micro-defects and verify labeling, which reduces waste and improves product quality.

Many home automation products use embedded vision. Smart door locks recognize household members, while smart thermostats adjust settings based on occupancy. These devices use edge computing for fast, local decision-making. Industrial vision systems also appear in robotics, where they guide robots for picking, placing, and inspection tasks.

- Embedded vision adds intelligence to IoT devices.

- It supports autonomous decisions in home security and energy management.

- The technology makes devices smarter, more efficient, and safer.

Embedded vision systems continue to shape manufacturing, healthcare, automotive, and consumer electronics by making automated inspection and visual inspection faster and more accurate.

Embedded machine vision delivers many benefits:

- Cost-effective and energy-efficient designs fit into tight spaces.

- Real-time image recognition and defect detection improve quality control.

- These systems enable predictive maintenance and automate supply chains.

- Real-time processing supports high-precision tasks in robotics and manufacturing.

Real-time analysis transforms industries and daily life by reducing errors and boosting productivity. Companies like Haier use real-time embedded vision for faster, smarter production. As technology advances, more people can explore real-time solutions for smarter, safer environments.

FAQ

What is the main benefit of using embedded machine vision systems?

Embedded machine vision systems process images quickly inside the device. This design saves time and energy. Many industries use these systems to improve safety and quality.

Can embedded vision systems run artificial intelligence models?

Yes. Many embedded vision systems use special processors to run AI models. These models help the system recognize objects, faces, or patterns in real time.

Where do people use embedded machine vision systems?

People use these systems in factories, cars, hospitals, and homes. For example, robots use them to check products, and smart cameras use them for security.

How do embedded vision systems save energy?

Embedded vision systems use low-power processors. They do not need large computers. This design helps save electricity and allows the systems to run on batteries.
