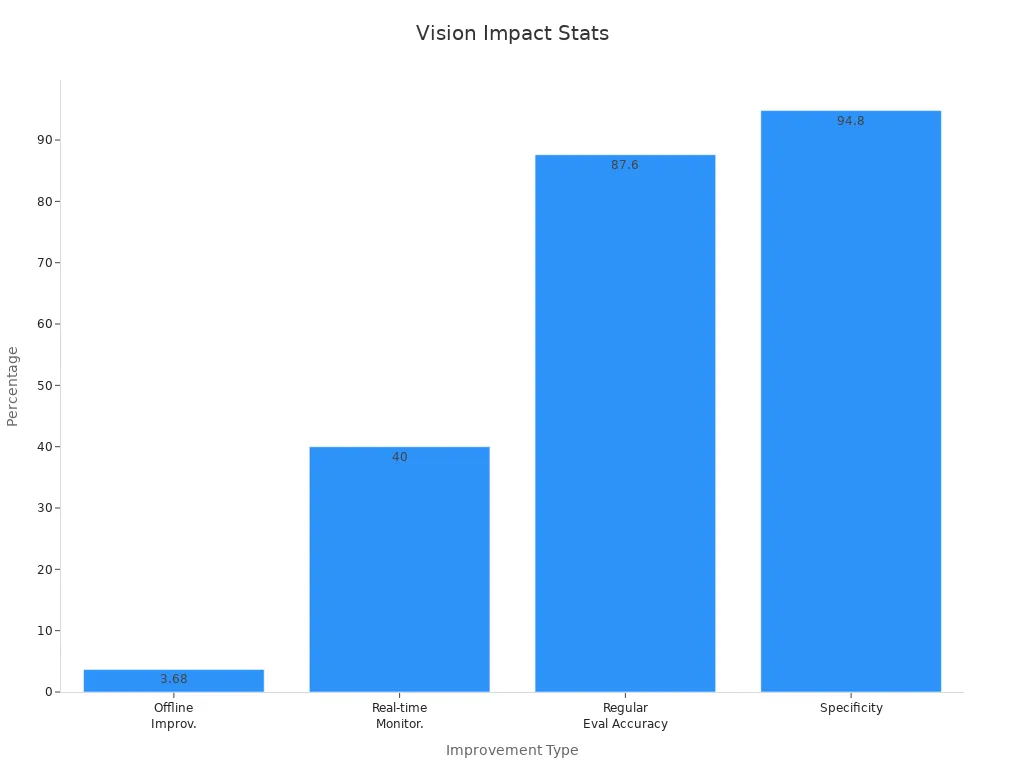

A classification machine vision system uses computer vision to sort objects or features in digital images into categories. These systems play a key role in automated quality control for industries that demand high accuracy. Companies have reported up to an 80% reduction in labor costs and a 20-30% decrease in maintenance expenses after adopting machine vision systems. Deep learning and AI improve the speed and precision of vision systems, whether they are 1D, 2D, or 3D. The technology enables real-time decision-making, as shown in the chart below.

Key Takeaways

- Classification machine vision systems sort images to help computers recognize objects and defects quickly and accurately.

- Different types of vision systems—1D, 2D, and 3D—work best for specific tasks, with 1D systems often being faster and simpler.

- Good image preprocessing and feature extraction improve system accuracy by focusing on important details like shapes and colors.

- AI and deep learning, especially convolutional neural networks, boost classification speed and accuracy beyond traditional methods.

- Machine vision systems improve quality control by detecting defects faster and more reliably, reducing costs and increasing safety.

Classification in Machine Vision Systems

What Is Classification?

A classification machine vision system helps computers understand and organize images. In these systems, classification means sorting images into groups based on what they show. For example, a vision system can look at a picture and decide if it shows a good product or a defective one. This process uses pattern recognition to find shapes, colors, or textures that match certain categories. Pattern recognition systems help machines learn from many examples, making them better at sorting and classification over time.

Classification can be simple or complex. Binary classification sorts images into two groups, like "pass" or "fail." Multi-class classification puts images into more than two groups, such as sorting apples, oranges, and bananas. Machine vision systems use object recognition to spot and label items in pictures. These systems rely on technology like deep learning and convolutional neural networks (CNNs) to improve accuracy. CNNs help the vision system focus on important parts of an image, making recognition more reliable.
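The difference between binary and multi-class decisions can be sketched in a few lines. This is a minimal illustration, assuming a trained model has already produced either a single defect probability (binary case) or one raw score per class (multi-class case). The 0.5 threshold, the function names, and the fruit labels are hypothetical.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max score for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify_binary(defect_probability, threshold=0.5):
    """Binary decision: 'fail' when the defect probability reaches the threshold."""
    return "fail" if defect_probability >= threshold else "pass"

def classify_multiclass(scores, labels):
    """Multi-class decision: pick the label with the highest probability."""
    probs = softmax(scores)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]

print(classify_binary(0.82))
label, p = classify_multiclass([2.0, 0.5, 0.1], ["apple", "orange", "banana"])
print(label, round(p, 2))
```

In a real system the threshold is usually tuned on validation data, because it trades missed defects against false rejects.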

Note: The effectiveness of a classification machine vision system depends on high-quality data, and measuring that effectiveness depends on choosing the right evaluation metrics. Metrics like accuracy, precision, recall, and F1 score show how well the system works.

| Metric | Definition / Formula | Use Case / Interpretation |
|---|---|---|
| Accuracy | (True Positives + True Negatives) / Total Predictions | Measures overall correctness of classification |
| Precision | True Positives / (True Positives + False Positives) | Indicates accuracy of positive predictions |
| Recall | True Positives / (True Positives + False Negatives) | Measures ability to find all positive cases |
| F1 Score | 2 * (Precision * Recall) / (Precision + Recall) | Balances precision and recall |
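The formulas in the table translate directly into code. This sketch assumes you already have the four confusion-matrix counts for a binary "defect vs. good" task; the counts in the example are hypothetical.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    # Guard against division by zero when there are no positive predictions/cases
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical inspection run: 90 defects caught, 10 false alarms,
# 30 defects missed, 870 good parts correctly passed.
print(classification_metrics(tp=90, fp=10, fn=30, tn=870))
```

With these counts the system scores 96% accuracy, yet recall is only 0.75, so one defect in four slips through. This is why precision and recall matter when defects are rare.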

Types of Classification Tasks

Machine vision systems handle different types of sorting and classification tasks. Some tasks are easy, like telling if a light is on or off. Others are harder, such as recognizing handwritten numbers or sorting mixed objects on a conveyor belt. Pattern recognition helps the system learn from many images, so it can handle both simple and complex jobs.

Researchers have tested the performance of classification machine vision systems on many problems. In one study, humans solved most visual tasks quickly, while machines needed thousands of examples to reach similar accuracy. Machines performed better with more training data and advanced features, but some tasks remained difficult. The study compared error rates on simple and complex tasks and found that machines still struggle with abstract reasoning.

Machine vision systems often use a two-step process for better results. First, one network makes a prediction. If its confidence is low, a second network examines the image again. This method improves reliability and helps the system focus on the right parts of the image, as shown in the chart below.
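The two-step process can be expressed as a simple confidence-gated cascade. This is a sketch under stated assumptions: each "model" is any callable that returns one probability per class, and the 0.9 confidence cutoff and the function name `cascade_predict` are hypothetical choices, not values from the study.

```python
from typing import Callable, Sequence

# A model takes an image and returns one probability per class.
Model = Callable[[object], Sequence[float]]

def cascade_predict(image, first: Model, second: Model, labels, min_confidence=0.9):
    """Use the first model when it is confident; otherwise defer to the second."""
    probs = first(image)
    best = max(range(len(labels)), key=lambda i: probs[i])
    if probs[best] >= min_confidence:
        return labels[best], "stage1"
    # The first model is unsure, so the second network re-examines the image
    probs = second(image)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], "stage2"

# Toy stand-in models for illustration only
unsure_model = lambda img: [0.6, 0.4]
careful_model = lambda img: [0.1, 0.9]
print(cascade_predict("part.png", unsure_model, careful_model, ["good", "defect"]))
```

A common design choice is to make the first model small and fast, so the slower second model only runs on the minority of images where the first one hesitates.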

A classification machine vision system becomes more powerful when it uses the right data, strong pattern recognition, and advanced technology. These systems now play a key role in sorting, recognition, and quality control across many industries.

System Types and Image Processing

1D, 2D, and 3D Systems

Machine vision systems come in three main types: 1D, 2D, and 3D. Each type works best for different tasks. A 1D vision system looks at data along a single line, for example when reading barcodes or checking the edges of flat materials. These systems often work faster and use less computing power. In real-world tests, 1D models like 1D-AlexNet reached an average accuracy of about 82%, much higher than the roughly 64% scored by 2D models like 2D-AlexNet. 1D systems also need fewer resources for training and do not require converting signals into images, which keeps processing simpler.
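Working along a single line can be illustrated with a classic edge check on one scan line of pixel intensities. This sketch is not the 1D-AlexNet model mentioned above. It assumes 8-bit intensities, a dark background, a bright material, and a hypothetical jump threshold of 50.

```python
def find_edges(scanline, min_step=50):
    """Return indices where intensity jumps by at least min_step between neighbors."""
    edges = []
    for i in range(1, len(scanline)):
        if abs(scanline[i] - scanline[i - 1]) >= min_step:
            edges.append(i)
    return edges

def material_width(scanline, min_step=50):
    """Width in pixels between the first and last detected edge, or None."""
    edges = find_edges(scanline, min_step)
    if len(edges) < 2:
        return None
    return edges[-1] - edges[0]

# Dark background (20), bright material (200) in the middle of the line
line = [20] * 5 + [200] * 10 + [20] * 5
print(find_edges(line), material_width(line))
```

Because each line is only a short list of numbers, this kind of check is cheap enough to run on every line a line-scan camera produces.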

A 2D vision system captures flat images, like photos. It helps with tasks such as sorting objects by shape or color. Some advanced 2D models, like EfficientNet-B4, can get close to the accuracy of 1D systems, but 1D models usually keep an edge in speed and simplicity.

A 3D vision system collects depth information, so it can measure the height, width, and depth of objects. This type is useful for checking the shape of flexible items or complex parts. 3D systems face challenges when objects change shape or move, which makes encoding spatial details very important. Clear statistical comparisons between 3D systems and 1D or 2D systems are not yet available, but 3D systems help solve problems that need more than flat images.
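Measuring height and footprint from depth data can be sketched with NumPy. The sketch assumes a camera looking straight down at a flat conveyor belt at a known distance, a depth map in metres, and a hypothetical 5 mm noise floor. Converting pixel extents to millimetres would need camera calibration, which is omitted here.

```python
import numpy as np

def measure_object(depth_map, belt_distance, min_height=0.005):
    """Estimate height and pixel footprint of an object from a top-down depth map."""
    height_map = belt_distance - depth_map  # height above the belt at each pixel
    mask = height_map > min_height          # pixels that belong to the object
    if not mask.any():
        return None
    rows, cols = np.nonzero(mask)
    return {
        "max_height": float(height_map[mask].max()),
        "length_px": int(rows.max() - rows.min() + 1),
        "width_px": int(cols.max() - cols.min() + 1),
    }

# Synthetic depth map: belt 1.0 m away, a 5 cm tall box in one region
depth = np.full((10, 10), 1.0)
depth[2:5, 3:8] = 0.95
print(measure_object(depth, belt_distance=1.0))
```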

Image Acquisition and Preprocessing

A vision system starts by capturing images with cameras or sensors, and good image quality is key to accurate results. The next step is preprocessing: cleaning and improving the images before analysis, for example by removing noise, adjusting brightness, or sharpening edges. Filter combinations such as Median-Mean Hybrid or Unsharp Masking with Bilateral Filter have been reported to reach up to 87.5% preprocessing efficiency.
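The filter combinations named above are specific published pipelines. The sketch below only approximates the general idea, assuming a grayscale 8-bit image in a NumPy array: a 3x3 median filter removes salt-and-pepper noise, then unsharp masking (the image plus a scaled difference from a box-blurred copy) sharpens edges. It does not include a bilateral filter. In production code, OpenCV's `cv2.medianBlur` and `cv2.bilateralFilter` cover these steps.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _windows(img, k):
    """All k x k neighborhoods of img, with edge pixels replicated as padding."""
    padded = np.pad(img, k // 2, mode="edge")
    return sliding_window_view(padded, (k, k))

def median_filter(img, k=3):
    return np.median(_windows(img, k), axis=(-2, -1))

def mean_filter(img, k=3):
    return _windows(img, k).mean(axis=(-2, -1))

def unsharp_mask(img, amount=1.0, k=3):
    """Sharpen by adding back the detail removed by a box blur."""
    blurred = mean_filter(img, k)
    return img + amount * (img - blurred)

def preprocess(img):
    img = img.astype(np.float64)
    denoised = median_filter(img)               # remove isolated noisy pixels
    sharpened = unsharp_mask(denoised, amount=0.5)
    return np.clip(sharpened, 0, 255).astype(np.uint8)

noisy = np.full((5, 5), 100, dtype=np.uint8)
noisy[2, 2] = 255  # a single "salt" noise pixel
print(preprocess(noisy))
```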

Better preprocessing leads to higher accuracy. In one study, refining the algorithm lowered the mean square error from 0.02 to 0.005, indicating clearer images. Increasing the batch size during processing raised recognition accuracy from 58% to 74%. Methods like Canny edge detection and illumination correction help the vision system find features even in difficult conditions, such as low light or shadows. These steps make processing faster and more reliable, which is important for real-time tasks.
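Mean square error compares a processed image with a clean reference pixel by pixel: square each difference, then average. Lower values mean the two images are closer. The values quoted above appear to assume intensities scaled to the range 0 to 1, which this sketch also assumes; the tiny 2x2 images are made up for illustration.

```python
import numpy as np

def mean_squared_error(reference, processed):
    """Average squared pixel difference between two same-sized images."""
    reference = np.asarray(reference, dtype=np.float64)
    processed = np.asarray(processed, dtype=np.float64)
    if reference.shape != processed.shape:
        raise ValueError("images must have the same shape")
    return float(np.mean((reference - processed) ** 2))

reference = np.zeros((2, 2))
processed = np.array([[0.1, 0.0], [0.0, 0.1]])
print(mean_squared_error(reference, processed))
```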

Feature Extraction and Algorithms

Feature Engineering

Feature extraction helps machine vision systems simplify complex visual data. This step turns raw images into useful information that computers can understand. By focusing on important details, feature engineering makes classification faster and more accurate. Engineers use different methods to highlight shapes, edges, colors, or textures in an image. These features help pattern recognition systems spot differences between objects.
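Edge and shape features can be illustrated with a histogram of gradient orientations, the core idea behind HOG. This is a simplified whole-image version, assuming a grayscale NumPy array. Full HOG also splits the image into cells and normalizes over blocks; scikit-image's `skimage.feature.hog` provides that complete implementation.

```python
import numpy as np

def orientation_histogram(img, bins=9):
    """Histogram of gradient orientations (0-180 degrees), weighted by gradient magnitude."""
    img = np.asarray(img, dtype=np.float64)
    gy, gx = np.gradient(img)  # derivatives along rows (y) and columns (x)
    magnitude = np.hypot(gx, gy)
    # Unsigned orientation in [0, 180), as in HOG
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180
    hist, _ = np.histogram(angle, bins=bins, range=(0, 180), weights=magnitude)
    total = hist.sum()
    return hist / total if total > 0 else hist

vertical_edge = np.zeros((8, 8))
vertical_edge[:, 4:] = 1.0    # left dark, right bright
horizontal_edge = np.zeros((8, 8))
horizontal_edge[4:, :] = 1.0  # top dark, bottom bright
print(orientation_histogram(vertical_edge).round(2))
print(orientation_histogram(horizontal_edge).round(2))
```

The two images produce clearly different feature vectors, which is exactly what lets a classifier tell shapes apart.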

Many industries have seen big improvements from advanced feature engineering.

- Medical image analysis now detects diseases with an efficiency of 97.88% and an accuracy of 88.75% by using advanced feature extraction. This leads to better diagnostic results for patients.

- Autonomous vehicles use methods like Histogram of Oriented Gradients (HOG) to identify road signs, pedestrians, and vehicles. These systems work well even when lighting or weather changes.

- Facial recognition technology combines features such as HOG and Local Binary Patterns (LBP) to improve accuracy. Studies show that combining these features with dimensionality reduction helps systems recognize faces more reliably.

- Machine vision systems often face challenges with image quality and lighting. Multi-stream spatiotemporal models help these systems stay accurate in changing environments.

- Tools like PCA, TPOT, Featuretools, and OpenCV make feature engineering easier and more effective.

- Hybrid approaches that mix traditional feature engineering with deep learning boost both accuracy and efficiency.
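Combined feature vectors, such as HOG and LBP concatenated, can grow long, and PCA is one common way to compress them. This NumPy sketch assumes the features are already extracted, one row per image. scikit-learn's `sklearn.decomposition.PCA` offers the same operation with more options.

```python
import numpy as np

def pca_fit(features, n_components):
    """Learn the mean and top principal directions of a (samples x features) matrix."""
    X = np.asarray(features, dtype=np.float64)
    mean = X.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing variance
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]

def pca_transform(features, mean, components):
    """Project feature vectors onto the learned principal directions."""
    return (np.asarray(features, dtype=np.float64) - mean) @ components.T

# Toy 3-feature data that actually varies along a single direction
X = np.array([[t, 2 * t, 3 * t] for t in range(5)], dtype=np.float64)
mean, components = pca_fit(X, n_components=1)
Z = pca_transform(X, mean, components)
print(Z.shape)
```

Here three features collapse to one without losing information; on real image features, you would keep enough components to explain most of the variance.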

Tip: Good feature engineering reduces the amount of data the system needs to process, making pattern recognition faster and more reliable.

AI and Deep Learning

AI and deep learning have changed how machine vision systems classify images. These technologies use neural networks, especially convolutional neural networks (CNNs), to learn from large sets of labeled images. CNNs can find patterns in images that humans might miss. This makes them very good at tasks like object recognition and scene analysis.

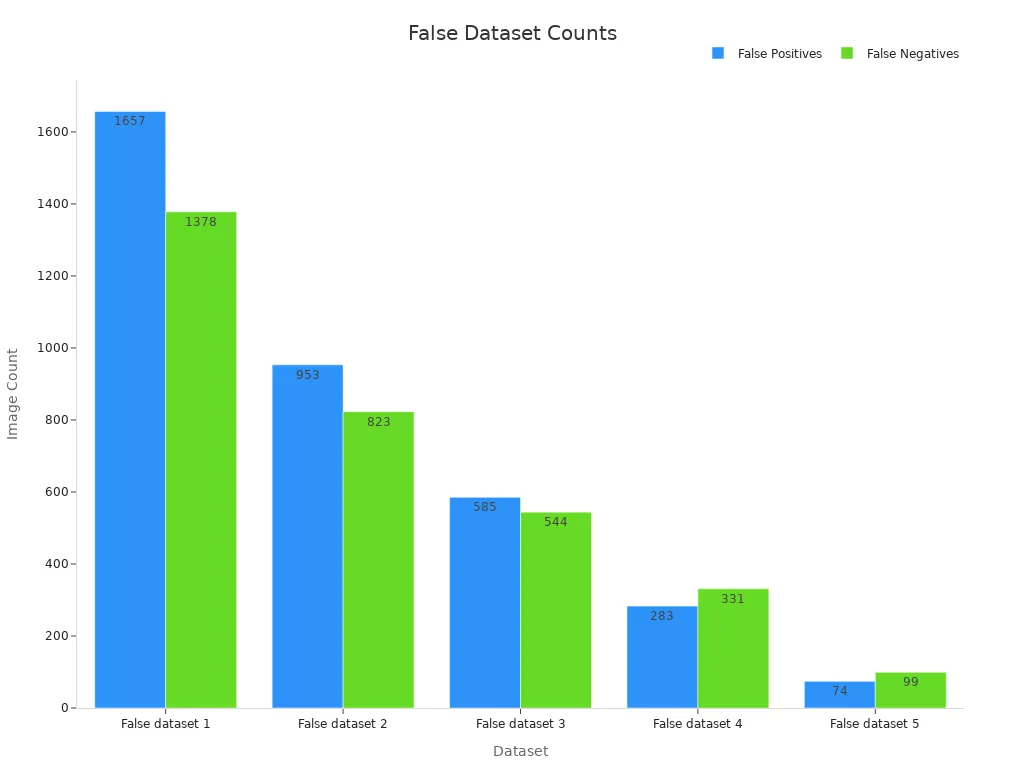
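What a single CNN layer does can be sketched with NumPy: slide a small kernel over the image (convolution), keep positive responses (ReLU), then downsample (max pooling). In a real CNN the kernel weights are learned from labeled images and frameworks such as PyTorch or TensorFlow handle this. Here the kernel is a hand-set vertical-edge detector chosen purely for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d(img, kernel):
    """'Valid' 2D cross-correlation, the operation CNN layers call convolution."""
    windows = sliding_window_view(img, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)

def relu(x):
    return np.maximum(x, 0)

def max_pool(x, size=2):
    """Keep the strongest response in each size x size block."""
    h, w = x.shape[0] // size, x.shape[1] // size
    x = x[: h * size, : w * size]  # drop rows/cols that do not fill a block
    return x.reshape(h, size, w, size).max(axis=(1, 3))

img = np.zeros((6, 6))
img[:, 3:] = 1.0                            # a vertical edge
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)   # responds to dark-to-bright steps
feature_map = relu(conv2d(img, kernel))
print(feature_map)
print(max_pool(feature_map))
```

The feature map lights up only where the edge is, and pooling keeps that signal while shrinking the data passed to the next layer.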

Performance metrics show the value of AI and deep learning in classification. Accuracy, precision, recall, F1 score, the ROC curve, and the area under it (AUC-ROC) measure how well a model works: whether it makes correct predictions, avoids mistakes, and balances different types of errors. Confusion matrices give a clear picture of true and false predictions, helping engineers improve their models.
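A confusion matrix and AUC-ROC can both be computed with plain Python. This sketch assumes string class labels for the matrix and, for AUC, binary labels (1 = defect) with one model score per image. It uses the rank interpretation of AUC: the chance that a randomly chosen positive scores higher than a randomly chosen negative. The pairwise loop is quadratic, so for real datasets `sklearn.metrics.confusion_matrix` and `sklearn.metrics.roc_auc_score` are the practical choices.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix

def roc_auc(y_true, scores):
    """AUC as the probability that a random positive outscores a random negative."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))

print(confusion_matrix(["ok", "ok", "defect", "defect", "ok"],
                       ["ok", "defect", "defect", "ok", "ok"],
                       labels=["ok", "defect"]))
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```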

- CNNs have improved accuracy by up to 15% on benchmark datasets.

- Compared to older machine learning models, CNNs perform about 20% better in key metrics.

- Error rates have dropped by nearly 20% in some cases, making these systems more reliable for important jobs.

- Deep learning tasks have seen a total performance boost of about 25%, including gains in speed and resource use.

- CNNs also use less memory and energy, which helps modern AI models run faster and more efficiently.

Peer-reviewed studies support these results.

- CNNs show strong results in classification, object detection, and scene analysis when trained on large labeled datasets.

- Elakkiya et al. built a cervical cancer diagnostic system using hybrid networks, which improved classification in medical images.

- Harrou et al. created a vision-based fall detection system that worked well in real homes.

- Pan et al. used deep learning for navigation mark classification, showing the practical value of AI in real-world tasks.

- These studies agree that learning-based approaches, especially deep learning, outperform static analysis methods in automation and intelligence.

- Using large datasets and advanced architectures makes machine vision systems more accurate and robust.

Pattern recognition plays a key role in these advances. AI-powered systems can process images quickly and spot patterns that help with classification. As technology improves, machine vision systems will continue to get better at recognition and decision-making.

Applications in Quality and Process Control

Industrial Inspection

Industrial inspection uses advanced vision inspection systems to check products and parts during manufacturing. These systems help companies maintain high quality and meet strict standards. For example, in electronics manufacturing, machine vision checks printed circuit boards for solder and alignment defects. This process reaches a 98% defect detection accuracy, compared to 85% for manual inspection. Inspection also becomes 60% faster, and costs drop by 20%. In the pharmaceutical industry, vision systems inspect vials for cracks, contamination, and labeling errors. These systems achieve 99.5% accuracy and process up to 300 vials per minute.

The table below shows more examples of improved quality control and inspection:

| Industry / Application | Improvement Details | Quantitative Impact / Results |
|---|---|---|
| Electronics Manufacturing | Detects solder/alignment defects | 98% accuracy, 60% faster, 20% cost reduction |
| Pharmaceutical Industry | Inspects vials for cracks and errors | 99.5% accuracy, 3x faster |
| Power Line Inspection | Drones inspect 250 km in 5 minutes | 400% more defects found, €3M saved yearly |
| Agriculture | Drones identify crop diseases | Maximizes yields, improves sustainability |

These examples show how vision inspection systems automate processes, increase efficiency, and support assembly verification. They also help with sorting and recognition tasks, making quality control tasks more reliable.

Automated Defect Detection

Automated defect detection uses machine vision to find flaws that humans might miss. Traditional inspection often suffers from fatigue, slow speed, and errors. Automated systems use AI and deep learning to spot defects quickly and accurately. For example, in manufacturing, these systems reach accuracy rates above 99.5%, while manual inspection stays between 85% and 90%. Inspection times drop by 40%, and labor costs fall by about 50%.
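The systems described here rely on AI and deep learning. To show the basic accept/reject flow, this sketch swaps in a simpler classic technique, golden-template comparison: each part image is compared with a defect-free reference, and the part is rejected if too many pixels differ. It assumes aligned, consistently lit grayscale images; both thresholds and the function name are hypothetical.

```python
import numpy as np

def inspect_part(image, golden, pixel_tol=30, max_defect_pixels=20):
    """Compare a part image with a defect-free 'golden' reference image."""
    # Cast to a signed type so uint8 subtraction cannot wrap around
    diff = np.abs(image.astype(np.int16) - golden.astype(np.int16))
    defect_mask = diff > pixel_tol  # pixels that differ noticeably from the reference
    defect_pixels = int(defect_mask.sum())
    verdict = "reject" if defect_pixels > max_defect_pixels else "accept"
    return verdict, defect_pixels

golden = np.full((20, 20), 120, dtype=np.uint8)
good = golden.copy()
good[0, 0] = 130                # small variation within tolerance
scratched = golden.copy()
scratched[5:10, 5:10] = 0       # a dark 5x5 defect
print(inspect_part(good, golden), inspect_part(scratched, golden))
```

A learned model replaces the fixed pixel rule when defects vary in appearance or parts cannot be aligned precisely.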

Automated systems also help with sorting and verification, making sure only high-quality products move forward. They collect data for continuous improvement and adapt to new quality control tasks. In food processing, machine vision sorts grains and predicts meat tenderness with high accuracy. In agriculture, drones with vision systems detect crop issues, leading to better resource use and sustainability.

Note: Automated inspection not only improves quality but also increases workplace safety by handling hazardous tasks.

Machine vision systems deliver improved quality control, increased efficiency, and better data management. They outperform traditional methods in speed, accuracy, and cost, helping industries meet strict quality standards.

Machine vision systems use steps like image capture, feature extraction, and classification to improve product quality and process control. AI and deep learning help these systems reach high accuracy and efficiency. Experts use metrics such as accuracy, precision, recall, and F1 score to measure how well these systems match human judgment and handle complex data. Companies now rely on machine vision to keep quality high and reduce errors. As AI grows, these systems will play an even bigger role in quality control.

- Accuracy: Shows how well the system predicts correctly.

- Precision: Measures the correctness of positive results.

- Recall: Checks if the system finds all important cases.

- F1 Score: Balances precision and recall for fair results.

FAQ

What is a classification machine vision system?

A classification machine vision system uses cameras and computers to sort or label objects in images. These systems help factories and businesses check products, find defects, and organize items quickly and accurately.

How does deep learning improve machine vision classification?

Deep learning helps machines learn from many images. It finds patterns that humans might miss. This technology increases accuracy and allows the system to handle more complex tasks, such as recognizing faces or reading handwriting.

Why do industries use machine vision for quality control?

Industries use machine vision because it works faster and more accurately than people. These systems reduce errors, save money, and help companies meet strict quality standards. They also keep workers safe by handling dangerous inspection jobs.

Can machine vision systems work in low light or with poor images?

Yes, modern machine vision systems use special filters and image processing tools. These tools clean up images, remove noise, and adjust brightness. This allows the system to work well even in tough conditions.

What are common challenges in machine vision classification?

Some challenges include poor image quality, changing lighting, and objects that look similar. Engineers solve these problems by using better cameras, advanced algorithms, and more training data.
