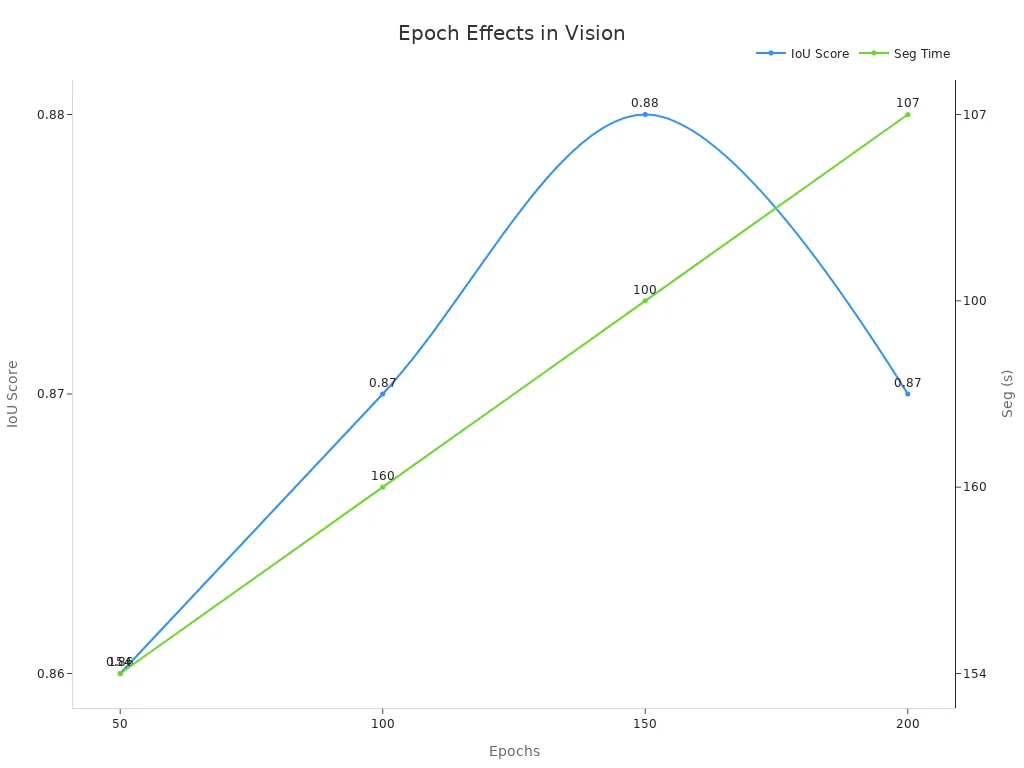

An epoch in a machine vision system is one complete pass in which the model processes every image in the training set once. Each epoch gives the model another opportunity to adjust its parameters and improve its accuracy. Choosing the right number of epochs helps a model avoid both underfitting and overfitting. For example, researchers trained a cardiac MRI segmentation model with different epoch counts. The table below shows how model performance changed as the number of epochs increased:

| Epochs | IoU Score | Variance | Segmentation Time for 366 Images (s) | Key Observation |
|---|---|---|---|---|
| 50 | 0.86 | 0.11 | 154 | Good initial performance |
| 100 | 0.87 | 0.11 | 160 | Improved accuracy |
| 150 | 0.88 | 0.10 | 100 | Best performance; optimal balance before overfitting |
| 200 | 0.87 | 0.11 | 107 | Slight overfitting observed |

The results show that the system reached its best IoU (0.88) at 150 epochs. At 200 epochs, IoU dropped slightly to 0.87, a sign of mild overfitting. This example shows why the epoch count is worth tuning rather than simply maximizing.

Key Takeaways

- An epoch means the model has seen every image in the training set once, helping it learn and improve with each cycle.

- Choosing the right number of epochs is crucial to avoid underfitting (too few epochs) and overfitting (too many epochs).

- Early stopping helps find a good number of epochs by halting training when performance on held-out validation data stops improving.

- Training uses batches and iterations: one iteration processes one batch, and one epoch equals processing all batches once, so the model learns step by step.

- Good data quality, balanced classes, and careful tuning of epochs lead to more accurate and reliable machine vision models.

Epoch Machine Vision System

Epoch Definition

An epoch in a machine vision system means the model has seen every image in the training dataset once. During each epoch, the model processes all the data, measures its errors with a loss function, and updates its parameters (weights) to reduce them. This cycle repeats many times, and the model's predictions typically improve with each pass. Repeated passes help the model recognize patterns, shapes, and features in images, and each epoch gives the learning algorithm a new chance to adjust and become more accurate.
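To make the idea concrete, here is a minimal sketch in plain Python. It is a deliberately small toy under stated assumptions: a one-variable linear model (`y ≈ w*x + b`) trained on synthetic data stands in for a vision network, and the function name `train_linear` and its default hyperparameters are hypothetical. The outer loop is the epoch loop: every example is used once per pass, and the parameters are updated after each mini-batch.

```python
import random

def train_linear(xs, ys, epochs, lr=0.05, batch_size=4, seed=0):
    """Fit y ~ w*x + b with mini-batch gradient descent (toy stand-in for a network).

    Each pass of the outer loop is one epoch: every example is used exactly once.
    Returns the fitted w, b and the training-set mean squared error after each epoch.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    history = []
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the examples in a new order every epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradients of the mean squared error over this batch only
            grad_w = sum(2 * (w * xs[i] + b - ys[i]) * xs[i] for i in batch) / len(batch)
            grad_b = sum(2 * (w * xs[i] + b - ys[i]) for i in batch) / len(batch)
            # One parameter update per batch (one iteration)
            w -= lr * grad_w
            b -= lr * grad_b
        # Loss over the whole training set, measured at the end of the epoch
        mse = sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
        history.append(mse)
    return w, b, history

# Synthetic data drawn exactly from y = 2x + 1
xs = [i / 10 for i in range(20)]
ys = [2 * x + 1 for x in xs]
w, b, history = train_linear(xs, ys, epochs=200)
print(round(w, 3), round(b, 3), history[0], history[-1])
```

Printing `history` epoch by epoch shows the loss shrinking as the passes accumulate, which is the behavior the paragraph describes.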

Machine vision systems rely on this repeated exposure to data. For example, in the IGLOO machine vision system, researchers trained a YOLOv8 model for 200–500 epochs. The table below shows how this approach improved accuracy and robustness:

| Aspect | Details |
|---|---|
| System | IGLOO machine vision system for solubilization index of phosphate-solubilizing bacteria |
| Model | YOLOv8 semantic segmentation |
| Epochs | 200–500 |
| Batch Size | 4 |
| Input Resolution | 800 × 800 pixels |
| Accuracy | >90% for bacterial colony and halo segmentation |
| F1 Score | 0.87–0.90 |
| Relative Error | <6% compared to manual measurements |
| Overfitting Risk | Slight risk at 500 epochs, marginal gains beyond 200 epochs |
| Validation Method | Confusion matrices, comparison with manual measurements |
| Practical Impact | Objective, efficient, reproducible quantification reducing observer variability |

In this study, more epochs improved accuracy only up to a point: gains were marginal beyond 200 epochs, and training to 500 epochs carried a slight risk of overfitting.

Importance

Epochs play a central role in training. Each epoch lets the model learn from the entire training dataset, making small improvements with every cycle, so how well the model learns depends on how many times it sees the data. If the model trains for too few epochs, it may not learn enough and will underfit. If it trains for too many, it may memorize the training dataset and overfit, losing the ability to generalize to new images.

Researchers often start with a set number of epochs, such as 50 or 100, and then monitor how the model performs. They adjust the number of epochs based on the results, sometimes using early stopping to prevent overfitting; this method stops training when the model's performance on held-out validation data stops improving. The following steps outline a typical approach:

- Set an initial number of epochs.

- Train the model and track training and validation loss after each epoch.

- Increase epochs if the model underfits, or use early stopping if it overfits.

- Repeat the process, tuning the number of epochs for the best results.

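Tip: Batch normalization can help the model learn faster and more reliably. During training, it normalizes each layer's inputs using the mean and variance of the current mini-batch, then applies a learned scale and shift. This often allows higher learning rates, which can reduce the number of epochs needed, and it can also improve generalization.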
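The core computation behind this tip can be sketched in plain Python. This is a minimal, training-mode version under simplifying assumptions: the input is a list of feature vectors, `gamma` and `beta` are passed in rather than learned, and the running averages that frameworks keep for inference are omitted. The function name `batch_norm` is illustrative.

```python
import math

def batch_norm(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalization for a mini-batch of feature vectors.

    batch: list of rows, one row per example, one column per feature.
    gamma, beta: per-feature scale and shift (learned in a real network).
    """
    n = len(batch)
    num_features = len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for j in range(num_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        # Biased (divide-by-n) variance of this feature over the mini-batch
        var = sum((v - mean) ** 2 for v in column) / n
        for i in range(n):
            x_hat = (column[i] - mean) / math.sqrt(var + eps)  # zero mean, unit variance
            out[i][j] = gamma[j] * x_hat + beta[j]            # learned scale and shift
    return out

batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]]
print(batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0]))
```

Although the two features differ in scale by a factor of ten, both come out with roughly zero mean and unit variance, which is what keeps later layers' inputs in a stable range from batch to batch.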

Real-world studies also show the limits of epoch tuning. In one study, a deep learning system was trained for 30 epochs on images captured under different lighting conditions. The network trained under good lighting reached 95.71% accuracy, while the one trained under poor lighting performed worse. Because the training length was the same, the gap points to data quality: enough epochs are necessary, but the quality of the training dataset also matters.

Training Process

Epoch Machine Learning

Epochs are central to training a machine vision model. An epoch marks one full pass through the entire training dataset. During each epoch, the network reviews every image and adjusts its parameters to reduce its errors. This cycle repeats many times, which helps the model improve its ability to recognize patterns and features.

Training usually runs for many epochs, and the model typically improves with each of the early ones. For example, convolutional neural networks on a large dataset like ImageNet are commonly trained for tens to a few hundred epochs. Over these passes, the network moves from learning simple shapes to capturing complex details in images. If training uses too few epochs, the model may underfit and miss important patterns. Too many epochs can cause overfitting, where the model memorizes the training data and performs poorly on new images.

Note: Techniques such as early stopping and learning rate schedules help control the number of epochs and improve the training process.

Epochs, batches, and iterations are related as follows:

- The training dataset is split into smaller groups called batches.

- Batches pass through the network one at a time.

- One iteration means the network has processed one batch and updated its parameters once.

- One epoch means the network has seen all batches once.

For example, if a dataset has 1000 images and the batch size is 100, there are 10 batches per epoch. If training runs for 20 epochs, the network completes 10 × 20 = 200 iterations.
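The arithmetic in this example can be checked with a short sketch. The helper names are hypothetical; the `drop_last` flag mirrors the option of the same name in PyTorch's `DataLoader`, which discards a final batch smaller than `batch_size`. When the dataset size is not a multiple of the batch size, keeping that partial batch adds one extra iteration per epoch.

```python
def iterations_per_epoch(num_examples, batch_size, drop_last=False):
    """Number of batches (iterations) needed to see the whole dataset once."""
    if drop_last:
        return num_examples // batch_size  # discard the final partial batch
    # Ceiling division: the final partial batch still counts as an iteration
    return (num_examples + batch_size - 1) // batch_size

def total_iterations(num_examples, batch_size, epochs, drop_last=False):
    """Iterations over a whole training run: batches per epoch times epochs."""
    return iterations_per_epoch(num_examples, batch_size, drop_last) * epochs

print(total_iterations(1000, 100, 20))                  # 200: 10 batches x 20 epochs
print(total_iterations(1050, 100, 20))                  # 220: 11 batches x 20 epochs
print(total_iterations(1050, 100, 20, drop_last=True))  # 200: partial batch dropped
```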

A simple analogy: Think of learning as reading a book. Each epoch is like reading the whole book once. Each batch is a chapter. Each iteration is reading one chapter. Multiple epochs mean reading the book several times to understand it better.

Parameter Updates

In mini-batch training, the network updates its parameters after each batch, that is, once per iteration. The learning rate sets the size of each update, and several strategies adjust it during training:

- Time-based decay lowers the learning rate over epochs, allowing big changes early and small changes later.

- Step decay reduces the learning rate after a set number of epochs for better fine-tuning.

- Adaptive methods like Adam or RMSprop adjust the learning rate for each parameter, making learning more precise.

- Learning rate warm-up starts with a small learning rate and increases it gradually, helping the network stabilize early in training.

- Cyclical learning rates move the learning rate up and down between a lower and an upper bound, which can help the network escape poor solutions.

These methods help the learning algorithm find good parameter values. Because parameters are updated many times within each epoch and across many epochs, a well-chosen schedule can improve both accuracy and generalization.
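Several of the schedules listed above can be written as simple functions of the epoch number. The sketch below is illustrative: the function names and default values (`decay`, `drop`, `epochs_per_drop`, `warmup_epochs`, `half_cycle`) are assumptions rather than recommended settings, and the cyclical schedule is computed per epoch for simplicity, although it is often applied per iteration. Adaptive optimizers such as Adam and RMSprop are not shown, because they adjust per-parameter step sizes internally and are usually combined with a schedule like these.

```python
def time_based_decay(lr0, epoch, decay=0.01):
    # Smooth decay: lr0 / (1 + decay * epoch)
    return lr0 / (1.0 + decay * epoch)

def step_decay(lr0, epoch, drop=0.5, epochs_per_drop=10):
    # Multiply the rate by `drop` once every `epochs_per_drop` epochs
    return lr0 * drop ** (epoch // epochs_per_drop)

def linear_warmup(lr0, epoch, warmup_epochs=5):
    # Ramp linearly from lr0 / warmup_epochs up to lr0, then hold at lr0
    if epoch < warmup_epochs:
        return lr0 * (epoch + 1) / warmup_epochs
    return lr0

def triangular_cyclical(base_lr, max_lr, epoch, half_cycle=4):
    # Rise linearly from base_lr to max_lr over half_cycle epochs, then fall back
    cycle_pos = epoch % (2 * half_cycle)
    if cycle_pos <= half_cycle:
        fraction = cycle_pos / half_cycle
    else:
        fraction = (2 * half_cycle - cycle_pos) / half_cycle
    return base_lr + (max_lr - base_lr) * fraction

for epoch in (0, 4, 10, 25):
    print(epoch,
          time_based_decay(0.1, epoch),
          step_decay(0.1, epoch),
          linear_warmup(0.1, epoch),
          triangular_cyclical(0.001, 0.01, epoch))
```

Keeping each schedule as a pure function of the epoch makes it easy to plot or unit-test before plugging it into a training loop.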

Model Performance

Underfitting and Overfitting

The number of epochs has a big impact on how well a model learns. If a model trains for too few epochs, it may not learn enough from the data. This problem is called underfitting. If a model trains for too many epochs, it may start to memorize the training data instead of learning patterns. This is called overfitting. Both problems can lower accuracy and make the model less useful for new images.

The table below contrasts underfitting and overfitting as they appear in learning curves. The first three rows describe loss plotted against the number of training examples; the last row relates each pattern to training epochs:

| Aspect | Underfitting | Overfitting |
|---|---|---|
| Training loss (vs. training examples) | Increases gradually as examples are added and ends high; may show a sudden dip at the end (not always) | Very low at the start; stays low or rises slightly as examples are added |
| Validation loss (vs. training examples) | High initially; decreases gradually but without significant improvement; may flatten or dip at the end | High initially; decreases gradually without flattening, suggesting further improvement is possible |
| Gap between training and validation loss | Small; the two losses end close together | Large; training loss is much lower than validation loss |
| Interpretation | Model is too simple and fails to learn enough from the data (high bias) | Model is too complex and fits noise in the training data (high variance) |
| Relation to training epochs | Adding epochs does not significantly improve validation loss | Training for too many epochs without a stopping rule leads to overfitting, visible as the training and validation losses diverging |

- Underfitting happens when the model is too simple or trains for too few epochs to learn enough, so both training and validation error stay high.

- Overfitting happens when the model is too complex for the data or trains for too many epochs, so it performs well on training data but poorly on new data.

- The right number of epochs lets the model learn enough to improve accuracy and reduce error on new images.

- As the dataset grows, the model often needs fewer epochs, because each pass contains more examples and therefore more parameter updates.

Early Stopping

Early stopping is a practical method for finding a good number of epochs. It monitors the model's performance on a validation set and stops training once the validation error has not improved for a set number of epochs, often called the patience. This prevents the model from memorizing the training data and helps it generalize better.

- Early stopping improves generalization and saves time by ending training near the point of best validation performance.

- It is easy to use and works well with deep neural networks.

- It reduces error on new data by halting training before overfitting sets in.

- Studies report that early stopping can reach high accuracy while ending training sooner than some alternative approaches.

Tip: To choose the right number of epochs, start with a small value, monitor validation error, and use early stopping. Adjust the number of epochs based on how the model performs. For large datasets, try fewer epochs first, since the model learns from more data in each cycle.

Practical Examples

Image Classification

Image classification tasks often rely on careful epoch selection to achieve the best results. In a real-world example, engineers trained a model on the ImageNet-1K dataset. They started with 100 epochs to balance learning and avoid both underfitting and overfitting. Too few epochs caused the model to miss important patterns, while too many wasted resources and led to memorization of the training data. The team used batch size adjustments and data loaders to shuffle and batch images, which helped the model learn efficiently. They also used the Adam optimizer for parameter updates.
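The shuffle-and-batch step that a data loader performs each epoch can be sketched without any framework. The generator name `minibatches` is hypothetical; in practice a library loader (for example, PyTorch's `DataLoader` with `shuffle=True`) does this work, usually while also loading and augmenting the images themselves.

```python
import random

def minibatches(num_examples, batch_size, rng):
    """Yield lists of example indices that cover the dataset once, in a fresh random order."""
    order = list(range(num_examples))
    rng.shuffle(order)  # a new permutation on every call, i.e. every epoch
    for start in range(0, num_examples, batch_size):
        yield order[start:start + batch_size]  # the last batch may be smaller

rng = random.Random(42)
for epoch in range(2):
    batches = list(minibatches(10, 4, rng))
    print(epoch, [len(b) for b in batches])  # prints "0 [4, 4, 2]", then "1 [4, 4, 2]"
```

Reshuffling each epoch means the model never sees the batches in the same fixed order, which tends to make the parameter updates less correlated from one epoch to the next.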

To protect against losing progress during long training runs, the team saved model checkpoints after each epoch. This practice allowed them to resume training if needed. Another group faced overfitting when training for too many epochs. They solved this by using an EarlyStopping callback in Keras. This tool watched the validation loss and stopped training when the model stopped improving. The approach helped the model generalize better to new images.
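The checkpoint-and-resume pattern can be sketched in plain Python. This is a toy under stated assumptions: the "model" is a dictionary holding a single number, one epoch of training is replaced by a placeholder increment, and the helper names and JSON format are hypothetical. Real frameworks save the model and optimizer state instead, for example with Keras's `ModelCheckpoint` callback or PyTorch's `torch.save`.

```python
import json
import os
import tempfile

def save_checkpoint(path, epochs_done, params):
    # Write to a temporary file, then atomically replace the old checkpoint,
    # so an interruption mid-write never leaves a half-written file behind.
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"epoch": epochs_done, "params": params}, f)
    os.replace(tmp_path, path)

def load_checkpoint(path):
    if not os.path.exists(path):
        return 0, {"w": 0.0}  # no checkpoint yet: start from scratch
    with open(path) as f:
        state = json.load(f)
    return state["epoch"], state["params"]

def train(path, total_epochs, stop_after=None):
    start_epoch, params = load_checkpoint(path)
    for epoch in range(start_epoch, total_epochs):
        params["w"] += 1.0  # placeholder for one real epoch of training
        save_checkpoint(path, epoch + 1, params)  # store epochs completed so far
        if stop_after is not None and epoch + 1 == stop_after:
            return params  # simulate the run being interrupted
    return params

ckpt = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
train(ckpt, total_epochs=10, stop_after=4)  # "crashes" after 4 epochs
final = train(ckpt, total_epochs=10)        # resumes from epoch 5
print(final)  # {'w': 10.0}
```

Because the second call starts from the saved epoch count, the four completed epochs are not repeated.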

Tip: Saving checkpoints and using early stopping can make image classification training more reliable and efficient.

Object Detection

Object detection models also depend on the right number of epochs for strong performance. In one study, researchers trained detection models for 200 epochs. They found that image preprocessing steps, such as padding, resizing, and rotation, worked together with epoch-based training to improve accuracy. The team measured results using precision-recall curves and mean Average Precision (mAP). Padded and rotated images led to better bounding box predictions and fewer missed objects.
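Because the study reports mAP, a sketch of how average precision (AP) is computed may help. It assumes that detections have already been matched to ground-truth boxes (for example, at an IoU threshold of 0.5) and uses all-point interpolation, as in the PASCAL VOC protocol from 2010 onward. The study's exact mAP definition is not stated, and COCO-style mAP additionally averages over several IoU thresholds. The function names are hypothetical, and the example detections are made up.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """All-point interpolated AP for one class (assumes num_ground_truth >= 1).

    scores: confidence of each detection.
    is_true_positive: whether each detection matched a not-yet-matched
        ground-truth box (the matching step is assumed to be done already).
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # walk down the ranked detections
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Interpolate: precision at each point becomes the max precision to its right
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the interpolated precision-recall curve
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

def mean_average_precision(per_class_ap):
    """mAP: the mean of per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)

ap_a = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_ground_truth=4)
ap_b = average_precision([0.95], [True], num_ground_truth=1)
print(ap_a)                                  # 0.625
print(mean_average_precision([ap_a, ap_b]))  # 0.8125
```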

The table below summarizes the impact of preprocessing on detection accuracy, with training fixed at 200 epochs:

| Preprocessing | Epochs | mAP Score | Observation |
|---|---|---|---|
| None | 200 | 0.72 | Baseline performance |
| Padding | 200 | 0.78 | Improved bounding boxes |
| Rotation | 200 | 0.76 | Better detection of objects |

Because every run used 200 epochs, the differences in the table come from preprocessing alone. In practice, epoch selection and preprocessing both play a key role in detection tasks, and planning them together leads to higher accuracy in real-world systems.

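Selecting the right number of epochs shapes the success of machine vision systems, and careful tuning helps models avoid both underfitting and overfitting. Data and evaluation matter as well. The table below, from a study that used XGBoost, shows how strong labeling, model evaluation, and cross-validation support robust results. Note that "epoch" in its labeling row means a labeled segment of data, not a training pass: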

| Aspect | Details |
|---|---|
| Dataset Labeling | Over 400,000 epochs labeled, covering 85.15% of total epochs, with balanced class distribution. |
| Model Evaluation | XGBoost reached a balanced accuracy of 0.990 and strong calibration on external datasets. |
| Cross-validation | Stratified 10-fold cross-validation ensured model robustness. |

- More epochs do not always mean better performance.

- Balanced data, feature quality, and real-world testing matter most.

- Careful epoch selection leads to reliable and accurate machine vision models.

Readers can use these insights to build stronger machine vision projects.

FAQ

What is an epoch in a machine vision system?

An epoch means the model has seen every image in the training set once. Each epoch helps the model learn and improve its predictions.

How does the number of epochs affect model accuracy?

Too few epochs can cause underfitting. Too many can lead to overfitting. The right number helps the model learn patterns without memorizing the data.

What is early stopping, and why is it useful?

Early stopping monitors the model's performance on a held-out validation set. It stops training when that performance stops improving, which prevents overfitting and saves time.

How do batches and iterations relate to epochs?

A batch is a small group of images. An iteration happens when the model processes one batch. One epoch means the model has seen all batches once.

Can the same number of epochs work for every dataset?

No. Different datasets need different numbers of epochs. Larger datasets often need fewer epochs because the model learns from more examples each time.
