A facial recognition machine vision system identifies people by capturing images, detecting faces, and analyzing unique features. Machine vision systems use high-resolution cameras and lenses to collect clear visual data. Facial recognition technology then applies computer vision models for detection and feature extraction, turning faces into digital data. AI and neural networks match these features against stored profiles, enabling fast and reliable identification. The integration of facial recognition and machine vision systems supports real-time monitoring and automation in many sectors. The following table shows the rapid adoption of facial recognition systems worldwide:

| Sector/Application | Adoption Insights |
|---|---|
| Retail & e-commerce | Dominated market with 21.4% revenue share in 2022; used for payments and customer experience |
| Healthcare | Expected CAGR of 17.5%; used for secure access and patient data protection |
| Access Control | Largest revenue share (36.0% in 2022); enhanced by liveness detection technology |
| Government & Security | Moderate growth; used for surveillance and law enforcement |

Key Takeaways

- Facial recognition systems use high-quality cameras and AI to capture and analyze faces quickly and accurately.

- The process includes image capture, face detection, feature extraction, matching, and decision-making to identify people in real time.

- Accuracy depends on good lighting, camera quality, face position, and handling challenges like occlusions and changes in appearance.

- These systems help improve security, access control, healthcare, retail, and consumer devices by automating identification and authentication.

- Privacy, bias, and ethical concerns require strong protections, transparency, and fair use to build trust and ensure responsible technology use.

Workflow

Facial recognition machine vision systems follow a structured workflow to identify and verify individuals. Each step in this process uses advanced computer vision models, facial recognition technology, and automation to achieve accurate and real-time results.

Image Capture

Machine vision systems begin with image capture. High-resolution digital cameras and specialized sensors collect visual data from photos, videos, or live feeds. The choice of hardware impacts the quality of facial recognition data. Popular camera models include Basler ace2 Basic, Basler ace2 Pro, and LUCID Vision Labs Triton. These cameras offer different resolutions, frame rates, and sensor features to suit various facial recognition system needs.

| Camera Model | Resolution | Frame Rate (FPS) | Sensor Features | Connectivity | Application Notes |
|---|---|---|---|---|---|
| Basler ace2 Basic | 2 MP | 160 | Balanced performance, cost-effective | USB 3, GigE | Suitable for general computer vision including facial recognition |
| Basler ace2 Pro | 5 MP | 60 | Higher resolution for fine detail | USB 3, GigE | Ideal for detailed inspections and facial recognition requiring higher image fidelity |
| LUCID Vision Labs Triton | 12.3 MP | 9 | Excellent low-light performance, IP rated | GigE | Best for low-light or night-time facial recognition scenarios |

Sensor size and shutter type also affect image recognition. Global shutters help avoid distortion during motion capture. The image capture process determines the accuracy of facial recognition software. Poor lighting, occlusions, and camera angles can reduce image quality. Hardware characteristics such as focal length and resolution are critical for capturing usable facial features, especially at long distances. Optimizing image sensors before deployment balances cost and accuracy for applications like smart city surveillance.

- The image capture process directly affects facial recognition accuracy by influencing image quality and resolution.

- Poor lighting and occlusions complicate recognition.

- Hardware features such as focal length and resolution are critical for capturing usable facial images.

- Recognition algorithms trained on high-resolution images struggle with low-resolution inputs.

- Deep learning approaches, such as super-resolution CNNs, improve recognition on low-resolution images but still face performance degradation.

- Sensor selection and optimization balance cost and accuracy.

- Increasing focal length improves recognition accuracy up to distances of about 20 meters.
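The link between focal length, distance, and usable face size can be estimated with a pinhole-camera approximation. The sketch below is illustrative only: the 0.15 m face width and the 3.45 µm pixel pitch are assumptions chosen for the example, not values from the cameras listed above.

```python
def face_width_pixels(focal_length_mm, distance_m, pixel_pitch_um, face_width_m=0.15):
    """Approximate width of a face on the sensor, in pixels (pinhole model)."""
    if distance_m <= 0 or pixel_pitch_um <= 0:
        raise ValueError("distance and pixel pitch must be positive")
    # Width of the face image on the sensor, in millimetres.
    image_width_mm = focal_length_mm * face_width_m / distance_m
    # Convert millimetres on the sensor to pixels.
    return image_width_mm / (pixel_pitch_um / 1000.0)


# Hypothetical setup: 3.45 um pixels, subject standing 20 m from the camera.
for focal in (8.0, 25.0, 50.0):
    pixels = face_width_pixels(focal, 20.0, 3.45)
    print(f"{focal:5.1f} mm lens: about {pixels:.0f} px across the face")
```

Under these assumptions, a longer lens puts proportionally more pixels on the face at the same distance, which is one way to reason about lens choice before deployment.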

Face Detection

After capturing images, machine vision systems use object detection algorithms to locate faces. Face detection is a crucial step in facial recognition technology. Computer vision models analyze the image and identify regions likely to contain faces. Several methods exist for detection, each with strengths and weaknesses.

| Method Category | Description | Example Algorithms / Techniques |
|---|---|---|
| Knowledge-based | Uses human-defined rules about facial feature positions and distances. | Rule-based heuristics |
| Template Matching | Uses predefined face templates to detect faces by correlation with input images. | Edge detection, controlled background technique |
| Feature-based | Extracts structural facial features and uses classifiers to differentiate face regions. | Haar Feature Selection, Histogram of Oriented Gradients (HOG) |
| Appearance-based | Relies on machine learning and statistical analysis to model faces from training images. | PCA (Eigenfaces), Hidden Markov Models, Naive Bayes, GANs |

Viola-Jones uses Haar-like features and a sliding window to detect faces. SSD and YOLO are deep learning detectors that use anchor boxes and grid cells for real-time detection. Neural networks trained on large datasets offer robust performance, even with occlusion and lighting changes. Face detection accuracy can reach up to 99.97% under ideal conditions, but real-world factors such as aging, face coverings, and poor image quality reduce it. One-stage models like SSD and YOLO are preferred for real-time applications due to their speed.

| Algorithm | Accuracy (mAP) | F1 Score | Processing Time (GPU) | Notes |
|---|---|---|---|---|
| DSFD | 93.77% | 0.883 | 0.132 sec | High accuracy, slower |
| MTCNN | 10.30% | 0.123 | 0.023 sec | Fastest, low accuracy |

Face detection balances speed and accuracy depending on the application. Machine vision systems use these algorithms to isolate faces for further analysis.
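The Haar-like features used by Viola-Jones can be evaluated quickly because rectangle sums come from an integral image. The following is a minimal sketch of that idea on a toy patch, not a full detector: the function names and the 4x4 patch are made up for illustration, and a real cascade evaluates thousands of such features at many window positions and scales.

```python
def integral_image(img):
    """Summed-area table with an extra zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of pixels in the w-by-h rectangle whose top-left corner is (x, y)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def haar_two_rect_vertical(ii, x, y, w, h):
    """Two-rectangle feature: bottom half minus top half of the window."""
    half = h // 2
    top = rect_sum(ii, x, y, w, half)
    bottom = rect_sum(ii, x, y + half, w, half)
    return bottom - top


# Toy 4x4 patch: a dark band (like the eye region) above a brighter band.
patch = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(patch)
feature = haar_two_rect_vertical(ii, 0, 0, 4, 4)
print(feature)  # 1520: strong dark-over-bright response
```

Each rectangle sum costs four lookups regardless of rectangle size, which is what makes a sliding-window search over many features practical.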

Feature Extraction

Once faces are detected, facial recognition software extracts facial features. This step uses computer vision models to analyze key points such as eyes, nose, mouth, and contours. Feature extraction simplifies raw image data and highlights unique characteristics for facial comparison and analysis.

| Technique Category | Examples/Methods | Description/Notes |
|---|---|---|
| Local Appearance-Based | Local Binary Pattern (LBP), Histogram of Oriented Gradients (HOG), Gabor filters, Correlation filters | Extract features from small face regions or patches focusing on texture, pixel orientations, and local details. |
| Key-Points-Based | SIFT, SURF, BRIEF | Detect points of interest on the face and extract localized features around these key points. |
| Holistic Approaches | Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), Independent Component Analysis (ICA), Eigenfaces, Fisherfaces | Treat the entire face image as a whole, using subspace techniques to extract global features. |
| Frequency Domain Analysis | Discrete Fourier Transform (DFT), Discrete Cosine Transform (DCT), Discrete Wavelet Transform (DWT) | Represent face features in frequency domain, independent of training data. |
| Deep Learning-Based | Convolutional Neural Networks (CNNs), Stacked Auto-Encoders (SAE) | Use deep neural networks to extract robust and compact facial features, often combining holistic and local info. |
| Hybrid Approaches | Combination of local and holistic methods | Combine strengths of multiple techniques for improved recognition performance. |

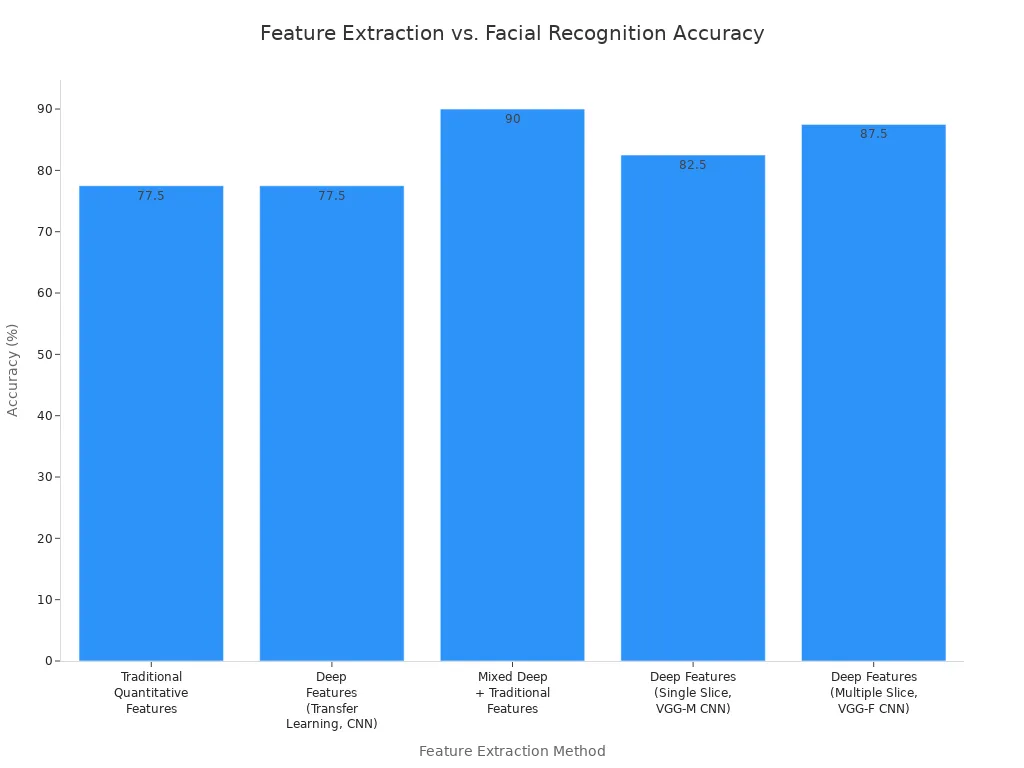

Deep learning models, especially CNNs, extract features automatically through multiple layers, which improves accuracy and adaptability compared with hand-crafted features. Combining traditional and deep learning features can raise accuracy further. The chart below compares facial recognition accuracy across five feature extraction methods:

Automated feature extraction reduces manual intervention and adapts better to complex tasks. Machine vision systems use these techniques to create robust facial recognition data for matching.
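As a concrete example of a local appearance-based technique from the table above, the sketch below computes a basic Local Binary Pattern (LBP) code and a histogram of codes. It is a simplified illustration: the neighbour ordering is one common convention chosen here as an assumption, and practical systems usually split the face into a grid of cells and concatenate the per-cell histograms.

```python
def lbp_code(img, y, x):
    """8-bit Local Binary Pattern code for the interior pixel at (y, x)."""
    center = img[y][x]
    # Neighbours visited clockwise, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dy, dx) in enumerate(offsets):
        # A neighbour at least as bright as the centre sets its bit.
        if img[y + dy][x + dx] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(img):
    """256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            hist[lbp_code(img, y, x)] += 1
    return hist


patch = [
    [5, 9, 1],
    [4, 6, 7],
    [2, 3, 8],
]
print(lbp_code(patch, 1, 1))  # 26: top, right, and bottom-right neighbours are >= 6
```

Because the code depends only on brightness comparisons, it is unchanged by uniform brightness shifts, which is one reason texture descriptors like this tolerate moderate lighting variation.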

Face Matching

Facial recognition systems match extracted facial features to entries in a database. Computer vision models compare the new data to stored profiles using several methods:

- Eigenfaces (PCA) use principal component analysis to extract facial features.

- Fisherfaces (LDA) employ linear discriminant analysis for better discrimination.

- LBPH Algorithm extracts local texture patterns encoded into histograms.

- DLib with ResNet uses deep learning for high-accuracy face recognition.

Systems measure distances between key facial landmarks, analyze shapes and contours, and use skin texture and wrinkles to enhance recognition accuracy. Similarity scores are calculated and compared against thresholds to identify or verify individuals. Holistic matching compares the entire face, while feature-based matching analyzes individual facial features and their spatial relationships. Some systems use 3D facial recognition for more detailed matching.

The matching process supports real-time identification and automation in applications such as security, access control, and smart devices.
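The similarity-and-threshold step described above can be sketched with cosine similarity over feature vectors. This is a minimal illustration under stated assumptions: the three-dimensional vectors, the names in the gallery, and the 0.8 threshold are all hypothetical, and real templates typically have hundreds of dimensions with thresholds tuned on evaluation data.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(probe, gallery, threshold=0.8):
    """Return (name, score) for the best gallery match, or (None, score) if below threshold."""
    best_name, best_score = None, -1.0
    for name, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_name, best_score = name, score
    if best_score >= threshold:
        return best_name, best_score
    return None, best_score


# Hypothetical enrolled templates and a probe taken from a new image.
gallery = {"alice": [0.9, 0.1, 0.3], "bob": [0.1, 0.8, 0.5]}
match, score = identify([0.88, 0.12, 0.28], gallery)
print(match, round(score, 3))
```

A linear scan like this is fine for small galleries; large databases generally need an index or approximate nearest-neighbour search, which is outside the scope of this sketch.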

Decision-Making

Facial recognition machine vision systems make final identification or verification decisions using real-time decision-making. The process involves several steps:

- Image acquisition: Cameras capture multiple face images.

- Preprocessing: Systems refine images through detection, normalization, and alignment.

- Facial feature extraction: Computer vision models extract geometric, texture, and statistical features.

- Feature representation: Features combine into mathematical vectors.

- Database enrollment and matching: Algorithms compare vectors using similarity metrics.

- Decision making: Systems compare the similarity score with a threshold chosen to meet target False Match Rate (FMR) and False Non-Match Rate (FNMR) levels to determine if a match is valid.

- Output: The system produces a confidence or match score that informs the final decision.

Confidence thresholds play a critical role in facial recognition technology. Higher thresholds reduce false positives but increase miss rates, meaning some correct matches are discarded. Lower thresholds increase false positives but may be acceptable in investigative scenarios. Proper use of confidence thresholds enhances reliability and trustworthiness. Improper thresholding can lead to untrustworthy data and potential misuse.

Facial recognition software distinguishes between verification (one-to-one matching) and identification (one-to-many matching, also called recognition). In both cases, the system compares similarity scores against a threshold to decide if a match is valid. Verification typically uses higher thresholds to reduce false accepts, while one-to-many searches may use lower thresholds to avoid missing matches. The final output is a confidence or match score that confirms an identity or verifies a claim.
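The trade-off between false matches and missed matches can be made concrete by computing FMR and FNMR at several thresholds. The scores below are invented for illustration; in practice they would come from labelled same-person (genuine) and different-person (impostor) comparisons.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (FMR, FNMR) when scores >= threshold are accepted as matches."""
    false_matches = sum(1 for s in impostor_scores if s >= threshold)
    false_non_matches = sum(1 for s in genuine_scores if s < threshold)
    return (false_matches / len(impostor_scores),
            false_non_matches / len(genuine_scores))


# Hypothetical similarity scores from a small labelled test set.
genuine = [0.91, 0.85, 0.78, 0.66, 0.95]
impostor = [0.30, 0.42, 0.71, 0.55, 0.12]

for threshold in (0.5, 0.7, 0.9):
    fmr, fnmr = error_rates(genuine, impostor, threshold)
    print(f"threshold={threshold:.1f}  FMR={fmr:.2f}  FNMR={fnmr:.2f}")
```

On this toy data, raising the threshold from 0.5 to 0.9 drives the false match rate from 0.40 to 0.00 while the false non-match rate climbs from 0.00 to 0.60, which mirrors the behaviour described above.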

Facial recognition machine vision systems rely on automation, computer vision models, and facial recognition technology to deliver accurate, real-time results across many industries.

Technologies

Facial Recognition Technology

Facial recognition technology forms the core of every facial recognition system. This technology uses computer vision models to analyze faces in images or videos. It works by capturing facial features and turning them into digital data. Facial recognition technology emulates human visual perception by using deep convolutional neural networks. These networks process faces in a way similar to how the human brain recognizes people. They create structured face representations that keep identity and facial attributes, even when faces change in angle or lighting. Facial recognition technology uses hierarchical processing, which helps it recognize faces quickly and accurately. This approach allows facial recognition software to handle complex tasks, such as identifying people in crowds or matching faces from different viewpoints.

Machine Vision

Machine vision systems provide the eyes for facial recognition technology. These systems use cameras and sensors to capture images or videos. They then use computer vision models to detect and align faces. Machine vision systems measure and extract facial features, such as the eyes, nose, and mouth. They match these features against stored data using facial recognition algorithms. Machine vision systems improve the precision of facial recognition by handling challenges like poor lighting or unusual angles. They also use artificial intelligence to adapt and process data in real time. This makes facial recognition systems faster and more reliable. The table below shows how two-stage detector machine vision systems improve accuracy and precision:

| Aspect | Two-Stage Detector Machine Vision Systems |
|---|---|
| Accuracy | Higher precision due to a two-step process: region proposal followed by classification and refinement. |
| Speed | Near real-time processing with optimized models. |
| Computational Resources | Needs more resources, such as GPUs. |
| Application | Focuses on key facial features for better detection in security. |
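Detectors that propose and then refine regions usually produce several overlapping boxes for the same face. A common post-processing step, which the table above does not cover and is shown here as an assumption about a typical pipeline, is non-maximum suppression (NMS) based on intersection over union (IoU). The boxes and scores are made up for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes kept after greedy suppression of overlaps."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap strongly with a better one.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Two near-duplicate detections of one face plus a separate face.
boxes = [(10, 10, 110, 110), (15, 12, 112, 108), (200, 50, 260, 120)]
scores = [0.92, 0.85, 0.70]
kept = non_max_suppression(boxes, scores)
print(kept)  # [0, 2]
```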

Machine Learning & Neural Networks

Machine learning and neural networks drive the intelligence behind facial recognition technology. Facial recognition systems use computer vision models like convolutional neural networks (CNNs) to extract features from faces. CNNs learn to recognize patterns, such as edges and shapes, from raw images. Popular CNN architectures include VGGFace, ResNet-50, and SENet. These models help facial recognition software achieve high precision and adaptability. CNNs replace manual feature extraction, making facial recognition algorithms more accurate. They also use data augmentation to improve learning from small datasets. Facial recognition technology benefits from deep learning algorithms, which allow systems to learn and improve over time. This leads to better accuracy and precision in real-world applications.
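To illustrate how a convolutional layer responds to edges, the sketch below slides a fixed 3x3 kernel over a tiny image. It is a simplification: a Sobel kernel stands in for a learned filter, whereas a CNN learns its kernel weights during training and stacks many such layers with nonlinearities.

```python
def convolve2d_valid(img, kernel):
    """Slide the kernel over the image without padding (cross-correlation,
    which is what CNN layers usually compute under the name convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(img) - kh + 1
    out_w = len(img[0]) - kw + 1
    out = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            acc = 0
            for ky in range(kh):
                for kx in range(kw):
                    acc += img[y + ky][x + kx] * kernel[ky][kx]
            row.append(acc)
        out.append(row)
    return out


# Sobel kernel that responds to dark-to-bright transitions from left to right.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

# Toy image with a vertical edge between columns 2 and 3.
img = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]
response = convolve2d_valid(img, SOBEL_X)
print(response)  # [[0, 36, 36, 0], [0, 36, 36, 0]]
```

The response is zero in flat regions and large where the window straddles the edge, which is the kind of low-level pattern early CNN layers tend to pick up.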

PCA & Algorithms

Principal Component Analysis (PCA) and other facial recognition algorithms play a key role in facial recognition systems. PCA reduces the number of variables in face images while keeping important information. It transforms faces into a set of principal components, called eigenfaces. Facial recognition software uses these components to compare and identify faces efficiently. Support Vector Machines (SVM) often work with PCA to classify faces based on reduced features. This combination improves the precision and speed of facial recognition technology. Research shows that PCA-based systems can reach high recognition rates, especially when combined with optimization algorithms. Modern facial recognition systems also use advanced computer vision models and facial recognition algorithms, such as deep neural networks, to further boost accuracy and precision.
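The PCA step can be sketched on a toy dataset: centre the flattened images, build a covariance matrix, and find the leading principal component. This example uses power iteration and four-pixel "images" purely for illustration; both are assumptions made to keep the code dependency-free, and real eigenface pipelines work on thousands of pixels and normally rely on a linear algebra library.

```python
import math


def mean_vector(samples):
    """Per-pixel mean over all flattened images."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]


def covariance_matrix(centered):
    """Sample covariance of mean-centred rows."""
    n, d = len(centered), len(centered[0])
    return [[sum(row[i] * row[j] for row in centered) / (n - 1)
             for j in range(d)] for i in range(d)]


def top_component(cov, iterations=200):
    """Leading eigenvector of a symmetric matrix via power iteration."""
    d = len(cov)
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break
        v = [x / norm for x in w]
    return v


def project(sample, mean, component):
    """Coordinate of one image along the principal component."""
    return sum((s - m) * c for s, m, c in zip(sample, mean, component))


# Hypothetical flattened 4-pixel images that vary along one direction.
faces = [[1, 2, 0, 0], [2, 4, 0, 0], [3, 6, 0, 0], [4, 8, 0, 0]]
mean = mean_vector(faces)
centered = [[p - m for p, m in zip(face, mean)] for face in faces]
component = top_component(covariance_matrix(centered))
weights = [project(face, mean, component) for face in faces]
print([round(c, 3) for c in component], [round(w, 3) for w in weights])
```

Each face is reduced from four numbers to a single weight here; comparing such low-dimensional weights, or feeding them to a classifier such as an SVM, is what makes PCA-based matching efficient.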

Accuracy Factors

Influencing Elements

Many factors influence the accuracy of machine vision systems in facial recognition. Environmental conditions play a big role.

- Lighting conditions matter a lot. Too much light, darkness, or uneven lighting can hide important facial features.

- Camera calibration and sensor placement affect how well the system captures faces. Sensors should stay at face level and remain clean.

- A neutral background helps the system focus on the right facial features.

- The face should occupy at least 200×200 pixels in the image for best results.

- Face orientation is important. The system works best when the face looks toward the camera within 35 degrees.

- Occlusions like hats, glasses, masks, or scarves can block facial features.

- Changes in appearance, such as shaving a beard or aging, also affect accuracy.

- Motion blur and extreme facial expressions, like yawning or closed eyes, make detection harder.

- Machine vision systems sometimes struggle to tell apart twins or people who look very similar.

Modern computer vision models try to handle these challenges. They use large datasets and advanced training to improve detection and recognition accuracy, even when people wear masks or glasses.
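Some of the guidelines above can be turned into a simple pre-check that runs before recognition. The sketch below is one possible shape for such a gate, not a standard API: the `FaceCandidate` fields are hypothetical and would come from whatever detector and pose estimator a system uses, while the 200-pixel and 35-degree limits are taken from the list above.

```python
from dataclasses import dataclass


@dataclass
class FaceCandidate:
    width_px: int
    height_px: int
    yaw_deg: float        # hypothetical pose estimate; 0 means facing the camera
    occluded: bool = False


MIN_FACE_SIDE_PX = 200    # minimum face size from the guideline above
MAX_POSE_ANGLE_DEG = 35   # maximum head rotation from the guideline above


def quality_issues(face):
    """Return a list of reasons why this face may be unreliable to match."""
    issues = []
    if min(face.width_px, face.height_px) < MIN_FACE_SIDE_PX:
        issues.append("face smaller than 200x200 pixels")
    if abs(face.yaw_deg) > MAX_POSE_ANGLE_DEG:
        issues.append("face turned more than 35 degrees from the camera")
    if face.occluded:
        issues.append("face partly occluded")
    return issues


print(quality_issues(FaceCandidate(240, 260, 10.0)))
print(quality_issues(FaceCandidate(120, 130, 50.0, occluded=True)))
```

Rejecting or flagging poor captures early is usually cheaper than letting them produce low-confidence matches downstream.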

Measuring Accuracy

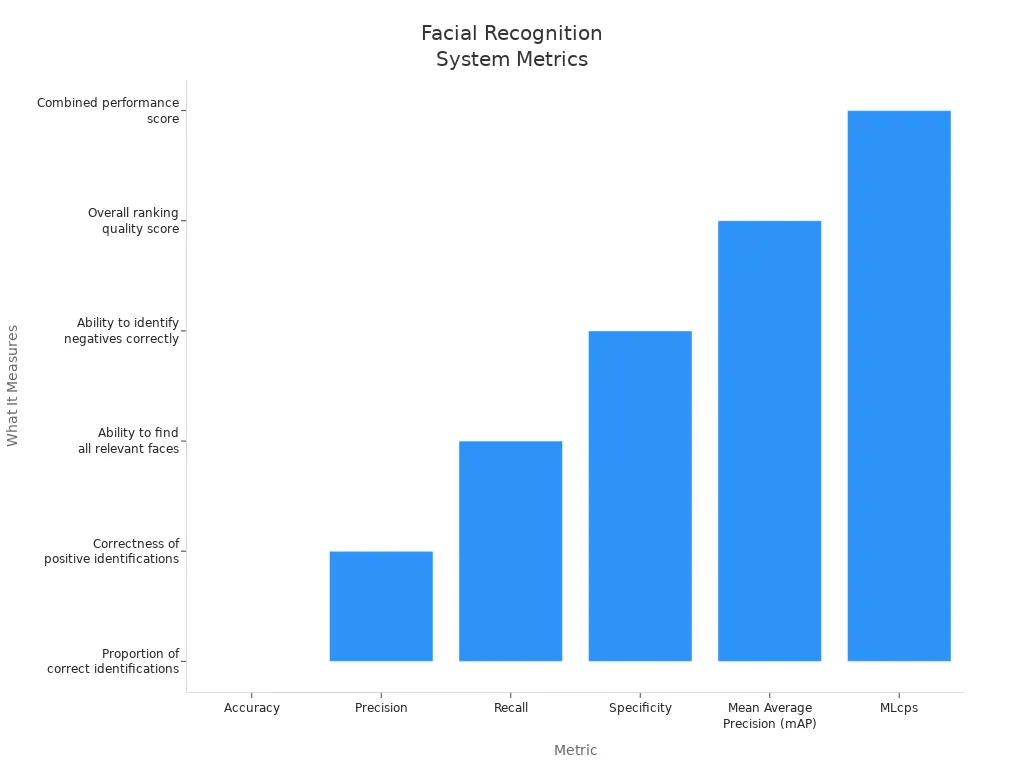

Experts use several metrics to measure the performance of facial recognition. These metrics help compare different computer vision models and machine vision systems. The table below shows common metrics:

| Metric | What It Measures |
|---|---|
| Accuracy | Proportion of correct identifications or rankings |
| Precision | Correctness of positive identifications |
| Recall | Ability to find all relevant faces |
| Specificity | Ability to correctly identify negatives |
| Mean Average Precision (mAP) | Overall ranking quality across thresholds |
| MLcps | Combined performance score for imbalanced data |

Industry benchmarks, such as the NIST Face Recognition Technology Evaluation (FRTE), use metrics like these to test face recognition algorithms. High-performing machine vision systems often reach accuracy rates above 99% in controlled settings.
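Several of the metrics in the table follow directly from the four confusion-matrix counts. The sketch below shows the standard formulas; the counts are invented for illustration and do not come from any benchmark.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute common metrics from true/false positive/negative counts."""
    def ratio(numerator, denominator):
        return numerator / denominator if denominator else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": ratio(tn, tn + fp),
        "f1": ratio(2 * precision * recall, precision + recall),
    }


# Hypothetical test run: 100 enrolled people and 900 strangers presented once each.
metrics = classification_metrics(tp=90, fp=5, tn=895, fn=10)
for name, value in metrics.items():
    print(f"{name:12s} {value:.3f}")
```

With imbalanced data like this, accuracy alone looks high (0.985) even though one in ten enrolled people is missed, which is why recall and precision are reported alongside it.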

Improving Performance

Machine vision systems use many strategies to achieve improved accuracy and precision.

- Image preprocessing removes noise and focuses on important facial features.

- Accurate detection and cropping help the system find and isolate faces, removing background distractions.

- Denoising and filtering techniques, like Gaussian filtering, suppress sensor noise, while contrast normalization such as histogram equalization evens out lighting.

- Computer vision models use Principal Component Analysis and Linear Discriminant Analysis to reduce data size and highlight key facial features.

- Combining spatial and frequency domain techniques gives a more complete view of facial features, boosting precision.

- Data-centric approaches, such as using diverse training datasets and data augmentation, help computer vision models learn from many types of faces.

- Model-centric approaches adjust training to reduce bias and improve fairness.

- Human-in-the-loop annotation lets experts check and correct data, raising the quality of training for computer vision models.

By following these best practices, machine vision systems can deliver reliable facial recognition, even in challenging real-world conditions.
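As an example of the preprocessing mentioned above, the sketch below applies a small separable Gaussian blur to a grayscale image stored as nested lists. The kernel radius, sigma, and border handling are illustrative choices rather than recommended settings, and production code would normally use an image processing library instead.

```python
import math


def gaussian_kernel(radius, sigma):
    """Normalized 1D Gaussian kernel with 2 * radius + 1 taps."""
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(img, kernel):
    """Filter each row with the kernel, repeating edge pixels at the borders."""
    radius = len(kernel) // 2
    width = len(img[0])
    out = []
    for row in img:
        new_row = []
        for x in range(width):
            acc = 0.0
            for k, weight in enumerate(kernel):
                xx = min(max(x + k - radius, 0), width - 1)
                acc += row[xx] * weight
            new_row.append(acc)
        out.append(new_row)
    return out


def transpose(img):
    return [list(column) for column in zip(*img)]


def gaussian_blur(img, radius=1, sigma=1.0):
    """Separable Gaussian blur: filter the rows, then the columns."""
    kernel = gaussian_kernel(radius, sigma)
    return transpose(blur_rows(transpose(blur_rows(img, kernel)), kernel))


# Flat grey image with a single noisy pixel in the middle.
noisy = [[10] * 5 for _ in range(5)]
noisy[2][2] = 110
smoothed = gaussian_blur(noisy)
print(round(smoothed[2][2], 2))
```

The outlier is pulled toward its neighbours while flat regions stay unchanged; the cost is some loss of fine detail, so the blur strength has to be balanced against the texture the recognizer needs.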

Applications

Security & Surveillance

Security teams use machine vision systems for facial recognition surveillance in airports, government buildings, and public events. These systems help identify suspects, control access, and manage crowds. They also support emergency response and traffic monitoring. Facial recognition improves safety by detecting threats and helping police find missing persons. Biometric authentication adds another layer of protection, making it harder for unauthorized people to enter secure areas. Many cities use machine vision systems to keep public order and respond quickly to incidents.

Tip: Facial recognition surveillance helps security staff monitor large crowds and spot suspicious activity faster.

| Industry | Applications of Facial Recognition Machine Vision Systems | Industry-Specific Goals |
|---|---|---|
| Security | Surveillance in airports, government premises, and public events; suspect identification; access control; crowd management; emergency response; traffic monitoring; missing person identification | Focus on safety, threat detection, and public order |

Consumer Devices

Machine vision systems have changed how people use consumer devices. Smartphones, tablets, and laptops now offer facial recognition for authentication. Users unlock devices and approve payments with a glance. Biometric authentication makes these actions quick and secure. Smart home systems use facial recognition to control access and personalize settings. Some gaming consoles use machine vision systems to track players’ faces and improve gameplay. The benefits of facial recognition include convenience and better security for everyday technology.

- Facial recognition in phones and tablets provides fast authentication.

- Smart home devices use machine vision systems for personalized access.

- Gaming consoles use facial recognition to enhance user experience.

Industry Uses

Many industries rely on machine vision systems for facial recognition. Retailers use these systems to analyze customer behavior, prevent shoplifting, and offer personalized marketing. Healthcare providers use facial recognition for patient identification, real-time monitoring, and fraud prevention. Hospitals improve patient care and safety with machine vision systems. In warehouses, companies deploy facial recognition to manage access and track employees. The use cases of facial recognition continue to grow as technology advances.

| Industry | Example Applications | Specific Case or Example |
|---|---|---|
| Healthcare | AI-powered diagnostics (medical imaging); real-time patient monitoring; AR-assisted surgery | Stanford Medicine’s CheXNeXt algorithm for pneumonia detection; Oxehealth’s contactless monitoring system; UC San Diego’s AR surgical assistance |
| Retail | Smart inventory management; customer behavior and heatmap analysis; loss prevention via facial recognition | Walmart’s Retail Link System; Sephora’s product interaction analysis; ASDA’s facial recognition to reduce theft |
| Security | AI surveillance and anomaly detection; facial recognition for access control; crowd analysis for event safety | Singapore Changi Airport’s smart surveillance; Clearview AI’s law enforcement facial recognition; Tokyo 2020 Olympics crowd management |

Cost and scalability matter when deploying machine vision systems. Large retailers have scaled facial recognition from small tests to hundreds of warehouses by adding video streams gradually, keeping costs low. System designers must consider database size, user count, and environment. Vendors recommend scalable systems with redundancy to support many searches and large databases. New 3D facial recognition technologies improve accuracy but may increase costs. Support services like consultation and maintenance help manage risks and expenses. Companies balance performance and scalability against financial limits to get the most benefit from facial recognition.

Challenges & Ethics

Privacy & Security

Facial recognition machine vision systems raise important privacy and security concerns. Many people worry about being watched in public spaces without their consent. These systems often collect facial data secretly, which can violate privacy rights. The risk of abuse by authorities may threaten democratic freedoms. Constant monitoring can also discourage free speech and group activities.

| Privacy/Data Security Concern | Explanation |
|---|---|
| Lack of Consent | Many systems identify people without their knowledge, breaking privacy rules. |
| Unencrypted Facial Data | Facial data cannot be changed like passwords, so breaches can lead to identity theft or stalking. |
| Lack of Transparency | People may not know when or how their faces are scanned. |
| Technical Vulnerabilities | Hackers can trick systems with photos or masks, creating security risks. |
| Inaccuracy and Bias | Some groups face higher error rates, leading to unfair treatment. |

| Inaccuracy and Bias | Some groups face higher error rates, leading to unfair treatment. |

Strong cybersecurity and clear rules help protect sensitive data. Companies must use cybersecurity measures to prevent hacking and data leaks. Ethical frameworks stress the need for transparency, fairness, and respect for individual rights.

Bias & Fairness

Bias in facial recognition technology can lead to unfair results. Systems sometimes make more mistakes with women or people of color. These errors can cause false arrests or denial of services. Marginalized groups may face more harm from these mistakes. Fairness means treating everyone equally and avoiding discrimination. Developers train systems on diverse datasets to reduce bias. Regular testing helps find and fix unfair patterns. Companies must stay alert to new risks as technology changes.

Note: Fair and unbiased systems build trust and improve security for everyone.
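The regular testing described above often starts by comparing error rates across demographic groups. The sketch below computes a per-group false non-match rate from genuine (same-person) comparisons; the group labels, scores, and 0.7 threshold are hypothetical and serve only to show the calculation.

```python
from collections import defaultdict


def false_non_match_rate_by_group(trials, threshold):
    """trials: iterable of (group, score) pairs from same-person comparisons."""
    totals = defaultdict(int)
    misses = defaultdict(int)
    for group, score in trials:
        totals[group] += 1
        if score < threshold:
            misses[group] += 1
    return {group: misses[group] / totals[group] for group in totals}


# Hypothetical genuine-pair scores labelled with a demographic group.
trials = [
    ("group_a", 0.91), ("group_a", 0.84), ("group_a", 0.79), ("group_a", 0.88),
    ("group_b", 0.82), ("group_b", 0.61), ("group_b", 0.58), ("group_b", 0.90),
]
rates = false_non_match_rate_by_group(trials, threshold=0.7)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap between groups at the operating threshold is a signal to revisit the training data or the threshold itself; a meaningful audit would need far more samples per group than this toy example.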

Technical Limits

Facial recognition machine vision systems face technical limits. Poor lighting, low-quality cameras, and unusual angles can lower accuracy. Occlusions like hats or masks block key features. Some systems struggle with twins or people who look very similar. Hackers may use deepfakes or 3D masks to fool security checks. These technical challenges make it hard to guarantee perfect results. Regular updates and strong security help reduce risks. Teams must test systems in real-world settings to spot weaknesses.

Regulations

Regulations for facial recognition technology differ across countries. The European Union’s AI Act sets strict rules to protect rights and limit real-time surveillance in public spaces. This law aims to prevent discrimination and protect human dignity. However, the rules remain complex and sometimes incomplete, especially about consent. States must create clear laws to avoid human rights abuses and legal confusion. Technology companies like Microsoft support government rules and ethical standards. They call for fairness, transparency, and accountability in all uses. Many places still debate how much to limit or ban facial recognition. The legal landscape continues to change as new risks and uses appear.

Facial recognition machine vision systems use advanced cameras, computer vision, and AI to identify faces quickly. These systems help many industries improve security and efficiency. However, they face challenges like bias, privacy risks, and data protection. The table below shows key ethical concerns and ways to address them:

| Ethical Challenge | Real-World Impact | Suggested Mitigation |
|---|---|---|
| Racial and Gender Bias | Misidentification, wrongful arrests | Diverse datasets, bias testing |
| Data Privacy | Unauthorized data use | Consent, strong protections |
| Mass Surveillance | Loss of anonymity | Oversight, legal safeguards |

Many algorithms show higher error rates for women and people of color, leading to unfair outcomes. Responsible use and ongoing improvements remain important. Staying informed helps everyone understand future changes in this technology.

FAQ

What is the main difference between face detection and face recognition?

Face detection finds and locates faces in an image. Face recognition identifies or verifies who the person is. Detection acts as the first step. Recognition uses the detected face to match it with stored profiles.

How do facial recognition systems protect user data?

Most systems use encryption to keep facial data safe. They store data in secure databases. Some companies use privacy policies and follow laws to protect user information. Regular security updates help prevent hacking.

Can facial recognition work in low light or at night?

Many modern systems use special cameras and sensors that work in low light. Infrared technology helps capture clear images even at night. Performance may drop if lighting is very poor, but new models keep improving.

Are facial recognition systems always accurate?

No system is perfect. Accuracy depends on image quality, lighting, and how much the person’s appearance changes. Glasses, hats, or masks can lower accuracy. Regular updates and better training data help improve results.
