An autonomous navigation machine vision system in 2025 uses advanced cameras, sensors, and artificial intelligence to let a machine move and understand its surroundings without human help. These systems combine powerful computer vision, edge AI, and sensor fusion to deliver real-time navigation in complex environments. Market estimates for 2025 range from $3.96 billion to $6.17 billion depending on the source, driven by rapid adoption in vehicles, robotics, and drones.

| Source | Market Size 2025 (USD Billion) |
|---|---|
| Fortune Business Insights | 3.96 |
| Research and Markets Report | 6.17 |

A machine vision system integrates sensor technologies like LiDAR, high-resolution cameras, and IMUs with AI-driven software for precise navigation and object detection. These systems use mapping, localization, and motion planning to help machines make safe decisions. A continuous feedback loop lets each system adapt its behavior, so the same approach can support a wide range of machines and environments.

Key Takeaways

- Autonomous navigation machine vision systems use cameras, sensors, and AI to help machines see and move safely without human help.

- These systems combine multiple sensors like LiDAR, stereo cameras, and deep learning models to detect objects and understand their surroundings in real time.

- Applications include self-driving cars, robots in factories, drones for inspections, and machines working in forests and maritime environments.

- Machine vision systems improve safety, accuracy, and flexibility while adapting to new environments through learning and feedback.

- Future systems will use reinforcement learning and advanced AI to become smarter, faster, and more reliable across many industries.

Technologies

Cameras and Sensors

A machine vision system in 2025 relies on a wide range of cameras and sensors to help a machine understand its environment. These systems use forward-facing ADAS cameras for collision avoidance and cruise assist. Surround view camera systems combine several cameras to give a 360° view around the machine, which is important for parking and situational awareness. Infrared cameras help with night vision and driver monitoring, tracking alertness and safety. Stereo vision cameras, such as Intel’s RealSense and Subaru’s EyeSight, use two viewpoints to estimate depth and distance. Time-of-Flight cameras emit infrared light to measure distance, creating real-time depth images for navigation and obstacle detection. LiDAR sensors add high-precision 3D spatial data, which is valuable for long-range detection and mapping. Sensor fusion, which combines cameras with LiDAR and radar, is a best practice for robust perception and safer navigation. Embedded vision cameras with AI processing units, like Luxonis OAK-D, perform real-time object recognition and decision-making.

- Forward-facing ADAS cameras for front-view data

- Surround view camera systems for 360° vision

- Infrared cameras for night vision and driver monitoring

- Stereo vision cameras for depth perception

- Time-of-Flight cameras for real-time depth images

- LiDAR sensors for 3D spatial data

- Sensor fusion for robust perception

- Embedded vision cameras with AI for real-time recognition

Ultrasonic sensors and IMUs also play a key role in a machine vision system. Ultrasonic sensors emit waves and measure reflections to estimate short-range distances, helping with obstacle detection and mapping. IMUs measure acceleration and angular velocity, supporting dead reckoning navigation. These sensors work best when combined with other sensors, as each has strengths and weaknesses. For example, IMUs can drift over time, and ultrasonic sensors have limited range. Sensor fusion frameworks combine data from IMUs, cameras, and LiDAR to improve localization and perception.
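The drift-versus-correction trade-off described above can be illustrated with a minimal one-dimensional Kalman filter, one common sensor fusion technique (the source does not say which fusion method its systems use). This sketch assumes a vehicle moving along a single axis, an IMU with a constant acceleration bias, and a camera or LiDAR localizer that returns noisy absolute positions; the noise levels and tuning values are illustrative guesses.

```python
"""1D Kalman filter: IMU dead reckoning corrected by noisy position fixes.

Assumptions (illustrative only): motion along one axis at a true constant
1 m/s, an IMU with a constant acceleration bias, and an external localizer
(camera/LiDAR) that reports position with Gaussian noise.
"""
import random


def simulate(steps=200, dt=0.1, accel_bias=0.05, pos_noise=0.5, seed=0):
    rng = random.Random(seed)
    true_pos, true_vel = 0.0, 1.0
    dr_pos, dr_vel = 0.0, 1.0            # IMU-only dead reckoning
    x = [0.0, 1.0]                       # Kalman state: [position, velocity]
    P = [[1.0, 0.0], [0.0, 1.0]]         # state covariance
    q, r = 0.01, pos_noise ** 2          # process / measurement noise
    for _ in range(steps):
        true_pos += true_vel * dt
        accel = accel_bias               # true accel is 0; IMU reads the bias
        # Dead reckoning integrates the biased reading, so it drifts
        dr_vel += accel * dt
        dr_pos += dr_vel * dt
        # Predict: x = F x + B u, with F = [[1, dt], [0, 1]]
        x = [x[0] + x[1] * dt + 0.5 * accel * dt * dt, x[1] + accel * dt]
        # Predict covariance: P = F P F^T + Q
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1] + q
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        P = [[p00, p01], [p10, P[1][1] + q]]
        # Update with a position fix z (measurement matrix H = [1, 0])
        z = true_pos + rng.gauss(0.0, pos_noise)
        s = P[0][0] + r
        k0, k1 = P[0][0] / s, P[1][0] / s
        innov = z - x[0]
        x = [x[0] + k0 * innov, x[1] + k1 * innov]
        P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
             [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
    return true_pos, dr_pos, x[0]


true_pos, dr_pos, fused = simulate()
print(f"true={true_pos:.2f}  dead-reckoning={dr_pos:.2f}  fused={fused:.2f}")
```

With these settings, dead reckoning alone drifts by roughly 10 m over 20 simulated seconds, while the fused estimate stays close to the true position because each fix pulls it back.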

Deep Learning Models

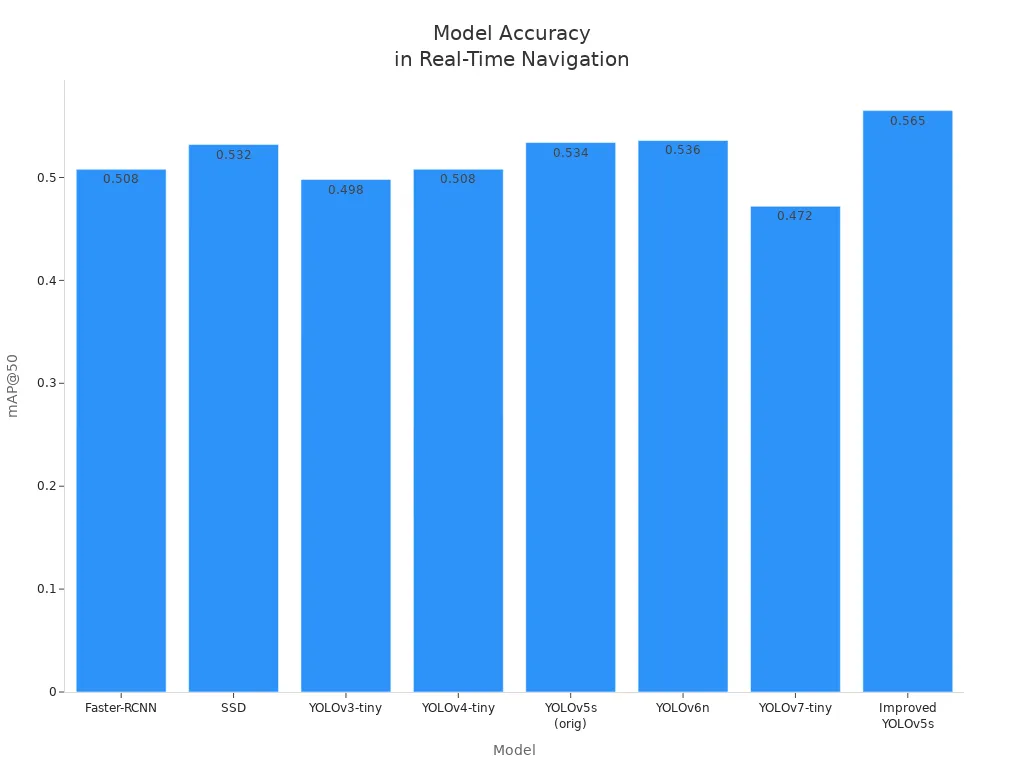

A computer vision-based navigation system depends on deep learning models for object detection and navigation. Convolutional Neural Networks (CNNs) form the foundation for object detection and scene recognition. Advanced CNNs like Mask R-CNN and Faster R-CNN improve detection accuracy at different scales and distances. Single-stage detectors such as YOLO and SSD offer high speed and efficiency, which is important for real-time applications. Newer models like CornerNet and RefineNet use keypoint detection and multi-path refinement to boost accuracy while staying efficient.

| Model | Params (M) | FLOPs (G) | mAP@50 | FPS |
|---|---|---|---|---|
| Faster R-CNN | 41.3 | 60.5 | 0.508 | N/A |
| SSD | 24.1 | 30.5 | 0.532 | N/A |
| YOLOv3-tiny | 8.7 | 12.9 | 0.498 | N/A |
| YOLOv4-tiny | 6.0 | 16.2 | 0.508 | N/A |
| YOLOv5s (original) | 7.0 | 16.0 | 0.534 | 78.5 |
| YOLOv6n | 4.6 | 11.3 | 0.536 | 84.3 |
| YOLOv7-tiny | 6.0 | 13.0 | 0.472 | 98 |
| Improved YOLOv5s | 6.4 | 15.6 | 0.565 | 52 |
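The mAP@50 column counts a detection as correct only when its bounding box overlaps a ground-truth box with an Intersection over Union (IoU) of at least 0.5. The sketch below shows just that matching test, assuming boxes given as `(x_min, y_min, x_max, y_max)` in pixels; full mAP additionally ranks detections by confidence and averages precision over recall levels and classes, which is not shown here.

```python
"""IoU, the overlap test behind mAP@50 (matching step only, not full mAP).

Assumption: boxes are (x_min, y_min, x_max, y_max) in pixels.
"""


def iou(box_a, box_b):
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    # Overlap rectangle; width/height clamp to 0 when boxes do not intersect
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, threshold=0.5):
    # At mAP@50 a prediction matches ground truth when IoU >= 0.5
    return iou(pred_box, gt_box) >= threshold


gt = (100, 100, 200, 200)
print(iou(gt, (150, 100, 250, 200)))  # box shifted by half its width: about 0.33
```

A box shifted by half its width overlaps by only a third, so it would count as a miss at mAP@50 even though it visibly "finds" the object.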

The improved YOLOv5s model posts the highest mAP@50 in the table (0.565) while using fewer parameters and FLOPs than the original YOLOv5s, although its throughput drops from 78.5 to 52 FPS. It adds an attention module and a new loss function to improve small-object detection and precision. When the model is paired with a stereo camera system, the system captures real-time stereo images and estimates depth: it detects objects, calculates their positions, and measures their distances from the machine. Control algorithms such as Pure Pursuit and Model Predictive Control then help the machine avoid hazards and make safe decisions. In the reported results, YOLOv5s exceeds 90% recognition accuracy even in fog and low light, outperforming the other detectors tested. A YOLOv5 segmentation model with a ResNet-50 backbone also shows high precision and reliability across different road and weather conditions.
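Pure Pursuit, one of the control algorithms mentioned above, steers toward a point on the path a fixed lookahead distance ahead. The sketch below uses the textbook bicycle-model form of the law; the wheelbase and lookahead values are placeholders, not parameters from any specific vehicle, and the naive target search assumes ordered waypoints with the vehicle near the start of the path.

```python
"""Pure Pursuit steering for a bicycle-model vehicle (illustrative sketch).

Assumptions: path = list of (x, y) waypoints in metres, pose = (x, y, yaw)
with yaw in radians, placeholder wheelbase/lookahead values.
"""
import math


def find_target(path, x, y, lookahead):
    # Naive search: first waypoint at least `lookahead` away, else the last one
    for px, py in path:
        if math.hypot(px - x, py - y) >= lookahead:
            return px, py
    return path[-1]


def pure_pursuit_steer(pose, path, lookahead=2.0, wheelbase=2.5):
    x, y, yaw = pose
    tx, ty = find_target(path, x, y, lookahead)
    # Angle between the vehicle heading and the line to the target point
    alpha = math.atan2(ty - y, tx - x) - yaw
    ld = math.hypot(tx - x, ty - y)
    # Pure Pursuit law: delta = atan(2 * L * sin(alpha) / ld)
    return math.atan2(2.0 * wheelbase * math.sin(alpha), ld)


straight = [(float(i), 0.0) for i in range(20)]
# Vehicle 1 m left of a straight path: negative angle = steer right, back to y = 0
print(pure_pursuit_steer((0.0, 1.0, 0.0), straight))
```

A longer lookahead gives smoother but lazier tracking; a shorter one reacts faster but can oscillate, which is one reason Model Predictive Control is used when tighter tracking is needed.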

Stereo Vision

Stereo vision gives a machine vision system the ability to perceive depth, much like human eyes. The system uses two cameras to capture images from slightly different positions. By matching points across both images and measuring how far each point shifts between them (the disparity), the system estimates how far away objects are. This process creates a depth map, which helps the machine detect obstacles, plan paths, and perform tasks that need precise distance measurement. Stereo vision is cost-effective and provides high-resolution texture data. It works across many lighting conditions as long as the scene has enough texture to match, which makes it useful for real-time navigation.

- Two cameras capture images from different viewpoints.

- The system matches points in both images and calculates disparity.

- Disparity helps the system triangulate and compute object depth.

- The depth map supports obstacle detection and navigation.

- Accurate depth depends on camera calibration, baseline distance, and lighting.

- Stereo vision offers a flexible and real-time 3D perception solution.
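The steps above can be sketched on a single image row. This example assumes the images are already rectified (so matching points lie on the same row), uses a simple sum-of-absolute-differences (SAD) window matcher rather than the more robust matchers production systems use, and converts disparity to depth with Z = f · B / d, where f is the focal length in pixels and B the baseline in metres. The pixel values, focal length, and baseline are made up for illustration.

```python
"""Stereo depth on one rectified scanline: SAD block matching + triangulation.

Assumptions: rectified images, focal length in pixels, baseline in metres,
made-up intensity values.
"""


def sad(left, right, xl, xr, half):
    # Sum of absolute differences between windows of width 2*half + 1
    return sum(abs(left[xl + k] - right[xr + k]) for k in range(-half, half + 1))


def disparity_at(left, right, x, max_disp=16, half=2):
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        xr = x - d                     # a point appears further left in the right image
        if xr - half < 0:
            break
        cost = sad(left, right, x, xr, half)
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(d, focal_px, baseline_m):
    # Z = f * B / d; zero disparity means the point is effectively at infinity
    return float("inf") if d <= 0 else focal_px * baseline_m / d


pattern = [10, 50, 90, 50, 10]
left = [0] * 40
right = [0] * 40
left[20:25] = pattern
right[15:20] = pattern                 # same feature shifted 5 px
d = disparity_at(left, right, 22)
print(d, depth_from_disparity(d, focal_px=700.0, baseline_m=0.12))
```

Because depth is inversely proportional to disparity, a one-pixel matching error costs far more accuracy for distant objects than for near ones, which is why calibration and baseline distance matter so much.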

However, stereo vision has some limitations. Sensor noise and calibration errors can reduce depth accuracy. Real-time image processing can be complex and slow. Changes in lighting and shadows can affect detection. Matching features between images becomes hard in complex scenes. To overcome these challenges, a machine vision system often uses sensor fusion and advanced processing techniques.

Computer Vision-Based Navigation System

A computer vision-based navigation system processes sensor data to make real-time decisions. Cameras and sensors collect images and videos under different lighting conditions. The system uses pattern recognition, feature detection, object classification, and neural networks to identify landmarks, obstacles, and terrain. Simultaneous Localization and Mapping (SLAM) algorithms build a 3D map and locate the machine within it. Path planning and obstacle avoidance algorithms use this data to chart safe routes and adjust paths when obstacles appear. Feedback and adaptation through machine learning help the system improve accuracy and handle complex environments.

- Cameras and sensors collect environmental data.

- The system processes data using advanced algorithms for detection and classification.

- SLAM builds a 3D map and tracks the machine’s position.

- Path planning and obstacle avoidance ensure safe navigation.

- Machine learning enables feedback and adaptation for better performance.
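For the path-planning step, A* search over an occupancy grid is one widely used option; the source does not name a specific planner. This sketch assumes a grid where 0 is free and 1 is an obstacle (for example, cells taken from a SLAM map), 4-connected moves with unit cost, and a Manhattan-distance heuristic.

```python
"""A* path planning on a 2D occupancy grid (one common planner, as a sketch).

Assumptions: 0 = free, 1 = obstacle, 4-connected unit-cost moves,
Manhattan-distance heuristic.
"""
import heapq


def astar(grid, start, goal):
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]   # entries: (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:      # walk parents back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue                     # stale heap entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None                          # goal unreachable


grid = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0],                     # wall with a gap on the right
    [0, 0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
```

When a new obstacle appears, the map cell is marked occupied and the search is rerun, which is the simplest form of "adjusting paths when obstacles appear".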

A computer vision-based navigation system faces many challenges. Environmental factors like rain, snow, fog, and low light can make detection harder. Technical issues include sensor fusion complexity, calibration, and high computational demands. The system must handle lane detection variability, traffic sign differences, and 3D vision problems. Real-time processing requires strong algorithms and robust AI models to keep the machine safe and efficient.

A modern machine vision system combines all these technologies to deliver reliable object detection and navigation. By integrating cameras, sensors, deep learning, and stereo vision, the system enables a machine to move safely and make smart decisions in real time. This approach forms the backbone of every advanced computer vision-based navigation system in 2025.

Architecture

Data Capture

A machine vision system starts with data capture. The system uses a sensing layer to collect raw data from many sensors. These include GNSS, IMU, LiDAR, cameras, radar, and sonar. Each sensor gives the machine different information, such as position, distance, or images. The system often uses sensor fusion to combine these inputs for better real-time localization. The localization and perception layer processes this data for object detection and tracking. The mapping layer then builds a map to help the machine plan safe navigation paths.

- Sensing layer: Collects raw data from sensors.

- Localization and perception layer: Processes data for detection and tracking.

- Mapping layer: Builds and updates maps for navigation.

Because its sensors sample at different rates, the system uses the Temporal Sample Alignment algorithm to synchronize their data onto a common timeline. Keeping samples aligned in time keeps the machine vision system accurate and reliable.
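The source does not describe how the Temporal Sample Alignment algorithm works, so this sketch swaps in a simpler, common technique: for each camera frame timestamp, linearly interpolate between the two IMU samples that bracket it. Timestamps in seconds and sorted input are assumptions.

```python
"""Aligning a fast sensor stream (IMU) to a slower one (camera) by timestamp.

Stand-in technique, not the Temporal Sample Alignment algorithm itself:
linear interpolation between bracketing samples. Times must be sorted.
"""
from bisect import bisect_left


def interpolate_at(times, values, t):
    # Clamp to the first/last sample outside the recorded range
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_left(times, t)            # first index with times[i] >= t
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    w = (t - t0) / (t1 - t0)
    return v0 + w * (v1 - v0)


def align(camera_times, imu_times, imu_values):
    # Pair every camera frame with an IMU value estimated at the same instant
    return [(t, interpolate_at(imu_times, imu_values, t)) for t in camera_times]


imu_t = [0.00, 0.01, 0.02, 0.03]         # e.g. 100 Hz yaw-rate samples
imu_v = [0.0, 1.0, 2.0, 3.0]
print(align([0.015, 0.05], imu_t, imu_v))
```

Interpolation assumes the signal changes smoothly between samples; for fast, jerky motion a higher IMU rate or a motion model gives better estimates.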

Processing Pipeline

The processing pipeline in a computer vision-based navigation system has several steps. First, the system acquires images or videos from sensors. Next, it preprocesses the data to reduce noise and improve quality. The machine vision system then segments images and extracts features for object detection. Perception uses models like YOLO and Faster R-CNN for 2D and 3D detection. Localization uses GPS and IMU data to find the machine’s position. Prediction estimates where objects will move. Planning finds the best path for navigation. Control executes these plans to move the machine safely.

| Step | Description |
|---|---|
| Perception | Detects and classifies objects |
| Localization | Finds machine position |
| Prediction | Forecasts object movement |
| Planning | Chooses navigation path |
| Control | Moves the machine |
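The five steps in the table can be wired together as a loop. In this toy sketch every stage is a hypothetical stub: positions are 1D distances along a lane, prediction uses a constant-velocity model, and the safety gap and deceleration are made-up values. The point is the data flow between stages, not realistic models.

```python
"""Toy perception -> localization -> prediction -> planning -> control loop.

All stages are hypothetical stubs; units are metres along a lane and m/s.
"""
from dataclasses import dataclass


@dataclass
class Obstacle:
    position: float   # metres along the lane
    velocity: float   # metres per second


def perceive(raw_detections):
    # Perception: raw (position, velocity) pairs -> Obstacle objects
    return [Obstacle(p, v) for p, v in raw_detections]


def localize(gps_position, imu_offset):
    # Localization: GPS fix corrected by a small IMU-derived offset
    return gps_position + imu_offset


def predict(obstacles, horizon_s):
    # Prediction: constant-velocity extrapolation over the horizon
    return [o.position + o.velocity * horizon_s for o in obstacles]


def plan(ego_position, ego_speed, predicted, horizon_s, safety_gap_m=10.0):
    # Planning: brake if any obstacle would be ahead but closer than the gap
    ego_future = ego_position + ego_speed * horizon_s
    too_close = any(0.0 <= p - ego_future < safety_gap_m for p in predicted)
    return "brake" if too_close else "keep_speed"


def control(decision, ego_speed, decel=3.0, dt=0.1):
    # Control: turn the decision into the next speed command
    if decision == "brake":
        return max(0.0, ego_speed - decel * dt)
    return ego_speed


def pipeline_step(raw_detections, gps_position, imu_offset, ego_speed, horizon_s=2.0):
    obstacles = perceive(raw_detections)
    ego = localize(gps_position, imu_offset)
    predicted = predict(obstacles, horizon_s)
    decision = plan(ego, ego_speed, predicted, horizon_s)
    return decision, control(decision, ego_speed)


print(pipeline_step([(25.0, 0.0)], 0.0, 0.0, 10.0))   # stopped car ahead
print(pipeline_step([(25.0, 5.0)], 0.0, 0.0, 10.0))   # same car, moving away
```

Keeping each stage as a separate function mirrors the table: any stub can be swapped for a real detector, localizer, or planner without changing the others.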

Edge computing processes sensor data on or near the machine itself, which avoids the delay of sending large volumes of real-time data to a remote server. The machine vision system then runs its AI models locally to optimize detection and navigation.

Decision-Making

A computer vision-based navigation system relies on decision-making algorithms to guide the machine. The system uses decision matrix algorithms such as gradient boosting and AdaBoost, which combine several weaker models into a stronger predictor. Neural networks, especially CNNs, classify and segment objects for detection. Hierarchical decision trees help the system decide when to turn, brake, or accelerate. Actor-Critic algorithms, such as DDPG, allow the machine vision system to adapt to changing environments and perform precise control. The system manages uncertainty with Bayesian Neural Networks, which estimate confidence in detection and navigation. This makes the machine safer and more reliable.

Note: The system uses probabilistic frameworks to reduce errors and improve safety during navigation.
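A hierarchical decision tree that respects detection uncertainty might look like the sketch below. It assumes `confidence_samples` come from several stochastic forward passes of a detector (as an ensemble or Monte Carlo dropout model might produce, standing in for a full Bayesian Neural Network), and all thresholds are hypothetical tuning values.

```python
"""Hierarchical brake/turn/accelerate rules gated by detection uncertainty.

Assumptions: confidence_samples are obstacle confidences from several
stochastic forward passes; thresholds are hypothetical.
"""
from statistics import mean, pstdev


def decide(obstacle_distance_m, confidence_samples, left_lane_free,
           brake_distance_m=15.0, max_std=0.15, min_conf=0.5):
    conf = mean(confidence_samples)
    uncertainty = pstdev(confidence_samples)
    # Level 1: the model disagrees with itself -> act conservatively
    if uncertainty > max_std:
        return "slow_down"
    # Level 2: no credible obstacle -> free to accelerate
    if conf < min_conf:
        return "accelerate"
    # Level 3: credible obstacle, still far away -> keep current speed
    if obstacle_distance_m > brake_distance_m:
        return "maintain"
    # Level 4: close obstacle -> change lanes if possible, otherwise brake
    return "turn_left" if left_lane_free else "brake"


print(decide(10.0, [0.90, 0.92, 0.88], left_lane_free=False))  # confident, close
print(decide(10.0, [0.10, 0.90, 0.50], left_lane_free=True))   # uncertain
```

Checking uncertainty before anything else is the design choice that matters here: a confident-looking average built from widely scattered samples should not be trusted to justify accelerating.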

Feedback and Adaptation

A machine vision system must adapt to new environments. Feedback mechanisms help the system learn and improve. Optical flow navigation lets the machine use motion cues for obstacle avoidance. Appearance-based navigation stores images and matches them to current views for guidance. Mapless strategies allow the machine to recognize landmarks without maps. Map-building strategies update 3D maps as the machine moves. A reinforcement learning machine vision system uses feedback to refine its actions through trial and error. The system also uses sensor fusion to improve detection and navigation in real time. Learning from demonstration helps the machine vision system imitate human actions and adapt to new situations.

- Optical flow navigation for obstacle avoidance

- Appearance-based and mapless navigation for flexibility

- Reinforcement learning machine vision system for continuous improvement
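Optical flow navigation can be illustrated with the simple "balance" strategy: when a machine moves forward, nearby surfaces produce larger image motion than distant ones, so it steers away from the image half with more flow. This sketch assumes `flow` is a grid of flow magnitudes (pixels per frame) already computed from consecutive frames; the gain and sign convention are hypothetical choices.

```python
"""Optical-flow "balance" steering for obstacle avoidance (illustrative).

Assumption: flow is a 2D grid of precomputed flow magnitudes; positive
output means steer left, away from stronger right-side flow.
"""


def balance_steer(flow, gain=1.0):
    cols = len(flow[0])
    half = cols // 2
    # Sum flow in the left and right halves (centre column skipped if odd)
    left = sum(v for row in flow for v in row[:half])
    right = sum(v for row in flow for v in row[cols - half:])
    total = left + right
    if total == 0:
        return 0.0                    # no motion cues: keep heading
    return gain * (right - left) / total


flow = [
    [1, 1, 5, 5],                     # larger flow on the right: wall is close there
    [1, 1, 5, 5],
]
print(balance_steer(flow))            # positive -> steer left
```

Normalizing by total flow keeps the command in the range -gain to +gain regardless of how fast the machine is moving.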

A computer vision-based navigation system becomes smarter over time by learning from feedback and adapting to changes.

Applications

Automotive Navigation

Autonomous vehicles use a machine vision system to drive safely and efficiently. These systems combine cameras, radar, and lidar to detect objects, keep lanes, and avoid obstacles. Companies like Tesla and Waymo use deep learning and sensor fusion to help vehicles plan paths and respond to traffic. Tesla’s Model Y completed a fully autonomous delivery in 2025, showing how a machine can travel without human help. Driver monitoring systems use vision to check for fatigue or distraction, making vehicles safer. Peugeot’s driving aids include adaptive cruise control, lane-keeping, and automated parking. Machine vision systems improve safety by detecting hazards, even in tough conditions like construction zones. Vision-Language Models help vehicles understand rare events, such as emergency vehicles blocking lanes, and make better decisions.

Robotics and Industry

In factories and warehouses, a machine vision system guides robots as they move and work. Robots use cameras and lidar to build 3D maps, plan routes, and avoid obstacles. These systems help robots find and pick items, manage inventory, and inspect products for defects. Amazon’s robots use machine vision systems to move packages and recharge on their own. In manufacturing, robots use vision to spot cracks or errors on assembly lines. Machine vision systems give robots the precision to handle complex tasks and adapt to changes. They use feedback to correct their movements and keep operations running smoothly.

| Use Case | Description |
|---|---|
| Autonomous Navigation | Robots use sensors and vision to plan safe routes and avoid obstacles. |
| Object Detection | Robots find and recognize items for sorting and assembly. |
| Quality Control | Vision systems check products for defects on assembly lines. |
| Warehouse Robotics | Robots manage inventory and move packages in large warehouses. |
| SLAM | Robots create and update maps to navigate complex spaces. |
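The map-updating half of SLAM is often done with a log-odds occupancy grid. This sketch assumes the robot's pose is already known, reduces the map to a single range beam of 1 m cells starting at the sensor, and uses hypothetical inverse-sensor-model probabilities (0.7 for a hit, 0.4 for a pass-through).

```python
"""Log-odds occupancy grid update along one range beam (1D sketch).

Assumptions: known pose, 1 m cells along the beam, sensor at cell 0,
hypothetical inverse sensor model (0.7 hit, 0.4 free), prior p = 0.5.
"""
import math


def logit(p):
    return math.log(p / (1.0 - p))


L_OCC, L_FREE = logit(0.7), logit(0.4)


def update_beam(log_odds, measured_range_m):
    hit = int(measured_range_m)
    for i in range(min(hit, len(log_odds))):
        log_odds[i] += L_FREE         # beam passed through: more likely free
    if hit < len(log_odds):
        log_odds[hit] += L_OCC        # beam ended here: more likely occupied
    return log_odds


def probability(l):
    # Inverse of logit: p = 1 - 1 / (1 + e^l)
    return 1.0 - 1.0 / (1.0 + math.exp(l))


cells = [0.0] * 10                    # log-odds 0 means p = 0.5 (unknown)
for _ in range(3):                    # three scans hitting something at 5.2 m
    update_beam(cells, 5.2)
print([round(probability(c), 2) for c in cells])
```

Log-odds turn repeated Bayesian updates into simple additions, so each new scan is cheap to fold in, and cells behind the hit stay at 0.5 because the beam never observed them.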

UAVs and Drones

Drones rely on a machine vision system for safe and smart flight. These systems let drones inspect sites, monitor crops, and deliver goods without human pilots. Drones use cameras, thermal sensors, and AI to detect obstacles and adjust their path in real time. They can fly in places where GPS does not work, using deep learning and SLAM to map their surroundings. Drones help with emergency response by finding people or hazards quickly. In agriculture, drones use machine vision systems to check plant health and guide spraying. Real-time data from drones improves safety and efficiency in many fields.

- Drones perform close-up inspections in dangerous areas.

- Machine vision systems reduce human error and speed up emergency response.

- Drones use AI to detect and track objects for inspection and reporting.

- Machine vision systems help drones fly in GPS-denied environments.

- Drones support agriculture, logistics, and public safety with real-time data.

Maritime and Forestry

A machine vision system helps autonomous vehicles and machines operate in challenging environments like ports and forests. In maritime navigation, these systems combine cameras, lidar, and radar to see through fog, bright light, and busy waterways. Deep learning helps the system detect obstacles and plan safe routes, even when traditional sensors struggle. The system uses sensor fusion to create a full picture of the environment, improving collision prediction and path planning. Real-time decision-making lets ships adjust their navigation to avoid risks. In forestry, machine vision systems guide vehicles through dense woods, helping with tasks like planting, harvesting, and mapping. These systems allow machines to work safely and efficiently in places where humans face danger or limited visibility.
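Collision prediction at sea is commonly framed as a closest-point-of-approach (CPA) check: given the tracked position and velocity of another vessel, compute when the two will be nearest (TCPA) and how close they will get (DCPA). This sketch assumes both vessels hold constant course and speed in a shared local x/y frame in metres; the risk thresholds are hypothetical.

```python
"""Closest point of approach (CPA) between two vessels.

Assumptions: shared local x/y frame in metres, velocities in m/s,
constant course and speed, hypothetical risk thresholds.
"""
import math


def cpa(own_pos, own_vel, other_pos, other_vel):
    # Relative position and velocity of the other vessel
    rx, ry = other_pos[0] - own_pos[0], other_pos[1] - own_pos[1]
    vx, vy = other_vel[0] - own_vel[0], other_vel[1] - own_vel[1]
    v2 = vx * vx + vy * vy
    # Time minimising |r + v t|; clamp to 0 when the vessels are separating
    tcpa = 0.0 if v2 == 0 else max(0.0, -(rx * vx + ry * vy) / v2)
    dcpa = math.hypot(rx + vx * tcpa, ry + vy * tcpa)
    return tcpa, dcpa


def collision_risk(own_pos, own_vel, other_pos, other_vel,
                   min_dcpa_m=200.0, max_tcpa_s=600.0):
    tcpa, dcpa = cpa(own_pos, own_vel, other_pos, other_vel)
    return dcpa < min_dcpa_m and tcpa < max_tcpa_s


# Nearly head-on: other ship 1 km ahead, offset 100 m, closing at 10 m/s
print(cpa((0.0, 0.0), (5.0, 0.0), (1000.0, 100.0), (-5.0, 0.0)))
```

In a full system the positions and velocities would come from the fused camera, LiDAR, and radar tracks, and a flagged risk would trigger the path planner to pick a new course.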

Applications of machine vision systems show how machines can work in many settings, from city streets to remote forests, making navigation safer and more reliable for all types of autonomous vehicles.

Benefits and Challenges

Advantages

A machine vision system for autonomous navigation brings many advantages over traditional methods. These systems work well in low light or changing lighting because they use infrared imaging and special markers. Machines can navigate in places like commercial farming houses without needing extra lights. The system achieves high accuracy, with mean errors as low as 0.34 cm and yaw angle errors below 0.22°. Machines do not need physical changes to the environment, such as magnetic strips or color markers, which keeps floors undamaged and reduces setup time. The system also avoids problems caused by poor floor conditions or blocked signals. Users can change navigation paths easily by editing simple text files. Machine vision systems provide real-time localization and navigation without needing a lot of extra infrastructure. AI and deep learning help machines learn from data and adapt to new environments, giving higher accuracy and faster processing. These features make the system flexible and reliable for many tasks.

- Works in low or changing light

- High positioning accuracy

- No need for physical changes to the environment

- Flexible and easy path planning

- Real-time localization and navigation

- Learns and adapts to new environments
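Editing a navigation path through a plain text file can be as simple as the sketch below. The file format is hypothetical (the source does not specify one): one waypoint per line as `x y` in metres, `#` comments, and blank lines ignored.

```python
"""Loading a navigation path from a plain-text waypoint file.

Hypothetical format: "x y" in metres per line, '#' comments, blank lines ok.
"""
import io


def load_waypoints(stream):
    waypoints = []
    for line_no, line in enumerate(stream, start=1):
        line = line.split("#", 1)[0].strip()   # drop comments and whitespace
        if not line:
            continue
        parts = line.split()
        if len(parts) != 2:
            raise ValueError(f"line {line_no}: expected 'x y', got {line!r}")
        waypoints.append((float(parts[0]), float(parts[1])))
    return waypoints


example = io.StringIO("# demo route\n0 0\n12.5 0\n\n12.5 4.0  # turn\n")
print(load_waypoints(example))  # [(0.0, 0.0), (12.5, 0.0), (12.5, 4.0)]
```

Any iterable of lines works, so the same function accepts an open file handle; reporting the line number on bad input makes hand-edited route files easier to fix.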

Limitations

Despite many strengths, a machine vision system faces several limitations. Machines depend on large amounts of labeled training data, which takes time and money to collect. High computing power is needed, making real-time use on edge devices harder. The system can struggle with changes in lighting, occlusions, or adversarial attacks that lower accuracy. Biases in training data may cause poor results in new or tough conditions. Machines also face high initial costs for cameras and accessories. Connecting the system to older machines can be complex. Scaling up may need more hardware or software. Energy storage limits how long machines can operate, especially in maritime settings. Communication underwater remains a challenge. Ethical and privacy concerns require careful planning and compliance with laws.

Data and Transparency

Data transparency and explainability play a key role in the adoption of autonomous navigation machine vision systems. Users, regulators, and developers need to understand how the system makes decisions to trust it. Explainable AI helps solve the "black box" problem by making the machine’s choices clear. This builds confidence and supports safety. Regulatory rules, like those in the EU, require systems to explain their actions. Clear explanations help users feel safe and encourage wider use. Developers can also use this information to improve the system. Balancing enough detail without overwhelming users remains important. As machines become more common, transparency will support responsible and safe use.

Future Trends

Reinforcement Learning Machine Vision System

A reinforcement learning machine vision system will shape the next generation of autonomous navigation. This system allows a machine to learn from its environment by using visual feedback and direct interaction. Deep reinforcement learning helps the machine improve object detection and navigation skills over time. The reinforcement learning machine vision system uses neural networks to process complex images and focus on important features. During training, the machine explores different paths and learns which actions lead to safe navigation. In recent studies, a reinforcement learning machine vision system enabled micro aerial vehicles to avoid collisions in indoor spaces without GPS. The machine used monocular depth images and convolutional neural networks to adapt quickly. Simulation and real-world results matched closely, showing that the reinforcement learning machine vision system reduces training time and increases efficiency. This approach lets the machine adapt to new environments without needing new programming. The reinforcement learning machine vision system supports continuous improvement and safer decision-making.
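The explore, reward, and update loop described above can be shown with tabular Q-learning. This is a deliberately simplified stand-in: the studies mentioned use deep networks on depth images, while this sketch replaces the image and network with a 4x4 grid and a lookup table. The grid layout, rewards, and hyperparameters are hypothetical.

```python
"""Tabular Q-learning on a tiny grid world (simplified stand-in for deep RL).

Hypothetical setup: 4x4 grid, two obstacle cells, goal in the corner,
-10 for collisions, +10 for the goal, -0.1 per move.
"""
import random

ROWS, COLS = 4, 4
OBSTACLES = {(1, 1), (2, 1)}
GOAL = (3, 3)
ACTIONS = [(0, 1), (1, 0), (0, -1), (-1, 0)]   # right, down, left, up


def env_step(state, action):
    r, c = state[0] + action[0], state[1] + action[1]
    if not (0 <= r < ROWS and 0 <= c < COLS):
        return state, -1.0, False              # bumped the boundary: stay put
    if (r, c) in OBSTACLES:
        return (r, c), -10.0, True             # collision ends the episode
    if (r, c) == GOAL:
        return (r, c), 10.0, True
    return (r, c), -0.1, False                 # small cost per move


def best_action(q, state):
    return max(range(len(ACTIONS)), key=lambda i: q.get((state, i), 0.0))


def train(episodes=2000, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        state = (0, 0)
        for _ in range(100):                   # cap episode length
            # Epsilon-greedy: explore sometimes, otherwise exploit
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = best_action(q, state)
            nxt, reward, done = env_step(state, ACTIONS[a])
            future = 0.0 if done else q.get((nxt, best_action(q, nxt)), 0.0)
            old = q.get((state, a), 0.0)
            # Q-learning update toward reward + discounted best next value
            q[(state, a)] = old + alpha * (reward + gamma * future - old)
            state = nxt
            if done:
                break
    return q


def greedy_path(q, max_steps=20):
    state, path = (0, 0), [(0, 0)]
    for _ in range(max_steps):
        state, _, done = env_step(state, ACTIONS[best_action(q, state)])
        path.append(state)
        if done:
            break
    return path


print(greedy_path(train()))
```

Nothing in the agent encodes where the obstacles are; the collision penalty alone teaches it to route around them, which is the property that lets learned policies transfer to new layouts without reprogramming.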

AI and Sensing Advances

In 2025 and the years that follow, the reinforcement learning machine vision system will benefit from major advances in AI and sensing. Machines will shift from 2D to 3D vision using stereo cameras and time-of-flight sensors. These upgrades help the machine build accurate 3D maps for better navigation. AI-powered vision will allow the machine to recognize objects, detect anomalies, and predict behavior. Semantic segmentation and visual SLAM will help the machine understand complex scenes. Vision Transformers will improve the machine’s ability to extract features and scale up. Self-supervised learning will reduce the need for labeled data during training. Edge AI will let the machine process data locally, making real-time decisions faster and safer. Multimodal AI will combine visual data with other sensor inputs, giving the machine a deeper understanding of its surroundings. Explainable AI will make the reinforcement learning machine vision system more transparent and trustworthy.

- 3D vision and depth sensing for precise mapping

- Vision Transformers and self-supervised learning for better training

- Edge AI for real-time, secure processing

- Multimodal AI for richer context

Industry Impact

The reinforcement learning machine vision system will transform many industries. In manufacturing, the machine will guide robots to inspect products and avoid hazards. In healthcare, the machine will help robots move safely in busy hospitals. Agriculture will use the reinforcement learning machine vision system for crop monitoring and autonomous tractors. Logistics will see machines using advanced vision for warehouse navigation. Construction sites will benefit from machines that adapt to changing layouts. The reinforcement learning machine vision system will allow each machine to learn from experience, improve with every task, and reduce the need for manual programming. As training becomes faster and more efficient, the machine will handle more complex jobs. The reinforcement learning machine vision system will set new standards for safety, adaptability, and performance.

The future of autonomous navigation depends on the reinforcement learning machine vision system. Machines will become smarter, safer, and more reliable as these systems evolve.

Autonomous navigation machine vision systems in 2025 give machines the power to see, decide, and move safely. They use cameras, sensors, and deep learning to understand the world around them, whether the machine is a car, a robot, a drone, or a ship. These systems learn from feedback and adapt to new places, which is why many industries rely on them to improve safety and speed. As AI grows, these machines will become smarter and take on more complex jobs. Readers should consider how such a machine could help in their own field.

FAQ

What is an autonomous navigation machine vision system?

An autonomous navigation machine vision system uses cameras, sensors, and AI to help a machine move and understand its surroundings. The system makes decisions in real time without human help.

How do these systems avoid obstacles?

These systems detect obstacles using cameras, LiDAR, and deep learning models. They process images and sensor data to find objects in the path. The system then plans a safe route around the obstacles.

Where are autonomous navigation machine vision systems used?

People use these systems in cars, robots, drones, ships, and even in forests. The technology helps machines work safely in many places, including cities, factories, farms, and oceans.

Why is transparency important in these systems?

Transparency helps people trust the system. When users and regulators understand how the system makes decisions, they feel safer. Clear explanations also help improve the technology.

What are the main challenges for these systems?

The systems need a lot of training data and strong computers. They can struggle in bad weather or with poor lighting. High costs and privacy concerns also create challenges.
