In 2025, machine vision systems built on point cloud tools are reshaping how industries see and understand the world. These systems use point cloud technology for 3D perception, automation, and real-time analysis. A single scan can capture millions of data points, giving a machine vision system enough detail to build accurate 3D models and speed up analysis. Adoption is growing across sectors. The table below shows how different industries use point-cloud-based machine vision and the market figures reported for each:

| Industry | Role of 3D Vision Systems (Point Cloud Tools) | Reported Market Figures |
|---|---|---|
| Automotive | Precise assembly, defect detection, robot navigation | 5.02% growth in 2023, up to 12.3% in Germany |
| Semiconductor & Electronics | Detailed inspections, waste reduction, yield improvement | 16% growth in 2024, 12.5% in 2025 |
| Manufacturing & Logistics | Enhanced quality, defect detection, logistics automation | $65.25B in 2023, projected $217.26B by 2033 |

Key Takeaways

- Point cloud tools create detailed 3D models that help machines see and understand objects and spaces accurately.

- Sensors such as lidar, together with camera-based techniques such as photogrammetry, collect point cloud data and enable precise scanning in many industries.

- AI and deep learning improve point cloud processing by automating tasks like noise removal, segmentation, and object detection.

- Machine vision systems using point clouds boost efficiency and quality in manufacturing, robotics, healthcare, and smart cities.

- Real-time processing and human-AI collaboration make point cloud technology faster, more reliable, and easier to use.

Point Cloud Machine Vision System

Point Clouds Overview

A point cloud machine vision system uses a set of data points in a three-dimensional space to represent the surface of objects or scenes. Each point in a point cloud has coordinates (x, y, z) and sometimes includes color or intensity information. These systems help machines see and understand the world in 3D. By processing point cloud data, a machine vision system can build accurate 3D models. This allows the system to measure distances, find shapes, and detect defects. The ability to understand 3D spaces is important for automation tasks like robotic picking, quality checks, and obstacle detection.
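To make the (x, y, z) representation concrete, here is a minimal Python sketch of a point record with an optional intensity value, plus two basic measurements a system might take from it. The `Point` class and the `distance` and `bounding_box` helpers are hypothetical illustrations, not the data model of any particular library.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    """One point-cloud sample (hypothetical layout): position in metres plus optional intensity."""
    x: float
    y: float
    z: float
    intensity: float = 0.0  # optional attribute; its scale depends on the sensor


def distance(a: Point, b: Point) -> float:
    """Straight-line distance between two points, e.g. to measure a gap or an edge length."""
    return math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))


def bounding_box(cloud: list[Point]) -> tuple[tuple[float, float, float], tuple[float, float, float]]:
    """Axis-aligned bounding box of a non-empty cloud as (min corner, max corner)."""
    xs, ys, zs = zip(*((p.x, p.y, p.z) for p in cloud))
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


cloud = [Point(0.0, 0.0, 0.0), Point(0.3, 0.0, 0.0, intensity=0.8), Point(0.3, 0.4, 0.1)]
print(bounding_box(cloud))  # ((0.0, 0.0, 0.0), (0.3, 0.4, 0.1))
print(distance(cloud[0], cloud[2]))  # diagonal of the box, since the min corner is the origin
```

Real systems usually store points in packed arrays rather than one object per point, but the geometry is the same.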

Point clouds commonly come from two sources: laser scanners and photogrammetry. Laser scanners, such as lidar, send out laser pulses and measure how long it takes for the light to return. This gives very accurate 3D point cloud data. Photogrammetry uses many photos taken from different angles, and software then reconstructs a 3D model from these images. The density of a point cloud, meaning how many points fall in a given area, depends on the type of sensor and its distance from the object. High-density point clouds show more detail but require more processing power.
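The timing principle described above reduces to one formula: the pulse travels to the surface and back, so the one-way range is d = c · t / 2. The sketch below assumes the speed of light in vacuum and ignores the small slowdown in air; `tof_range` is a hypothetical helper name.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # in vacuum; the ~0.03% slower speed in air is ignored


def tof_range(round_trip_s: float) -> float:
    """Hypothetical helper: one-way distance from a pulse's round-trip time, d = c * t / 2."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


print(round(tof_range(66.7e-9), 2))  # 10.0: a return after ~66.7 ns means a target ~10 m away
print(round(tof_range(1e-9) * 100, 1))  # 15.0: each nanosecond of timing error is ~15 cm of range
```

The second line shows why lidar electronics need very precise timing: nanosecond-level errors already translate into centimetre-level range errors.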

Note: Point cloud machine vision systems often use deep learning to process irregular and unstructured point cloud data. This helps with tasks like classifying shapes and segmenting parts in a 3D scan.

3D Sensing Technologies

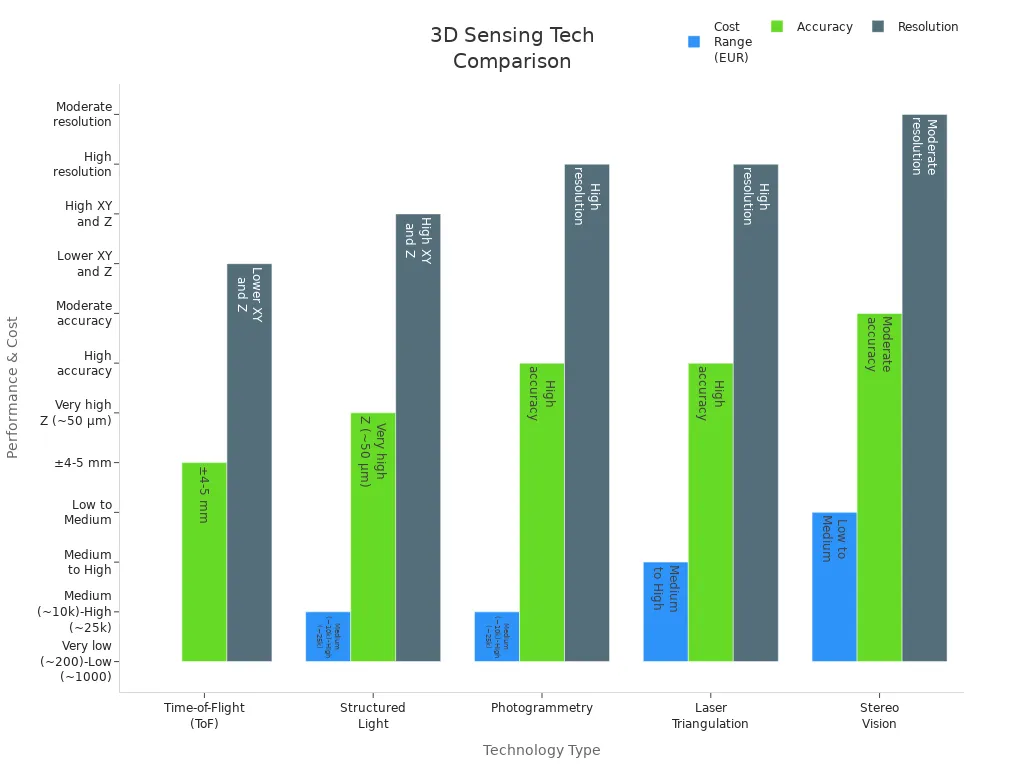

Modern 3D scanning relies on several sensing technologies to create point cloud data. Common methods include time-of-flight sensing (including lidar), laser triangulation, structured light, stereo vision, and photogrammetry. Each involves its own trade-offs between accuracy, resolution, and cost.

| Technology Type | Accuracy | Resolution | Cost Range (EUR) |
|---|---|---|---|
| Time-of-Flight (ToF) | ±4-5 mm | Lower XY and Z resolution | Very low (~200) to Low (~1000) |
| Structured Light | Very high Z-resolution (~50 µm) | High XY points and Z-depth precision | Medium (~10k) to High (~25k) |
| Photogrammetry | High accuracy | High resolution | Medium (~10k) to High (~25k) |
| Laser Triangulation | High accuracy | High resolution | Medium to High |
| Stereo Vision | Moderate accuracy | Moderate resolution | Low to Medium |

- Lidar sensors use rapid laser pulses to capture 3D point cloud data with high accuracy. They work well for both stationary and moving objects. Terrestrial laser scanners (TLS) provide the highest precision for static scans, while mobile laser scanners can be mounted on vehicles or drones for fast, wide-area scanning.

- Photogrammetry uses cameras to take many pictures from different angles. Software then reconstructs the 3D point cloud. This method is common in drone-based 3D scanning.

- Structured light projects known patterns onto objects and measures how the patterns deform on the surface. This gives very detailed 3D point cloud data.

- Stereo vision uses two cameras to mimic human eyes. The system compares the images to find matching points and calculates depth using triangulation. Stereo vision works well outdoors and under ambient light, but it is computationally demanding and struggles to find reliable matches on textureless surfaces.
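For a rectified stereo pair, the triangulation step above becomes Z = f · B / d, where f is the focal length in pixels, B the baseline between the cameras, and d the disparity (horizontal pixel shift of a matched point). The camera values below are assumptions chosen for illustration, and `stereo_depth` is a hypothetical helper.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Hypothetical helper: depth from a rectified stereo pair, Z = f * B / d.

    focal_px     -- focal length in pixels (shared by both cameras after rectification)
    baseline_m   -- distance between the two camera centres, in metres
    disparity_px -- horizontal shift of the matched point between left and right images
    """
    if disparity_px <= 0:
        # No valid match (common on textureless surfaces) or a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Assumed camera: f = 700 px, B = 0.12 m; a 42 px disparity gives Z = 2.0 m.
print(stereo_depth(700.0, 0.12, 42.0))
```

Because depth is inversely proportional to disparity, a fixed one-pixel matching error causes a much larger depth error for distant points than for near ones.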

Other important cameras and sensors in a machine vision system include:

- Area scan cameras: Capture a full image in one shot, good for flat or uniform objects.

- Line scan cameras: Build images one line at a time, ideal for inspecting moving materials.

- Laser profilers: Capture 3D profiles from above, largely unaffected by surface color or ambient lighting.

Data Acquisition

Data acquisition is the process of collecting point cloud data for a machine vision system. Two common approaches are 3D laser scanning and photogrammetry.

3D Laser Scanning:

- Lidar sensors send out laser pulses and measure the time it takes for the light to return, producing a highly accurate 3D point cloud.

- Systems often combine lidar with RGB cameras for color and IMUs for position tracking.

- Terrestrial laser scanners (TLS) are used for detailed, static scans, such as measuring floor flatness or capturing objects.

- Mobile laser scanners are used for fast scanning of large areas, like building sites or roads.

- Specialized scanners can map railways, roadways, or wide landscapes.

Photogrammetry:

- Cameras take many photos from different angles.

- Software processes these images to build a 3D point cloud.

- This method is useful when lidar is too heavy or expensive.

A point cloud machine vision system must handle several challenges during data acquisition:

- Environmental and scene conditions such as rain, moving objects, and shiny or reflective surfaces can add noise or ghost points.

- Misaligned scans or poor control points can introduce errors into the final 3D point cloud.

- Point density matters. Too few points miss details; too many slow down processing.

- Sensor calibration and accuracy affect data quality.

- Shadows, hidden parts, and complex shapes can cause segmentation errors.

- Incomplete data or annotation mistakes can lead to misclassification, which is risky for autonomous systems.
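The point-density trade-off in the list above follows from simple geometry: for a scanner that steps its beam by a fixed angle, the gap between neighbouring points on a surface facing the scanner is roughly range × angular step (in radians). The sketch below assumes a 0.1° step and uses a hypothetical "three points across a feature" rule of thumb; neither value comes from a specific scanner.

```python
import math


def point_spacing(range_m: float, angular_step_deg: float) -> float:
    """Approximate gap between adjacent points on a surface facing the scanner.

    For small angles the arc between neighbouring beams is range * step (in radians),
    so spacing grows linearly with distance.
    """
    return range_m * math.radians(angular_step_deg)


def resolves(feature_m: float, range_m: float, angular_step_deg: float,
             points_needed: int = 3) -> bool:
    """Hypothetical rule of thumb: a feature is resolvable if a few points land on it."""
    return feature_m >= points_needed * point_spacing(range_m, angular_step_deg)


for r in (10, 50):
    print(r, "m:", round(point_spacing(r, 0.1) * 1000, 1), "mm spacing,",
          "10 cm feature resolved:", resolves(0.10, r, 0.1))
```

Surfaces seen at an oblique angle are sampled even more sparsely, which is one reason scans are often captured from several positions.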

Tip: Combining different 3D scanning methods and using dedicated processing software helps reduce errors and improve the quality of point cloud data.

In 2025, point cloud machine vision systems combine laser scanning (including lidar) with camera-based methods to collect and process point cloud data. These systems power automation, inspection, and real-time analysis across many industries.

Point Cloud Processing

Point cloud processing forms the backbone of modern 3D machine vision systems. These steps transform raw 3D point cloud data into actionable insights. The process includes preprocessing (such as noise reduction), segmentation, feature extraction, and higher-level analysis. Each stage improves the quality and usefulness of point cloud data for AI machine vision applications.

Preprocessing

Preprocessing prepares point cloud data for further analysis. Raw 3D scans often contain noise, outliers, and redundant points, which reduce the accuracy of a machine vision system. Preprocessing steps include:

- Noise Reduction: Algorithms remove stray points caused by sensor errors or environmental factors. This step ensures cleaner data for analysis.

- Downsampling: The system reduces the number of points while keeping important features. This makes point cloud processing faster and more efficient.

- Alignment and Registration: Scans taken from different positions must be aligned correctly. Registration algorithms match overlapping areas to build a complete 3D point cloud.

- Normalization: The system scales and orients point cloud data to a standard format. This step helps with consistent analysis across different datasets.
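The noise-reduction and downsampling steps listed above can be sketched with two widely used techniques: statistical outlier removal and voxel-grid downsampling (libraries such as PCL and Open3D provide optimized versions of both). This is a minimal stdlib sketch; the function names and the parameter values `k=4` and `std_ratio=1.0` are illustrative assumptions, and the brute-force neighbour search is only suitable for small examples.

```python
import math
import statistics
from collections import defaultdict

XYZ = tuple[float, float, float]


def remove_statistical_outliers(points: list[XYZ], k: int = 4, std_ratio: float = 1.0) -> list[XYZ]:
    """Drop points whose mean distance to their k nearest neighbours exceeds the
    cloud-wide mean by more than std_ratio standard deviations.

    Brute-force O(n^2) neighbour search for clarity; real pipelines use a
    spatial index such as a k-d tree.
    """
    if len(points) <= k:
        return list(points)
    mean_knn = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    threshold = statistics.fmean(mean_knn) + std_ratio * statistics.pstdev(mean_knn)
    return [p for p, d in zip(points, mean_knn) if d <= threshold]


def voxel_downsample(points: list[XYZ], voxel_m: float) -> list[XYZ]:
    """Replace all points that fall in the same cubic voxel by their centroid."""
    cells: dict[tuple[int, ...], list[XYZ]] = defaultdict(list)
    for p in points:
        cells[tuple(math.floor(c / voxel_m) for c in p)].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts)) for pts in cells.values()]


# A 3 x 3 patch of points 1 cm apart on the plane z = 0, plus one stray return.
patch = [(x * 0.01, y * 0.01, 0.0) for x in range(3) for y in range(3)]
noisy = patch + [(1.0, 1.0, 1.0)]
clean = remove_statistical_outliers(noisy)
print(len(noisy), len(clean))  # 10 9
print(voxel_downsample(clean, 0.05))  # a single voxel holding the patch centroid
```

The voxel size sets the trade-off mentioned earlier: larger voxels mean fewer points and faster processing, but fine details disappear.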

A well-designed preprocessing pipeline improves the performance of AI machine vision: clean, well-organized point cloud data leads to better segmentation and feature extraction.
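The normalization step from the preprocessing list can be as simple as centring a cloud on its centroid and scaling it to fit a unit sphere, a convention commonly used before feeding clouds to deep learning models. This is a sketch of that one convention; other pipelines instead normalize to a unit cube or keep metric scale. `normalize_to_unit_sphere` is a hypothetical helper name.

```python
import math


def normalize_to_unit_sphere(points):
    """Centre a non-empty cloud on its centroid and scale it so the farthest point lies at radius 1."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    cz = sum(p[2] for p in points) / n
    centred = [(x - cx, y - cy, z - cz) for x, y, z in points]
    radius = max(math.hypot(*p) for p in centred)
    if radius == 0:
        return centred  # all points coincide; nothing to scale
    return [(x / radius, y / radius, z / radius) for x, y, z in centred]


print(normalize_to_unit_sphere([(0, 0, 0), (2, 0, 0)]))  # [(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
```

After this step, the same object scanned at different scales or positions produces comparable coordinates.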

Segmentation

Segmentation divides point cloud data into meaningful regions or objects. This step allows a machine vision system to identify parts, surfaces, or objects within a 3D scene. Effective segmentation is critical for tasks like object detection, measurement, and automated inspection.
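As a baseline for what segmentation produces, here is classical Euclidean cluster extraction: points are grouped by region growing, so two points share a cluster if a chain of neighbours closer than a radius connects them. This is a simple geometric method, not one of the learned approaches discussed below; the function name, `radius`, and `min_size` are illustrative assumptions.

```python
import math
from collections import deque


def euclidean_clusters(points, radius, min_size=1):
    """Hypothetical helper: breadth-first region growing over a distance threshold.

    Returns clusters as sorted lists of point indices. Neighbour search is
    brute force (O(n^2)) for clarity.
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            i = queue.popleft()
            near = [j for j in unvisited if math.dist(points[i], points[j]) < radius]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        if len(members) >= min_size:  # discard tiny clusters, which are often noise
            clusters.append(sorted(members))
    return clusters


# Two separate objects along the x axis, 2 m apart.
pts = [(0.0, 0, 0), (0.05, 0, 0), (0.1, 0, 0), (2.0, 0, 0), (2.05, 0, 0)]
print(sorted(euclidean_clusters(pts, radius=0.08)))  # [[0, 1, 2], [3, 4]]
```

This works well for well-separated objects but fails when parts touch, which is where the learned methods below help.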

Recent advances in point cloud processing use powerful techniques for segmentation:

- Transformer networks, such as the multiscale super-patch transformer network (MSSPTNet), use dynamic region-growing to extract super-patches. These networks combine local and global context, improving segmentation accuracy.

- MSSPTNet aggregates local features at multiple scales and uses self-attention to find similarities among super-patches. This approach segments repetitive structures quickly and accurately.

- Point-based methods like PointNet and PointNet++ process raw point cloud data directly. These models avoid information loss from voxelization and capture both pointwise and local neighborhood features.

- Local neighborhood encoding modules describe geometric relationships between points. This method enhances feature extraction by focusing on local structures.

- Multi-scale feature representation strategies help models handle different levels of detail in 3D point cloud data.

Segmentation methods that combine transformer-based architectures, local neighborhood encoding, and multi-scale learning have shown strong results for feature extraction and analysis.

Discretization-based methods, such as voxelization, can lose fine geometric detail or require significant memory and computation at high resolution. For this reason, many modern AI machine vision systems favor direct point-based and transformer-based approaches for point cloud processing.
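The point-based idea behind PointNet can be shown in miniature: apply the same small network to every point, then combine the per-point features with a symmetric function (max pooling), so the result does not depend on the order of the points. This sketch uses one untrained layer with random weights and a hypothetical feature width of 8; it illustrates only the permutation-invariance principle, not a trained model or PointNet++'s hierarchical grouping.

```python
import random


def shared_mlp(point, weights, biases):
    """Apply the same single ReLU layer to one point's (x, y, z)."""
    return [
        max(0.0, sum(w * c for w, c in zip(row, point)) + b)
        for row, b in zip(weights, biases)
    ]


def global_feature(points, weights, biases):
    """Max-pool per-point features channel by channel.

    Max is symmetric, so shuffling the input points cannot change the result.
    """
    per_point = [shared_mlp(p, weights, biases) for p in points]
    return [max(channel) for channel in zip(*per_point)]


rng = random.Random(0)
FEATURES = 8  # hypothetical width; the original PointNet uses a 1024-dimensional global feature
W = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(FEATURES)]
B = [rng.uniform(-0.1, 0.1) for _ in range(FEATURES)]

cloud = [(rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(50)]
shuffled = cloud[:]
rng.shuffle(shuffled)
print(global_feature(cloud, W, B) == global_feature(shuffled, W, B))  # True
```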

AI Integration

Artificial intelligence and deep learning have transformed point cloud processing, enabling advanced analysis, automation, and real-time decision-making in machine vision systems. The platforms below show how AI and deep learning are integrated into point cloud annotation and processing tools:

| Platform | AI/Deep Learning Integration Features | Application Focus |
|---|---|---|
| BasicAI | AI-assisted annotation, multi-sensor fusion, object tracking, collaborative workflows | Large-scale LiDAR, autonomous driving |
| iMerit | Multi-sensor fusion, 3D-to-2D projection alignment, multi-frame annotation, human review | Autonomous driving, robotics |
| Supervisely | 3D LiDAR sensor fusion, 3D object detection, tracking, segmentation, data version control | Computer vision projects |
| CVAT | 3D bounding box annotation, synchronized 2D/3D visualization, interpolation tracking | Flexible deployment, research |
| Kognic | Human-in-the-loop workflows, pre-labeling, synchronized 2D/3D viewing, QA insights | Data curation and quality assurance |

These AI-powered point cloud platforms typically offer:

- Automatic object detection and segmentation for fast analysis.

- Multi-sensor fusion to combine data from LiDAR, radar, and cameras.

- Cross-frame propagation and multi-frame annotation for dynamic scenes.

- Sampling and interpolation to handle large, sparse datasets.

- Real-time annotation and tracking for moving objects.

- Human-AI collaboration to improve accuracy and quality.
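Cross-frame propagation and interpolation can be illustrated with a simple keyframe scheme: an annotator labels a 3D box in two frames, and the tool estimates the box in between. This sketch assumes linear motion between keyframes and handles yaw along the shorter arc; the `Cuboid` class and `interpolate_cuboid` function are hypothetical and do not reproduce any platform's actual API or algorithm.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """A 3D box label: centre (m), size (m), and yaw around the vertical axis (rad)."""
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float
    yaw: float


def _lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


def _lerp_angle(a: float, b: float, t: float) -> float:
    """Interpolate along the shorter arc, so 350 deg -> 10 deg passes through 0 deg."""
    diff = (b - a + math.pi) % (2 * math.pi) - math.pi
    return a + diff * t


def interpolate_cuboid(k0: Cuboid, f0: int, k1: Cuboid, f1: int, frame: int) -> Cuboid:
    """Hypothetical helper: estimate a box for a frame between two labelled keyframes,
    assuming constant motion between them."""
    t = (frame - f0) / (f1 - f0)
    return Cuboid(
        _lerp(k0.cx, k1.cx, t),
        _lerp(k0.cy, k1.cy, t),
        _lerp(k0.cz, k1.cz, t),
        _lerp(k0.length, k1.length, t),
        _lerp(k0.width, k1.width, t),
        _lerp(k0.height, k1.height, t),
        _lerp_angle(k0.yaw, k1.yaw, t),
    )


start = Cuboid(0.0, 0.0, 0.8, 4.5, 1.8, 1.5, math.radians(350))
end = Cuboid(10.0, 2.0, 0.8, 4.5, 1.8, 1.5, math.radians(10))
mid = interpolate_cuboid(start, 0, end, 10, 5)
print(mid.cx, mid.cy)  # 5.0 1.0
```

A human reviewer then only corrects frames where the constant-motion assumption breaks down, such as when a vehicle brakes or turns.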

These features let AI machine vision systems process massive amounts of point cloud data efficiently. Deep learning models learn to recognize patterns, classify objects, and extract features from complex 3D point cloud scenes, supporting advanced analysis in fields such as autonomous driving, robotics, and industrial inspection.

Point cloud software such as ScanXtream and VisionLidar also offers processing capabilities that support AI-driven workflows, real-time analysis, and integration with machine vision hardware.

In 2025, point cloud processing combines careful preprocessing, state-of-the-art segmentation, and deep AI integration. Together, these steps unlock the full potential of 3D point cloud data for next-generation AI machine vision applications.

Applications of Machine Vision Systems

Manufacturing

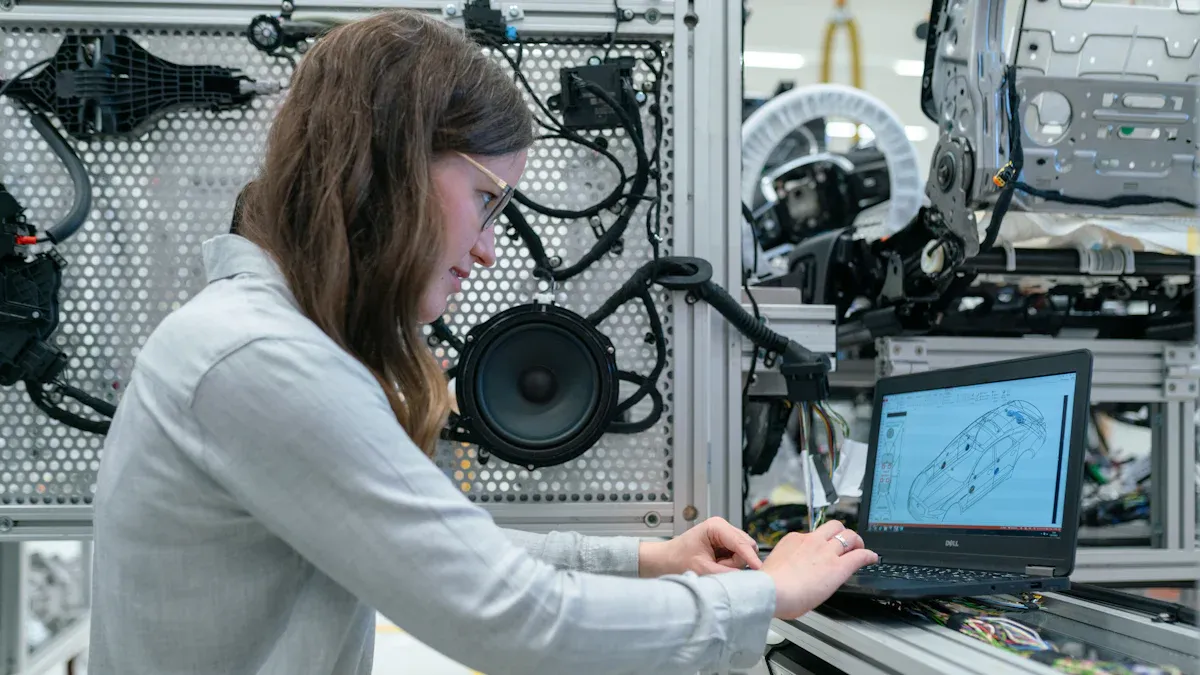

In 2025, manufacturers rely on point cloud machine vision systems for 3D scanning, inspection, and automation. Factories use 3D scanning methods such as laser triangulation, time-of-flight sensors, and structured light to check product quality and guide robots. These systems also feed digital twins used for virtual testing and real-time comparison with the physical line. Automated quality control finds surface flaws, checks packaging, and inspects fill levels, while AI and deep learning improve the accuracy of inspection and assembly. Robots use 3D scanning for pick-and-place tasks and to verify component orientation. Alongside 3D inspection, vision systems read barcodes to track products and support supply chain management. Real-time process monitoring gives instant feedback so workers can fix problems quickly, and predictive maintenance uses 3D scanning to monitor equipment health and reduce downtime. Material identification and safety monitoring also benefit from these systems.

- 3D scanning enables precise volumetric analysis and dimensional gauging.

- Digital twins support real-time analysis and workflow optimization.

- Automated inspection improves quality and reduces waste.
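Volumetric analysis often starts by resampling a scan into a height map (one height per grid cell) and summing height × cell area above a reference plane. The sketch below assumes a flat base at z = 0 and marks cells with no valid return as `None`; `volume_above_base` is a hypothetical helper, not a specific product's method.

```python
def volume_above_base(height_map, cell_size_m, base_z=0.0):
    """Hypothetical helper: estimate volume (m^3) from a gridded height map.

    Each cell contributes (height above base) * (cell area). Cells below the
    base, or with no valid return (None), contribute nothing.
    """
    cell_area = cell_size_m * cell_size_m
    total = 0.0
    for row in height_map:
        for z in row:
            if z is not None and z > base_z:
                total += (z - base_z) * cell_area
    return total


# 2 x 2 grid of 0.1 m cells; one cell had no valid return.
heights = [[0.2, 0.2],
           [0.2, None]]
print(round(volume_above_base(heights, 0.1), 6))  # 0.006 (m^3)
```

Smaller cells follow the surface more closely but need denser scans, which ties back to the point-density trade-off.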

Robotics and Navigation

Robots and autonomous vehicles use point cloud machine vision systems for navigation and object recognition. 3D scanning with lidar and stereo vision lets robots perceive depth and avoid obstacles, and AI and deep learning improve object detection and route planning. Edge computing lets robots process scan data on board and make decisions in real time. In factories, robots use 3D scanning to assemble parts and move safely, while drones use it for mapping and obstacle avoidance, with high success rates reported in trials. Systems such as TOPGN use lidar to detect transparent obstacles, which improves safety. Robots also build 3D costmaps from scan data and adjust their paths to avoid collisions. Together, these advances improve picking accuracy and reduce inspection errors.
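The core of building a costmap from a point cloud is projecting obstacle points onto a grid. This sketch keeps only points within an assumed height band (above the floor, below what the robot can pass under) and marks the cells they fall in; the thresholds and the `occupancy_grid` name are assumptions. Full navigation stacks add obstacle inflation, clearing of free space, and 3D voxel layers, and this is not TOPGN's method.

```python
import math


def occupancy_grid(points, cell_m, width, height, z_min=0.05, z_max=1.5):
    """Hypothetical helper: mark grid cells that contain obstacle points.

    Points below z_min are treated as floor and points above z_max as overhead
    structure the robot can pass under; both are ignored. The grid covers
    [0, width*cell_m) x [0, height*cell_m) in the robot's map frame.
    """
    grid = [[0] * width for _ in range(height)]
    for x, y, z in points:
        if not (z_min <= z <= z_max):
            continue
        col = math.floor(x / cell_m)
        row = math.floor(y / cell_m)
        if 0 <= col < width and 0 <= row < height:
            grid[row][col] = 1
    return grid


scan = [
    (0.25, 0.25, 0.5),  # obstacle at robot height
    (0.75, 0.25, 0.0),  # floor return, ignored
    (0.25, 0.75, 2.0),  # overhead beam, ignored
    (0.75, 0.75, 1.0),  # obstacle
    (5.0, 5.0, 0.5),    # outside the mapped area, ignored
]
print(occupancy_grid(scan, cell_m=0.5, width=2, height=2))  # [[1, 0], [0, 1]]
```

A path planner can then treat marked cells as blocked and route around them.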

Healthcare

Healthcare professionals use point cloud and 3D vision tools for advanced imaging and patient care. 3D imaging gives doctors detailed views inside the body, improving diagnostic accuracy. Surgeons use 3D guidance to position instruments during operations, increasing precision. Hospitals use 3D sensing to track patient movement and keep patients safe. AI and edge computing process this data on-site, which protects privacy and speeds up analysis. Companies create virtual and 3D-printed models for surgical planning, helping doctors prepare for complex cases. AI-powered platforms use 3D data for real-time identification of critical anatomy, helping reduce complications and improve quality of care.

Smart Cities

Smart cities depend on point cloud machine vision systems for urban planning and infrastructure management. Lidar scanning captures detailed maps of streets, bridges, and utilities, and planners use the resulting 3D models to monitor traffic and design better transportation systems. Real-time analysis of scan data helps manage traffic flow and reduce congestion. Flood risk assessment uses elevation data from 3D scanning to improve drainage design. Utility mapping with 3D scanning helps locate underground pipes and wires, lowering construction risks. Continuous monitoring detects wear in roads and bridges, supporting maintenance. Disaster management teams use 3D scanning to map risk zones and guide emergency response. Projects such as Virtual Singapore use lidar and other 3D data for city-wide analysis and planning.

Point cloud machine vision systems bring higher quality, better accuracy, and faster analysis to manufacturing, robotics, healthcare, and smart cities. They make 3D scanning, inspection, and real-time decision-making more reliable and efficient.

Benefits and Challenges

Advantages

Point cloud tools bring many advantages to a machine vision system. 3D scanning creates accurate digital models of objects and spaces that capture fine detail, even in hard-to-reach areas. Teams use these models to check for design conflicts and track construction progress. 3D scanning also supports digital twins that update as physical conditions change, keeping digital records accurate and current. Point cloud systems help with remote collaboration: people can visit sites virtually and review updates in the 3D model. Compared with older methods, laser scanning can reduce labor and costs, and because everyone works from the same 3D model, communication, project tracking, and engagement improve. Laser scanning can reach millimeter-level accuracy, even in cluttered environments such as forests. Automated data processing saves time and reduces errors, so projects can start and finish faster, and clients gain confidence from the high-quality results.

- Accurate digital models from 3D scanning

- Real-time updates with digital twins

- Remote collaboration and virtual site visits

- Lower labor costs and fewer errors

- High accuracy and consistent scan quality

Data Volume

3D scanning creates huge amounts of point cloud data, and modern laser scanners can collect billions of points in a single survey. Handling this much data is difficult, and traditional workflows often relied on manual processing that could not keep up. AI-based tools now filter, classify, and remove artifacts from large datasets automatically, quickly, and with high accuracy. This makes it possible to scan long roads or large buildings without processing delays, and the resulting automation improves quality and saves time, making large-scale 3D scanning practical.
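A quick back-of-the-envelope calculation shows why data volume matters. The sketch assumes 16 bytes per point (x, y, z, and intensity as 4-byte floats); real file formats use different record layouts and often add color, timestamps, or classification. The `chunks` generator shows one simple way to process a huge cloud in batches; both function names are hypothetical.

```python
from itertools import islice


def cloud_size_bytes(num_points: int, bytes_per_point: int) -> int:
    """Raw, uncompressed storage needed for a point cloud."""
    return num_points * bytes_per_point


# Assumed layout: x, y, z and intensity, each a 4-byte float -> 16 bytes per point.
BYTES_PER_POINT = 4 * 4
print(cloud_size_bytes(1_000_000_000, BYTES_PER_POINT) / 1e9, "GB")  # 16.0 GB


def chunks(points, size):
    """Yield successive batches of at most `size` points, so a huge cloud can be
    filtered or classified piece by piece instead of all at once."""
    it = iter(points)
    while batch := list(islice(it, size)):
        yield batch
```

Sequential batching assumes each point can be processed independently; steps that need neighbours (such as outlier removal) usually tile the cloud spatially instead, with some overlap between tiles.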

Real-Time Processing

Real-time processing is essential for applications such as autonomous vehicles and robotics. 3D scanning systems now use AI and machine learning to automate tasks such as filtering and object recognition. Cloud computing makes it easier to store and process large point cloud datasets, and specialized software such as Autodesk ReCap Pro and CloudCompare supports fast modeling and analysis. Advances in hardware and algorithms allow real-time decisions, which improves safety and quality in critical tasks. Cloud platforms also let teams in different locations work on the same data while keeping quality high.

- AI and machine learning automate 3D scanning tasks

- Cloud computing enables fast, secure, and scalable processing

- Real-time analysis supports safety and high quality

Human-AI Collaboration

Human-AI collaboration improves the annotation and interpretation of 3D scanning data. Trained annotators define strict guidelines to keep quality and accuracy high, while AI tools speed up annotation and reduce mistakes. Platforms such as BasicAI Cloud combine automatic annotation with manual checks, increasing both speed and accuracy. Teams can work on the same dataset, manage tasks, and control access, which leads to better project outcomes. Experts also help explain AI results, building trust and confirming that the machine vision system works as expected.

- Human experts set standards for quality and accuracy

- AI speeds up annotation and reduces errors

- Teamwork and expert review improve project quality
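One common way to combine AI pre-labels with human review is to measure how well an AI box agrees with a reviewed box using intersection-over-union (IoU) and flag low-agreement cases. This sketch handles only axis-aligned boxes (real 3D labels usually include a yaw angle, which requires rotated-box IoU), and the 0.7 threshold is an assumption rather than a platform default.

```python
def box_volume(box):
    """Volume of an axis-aligned box given as (min_x, min_y, min_z, max_x, max_y, max_z)."""
    return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])


def iou_3d(a, b):
    """Intersection-over-union of two axis-aligned 3D boxes."""
    inter = 1.0
    for axis in range(3):
        overlap = min(a[axis + 3], b[axis + 3]) - max(a[axis], b[axis])
        if overlap <= 0:
            return 0.0  # no overlap along this axis means no intersection at all
        inter *= overlap
    return inter / (box_volume(a) + box_volume(b) - inter)


def needs_review(ai_box, human_box, threshold=0.7):
    """Hypothetical QA rule: flag an AI pre-label whose overlap with the reviewed
    label falls below the threshold."""
    return iou_3d(ai_box, human_box) < threshold


human = (0.0, 0.0, 0.0, 2.0, 2.0, 2.0)
ai = (1.0, 0.0, 0.0, 3.0, 2.0, 2.0)
print(round(iou_3d(human, ai), 3), needs_review(ai, human))  # 0.333 True
```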

Edge computing and next-generation AI are changing 3D scanning. Systems now process data near the source, which reduces latency and improves real-time performance. No-code AI tools and cloud-native security make machine vision systems more flexible and secure. Looking further ahead, quantum computing and modular AI services may shape the future of 3D scanning.

Point cloud tools have changed machine vision systems in 2025. VisionLidar 2025, for example, uses AI-driven processing to improve both efficiency and precision in survey workflows. Tools like these help industries create accurate 3D models, speed up inspections, and support real-time decisions.

Key benefits include:

- Faster data processing

- Higher accuracy in 3D modeling

- Easy integration with other systems

Machine vision will keep growing as AI and point cloud technology advance. New solutions will help more industries solve complex problems with greater speed and detail.

FAQ

What is a point cloud image?

A point cloud image shows many points in 3D space. Each point has a location given by its x, y, and z coordinates. These images help machines see shapes and surfaces, and engineers use them to build digital models of real objects.

How do AI-powered systems use point clouds?

AI-powered systems use point clouds to understand 3D spaces. They find patterns and measure objects. These systems can scan rooms, inspect parts, or guide robots, helping machines make informed decisions.

Why is object detection important in machine vision?

Object detection lets machines find and label items in a scene. This skill helps robots pick up parts, cars avoid obstacles, and doctors spot problems in scans. It makes machine vision more useful and safe.

Can point cloud images work in real time?

Yes, new tools process point cloud images quickly. Real-time use helps robots move safely and lets cars avoid crashes. Fast processing also helps in factories and hospitals.

What industries use AI-powered systems with point cloud images?

Many industries use these systems, including manufacturing, healthcare, and smart city infrastructure. They use point cloud images for inspection, planning, and safety, and AI-powered systems help people work faster and make better decisions.
