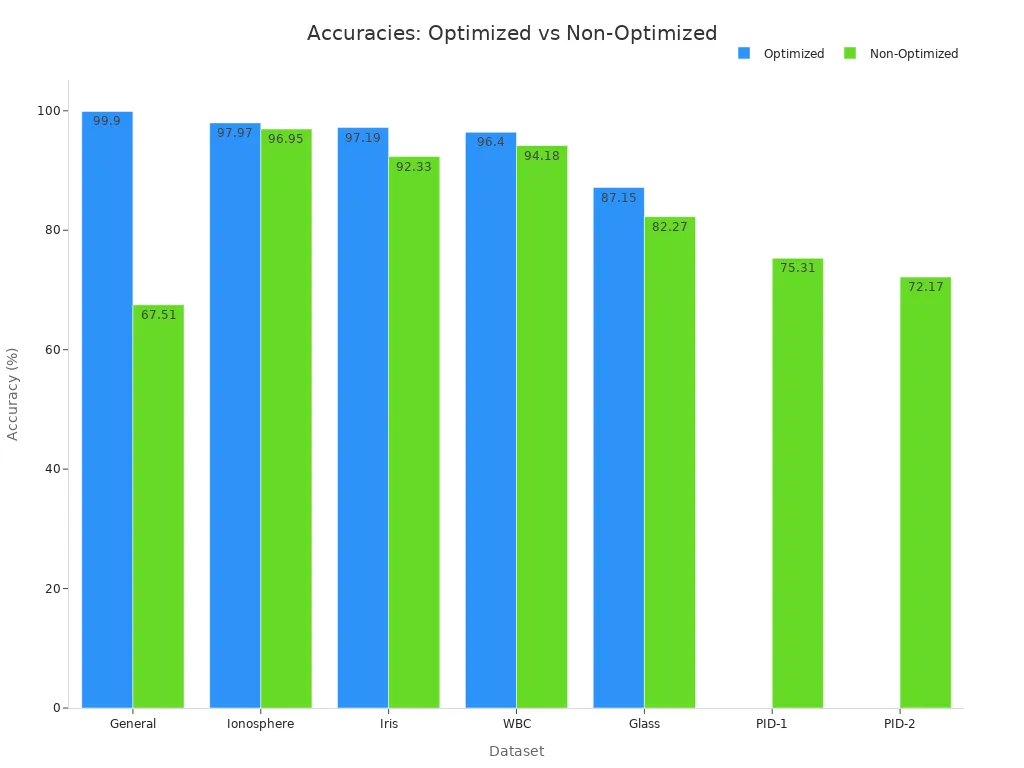

You depend on hidden layers whenever you use a machine vision system for image classification or object detection. Each hidden layer in a neural network transforms raw image data, such as a 28×28 pixel grid, into patterns the system can act on. Adding hidden neurons can raise accuracy on these tasks, up to the point where the network begins to overfit. Some studies report accuracy as high as 99.9% with a well-chosen number of hidden nodes.

In a machine vision system, hidden layers let the network learn complex features, which makes your system more reliable for image classification and object detection.

Key Takeaways

- Hidden layers transform raw image data into useful patterns, greatly improving accuracy in image classification and object detection.

- Choosing the right number of hidden neurons and activation functions helps your neural network learn complex features and speeds up training.

- Deeper neural networks with multiple hidden layers can learn more detailed image features but need enough data to avoid poor results.

- Regularization methods like dropout prevent overfitting, keeping your machine vision system reliable on new images.

- Careful design of hidden layers boosts your AI system’s ability to recognize objects and classify images with high accuracy and trust.

Neural Network Layers in Machine Vision

Input, Hidden, and Output Layers

When you use a machine vision system, you work with three main types of layers in neural networks: input, hidden, and output. The input layer takes in raw image data, such as pixels from a photo. The output layer gives you the final result, like a label for image classification or a bounding box for object detection. The hidden layers sit between the input and output. These hidden layers do most of the work in a neural network.
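The three layer types can be sketched as a single forward pass. This is a minimal illustration, not a trained model: the hidden size of 32, the 10 output classes, the random weights, and the function names are all assumptions chosen for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(0.0, x)

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def init_layer(n_in, n_out):
    # Random weights scaled by fan-in, zero biases (illustrative, untrained).
    weights = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
    return weights, np.zeros(n_out)

def forward(image, params):
    (w1, b1), (w2, b2) = params
    x = image.reshape(-1)               # input layer: flatten 28x28 pixels to 784 values
    hidden = relu(x @ w1 + b1)          # hidden layer: learned intermediate features
    probs = softmax(hidden @ w2 + b2)   # output layer: one probability per class
    return hidden, probs

params = [init_layer(28 * 28, 32), init_layer(32, 10)]  # hypothetical sizes
image = rng.random((28, 28))                            # stand-in for a real image
hidden, probs = forward(image, params)
print(hidden.shape, probs.shape, probs.argmax())
```

With untrained weights the predicted class is meaningless; the point is only the data flow from pixels, through hidden features, to class probabilities.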

You can see how the number of hidden neurons and the choice of training algorithm affect performance in the table below. This shows why tuning the hidden layer is so important for your machine vision system.

| Hidden Neurons | Training Algorithm | Nash-Sutcliffe Efficiency | Mean Squared Error |
|---|---|---|---|
| 15 | Bayesian Regularization | 0.9044 | 0.002271 |
| 12 | Levenberg-Marquardt | 0.8877 | 0.00267 |

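In this comparison, the 15-neuron network trained with Bayesian regularization reaches a higher Nash-Sutcliffe efficiency and a lower mean squared error than the 12-neuron network trained with Levenberg-Marquardt. The same kind of tuning applies when you size hidden layers for image classification and object detection.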
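For reference, the two metrics in the table can be computed as follows. This is a small sketch using the standard definitions; the sample values are made up for illustration and are not taken from the study behind the table.

```python
def mean_squared_error(observed, predicted):
    # Average of the squared differences between targets and predictions.
    return sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed)

def nash_sutcliffe_efficiency(observed, predicted):
    # 1.0 is a perfect fit; 0.0 is no better than always predicting the mean.
    mean_obs = sum(observed) / len(observed)
    residual = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    variance = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - residual / variance

# Illustrative values only.
observed = [0.10, 0.20, 0.30, 0.40]
predicted = [0.12, 0.18, 0.33, 0.39]

mse = mean_squared_error(observed, predicted)
nse = nash_sutcliffe_efficiency(observed, predicted)
print(f"MSE={mse:.5f}  NSE={nse:.3f}")
```

Higher efficiency and lower error both indicate a better fit, which is why the two columns move in opposite directions.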

Hidden Layer Functions

Hidden layers in a machine vision system help you find patterns that are not obvious in the raw data. In feed-forward neural networks, each hidden layer transforms the image step by step. You can think of the hidden layers as a series of filters. Each filter helps your neural network learn new features, such as edges, shapes, or textures.

Research shows that the size of the hidden layer matters, although the exact thresholds depend on the task and dataset. In the reported results, networks with fewer than 30 hidden neurons showed lower accuracy and higher variability, while networks with more than 50 hidden neurons generalized better and varied less. For example, a neural network with 52 hidden neurons reached an average accuracy of 91.52% for image classification, with a low standard deviation. A well-sized hidden layer therefore makes your machine vision system more reliable for both image classification and object detection.

Feed-forward neural networks, convolutional neural networks, and recurrent neural networks all use hidden layers to process images. In convolutional neural networks, hidden layers use filters to detect features at different levels. In recurrent neural networks, hidden layers help your model remember patterns across sequences, which is useful for video analysis.
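To make the idea of a filter concrete, here is a minimal sketch of one convolutional filter applied to a tiny image. The Sobel kernel is hand-written for illustration; in a real convolutional network the kernel values are learned during training. The function name and the 6×6 test image are assumptions for this example.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide kernel over image with stride 1 and no padding.

    This is cross-correlation, which is what deep learning libraries
    usually call convolution.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# 6x6 image: dark left half, bright right half, so one vertical edge.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Sobel kernel that responds to dark-to-bright transitions from left to right.
sobel_x = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])

# Filter response followed by ReLU, as in a typical convolutional hidden layer.
feature_map = np.maximum(0.0, conv2d_valid(image, sobel_x))
print(feature_map)
```

The feature map is nonzero only in the columns that straddle the edge, which is the sense in which a hidden layer "detects" a feature.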

You can also see the power of layered design in advanced neural network architecture. For example, stacking multiple convolutional layers with activation functions improves training stability and performance. Some systems use four stacked graph convolutional layers with hyperbolic tangent activations to handle complex data. These feed-forward neural networks use hidden layers to boost performance in image classification and object detection.

Feed-forward neural networks also benefit from regularization. When you adjust hidden layer representations to mimic brain-like computations, your model becomes more robust. For example, regularizing a convolutional neural network to match neural responses in the brain improves object detection, even when images have noise or distortions.

Tip: When you design a machine vision system, pay close attention to the number and type of hidden layers in your neural network. This choice can make a big difference in how well your system performs on image classification and object detection tasks.

Hidden Layers in a Machine Vision System

Feature Extraction

You rely on hidden layers to extract features from images in ways that simple models cannot. When you use feed-forward neural networks, each hidden layer transforms the image data step by step. The first hidden layer might detect basic shapes or edges. The next hidden layer can combine these shapes into more complex patterns, like corners or textures. As you move deeper into the neural network, hidden layers capture even higher-level features, such as objects or faces.

- Deep Belief Networks improve image classification in medical fields by extracting both low- and high-level features from mammograms and ultrasound images.

- These networks reduce noise and help you see important details, which makes diagnosis more accurate.

- Restricted Boltzmann Machines help with dimensionality reduction and pre-training, especially when you have only a small amount of labeled data.

- Autoencoders remove noise from images, making it easier to spot tumors in mammograms.

- The hierarchical structure of these models lets you learn more abstract features, which is critical for accurate image-based diagnosis.

Feed-forward neural networks with multiple hidden layers can learn complex patterns more efficiently than shallow networks, because a deep network can often represent the same function with fewer parameters. Stacked Auto-Encoders show that deep models learn better features than handcrafted ones, especially in tasks like skin lesion classification. When you use unsupervised layer-wise pre-training, you help your model avoid poor local optima and improve feature learning, which is most useful when labeled data is scarce. This makes hidden layers powerful for extracting hierarchical features from images.

You can see the impact of hidden layers in performance metrics. When you supervise hidden layers in image classification networks, you guide the model to group similar features. This improves accuracy and helps the model focus on the right parts of the image. For example, Grad-CAM heatmaps show that models with hidden layer supervision pay more attention to target regions. This leads to higher accuracy, precision, recall, and F1 scores.

| Metric | Description | Evidence of Improvement |
|---|---|---|
| Accuracy | Percentage of correctly detected instances | Accuracy improved in image classification networks after knowledge injection at hidden layers; intrusion detection system (IDS) model accuracy >97% |
| Precision | Ratio of true positive detections to all predicted positives | IDS model precision improved (e.g., 84.36% on the KDD99 dataset) with neural network feature extraction |
| Recall | Ratio of true positive detections to all actual positives | High recall values reported (e.g., 98.44% on KDD99, 96.65% on UNSW-NB15) |
| F1 Score | Harmonic mean of precision and recall | F1 scores increased significantly with neural network-based feature selection |
| Recognition Rate | Overall detection success rate | Recognition rates of 98.38% (KDD99) and 96.71% (UNSW-NB15) with hidden layers |

You benefit from these improvements because hidden layers extract features that help your neural network make better decisions in image classification and object detection.
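The metrics in the table follow directly from counts of true and false positives and negatives. The sketch below uses the standard binary definitions; the function name and the toy labels are illustrative and unrelated to the datasets cited above.

```python
def classification_metrics(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Toy example: 4 positives and 6 negatives, with one miss and one false alarm.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Reporting precision and recall alongside accuracy matters when classes are imbalanced, because accuracy alone can look high even when most positives are missed.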

Activation Functions

You need activation functions in every hidden layer of a feed-forward neural network. These functions introduce nonlinearity, which lets your model learn complex patterns that simple linear models cannot capture. Without activation functions, your neural network would only find straight-line relationships in the data. With activation functions, you can model nonlinear relationships and recognize more complicated patterns in images.
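A quick numerical check shows why nonlinearity is needed. In this sketch (random weights, biases omitted for brevity, all names illustrative), two stacked layers without an activation give exactly the same output as one linear layer, while inserting ReLU breaks that equivalence.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 4))    # 5 samples, 4 input features
w1 = rng.normal(size=(4, 8))   # first layer weights
w2 = rng.normal(size=(8, 3))   # second layer weights

# Two layers with no activation in between...
two_linear_layers = (x @ w1) @ w2
# ...are the same map as a single layer whose weights are w1 @ w2.
one_linear_layer = x @ (w1 @ w2)
collapses = np.allclose(two_linear_layers, one_linear_layer)

# With ReLU between the layers, the network is no longer a single linear map.
with_relu = np.maximum(0.0, x @ w1) @ w2
differs = not np.allclose(with_relu, one_linear_layer)

print("linear stack collapses to one layer:", collapses)
print("ReLU network differs from the linear map:", differs)
```

However many linear layers you stack, the result stays linear, so depth only adds expressive power once an activation function sits between the layers.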

- Activation functions add non-linearity, so your neural network can solve problems that are not linearly separable. This is essential for pattern recognition.

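- The Universal Approximation Theorem shows that a network with a nonlinear activation and enough hidden neurons can approximate any continuous function on a bounded input range, so it can model complex input-output relationships.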

- Different activation functions, like Sigmoid, Tanh, and ReLU, affect how your model learns. They change the speed of training and the stability of your neural network.

- Functions like ReLU help prevent vanishing gradients, which means your model trains faster and reaches better accuracy in visual recognition tasks.

- New activation functions, such as the modulus, have shown even better results. For example, the modulus function improved accuracy by up to 15% on CIFAR100 and 4% on CIFAR10 compared to other activations. It also solved problems like vanishing gradients and dying neurons, making training more stable.
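The activation functions named above can be compared by their derivatives, since those derivatives are what backpropagation multiplies together. This is a minimal sketch; it assumes "modulus" means the absolute value function f(x) = |x|, and the dictionary layout is an arbitrary choice for the example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Each entry maps a name to (function, derivative).
ACTIVATIONS = {
    "sigmoid": (sigmoid, lambda x: sigmoid(x) * (1.0 - sigmoid(x))),
    "tanh": (math.tanh, lambda x: 1.0 - math.tanh(x) ** 2),
    "relu": (lambda x: max(0.0, x), lambda x: 1.0 if x > 0 else 0.0),
    # Assumed form of the modulus activation: |x|. Its derivative is
    # undefined at 0; +1 is used there by convention.
    "modulus": (abs, lambda x: 1.0 if x >= 0 else -1.0),
}

for name, (f, df) in ACTIVATIONS.items():
    grads = [round(df(x), 4) for x in (-5.0, 0.0, 5.0)]
    print(f"{name:8s} gradients at x = -5, 0, 5: {grads}")

# The sigmoid derivative never exceeds 0.25, so a chain of 10 sigmoid
# layers scales the gradient by at most 0.25 ** 10.
sigmoid_bound_10_layers = 0.25 ** 10
print(f"upper bound after 10 sigmoid layers: {sigmoid_bound_10_layers:.2e}")
```

Sigmoid and tanh gradients shrink toward zero for large inputs, ReLU passes a gradient of 1 for positive inputs but 0 for negative ones (the "dying neuron" case), and the modulus keeps a gradient of magnitude 1 on both sides.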

Feed-forward neural networks use activation functions in every hidden layer to help your model learn from images. Nonlinear activation functions like ReLU, Sigmoid, and Tanh allow your neural network to learn complex visual patterns. Studies on datasets like MNIST and CIFAR-10 report that newer nonlinear functions can outperform traditional ones in both accuracy and training speed. When you choose the right activation function, you help your machine vision system process complex image data and improve pattern recognition.

Tip: Always check which activation function works best for your feed-forward neural networks. The right choice can boost your machine vision system’s performance in image classification and object detection.

Deep Learning and Hidden Layer Impact

Model Depth and Performance

When you build a machine vision system, you often wonder how many hidden layers your neural network should have. Deep learning gives you the power to stack many hidden layers, which helps your model learn complex patterns in images. If you use only one hidden layer, your neural network can already reach over 97% accuracy on simple tasks like digit recognition. Adding a second hidden layer can push accuracy even higher, sometimes above 98%. For more difficult tasks, you need deep neural networks with many hidden layers. These deep models let your machine vision system learn both simple and complex features, from edges to objects.

You can see how model depth affects performance in the table below:

| Architecture | Depth (Layers) | Parameters (Millions, approx.) | Key Insight on Depth and Performance |
|---|---|---|---|
| AlexNet | 8 | 60 | Deeper than LeNet-5; won ILSVRC-2012, showing depth improves results |
| VGGNet | 16–19 | 138–144 | Increased depth with small filters improves accuracy |
| GoogLeNet | 22 | 4 | Deeper and wider; winner ILSVRC-2014 |
| Inception v3 | 42 | 22 | Deeper network with batch normalization for better performance |
| ResNet | 50–152 | 25.6–60.2 | Skip connections allow very deep networks and top results |

Deeper neural networks often perform better, but only if you have enough data. With small datasets, deep models may not help and can even hurt performance. You should start with one or two hidden layers and increase depth as your task gets harder. Feed-forward neural networks with multiple hidden layers learn faster and generalize better when you have lots of images.
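The parameter counts in the table are sums over layers, and the per-layer arithmetic is simple. The sketch below uses the standard formulas for convolutional and fully connected layers. The layer shapes are the commonly published ones for AlexNet's first convolution and VGG-16's first fully connected layer, not figures from this article, and the helper names are hypothetical.

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    # One kernel per (input channel, output channel) pair, plus one bias per filter.
    return kernel_h * kernel_w * in_channels * out_channels + out_channels

def dense_params(n_inputs, n_outputs):
    # One weight per connection, plus one bias per output neuron.
    return n_inputs * n_outputs + n_outputs

# AlexNet's first convolution: 96 filters of size 11x11 over 3 color channels.
alexnet_conv1 = conv_params(11, 11, 3, 96)

# VGG-16's first fully connected layer: a 7x7x512 feature map into 4096 neurons.
vgg16_fc1 = dense_params(7 * 7 * 512, 4096)

# A small network for 28x28 images with one hidden layer of 32 neurons
# (hypothetical sizes).
small_mlp = dense_params(28 * 28, 32) + dense_params(32, 10)

print(f"AlexNet conv1:   {alexnet_conv1:,}")
print(f"VGG-16 fc1:      {vgg16_fc1:,}")
print(f"Small MLP total: {small_mlp:,}")
```

Fully connected layers dominate the count, which is one reason a 22-layer network can have far fewer parameters than a 16-layer one.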

Note: Deep learning models like ResNet use skip connections to solve problems like vanishing gradients, making it possible to train very deep neural networks for machine vision.
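A skip connection adds a block's input back to its output, so the block only has to learn a correction to the identity. The sketch below uses dense layers for brevity. Real ResNet blocks use convolutions and batch normalization, so treat the shapes and names here as assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(0.0, x)

def residual_block(x, w1, w2):
    """Compute relu(x + F(x)), where F is two dense layers.

    Both layers keep the feature width unchanged so that x and F(x)
    can be added element-wise.
    """
    f = relu(x @ w1) @ w2
    return relu(x + f)

width = 16
# Non-negative activations, as if they came from an earlier ReLU layer.
x = relu(rng.normal(size=(4, width)))
w1 = rng.normal(0.0, 0.1, size=(width, width))
w2 = np.zeros((width, width))  # residual branch switched off

y = residual_block(x, w1, w2)
print("block acts as identity when F(x) = 0:", np.allclose(y, x))
```

Because the input passes straight through when the residual branch contributes nothing, gradients also have a direct path backward, which is what makes very deep stacks trainable.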

Overfitting and Regularization

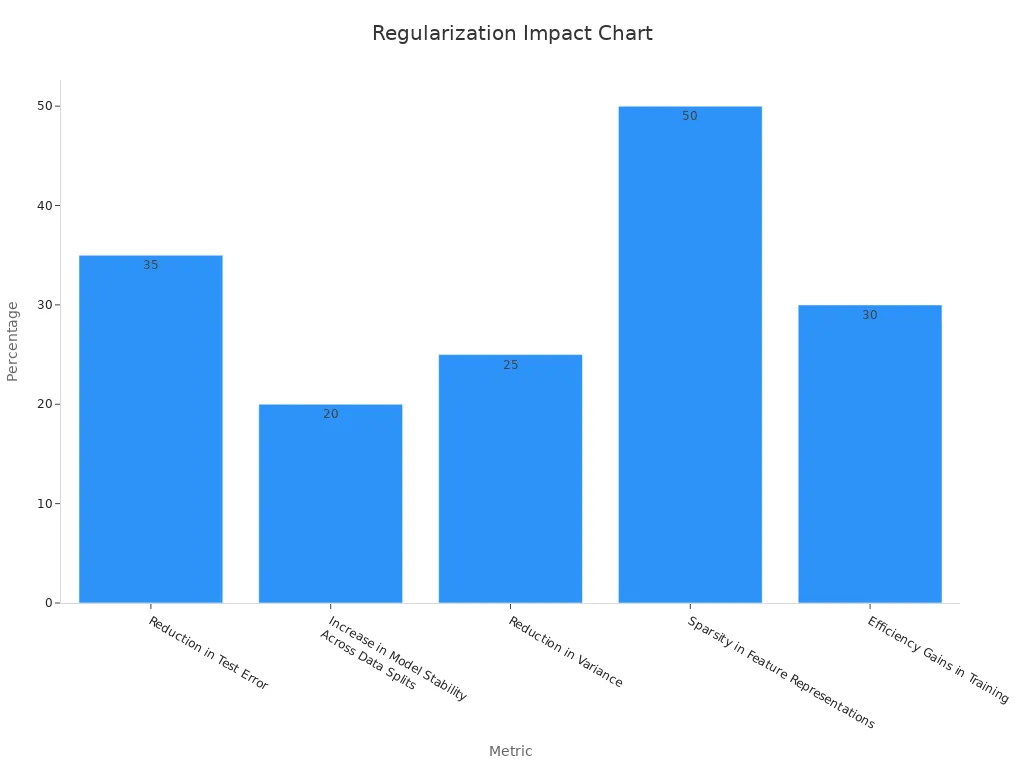

When you add more hidden layers to your neural network, you risk overfitting. Overfitting happens when your model learns the training data too well and fails to work on new images. Deep learning models with many hidden layers can memorize noise instead of learning useful patterns. You need regularization techniques to prevent this.

Regularization helps your machine vision system stay accurate on new data. Dropout, DropBlock, and MaxDropout are popular methods. Dropout randomly removes hidden neurons during training, which forces your neural network to learn more robust features. DropBlock works well in convolutional neural networks by dropping entire blocks of hidden units, improving accuracy on datasets like ImageNet and CIFAR-10.
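Dropout itself takes only a few lines. This sketch shows the common "inverted dropout" variant, which rescales the surviving activations during training so that nothing needs to change at inference time. The function signature and the 0.5 drop probability are choices made for this example.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    # At inference time dropout is a no-op.
    if not training or drop_prob == 0.0:
        return activations
    keep_prob = 1.0 - drop_prob
    # Keep each hidden unit independently with probability keep_prob.
    mask = rng.random(activations.shape) < keep_prob
    # Rescale so the expected activation matches the no-dropout case.
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
hidden = np.ones((1000, 64))  # stand-in for hidden layer activations

train_out = dropout(hidden, 0.5, rng, training=True)
test_out = dropout(hidden, 0.5, rng, training=False)

print("fraction of units dropped:", float((train_out == 0).mean()))
print("mean activation in training:", float(train_out.mean()))
print("unchanged at inference:", np.array_equal(test_out, hidden))
```

Because a different random subset of neurons is silenced on every training step, no single neuron can be relied on, which pushes the network toward redundant, more robust features.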

| Regularization Technique | Model Example | Dataset | Effect on Overfitting / Accuracy |
|---|---|---|---|
| Dropout | MLPs, CNNs | Various | Improves generalization |
| DropBlock | ResNet-50, RetinaNet | ImageNet, COCO | Boosts ResNet-50 accuracy on ImageNet by about 1.6%; improves RetinaNet detection on COCO |
| MaxDropout | WideResNet-28-10 | CIFAR-100 | Outperforms Dropout |
| Shake-shake | Three-branch ResNets | CIFAR-10 | Increases accuracy by up to 0.6% |

You also benefit from implicit regularization in deep learning. Even without explicit methods, random initialization and stochastic gradient descent help control model complexity. Some studies report that regularization reduces test error by up to 35% and makes your neural network more stable across different data splits.

Feed-forward neural networks with multiple hidden layers need regularization to avoid overfitting and to keep your machine vision system reliable. Always monitor your model’s performance on new images and adjust your regularization methods as needed.

You unlock the power of artificial intelligence in machine vision by using hidden layers. They help your deep learning models see and understand complex images, and deep networks with many hidden layers find patterns that simple models miss. When you design a system, treat the number, size, and type of hidden layers as core design decisions.

Remember: The right hidden layer design can make your deep learning system smarter and more reliable.

FAQ

What do hidden layers do in a machine vision system?

Hidden layers help you find patterns in images. Each layer learns new features, like edges or shapes. These features help your system recognize objects or classify images more accurately.

How many hidden layers should you use?

You should start with one or two hidden layers for simple tasks. For harder problems, you can add more layers. More layers help your system learn complex patterns, but too many can cause overfitting.

Why are activation functions important in hidden layers?

Activation functions let your neural network learn complex patterns. Without them, your model only finds simple relationships. Functions like ReLU or Tanh help your system recognize shapes, colors, and objects in images.

Can hidden layers work with generative adversarial network models?

Yes, you can use hidden layers in a generative adversarial network. These layers help both the generator and discriminator learn features from images. This makes the network better at creating or recognizing realistic images.

How do you prevent overfitting in deep machine vision models?

You can use regularization methods like dropout or DropBlock. These techniques help your model avoid memorizing training data. They make your system more reliable when you test it on new images.
