A structured data machine vision system helps machines organize and understand image information. It takes raw image data and uses feature extraction to pick out shapes, colors, and edges, then stores those measurements as clear, structured data. Because every image is described by the same set of features, the system can compare and sort images consistently. For beginners, feature extraction is the step that lets a structured data machine vision system "see" and make sense of the world.

Feature extraction is the key step that helps the system find meaning in every image.

Key Takeaways

- Structured data machine vision systems turn raw images into organized information using feature extraction, helping machines understand and analyze images quickly and accurately.

- Feature extraction picks out important image details like edges and shapes, making it easier for machines to detect defects, sort objects, and make smart decisions.

- Using structured data improves speed and accuracy in many industries, reducing errors, cutting costs, and boosting efficiency in tasks like inspection and navigation.

- Hardware such as cameras and lighting works together with software algorithms to capture, process, and analyze images for reliable machine vision performance.

- Beginners can set up simple machine vision systems by following clear steps, focusing on good data preparation, hardware setup, and monitoring to achieve accurate results.

Structured Data Machine Vision System

What It Means

A structured data machine vision system helps computers make sense of images by turning them into organized information. In this system, feature extraction finds important parts of each image, such as shapes, colors, and edges. The system then arranges this information into tables or lists, making it easy for machine vision systems to use. Structured data has a fixed format, like rows and columns, so the system can quickly search and compare information.
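To make the "rows and columns" idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table name, column names, and values are hypothetical and chosen only for illustration; a real system would define its own schema.

```python
import sqlite3

# Hypothetical feature table: one row per inspected image.
rows = [
    ("img_001.png", 0.42, 118, "red"),
    ("img_002.png", 0.77, 342, "green"),
    ("img_003.png", 0.39, 97, "red"),
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE features ("
    "image_name TEXT, mean_brightness REAL, edge_pixels INTEGER, dominant_color TEXT)"
)
conn.executemany("INSERT INTO features VALUES (?, ?, ?, ?)", rows)

# Fixed columns make precise queries possible, e.g. "red items with few edge pixels".
query = (
    "SELECT image_name FROM features "
    "WHERE dominant_color = ? AND edge_pixels < ? ORDER BY edge_pixels"
)
matches = [name for (name,) in conn.execute(query, ("red", 120))]
conn.close()
print(matches)
```

Because every row has the same columns, the search is a single query rather than a scan over raw pixels.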

Unstructured data, on the other hand, does not follow a set pattern. Images, videos, and free-form text are examples of unstructured data. Machine vision systems find it harder to process unstructured data because there is no clear way to organize or search it. The system must work harder to find useful details in each image.

| Aspect | Structured Data | Unstructured Data |
|---|---|---|
| Data Organization | Organized in tables with rows and columns | No fixed format; handles diverse data types |
| Schema Requirements | Predefined schema; data must conform | Flexible or schema-on-read approach |
| Query Methods | SQL queries for precise data retrieval | Document queries, key-value lookups, full-text search |
| Scalability | Vertical scaling; schema changes can be complex | Horizontal scaling; adapts to large data volumes |
| Use Cases | Transactional systems, reporting, analytics | Content management, social media analysis, IoT |
| Performance Characteristics | Optimized for speed and consistency | Excels with large, varied data types |

Feature extraction acts as the bridge between raw image data and structured data. The system uses feature extraction to pick out the most important details from each image, turning them into values that fit neatly into a table. This process makes it much easier for machine vision systems to analyze, compare, and use the data.
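As a rough illustration of that bridge, the sketch below reduces a tiny grayscale image to one flat record that could become a table row. The image, the feature names, and the `edge_threshold` value are assumptions made for this example, and the edge measure is deliberately crude.

```python
from statistics import mean

# Hypothetical 4x4 grayscale image (0 = black, 255 = white): dark left half, bright right half.
image = [
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]

def extract_features(pixels, edge_threshold=50):
    """Reduce a 2D grayscale image to a flat record of named features."""
    flat = [value for row in pixels for value in row]
    # Count horizontal neighbor pairs whose brightness differs sharply (a crude edge measure).
    edge_pairs = sum(
        1
        for row in pixels
        for left, right in zip(row, row[1:])
        if abs(right - left) > edge_threshold
    )
    return {
        "height": len(pixels),
        "width": len(pixels[0]),
        "mean_brightness": mean(flat),
        "edge_pairs": edge_pairs,
    }

record = extract_features(image)
print(record)
```

Sixteen pixel values become four named numbers, which is the kind of compact record a table can hold.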

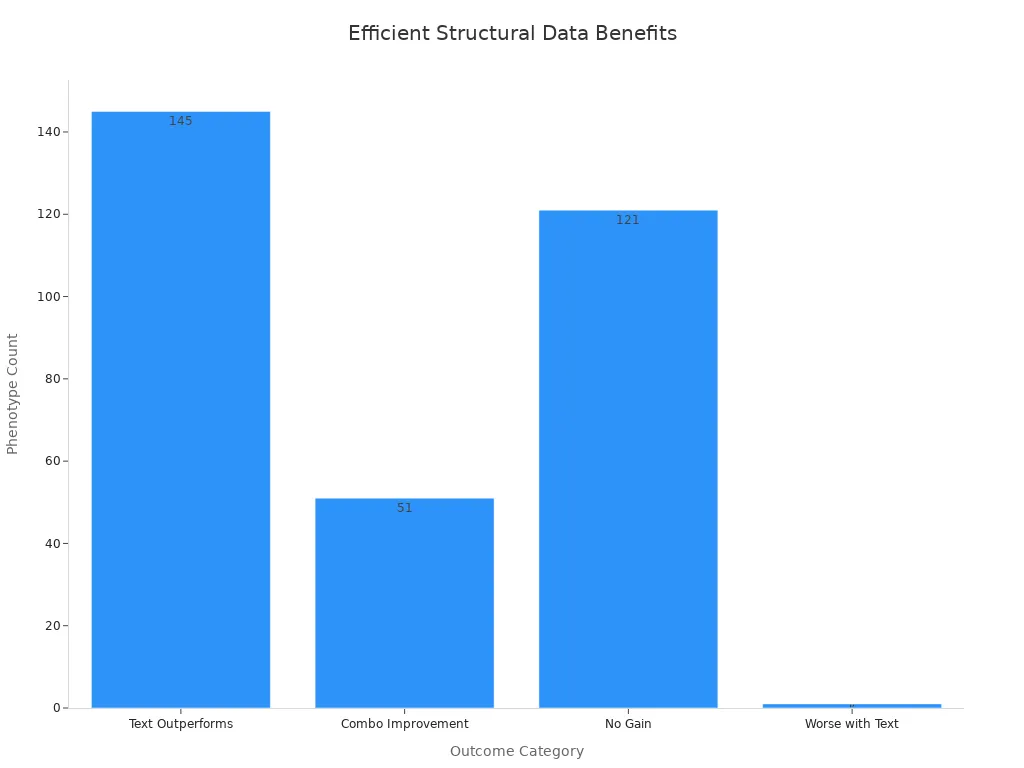

A real-world study in healthcare showed that combining structured data, like lab results, with unstructured data, such as clinical notes, improved the performance of machine vision systems in certain tasks. The study found that using both types of data together led to better results for some diseases, especially those related to the circulatory system and injuries.

Why It Matters

Structured data machine vision systems play a key role in making automation, inspection, and analysis faster and more reliable. When the system uses feature extraction to organize image data, it can spot defects, sort objects, and make decisions with high accuracy. Machine vision systems that rely on structured data can inspect products, count items, and check for errors much faster than humans.

- Inspection errors drop by over 90% compared to manual inspection.

- Picking accuracy increases by up to 25% when using 3D vision systems instead of 2D systems.

- Automated visual inspection reduces defect rates by up to 80%.

- Quality assurance labor costs decrease by about 50%.

- Robotic part-picking efficiency improves by over 40%.

Machine vision systems also help companies save time and money. For example, Walmart improved its inventory turnover by 25% using structured data machine vision systems. General Electric reduced inspection time by 75%. In agriculture, machine vision systems with feature extraction improved sorting accuracy and made results more consistent than manual inspection.

| Case Study / Source | Industry Sector | Quantitative Evidence | Key Outcome |
|---|---|---|---|
| Walmart | Retail | 25% improvement in inventory turnover | Increased operational efficiency |
| General Electric | Manufacturing | 75% reduction in inspection time | Faster and more efficient inspections |
| Crowe and Delwiche | Food & Agriculture | Improved sorting accuracy | Consistency over manual inspection |
| Zhang and Deng | Fruit Bruise Detection | Relative errors within 10% | High defect detection precision |
| Kanali et al. | Produce Inspection | Labor savings | Increased objectivity |
| ASME Systems Sales | Commercial Adoption | $65 million sales | Strong market trust |

Feature extraction makes these improvements possible. The system uses feature extraction to turn every image into structured data, which allows machine vision systems to work quickly and accurately. In manufacturing, the Zero Defect Manufacturing model uses structured data to predict problems before they happen. This approach helps companies fix issues early, reduce downtime, and keep production lines running smoothly.

Machine learning and artificial intelligence also use feature extraction to turn unstructured image data into structured data. This process helps machine vision systems find defects, control quality, and improve efficiency. Cloud technology gives these systems more power to process and analyze image data, making automation even better.

Tip: Feature extraction is the secret behind the speed and accuracy of modern machine vision systems. It helps the system turn every image into useful, structured data for smarter decisions.

Key Components

Hardware Overview

Structured data machine vision systems rely on several hardware components to capture and process images. The five main parts include illumination, image capture, image processing, analysis, and communication. Illumination provides the right lighting so the system can see objects clearly. Image capture uses cameras or sensors to collect image data. The image processing unit, often a computer or a dedicated processor, handles the heavy lifting of analyzing each image. Analysis modules interpret the processed data, while communication devices send results to other machines or operators.

Performance metrics help measure how well the hardware works. Important metrics include latency, which shows how fast the system processes each image, and throughput, which counts how many images the system can handle per second. Energy use and memory size also matter, especially for large-scale operations. For example, simple image processing tasks can run in less than 100 milliseconds on a Programmable Logic Controller (PLC), but more complex tasks like template matching may take over 4 seconds. This shows that execution time is a key factor in choosing the right hardware for real-time detection and analysis.

| Hardware Metric | Description | Importance in Machine Vision Systems |
|---|---|---|
| Latency | Time to process each image | Affects real-time detection and response |
| Throughput | Number of images processed per second | Determines system speed and efficiency |
| Energy Consumption | Power used during processing | Impacts operational cost and sustainability |
| Memory Footprint | Amount of memory needed for image data | Limits or expands processing capabilities |
| Accuracy | Precision of detection and analysis | Ensures reliable object detection |

Note: Choosing the right hardware ensures the system meets the demands of fast and accurate image processing.
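Latency and throughput are easy to measure once a processing step exists. The following is a minimal sketch using `time.perf_counter`; the `process_image` function is a hypothetical stand-in for real processing, and the synthetic images are invented for the example, so the numbers it prints say nothing about real hardware.

```python
import time

def process_image(pixels):
    """Stand-in for a real processing step: sums all pixel values."""
    return sum(sum(row) for row in pixels)

def benchmark(images):
    """Return (mean latency in ms per image, throughput in images per second)."""
    latencies = []
    start_all = time.perf_counter()
    for pixels in images:
        start = time.perf_counter()
        process_image(pixels)
        latencies.append(time.perf_counter() - start)
    total = time.perf_counter() - start_all
    mean_latency_ms = 1000 * sum(latencies) / len(latencies)
    throughput = len(images) / total
    return mean_latency_ms, throughput

# Hypothetical workload: 50 synthetic 64x64 images.
images = [[[(x + y) % 256 for x in range(64)] for y in range(64)] for _ in range(50)]
latency_ms, images_per_second = benchmark(images)
print(f"mean latency: {latency_ms:.3f} ms, throughput: {images_per_second:.0f} images/s")
```

Running the same benchmark on candidate hardware, with the real processing step swapped in, gives a like-for-like comparison.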

Software and Algorithms

Software and algorithms form the brain of machine vision systems. They control how the system processes image data, detects objects, and makes decisions. The software uses different algorithms for tasks like image segmentation, object detection, and feature extraction. Some algorithms work best with structured data, while others handle more complex or unstructured images.

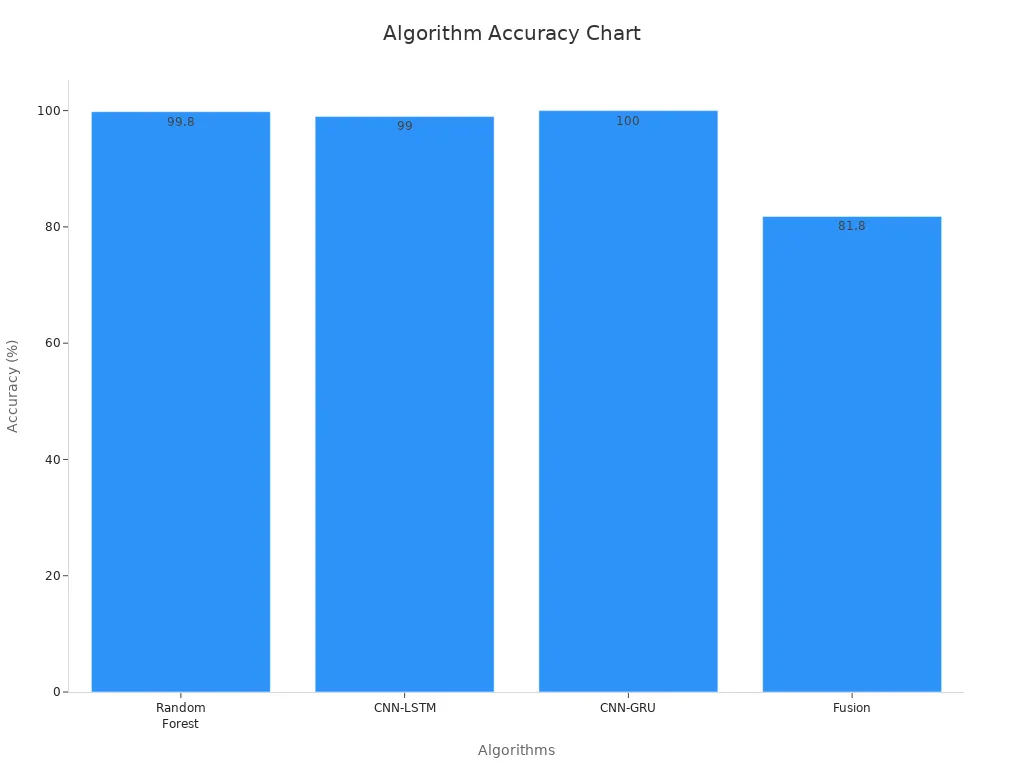

Traditional machine learning algorithms, such as Random Forest and Support Vector Machine, perform well on small, structured datasets. Deep learning-based feature extraction methods, like Convolutional Neural Networks (CNNs), excel at handling complex image data and can automatically learn important features. Hybrid models combine several algorithms to boost accuracy and stability.

| Algorithm Category | Typical Algorithms | Accuracy Range | Strengths | Limitations |
|---|---|---|---|---|
| Traditional Machine Learning | Random Forest, SVM, Logistic Regression | 47.2% – 99.8% | High accuracy on structured data; easy to interpret | Struggles with complex image data |
| Deep Learning | CNN, RNN, LSTM, CNN-LSTM, CNN-GRU | 60.7% – 100% | Handles complex images; automatic feature extraction | Needs lots of data and computing power |
| Dedicated Algorithms | Imitation learning, parameterization | 51.7% – 81.8% | Good for specific detection tasks | Limited to certain scenarios |

Deep learning-based feature extraction has become popular because it can find patterns in image data that humans might miss. These methods improve detection and classification accuracy, especially in tasks like object detection and image segmentation. Fusion strategies, which combine multiple algorithms, often lead to even better results.
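To show what "learning from structured features" can look like at its simplest, here is a sketch of a nearest-centroid classifier. This is a much simpler stand-in for the Random Forest and SVM models named above, not an implementation of either, and the feature values and labels are hypothetical.

```python
import math

# Hypothetical training rows: (mean_brightness, edge_density) -> label.
training = [
    ((0.82, 0.10), "ok"),
    ((0.78, 0.12), "ok"),
    ((0.80, 0.08), "ok"),
    ((0.45, 0.40), "defect"),
    ((0.50, 0.35), "defect"),
    ((0.40, 0.45), "defect"),
]

def fit_centroids(samples):
    """Average the feature vectors of each class."""
    grouped = {}
    for features, label in samples:
        grouped.setdefault(label, []).append(features)
    return {
        label: tuple(sum(column) / len(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

centroids = fit_centroids(training)
print(predict(centroids, (0.79, 0.11)))
print(predict(centroids, (0.48, 0.38)))
```

The model is easy to interpret because each class is summarized by one average feature vector, which is one reason simple methods remain attractive on small structured datasets.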

Feature Extraction

Feature extraction stands at the heart of structured data machine vision systems. This process transforms raw image data into meaningful information that algorithms can use for detection, classification, and analysis. The system uses feature extraction to pick out edges, corners, textures, and shapes from each image. Popular methods include edge detection (Sobel, Canny), Histogram of Oriented Gradients (HOG), SIFT, SURF, and deep learning-based feature extraction using CNNs.

- Feature extraction simplifies image data, making it easier for algorithms to process.

- Extracted features boost the accuracy and efficiency of object detection and image segmentation.

- Effective feature extraction helps the system handle changes in scale, lighting, and object rotation.

- Combining several feature extraction techniques, such as HOG, Gabor filters, and wavelet transforms, creates a strong foundation for image processing.

- Dimensionality reduction, like Principal Component Analysis (PCA), shrinks large feature sets while keeping important information.

- Preprocessing steps, including feature extraction, improve the quality of the dataset and lead to more reliable detection results.

- Ensemble methods, applied after feature extraction, further increase classification accuracy.
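Of the methods listed above, Sobel edge detection is the easiest to write out by hand. The sketch below applies the standard 3x3 Sobel kernels in plain Python; the test image and the threshold of 100 are assumptions for the example, and a production system would normally use an optimized library instead.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (GX) and vertical (GY) gradients.
GX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
GY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def sobel_magnitude(pixels):
    """Gradient magnitude for interior pixels; border pixels are left at 0."""
    height, width = len(pixels), len(pixels[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    value = pixels[y + ky - 1][x + kx - 1]
                    gx += GX[ky][kx] * value
                    gy += GY[ky][kx] * value
            out[y][x] = math.hypot(gx, gy)
    return out

# Hypothetical 5x5 image with a vertical edge between columns 1 and 2 (0-indexed).
image = [[0, 0, 255, 255, 255] for _ in range(5)]
edges = sobel_magnitude(image)
edge_pixels = sum(1 for row in edges for value in row if value > 100)
print(edge_pixels)
```

The count of strong-gradient pixels is itself a feature: a single number that can go straight into a structured record.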

Feature extraction also supports advanced tasks like structured light 3D scanning, where the system projects patterns onto objects and analyzes the reflected image to build a 3D model. This technique relies on accurate detection and segmentation of features in the image.

Tip: Strong feature extraction turns complex image data into structured information, making machine vision systems smarter and more reliable.

How It Works

Data Flow

A structured data machine vision system follows a clear path from image capture to decision-making. The process starts when the system uses cameras or sensors to collect image data. Good lighting helps the system see every detail. The next step is feature extraction. The system looks for important parts in each image, such as edges, shapes, and colors. Feature extraction turns raw image data into organized data that fits into tables. This structured data makes it easy for the system to compare, sort, and analyze images.

After feature extraction, the system uses algorithms for detection and classification. These algorithms check for defects, count objects, or sort items. The system then sends the results to other machines or operators. Fast processing and accurate feature extraction help the system make smart decisions in real time.
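The flow described above can be sketched as four small functions chained together. Everything here is hypothetical: the "camera" returns a hard-coded frame, and the contrast rule is an assumed decision criterion rather than a recommended one.

```python
def capture():
    """Stand-in for a camera: returns a hypothetical 3x3 grayscale frame."""
    return [[200, 200, 200], [200, 40, 200], [200, 200, 200]]

def extract(pixels):
    """Feature extraction: summarize the frame as named values."""
    flat = [value for row in pixels for value in row]
    return {"min": min(flat), "max": max(flat), "contrast": max(flat) - min(flat)}

def classify(features, max_contrast=100):
    """Decision rule (an assumption): a uniform surface should have low contrast."""
    return "defect" if features["contrast"] > max_contrast else "ok"

def report(features, verdict):
    """Output stage: format the message passed to an operator or another machine."""
    return f"verdict={verdict} contrast={features['contrast']}"

frame = capture()
features = extract(frame)
verdict = classify(features)
message = report(features, verdict)
print(message)
```

Keeping each stage as its own function mirrors the data flow and makes it easy to replace one stage, for example a better extractor, without touching the others.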

Machine-Vision Technology

Machine-vision technology gives the system the power to process image data quickly and with high accuracy. This technology uses advanced cameras, lighting, and computers to improve detection and feature extraction. In manufacturing, machine-vision technology increases inspection accuracy from 85-90% to over 99.5%. Inspection time drops from 2-3 seconds per unit to just 0.2 seconds. Defect rates drop by 75%, and inspection costs fall by 62%. Product returns decrease by 78%, showing better reliability.

| Application Area | Metric | Traditional Value | Machine Vision Value | Improvement |
|---|---|---|---|---|
| Manufacturing Inspection | Accuracy | 85-90% | 99.5%+ | 9.5 to 14.5 percentage points |
| Manufacturing Inspection | Time per unit | 2-3 seconds | 0.2 seconds | 10x to 15x faster |
| Defect Rate Reduction | Defect rate | N/A | 75% reduction | Significant improvement |
| Inspection Cost | Cost | N/A | 62% reduction | Major cost savings |
| Product Returns | Returns | N/A | 78% fewer returns | Enhanced reliability |
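The headline figures for manufacturing inspection can be checked with simple arithmetic. The short sketch below uses only the accuracy and timing values quoted in this section: the accuracy change works out to between 9.5 and 14.5 percentage points, and the speedup to between 10x and 15x.

```python
# Figures quoted in this section for manufacturing inspection.
old_accuracy = (85.0, 90.0)      # percent, traditional inspection
new_accuracy = 99.5              # percent, machine vision
old_seconds = (2.0, 3.0)         # seconds per unit, traditional
new_seconds = 0.2                # seconds per unit, machine vision

# Accuracy gain in percentage points (a difference of two percentages).
gain_points = [new_accuracy - old for old in old_accuracy]

# Speedup factor (old time divided by new time).
speedup = [old / new_seconds for old in old_seconds]

print(f"accuracy gain: {min(gain_points):.1f} to {max(gain_points):.1f} percentage points")
print(f"speedup: {min(speedup):.0f}x to {max(speedup):.0f}x")
```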

Machine-vision technology also helps in agriculture and retail. Crop yields rise by 10-15%, and farms cut their environmental footprint by 35%. In stores, the system improves queue detection and shopper behavior analysis. Feature extraction and detection work together to boost accuracy and efficiency in every field.

Note: Machine-vision technology relies on strong feature extraction to turn image data into structured data for fast and accurate detection.

3D Image Reconstruction

3D image reconstruction adds another layer of power to machine-vision technology. The system uses special cameras and projectors to capture images from different angles. Feature extraction finds key points in each image. The system then combines this data to build a 3D model of the object. This reconstruction helps the system measure size, shape, and volume with high accuracy.
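One common way to turn matched key points into depth is triangulation. The sketch below assumes a rectified two-view setup under the pinhole camera model, where depth is Z = f * B / d (focal length times baseline, divided by disparity). The rig parameters and disparities are hypothetical, and real systems also need calibration and lens-distortion correction, which are omitted here.

```python
def depth_from_disparity(focal_length_px, baseline_mm, disparity_px):
    """Depth Z = f * B / d for a rectified two-view setup (pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both views")
    return focal_length_px * baseline_mm / disparity_px

# Hypothetical rig: 800 px focal length, 60 mm between the two viewpoints.
matches = {"corner_a": 40.0, "corner_b": 80.0}  # feature name -> disparity in pixels
depths_mm = {name: depth_from_disparity(800.0, 60.0, d) for name, d in matches.items()}
print(depths_mm)
```

A larger disparity means the point is closer, so small errors in feature matching matter most for distant objects.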

3D image reconstruction improves detection in complex tasks. For example, it helps robots pick up parts with the right grip or lets cars see obstacles in real time. The system uses feature extraction at every step to make sure the 3D model matches the real object. Accurate reconstruction leads to better decisions and safer automation.

Navigation becomes easier with 3D image reconstruction. Robots and vehicles use the 3D data to move safely and avoid obstacles. Feature extraction and reconstruction work together to give the system a clear view of the world. This process supports smart navigation and precise detection in many industries.

Tip: 3D image reconstruction, combined with feature extraction, gives machine-vision technology the ability to see and understand the world in three dimensions.

Getting Started

Setup Steps

Setting up a structured data machine vision system for surgical applications requires careful planning and attention to detail. Beginners can follow these steps to work toward accurate, real-time surgical navigation:

- Create and activate a virtual environment to keep project files organized and separate from other tasks.

- Install all required packages using a requirements file. This ensures the system has the right tools for feature extraction and automated registration.

- Gather and prepare data by identifying sources, collecting raw images, and cleaning them. Proper data preparation improves accuracy in surgical navigation and tool tracking system performance.

- Split the data into training and testing sets using a dedicated script. This helps measure accuracy and supports instant flash registration.

- Organize the project with modular scripts for data ingestion, cleaning, training, and prediction. This structure makes it easier to update feature extraction methods and registration steps.

- Run the main script to load data, clean it, train the model, save results, and evaluate performance. Monitoring logs and printed metrics helps verify accuracy and feature extraction quality.

- Annotate images using manual, automated, or hybrid methods. Quality control checks, such as multi-tier reviews and spot checks, ensure reliable feature extraction and registration.

- Export annotated data in formats like JSON or CSV, including metadata for navigation-on-demand and continuous updates.
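The data-splitting step from the list above could be sketched as follows. The function name, the 80/20 ratio, and the file names are assumptions for illustration; the article does not specify them.

```python
import random

def train_test_split(items, test_fraction=0.2, seed=0):
    """Shuffle a copy of the items and split it into (train, test) lists."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    test_size = max(1, round(len(shuffled) * test_fraction))
    return shuffled[test_size:], shuffled[:test_size]

# Hypothetical file names standing in for cleaned, annotated images.
image_files = [f"image_{i:03d}.png" for i in range(10)]
train_files, test_files = train_test_split(image_files)
print(len(train_files), len(test_files))
```

Fixing the seed means the same images land in the test set on every run, so accuracy figures stay comparable between experiments.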

Tip: Beginners should monitor progress and review evaluation metrics to confirm that feature extraction and registration steps work as expected.

Navigation Tips

Choosing the right hardware and configuring software play a big role in surgical navigation systems. Cameras, lenses, lighting, and processing hardware all affect feature extraction and accuracy. Beginners should position cameras and lighting carefully to avoid errors in navigation and registration. Calibration with reference objects ensures the tool tracking system works with high accuracy.
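Calibration with a reference object often comes down to a scale factor. The sketch below assumes the simplest case, where the reference object and the measured object lie in the same plane, parallel to the sensor and at the same distance. The gauge block size and pixel counts are hypothetical.

```python
def mm_per_pixel(reference_length_mm, reference_length_px):
    """Scale factor from a reference object of known physical size."""
    if reference_length_px <= 0:
        raise ValueError("reference length in pixels must be positive")
    return reference_length_mm / reference_length_px

def to_mm(length_px, scale):
    """Convert a pixel measurement to millimetres using the calibration scale."""
    return length_px * scale

# Hypothetical calibration: a 50 mm gauge block spans 400 pixels in the image.
scale = mm_per_pixel(50.0, 400.0)
tool_width_mm = to_mm(96.0, scale)
print(f"{scale} mm/px, tool width {tool_width_mm} mm")
```

If the camera or the working distance moves, the scale changes, which is why the setup needs a stable environment and periodic recalibration.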

A well-annotated dataset supports strong feature extraction and automated registration. Setting image parameters like contrast and brightness helps the system detect features needed for surgical navigation. Software should include modules for image acquisition, processing, and navigation-on-demand. Integration with data systems and PLCs allows real-time updates and instant flash registration.

| Aspect | Details |
|---|---|
| Hardware Components | Cameras, lenses, lighting, CPUs/GPUs, communication interfaces |
| Setup | Careful alignment and stable environment for accurate feature extraction |
| Calibration | Adjust focus, lighting, and algorithms using reference objects for precise registration |
| Software Configuration | Set image parameters, define inspection criteria, integrate with data systems |
| Data Flow | Structured from image capture to decision making and output |

Case studies show that these steps lead to success. For example, in surgical navigation, automated registration and feature extraction improve accuracy and reduce errors. Real-time accurate navigation helps surgeons make better decisions. Systems with navigation-on-demand and instant flash registration save time and increase safety.

Note: Building a strong foundation in feature extraction, navigation, and registration helps beginners set up reliable surgical systems.

Structured data machine vision systems help machines organize and understand images. These systems use feature extraction to turn raw images into useful information. Beginners can set up basic systems and see real results.

- Easy setup steps guide new users.

- Practical benefits appear in many industries.

- Feature extraction improves speed and accuracy.

Anyone can start learning about machine vision. With curiosity and practice, they can build smart systems that see the world in new ways. 🚀

FAQ

What is feature extraction in machine vision?

Feature extraction helps the system find important parts of an image, like edges or shapes. The system uses these features to understand and organize the image. This step makes it easier for computers to compare and analyze pictures.

How does structured data help machine vision systems?

Structured data puts information into tables or lists. This format lets the system search, sort, and compare images quickly. Machines can make decisions faster and with fewer mistakes when they use structured data.

Can beginners set up a machine vision system at home?

Yes! Beginners can start with a simple camera and free software. They can follow step-by-step guides to capture images and try basic feature extraction. Many online resources help new users learn and practice.

What industries use structured data machine vision?

Many industries use these systems. Examples include manufacturing, healthcare, agriculture, and retail. Each field uses machine vision to inspect products, sort items, or guide robots.

Do machine vision systems need special hardware?

Most systems use cameras, lights, and computers. Some advanced systems need special sensors or 3D cameras. The right hardware depends on the task and the level of detail needed.

Tip: Start simple. Upgrade hardware as skills and needs grow.
