Can a machine spot a tiny defect faster than a human? Artificial neural networks, inspired by the human brain, help machines "see" and make smart decisions. In factories, a machine vision system built on artificial neural networks can detect flaws in real time, cut inspection time, and reduce errors. These AI-powered systems adapt quickly to new products and improve accuracy. For example, BMW uses AI vision to learn new vehicle lines in 48 hours instead of weeks. The table below shows how artificial intelligence and vision technology boost automation and precision:

| Example Context | Measurable Improvement |
|---|---|
| Real-time defect detection | Reduces manual inspection time and human error |
| License plate recognition | Achieves up to 99% accuracy in reading plates |
| Process optimization | Cuts material waste by 15% |

Key Takeaways

- Artificial neural networks help machines see and recognize patterns, making tasks like defect detection faster and more accurate than humans.

- Deep learning, especially convolutional neural networks, improves image analysis by learning from large datasets and finding complex details.

- Different vision systems (1D, 2D, 3D) suit different tasks; choosing the right one boosts performance and efficiency.

- Preparing large, well-labeled datasets and using optimization techniques help neural networks learn better and run faster on limited hardware.

- Machine vision powered by neural networks benefits many industries but faces challenges like data needs, high computing power, and difficulty explaining decisions.

Artificial Neural Networks Machine Vision System

What Are Artificial Neural Networks?

Artificial neural networks are computer models inspired by the way the human brain works. Each network has layers of simple units called neurons. These neurons connect to each other and pass information forward. The network learns by adjusting the strength of these connections. This process helps the network recognize patterns in data.
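To make "adjusting the strength of these connections" concrete, here is a minimal sketch of a single artificial neuron trained with gradient descent. The task (learning logical OR), the learning rate, and the function names are illustrative assumptions, not part of any system described in this article.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    """Weighted sum of the inputs passed through the activation function."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_neuron(samples, epochs=2000, lr=0.5):
    """Train one sigmoid neuron on (inputs, target) pairs with gradient descent."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # For a sigmoid output with cross-entropy loss, the gradient with
            # respect to the weighted sum is simply (output - target).
            error = predict(weights, bias, inputs) - target
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w - lr * error * x for w, x in zip(weights, inputs)]
            bias -= lr * error
    return weights, bias


# Hypothetical toy task: learn logical OR from four labeled examples.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_neuron(OR_SAMPLES)

for inputs, target in OR_SAMPLES:
    print(inputs, target, round(predict(weights, bias, inputs), 3))
```

Real vision networks stack many such neurons into layers and train them with the same basic idea, applied to millions of connections at once.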

Researchers have tested artificial neural networks in many fields. For example, one study used a neural-network-based machine vision system to analyze coal samples. The system looked at 80 images from different angles and used 280 features from each image. The network learned to predict coal properties like carbon and moisture content. The results showed strong accuracy, with R-squared values between 0.84 and 0.92, meaning the network explained 84% to 92% of the variation in the measured properties. The study also compared the network to other models, such as support vector regression and Gaussian process regression, and the neural network performed as well as or better than these methods.
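The R-squared metric quoted above can be computed in a few lines. The sketch below uses made-up carbon-content values purely for illustration; they are not data from the coal study.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - (residual variation / total variation)."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical measured vs. predicted carbon content (percent).
measured = [60, 65, 70, 75, 80]
predicted = [62, 64, 71, 73, 80]

score = r_squared(measured, predicted)
print(round(score, 2))  # 0.96
```

A value of 1.0 would mean perfect predictions, while a value near 0 would mean the model does no better than always guessing the average.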

The table below shows how researchers define and test artificial neural networks:

| Aspect | Description |
|---|---|
| ANN Definition | In these tests, artificial neural networks serve as models for classifying data into two groups. |
| Structural Design Factors | Network size and added noise affect how well the network learns. |
| Data Characteristics | Tests use data with different patterns and noise levels. |
| Performance Metrics | Learning ability, reliability, and accuracy are measured. |
| Key Findings | Larger networks and added noise can change how well the network predicts. |
| Numerical Data | Results show how accuracy changes with network size and noise. |
| Application Domains | Used in finance, marketing, and medical fields. |

Some networks use special designs. For example, genetic algorithms can help networks predict customer churn in wireless services. Medium-sized networks often give the best balance between accuracy and generalization.

Role in Machine Vision

Artificial neural networks play a key role in machine vision. These networks help machines "see" by analyzing images and videos. A machine vision system built on them can find patterns, detect objects, and even spot tiny defects that humans might miss.

Many studies show that neural networks improve machine vision tasks. In medical imaging, convolutional neural networks (a type of artificial neural network) help doctors find diseases in X-rays and scans. For example, CNNs have improved the detection of skin cancer, diabetic retinopathy, and Alzheimer’s disease. Peer-reviewed research shows that these networks often perform better than older models and sometimes even better than human experts.

Other types of networks, like variational autoencoders and generative adversarial networks, also help machine vision. These networks can make medical images clearer and help detect tumors. For example, variational autoencoders improved medical image resolution by 35%. Generative adversarial networks combine different features to make images sharper and help doctors find problems faster.

Researchers also apply neural-network-based machine vision systems to real-world tasks. For example, deep reinforcement learning networks help machines recognize objects and make decisions. One method reached an AUC score of 0.89 in classification tasks. Another network achieved 95.43% accuracy in breast ultrasound image analysis. These results show that neural networks can help machines work faster and more accurately.

Tip: Machine vision systems based on artificial neural networks can adapt to new tasks by learning from more data. This makes them useful in many industries, from healthcare to manufacturing.

Neural-network-based machine vision continues to grow in importance. As networks become larger and smarter, they help machines see and understand the world with greater accuracy.

Machine Learning and Computer Vision

Deep Learning in Vision

Machine learning helps computers solve many vision problems. In the past, engineers used simple machine learning algorithms to teach a machine how to find objects in pictures. These methods worked for basic tasks, but they often missed small details. Deep learning changed this by using deep neural networks with many layers. These networks learn from large sets of images and find complex patterns.

Deep learning now drives advances in computer vision. It helps with image classification, object detection, and segmentation. For example, a deep neural network can look at a picture and say if it shows a cat or a dog. It can also find where the animal is in the image. Deep learning models learn better as they see more data. This makes them very useful for AI systems that need to work with many types of images.

A key difference exists between machine vision and computer vision. Machine vision often means using cameras and computers to inspect products in factories. Computer vision is a broader field. It includes teaching computers to understand images and videos in many areas, such as healthcare, security, and robotics. Neural networks bridge these two fields. They help both machines and computers learn to see and understand the world.

A case study compared traditional machine learning algorithms with neural network-based computer vision on breast ultrasound images. Traditional methods needed less time to set up, and the neural networks reached comparable accuracy, as the table below shows. Deep learning can match established methods on this task, even if it takes more effort to train the network.

| Model Type | Dataset Type | Task Description | Accuracy | AUC | AUCPR |
|---|---|---|---|---|---|
| Traditional ML classifiers | Breast ultrasound images | Benign vs malignant breast lesion classification | ~0.85 | ~0.91 | N/A |
| CNN (e.g., ResNet50, InceptionV3) | Breast ultrasound images | Same classification task | 0.85 | 0.91 | N/A |
| AutoML Vision (Google) | Breast ultrasound images | Automated deep learning classifier | 0.85 | N/A | 0.95 |

Note: Neural network-based computer vision methods can match or beat traditional machine learning in accuracy and precision.
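The AUC column in the table above measures how well a classifier ranks positive cases above negative ones. A minimal sketch of that calculation follows; the labels and scores are invented for illustration and do not come from the breast ultrasound study.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve, computed as the fraction of
    (positive, negative) pairs where the positive case scores higher.
    Ties count as half a win."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical classifier output: 1 = malignant, 0 = benign.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]

auc = roc_auc(labels, scores)
print(round(auc, 2))  # 0.89
```

An AUC of 0.5 corresponds to random guessing and 1.0 to a perfect ranking, which is why two models with the same accuracy can still differ in AUC.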

Convolutional Neural Networks (CNNs)

Convolutional neural networks are a special type of deep neural network. They work very well for image classification and object detection. A convolutional neural network uses layers that scan images for shapes, colors, and textures. This helps the network learn what makes each object unique.
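The "scanning" step can be sketched as a small filter sliding across the image. The example below uses a hand-written vertical-edge filter on a tiny made-up image; in a real CNN the filter values are learned during training rather than chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and return
    the weighted sum at every position, as a CNN layer does."""
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(k_h):
                for j in range(k_w):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Hypothetical 4x6 grayscale image: dark on the left, bright on the right.
IMAGE = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# A filter that responds to dark-to-bright transitions from left to right.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(IMAGE, VERTICAL_EDGE)
print(feature_map)  # [[0, 27, 27, 0], [0, 27, 27, 0]]
```

The output is large only where the filter overlaps the boundary between the dark and bright regions, which is how a layer highlights the features it has learned to look for.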

Researchers tested CNNs on many tasks. For example:

- CNNs reach over 98% accuracy on the MNIST image classification task.

- On the CIFAR-10 dataset, deeper CNNs with more layers and dropout reach about 78-80% accuracy.

- Adding more layers and filters helps CNNs learn better features from images.

CNNs also work well for other vision tasks. They can find objects in crowded scenes and help AI systems understand complex images. Deep learning with CNNs has made computer vision much stronger than before. Other networks, such as recurrent neural networks, help with tasks that use sequences, such as video analysis.

Researchers found that changing the depth and width of a CNN affects how well it learns. More layers can help, but too many can lower accuracy. Adjusting the network helps balance speed and performance.
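One way to see the cost of extra depth and width is to count trainable parameters. The sketch below uses the standard parameter count for a 2D convolutional layer with a bias term; the two layer configurations are hypothetical and chosen only to show the trend.

```python
def conv_params(kernel_size, in_channels, out_channels):
    """Weights plus one bias per filter for a square 2D convolution."""
    return (kernel_size * kernel_size * in_channels + 1) * out_channels


def stack_params(input_channels, widths, kernel_size=3):
    """Total parameters for a stack of conv layers with the given filter counts."""
    total = 0
    in_ch = input_channels
    for out_ch in widths:
        total += conv_params(kernel_size, in_ch, out_ch)
        in_ch = out_ch  # this layer's filters become the next layer's input channels
    return total


# Hypothetical networks processing RGB images (3 input channels).
shallow = stack_params(3, [16, 32])
deep = stack_params(3, [16, 32, 64, 64])

print(shallow)  # 5088
print(deep)     # 60512
```

Doubling the number of layers here increases the parameter count roughly twelvefold, which means more memory, more computation, and more data needed to train without overfitting.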

System Types and Architectures

1D, 2D, and 3D Vision Systems

Machine vision systems come in different types. Each type works best for certain tasks. A 1D vision system looks at data in a single line. Factories use 1D systems to inspect wires or printed labels. These systems capture changes along one direction. They work fast but cannot see shapes or patterns.
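As a rough illustration of 1D inspection, the sketch below scans a single line of brightness readings and flags sudden jumps. The readings, the threshold, and the function name are assumptions made for this example.

```python
def find_jumps(profile, threshold):
    """Return the indices where brightness changes sharply
    compared with the previous reading."""
    return [
        i
        for i in range(1, len(profile))
        if abs(profile[i] - profile[i - 1]) >= threshold
    ]


# Hypothetical brightness readings along a wire; the dip marks a damaged spot.
line_scan = [200, 201, 199, 200, 120, 118, 200, 202]

print(find_jumps(line_scan, threshold=50))  # [4, 6]
```

The two flagged positions mark where the dark region starts and ends. Because only one line is processed, the check is fast, but it reveals nothing about the defect's shape.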

A 2D vision system captures flat images. Most cameras use 2D vision. These systems help with tasks like barcode reading, surface inspection, and object counting. They can find shapes, colors, and defects on flat surfaces.

A 3D vision system adds depth. It uses special cameras or sensors to measure height, width, and depth. This helps robots pick up objects or check if parts fit together. 3D vision systems work well for tasks like bin picking, volume measurement, and quality control.
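Volume measurement is a simple example of what depth data makes possible. The sketch below assumes the 3D sensor delivers a height map, that is, a grid of height readings with a known pixel size. Both the data and the sensor format are hypothetical.

```python
def volume_from_height_map(height_map_mm, pixel_size_mm):
    """Approximate volume by treating each pixel as a small column:
    height times the area the pixel covers."""
    pixel_area = pixel_size_mm ** 2
    total_height = sum(h for row in height_map_mm for h in row)
    return total_height * pixel_area


# Hypothetical scan of a 5 mm tall block on a flat conveyor (heights in mm).
HEIGHT_MAP = [
    [0, 0, 0, 0],
    [0, 5, 5, 0],
    [0, 5, 5, 0],
    [0, 0, 0, 0],
]

print(volume_from_height_map(HEIGHT_MAP, pixel_size_mm=2.0))  # 80.0
```

A 2D camera looking at the same block from above would see its outline but could not tell a 5 mm block from a 50 mm one.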

A study on EEG signal classification offers a useful comparison of 1D, 2D, and 3D data representations. Combining 2D and 1D features gave a mean accuracy of 79.60% for emotion classification, and the 3D approach added more detail by using spatial, spectral, and temporal information. Similar trade-offs apply to vision systems. 1D systems work fast but miss spatial details. 2D systems see patterns but not depth. 3D systems give the most information but need more processing power.

| Performance Aspect | Description |
|---|---|
| Accuracy and Recall | Measure how well the system finds and identifies objects. |
| Mean Average Precision (mAP) | Shows object detection accuracy, used in self-driving cars. |
| Energy Consumption | Compares how much power each system uses. |
| Fault Tolerance | Tests if the system keeps working when errors happen. |
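The accuracy and recall metrics in the table above come from comparing predictions with known labels. A minimal sketch follows, using invented inspection results where 1 means "defect" and 0 means "good part".

```python
def classification_metrics(true_labels, predicted_labels):
    """Return accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # defects found
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # defects missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # good parts passed
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
    }


# Hypothetical results for ten inspected parts.
actual = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

metrics = classification_metrics(actual, predicted)
print(metrics)  # {'accuracy': 0.8, 'precision': 0.75, 'recall': 0.75}
```

In inspection work, recall often matters most, because a missed defect usually costs more than a false alarm.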

Tip: Choosing the right vision system depends on the task. Simple inspections may only need 1D or 2D, while complex tasks benefit from 3D.

Novel Architectures

New types of machine vision architectures keep emerging. Some systems use lensless opto-electronic neural networks. These networks process images without a traditional lens, making them smaller and faster. Engineers also design hardware like Google’s TPU and reconfigurable accelerators to run neural networks more efficiently.

Benchmarking tools such as QuTiBench help compare these new architectures. They test how well each system works on different hardware. Designers look at speed, energy use, and cost. Some architectures use multi-modal learning, combining data from cameras, LIDAR, and radar. This approach improves accuracy in fields like autonomous vehicles and security.

Researchers also study how the structure of a neural network affects vision tasks. Networks with higher latent dimensionality often model biological vision better. These insights help create systems that see and understand the world more like humans do.

Note: Novel architectures and benchmarking tools help engineers build better vision systems for real-world use.

Training and Learning

Data Preparation

Data preparation is a key step in machine vision. The process starts with collecting input images or signals. Each input must be clear and labeled correctly. Good data processing helps the network learn patterns and make accurate predictions. Researchers found that increasing the size of the training data improves both learning and accuracy. For example, when the training data size grew from 20% to 300% of the original set, classification accuracy increased. Some networks, like MCDCNN, reached above-chance accuracy even with smaller datasets. Doubling or tripling the training data led to even better results. This shows that more input data helps the network learn faster and reduces errors in output.

Studies also show that dataset size and features affect model accuracy. Larger datasets help reduce overfitting and improve learning. Hyperparameter tuning, such as adjusting the SVM kernel or KNN k-value, can further boost performance. The table below summarizes these findings:

| Factor | Impact on Learning and Accuracy |
|---|---|
| Dataset size | Larger size improves accuracy |
| Meta-level features | Influence model performance |
| Hyperparameter tuning | Optimizes learning outcomes |
| Data processing | Reduces errors and speeds up training |

Researchers also tested different data preparation methods. They found that dataset augmentation and adding physical constraints to the network architecture improved both speed and accuracy. Some models achieved better accuracy with lower inference time and memory use. This balance helps the network process input quickly and deliver reliable output.

- Increasing training dataset size lowers error rates.

- Scaling attention complexity improves accuracy as data grows.

- Data augmentation and physical constraints help the network learn better.

- Some configurations achieve high accuracy with less memory and faster output.
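Data augmentation, mentioned above, creates extra training examples by transforming images that are already labeled. The sketch below shows three common transformations on a tiny image stored as nested lists. The choice of transformations is an assumption for illustration, not the method used in the studies cited.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image):
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate_90_clockwise(image):
    """Rotate the image a quarter turn clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image):
    """Return the original image plus three transformed copies."""
    return [
        image,
        flip_horizontal(image),
        flip_vertical(image),
        rotate_90_clockwise(image),
    ]


# Hypothetical 2x2 grayscale image.
SAMPLE = [
    [1, 2],
    [3, 4],
]

for variant in augment(SAMPLE):
    print(variant)
```

Each copy keeps the original label, so one labeled image becomes four. This only works when the transformation does not change the meaning of the image: a flipped scratch is still a scratch, but flipped text is no longer readable text.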

Optimization Techniques

Optimization techniques help the network run more efficiently. These methods make the network smaller, faster, and less power-hungry. Quantization is a popular technique. It reduces model size by 60-70%, making processing faster. Inference speed can double, with latency dropping from 40 ms to 20 ms. Energy use per inference also drops by about 50%. However, there may be a drop in visual quality of around 8-10% for some computer vision tasks.

The table below shows how optimization affects learning and output:

| Metric | Quantitative Impact |
|---|---|
| Model Size Reduction | 60-70% smaller models via quantization |
| Inference Speed Improvement | Up to 2x faster inference (e.g., 40 ms to 20 ms) |
| Energy Consumption Reduction | About 50% less energy per inference (e.g., 4 J to 2 J) |
| Accuracy Impact | 8-10% drop in visual quality for some tasks |

Quantization-Aware Training (QAT) helps keep accuracy high by including quantization effects during learning. Post-Training Quantization (PTQ) is simpler but may lower accuracy. Calibration methods, such as max, entropy, and percentile, choose the value range the network will use. Within that range, quantization relies on scaling, clipping, and rounding to map floating-point values to integers. These methods allow the network to process input quickly and deliver accurate output, even on devices with limited resources.
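The scaling, clipping, and rounding steps can be sketched with a simple symmetric 8-bit scheme that uses max calibration. The weight values are invented, and production toolkits handle details (per-channel scales, zero points, activation ranges) that this sketch leaves out.

```python
def max_calibration_scale(values, qmax=127):
    """Max calibration: pick the scale so the largest magnitude maps to qmax."""
    return max(abs(v) for v in values) / qmax


def quantize(values, scale, qmin=-128, qmax=127):
    """Scale each value, round to the nearest integer, and clip to the int8 range."""
    return [max(qmin, min(qmax, round(v / scale))) for v in values]


def dequantize(q_values, scale):
    """Map the stored integers back to approximate floating-point values."""
    return [q * scale for q in q_values]


# Hypothetical floating-point weights from one layer.
weights = [-1.27, -0.5, 0.0, 0.25, 1.0]

scale = max_calibration_scale(weights)
q_weights = quantize(weights, scale)
restored = dequantize(q_weights, scale)

print(q_weights)  # [-127, -50, 0, 25, 100]
print(quantize([2.0], scale))  # [127]: a value outside the calibrated range is clipped
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale for values inside the calibrated range. Values outside that range are clipped, which is why the choice of calibration method affects accuracy.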

Tip: Choosing the right optimization technique depends on the network, the input data, and the desired output speed and accuracy. Careful tuning ensures the best balance for each application.

Applications and Challenges

Industrial and Commercial Uses

Industries use computer vision systems for many applications. In manufacturing, companies rely on AI-powered vision for defect detection, assembly guidance, and scene understanding. The food and beverage industry leads in adopting machine vision because it needs fast and accurate inspection for sorting and labeling. The Asia Pacific region, led by countries such as China and Japan, has the largest market share in machine vision due to rapid manufacturing growth and early adoption of quality-focused systems.

Recent advancements in AI vision sensors and high-speed imaging cameras have improved inspection accuracy and automation efficiency. For example, robotic pick-and-place systems in snack food manufacturing reduced injury risk scores from 14 to 4 and improved worker safety. In micro-electronics, high-speed robotic systems lowered risk scores from 14 to 2, with no injuries reported. These applications show how computer vision increases productivity and reduces costs.

| Case Study | Application | Initial Risk Score | Final Risk Score | Productivity Impact |
|---|---|---|---|---|
| #15 | Snack Food Pick & Place | 14 | 4 | Fewer injuries |
| #19 | Micro-electronics Pick & Place | 14 | 2 | No injuries |
| #20 | Metal Cylinder Deburring | 18 | 6 | No change |

Businesses expect a 31% reduction in costs from automation, up from 24% in 2020.

Benefits and Limitations

Computer vision powered by artificial neural networks brings many benefits. These systems can learn complex patterns for tasks like object detection and scene analysis. They handle noisy input data well and do not need assumptions about data distributions. Companies can extend these systems by stacking layers, which allows for more advanced learning and better output.

However, challenges remain. Neural networks often work as "black boxes," making it hard to understand how they reach decisions. They need large labeled datasets for supervised learning, which can be expensive and time-consuming to collect. Training deep models requires high computational resources and longer times. Sometimes, unrealistic expectations or poor application analysis can lead to project failure. Budget constraints may also force companies to choose less suitable hardware, affecting output quality.

| Benefits | Limitations |
|---|---|
| Learns complex, non-linear patterns | Hard to interpret decision process |
| Robust with noisy input | Needs large labeled datasets |
| Flexible and extendable | High computational and training requirements |

Note: Computer vision continues to advance, but companies must address data, reliability, and scalability challenges to achieve the best results.

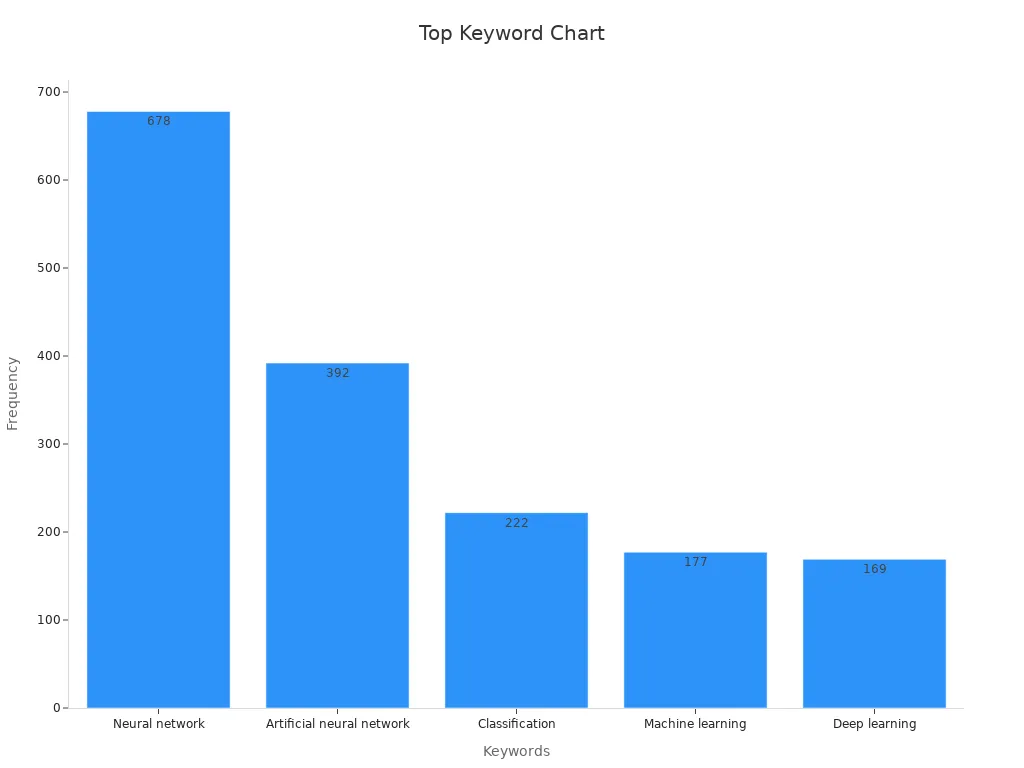

Artificial neural networks have changed how machines see and understand the world. Researchers studied 10,661 articles and found a strong rise in deep learning and artificial intelligence from 2007 to 2019. The table below shows this growth in research focus:

| Aspect | Details |
|---|---|
| Total Articles Analyzed | 10,661 |
| Top Keywords by Frequency | Neural network (678), Artificial neural network (392), Deep learning (169) |
| Deep learning growth | 544 → 702 → 1110 |

These advances bring better accuracy and new applications. Challenges remain, such as data needs and system reliability. Many industries can benefit by exploring artificial intelligence vision technologies for future solutions.

FAQ

What is the main difference between machine vision and computer vision?

Machine vision focuses on industrial tasks like inspection and sorting. Computer vision covers a wider range, including medical imaging and robotics. Neural networks help both fields by improving accuracy and speed.

How do artificial neural networks learn to recognize images?

Artificial neural networks learn by adjusting connections between neurons. They process many labeled images and find patterns. Over time, the network improves its ability to identify objects and features.

Why do machine vision systems need large datasets?

Large datasets help networks learn more patterns and reduce errors. More data means better accuracy and less chance of missing important details. This makes the system more reliable in real-world tasks.

Can neural networks work in real-time applications?

Yes, neural networks can process images quickly. With optimization techniques, they handle tasks like defect detection or object tracking in real time. This helps industries improve safety and productivity.

What are some challenges in using neural networks for machine vision?

Neural networks need lots of data and computing power. They can be hard to understand and explain. Sometimes, they make mistakes if the input data changes too much. Careful design and testing help reduce these problems.
