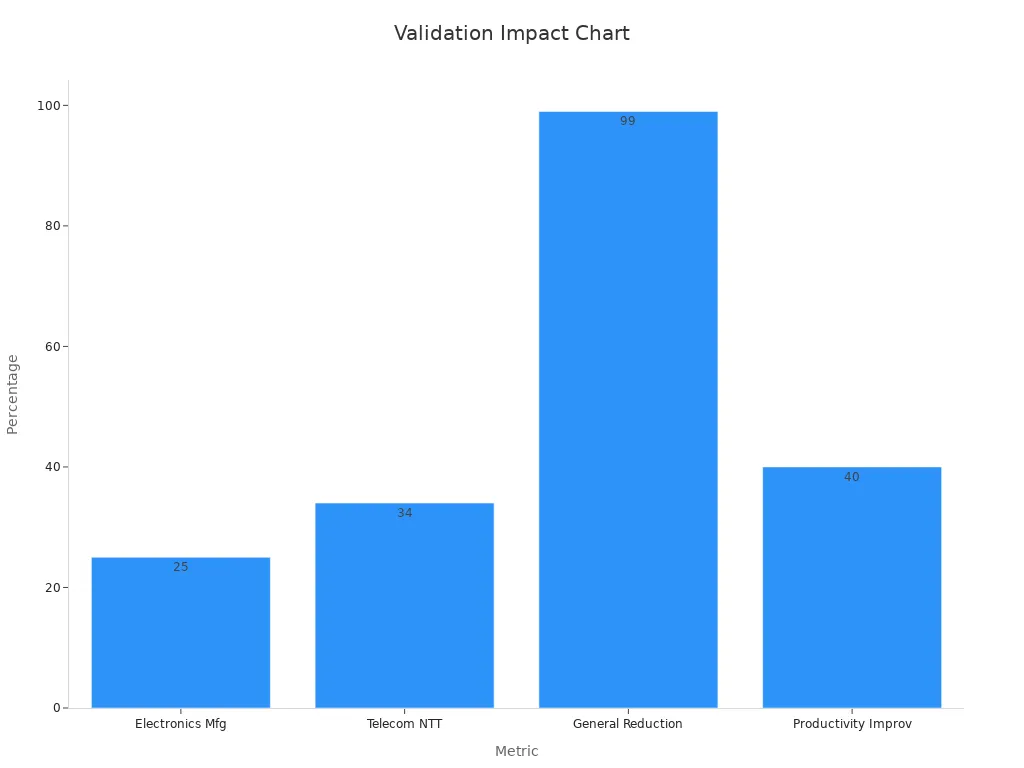

Validation forms the backbone of any compliant machine vision system, underpinning reliable inspection and consistent quality. In one notable case, a manufacturer faced regulatory penalties when an unvalidated vision system failed to detect 25% of critical defects, leading to costly recalls. Proper validation can reduce inspection errors by over 90%, cut defect rates by up to 80%, and confirm that the system meets rigorous quality standards.

A compliant vision system not only matches but often surpasses human accuracy, protecting product quality and business reputation.

Key Takeaways

- Validating machine vision systems ensures they detect defects and read barcodes accurately, improving product quality and reducing errors.

- Automated vision systems outperform humans by providing consistent, fast, and objective inspections without fatigue or distraction.

- Skipping validation risks missing defects, failing compliance, and facing costly recalls or penalties that harm reputation and business.

- A structured validation process includes installation, operational, and performance checks to confirm the system works well in real conditions.

- Following regulatory standards like 21 CFR Part 11 and keeping detailed records helps maintain trust, compliance, and ongoing quality control.

Validation Machine Vision System

Purpose and Benefits

Validating a machine vision system confirms that automated inspection meets or exceeds the performance of human inspectors. The main goal of validation is to confirm that the vision system can detect defects, read data codes, and verify barcode quality with high reliability. Companies use a written validation plan to outline the steps for acceptance testing, which checks whether the system meets all requirements before deployment.

A robust validation machine vision system delivers several benefits:

- It increases quality by reducing human error and ensuring consistent inspection.

- It supports compliance with barcode quality standards and industry regulations.

- It enables companies to track and document inspection results, which is essential for audits.

- It improves efficiency by automating repetitive tasks and reducing inspection time.

Deep learning models, such as convolutional neural networks, have transformed the way vision systems operate. These models learn from large datasets, labeled both manually and automatically, to improve defect detection. In one example, an initial model correctly identified only 8 of 37 false ejects (about 22%). After the training data was expanded, the model identified 24 of 37, a success rate of about 65%. This improvement highlights how AI and machine learning can reduce false rejects and enhance classification, surpassing traditional rule-based systems. These advancements also allow for root-cause analysis, which helps tune the system for even better results.
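The arithmetic behind those two figures can be checked in a few lines. This is a minimal sketch; the function name `success_rate` is a hypothetical helper, and only the counts (8, 24, and 37) come from the example above.

```python
def success_rate(correct: int, total: int) -> float:
    """Fraction of false ejects that the model classified correctly."""
    if total <= 0:
        raise ValueError("total must be positive")
    return correct / total


# Counts taken from the example in the text.
initial = success_rate(8, 37)
expanded = success_rate(24, 37)

print(f"initial model: {initial:.1%}")
print(f"after expanding training data: {expanded:.1%}")
```

The second figure rounds to the 65% quoted above; the first is roughly 22%, so the expanded dataset tripled the number of correctly identified false ejects.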

Note: A validation machine vision system must include acceptance testing and a written validation plan. This ensures the system meets barcode quality standards and delivers high-quality barcodes every time.

Human vs. Automated Inspection

Human inspectors have long played a key role in quality control. However, automated vision systems now offer significant advantages. These systems provide consistent, objective results and do not suffer from fatigue or distraction. Automated inspection can process thousands of items per hour, far beyond human capability.

A comparison between legacy and new systems shows clear improvements:

| Metric | Legacy System | New System | Statistical Significance |
|---|---|---|---|
| Defect Detection Rate | 93.5% | 97.2% | p-value < 0.05 |
| Accuracy | Improved | Higher | Confirmed statistically |
| False Negatives | Higher | Reduced | Confirmed statistically |
| Inspection Speed | Stable | Stable | No deterioration |
| Downtime | Stable | Stable | No deterioration |

The table compares a legacy system with its replacement and shows a statistically significant gain in defect detection with no loss of speed or uptime. Reported figures for verification systems exceed 99% accuracy in defect identification and reach 98.5% in object detection. For example, one model classified metal surface defects at 93.5% accuracy. AI-powered systems have also been reported to reduce false reject rates by 20% in pharmaceutical packaging inspections.
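One common way to test whether a difference in detection rates such as 93.5% versus 97.2% is statistically significant is a two-proportion z-test. The sketch below is an illustration, not the method behind the reported p-value: the sample size of 1,000 defective parts per system is an assumption, since the source does not state how many parts were inspected.

```python
import math


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both detection rates are equal."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / std_err
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical sample sizes: 1,000 known-defective parts shown to each system.
z, p_value = two_proportion_z_test(x1=935, n1=1000, x2=972, n2=1000)
print(f"z = {z:.2f}, p = {p_value:.5f}")
```

With smaller samples the same percentage gap may not reach significance, which is why a validation plan should fix the sample size before testing begins.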

Automated systems use a structured process: image acquisition, illumination, preprocessing, analysis, and pattern recognition. This process ensures precision and consistency. AI and machine learning help these systems adapt to variations, reduce human error, and increase inspection speed and accuracy. Companies in electronics, pharmaceuticals, automotive, and manufacturing rely on these systems for enhanced quality control and defect detection.

Barcode verification plays a critical role in ensuring high-quality barcode production. A verification system checks each code against barcode quality standards, confirming that only quality barcodes reach the market. Barcode verification also supports compliance with barcode quality standards, which is vital for regulated industries. Companies must use a verification system to meet barcode quality standards and deliver high-quality barcode labels.

Tip: Always include barcode verification in your validation plan. This step ensures that every product meets barcode quality standards and delivers quality barcodes to customers.

Risks of Skipping Validation

Quality and Compliance Issues

Skipping validation in machine vision systems creates serious risks for quality and compliance. Without proper verification, automated inspection may miss defects or misread barcodes, leading to products that fail to meet barcode quality standards. Companies that neglect verification often see a drop in quality, which can result in regulatory penalties or costly recalls. Verification ensures that systems meet barcode quality standards and deliver consistent results.

The following table shows how different metrics help measure the impact of missing validation on quality and compliance:

| Metric Type | Description | Relevance to Quality and Compliance Impact Measurement | Correlation Scores (SRCC) Range |
|---|---|---|---|
| Mean Average Precision (mAP) | Measures detection confidence and accuracy using IoU thresholds; less effective with few objects | Quantifies detection accuracy and false negatives/positives, indicating quality degradation | N/A |
| Average Precision (AP) | Combines precision and recall; limited for single images or frames | Differentiates information loss effects (false negatives) vs detector inaccuracies | N/A |
| Mean Intersection over Union (mean-IoU) | General accuracy measure over entire image; includes false negatives by zero IoU for missed objects | Evaluates detection accuracy but may mask object count effects, relevant for quality assessment | SRCC 0.29–0.37 |
| Object IoU | IoU for individual cropped objects, assessing size and location matching | Provides object-specific detection accuracy, critical for compliance in precise detection | SRCC 0.5–0.6 |
| Delta Object IoU | Difference in IoU between reference and compressed frames, quantifying compression impact | Directly measures performance degradation due to compression, key for assessing validation omission impact | SRCC 0.8–0.9 |
| Face Recognition Metrics | False Acceptance Rate (FAR), False Rejection Rate (FRR), Cosine Similarity of embeddings | Quantify recognition errors and embedding similarity, indicating recognition quality loss | N/A |
| License Plate Recognition Metric | Neural-network-based metric predicting recognition quality with high correlation (SRCC 0.85) | Predicts recognition success, reflecting compliance and quality degradation | SRCC 0.85 |

Metrics such as mean-IoU, Object IoU, and Delta Object IoU provide a clear picture of detection accuracy and the effects of skipping verification. Delta Object IoU, in particular, shows a strong link to performance loss, making it vital for tracking quality and compliance. Face recognition and license plate recognition metrics also reveal how missing verification can cause recognition errors and reduce quality. These specialized metrics outperform standard image quality checks, giving companies a better way to monitor quality control and compliance.
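To make the IoU-based metrics concrete, here is a minimal sketch of Object IoU for axis-aligned boxes, plus one plausible reading of Delta Object IoU as the drop in IoU between a reference frame and a degraded frame. The box format `(x1, y1, x2, y2)`, the function names, and the example coordinates are assumptions for illustration.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def delta_object_iou(truth: Box, det_reference: Box, det_degraded: Box) -> float:
    """Loss of IoU when the detector runs on a degraded frame (assumed definition)."""
    return iou(truth, det_reference) - iou(truth, det_degraded)


truth = (0.0, 0.0, 10.0, 10.0)
det_reference = (0.0, 0.0, 10.0, 10.0)  # detection on the reference frame
det_degraded = (5.0, 0.0, 15.0, 10.0)   # detection shifted on the degraded frame

print(f"reference IoU: {iou(truth, det_reference):.3f}")
print(f"degraded IoU:  {iou(truth, det_degraded):.3f}")
print(f"delta:         {delta_object_iou(truth, det_reference, det_degraded):.3f}")
```

A missed object can be represented by a detection with no overlap, which yields an IoU of zero and therefore the largest possible delta.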

⚠️ Skipping verification can lead to undetected defects, poor barcode verification, and products that do not meet barcode quality standards. This puts both quality and compliance at risk.

Real-World Failures

Real-world failures highlight the dangers of skipping validation and verification. Google created an AI system to detect diabetic retinopathy with over 90% accuracy in the lab. When used in clinics in Thailand, the system failed to deliver results for more than 20% of scans because of poor image quality and weak internet connections. Nurses had to retake images, which slowed down care and caused frustration. This example shows that without real-world verification, even the best systems can fail to maintain quality.

- Machine vision research faces a reproducibility crisis. Many models cannot match published accuracy when tested outside the lab.

- Weak methods, unclear procedures, and data leakage—where test data mixes with training data—inflate performance metrics and hide real quality issues.

- Overfitting from data leakage causes models to fail on new data, leading to breakdowns after deployment.

- Incomplete reporting and lack of transparency make it hard for others to verify or reproduce results, which damages trust in verification and quality.

- These problems prove that skipping verification and proper validation leads to failures in machine vision, affecting both quality and compliance.
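Data leakage of the simplest kind, where identical images appear in both the training and test sets, can be caught with a content hash before any model is evaluated. The sketch below assumes images are available as raw bytes keyed by file name; the names `content_hash` and `find_leaked_items` are hypothetical, and the check only finds exact duplicates, not near-duplicates such as re-encoded or cropped copies.

```python
import hashlib


def content_hash(data: bytes) -> str:
    """SHA-256 digest of the raw image bytes."""
    return hashlib.sha256(data).hexdigest()


def find_leaked_items(train: dict[str, bytes], test: dict[str, bytes]) -> list[str]:
    """Names of test items whose bytes also occur in the training set."""
    train_hashes = {content_hash(blob) for blob in train.values()}
    return sorted(name for name, blob in test.items() if content_hash(blob) in train_hashes)


# Stand-in byte strings instead of real image files.
train_set = {"train_001.png": b"image-A", "train_002.png": b"image-B"}
test_set = {"test_001.png": b"image-C", "test_002.png": b"image-B"}

leaked = find_leaked_items(train_set, test_set)
print(leaked)
```

Any non-empty result means reported test accuracy is inflated and the split should be rebuilt before validation continues.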

Verification is not just a technical step. It is a safeguard for quality, compliance, and trust. Companies that skip verification risk poor barcode verification, missed defects, and products that do not meet barcode quality standards. Reliable verification ensures high quality and supports ongoing quality control.

Machine Vision Verification and Validation

Key Differences

Machine vision verification and validation serve different roles in automated inspection. Verification checks whether a system meets all technical specifications and published standards. This process relies on static methods such as code inspections and design reviews, supported by unit testing. For example, standards-based verification ensures compliance with barcode quality standards like ISO 15415 or AIM DPM. Systems must enable all evaluation parameters and use high-quality optics and lighting to pass true verification. This approach produces formal reports as evidence of compliance.

Validation, on the other hand, confirms that the system solves the intended problem and meets user needs. It uses dynamic testing, such as functional and usability tests, to assess real-world performance. Validation measures accuracy, sensitivity, specificity, and reproducibility. Tools like Grad-CAM help explain which image features influence decisions, making sure the system focuses on meaningful signals. Validation often uses smart cameras with adaptable software, which can be more flexible and cost-effective than the hardware needed for standards-based verification.
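Accuracy, sensitivity, and specificity all follow from the four counts of a confusion matrix. The sketch below uses the standard definitions; the class name and the example counts are hypothetical, with "positive" meaning a defective part.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # defective parts correctly rejected
    fp: int  # good parts wrongly rejected
    tn: int  # good parts correctly accepted
    fn: int  # defective parts wrongly accepted

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)

    @property
    def sensitivity(self) -> float:
        """Share of defective parts that were caught (also called recall)."""
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        """Share of good parts that were accepted."""
        return self.tn / (self.tn + self.fp)


# Hypothetical validation run: 100 defective and 900 good parts.
run = ConfusionCounts(tp=90, fp=20, tn=880, fn=10)
print(f"accuracy={run.accuracy:.3f} "
      f"sensitivity={run.sensitivity:.3f} "
      f"specificity={run.specificity:.3f}")
```

Reporting all three matters because defects are usually rare: a system can show high accuracy while still missing a meaningful share of defective parts.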

| Aspect | Verification | Validation (Process Control) |
|---|---|---|
| Purpose | Ensures compliance with published barcode quality standards (e.g., ISO 15415, ISO 15416, AIM DPM) | Ensures barcodes are readable within a specific internal process |
| Parameters | All evaluation parameters enabled | Subset of verification parameters |
| Compliance Evidence | Produces formal reports as evidence of compliance | Provides objective measurements without requiring standard compliance |
| Application Context | Used when meeting formal standards is necessary | Used when compliance with published standards is not required or desired |
| Hardware Requirements | Higher-performance optics and lighting compliant with standards | Can use smart cameras with integrated optics and adaptable software for validation |

When to Use Each

Choosing between machine vision verification and validation depends on the inspection goals and regulatory needs.

- Use verification when the system must meet strict industry standards or regulatory requirements. Standards-based verification is essential for sectors like pharmaceuticals or automotive, where compliance is mandatory.

- Apply validation when the main goal is to ensure the system works well in a specific process or environment, even if formal standards do not apply. Validation checks if the system meets user expectations and performs reliably in real-world conditions.

- True verification starts early and continues throughout development. It includes requirements verification, design verification, and code verification. This process detects faults early and ensures the product is built right.

- Validation follows verification. It tests if the final product meets stakeholder needs through dynamic testing, such as usability and performance tests.

- A balanced approach combines both. Early and continuous verification ensures technical correctness, while later validation confirms the system’s fitness for purpose.

Tip: Overemphasizing verification without validation can lead to systems that meet technical standards but fail in real-world use. Combining both ensures quality, compliance, and user satisfaction.

Validation Phases and Methods

IQ, OQ, PQ

Machine vision systems require a structured validation process to ensure high quality and compliance with industry standards. The three main phases—installation qualification, operational qualification, and performance qualification—form the backbone of this approach. These phases follow the GAMP guidelines, which are widely accepted in regulated industries.

- Installation Qualification (IQ): This phase checks that the system is installed correctly and that all components match the design specifications. Technicians verify hardware, software, and network connections. They also confirm that the environment meets the requirements for stable operation. IQ ensures the foundation for quality and reliability.

- Operational Qualification (OQ): During OQ, the team tests the system’s functions under normal and stress conditions. They run test cases to confirm that the system performs as expected. OQ includes checks for barcode reading, defect detection, and data processing. This phase uses industry standards to measure accuracy and repeatability. OQ helps catch issues before the system enters production.

- Performance Qualification (PQ): PQ validates the system in real-world conditions. Operators use the system on actual products and monitor its performance over time. They track metrics like precision, recall, and error rates. PQ ensures the system maintains quality and meets customer requirements during daily use.

Tip: Always document each phase. Good records support audits, future upgrades, and ongoing quality control.

A typical validation process also includes Factory Acceptance Testing (FAT) and Site Acceptance Testing (SAT). FAT takes place at the manufacturer’s site. Here, engineers verify system completeness and functionality under controlled conditions. This early step helps detect problems and provides operator training. SAT happens at the customer’s site. The team tests the system in its real environment, checking integration and compliance with standards. SAT uses advanced techniques like k-fold cross-validation and bootstrapping to measure robustness, even with noisy data. Documentation during FAT and SAT builds transparency and trust.
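Bootstrapping, mentioned above as a robustness check, estimates how much a measured accuracy could vary if the acceptance test were repeated on a different sample. The following is a minimal percentile-bootstrap sketch; the function name, the 100 hypothetical inspection outcomes, and the resample count are assumptions rather than part of any SAT procedure.

```python
import random


def bootstrap_accuracy_ci(
    outcomes: list[int],
    n_resamples: int = 2000,
    alpha: float = 0.05,
    seed: int = 0,
) -> tuple[float, float]:
    """Percentile bootstrap confidence interval for accuracy.

    `outcomes` holds 1 for a correct inspection decision and 0 for a wrong one.
    """
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    n = len(outcomes)
    # Resample with replacement and record the accuracy of each resample.
    stats = sorted(sum(rng.choices(outcomes, k=n)) / n for _ in range(n_resamples))
    lower = stats[int((alpha / 2) * n_resamples)]
    upper = stats[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Hypothetical SAT run: 95 correct decisions out of 100 parts.
sat_outcomes = [1] * 95 + [0] * 5
lower, upper = bootstrap_accuracy_ci(sat_outcomes)
print(f"observed accuracy: 0.95, 95% CI: [{lower:.2f}, {upper:.2f}]")
```

A wide interval signals that the test sample is too small to support the accuracy claim, even if the point estimate looks acceptable.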

A systematic review of machine vision methods for product code recognition shows that deep learning-based systems achieve over 91% accuracy. Conventional methods reach more than 99% accuracy on regular characters. These results prove that careful validation and verification improve quality, even when facing challenges like surface variations or low contrast.

Measurement Systems Analysis (MSA) supports these phases. Type I gauge studies and Gage R&R studies measure accuracy, repeatability, and reproducibility. Calibration to national standards ensures that measurements match real-world units. These studies are industry standards and should be completed before production use.
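A Type I gauge study is usually summarized by two capability indices, Cg (repeatability relative to the tolerance) and Cgk (repeatability plus bias). The sketch below uses one widely used convention, in which 20% of the tolerance is compared against six standard deviations; other conventions use different constants, so `k_percent` and `spread` are left as parameters. The readings, reference value, and tolerance are hypothetical.

```python
import statistics


def type1_gauge_study(
    measurements: list[float],
    reference: float,
    tolerance: float,
    k_percent: float = 20.0,  # share of the tolerance allotted to the gauge (convention)
    spread: float = 6.0,      # number of standard deviations considered (convention)
) -> dict[str, float]:
    """Compute bias, Cg and Cgk from repeated measurements of one reference part."""
    mean = statistics.fmean(measurements)
    std_dev = statistics.stdev(measurements)  # sample standard deviation
    bias = mean - reference
    cg = (k_percent / 100 * tolerance) / (spread * std_dev)
    cgk = (k_percent / 200 * tolerance - abs(bias)) / (spread / 2 * std_dev)
    return {"bias": bias, "std_dev": std_dev, "cg": cg, "cgk": cgk}


# Hypothetical repeated readings (mm) of a 10.00 mm reference part, tolerance 0.5 mm.
readings = [10.01, 9.99, 10.02, 10.00, 9.98, 10.01, 10.00, 9.99, 10.02, 9.98]
result = type1_gauge_study(readings, reference=10.00, tolerance=0.5)
print({name: round(value, 3) for name, value in result.items()})
```

Cgk can never exceed Cg; the gap between them grows with the bias, which is what calibration against a traceable standard is meant to reduce.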

V-Model Approach

The V-model approach offers a clear framework for validation and verification in machine vision projects. This model aligns development and testing activities, ensuring that each stage meets quality and compliance goals.

The V-model starts with requirements definition. Teams document what the system must do and which standards it must meet. Next, they design the system architecture and develop detailed specifications. Each development phase has a matching verification activity. For example, requirements verification checks that the documented needs are clear and testable. Design verification ensures that the system blueprint matches the requirements.

On the right side of the V, teams perform testing and validation. Unit testing checks individual components. Integration testing verifies that modules work together. System testing confirms that the complete system meets all requirements. Finally, acceptance testing validates the system in the real world, using metrics like ROC curves and Mean-Squared-Error (MSE) to measure quality.
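Both acceptance metrics named above can be computed without any library. The sketch below computes the area under the ROC curve as the probability that a defective part scores higher than a good one (ties count half), and MSE as the mean squared gap between predicted scores and true labels. The function names, labels, and scores are made up for illustration.

```python
def mean_squared_error(y_true: list[float], y_pred: list[float]) -> float:
    """Average squared difference between true values and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(labels: list[int], scores: list[float]) -> float:
    """Area under the ROC curve via pairwise comparison of positives and negatives."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# Hypothetical acceptance test: 1 = defective part, score = model's defect probability.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.2, 0.6, 0.1]

print(f"AUC = {roc_auc(labels, scores):.3f}")
print(f"MSE = {mean_squared_error(labels, scores):.3f}")
```

The pairwise form is quadratic in the number of samples, which is fine for an acceptance test of a few thousand parts; larger datasets would call for a rank-based implementation.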

Simulation tools play a key role in pre-deployment testing. Engineers use these tools to create virtual environments and test the system’s response to different scenarios. Simulations help identify weaknesses and optimize performance before the system goes live.

🛠️ The V-model ensures that every requirement has a matching verification and validation activity. This structure reduces risks, improves quality, and supports compliance with industry standards.
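The pairing of requirements with tests is typically tracked in a traceability matrix, and gaps in it can be found mechanically. The sketch below is an illustration under assumed data: the requirement IDs, test case names, and the function `untraced_requirements` are all hypothetical.

```python
def untraced_requirements(
    requirements: list[str],
    test_cases: dict[str, list[str]],
) -> list[str]:
    """Return requirement IDs that no test case claims to cover."""
    covered = {req_id for req_ids in test_cases.values() for req_id in req_ids}
    return sorted(set(requirements) - covered)


# Hypothetical user requirements for a label inspection station.
requirements = ["URS-001", "URS-002", "URS-003", "URS-004"]

# Hypothetical test cases mapped to the requirements they verify.
test_cases = {
    "OQ-barcode-read": ["URS-001"],
    "OQ-defect-detect": ["URS-002", "URS-003"],
    "PQ-live-run": ["URS-001", "URS-002"],
}

print(untraced_requirements(requirements, test_cases))
```

Running a check like this before each qualification phase gives early warning that a requirement would otherwise reach acceptance testing unverified.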

A combined approach using FAT, SAT, and the V-model reduces risks and builds customer confidence. SAT ensures the system works in its intended environment and meets customer needs. Compliance checks during SAT confirm that the system follows all relevant standards, reducing legal risks and improving reliability.

| Validation Phase | Purpose | Key Activities | Quality Metrics Used |
|---|---|---|---|
| Installation Qualification (IQ) | Confirm correct installation and setup | Hardware/software checks, environment validation | Documentation, checklists |
| Operational Qualification (OQ) | Test system functions under expected conditions | Functional tests, stress tests, standards checks | Accuracy, repeatability |
| Performance Qualification (PQ) | Validate real-world performance | Live product runs, operator monitoring | Precision, recall, error rates |
| Factory Acceptance Test (FAT) | Verify completeness before shipment | Controlled tests, operator training | Functionality, compliance |
| Site Acceptance Test (SAT) | Validate in real environment | Integration tests, compliance checks | Precision, ROC, MSE |

Note: Each phase and method supports ongoing quality improvement and ensures the system meets both customer and regulatory expectations.

Compliance and 21 CFR Part 11

Regulatory Standards

Machine vision systems in regulated industries must meet strict standards. 21 CFR Part 11 sets the rules for electronic records and electronic signatures. This regulation requires companies to validate their systems to ensure accuracy, reliability, and data integrity. Validation includes Installation Qualification, Operational Qualification, and Performance Qualification. Each phase checks that the system works as intended and meets all regulatory requirements. Companies must also create a clear validation plan and keep detailed records.

- 21 CFR Part 11 mandates system validation, secure access, and audit trail features.

- Audit trail functions must record every change, approval, and user action with time stamps.

- Only authorized users can access the system, and all actions must be traceable.

- The US Drug Supply Chain Security Act and EU Annex 11 require similar validation and audit trail controls.

- Standards like GAMP guide companies in building compliance into machine vision systems from the design stage.
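The audit trail properties listed above (time-stamped entries, authorized users only, traceable actions) can be illustrated with a small append-only log. This is a conceptual sketch, not a Part 11 compliant implementation: the class `AuditTrail`, its fields, and the user names are hypothetical, and chaining each entry to the previous one with a SHA-256 hash is an added assumption used here to make tampering detectable.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log; each entry stores the hash of the entry before it."""

    def __init__(self, authorized_users: set[str]) -> None:
        self._authorized = set(authorized_users)
        self.entries: list[dict[str, str]] = []

    @staticmethod
    def _digest(body: dict[str, str]) -> str:
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, user: str, action: str, reason: str) -> dict[str, str]:
        if user not in self._authorized:
            raise PermissionError(f"user {user!r} is not authorized")
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "reason": reason,
            "prev_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = ""
        for entry in self.entries:
            body = {key: value for key, value in entry.items() if key != "hash"}
            if entry["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail(authorized_users={"qa_lead", "line_engineer"})
trail.record("line_engineer", "changed exposure from 8 ms to 10 ms", "glare on foil labels")
trail.record("qa_lead", "approved recipe v2", "OQ test cases passed")
print(trail.verify())
```

In a production system the log would also need protected storage, synchronized clocks, and electronic signature controls, none of which this sketch attempts.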

The FDA expects companies to explain their validation programs to inspectors. Companies must document risk assessments and validation decisions. Even when enforcement is flexible, systems must follow predicate rules and maintain audit trail records. Greenlight Guru and other solutions provide executed test case documentation and third-party assessments to support 21 CFR Part 11 compliance.

Companies that follow these standards build trust and avoid costly penalties. A strong audit trail and proper validation protect both product quality and business reputation.

Ongoing Monitoring

Routine monitoring and documentation keep machine vision systems compliant with 21 CFR Part 11. Companies use Computerized Maintenance Management Systems to track performance, schedule maintenance, and generate reports. These systems help maintain audit trail records and ensure all data changes are logged.

- Audit trail logs show who made changes, when, and why.

- Regular verification and calibration checks keep the system accurate.

- Standardized documentation protocols prevent incomplete or missing records.

- Deviation management tracks errors, root causes, and corrective actions.

- Data integrity follows ALCOA principles: Attributable, Legible, Contemporaneous, Original, and Accurate.

AI-powered systems need continuous monitoring and human oversight. Companies must review audit trail logs and system performance often. Ongoing verification and documentation support regulatory acceptance and help companies adapt to changing standards.

Routine monitoring and a complete audit trail ensure that machine vision systems stay validated and meet all 21 CFR Part 11 requirements.

A robust validation machine vision system protects product quality, safety, and compliance. Neglecting validation increases the risk of defects and regulatory issues. Companies can maintain high quality by following these steps:

- Deploy vision systems tailored to industry needs.

- Automate inspection of labels, seals, and packaging.

- Integrate systems into production lines for real-time quality checks.

- Monitor performance using metrics like accuracy and recall.

- Upgrade non-compliant devices to maintain quality standards.

Staying updated on regulatory changes and best practices ensures ongoing quality improvement.

FAQ

What is the main goal of validating a machine vision system?

Validation confirms that the system detects defects and reads codes at least as accurately as a human inspector. Companies use validation to meet quality standards and regulatory requirements.

How often should companies revalidate their machine vision systems?

Companies should revalidate after any major software update, hardware change, or process modification. Regular reviews help maintain compliance and system accuracy.

What documentation supports machine vision validation?

Companies keep validation plans, test results, calibration records, and audit trails. These documents prove compliance during inspections and audits.

Can machine vision validation improve product safety?

Yes. Validation helps catch defects early. This process reduces the risk of unsafe products reaching customers.

What happens if a company skips validation?

| Risk | Impact |
|---|---|
| Missed Defects | Lower product quality |
| Regulatory Penalty | Fines or recalls |
| Data Integrity Loss | Loss of customer trust |

Skipping validation puts quality, compliance, and reputation at risk.
