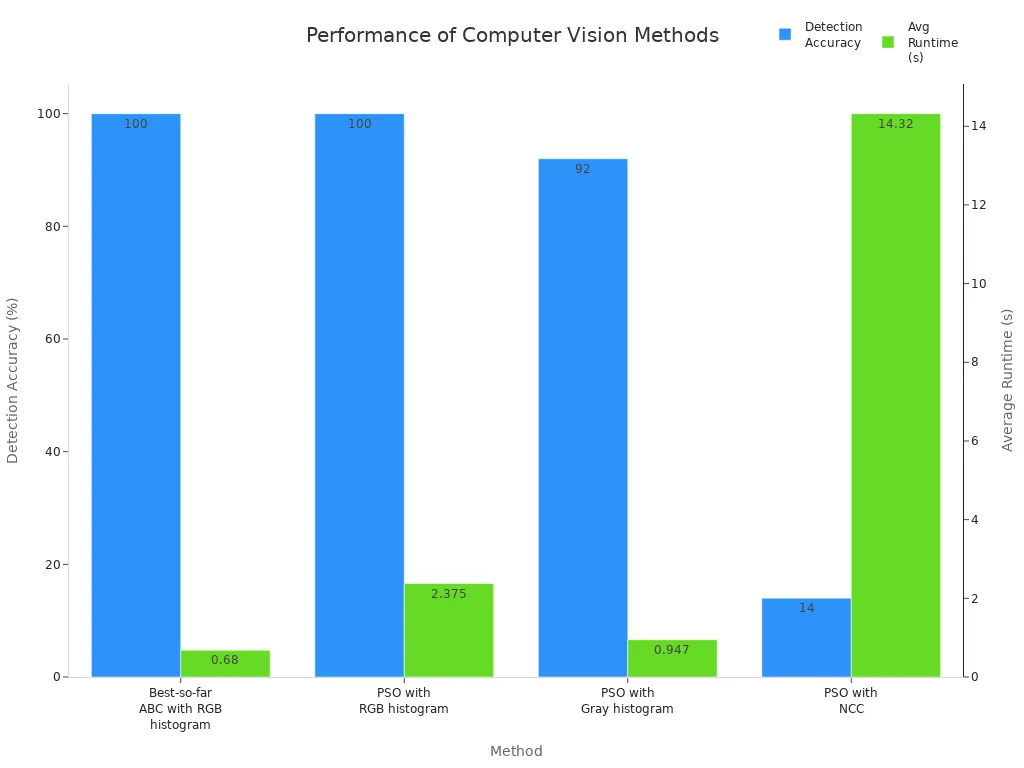

Template matching is a core technique in machine vision because its approach is straightforward and its results are reliable. Users can identify patterns or objects quickly without training a model. The process slides a template over an image and compares pixel values directly, which makes it fast and easy to use. For these reasons, template matching remains a foundation of both traditional and modern computer vision. In one reported industrial comparison of search strategies, template matching with a best-so-far Artificial Bee Colony (ABC) search and RGB histograms achieved the highest detection accuracy and the lowest runtime:

| Method | Detection Accuracy | Average Runtime (s) | Notes on Adoption Potential |
|---|---|---|---|
| Best-so-far ABC with RGB histogram (Template Matching) | 100% | 0.680 | Highest accuracy and lowest runtime; suggests strong potential for industrial adoption |
| PSO with RGB histogram | 100% | 2.375 | Same accuracy but significantly slower than the ABC method |
| PSO with gray histogram | 92% | 0.947 | Lower accuracy and slower than the ABC method |
| PSO with Normalized Cross-Correlation (NCC) | 14% | 14.320 | Poor accuracy and very slow; less suitable for industrial use |

Key Takeaways

- Template matching offers a simple and fast way to find objects in images without needing complex training or large datasets.

- This method slides a small template over a larger image and compares pixels to quickly detect patterns or objects in real time.

- Industries use template matching for quality control, object detection, and assembly verification to improve accuracy and reduce waste.

- Template matching works best in stable environments with little change in lighting, size, or rotation, making it reliable and easy to set up.

- While other methods handle complex changes better, template matching saves time and resources by delivering fast, dependable results on standard computers.

Template Matching in Machine Vision

What Is Template Matching?

Template matching is an image-processing technique that finds the regions of an image that resemble a smaller reference image, called the template. The method slides the template over the main image and measures how similar each region is to it, which lets a machine vision system spot objects or patterns quickly. Because template matching does not need model training, it suits many vision tasks, and many industries use it for its simplicity and reliability.

How It Works

A template matching machine vision system uses a sliding-window approach. It moves the template across the larger input image one pixel at a time, compares the template's pixel values with the overlapping region of the input image at each position, and computes a similarity score. Depending on the measure, the best match is the position with the highest score (for correlation-based measures) or the lowest score (for difference-based measures such as the sum of squared differences). Many systems convert images to grayscale first to speed up the process. OpenCV, a popular computer vision library, implements these steps in cv2.matchTemplate(). This approach lets the system detect objects or patterns in real time.
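The sliding-window loop described above can be sketched in plain Python. This is a minimal illustration that assumes small grayscale images stored as nested lists and uses the sum of squared differences as the score (lower is better); the function name `match_template_ssd` is hypothetical. In practice, OpenCV's `cv2.matchTemplate(image, template, cv2.TM_SQDIFF)` followed by `cv2.minMaxLoc` computes the same kind of score map in optimized code.

```python
def match_template_ssd(image, template):
    """Slide `template` over `image` (2-D lists of grayscale values) and
    return ((row, col), score) for the placement with the lowest sum of
    squared differences. Lower SSD means a better match."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    best_pos, best_score = None, float("inf")
    # Visit every placement where the template fits entirely inside the image.
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            score = 0
            for i in range(th):
                for j in range(tw):
                    d = image[r + i][c + j] - template[i][j]
                    score += d * d
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos, best_score


image = [
    [10, 10, 10, 10, 10],
    [10, 10, 200, 50, 10],
    [10, 10, 50, 200, 10],
    [10, 10, 10, 10, 10],
]
template = [
    [200, 50],
    [50, 200],
]
print(match_template_ssd(image, template))  # ((1, 2), 0)
```

The four nested loops make the cost explicit: every placement is compared pixel by pixel, which is why optimized libraries matter for real image sizes.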

Key Similarity Measures

Template matching methods use different ways to measure similarity. The choice depends on the needs of the vision task. Some common measures include:

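- Sum of Squared Differences (SSD): Good for simple cases but sensitive to brightness changes; lower scores mean better matches.

- Normalized Cross-Correlation (NCC): Divides by the intensity energy of the template and the image region, so it tolerates uniform scaling of brightness (for example, a change in exposure gain).

- Correlation Coefficient: Subtracts each region's mean before normalizing, so it tolerates both brightness offsets and contrast changes.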
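To make the differences between these measures concrete, the sketch below scores a flattened template against a patch that has the same pattern but higher contrast and brightness. It assumes the textbook definitions of each measure; the function names are illustrative. OpenCV exposes comparable modes as `cv2.TM_SQDIFF`, `cv2.TM_CCORR_NORMED`, and `cv2.TM_CCOEFF_NORMED`.

```python
import math


def ssd(a, b):
    """Sum of squared differences: 0 for identical patches, grows with mismatch."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def ncc(a, b):
    """Normalized cross-correlation: dot product divided by both vector norms.
    Invariant to multiplying either patch by a positive constant (gain)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))


def zncc(a, b):
    """Correlation coefficient (zero-mean NCC): subtract each patch's mean
    first, so the score is invariant to both gain and brightness offset."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return ncc([x - ma for x in a], [y - mb for y in b])


template = [10, 20, 30, 40]               # flattened template patch
patch = [2 * v + 15 for v in template]    # same pattern, more contrast, brighter

print(ssd(template, patch))   # 6900, although the pattern is identical
print(ncc(template, patch))   # about 0.996, reduced by the +15 offset
print(zncc(template, patch))  # 1.0
```

Only the correlation coefficient reports a perfect match here, which is why it is a common default when lighting is not tightly controlled.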

The right similarity measure depends on factors like how much lighting changes, how clear the edges are, and how fast the system needs to work. Edge-based matching is often faster and more robust, especially when the template has strong edges. Settings like angle and scale also affect which method works best. A template matching machine vision system can adjust these settings to improve accuracy for different tasks.
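The robustness of edge-based matching to brightness changes can be illustrated with a deliberately simplified, hypothetical variant: match gradient-magnitude maps instead of raw intensities. Commercial edge-based tools typically work with extracted edge points and gradient directions rather than this dense comparison, so treat the code as a sketch of the idea only. A uniform brightness offset changes every pixel but leaves the differences between neighbours, and therefore the gradients, unchanged.

```python
def gradient_magnitude(img):
    """Approximate edge strength with forward differences: |dx| + |dy|.
    The output is one row and one column smaller than the input."""
    h, w = len(img), len(img[0])
    return [
        [abs(img[r][c + 1] - img[r][c]) + abs(img[r + 1][c] - img[r][c])
         for c in range(w - 1)]
        for r in range(h - 1)
    ]


def ssd_search(image, template):
    """Return the (row, col) with the lowest sum of squared differences."""
    th, tw = len(template), len(template[0])
    best_pos, best_score = None, float("inf")
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            score = sum(
                (image[r + i][c + j] - template[i][j]) ** 2
                for i in range(th) for j in range(tw)
            )
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos


base = [[20] * 6 for _ in range(5)]
base[2][2] = 90                                  # a small bright feature
template = [row[1:4] for row in base[1:4]]       # 3x3 patch around it
scene = [[v + 40 for v in row] for row in base]  # the whole scene got brighter

print(ssd_search(scene, template))  # (0, 3): raw intensities are fooled
print(ssd_search(gradient_magnitude(scene), gradient_magnitude(template)))  # (1, 1)
```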

Why Template Matching Matters

Simplicity and Speed

Template matching stands out for its ease of use and fast processing. Many engineers choose this method because it does not require complex training or large datasets. They can set up a template matching system quickly and start using it right away. The process uses simple steps: slide the template over the image, compare pixels, and find the best match. This approach works well for real-time detection tasks.

- The A-MNS template matching method is about 4.4 times faster than advanced methods like DDIS. It uses a coarse-to-fine matching strategy and a low-cost similarity measure, which avoids slow sliding window scans.

- A-MNS does not need complex nearest-neighbor matches, making it easy to implement in many environments.

- The method stays robust even when the object rotates, moves, or changes shape. This helps users set up detection systems without worrying about difficult conditions.

- Benchmark tests show that A-MNS maintains high detection accuracy while running considerably faster than competing methods such as DDIS.
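The coarse-to-fine idea can be sketched with a generic two-level image pyramid: search exhaustively on half-resolution copies, then refine at full resolution only near the coarse hit. This is not the A-MNS algorithm, which also relies on its own similarity measure; it only illustrates why a coarse-to-fine strategy avoids scanning every full-resolution position. The function names and the refinement `radius` are assumptions.

```python
def downsample(img):
    """Halve the resolution by averaging each 2x2 block (odd edges are dropped)."""
    return [
        [(img[r][c] + img[r][c + 1] + img[r + 1][c] + img[r + 1][c + 1]) / 4
         for c in range(0, len(img[0]) - 1, 2)]
        for r in range(0, len(img) - 1, 2)
    ]


def ssd_at(image, template, r, c):
    """Sum of squared differences with the template's top-left corner at (r, c)."""
    return sum((image[r + i][c + j] - template[i][j]) ** 2
               for i in range(len(template)) for j in range(len(template[0])))


def search(image, template, rows, cols):
    """Return the candidate (row, col) with the lowest SSD."""
    return min(((r, c) for r in rows for c in cols),
               key=lambda rc: ssd_at(image, template, *rc))


def coarse_to_fine(image, template, radius=1):
    small_img, small_tpl = downsample(image), downsample(template)
    # 1) Exhaustive search on the half-resolution copies.
    r0, c0 = search(small_img, small_tpl,
                    range(len(small_img) - len(small_tpl) + 1),
                    range(len(small_img[0]) - len(small_tpl[0]) + 1))
    # 2) Refine at full resolution only around the scaled-up coarse hit.
    max_r = len(image) - len(template)
    max_c = len(image[0]) - len(template[0])
    rows = range(max(0, 2 * r0 - radius), min(max_r, 2 * r0 + radius) + 1)
    cols = range(max(0, 2 * c0 - radius), min(max_c, 2 * c0 + radius) + 1)
    return search(image, template, rows, cols)


image = [[0] * 8 for _ in range(8)]
for r in range(3, 7):          # a bright 4x4 part at an odd row offset
    for c in range(2, 6):
        image[r][c] = 100
template = [[100] * 4 for _ in range(4)]
print(coarse_to_fine(image, template))  # (3, 2)
```

Halving both image and template cuts the number of positions and the work per position to about a quarter each, so the coarse pass costs roughly 1/16 of a full search. The trade-off is that small or finely textured templates can blur away at low resolution, which is why the number of pyramid levels needs tuning.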

Template matching also works well in real-time applications. When engineers use correlation filters and fast Fourier transforms, the system can track objects frame by frame with high speed. Deep learning methods can be accurate, but they often need more computing power and time for training. Template matching, on the other hand, can deliver fast detection results, which is important for high-speed tracking and industrial automation.
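Frequency-domain correlation rests on the correlation theorem: the cross-correlation of a signal with a template equals the inverse FFT of the signal's spectrum multiplied by the conjugate of the template's spectrum. The sketch below shows this in one dimension for brevity (images use the 2-D FFT in the same way) with a hand-written radix-2 FFT. It computes raw, unnormalized correlation scores, not the learned filters that correlation-filter trackers build on top. All function names are illustrative.

```python
import cmath


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        tw = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + tw
        out[k + n // 2] = even[k] - tw
    return out


def ifft(X):
    """Inverse FFT via the conjugation trick."""
    n = len(X)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in X])]


def fft_correlate(signal, template):
    """Correlation score for every placement of template inside signal,
    computed as IFFT(FFT(signal) * conj(FFT(template)))."""
    n, m = len(signal), len(template)
    size = 1
    while size < n:   # zero-pad to a power of two >= len(signal), so valid
        size *= 2     # placements never wrap around the end
    S = fft(list(signal) + [0.0] * (size - n))
    T = fft(list(template) + [0.0] * (size - m))
    corr = ifft([s * t.conjugate() for s, t in zip(S, T)])
    return [corr[k].real for k in range(n - m + 1)]


def direct_correlate(signal, template):
    """The same scores computed with the direct sliding sum, for comparison."""
    m = len(template)
    return [sum(signal[k + j] * template[j] for j in range(m))
            for k in range(len(signal) - m + 1)]


signal = [0, 1, -1, 0, 2, 5, 2, 0, -1, 1]
template = [2, 5, 2]
scores = fft_correlate(signal, template)
best = max(range(len(scores)), key=scores.__getitem__)
print(best)  # 4
```

The FFT route costs on the order of N log N operations for N samples regardless of template size, whereas the direct sum grows with the number of template samples, so the frequency-domain version pays off for large templates.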

Tip: Template matching can help companies save time and resources because it does not need long training periods or expensive hardware.

Reliability in Detection

Many industries trust template matching because it delivers reliable detection results. Extended variants, such as those that compensate for pose and size, can find objects or patterns even when the object's apparent size or viewing angle changes. Engineers often use template matching for object detection tasks where accuracy matters.

| Dataset | Positional Error, Proposed Method | Positional Error, FATM | Notes |
|---|---|---|---|
| David | 1.91 | 6.05 | Lower error for the proposed method |
| Sylvester | Not listed | Not listed | Qualitative results show better detection with the proposed method |

Studies show that template matching methods with 3D pose and size compensation outperform older methods such as FATM. On the ‘David’ dataset, the proposed method had a positional error of 1.91 versus 6.05 for FATM, less than a third as large, which means it located the object more accurately. Even when the object changed direction or size, the method kept high detection accuracy. It does not need recursive steps or training, so it works well in many situations.

Researchers have also shown that template matching can handle pose mismatches and size differences. By using depth information and 3D transformations, the system improves detection and reduces errors. This makes template matching a strong choice for object detection in real-world tasks.
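One simple way depth information can support size compensation is sketched below, under a pinhole-camera assumption in which an object's apparent size is inversely proportional to its distance from the camera. The template is rescaled by the ratio of the reference depth to the current depth before matching. This illustrates only the size part of the idea and is not the cited method; the helper names and the nearest-neighbour resize are assumptions.

```python
def scale_for_depth(reference_depth, current_depth):
    """Pinhole model: apparent size is inversely proportional to distance,
    so an object twice as close appears twice as large."""
    return reference_depth / current_depth


def resize_nearest(img, scale):
    """Nearest-neighbour resize of a 2-D list by a uniform scale factor."""
    h, w = len(img), len(img[0])
    new_h, new_w = max(1, round(h * scale)), max(1, round(w * scale))
    return [
        [img[min(h - 1, int(r / scale))][min(w - 1, int(c / scale))]
         for c in range(new_w)]
        for r in range(new_h)
    ]


template = [[1, 2],
            [3, 4]]                 # captured with the object at 1.0 m
s = scale_for_depth(1.0, 0.5)       # the object is now at 0.5 m
print(s)                            # 2.0
for row in resize_nearest(template, s):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

The rescaled template can then be passed to any of the matching routines; compensating for 3D pose would additionally require warping the template, which this sketch does not attempt.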

Flexible Applications

Template matching offers flexibility for many machine vision tasks. Engineers use it in quality control, assembly verification, and object detection in manufacturing. With normalized similarity measures and searches over rotation and scale, it can tolerate moderate changes in lighting, orientation, and size.

Template matching does not need prior training, which makes it suitable for new or changing tasks. Users can update the template image easily if the object changes. This flexibility helps companies respond quickly to new production needs or product designs.

Template matching also supports recognition tasks in security, robotics, and medical imaging. The method can detect objects in cluttered scenes or under challenging conditions. Because it works without complex setup, template matching remains a popular choice for detection in many fields.

Applications in Industry

Quality Control

Manufacturers use template matching in automated inspection systems to improve quality control. The system compares each product image with a reference template. If the match score meets a preset threshold, the system marks the product as good; if it does not, the system draws a bounding box around the region that differs from the template. This box helps workers find and fix problems quickly. Template matching often works alongside other tools such as edge detection and color analysis, which help the system locate objects and highlight defects. The detection results go to automation systems that can sort products or remove defective items, which keeps quality high and reduces waste.
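A minimal sketch of the defect-localization step, assuming the inspected image has already been aligned with the golden template (for example, by locating the product with template matching first) and that both are grayscale nested lists. The tolerance `threshold` and the function name are hypothetical; production systems usually also filter out isolated noisy pixels before drawing a box.

```python
def defect_bounding_box(reference, inspected, threshold):
    """Compare an aligned product image with its golden template and return
    (top, left, bottom, right), inclusive, around every pixel whose absolute
    difference exceeds `threshold`, or None if the product looks good."""
    rows, cols = [], []
    for r, (ref_row, img_row) in enumerate(zip(reference, inspected)):
        for c, (a, b) in enumerate(zip(ref_row, img_row)):
            if abs(a - b) > threshold:
                rows.append(r)
                cols.append(c)
    if not rows:
        return None  # within tolerance everywhere: mark the product as good
    return min(rows), min(cols), max(rows), max(cols)


golden = [[100] * 6 for _ in range(4)]
part = [row[:] for row in golden]
part[1][3] = 20   # dark spots, e.g. a scratch
part[2][4] = 30
print(defect_bounding_box(golden, golden, threshold=25))  # None
print(defect_bounding_box(golden, part, threshold=25))    # (1, 3, 2, 4)
```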

Object Detection

Object detection is a key part of many industrial applications. Template matching detects objects by sliding a template over the image and checking for matches. When the system finds a match, it draws a bounding box that shows the location and size of the detected object. Factories use object detection to count parts, check for missing items, and guide robots. Because the template is compared at every position, the system finds objects wherever they appear in the image, although large changes in an object's shape lower the match score. Template matching works with blob detection and pixel counting to improve accuracy. The detection results are sent to PLCs and HMIs, which control machines and track production. This integration supports fast and reliable object detection in real time.
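Counting parts requires finding every match, not just the best one. The sketch below keeps all positions whose SSD falls under a threshold, then applies greedy non-maximum suppression so that each part yields a single bounding box. The threshold value and the function name are assumptions for illustration.

```python
def find_all(image, template, max_ssd):
    """Return non-overlapping (row, col, height, width) boxes where the
    template matches with SSD <= max_ssd, best matches first."""
    th, tw = len(template), len(template[0])
    candidates = []
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            score = sum((image[r + i][c + j] - template[i][j]) ** 2
                        for i in range(th) for j in range(tw))
            if score <= max_ssd:
                candidates.append((score, r, c))
    boxes = []
    for score, r, c in sorted(candidates):
        # Greedy non-maximum suppression: skip hits overlapping a kept box.
        if all(abs(r - br) >= th or abs(c - bc) >= tw for br, bc, _, _ in boxes):
            boxes.append((r, c, th, tw))
    return boxes


image = [[0] * 10 for _ in range(6)]
for top, left in ((1, 1), (3, 6)):   # two identical 2x2 parts
    for i in range(2):
        for j in range(2):
            image[top + i][left + j] = 9
part = [[9, 9], [9, 9]]

boxes = find_all(image, part, max_ssd=200)
print(boxes)       # [(1, 1, 2, 2), (3, 6, 2, 2)]
print(len(boxes))  # 2
```

With `max_ssd=200`, windows that half-overlap a part also pass the threshold; suppression discards them because they overlap an exact match that was kept first.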

Assembly Verification

Assembly verification checks if products are put together correctly. Template matching compares the assembled product to a template image. If parts are missing or out of place, the system draws a bounding box around the problem area. This box helps workers see what needs fixing. The system can detect objects in complex assemblies and mark errors for review. Template matching works with other vision tools to improve detection. The detection data goes to business systems, which can adjust the process or alert staff. This approach ensures each product meets standards before leaving the factory.
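Assembly verification can be sketched as a presence check at each expected part location: search a small neighbourhood around the nominal position and flag the part if no placement matches well enough. The slot layout, part names, search radius, and SSD threshold below are all hypothetical.

```python
def ssd_at(image, template, r, c):
    """Sum of squared differences with the template's top-left corner at (r, c)."""
    return sum((image[r + i][c + j] - template[i][j]) ** 2
               for i in range(len(template)) for j in range(len(template[0])))


def verify_assembly(image, expected_parts, max_ssd):
    """expected_parts maps a part name to (template, row, col, search_radius).
    Return the names of parts that are missing or out of place."""
    problems = []
    for name, (template, r0, c0, radius) in expected_parts.items():
        max_r = len(image) - len(template)
        max_c = len(image[0]) - len(template[0])
        best = min(
            ssd_at(image, template, r, c)
            for r in range(max(0, r0 - radius), min(max_r, r0 + radius) + 1)
            for c in range(max(0, c0 - radius), min(max_c, c0 + radius) + 1)
        )
        if best > max_ssd:
            problems.append(name)
    return problems


screw = [[5, 5], [5, 5]]
board = [[0] * 8 for _ in range(6)]
board[1][1] = board[1][2] = board[2][1] = board[2][2] = 5  # left screw present
expected = {
    "screw_left": (screw, 1, 1, 1),    # hypothetical slot layout
    "screw_right": (screw, 3, 5, 1),
}
print(verify_assembly(board, expected, max_ssd=10))  # ['screw_right']
```

Searching only a small window around each nominal position keeps the check fast and tolerates slight placement variation without accepting a part that ended up in the wrong slot.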

Note: Template matching supports object recognition and image recognition tasks in many industries. It helps detect objects, draw bounding boxes, and improve detection accuracy. By sharing detection results with automation systems, companies can boost efficiency and maintain high quality.

Limitations and Alternatives

Sensitivity to Changes

Template matching works well in many situations, but it faces some challenges. Changes in lighting, scale, or rotation can affect how well the system finds objects. For example, if the light in a factory changes or shadows appear, the accuracy of template matching may drop. Some methods handle these changes better than others.

- Color pattern matching keeps high accuracy when lighting is even. It works better than grayscale matching when shadows or uneven lighting appear.

- Grayscale pattern matching can handle objects that rotate from 0° to 360° and change size by about 5%.

- Combining color and grayscale matching helps when objects have similar shades or are see-through.

- Color pattern matching can still find the right position and orientation, even if the object rotates or scales a little.
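A rotation-tolerant search can be approximated by matching several rotated copies of the template and keeping the best score. For simplicity, this sketch only tries 90-degree steps, which need no interpolation; tools that cover the full 0° to 360° range test much finer angles with resampling, and scale tolerance can be handled the same way with resized templates. The function names are illustrative.

```python
def rotate90(img):
    """Rotate a 2-D list 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def best_match_with_rotation(image, template):
    """Try the template at 0, 90, 180 and 270 degrees and return
    (angle, (row, col), ssd) for the lowest-SSD placement."""
    best = None
    current = template
    for angle in (0, 90, 180, 270):
        th, tw = len(current), len(current[0])
        for r in range(len(image) - th + 1):
            for c in range(len(image[0]) - tw + 1):
                score = sum((image[r + i][c + j] - current[i][j]) ** 2
                            for i in range(th) for j in range(tw))
                if best is None or score < best[2]:
                    best = (angle, (r, c), score)
        current = rotate90(current)
    return best


template = [[9, 0, 0],
            [9, 9, 9]]                 # an L-shaped part
image = [[0] * 5 for _ in range(5)]
for r, c in ((1, 2), (1, 3), (2, 2), (3, 2)):
    image[r][c] = 9                    # the same part, rotated 90 degrees
print(best_match_with_rotation(image, template))  # (90, (1, 2), 0)
```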

Lighting and orientation changes can make detection harder, but using color pattern matching helps keep results reliable.

Comparison with Other Computer Vision Methods

Template matching uses a simple approach. It slides a template over an image and checks for matches. Feature-based methods, like SIFT or SURF, look for key points in the image. These methods work better when objects change shape, scale, or rotate a lot. Deep learning methods use neural networks to learn from many images. They can handle complex scenes and big changes in objects.

| Method | Handles Lighting Changes | Handles Rotation/Scale | Needs Training Data | Speed |
|---|---|---|---|---|
| Template Matching | Moderate | Limited | No | Fast |
| Feature-Based | Good | Good | No | Moderate |
| Deep Learning | Excellent | Excellent | Yes | Slower |

Template matching gives fast results and does not need training. Feature-based and deep learning methods offer more flexibility but need more setup and computing power.

Choosing the Right Approach

Engineers choose template matching when they need a quick and easy solution. It works best when the object and background do not change much. For tasks that need high speed and simple setup, template matching is a strong choice. If the scene changes a lot or objects look very different, feature-based or deep learning methods may work better.

Tip: Use template matching for real-time detection in stable environments. Try feature-based or deep learning methods for complex or changing scenes.

Template matching continues to play a vital role in machine vision because of its simplicity, speed, and reliability. Many industries rely on it for fast and accurate detection. While other methods may suit complex or changing environments, template matching remains a strong choice for stable tasks.

- Manufacturers use template matching with anomaly detection to spot defects and improve product quality.

- Companies often begin with ready-made vision tools, then move to custom models for better results.

- The market sees template matching as part of a growing toolkit that adapts to new AI technologies.

Template matching helps businesses reduce waste, lower costs, and keep quality high. It stands as a key tool in the future of computer vision.

FAQ

What is the main advantage of template matching in machine vision?

Template matching gives fast and reliable results. Engineers can set up systems quickly. The method does not need training data. Many industries use it for real-time detection tasks.

Can template matching handle changes in object size or rotation?

Template matching works best when objects stay the same size and orientation. Some advanced methods can handle small changes. Large changes in scale or rotation may reduce accuracy.

Where do companies use template matching most often?

Companies use template matching in quality control, object detection, and assembly verification. Factories rely on it to check products, count parts, and guide robots.

Does template matching need a lot of computing power?

Template matching uses simple calculations. Most systems run on standard computers. The method does not need expensive hardware or graphics cards.
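A rough operation count shows why even simple calculations benefit from efficient implementations. The sketch below counts multiply-adds for an exhaustive sliding-window search, assuming one operation per template pixel per placement; the image and template sizes are arbitrary examples.

```python
def brute_force_ops(img_w, img_h, tpl_w, tpl_h):
    """Multiply-add count for an exhaustive sliding-window search:
    one per template pixel at every valid placement."""
    placements = (img_w - tpl_w + 1) * (img_h - tpl_h + 1)
    return placements * tpl_w * tpl_h


print(brute_force_ops(640, 480, 64, 64))  # 985534464
print(brute_force_ops(320, 240, 32, 32))  # 61850624
```

Roughly a billion operations for a 64x64 template on a 640x480 image explains why practical systems rely on optimized library routines, FFT-based correlation, or image pyramids; halving both resolutions cuts the count by about 16 times.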

How does template matching compare to deep learning?

| Feature | Template Matching | Deep Learning |
|---|---|---|
| Speed | Fast | Slower |
| Training Needed | No | Yes |
| Flexibility | Limited | High |

Template matching works quickly without training. Deep learning handles complex tasks but needs more resources.
