Semantic segmentation is a computer vision task that assigns a class label to every pixel in an image. By analyzing each pixel individually, it allows machines to understand visual data in intricate detail. This pixel-level understanding is essential for tasks that require precision, such as identifying objects or regions in complex environments.

In 2025, advancements in AI and machine vision have elevated the importance of semantic segmentation. These innovations have made machine vision systems built on semantic segmentation smarter and faster, enabling them to tackle real-world challenges with greater accuracy. As technology evolves, semantic segmentation continues to redefine how machines interact with the world around them.

Key Takeaways

- Semantic segmentation gives each pixel in a picture a category. This helps machines understand images better.

- New AI methods, like self-supervised learning and mixed models, make this process faster and easier for many industries.

- It is very important for things like self-driving cars and medical scans. It helps make driving safer and improves health checks.

- Challenges such as the gap between training data and real-world conditions, and a shortage of labeled data, can be eased with more varied datasets and pre-trained models.

- Using semantic segmentation helps businesses find new ideas, make smarter choices, and work more efficiently.

Understanding Semantic Segmentation

What is semantic segmentation?

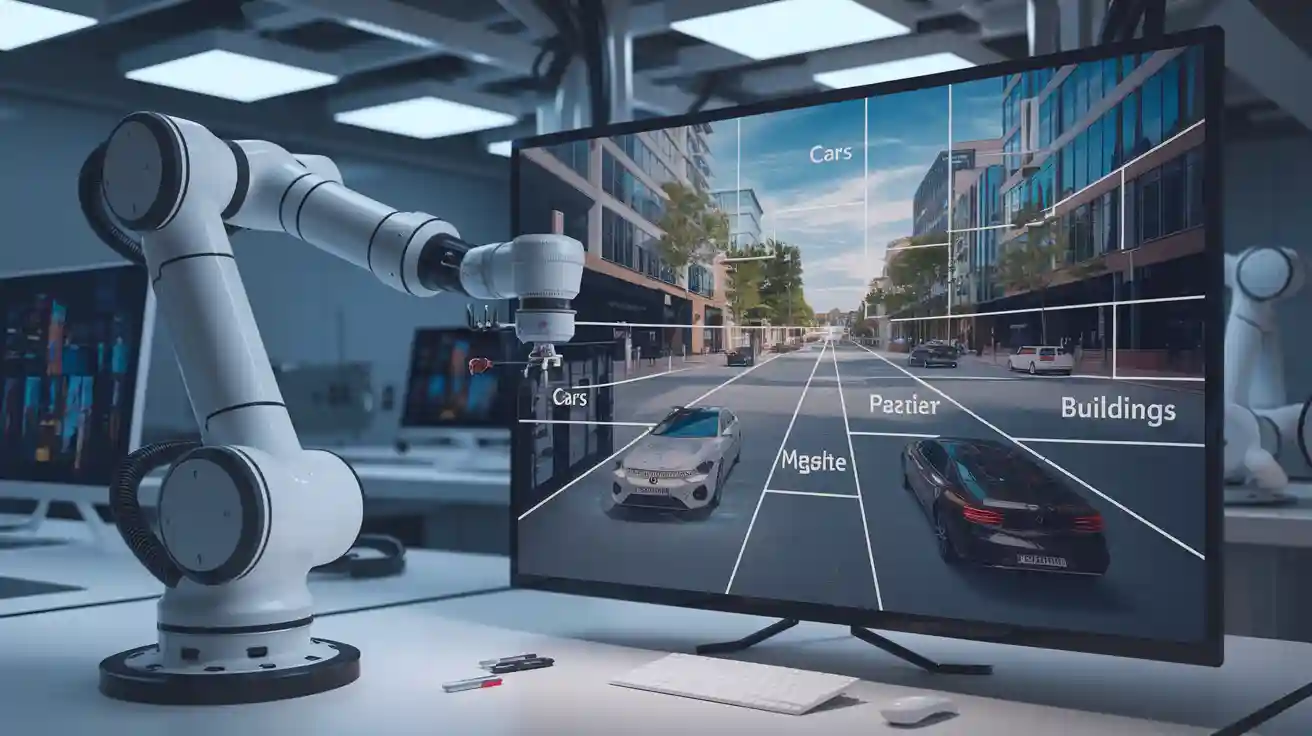

Semantic segmentation is a fundamental task in computer vision that assigns a class label to every pixel in an image. Unlike traditional image classification, which categorizes an entire image, semantic segmentation focuses on understanding the image at a granular level. This approach enables machines to identify and label specific regions, such as roads, buildings, or people, within a scene.
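To make "a class label for every pixel" concrete, here is a minimal sketch in plain Python. It assumes a model has already produced one score per class for each pixel; the class names and score values are made up for illustration. The label map is simply the index of the highest-scoring class at each pixel.

```python
# Hypothetical class names, for illustration only.
CLASSES = ["road", "building", "person"]

def label_map(scores):
    """Turn H x W x C per-pixel class scores into an H x W grid of class indices."""
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]

# A 2 x 2 "image" with made-up scores for the three classes above.
scores = [
    [[0.90, 0.05, 0.05], [0.20, 0.70, 0.10]],
    [[0.60, 0.30, 0.10], [0.10, 0.20, 0.70]],
]

labels = label_map(scores)                          # [[0, 1], [0, 2]]
names = [[CLASSES[i] for i in row] for row in labels]
print(names)
```

In practice the scores would come from a neural network and be stored as tensors, but the per-pixel decision rule is the same.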

Recent advancements in deep learning have revolutionized semantic segmentation. Fully Convolutional Networks (FCNs) laid the groundwork for dense pixel-level predictions, while newer architectures like FFTNet leverage transformers to enhance performance. These innovations have expanded the applications of semantic segmentation, making it indispensable in fields like augmented reality, medical imaging, and autonomous driving.

Tip: Semantic segmentation is particularly useful when precision matters, such as in autonomous vehicles navigating complex environments or doctors analyzing medical scans for abnormalities.

How does semantic segmentation differ from instance segmentation and panoptic segmentation?

While semantic segmentation assigns a class label to every pixel, it does not differentiate between individual objects of the same class. For example, in an image of multiple cars, all cars would be labeled as "car" without distinguishing between them. This limitation is addressed by instance segmentation, which identifies and separates individual objects within the same class.

Panoptic segmentation combines the strengths of both semantic and instance segmentation. It provides a comprehensive analysis by labeling each pixel with both a semantic class and an instance identifier. This hybrid approach is particularly useful in scenarios requiring detailed object-level and scene-level understanding.

Here’s a comparison of the three segmentation types:

| Type of Segmentation | Description |
|---|---|
| Semantic Segmentation | Assigns a class label to every pixel in an image without distinguishing between different objects of the same class. |
| Instance Segmentation | Differentiates between individual objects of the same class, providing unique identifiers for each instance. |
| Panoptic Segmentation | Combines both semantic and instance segmentation, providing a complete analysis by labeling each pixel with a semantic label and instance identifier. |
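The difference between the three outputs can be sketched on a toy label map. This example is an illustration under a strong assumption: it derives instance IDs by grouping connected pixels of the same class, which is only a stand-in for a real instance segmentation model and fails when two objects of the same class touch. The class constants and the helper name `instance_ids` are hypothetical.

```python
from collections import deque

ROAD, CAR = 0, 1  # hypothetical class indices

def instance_ids(semantic, target_class):
    """Give each 4-connected region of target_class its own id (0 means no instance)."""
    h, w = len(semantic), len(semantic[0])
    ids = [[0] * w for _ in range(h)]
    next_id = 0
    for r in range(h):
        for c in range(w):
            if semantic[r][c] != target_class or ids[r][c]:
                continue
            next_id += 1
            ids[r][c] = next_id
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of one region
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < h and 0 <= nx < w
                    if inside and semantic[ny][nx] == target_class and not ids[ny][nx]:
                        ids[ny][nx] = next_id
                        queue.append((ny, nx))
    return ids

# Semantic output: both cars share the label CAR.
semantic = [
    [CAR,  CAR,  ROAD, CAR],
    [CAR,  CAR,  ROAD, CAR],
    [ROAD, ROAD, ROAD, ROAD],
]

# Instance output: each car gets its own id.
instances = instance_ids(semantic, CAR)

# Panoptic output: every pixel carries (semantic class, instance id).
panoptic = [list(zip(s_row, i_row)) for s_row, i_row in zip(semantic, instances)]
print(panoptic[0])
```

Here the left car becomes instance 1 and the right car instance 2, while road pixels keep instance id 0 because roads are not countable objects.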

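Performance metrics also highlight the differences between these methods. Semantic segmentation is usually scored with mean Intersection over Union (mIoU) across classes, instance segmentation with average precision (AP) over predicted object masks, and panoptic segmentation with Panoptic Quality (PQ), which combines segmentation quality and recognition quality. Instance segmentation often requires more computation because it must also separate individual objects. Panoptic segmentation takes on both tasks to offer a holistic view of the scene.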

Note: The rise of self-supervised learning methods has made segmentation tasks more accessible, reducing the need for large labeled datasets and enabling broader adoption across industries.

How Semantic Segmentation Works

The role of neural networks in semantic segmentation

Neural networks play a pivotal role in semantic segmentation by enabling machines to analyze images at the pixel level. These networks, particularly deep learning architectures, have replaced traditional methods like Support Vector Machines due to their superior accuracy. They generate segmentation maps where each pixel is color-coded based on its semantic class, allowing for precise image segmentation.
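A color-coded segmentation map is just a lookup from class index to color. The sketch below assumes a hypothetical three-class palette; real datasets define their own palettes.

```python
# Hypothetical palette: class index -> (R, G, B).
PALETTE = {
    0: (128, 64, 128),   # road
    1: (70, 70, 70),     # building
    2: (220, 20, 60),    # person
}

def colorize(labels, palette, unknown=(0, 0, 0)):
    """Map an H x W grid of class indices to an H x W grid of RGB tuples."""
    return [[palette.get(index, unknown) for index in row] for row in labels]

labels = [[0, 2], [1, 9]]          # 9 is not in the palette
colored = colorize(labels, PALETTE)
print(colored)
```

Indices missing from the palette fall back to black, which makes unexpected classes easy to spot when you inspect the output.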

Advanced techniques like inception modules and attention mechanisms enhance segmentation accuracy. Inception modules capture multi-scale features by processing different receptive fields simultaneously. Attention mechanisms focus on important regions while suppressing irrelevant areas, improving boundary detection. Residual connections and multi-scale fusion blocks further refine the process by preserving spatial information and leveraging features across scales. These innovations make neural networks indispensable for tasks requiring detailed image segmentation.

| Enhancement Type | Description |
|---|---|
| Inception Modules | Capture multi-scale contextual features through parallel convolutions of varying receptive fields. |
| Attention Mechanisms | Emphasize informative regions while suppressing irrelevant background noise, improving focus on boundaries. |
| Residual Connections | Preserve spatial information, facilitate gradient flow, and enable deeper feature learning. |
| Multi-scale Fusion Block | Leverage global features across various scales to enhance segmentation accuracy. |
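Two of these ideas are simple enough to sketch on a short list of numbers. This is a toy illustration, not a layer from any particular network: in a real model the attention logits are learned and the operations run on feature tensors. The function names and values are assumptions made for the example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def attention_gate(features, attention_logits):
    """Scale each feature by a weight in (0, 1) derived from its attention logit."""
    return [f * sigmoid(a) for f, a in zip(features, attention_logits)]

def residual_block(features, transform):
    """Add the block's input back onto its transformed output (a skip connection)."""
    return [x + y for x, y in zip(features, transform(features))]

features = [1.0, 2.0, 3.0]
logits = [4.0, 0.0, -4.0]   # made-up: high = informative, low = background

gated = attention_gate(features, logits)
output = residual_block(features, lambda f: attention_gate(f, logits))
print(gated, output)
```

The gate keeps most of the first feature and suppresses most of the third, while the residual connection guarantees the original input still reaches the next layer even where the gate is nearly closed.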

Key steps in the semantic segmentation process

The semantic segmentation process involves several key steps that transform raw images into meaningful segmentation maps. First, input images undergo pre-processing, where they are resized or split into smaller patches to keep memory and compute requirements manageable. These patches are fed into a convolutional neural network (CNN), which extracts features through layers of convolution and pooling. The CNN then outputs a class prediction for every pixel in each patch.

Post-processing refines the results by stitching segmented patches together to create a coherent final image. This step removes artifacts and noise, ensuring the segmentation map accurately represents the original image. For example, experimental research has validated pipelines like aMAP, which use multi-atlas registration to adapt segmentation to new datasets. These systematic processes ensure the semantic segmentation models deliver reliable results across diverse applications.
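The patch-and-stitch flow can be sketched as follows. The `toy_model` function is a hypothetical stand-in for a CNN (it just thresholds brightness), and the sketch assumes non-overlapping patches and image dimensions that are multiples of the patch size. Production pipelines often use overlapping patches and blend the seams.

```python
def split_into_patches(image, size):
    """Yield (row, col, patch) for non-overlapping size x size tiles.

    Assumes the image height and width are multiples of size.
    """
    h, w = len(image), len(image[0])
    for r in range(0, h, size):
        for c in range(0, w, size):
            yield r, c, [row[c:c + size] for row in image[r:r + size]]

def stitch(patches, height, width):
    """Place each patch back at its (row, col) offset to rebuild a full grid."""
    out = [[None] * width for _ in range(height)]
    for r, c, patch in patches:
        for dy, row in enumerate(patch):
            out[r + dy][c:c + len(row)] = row
    return out

def toy_model(patch):
    """Stand-in for a CNN: label a pixel 1 if brighter than 127, else 0."""
    return [[1 if value > 127 else 0 for value in row] for row in patch]

image = [
    [10,  200, 30,  40],
    [250, 60,  70,  180],
    [90,  100, 210, 120],
    [130, 20,  150, 160],
]

predicted = [(r, c, toy_model(p)) for r, c, p in split_into_patches(image, 2)]
mask = stitch(predicted, 4, 4)
print(mask)
```

Because each patch carries its offset, the stitching step does not depend on the order in which patches were processed.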

Common challenges in implementing semantic segmentation

Implementing semantic segmentation in real-world scenarios presents several challenges. One major issue is the domain gap, where models trained in simulated environments struggle to generalize to real-world conditions. Training data limitations also pose problems, as simulation images often contain artifacts that reduce accuracy. Environmental variability, such as motion blur, dynamic lighting, and unpredictable changes, further complicates segmentation tasks.

To address these challenges, you can leverage techniques like generative pre-trained models, which improve generalization. Additionally, using diverse datasets and robust pre-processing methods can mitigate the impact of environmental variability. Overcoming these hurdles is essential for deploying semantic segmentation models effectively in practical applications.

Importance of Semantic Segmentation in Machine Vision

Why pixel-level understanding is critical for machine vision

Pixel-level understanding forms the foundation of modern machine vision systems. By analyzing each pixel, you enable machines to interpret images with unparalleled precision. This capability is essential for tasks like material classification, where hyperspectral cameras capture detailed spectral data for every pixel. Such data allows for accurate segmentation of materials, outperforming traditional imaging methods.

Machine vision software relies on pixel data to perform operations like edge detection and grayscale analysis. These processes depend on gradients between neighboring pixels, which help identify object boundaries. Proper lighting and advanced optics further enhance the accuracy of these systems. With new image sensors capturing broader light spectrums, pixel-level analysis continues to evolve, driving advancements in segmentation.
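As a rough illustration of how gradients between neighboring pixels reveal a boundary, the sketch below computes a central-difference gradient magnitude on a tiny grayscale grid. It is a simplified stand-in for the edge detectors used in machine vision software, and it leaves border pixels at zero for brevity.

```python
import math

def gradient_magnitude(gray):
    """Central-difference gradient magnitude for interior pixels; borders stay 0."""
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (gray[y][x + 1] - gray[y][x - 1]) / 2   # horizontal change
            gy = (gray[y + 1][x] - gray[y - 1][x]) / 2   # vertical change
            out[y][x] = math.hypot(gx, gy)
    return out

# Dark region on the left, bright region on the right: a vertical edge.
gray = [
    [0, 0, 100, 100],
    [0, 0, 100, 100],
    [0, 0, 100, 100],
    [0, 0, 100, 100],
]

edges = gradient_magnitude(gray)
print(edges[1])
```

The interior pixels on either side of the dark-to-bright transition get a large response, while a uniform image would produce zeros everywhere.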

The role of semantic segmentation in 2025

In 2025, semantic segmentation plays a transformative role in computer vision. AI-assisted annotation reduces dataset creation time by up to 70%, accelerating the development of segmentation models. Transformer-based architectures, such as Meta AI’s Segment Anything Model, improve segmentation accuracy with minimal human input. Self-supervised learning enables models to learn from unlabeled data, reducing the need for extensive manual labeling. These innovations make segmentation more accessible and efficient.

Synthetic data generation also contributes to this evolution. By creating realistic datasets, you can train segmentation models without relying heavily on real-world annotations. This approach ensures that segmentation systems remain robust across diverse applications, from autonomous vehicles to environmental monitoring.

Benefits of semantic segmentation in real-world applications

Semantic segmentation delivers significant benefits across industries. In healthcare, it enables precise tissue segmentation, aiding in disease diagnosis and treatment planning. For example, automatic segmentation of medical images helps doctors detect abnormalities and monitor organ growth. This capability improves the accuracy and efficiency of medical imaging.

In autonomous driving, segmentation enhances safety by providing detailed information about the vehicle’s surroundings. It identifies objects like pedestrians, vehicles, and road signs, enabling real-time decision-making. These benefits extend to other fields, such as agriculture and smart cities, where segmentation supports tasks like crop monitoring and urban planning. By adopting semantic segmentation, you unlock new possibilities for innovation and efficiency.

Applications of Semantic Segmentation

Autonomous vehicles: Enhancing safety and navigation

Semantic segmentation plays a critical role in the development of autonomous vehicles. By analyzing every pixel in an image, it enables vehicles to detect and classify objects in their surroundings with remarkable precision. This capability ensures that vehicles can identify pedestrians, road signs, and other vehicles, even in complex environments. For example, segmentation allows autonomous systems to create a segmentation mask that highlights obstacles, helping the vehicle navigate safely.

Out-of-distribution segmentation is one of the most significant challenges in this field. Vehicles must recognize unexpected obstacles, such as debris or animals, and respond appropriately. AI equipped with semantic segmentation addresses this challenge by combining data from multiple sensors, such as RGB cameras and LiDARs. These systems use data fusion to merge geometric information with semantic details, creating a comprehensive understanding of the environment.

| Aspect | Description |
|---|---|
| Perception System | Detects and classifies objects with a low false negative rate. |
| Technology Used | Utilizes fish-eye cameras and 360° LiDARs for complete coverage. |
| Data Fusion | Combines LiDAR and RGB camera data for enhanced perception. |
| Real-time Operation | Operates efficiently on limited hardware resources. |
| Robustness and Redundancy | Features parallel processing pipelines for increased reliability. |
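One common way to merge geometric and semantic information is to project LiDAR points into the camera image and read the class label at each projected pixel. The sketch below assumes an ideal pinhole camera with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`) and points already expressed in the camera frame. It ignores lens distortion, so a fish-eye camera would need a different projection model.

```python
def label_points(points, labels, fx, fy, cx, cy):
    """Attach a semantic label to each 3D point that projects inside the image.

    points: (x, y, z) tuples in the camera frame, with z pointing forward.
    labels: H x W grid of class indices from a segmentation model.
    """
    h, w = len(labels), len(labels[0])
    fused = []
    for x, y, z in points:
        if z <= 0:                      # behind the camera, cannot be seen
            continue
        u = int(round(fx * x / z + cx))  # pixel column
        v = int(round(fy * y / z + cy))  # pixel row
        if 0 <= u < w and 0 <= v < h:
            fused.append(((x, y, z), labels[v][u]))
    return fused

# Made-up 4 x 4 label map and intrinsics for illustration.
labels = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 2, 2],
    [2, 2, 2, 2],
]
points = [
    (0.0, 0.0, 4.0),     # straight ahead
    (-1.0, -1.0, 2.0),   # up and to the left
    (0.0, -2.0, 2.0),    # high above the optical axis
    (0.0, 0.0, -1.0),    # behind the camera, skipped
    (10.0, 0.0, 1.0),    # projects outside the image, skipped
]

fused = label_points(points, labels, fx=2.0, fy=2.0, cx=2.0, cy=2.0)
print(fused)
```

Each surviving point now carries both its 3D position and a class label, which is the kind of combined representation a planner can use to tell an obstacle from drivable road.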

These advancements make autonomous vehicles safer and more reliable. By leveraging semantic segmentation, you can ensure that vehicles operate effectively in real-world conditions, reducing accidents and improving navigation.

Healthcare: Revolutionizing medical imaging and diagnostics

In healthcare, semantic segmentation has transformed medical imaging by enabling precise identification of tissues and abnormalities. This technology allows you to analyze medical images at a pixel level, creating segmentation masks that highlight critical areas such as tumors or lesions. For instance, in brain tumor segmentation, deep learning models achieve a Dice Similarity Coefficient of 93%, significantly outperforming traditional methods.

| Image Segmentation Task | Deep Learning Accuracy | Traditional Methods Accuracy |
|---|---|---|
| Brain Tumor Segmentation | 93% Dice Similarity Coefficient | 87% Dice Similarity Coefficient |
| Lung Nodule Segmentation | 92% Intersection over Union | 84% Intersection over Union |
| Cell Nuclei Segmentation | 85% F1 Score | 77% F1 Score |
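The table above reports three different metrics, so it helps to see how they relate. For a binary mask, the Dice Similarity Coefficient is the same quantity as the F1 score, and it is always at least as large as IoU for the same prediction. The sketch below computes Dice and IoU from two small made-up masks; the masks are illustrative and unrelated to the figures in the table.

```python
def dice_and_iou(pred, truth):
    """Dice coefficient and IoU for two binary masks (grids of 0/1)."""
    tp = fp = fn = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1      # predicted and actually present
            elif p:
                fp += 1      # predicted but not present
            elif t:
                fn += 1      # present but missed
    union = tp + fp + fn
    if union == 0:           # both masks empty: treat as a perfect match
        return 1.0, 1.0
    dice = 2 * tp / (2 * tp + fp + fn)
    iou = tp / union
    return dice, iou

pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]

dice, iou = dice_and_iou(pred, truth)
print(round(dice, 3), iou)   # 0.667 0.5
```

The two are linked by Dice = 2 × IoU / (1 + IoU), so a Dice score and an IoU score should not be compared directly across rows.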

These improvements enhance diagnostic accuracy and treatment planning. Doctors can rely on segmentation to monitor organ growth, detect diseases early, and plan surgeries with greater confidence. By integrating semantic segmentation into medical imaging, you can revolutionize patient care and improve outcomes.

Robotics: Enabling precise object recognition and manipulation

Robotics relies on semantic segmentation for accurate object recognition and manipulation. Robots equipped with this technology can identify objects in cluttered environments and interact with them precisely. For example, segmentation masks help robots distinguish between tools and background elements, enabling efficient task execution.

Deep learning models, such as convolutional neural networks (CNNs), excel in this domain. They achieve higher mean Dice scores and superior Intersection over Union (IoU) compared to transformer-based models. Additionally, models trained with synthetic data demonstrate an average precision of 86.95%, comparable to those trained on real-world datasets.

| Metric | CNN Model Performance | Transformer Model Performance |
|---|---|---|
| Mean Dice Score | Higher | Lower |
| IoU Comparison | Superior | Inferior |
| p-value | < 0.001 | N/A |

- Models trained with synthetic data achieve high precision.

- Performance remains consistent across various industrial applications.

- Multi-metric analysis validates the effectiveness of segmentation in robotics.

By using semantic segmentation, you can enhance the capabilities of robots, making them more efficient and versatile in tasks like assembly, inspection, and maintenance.

Other industries: Agriculture, retail, and smart cities

Semantic segmentation is transforming industries beyond healthcare, robotics, and autonomous vehicles. Its ability to analyze images at the pixel level opens new possibilities in agriculture, retail, and smart cities. By adopting segmentation, you can unlock innovative solutions that improve efficiency and decision-making.

Agriculture: Precision farming for healthier crops

In agriculture, segmentation enables precision farming by analyzing crop health at a granular level. You can use computer vision systems equipped with segmentation to monitor plant growth, detect diseases, and assess soil conditions. For example, segmentation maps highlight areas of pest infestation, allowing farmers to target treatments effectively. This reduces chemical usage and boosts crop yields.

Drones equipped with hyperspectral cameras further enhance precision farming. These drones capture detailed images of fields, and segmentation algorithms process the data to identify stressed plants or nutrient deficiencies. This technology empowers you to make informed decisions, ensuring healthier crops and sustainable farming practices.
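Once a field image has been segmented, turning the label map into an actionable number is straightforward. This sketch assumes a hypothetical three-class scheme (healthy plants, stressed plants, bare soil) and reports the share of pixels in each class.

```python
from collections import Counter

HEALTHY, STRESSED, SOIL = 0, 1, 2   # hypothetical class indices

def class_coverage(labels):
    """Return the fraction of pixels assigned to each class in a label map."""
    counts = Counter(value for row in labels for value in row)
    total = sum(counts.values())
    return {cls: n / total for cls, n in counts.items()}

# Made-up label map for a small patch of field.
field = [
    [HEALTHY, HEALTHY, STRESSED, SOIL],
    [HEALTHY, STRESSED, STRESSED, SOIL],
]

coverage = class_coverage(field)
print(coverage[STRESSED])   # 0.375
```

Tracking a figure like the stressed-plant share over successive drone flights gives a simple signal for where to direct treatment.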

Tip: Integrating segmentation with IoT sensors can provide real-time updates on soil moisture and weather conditions, helping you optimize irrigation and planting schedules.

Retail: Enhancing customer experience and inventory management

Retailers use segmentation to improve customer analytics and inventory management. By analyzing video feeds, segmentation identifies customer behavior patterns, such as foot traffic and product interactions. This data helps you optimize store layouts and marketing strategies. For instance, segmentation maps can reveal which shelves attract the most attention, guiding you to place high-demand products strategically.

In inventory management, segmentation automates stock monitoring. Cameras equipped with segmentation algorithms track inventory levels and detect misplaced items. This reduces manual labor and ensures accurate stock counts. You can also use segmentation to enhance security by identifying suspicious activities in real-time, protecting assets and improving customer safety.

Callout: Retailers adopting segmentation report a 25% increase in operational efficiency and a 15% boost in customer satisfaction.

Smart Cities: Building safer and more efficient urban environments

Smart cities leverage segmentation to address challenges in urban development and infrastructure management. By analyzing aerial images, segmentation detects structural anomalies in buildings, bridges, and roads. This helps you identify areas requiring maintenance, ensuring public safety.

Traffic management systems also benefit from segmentation. Cameras equipped with segmentation algorithms monitor vehicle flow and pedestrian movement, enabling you to optimize traffic signals and reduce congestion. Additionally, segmentation supports waste management by identifying overflowing bins and guiding collection routes.

| Industry | Application | Impact Description |
|---|---|---|
| Agriculture | Precision farming | Utilizes segmentation for crop health assessment. |
| Retail | Inventory management | Adopts segmentation for automated stock monitoring and customer analytics. |
| Smart Cities | Urban development | Harnesses segmentation to detect structural anomalies. |

By integrating segmentation into smart city initiatives, you can create safer, cleaner, and more efficient urban environments. This technology empowers city planners to make data-driven decisions, improving the quality of life for residents.

Unlocking cross-industry potential

Semantic segmentation continues to redefine industries by offering innovative solutions to complex challenges. Whether you’re a farmer, retailer, or urban planner, segmentation provides tools to enhance productivity and decision-making. As datasets for semantic segmentation improve, the technology is becoming more accessible, driving adoption across diverse sectors.

Latest Advancements in Semantic Segmentation (2025)

Cutting-edge models and architectures

Recent advancements in semantic segmentation machine vision systems have introduced hybrid architectures that combine Convolutional Neural Networks (CNNs) and Vision Transformers (ViTs). These models excel in handling low-contrast images, making them particularly effective in fields like medical diagnostics. Attention mechanisms and multi-scale feature extraction techniques further enhance segmentation accuracy by focusing on critical regions and capturing details across different scales. For example, MedSAM, a model pretrained on 1.5 million medical images, has significantly improved automated diagnostics by addressing data scarcity.

Despite these innovations, challenges remain. High computational demands and limited access to diverse datasets hinder the training and generalization of these models. However, ongoing research continues to refine these architectures, ensuring they remain robust and efficient for real-world applications.

| Metric | Description |
|---|---|
| Intersection over Union (IoU) | Measures the overlap between predicted segmentation and ground truth, providing a quantitative assessment of segmentation accuracy. |
| Pixel Accuracy | Calculates the percentage of correctly classified pixels, offering a simple yet effective measure of overall model performance. |
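The two metrics can disagree sharply when one class dominates the image. The sketch below computes both on a made-up example where a model predicts only the background class. It is written with plain lists for clarity; the helper names are illustrative.

```python
def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted class matches the ground truth."""
    pairs = [(p, t) for p_row, t_row in zip(pred, truth) for p, t in zip(p_row, t_row)]
    return sum(p == t for p, t in pairs) / len(pairs)

def mean_iou(pred, truth, num_classes):
    """Average per-class IoU over the classes that appear in pred or truth."""
    inter = [0] * num_classes
    union = [0] * num_classes
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p == t:
                inter[p] += 1
                union[p] += 1
            else:            # a mismatch enlarges the union of both classes
                union[p] += 1
                union[t] += 1
    ious = [i / u for i, u in zip(inter, union) if u]
    return sum(ious) / len(ious)

# Ground truth has one small object (class 1); the prediction misses it entirely.
truth = [[0, 0, 0, 0],
         [0, 0, 0, 1]]
pred = [[0, 0, 0, 0],
        [0, 0, 0, 0]]

print(pixel_accuracy(pred, truth))   # 0.875
print(mean_iou(pred, truth, 2))      # 0.4375
```

Pixel accuracy looks respectable even though the object was never found, while mean IoU drops because the missed class contributes an IoU of zero. This is one reason IoU-based scores are usually preferred for imbalanced scenes.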

New datasets driving innovation

New datasets are revolutionizing semantic segmentation by addressing specific challenges in machine vision. For instance, a synthetic dataset designed for human body segmentation enables the extraction of anthropometric data. This dataset accurately represents clothing and body silhouettes, and models trained on it perform well when tested against real-world images. Similarly, a synthetic dataset for waterbody segmentation tackles out-of-distribution behavior. Models trained on this dataset demonstrate a strong correlation with real-world performance, proving its effectiveness in predicting environmental scenarios.

These datasets not only improve segmentation accuracy but also reduce the reliance on extensive manual labeling. By leveraging synthetic data, you can train semantic segmentation machine vision systems to handle diverse and complex tasks with greater efficiency.
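The appeal of synthetic data is that the label comes for free: the generator knows exactly which pixels belong to the object it drew. The toy generator below is an assumption-laden sketch, not a description of the datasets mentioned above. It draws one bright rectangle on a dark, noisy background and returns the image together with its exact mask.

```python
import random

def synthetic_sample(height, width, seed):
    """Return (image, mask): a bright rectangle on a dark noisy background.

    The mask is 1 exactly where the rectangle was drawn, so no manual
    annotation is needed.
    """
    rng = random.Random(seed)            # seeded for reproducibility
    image = [[rng.randint(0, 60) for _ in range(width)] for _ in range(height)]
    mask = [[0] * width for _ in range(height)]
    top = rng.randint(0, height - 2)
    left = rng.randint(0, width - 2)
    bottom = rng.randint(top + 1, height - 1)
    right = rng.randint(left + 1, width - 1)
    for y in range(top, bottom + 1):
        for x in range(left, right + 1):
            image[y][x] = rng.randint(180, 255)
            mask[y][x] = 1
    return image, mask

image, mask = synthetic_sample(8, 8, seed=0)
for row in mask:
    print(row)
```

Realistic synthetic datasets rely on 3D rendering or generative models rather than rectangles, but the principle of producing pixel-perfect labels alongside each image is the same.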

Trends shaping the future of semantic segmentation

In 2025, several trends are shaping the future of semantic segmentation. Self-supervised learning is gaining traction, allowing models to learn from unlabeled data and reducing the need for costly annotations. Transformer-based architectures, such as those used in hybrid models, are becoming more prevalent due to their ability to process global image contexts. Additionally, synthetic data generation continues to expand, providing high-quality datasets for training segmentation models.

Another emerging trend is the integration of semantic segmentation with edge computing. This approach enables real-time processing on devices with limited hardware, making segmentation more accessible for applications like autonomous vehicles and robotics. As these trends evolve, they promise to make semantic segmentation machine vision systems more powerful and versatile.

Semantic segmentation has become a cornerstone of machine vision, enabling you to achieve pixel-level precision in image analysis. Its impact spans industries, from healthcare to autonomous vehicles, revolutionizing how machines interpret and interact with the world. In 2025, advancements like self-supervised learning and edge computing have made this technology more accessible and efficient.

As you look ahead, expect semantic segmentation to unlock new possibilities. From smarter cities to personalized healthcare, its potential to reshape industries remains limitless. By embracing this innovation, you can drive progress and solve complex challenges with confidence.

FAQ

1. What is the difference between semantic segmentation and image classification?

Image classification assigns a single label to an entire image. Semantic segmentation, on the other hand, labels every pixel in the image based on its class. This pixel-level precision allows you to analyze specific regions or objects within a scene.

2. Why is semantic segmentation important for autonomous vehicles?

Semantic segmentation helps autonomous vehicles understand their surroundings. It identifies objects like pedestrians, vehicles, and road signs at a pixel level. This detailed understanding ensures safer navigation and better decision-making in real-time driving scenarios.

3. Can semantic segmentation work with limited training data?

Yes, modern techniques like self-supervised learning and synthetic data generation allow models to perform well with limited labeled data. These methods help you train segmentation systems efficiently while reducing the need for extensive manual annotations.

4. How does semantic segmentation improve medical imaging?

Semantic segmentation highlights critical areas in medical images, such as tumors or organs. This precision helps doctors diagnose diseases, plan treatments, and monitor patient progress. For example, it can segment brain tumors with high accuracy, improving diagnostic confidence.

5. What industries benefit the most from semantic segmentation?

Industries like healthcare, autonomous vehicles, agriculture, and robotics benefit greatly. For instance, it aids in medical diagnostics, enhances vehicle safety, supports precision farming, and enables robots to recognize and manipulate objects accurately.

Tip: Explore how semantic segmentation can solve challenges in your industry by leveraging its pixel-level precision and adaptability.
