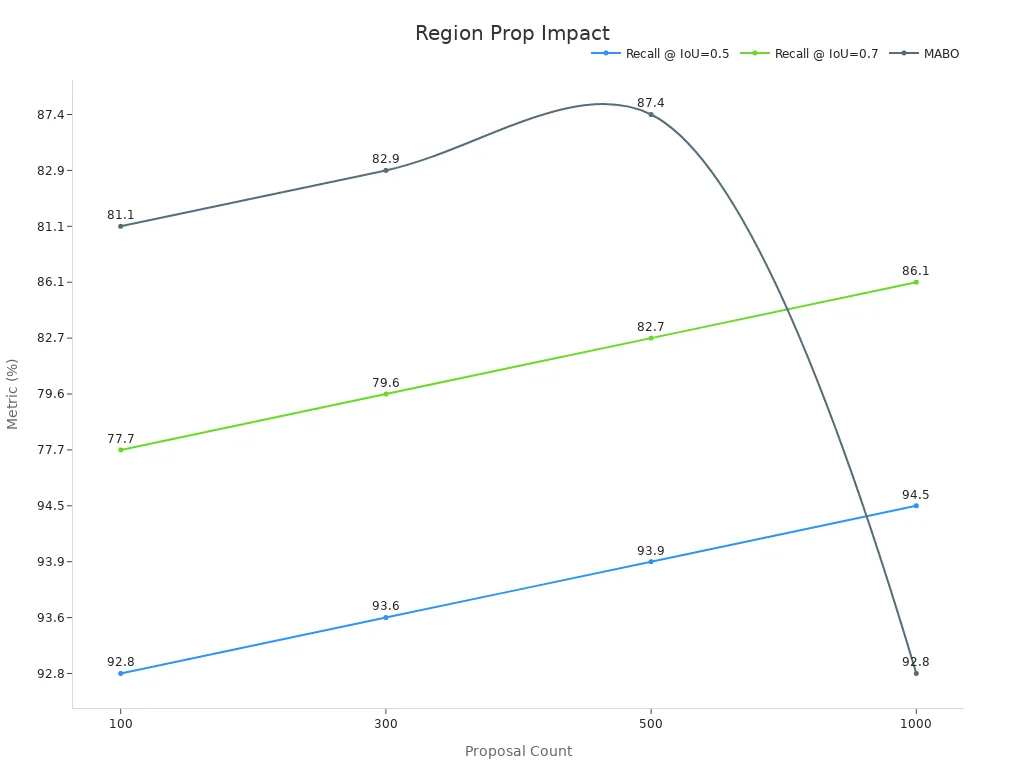

A region proposal system in machine vision identifies areas of an image that are likely to contain an object. Object detection models use these areas to focus on promising locations, which improves both speed and accuracy. Instead of examining every possible position, the system selects a small set of candidate regions. A librarian searching for a book works the same way, checking only the shelves labeled with the right subject rather than every page in the library. The following chart shows that with 100 proposals the system achieves a recall of 92.8% at IoU = 0.5, nearly matching the recall obtained with 1,000 proposals. Far fewer checks are needed to keep detection quality high.
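The recall figure above counts how many ground-truth objects are covered by at least one proposal with IoU ≥ 0.5. A minimal sketch of that metric for a single image, assuming boxes are `(x1, y1, x2, y2)` tuples and proposals arrive sorted by score (best first). The names `iou` and `recall_at_k` are illustrative, and benchmark figures such as 92.8% are pooled over a whole dataset rather than computed per image:

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def recall_at_k(proposals: Sequence[Box], gt_boxes: Sequence[Box],
                k: int, iou_thresh: float = 0.5) -> float:
    """Share of ground-truth boxes matched by at least one of the top-k
    proposals (hypothetical helper; proposals must be sorted best-first)."""
    if not gt_boxes:
        return 0.0
    top = proposals[:k]
    hits = sum(1 for g in gt_boxes if any(iou(p, g) >= iou_thresh for p in top))
    return hits / len(gt_boxes)


gt = [(10, 10, 50, 50), (60, 60, 100, 100)]
props = [(12, 12, 48, 52), (0, 0, 5, 5), (58, 62, 98, 100)]
print(recall_at_k(props, gt, k=1))  # 0.5: only the first object is covered
print(recall_at_k(props, gt, k=3))  # 1.0: the third proposal covers the second object
```

Raising `k` can only keep recall the same or increase it, which is why the interesting question is how small `k` can be before recall drops.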

Key Takeaways

- Region proposal systems help object detection models find likely object areas quickly, improving both speed and accuracy.

- Anchor boxes and Intersection over Union (IoU) work together to identify and refine candidate regions for better object localization.

- Deep learning advances, like Region Proposal Networks (RPNs), make region proposals faster and more precise than traditional methods.

- Efficient region proposals reduce computational costs, enabling real-time object detection in applications like self-driving cars and security cameras.

- Techniques like ROI pooling and bounding box regression further enhance detection accuracy while keeping processing efficient.

Region Proposal Machine Vision System

What Is a Region Proposal?

A region proposal is a candidate area in an image that likely contains an object. The region proposal machine vision system uses these candidate regions to focus on promising parts of the image. This approach reduces the need to search every pixel or location. Instead, the system selects a smaller set of regions that have a high chance of containing objects.

The technical process behind a learned region proposal system, such as the Region Proposal Network (RPN) in Faster R-CNN, involves several steps:

- The system uses a fully convolutional network to analyze feature maps from a base convolutional neural network.

- It places anchor boxes of different sizes and shapes at each point on the feature map. These anchor boxes act like sliding windows, scanning for possible objects.

- For each anchor box, the network predicts if it contains an object (foreground) or not (background). It also adjusts the box to better fit the object.

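- The system uses a metric called Intersection over Union (IoU) to decide whether an anchor box matches a real object. In the original Faster R-CNN, anchors with an IoU above 0.7 against any ground-truth box are labeled foreground, and anchors below 0.3 for every ground-truth box are labeled background.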

- The network combines two types of loss: one for classifying boxes as object or background, and one for refining the box coordinates.

- The final output is a set of refined boxes, called region proposals, which are then passed to the next stage for detailed object detection.
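The anchor placement step can be sketched in a few lines. This is a minimal sketch, assuming a backbone stride of 16 pixels and the three sizes (128, 256, 512) and three aspect ratios (0.5, 1, 2) used in the original Faster R-CNN. The function name `make_anchors` is hypothetical, and centering anchors on cell midpoints simplifies the rounding used in the original implementation:

```python
import math
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels


def make_anchors(feat_h: int, feat_w: int, stride: int = 16,
                 sizes=(128, 256, 512), ratios=(0.5, 1.0, 2.0)) -> List[Box]:
    """Place len(sizes) * len(ratios) anchors on every feature-map cell.

    Each anchor keeps the area size * size, and its height / width equals r.
    """
    anchors: List[Box] = []
    for row in range(feat_h):
        for col in range(feat_w):
            # Centre of this feature-map cell, mapped back to image pixels.
            cx = (col + 0.5) * stride
            cy = (row + 0.5) * stride
            for size in sizes:
                for r in ratios:
                    w = size / math.sqrt(r)
                    h = size * math.sqrt(r)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


anchors = make_anchors(feat_h=40, feat_w=60)
print(len(anchors))  # 21600 = 40 * 60 positions * 9 anchors
```

A 40 × 60 feature map, roughly what a stride-16 backbone produces for a 640 × 960 image, already yields 21,600 anchors; the network scores all of them and passes on only a small fraction as proposals.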

Region proposal algorithms like Selective Search, Edge Boxes, and Region Proposal Networks (RPNs) help the region proposal machine vision system generate a manageable number of candidate regions. This process makes detection faster and more accurate.

Why Region Proposals Matter

Region proposals are central to two-stage detectors. They direct the system to the areas most likely to contain objects, which improves both speed and accuracy. By narrowing the search space, the system avoids wasting computation on empty or irrelevant parts of the image.

Research shows that pre-training the region proposal network module reduces localization errors in multi-stage detectors. This targeted training leads to better performance, especially when labeled data is limited. Including the region proposal network in pre-training improves the accuracy of finding object locations, which boosts overall detection results.

The impact of region proposals can be seen in several ways:

- Region proposal networks are essential in models like Mask R-CNN, which need precise object localization.

- Newer models show measurable gains in accuracy. For example, DI-MaskDINO achieves higher average precision for both bounding boxes and masks on popular datasets.

- Frustum Voxnet V2 improves detection accuracy by 11% on RGBD images compared to earlier versions.

- Benchmark datasets such as MS COCO and Cityscapes report higher Intersection over Union (IoU) scores when using region proposal mechanisms.

- Metrics like Average Precision (AP) and Panoptic Quality (PQ) show that models using region proposals outperform traditional object detection systems.

| Model | Accuracy (mAP) | Speed (FPS) | Notes |
|---|---|---|---|
| Faster R-CNN (300 proposals) | Highest | 1 | Best accuracy with 300 region proposals. |
| SSD on MobileNet | Highest among the fastest models | Real-time | Optimized for real-time processing. |
| R-FCN | Good balance | N/A | Balances accuracy and speed effectively. |
| Faster R-CNN (50 proposals) | Similar to 300 proposals | N/A | Performs well even with 50 proposals. |
| Ensemble Model | 41.3% | N/A | Top entry in 2016 COCO challenge. |

This table shows that two-stage detectors like Faster R-CNN, which rely on region proposals, achieve the highest accuracy, although at low frame rates. Reducing the number of proposals from 300 to 50 keeps accuracy similar while cutting computation.

Region proposals also reduce computational cost. Because only promising regions are classified, two-stage detectors move much closer to real-time speeds; the original Faster R-CNN reported about 5 frames per second on a GPU with a VGG-16 backbone. Focusing on likely object locations also increases precision and reduces false positives. Evaluations on datasets like PASCAL VOC and ILSVRC show that Fast R-CNN and Faster R-CNN improve both speed and accuracy over the original R-CNN. These improvements highlight the importance of region proposals in modern object detection.

Object Detection Challenges

Exhaustive Search Limitations

Exhaustive search methods try every possible option to find objects in an image. This approach works for simple cases but quickly becomes a problem as images get more complex. The number of candidate windows grows rapidly with image size and with the number of scales and aspect ratios the system considers. This makes exhaustive search slow and hard to use for real-world object detection.
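The growth in candidate windows is easy to quantify. A quick sketch, assuming integer pixel coordinates: every axis-aligned box is fixed by choosing two of the W + 1 vertical grid lines and two of the H + 1 horizontal ones, while one sliding-window pass with a single window size visits far fewer positions. The helper names are illustrative:

```python
import math


def count_all_boxes(width: int, height: int) -> int:
    """Distinct axis-aligned boxes with integer corners in a width x height image."""
    return math.comb(width + 1, 2) * math.comb(height + 1, 2)


def count_sliding_windows(width: int, height: int, win: int, stride: int) -> int:
    """Positions visited by one square window of side `win` moved by `stride`."""
    return ((width - win) // stride + 1) * ((height - win) // stride + 1)


print(f"{count_all_boxes(640, 480):,}")                    # 23,679,052,800
print(count_sliding_windows(640, 480, win=64, stride=8))  # 3869 for one scale and shape
```

Every additional window size or aspect ratio adds another pass of similar size, which is why exhaustive and sliding-window search scale poorly compared with a few hundred proposals.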

| Evidence Aspect | Explanation |
|---|---|
| Exponential search space | Exhaustive methods like grid search become impractical as the number of hyperparameters grows, leading to very large search spaces. |
| Computational cost | High computational resources are required, making exhaustive search inefficient for complex models and high-dimensional data. |
| Alternative methods | Random search and Bayesian optimization offer more efficient and resource-aware tuning approaches. |
| Deployment constraints | Resource-efficient methods enable tuning on devices with limited processing power, such as mobile phones or virtual reality headsets. |

Grid search, a common exhaustive method, checks every combination of settings. This works for small models but becomes too slow for modern object detection algorithms. Random search can find good solutions faster by picking settings at random. Studies show that random search often matches or beats grid search in less time. As a result, most object detection systems use smarter search methods to save time and power.

Need for Efficient Localization

Efficient localization helps object detection systems find objects quickly and accurately. Many real-world tasks, such as self-driving cars, security cameras, and image search, depend on fast and correct object detection. Early object detection algorithms used sliding windows, which checked every part of the image. This method was slow and used a lot of computer power.

- Efficient localization is important for safety and usability in real-world applications.

- Sliding window detectors are slow and need better solutions.

- Region-based CNNs improve accuracy but still use a lot of resources.

- Single-shot detectors like SSD and YOLO work faster but may lose some accuracy.

- Metrics such as precision, recall, mean Average Precision (mAP), and Frames Per Second (FPS) show the need to balance speed and accuracy.

- Top detectors now reach 20–30 FPS on high-resolution images, showing the demand for efficient localization.

A new 3D object detection method using RGBD cameras can process each frame in just 20 milliseconds. It finds object positions with high accuracy, even when computer resources are limited. This shows that efficient localization is not just helpful but necessary for modern object detection systems.

Evolution of Region Proposal

Traditional Methods

Early region proposal algorithms used hand-crafted features and simple rules to find objects in images. These methods often relied on sliding windows or Selective Search, which groups similar pixels to suggest possible object locations. Detection was slow because, in R-CNN, the CNN had to process each of roughly 2,000 proposals per image separately. Researchers improved efficiency by developing new frameworks and combining different techniques.

Some important traditional approaches include:

- R-CNN and Selective Search: The CNN processed each region proposal separately, which took a lot of time.

- SPPNet: This method processed the image with a convolutional neural network only once, but training was still complex.

- Fast R-CNN: This approach used RoI pooling to speed up detection, but it still depended on selective search for proposals.

Many studies built on these ideas. For example, Yang et al. used Fast R-CNN for ship identification. Yao et al. combined deep neural networks with region proposal networks to detect ships. Chae et al. designed a fast detection method based on ResNet. Other researchers improved detection by using fully convolutional networks, better bounding box methods, and new ways to combine features.

These traditional region proposal algorithms set the foundation for modern object detection. They showed that focusing on promising regions could improve both speed and accuracy.

Deep Learning Advances

Deep learning changed how region proposal algorithms work. Modern CNN models learn to generate proposals directly from data. Faster R-CNN introduced the Region Proposal Network, which produces proposals much faster than Selective Search while keeping accuracy high. Feature Pyramid Networks improved detection of small objects by using multi-scale feature maps.

The table below summarizes reported improvements in deep learning detectors, including both proposal-based and proposal-free models:

| Model / Metric | Improvement / Result |
|---|---|
| YOLOv10 | 1.4% increase in average precision; 46% latency reduction |
| YOLOv5 (improved) | mAP increased from 0.349 to 0.622; accuracy 0.865 |
| YOLO-MECD | +3.9 mAP; +0.2 precision; +4.1 recall; 75.6% fewer parameters; 74.4% smaller model |
| Faster R-CNN (RPN) | State-of-the-art accuracy but lower FPS than one-stage detectors |
| Bounding Box Regression | Anchor-free methods and optimization reduce errors |
| IoU Metrics | Adaptive thresholds improve detection quality |

Deep learning has made object detectors more accurate and efficient. For example, recent YOLO models achieve higher precision and recall while using less memory and running faster. Two-stage detectors like Faster R-CNN still offer top accuracy, but one-stage detectors such as YOLO and SSD run faster by skipping the explicit proposal stage. Improvements in bounding box regression and IoU metrics help reduce errors and boost detection quality. These advances allow CNN-based systems to handle real-world tasks with greater speed and reliability.

Region Proposal Network (RPN)

How RPNs Work

A Region Proposal Network (RPN) helps a CNN find objects in images quickly and accurately. The RPN is fully convolutional and shares its convolutional features with the main detection network, so generating proposals adds little extra computation.

The process starts when the CNN creates a feature map from the input image. A small sliding window (3×3 in the original Faster R-CNN) moves across this feature map. At each position, the RPN evaluates several anchor boxes of different sizes and shapes, nine by default (three scales times three aspect ratios). Like nets of different sizes, these anchors can catch objects of many sizes and shapes.

For each anchor box, the network predicts if it contains an object or just background. It also adjusts the box to fit the object better. The region proposal network uses a combined loss function. This function helps the network learn to classify boxes and refine their positions at the same time.
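The combined loss can be written out directly. This is a minimal sketch, assuming a log loss for the objectness score over the sampled anchors and a smooth L1 loss for the four box offsets on positive anchors only, as in Faster R-CNN. The normalization (regression averaged over positive anchors) and the weight `lam` are assumptions, since implementations differ, and `rpn_loss` is a hypothetical name:

```python
import math
from typing import Sequence


def smooth_l1(x: float, beta: float = 1.0) -> float:
    """Quadratic near zero, linear beyond beta; robust to large box errors."""
    ax = abs(x)
    return 0.5 * ax * ax / beta if ax < beta else ax - 0.5 * beta


def rpn_loss(obj_probs: Sequence[float], labels: Sequence[int],
             pred_deltas: Sequence[Sequence[float]],
             target_deltas: Sequence[Sequence[float]],
             lam: float = 1.0, eps: float = 1e-7) -> float:
    """Objectness log loss plus lam * box regression loss.

    labels holds 1 (foreground) or 0 (background) for sampled anchors only;
    ignored anchors must be filtered out before calling.
    """
    cls = 0.0
    for p, y in zip(obj_probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        cls -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    cls /= len(labels)

    reg = 0.0
    for y, t, t_star in zip(labels, pred_deltas, target_deltas):
        if y == 1:  # background anchors have no box to regress to
            reg += sum(smooth_l1(a - b) for a, b in zip(t, t_star))
    reg /= max(1, sum(labels))
    return cls + lam * reg


loss = rpn_loss(obj_probs=[0.9, 0.2], labels=[1, 0],
                pred_deltas=[(0.1, 0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 0.0)],
                target_deltas=[(0.0, 0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 0.0)])
print(round(loss, 4))  # 0.1693
```

Training both terms together is what lets a single network learn to score anchors and refine their positions at the same time.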

The output is a set of region proposals, which go to the next stage for more detailed object detection. The RPN typically keeps about 300 proposals per image at test time, after non-maximum suppression, with very little extra computation. This design brings two-stage detection much closer to real time while keeping accuracy high.
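Selecting the final proposals is usually done with greedy non-maximum suppression (NMS) followed by a top-N cut. A minimal sketch, assuming boxes have already been refined and scored; the 0.7 IoU threshold and N = 300 follow the original Faster R-CNN test setting, and `select_proposals` is a hypothetical name:

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def select_proposals(boxes: Sequence[Box], scores: Sequence[float],
                     iou_thresh: float = 0.7, top_n: int = 300) -> List[int]:
    """Greedy NMS: visit boxes by descending score, keep a box only if it does
    not overlap an already kept box by more than iou_thresh, stop at top_n."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if len(keep) >= top_n:
            break
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (0, 1, 10, 10), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(select_proposals(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with IoU 0.9
```

Production implementations vectorize this step on the GPU, but the keep-or-discard logic is the same.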

Experimental results show that a hierarchical ternary classification region proposal network improves the detection of new and unlabeled objects. This method works well even when there is not much training data. Tests on COCO and PASCAL VOC datasets show that this improved region proposal network outperforms older methods, especially in few-shot object detection.

The steps below summarize how region proposal networks work:

- The CNN processes the image to create a feature map.

- A sliding window moves over the feature map.

- At each location, the network generates anchor boxes of different sizes and shapes.

- Each anchor box gets a score for objectness and a refined position.

- The network uses a combined loss to train both classification and box adjustment.

- The final proposals go to the detection network for further analysis.

Anchor Boxes and IoU

Anchor boxes are a key part of the region proposal network. They let the network guess where objects might be before it knows what the objects are. Each anchor box has a fixed size and shape. The network places many anchor boxes at each position on the feature map so that it can find objects of different sizes and shapes.

The region proposal network uses Intersection over Union (IoU) to measure how well an anchor box matches a real object. IoU divides the area where the anchor and the ground-truth box overlap by the area of their union, giving a value from 0 (no overlap) to 1 (identical boxes). A higher IoU means a better match. The network uses IoU to decide which anchor boxes count as positive matches during training.
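The IoU calculation takes only a few lines. A minimal sketch, assuming boxes are given as continuous `(x1, y1, x2, y2)` corner coordinates; some older implementations instead add 1 to widths and heights to treat coordinates as inclusive pixel indices:

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    # Corners of the intersection rectangle; it is empty if they cross.
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    # Union = both areas minus the doubly counted intersection.
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # about 0.333 (overlap 50 / union 150)
```

Two boxes that each cover half of the other still reach only one third, which is why thresholds such as 0.5 or 0.7 already demand a close fit.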

Empirical studies show that the number, size, and shape of anchor boxes affect detection accuracy. More anchor boxes usually lead to a higher mean IoU with the ground-truth boxes. When that mean IoU exceeds 0.5, the anchors already align well with real objects. Researchers often use clustering algorithms, like k-medoids, to fit anchor box sizes to the training data.
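Anchor clustering can be sketched with k-medoids and the distance d = 1 − IoU, where each box is reduced to its width and height and compared as if both boxes shared a center (the same idea YOLOv2 used with k-means). This is an illustrative sketch, not the procedure of any particular study; `k_medoids_anchors` and `wh_iou` are hypothetical names, and the alternating update is the simplest k-medoids variant:

```python
import random
from typing import List, Sequence, Tuple

WH = Tuple[float, float]  # (width, height); box position is ignored


def wh_iou(a: WH, b: WH) -> float:
    """IoU of two boxes placed on the same centre, so only their shapes matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def k_medoids_anchors(sizes: Sequence[WH], k: int, iters: int = 20,
                      seed: int = 0) -> List[WH]:
    """Cluster box shapes with distance 1 - IoU; return k medoid (w, h) anchors."""
    rng = random.Random(seed)
    medoids = rng.sample(list(sizes), k)
    for _ in range(iters):
        # Assignment step: each box joins the medoid it overlaps most.
        clusters: List[List[WH]] = [[] for _ in range(k)]
        for s in sizes:
            best = max(range(k), key=lambda j: wh_iou(s, medoids[j]))
            clusters[best].append(s)
        # Update step: the member with the smallest summed distance becomes the medoid.
        new_medoids = [
            min(c, key=lambda m: sum(1 - wh_iou(m, o) for o in c)) if c else medoids[j]
            for j, c in enumerate(clusters)
        ]
        if new_medoids == medoids:
            break
        medoids = new_medoids
    return sorted(medoids)


sizes = [(10, 10), (12, 11), (9, 10), (100, 50), (110, 55), (95, 48)]
print(k_medoids_anchors(sizes, k=2))  # [(10, 10), (100, 50)]
```

Using 1 − IoU rather than Euclidean distance keeps large boxes from dominating the clustering, and medoids are always real box shapes from the training set.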

Benchmark tests on the SeaDronesSee dataset reveal that anchor box optimization alone does not always improve detection. The best results come when anchor boxes work together with feature pyramid networks. This combination helps the region proposal network detect objects at different scales. Layer-wise anchor box optimization for each level of the feature pyramid further boosts accuracy.

During training, setting the right IoU threshold is important. A low threshold may accept poor matches, which lowers accuracy. A high threshold may miss true objects, which lowers recall. The region proposal network must balance these settings to get the best results.
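The labeling rule can be sketched as follows, assuming the Faster R-CNN thresholds (IoU above 0.7 is foreground, below 0.3 is background, anything in between is ignored) plus its extra rule that the best-matching anchor for each ground-truth box is always foreground. `label_anchors` is a hypothetical name, and promoting only the first anchor among ties is a simplification:

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_anchors(anchors: Sequence[Box], gt_boxes: Sequence[Box],
                  pos_thresh: float = 0.7, neg_thresh: float = 0.3) -> List[int]:
    """Label each anchor 1 (foreground), 0 (background) or -1 (ignored)."""
    if not anchors:
        return []
    # ious[a][g] is the overlap between anchor a and ground-truth box g.
    ious = [[iou(a, g) for g in gt_boxes] for a in anchors]
    labels = []
    for row in ious:
        best = max(row, default=0.0)
        if best > pos_thresh:
            labels.append(1)
        elif best < neg_thresh:
            labels.append(0)
        else:
            labels.append(-1)  # ambiguous overlap: excluded from the loss
    # Promote the best anchor of every object, even below pos_thresh, so no
    # ground-truth box is left without a positive training example.
    for g in range(len(gt_boxes)):
        best_a = max(range(len(anchors)), key=lambda a: ious[a][g])
        if ious[best_a][g] > 0.0:
            labels[best_a] = 1
    return labels


anchors = [(0, 0, 10, 10), (0, 0, 10, 6), (0, 0, 10, 2), (100, 100, 110, 110)]
gt = [(0, 0, 10, 10), (100, 100, 120, 120)]
print(label_anchors(anchors, gt))  # [1, -1, 0, 1]
```

The last anchor has an IoU of only 0.25 with the second object but is still labeled foreground because no other anchor covers that object better, which shows how the fallback rule protects recall when the threshold is high.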

A simple analogy helps explain anchor boxes and IoU. Imagine a fisherman using nets of different sizes to catch fish in a pond. Some nets fit small fish, while others fit big fish. The fisherman checks how much of each net covers a fish. The best nets are those that cover the fish the most. In the same way, the region proposal network uses anchor boxes and IoU to find the best matches for objects in an image.

Bounding Box Regression

Bounding box regression is a technique that helps the region proposal network adjust anchor boxes to fit objects more closely. For each anchor, the network predicts four small corrections: shifts of the box center, measured relative to the anchor's width and height, and log-scale changes to its width and height.

The region proposal network learns to make these adjustments during training. It uses a loss function that measures how close the predicted box is to the real object. Better bounding box regression leads to higher detection accuracy.
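The small changes to position and size can be made concrete with the box parameterization used in Faster R-CNN: center offsets scaled by the anchor's width and height, and log ratios of the sizes. A minimal sketch; `encode` turns a ground-truth box into regression targets, `decode` applies predicted offsets to an anchor, and both names are hypothetical. Real implementations often also scale the targets and clamp the size offsets before exponentiating, which this sketch omits:

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]     # (x1, y1, x2, y2)
Deltas = Tuple[float, float, float, float]  # (tx, ty, tw, th)


def _center_form(b: Box) -> Tuple[float, float, float, float]:
    """Convert corner coordinates to (center x, center y, width, height)."""
    w, h = b[2] - b[0], b[3] - b[1]
    return b[0] + 0.5 * w, b[1] + 0.5 * h, w, h


def encode(anchor: Box, gt: Box) -> Deltas:
    """Regression targets that would move `anchor` exactly onto `gt`."""
    ax, ay, aw, ah = _center_form(anchor)
    gx, gy, gw, gh = _center_form(gt)
    return ((gx - ax) / aw, (gy - ay) / ah, math.log(gw / aw), math.log(gh / ah))


def decode(anchor: Box, d: Deltas) -> Box:
    """Apply predicted deltas to an anchor and return corner coordinates."""
    ax, ay, aw, ah = _center_form(anchor)
    cx, cy = ax + d[0] * aw, ay + d[1] * ah
    w, h = aw * math.exp(d[2]), ah * math.exp(d[3])
    return (cx - 0.5 * w, cy - 0.5 * h, cx + 0.5 * w, cy + 0.5 * h)


anchor = (0.0, 0.0, 100.0, 100.0)
gt = (10.0, 20.0, 90.0, 140.0)
t = encode(anchor, gt)
print([round(v, 3) for v in t])  # [0.0, 0.3, -0.223, 0.182]
print(decode(anchor, t))         # recovers gt up to floating-point error
```

Scaling offsets by the anchor size and using log ratios keeps the targets in a similar numeric range for small and large anchors, which makes a single regression head easier to train.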

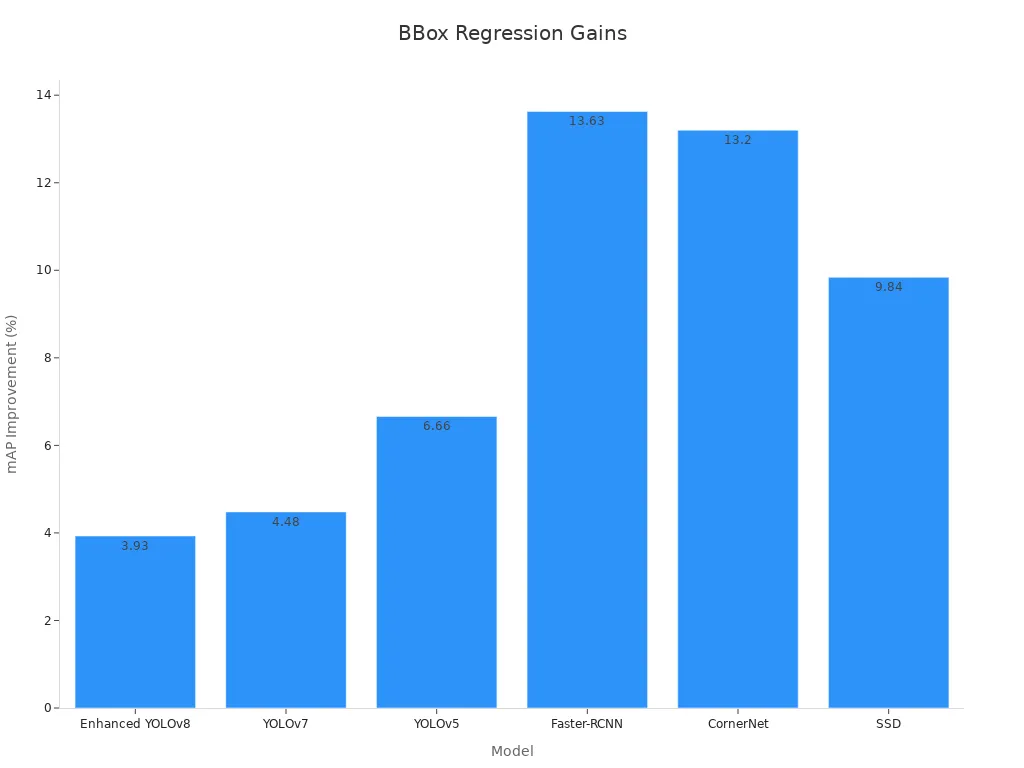

Experimental studies show that improvements in bounding box regression boost performance on many datasets. For example, the introduction of the AIoU loss function in YOLOv4 improved bounding box regression accuracy. This led to higher mean Average Precision (mAP) on both PASCAL VOC and Microsoft COCO datasets.

| Dataset | Detector | Improvement in mAP (%) | Key Contribution |
|---|---|---|---|
| PASCAL VOC | YOLOv4 | +0.61 | AIoU loss improves bounding box regression accuracy |
| Microsoft COCO | YOLOv4 | +1.98 | AIoU loss enhances convergence and focuses on difficult objects |

Other work shows similar effects. An enhanced YOLOv8 that combines a Shape-IoU bounding box regression loss with attention mechanisms reports higher precision and mAP than several baseline detectors, including Faster-RCNN:

| Model | Precision (%) | mAP Improvement over Baseline (%) | Notes |
|---|---|---|---|
| Enhanced YOLOv8 | 98.35 | +3.93 (precision) | Uses Shape-IoU optimized bounding box regression loss and attention mechanisms |
| YOLOv7 | N/A | +4.48 | Baseline comparison |
| YOLOv5 | N/A | +6.66 | Baseline comparison |
| Faster-RCNN | N/A | +13.63 | Baseline comparison |
| CornerNet | N/A | +13.20 | Baseline comparison |
| SSD | N/A | +9.84 | Baseline comparison |

Bounding box regression helps the region proposal network fine-tune its guesses. This step makes the final object detection more precise. The combination of anchor boxes, IoU, and bounding box regression allows the region proposal network to deliver fast and accurate results in modern machine vision systems.

Efficiency and Accuracy

Faster Object Detection

Region proposal systems help object detection models work much faster. Faster R-CNN replaces slow methods like Selective Search with a Region Proposal Network (RPN), a fully convolutional network that scans feature maps and creates candidate regions of interest. Because the RPN shares features with the detection network, it adds little extra work. Anchor boxes at different scales and aspect ratios help the system find objects of many sizes. IoU is used to label anchors during training and, through non-maximum suppression, to remove duplicate proposals. A bounding box regressor then fine-tunes the remaining proposals for better accuracy. Together, these steps bring detection close to real time and can cut running time by up to ten times compared to older methods.

- RPNs generate regions of interest quickly.

- Shared feature maps lower computational costs.

- IoU and bounding box regression improve accuracy.

Faster R-CNN combines RPN and Fast R-CNN into one network. This design allows end-to-end training and boosts both efficiency and accuracy. The system assigns each region of interest an objectness score, which helps filter out empty areas.

ROI Pooling

ROI pooling plays a key role in improving both speed and accuracy. It extracts fixed-size features from regions of interest, even if those regions have different shapes. This lets the network reuse the convolutional feature maps, which saves time during both training and testing. ROI pooling divides each region of interest into a fixed grid of roughly equal sections (7×7 in Fast R-CNN with a VGG-16 backbone) and applies max pooling within each section. The result is a fixed-size output for any region size.
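The pooling step can be sketched for a single-channel feature map. This is a minimal sketch, assuming the region of interest has already been projected onto the feature map and rounded to whole cells, and using a 2×2 output instead of 7×7 to keep the example small. Bin edges use floor for the start and ceil for the end, so every cell lands in some bin and neighboring bins may share a row or column when sizes do not divide evenly. `roi_max_pool` is a hypothetical name:

```python
import math
from typing import List, Sequence, Tuple


def roi_max_pool(feature: Sequence[Sequence[float]],
                 roi: Tuple[int, int, int, int],
                 out_h: int = 2, out_w: int = 2) -> List[List[float]]:
    """Max-pool one ROI (x1, y1, x2, y2; end-exclusive, in feature-map cells)
    into a fixed out_h x out_w grid, whatever the ROI's own size."""
    x1, y1, x2, y2 = roi
    h, w = y2 - y1, x2 - x1
    out = []
    for i in range(out_h):
        # Rows covered by output row i.
        r0 = y1 + (i * h) // out_h
        r1 = y1 + math.ceil((i + 1) * h / out_h)
        row_out = []
        for j in range(out_w):
            # Columns covered by output column j.
            c0 = x1 + (j * w) // out_w
            c1 = x1 + math.ceil((j + 1) * w / out_w)
            row_out.append(max(feature[r][c] for r in range(r0, r1)
                               for c in range(c0, c1)))
        out.append(row_out)
    return out


fmap = [[4 * r + c for c in range(4)] for r in range(4)]  # values 0..15
print(roi_max_pool(fmap, (0, 0, 4, 4)))  # [[5, 7], [13, 15]] from a 4x4 region
print(roi_max_pool(fmap, (1, 0, 4, 3)))  # [[6, 7], [10, 11]] from a 3x3 region
```

Both regions produce the same 2×2 output shape, which is what allows a fully connected detection head to accept proposals of any size.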

ROI pooling supports end-to-end training and lets the system process many regions of interest at once. This approach reduces overhead and speeds up region proposal processing.

Ross Girshick introduced ROI pooling in Fast R-CNN. Today, ROI pooling and its refinement RoIAlign, introduced with Mask R-CNN, remain standard components of two-stage object detection pipelines.

Real-World Applications

Region proposal systems power many real-world applications. Self-driving cars use them to spot pedestrians and other vehicles quickly. Security cameras rely on these systems for real-time object detection in crowded places. Medical imaging tools use regions of interest to find tumors or other features with high accuracy. Retail stores use object detection to track products on shelves. Drones use these systems to detect objects in search and rescue missions.

- Self-driving cars need fast and accurate detection.

- Security and surveillance depend on real-time object detection.

- Medical imaging uses regions of interest for precise results.

These examples show how region proposal systems improve both efficiency and accuracy in daily life.

Region proposal systems play a vital role in modern machine vision. They help models find objects quickly and accurately, and they address key challenges in object detection by making the search faster and more precise. Broader changes in how research itself is evaluated may also shape the field:

- Global groups promote responsible and inclusive research assessment.

- Countries like China and Japan shift toward qualitative evaluation.

- New trends include open science, AI, and better balance between peer review and metrics.

These advances shape the future of machine vision and impact many real-world applications.

FAQ

What is the main purpose of a region proposal system?

A region proposal system helps a computer vision model find areas in an image that may contain objects. This step makes object detection faster and more accurate.

How do anchor boxes improve object detection?

Anchor boxes let the model check for objects of different sizes and shapes. The system places these boxes at many spots in the image. This method helps the model find more objects.

Why is Intersection over Union (IoU) important?

IoU measures how much a predicted box overlaps with the real object. A higher IoU means a better match. The model uses this score to decide which boxes are good enough.

Where are region proposal systems used in real life?

Many industries use region proposal systems. Self-driving cars, security cameras, and medical imaging tools all rely on these systems for fast and accurate object detection.