Pooling helps a machine vision system find the important parts of an image. Max pooling, the most common variant, keeps the largest value from each small section of a feature map. This lets the system focus on key features for recognition tasks. Imagine looking at a huge photo and keeping only the most important spot from each region; pooling works in a similar way. By shrinking the amount of data that later layers must process, pooling makes image analysis cheaper and helps models learn patterns that do not depend on exact pixel positions. Beginners who understand pooling build a strong base for working with machine vision.

Key Takeaways

- Pooling helps computers focus on important image features by shrinking data size, making models faster and more efficient.

- Max pooling picks the strongest signals in small image areas, helping models recognize objects even if they shift slightly within the image.

- Different pooling types offer unique benefits, like smoothing or handling various image sizes, improving model flexibility.

- Pooling reduces memory use and speeds up training, making it useful for devices with limited resources like phones.

- While pooling can lose some details, it generally helps models learn better and work well in real-world tasks like object detection and medical imaging.

Pooling (Max Pooling) Machine Vision System

What Is Pooling?

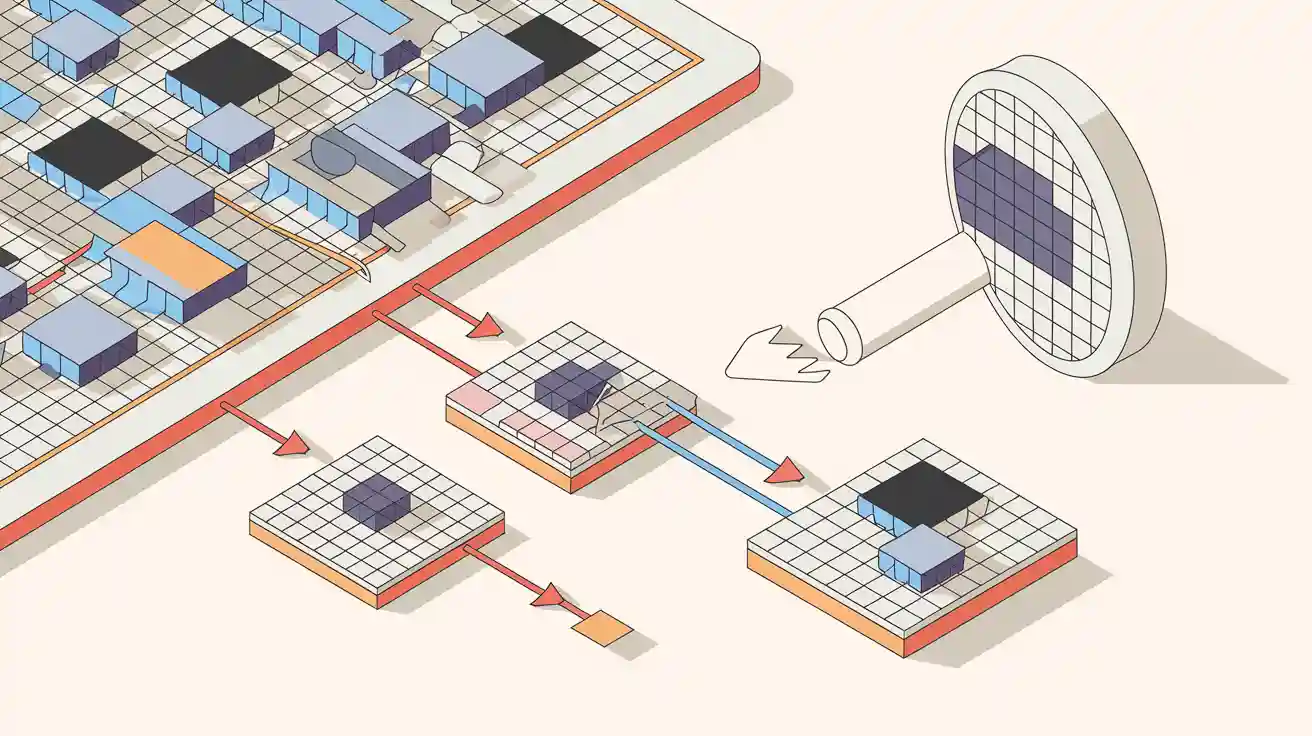

Pooling is a key step in many machine vision systems. It helps deep learning models focus on the most important parts of an image. A pooling layer works by sliding a small window over the image or feature map. The window is sometimes called a filter, although it has no learned weights. Inside each window, the pooling operation summarizes the information. Max pooling, the most common type, picks the highest value in each window. This value often represents the strongest feature, like a bright edge or a sharp corner.

Other types of pooling exist. Average pooling takes the average value in each window, which gives a smoother result. Global pooling reduces each channel to just one value, summarizing the entire feature map. These methods help deep learning models learn patterns more efficiently.
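The two alternatives described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a library implementation: the function names `avg_pool2d` and `global_avg_pool` and the sample values are assumptions made for this example, and the windows are assumed not to overlap.

```python
import numpy as np

def avg_pool2d(x, size=2):
    """Average pooling over non-overlapping size x size windows of a 2D map."""
    h, w = x.shape
    h_out, w_out = h // size, w // size
    # Crop rows/columns that do not fill a whole window, then group by window.
    blocks = x[:h_out * size, :w_out * size].reshape(h_out, size, w_out, size)
    return blocks.mean(axis=(1, 3))

def global_avg_pool(features):
    """Global average pooling: one value per channel for a (C, H, W) array."""
    return features.mean(axis=(1, 2))

# Hypothetical 4x4 feature map used only for illustration.
feature_map = np.array([[1., 3., 2., 0.],
                        [5., 7., 4., 2.],
                        [0., 2., 8., 6.],
                        [4., 2., 6., 4.]])

pooled = avg_pool2d(feature_map)                  # [[4., 2.], [2., 6.]]
stack = np.stack([feature_map, 2 * feature_map])  # two made-up channels
channel_summary = global_avg_pool(stack)          # [3.5, 7.0]
print(pooled)
print(channel_summary)
```

Average pooling keeps a 2×2 grid of local means here, while global pooling collapses each channel to a single number regardless of the map's height and width.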

Think of pooling like looking at a large painting through a small frame. Each time you move the frame, you only see a part of the painting. Max pooling keeps the brightest spot in each frame, helping the system remember the most important details.

Pooling layers appear in most convolutional models for images. They help build feature hierarchies and make the system less sensitive to small changes in the image, such as shifts or distortions.

Why Use Pooling?

Machine vision systems use pooling for several reasons. First, pooling reduces the size of the data. This process, called down-sampling, makes the feature maps smaller. Smaller feature maps mean fewer calculations and less memory in every later layer. Pooling layers have no learned weights of their own, but the smaller maps they produce cut the number of parameters in the fully connected layers that follow. For example, experiments with the LeNet-5 model on datasets like MNIST and CIFAR-100 show that pooling reduces parameter counts and speeds up training. This down-sampling effect allows deep learning models to work faster and handle larger images.
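A rough back-of-the-envelope calculation shows where the savings come from. The layer sizes below are hypothetical and are not taken from LeNet-5 or any other published model; `dense_params` is a helper name introduced only for this sketch.

```python
def dense_params(height, width, channels, units):
    """Weights plus biases of a fully connected layer fed by a flattened feature map."""
    return height * width * channels * units + units

# Hypothetical sizes chosen for illustration only.
h, w, c, units = 28, 28, 16, 120

without_pool = dense_params(h, w, c, units)
with_pool = dense_params(h // 2, w // 2, c, units)   # after 2x2 pooling, stride 2
activation_ratio = (h * w) / ((h // 2) * (w // 2))   # values per channel, before vs after

print(without_pool)      # 1505400
print(with_pool)         # 376440
print(activation_ratio)  # 4.0
```

Under these assumptions, one 2×2 pooling step cuts the activations per channel by a factor of four and shrinks the following dense layer by roughly the same factor.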

Pooling also helps prevent overfitting. By summarizing features, the pooling layer forces the model to focus on the most important patterns. This makes the system more robust and less likely to memorize noise. Max pooling, in particular, helps deep learning models find strong features, such as edges and textures, which are important for tasks like object detection and image classification.

The table below shows how pooling improves efficiency and accuracy in machine vision:

| Aspect | Description |
|---|---|
| Pooling Mechanism | Uses global average pooling and max pooling for channel and spatial attention |
| Efficiency Gains | Reduces parameters, FLOPs, and memory usage |
| Performance Improvements | Improves accuracy on tasks like ImageNet classification and MS COCO object detection |
| Model Architectures | Works well in MobileNetv2, ResNet, Deeplabv3 |
| Advantages | Better recognition of objects, suitable for mobile and embedded systems |

Pooling layers also help deep learning models handle real-world images. Early systems like LeNet-5 used pooling to improve accuracy and speed. Modern systems, such as ResNet and VGGNet, rely on pooling to process large images quickly and accurately. Pooling makes machine vision systems more reliable in tasks like quality control in factories or medical image analysis.

Pooling Layer in CNNs

The pooling layer plays a vital role in convolutional neural networks (CNNs). This layer helps deep learning models process images more efficiently by reducing the size of feature maps. When a CNN analyzes an image, it creates feature maps that highlight important patterns. The pooling layer then summarizes these maps, making the data smaller and easier for the network to handle. This step allows deep learning models to focus on the most important features while ignoring less useful details.

Max Pooling Explained

Max pooling stands out as the most common pooling operation in deep learning. In this method, a small filter, such as a 2×2 window, slides across the feature map. At each step, the pooling layer selects the highest value within the window. This value represents the strongest feature in that region, like a bright edge or a sharp corner. The stride parameter controls how far the filter moves each time. Usually, the stride matches the filter size, so the windows do not overlap.
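The sliding-window procedure can be written out directly. The following is a deliberately simple NumPy sketch for a single 2D feature map with no padding; the name `max_pool2d` and its parameters are assumptions for this example, and real frameworks use much faster implementations.

```python
import numpy as np

def max_pool2d(x, size=2, stride=None):
    """Max pooling on a 2D array; stride defaults to the window size."""
    stride = size if stride is None else stride
    h, w = x.shape
    h_out = (h - size) // stride + 1
    w_out = (w - size) // stride + 1
    out = np.empty((h_out, w_out), dtype=x.dtype)
    for i in range(h_out):
        for j in range(w_out):
            r, c = i * stride, j * stride          # top-left corner of the window
            out[i, j] = x[r:r + size, c:c + size].max()
    return out

x = np.arange(25).reshape(5, 5)                    # values 0..24, row by row
non_overlapping = max_pool2d(x, size=2)            # stride 2 -> [[6, 8], [16, 18]]
overlapping = max_pool2d(x, size=3, stride=1)      # windows overlap -> 3x3 output
print(non_overlapping)
print(overlapping)
```

When the stride equals the window size, each input value is used by at most one window. A smaller stride makes the windows overlap and shrinks the map less.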

Researchers have shown that max pooling helps CNNs become less sensitive to the exact location of features. For example, if an object shifts slightly in an image, the pooling layer still captures its main features. This property, often called translation invariance, holds only for small shifts: a feature that moves within one pooling window produces the same output, while a larger move changes it. Stacking several pooling layers extends this tolerance. Max pooling also reduces the size of feature maps, which speeds up computation and lowers memory use. As a result, deep learning models can process larger images and make predictions faster.
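A tiny experiment makes the effect of a shift concrete. In this sketch, which uses a made-up 4×4 map and a helper named `max_pool_2x2` introduced only for illustration, a single strong activation is moved by one and then by two columns.

```python
import numpy as np

def max_pool_2x2(x):
    """2x2 max pooling with stride 2; assumes even height and width."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

original = np.zeros((4, 4))
original[0, 0] = 9.0                            # one strong feature

shifted_inside = np.roll(original, 1, axis=1)   # moves to (0, 1): same window
shifted_across = np.roll(original, 2, axis=1)   # moves to (0, 2): next window

same = np.array_equal(max_pool_2x2(original), max_pool_2x2(shifted_inside))
changed = not np.array_equal(max_pool_2x2(original), max_pool_2x2(shifted_across))
print(same, changed)   # True True
```

The one-pixel shift stays inside the same pooling window and leaves the output untouched, while the two-pixel shift crosses a window boundary and moves the pooled activation to a different cell.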

- Max pooling reduces spatial dimensions by picking the maximum value in each region.

- The pooling window size and stride determine how much the feature map shrinks.

- Larger windows create lower-resolution maps, capturing more global features.

- This reduction in size lowers the computational load for later layers.

- Max pooling helps prevent overfitting by focusing on the most important features.

For example, if a 4×4 feature map uses a 2×2 filter with a stride of 2, the output becomes a 2×2 map. This process keeps the strongest signals and discards weaker ones, helping deep learning models learn faster and generalize better.
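The 4×4 case above can be checked numerically. The sketch below assumes no padding and uses the usual output-size rule `(n - size) // stride + 1`; the feature map values and the helper name `pooled_size` are made up for illustration.

```python
import numpy as np

def pooled_size(n, size, stride):
    """Output length along one axis for pooling without padding."""
    return (n - size) // stride + 1

feature_map = np.array([[1, 3, 2, 0],
                        [5, 7, 4, 2],
                        [0, 2, 8, 6],
                        [4, 2, 6, 4]])

out_side = pooled_size(4, size=2, stride=2)               # 2
# Group the map into 2x2 blocks and keep the maximum of each block.
pooled = feature_map.reshape(2, 2, 2, 2).max(axis=(1, 3))  # [[7, 4], [4, 8]]

# A larger window shrinks the map more: an 8-wide axis gives 4 or 2 outputs.
small_window = pooled_size(8, size=2, stride=2)           # 4
large_window = pooled_size(8, size=4, stride=4)           # 2
print(out_side, pooled.tolist(), small_window, large_window)
```

Each output cell holds the largest of four inputs, so the 16 original values are reduced to 4.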

Tip: Max pooling helps CNNs recognize objects even if they shift slightly in the image. This makes deep learning models more robust in real-world tasks.

Other Pooling Types

While max pooling is popular, other pooling methods also play important roles in deep learning. Each type offers unique benefits for different tasks.

| Pooling Method | Description | Key Characteristics and Advantages |
|---|---|---|
| Max Pooling | Takes the maximum value in each region | Simple, fast, improves generalization |
| Average Pooling | Computes the average value in each region | Smoother output, less sensitive to noise |
| Lp Pooling | Uses a norm parameter to blend max and average pooling | Flexible, can generalize both max and average pooling |
| Stochastic Pooling | Randomly selects a value based on probability | Adds randomness, helps avoid overfitting |
| Spectral Pooling | Reduces size by trimming frequency components | Preserves more structure, efficient with fast Fourier transforms |
| Spatial Pyramid Pooling (SPP) | Pools in spatial bins of different sizes | Handles images of varying sizes, creates fixed-length outputs |
| Def Pooling | Learns how to handle geometric changes in objects | Deals better with object deformations |

Average pooling works by taking the mean value in each window. This method creates smoother feature maps and reduces sensitivity to noise. Lp pooling blends max and average pooling by changing a parameter, offering more flexibility. Stochastic pooling introduces randomness, which can help deep learning models avoid overfitting. Spectral pooling uses frequency information to keep more structure from the original image. Spatial pyramid pooling allows CNNs to handle images of different sizes, which is useful for tasks like object detection. Def pooling learns how to manage changes in object shapes, making deep learning models more adaptable.
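Two of these ideas are easy to sketch for a single pooling window. The code below assumes one common formulation of Lp pooling, the p-th root of the mean of the p-th powers, and a stochastic pooling rule that samples a value with probability proportional to its size, which requires non-negative activations with a positive sum. The function names and sample values are illustrative assumptions.

```python
import numpy as np

def lp_pool_window(window, p):
    """Lp pooling of one window: (mean(|x|^p))^(1/p)."""
    values = np.abs(np.asarray(window, dtype=float))
    return float(np.mean(values ** p) ** (1.0 / p))

def stochastic_pool_window(window, rng):
    """Pick one value, with probability proportional to its magnitude.

    Assumes non-negative activations (for example after ReLU) with a positive sum.
    """
    values = np.asarray(window, dtype=float)
    probs = values / values.sum()
    return float(rng.choice(values, p=probs))

window = [1.0, 2.0, 3.0, 4.0]

as_average = lp_pool_window(window, p=1)    # 2.5, the plain average
near_max = lp_pool_window(window, p=50)     # approaches the maximum, 4.0, as p grows

rng = np.random.default_rng(0)
sampled = stochastic_pool_window(window, rng)   # one of the four window values
print(as_average, near_max, sampled)
```

With p = 1 the result equals average pooling for non-negative inputs, and raising p moves it toward max pooling, which is the blending behavior described above.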

Recent research has introduced new pooling methods, such as Avg-TopK pooling. This method keeps the top K values in each region and averages them. Experiments on datasets like CIFAR-10 and CIFAR-100 show that Avg-TopK pooling can improve classification accuracy by over 6% compared to max pooling and by more than 16% compared to average pooling. These results suggest that choosing the right pooling layer can make deep learning models more accurate and robust.
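Based only on the description above (keep the top K values in each region and average them), the idea can be sketched as follows. This is not the published implementation; the function name `avg_topk_pool2d`, the non-overlapping windows, and the sample values are assumptions for illustration.

```python
import numpy as np

def avg_topk_pool2d(x, size=2, k=2):
    """Average the k largest values in each non-overlapping size x size window."""
    h, w = x.shape
    h_out, w_out = h // size, w // size
    blocks = x[:h_out * size, :w_out * size].reshape(h_out, size, w_out, size)
    # Gather each window's values along the last axis: (h_out, w_out, size*size).
    windows = blocks.transpose(0, 2, 1, 3).reshape(h_out, w_out, size * size)
    top_k = np.sort(windows, axis=-1)[..., -k:]   # k largest values per window
    return top_k.mean(axis=-1)

feature_map = np.array([[1., 3., 2., 0.],
                        [5., 7., 4., 2.],
                        [0., 2., 8., 6.],
                        [4., 2., 6., 4.]])

top2 = avg_topk_pool2d(feature_map, k=2)      # [[6., 3.], [3., 7.]]
as_max = avg_topk_pool2d(feature_map, k=1)    # same as max pooling
as_avg = avg_topk_pool2d(feature_map, k=4)    # same as average pooling
print(top2)
```

In this sketch, K = 1 reproduces max pooling and K equal to the window area reproduces average pooling, so intermediate values of K sit between the two.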

Benefits and Drawbacks

Key Advantages

Pooling offers several important benefits in machine vision. It helps models keep the most important features while reducing the size of the data. This process makes deep learning models faster and more efficient. By shrinking feature maps, pooling saves memory and speeds up the time needed to process images. A related feature reduction technique illustrates how large such savings can be: when using the Euclidean-Distance-Preserving Feature Reduction method, researchers saw a dramatic drop in memory use and query time. The table below shows how reducing feature dimensions can help:

| Dataset | Feature Dimension | Memory Usage (MB) | Query Time (ms) |
|---|---|---|---|
| Market-1501 | High Dimension | 2263.1 | 11082.4 |
| Market-1501 | Reduced to 32 | 5.1 | 9.5 |

This method keeps the important distances between features, so accuracy does not drop. It also helps with knowledge distillation, making it easier to train smaller models. Pooling allows systems to work well even on devices with limited resources, such as mobile phones or embedded systems. Many modern models use pooling to handle large images quickly and to focus on the most useful patterns.

Pooling not only saves memory but also helps models learn faster and generalize better to new images.

Limitations

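Despite its strengths, pooling has some drawbacks. One main issue is the risk of losing important information. When pooling reduces the size of feature maps, some details may disappear. The word pooling also has a separate meaning in statistics, where it refers to combining groups or samples before running a test. The findings in the table below appear to concern that statistical sense rather than pooling layers in neural networks, so they should be read as a caution about pooling data, not as measurements of CNN accuracy: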

| Aspect Evaluated | Quantified Findings / Statistics | Explanation / Implication |
|---|---|---|
| Type I Error Rate Inflation | Increased from nominal 5% to between 7% and 11% in some pooling scenarios | Pooling leads to higher false positive rates, which can affect test results. |
| Power Gains | No consistent or substantial increase; sometimes power is reduced rather than improved | Pooling does not always help models find true effects and may even make it harder. |
| Simulation Study Outcomes | Simulations with 100,000 runs showed deviations from expected error rates | Pooling effects depend on the design and settings, making results less predictable. |
| Philosophical and Statistical Issues | Pooling can bias p-values and confidence intervals, making some results less reliable | This can lead to unreliable conclusions in certain studies. |
| Recommendations | Pooling discouraged in confirmatory studies unless tested by simulations | Careful testing is needed before using pooling in important research. |
| Contextual Use | May be more acceptable in exploratory studies with practical limits | Pooling can still help in early research or when resources are limited. |

Pooling may also oversimplify the data, making it harder for models to spot small or subtle features. In some cases, this can lower the accuracy of the system. Researchers recommend using pooling carefully, especially in studies where every detail matters.

Applications in Machine Vision

Image Classification

Image classification is one of the most common uses of pooling in machine vision. In this task, a computer looks at an image and decides what it shows, such as a cat, a car, or a tree. Max pooling helps the system keep the strongest signals from each part of the image. This makes it easier for the model to focus on important features, like edges or shapes, and ignore small changes or noise. Many image recognition systems use max pooling to improve accuracy and speed. For example, popular models like VGGNet and ResNet use pooling layers to shrink the size of feature maps. This helps the computer learn faster and use less memory. By keeping only the most important details, max pooling supports better recognition of objects in different lighting or positions.

Object Detection

Object detection goes beyond image classification. Here, the system must find and label each object in an image. It also needs to know where each object is located. Pooling plays a key role in this process. Region of Interest (RoI) pooling, which uses max pooling, helps the system extract fixed-size features from different parts of the image. This method allows the computer to handle objects of many sizes and shapes. RoI pooling speeds up both training and testing, while keeping detection accuracy high. Models like Fast R-CNN and Faster R-CNN use RoI pooling to reuse feature maps and reduce computation. RoI Align, an improved version introduced with Mask R-CNN, uses bilinear interpolation instead of rounding region boundaries to whole cells, which boosts spatial accuracy. This leads to better object localization and recognition, especially when the system needs to find small or closely packed objects.
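The core of RoI pooling, turning regions of different sizes into outputs of one fixed size, can be sketched with NumPy. This is a simplified illustration rather than the Fast R-CNN implementation: the function name `roi_max_pool`, the region format, and the way bin edges are rounded are assumptions, and each region is assumed to be at least as large as the output grid.

```python
import numpy as np

def roi_max_pool(feature_map, roi, output_size=(2, 2)):
    """Max-pool one region into a fixed-size grid.

    roi = (row_start, col_start, row_end, col_end), end exclusive,
    given in feature-map cells (an assumed format for this sketch).
    """
    r0, c0, r1, c1 = roi
    region = feature_map[r0:r1, c0:c1]
    out_h, out_w = output_size
    # Integer bin edges that split the region into out_h x out_w bins.
    row_edges = np.linspace(0, region.shape[0], out_h + 1).astype(int)
    col_edges = np.linspace(0, region.shape[1], out_w + 1).astype(int)
    out = np.empty(output_size, dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            cell = region[row_edges[i]:row_edges[i + 1],
                          col_edges[j]:col_edges[j + 1]]
            out[i, j] = cell.max()
    return out

fm = np.arange(36).reshape(6, 6)             # made-up 6x6 feature map
square = roi_max_pool(fm, (0, 0, 4, 4))      # 4x4 region -> [[7, 9], [19, 21]]
tall = roi_max_pool(fm, (1, 2, 6, 5))        # 5x3 region -> [[14, 16], [32, 34]]
print(square.shape, tall.shape)              # both (2, 2)
```

Both regions end up as 2×2 outputs even though their shapes differ, which is what lets a detector feed every candidate region into the same later layers. The integer rounding of bin edges is the source of the small misalignments that RoI Align was designed to avoid.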

Other Uses

Pooling also helps in other machine vision tasks. In image segmentation, the system divides an image into different parts, such as separating a person from the background. Max pooling helps keep the main features clear, making it easier to draw sharp boundaries. In facial recognition, pooling layers help the model focus on key facial features, even if the face changes angle or lighting. Practical applications of max pooling appear in medical image analysis, where doctors use computers to spot signs of disease. Pooling helps these systems find important patterns quickly and accurately. In robotics, pooling allows machines to recognize and locate objects in real time, supporting tasks like sorting or navigation.

Tip: Pooling layers make image recognition systems faster and more reliable in real-world situations, from self-driving cars to smartphone cameras.

Pooling, and max pooling in particular, remains an essential part of deep learning for machine vision. These techniques help models extract vital features, reduce overfitting, and speed up training. Experts like Fei-Fei Li and Andrew Ng highlight pooling as a powerful tool. By shrinking feature maps, pooling lowers computational complexity, and it often improves accuracy as well. Advanced methods, such as hybrid pooling, further boost performance in tasks like medical image analysis. Beginners can start by adding pooling layers to their own projects. Many free tutorials and courses offer step-by-step guidance.

For those new to deep learning, exploring pooling layers in hands-on projects builds strong skills for future success.

FAQ

What is the main purpose of pooling in machine vision?

Pooling helps a model keep important features while making the data smaller. This process allows computers to work faster and use less memory. Pooling also helps models focus on strong patterns in images.

Can pooling layers cause a loss of information?

Yes, pooling layers can remove some details from images. They keep the most important features but may lose small or subtle patterns. Careful design helps reduce this problem.

How does max pooling differ from average pooling?

Max pooling keeps the highest value in each region. Average pooling takes the mean value. Max pooling highlights strong features, while average pooling creates smoother results. Each method works best for different tasks.

Do all deep learning models use pooling layers?

Not all models use pooling layers. Some modern models use other methods, like strided convolutions, to reduce data size. Many popular models still use pooling because it works well for many vision tasks.