Parameter initialization plays a vital role in machine vision systems. It sets the starting point for the model's learning process and directly affects how quickly and reliably training converges toward a good solution. Improper initialization can make training the deep learning models behind these systems unstable or inefficient.

When initialization is poorly chosen, gradients may vanish or explode during backpropagation, slowing training or preventing the model from learning at all. Initialization strategies matched to the architecture and the task can give faster convergence, stable learning dynamics, and better performance on vision tasks such as image classification and object detection.

Key Takeaways

- Initializing parameters correctly helps models learn quickly and stay stable.

- Methods like He and Xavier initialization help prevent gradients from becoming tiny (vanishing) or huge (exploding).

- Tailoring initialization to each task can make models more accurate and efficient.

- Check and test parameters often to catch problems early and improve results.

- Good habits, such as clear workflows and automation tools, make vision systems work better.

The Importance of Parameter Initialization in Machine Vision Systems

Ensuring Convergence and Stability

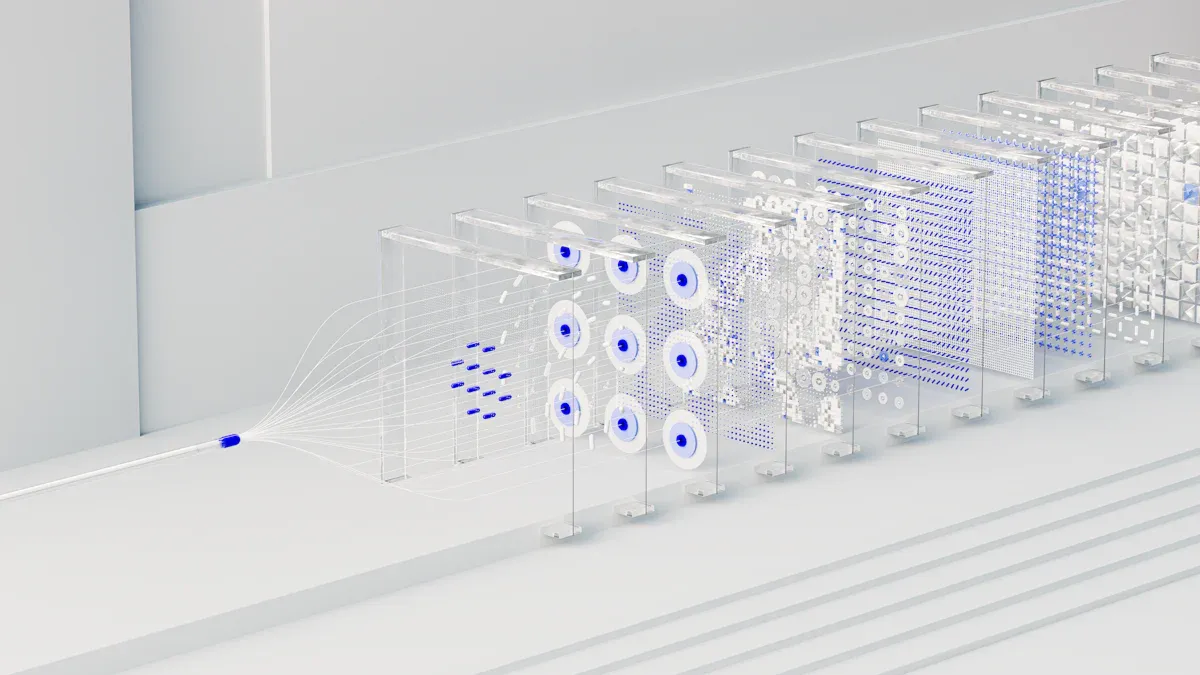

Parameter initialization plays a crucial role in ensuring the convergence speed and training stability of deep learning models. When you initialize parameters correctly, the model starts its learning process on the right track, avoiding unnecessary delays or instability. For instance, IDInit, a modern weight initialization method, demonstrates how a padded identity-like matrix can resolve rank constraints in non-square weight matrices. This approach not only improves convergence but also enhances stability across various scenarios, including large-scale datasets and deep architectures.
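To make the "padded identity-like matrix" idea concrete, here is a small plain-Python sketch. It is our own simplified illustration and not IDInit's published algorithm. It contrasts two constructions for a non-square matrix. The naive rectangular identity leaves some output rows entirely zero. The second construction repeats (tiles) the identity pattern along the longer dimension, so every row and column gets a nonzero entry. The function names are hypothetical.

```python
def zero_padded_identity(rows, cols):
    """Ones on the main diagonal, zeros elsewhere (the naive rectangular identity)."""
    return [[1.0 if i == j else 0.0 for j in range(cols)] for i in range(rows)]


def tiled_identity(rows, cols):
    """Identity pattern repeated along the longer dimension.

    Entry (i, j) is 1 when i and j agree modulo min(rows, cols), so no row or
    column is all zeros. Simplified illustration only, not IDInit itself.
    """
    k = min(rows, cols)
    return [[1.0 if i % k == j % k else 0.0 for j in range(cols)] for i in range(rows)]


def zero_rows(matrix):
    """Count rows with no nonzero entry (output units that would receive no signal)."""
    return sum(1 for row in matrix if not any(row))


# A layer that expands 4 input features to 6 output features (6 x 4 weight matrix).
print(zero_rows(zero_padded_identity(6, 4)))  # 2
print(zero_rows(tiled_identity(6, 4)))        # 0
```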

In machine vision systems, proper initialization techniques are particularly important. These systems often deal with complex tasks like object detection and image segmentation, where unstable training can lead to poor outcomes. By using well-designed initialization strategies, you can ensure that the model learns efficiently and avoids pitfalls like dead neurons or slow convergence. This is especially critical when working with deep learning models that require precise parameterization to function effectively.

Avoiding Vanishing and Exploding Gradients

Vanishing and exploding gradients are common challenges in deep learning, especially in recurrent neural networks (RNNs) and other very deep architectures. Backpropagation multiplies the gradient by each layer's weights in turn. Weights that are systematically too small therefore shrink the gradient toward zero, and weights that are too large blow it up, which makes it hard for the model to capture long-term dependencies. Proper parameter initialization mitigates these problems by keeping the variance of activations and gradients roughly constant from layer to layer.
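The effect of weight scale on signal flow can be checked with a short standard-library simulation. This is a sketch under simplifying assumptions: fully connected ReLU layers of equal width, zero biases, and a single random input. Under these assumptions, each layer multiplies the mean square of the activations by roughly width × std² / 2. That factor equals 1 only when std = √(2 / width), which is the He scaling discussed later. In this square-layer setting, back-propagated gradients are rescaled by a similar per-layer factor.

```python
import math
import random


def mean_square(values):
    return sum(v * v for v in values) / len(values)


def relu_stack_signal(weight_std, width=64, depth=10, seed=0):
    """Ratio of output to input mean square after `depth` ReLU layers.

    Weights are drawn i.i.d. from N(0, weight_std**2); biases are zero.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(width)]
    start = mean_square(x)
    for _ in range(depth):
        weights = [[rng.gauss(0.0, weight_std) for _ in range(width)] for _ in range(width)]
        # Dense layer followed by ReLU.
        x = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]
    return mean_square(x) / start


he_std = math.sqrt(2.0 / 64)  # He/Kaiming std for a 64-wide ReLU layer
for label, std in [("too small", 0.05), ("He", he_std), ("too large", 0.5)]:
    print(f"{label:>9}: {relu_stack_signal(std):.3g}")
```

With std = 0.05, each layer scales the signal by about 64 × 0.05² / 2 = 0.08, so ten layers leave almost nothing. With std = 0.5, the factor is about 8 per layer, so the signal explodes. The He scale keeps the ratio within an order of magnitude or so of 1.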

For example, research on recurrent networks and state-space models (SSMs) has identified a further sensitivity of gradient-based learning in networks with long memory. As the network's memory lengthens, small parameter changes produce increasingly large output changes, even without exploding gradients. Careful initialization and parameterization, as used in SSMs, help prevent these issues and keep the model trainable. This is particularly important in machine vision systems, where tasks like visual tracking rely on stable gradient flow to achieve accurate results.

Impact on Model Performance and Training Efficiency

The choice of parameter initialization directly affects both training efficiency and generalization, and studies report that better initialization can improve both training outcomes and final accuracy. The term also has a second meaning in vision. A visual tracker is initialized from the target's annotation in the first frame, and experiments comparing initialization conditions in visual tracking found that inaccurate initialization degrades performance. A proposed compensation framework with spatial-refine and temporal-chasing modules addressed these errors and led to better results.

Initialization also matters beyond network weights. The initial settings of camera-side parameters determine the quality of the images a model receives. Tuning Auto White Balance (AWB) and Auto Exposure Control (AEC) yields measurable improvements in image quality and algorithm reliability. AWB keeps colors consistent under challenging lighting conditions, while AEC prevents underexposed or overexposed images. Better input images make detection algorithms more reliable and contribute to the overall efficiency of the system.
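Both adjustments can be illustrated with classic textbook rules. The sketch below makes two assumptions. For white balance, it uses the gray-world hypothesis: the scene is assumed to average to neutral gray. For exposure, it computes a single digital gain that moves the mean luma toward an assumed 18% gray target. Real AWB and AEC loops in cameras are more elaborate, and the function names here are hypothetical.

```python
def gray_world_awb(pixels):
    """Gray-world white balance for a list of (r, g, b) values in [0, 1].

    Each channel is scaled so its mean matches the mean of all three channels.
    Returns the balanced pixels and the per-channel gains.
    """
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3.0
    gains = [gray / m if m > 0 else 1.0 for m in means]
    balanced = [tuple(min(1.0, p[c] * gains[c]) for c in range(3)) for p in pixels]
    return balanced, gains


def exposure_gain(pixels, target_luma=0.18):
    """Digital gain that would bring the mean luma (Rec. 601 weights) to target_luma."""
    luma = [0.299 * r + 0.587 * g + 0.114 * b for r, g, b in pixels]
    mean_luma = sum(luma) / len(luma)
    return target_luma / mean_luma if mean_luma > 0 else 1.0


# A tiny image with a warm (reddish) color cast.
image = [(0.6, 0.4, 0.2), (0.5, 0.3, 0.1)]
balanced, gains = gray_world_awb(image)
print([round(g, 3) for g in gains])  # [0.636, 1.0, 2.333]
```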

By adopting modern weight initialization strategies, you can achieve faster convergence speed, better training stability, and superior generalization performance. Whether you’re working on small-scale projects or large-scale machine vision systems, the right initialization techniques can make a significant difference in your results.

Common Weight Initialization Strategies for Neural Networks

Xavier Initialization

Xavier initialization, also known as Glorot initialization, is a widely used method for initializing weights in neural networks. It aims to keep the variance of activations in the forward pass, and of gradients in the backward pass, roughly constant across layers, which prevents gradients from vanishing or exploding during training. It does this by deriving the weight variance from the numbers of input and output connections of a layer (fan_in and fan_out), setting it to 2/(fan_in + fan_out).

You can apply Xavier initialization when working with activation functions like sigmoid or tanh. These functions saturate for large inputs, where their gradients are close to zero. Weights that are too large therefore stall training, while weights that are too small shrink the signal. Xavier initialization keeps pre-activations in a useful range, enabling your model to learn effectively.

Tip: Use Xavier initialization for shallow networks or tasks where sigmoid or tanh activations are prevalent. It provides a solid foundation for stable training dynamics.
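Here is a minimal standard-library sketch of the Xavier (Glorot) uniform rule. The function name is ours; in PyTorch the built-in equivalent is torch.nn.init.xavier_uniform_. The bound a = √(6/(fan_in + fan_out)) is chosen so that the weight variance comes out to 2/(fan_in + fan_out).

```python
import math
import random


def xavier_uniform(fan_in, fan_out, seed=0):
    """fan_out x fan_in weight matrix drawn from U[-a, a], a = sqrt(6 / (fan_in + fan_out))."""
    a = math.sqrt(6.0 / (fan_in + fan_out))
    rng = random.Random(seed)
    return [[rng.uniform(-a, a) for _ in range(fan_in)] for _ in range(fan_out)]


# A tanh layer mapping 300 features to 100 features.
w = xavier_uniform(300, 100)
flat = [v for row in w for v in row]
print(max(abs(v) for v in flat) <= math.sqrt(6.0 / 400))        # True
print(sum(v * v for v in flat) / len(flat), 2.0 / (300 + 100))  # both close to 0.005
```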

He Initialization

He initialization, also known as Kaiming initialization, is designed specifically for networks using ReLU activation functions. Xavier initialization assumes activations that are roughly linear around zero. ReLU, however, sets about half of its inputs to zero, which halves the signal variance at every layer. He initialization compensates by doubling the weight variance to 2/fan_in, computed from the number of inputs to each unit, so signals keep their scale even in deep architectures.

Empirical studies highlight the advantages of He initialization:

- It improves model accuracy and training speed, optimizing network learning.

- Faster convergence and enhanced predictive accuracy are observed in experiments using datasets like ImageNet and WMT.

- He initialization leads to higher final model accuracy, making it a preferred choice for modern vision systems.

By using He initialization, you keep ReLU activations from shrinking toward zero or blowing up layer after layer. This avoids the vanishing signals that leave many units inactive in a poorly scaled deep network, so your model learns efficiently even when it has many layers.
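For convolutional layers, fan_in counts every input connection of one output unit: in_channels × kernel_height × kernel_width. The standard-library sketch below (the function name is ours) draws He-normal weights for a 3×3 convolution with 64 input channels. PyTorch's built-in equivalent is torch.nn.init.kaiming_normal_(weight, mode="fan_in", nonlinearity="relu").

```python
import math
import random


def he_normal(fan_in, n_weights, seed=0):
    """Draw n_weights values from N(0, 2 / fan_in), the He/Kaiming normal rule for ReLU."""
    std = math.sqrt(2.0 / fan_in)
    rng = random.Random(seed)
    return [rng.gauss(0.0, std) for _ in range(n_weights)]


in_channels, out_channels, k = 64, 128, 3
fan_in = in_channels * k * k  # 576 inputs feed each output unit
weights = he_normal(fan_in, out_channels * fan_in)
# Second moment about zero; estimates the variance because the mean is zero.
empirical_var = sum(v * v for v in weights) / len(weights)
print(f"target {2.0 / fan_in:.5f}, empirical {empirical_var:.5f}")
```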

Orthogonal Initialization

Orthogonal initialization is a powerful technique for initializing weights in deep architectures. It involves setting weights as orthogonal matrices, ensuring that gradients flow smoothly during backpropagation. This method effectively combats exploding and vanishing gradients, particularly in networks with many layers.
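Orthogonality helps because a square orthogonal matrix W satisfies WᵀW = I, so ‖Wx‖ = ‖x‖. A stack of such linear maps therefore neither shrinks nor amplifies the signal. PyTorch provides torch.nn.init.orthogonal_. The dependency-free sketch below (the function name is ours) builds the same kind of matrix by applying modified Gram-Schmidt to random Gaussian vectors.

```python
import math
import random


def orthogonal(rows, cols, gain=1.0, seed=0):
    """Random rows x cols matrix with orthonormal rows (rows <= cols) or
    orthonormal columns (rows > cols), scaled by `gain`.

    Built with modified Gram-Schmidt on Gaussian vectors.
    """
    rng = random.Random(seed)
    length, count = max(rows, cols), min(rows, cols)
    basis = []
    while len(basis) < count:
        v = [rng.gauss(0.0, 1.0) for _ in range(length)]
        for b in basis:
            # Remove the component of v along each earlier basis vector.
            dot = sum(vi * bi for vi, bi in zip(v, b))
            v = [vi - dot * bi for vi, bi in zip(v, b)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        if norm > 1e-10:  # skip a (numerically) dependent draw and try again
            basis.append([vi / norm for vi in v])
    if rows <= cols:
        return [[gain * x for x in b] for b in basis]  # basis vectors become rows
    return [[gain * basis[j][i] for j in range(cols)] for i in range(rows)]  # ...or columns


w = orthogonal(4, 8)
gram = [[sum(a * b for a, b in zip(r1, r2)) for r2 in w] for r1 in w]
max_error = max(abs(gram[i][j] - (1.0 if i == j else 0.0)) for i in range(4) for j in range(4))
print(max_error < 1e-9)  # True: W Wᵀ is the 4 x 4 identity up to rounding
```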

Research findings emphasize the effectiveness of orthogonal initialization:

- Visualizations show improved training stability, especially when combined with gradient clipping.

- The study "Sparser, Better, Deeper, Stronger" demonstrates that Exact Orthogonal Initialization (EOI) outperforms other sparse initialization methods.

- EOI enables the training of highly sparse networks, including 1000-layer MLPs and CNNs, without residual connections or normalization techniques.

Orthogonal initialization is ideal for tasks requiring deep architectures, such as image segmentation or object detection. It ensures stable training dynamics, allowing your model to achieve optimal performance.

Uniform and Normal Distributions

When initializing weights in neural networks, you often encounter two popular distribution types: uniform and normal. These distributions play a critical role in ensuring your model trains effectively and avoids common pitfalls like vanishing or exploding gradients.

Uniform distributions spread values evenly across a specified range [-a, a]. For weight initialization, the bound a is chosen to give the desired variance. The uniform variant of Xavier initialization, for example, uses a = √(6/(fan_in + fan_out)), which yields a variance of 2/(fan_in + fan_out). Keeping the variance at this level as data flows through the network helps prevent gradients from becoming too small or too large, which can disrupt training.

Normal distributions, on the other hand, generate values that cluster around a mean, here zero, with most values within a few standard deviations of it. The normal variant of Xavier initialization draws from N(0, σ²) with σ = √(2/(fan_in + fan_out)). It therefore has the same variance as the uniform variant while occasionally producing larger values. Either variant can be used in deep architectures, where maintaining stability across layers is essential.

Here’s a quick comparison of how these distributions are applied in popular weight initialization methods:

| Method | Distribution Type | Formula |
|---|---|---|
| He Normal Initialization | Normal | w_i ∼ N(0, σ²) where σ = √(2/fan_in) |
| He Uniform Initialization | Uniform | w_i ∼ U[-√(6/fan_in), √(6/fan_in)] |
| Xavier/Glorot Uniform Initialization | Uniform | w_i ∼ U[-√(6/(fan_in + fan_out)), √(6/(fan_in + fan_out))] |
| Xavier/Glorot Normal Initialization | Normal | w_i ∼ N(0, σ²) where σ = √(2/(fan_in + fan_out)) |
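The uniform and normal rows of the table are two ways of reaching the same variance target. A uniform distribution on [-a, a] has variance a²/3, which is where the factor 6 in the uniform bounds comes from:

```latex
\begin{aligned}
\operatorname{Var}(w) &= \frac{1}{2a}\int_{-a}^{a} w^{2}\,dw = \frac{a^{2}}{3},\\
a = \sqrt{\frac{6}{\mathrm{fan}_{\text{in}} + \mathrm{fan}_{\text{out}}}}
  &\;\Rightarrow\; \operatorname{Var}(w) = \frac{2}{\mathrm{fan}_{\text{in}} + \mathrm{fan}_{\text{out}}} \quad \text{(Xavier/Glorot)},\\
a = \sqrt{\frac{6}{\mathrm{fan}_{\text{in}}}}
  &\;\Rightarrow\; \operatorname{Var}(w) = \frac{2}{\mathrm{fan}_{\text{in}}} \quad \text{(He)}.
\end{aligned}
```

Both results equal σ² in the matching normal rows, so for a given rule the uniform and normal variants start training with the same signal scale.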

Tip: Use He initialization, in its normal or uniform form, for networks using ReLU activations. For sigmoid or tanh activations, Xavier initialization is the usual choice.

In practice, the choice between uniform and normal distributions matters less than the scaling rule. Both variants are centered at zero, and with the bounds set as in the table they have the same variance; the normal variant simply produces a few larger weights. Choose the rule (Xavier or He) based on your model's activation functions, then use whichever distribution your framework or reference implementation defaults to.

By understanding these distributions and their applications, you can make informed decisions about weight initialization. This ensures your model trains efficiently and achieves optimal performance.

Advanced Weight Initialization Techniques for Vision Systems

Layer-Wise Initialization

Layer-wise initialization focuses on assigning initial weights to each layer based on its specific characteristics. This approach ensures that each layer starts with optimal conditions for learning, improving training stability and convergence. For example, experiments on convolutional and transformer architectures have shown that layer-wise initialization enhances performance in tasks like image classification and autoregressive language modeling. By tailoring the initialization to each layer, you can address challenges like gradient instability and ensure smoother training.
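One simple reading of layer-wise initialization is to choose each layer's scheme and scale from its type, shape, and activation. The standard-library sketch below assumes a hypothetical list-of-dicts model description; a real framework would iterate over module objects instead. It uses He scaling for ReLU layers and Xavier scaling for the final linear classifier. Convolution fans are computed the way PyTorch does it, as channels × kernel area.

```python
import math

# Hypothetical model description; field names are illustrative only.
LAYERS = [
    {"name": "conv1", "kind": "conv", "in_ch": 3, "out_ch": 32, "k": 3, "act": "relu"},
    {"name": "conv2", "kind": "conv", "in_ch": 32, "out_ch": 64, "k": 3, "act": "relu"},
    {"name": "head", "kind": "linear", "in": 64, "out": 10, "act": "none"},
]


def fans(layer):
    """Return (fan_in, fan_out); conv fans include the kernel area."""
    if layer["kind"] == "conv":
        area = layer["k"] * layer["k"]
        return layer["in_ch"] * area, layer["out_ch"] * area
    return layer["in"], layer["out"]


def init_plan(layers):
    """Map each layer name to (scheme, weight std)."""
    plan = {}
    for layer in layers:
        fan_in, fan_out = fans(layer)
        if layer["act"] == "relu":
            plan[layer["name"]] = ("he_normal", math.sqrt(2.0 / fan_in))
        else:
            plan[layer["name"]] = ("xavier_normal", math.sqrt(2.0 / (fan_in + fan_out)))
    return plan


for name, (scheme, std) in init_plan(LAYERS).items():
    print(f"{name:>5}: {scheme:<13} std={std:.4f}")
```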

Layer-wise analysis of a network can also improve robustness. A case study used Layer-wise Relevance Propagation (LRP) heatmaps to reduce background bias in deep classifiers. LRP is an explanation technique that traces a prediction back through the network one layer at a time. When synthetic bias was introduced into images, the proposed method (ISNet) outperformed eight state-of-the-art models and achieved superior generalization on external test databases by focusing on relevant features. LRP is not an initialization scheme, but the result shows the value of treating each layer's behavior explicitly when building robust and accurate vision systems.

Variance Scaling for Deep Architectures

Variance scaling initialization sets the variance of the initial weights to scale / n, where n is a layer's fan_in, fan_out, or their average. Xavier and He initialization are special cases of this rule. Scaling weights to the size of each layer keeps gradients balanced during backpropagation, preventing vanishing or exploding gradients. Research on neural scaling has also reported a power-law relationship and suggests that variance scaling can significantly improve model performance without massive increases in model or dataset size. This makes it a valuable strategy for deep architectures, where maintaining stability across layers is critical.
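Variance scaling can be written as one rule with three settings: scale, mode, and distribution. The standard-library sketch below uses the same scale and mode parameterization as Keras's tf.keras.initializers.VarianceScaling, but it is not that class. For example, it samples a plain normal distribution rather than the truncated normal that Keras uses by default. He and Xavier initialization fall out as special cases.

```python
import math
import random


def variance_scaling(fan_in, fan_out, scale=1.0, mode="fan_in",
                     distribution="normal", seed=0):
    """Sample a fan_out x fan_in matrix with Var(w) = scale / n.

    n is fan_in, fan_out, or their average, depending on `mode`.
    """
    n = {"fan_in": fan_in, "fan_out": fan_out, "fan_avg": (fan_in + fan_out) / 2.0}[mode]
    var = scale / n
    rng = random.Random(seed)
    if distribution == "uniform":
        limit = math.sqrt(3.0 * var)  # U[-limit, limit] has variance limit**2 / 3

        def draw():
            return rng.uniform(-limit, limit)
    elif distribution == "normal":
        std = math.sqrt(var)

        def draw():
            return rng.gauss(0.0, std)
    else:
        raise ValueError(f"unknown distribution: {distribution}")
    return [[draw() for _ in range(fan_in)] for _ in range(fan_out)]


# He normal:      scale=2, mode="fan_in",  distribution="normal"
he = variance_scaling(256, 128, scale=2.0, mode="fan_in", distribution="normal")
# Xavier uniform: scale=1, mode="fan_avg", distribution="uniform"
xavier = variance_scaling(256, 128, scale=1.0, mode="fan_avg", distribution="uniform")
```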

By using variance scaling initialization, you can optimize the learning process for deep networks. This approach is particularly effective in vision systems, where tasks like object detection and segmentation demand high accuracy and efficiency.

Pretrained Weights as Initialization

Using pretrained weights as a form of initialization leverages knowledge from previously trained models. This method reduces the number of misaligned filters at the start of training, leading to lower test errors. It is especially beneficial in scenarios like federated learning, where data heterogeneity can pose challenges. Pretrained models store extensive knowledge in their parameters, which can be fine-tuned for specific tasks. This makes them highly effective for downstream applications in vision systems.

For example, starting with pretrained weights can improve performance in tasks like image classification and object detection. By building on existing knowledge, you can achieve faster convergence and better accuracy compared to training from scratch.
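In practice, starting from pretrained weights usually means copying every parameter whose name and shape match the checkpoint. Everything else keeps a fresh initialization, typically including a new classification head sized for your own classes. The standard-library sketch below shows that merge on plain dictionaries of nested lists; all names are hypothetical. In PyTorch, the same filtered dictionary would be passed to model.load_state_dict(filtered, strict=False). Filtering by shape first matters because strict=False tolerates missing keys but not size mismatches.

```python
def shape(value):
    """Shape of a nested-list tensor, e.g. [[1, 2, 3]] -> (1, 3)."""
    dims = []
    while isinstance(value, list):
        dims.append(len(value))
        value = value[0] if value else None
    return tuple(dims)


def merge_pretrained(fresh_state, pretrained_state):
    """Overwrite fresh parameters with pretrained ones where name and shape match.

    Returns the merged state, the names that were loaded, and the names that
    kept their fresh initialization.
    """
    merged = dict(fresh_state)
    loaded, kept = [], []
    for name, value in fresh_state.items():
        source = pretrained_state.get(name)
        if source is not None and shape(source) == shape(value):
            merged[name] = source
            loaded.append(name)
        else:
            kept.append(name)
    return merged, loaded, kept


# The backbone matches the checkpoint; the head was resized from 3 outputs to 1.
fresh = {"backbone.weight": [[0.0, 0.0], [0.0, 0.0]], "head.weight": [[0.1, 0.2]]}
pretrained = {"backbone.weight": [[1.0, 2.0], [3.0, 4.0]],
              "head.weight": [[9.0, 9.0], [9.0, 9.0], [9.0, 9.0]]}
merged, loaded, kept = merge_pretrained(fresh, pretrained)
print(loaded, kept)  # ['backbone.weight'] ['head.weight']
```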

Task-Specific Initialization for Vision Models

Task-specific initialization tailors the starting weights of a model to suit the unique requirements of a particular vision task. This approach ensures that your model begins training with a foundation that aligns closely with the task’s characteristics. By doing so, you can achieve faster convergence and better performance, especially in specialized applications like medical imaging or autonomous driving.

One effective method for task-specific initialization is incorporating task context into the initialization process. For example, the Aviator approach adapts model weights based on the specific task at hand. This method has shown remarkable success in few-shot learning scenarios, where limited data is available for training. Unlike traditional methods like MAML, which often struggle with diverse tasks, Aviator excels by handling model variations more effectively.

Here’s a summary of findings related to task-specific initialization:

| Evidence Description | Findings |
|---|---|
| Task-specific initialization | The Aviator approach incorporates task context into model initialization, leading to improved performance in few-shot learning tasks. |
| Comparison with MAML | Aviator's initialization handles model diversity effectively, unlike MAML, which is too conservative. |
| Experimental validation | Experiments on synthetic and benchmark datasets show that Aviator achieves state-of-the-art performance. |

Tip: Use task-specific initialization when working on niche vision tasks. It helps your model adapt quickly and perform better, even with limited data.

By leveraging task-specific initialization, you can fine-tune your model to excel in specialized tasks. This strategy not only improves accuracy but also reduces the time and computational resources needed for training. Whether you’re working on object detection in crowded environments or segmenting medical images, task-specific initialization provides a significant advantage.

Practical Tips for Implementing Parameter Initialization

Choosing the Right Strategy for Your Model

Selecting the best weight initialization strategy depends on your model’s architecture and the task at hand. For instance, pretrained weights from ImageNet often boost performance in general vision tasks. However, for medical applications like segmentation, CheXpert initialization may yield better results. Random initialization serves as a baseline but typically underperforms compared to task-specific strategies.

| Initialization Strategy | Performance Impact | Notes |
|---|---|---|
| ImageNet | Statistically significant boost | Popular transfer learning strategy, but may not suit medical tasks |
| CheXpert | Comparable to ImageNet | More suitable for medical segmentation tasks |
| Random Initialization | Lower performance | Baseline for comparison |

When choosing an initialization method, consider the depth of your network and the activation functions used. For deep architectures with ReLU activations, He initialization ensures stable gradients. For shallow networks or sigmoid activations, Xavier initialization works well. Tailoring your strategy to the task and architecture improves training efficiency and model accuracy.
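The activation-dependent part of this choice is often expressed as a gain factor that multiplies the weight scale. The standard-library sketch below reproduces a subset of the gain values documented for PyTorch's torch.nn.init.calculate_gain. It combines them with fan-in scaling, std = gain / √fan_in, which is the form Kaiming initialization uses. The helper names are ours.

```python
import math


def recommended_gain(nonlinearity, negative_slope=0.01):
    """Gain for a nonlinearity, using values documented for torch.nn.init.calculate_gain (subset)."""
    if nonlinearity in ("linear", "conv1d", "conv2d", "conv3d", "sigmoid"):
        return 1.0
    if nonlinearity == "tanh":
        return 5.0 / 3.0
    if nonlinearity == "relu":
        return math.sqrt(2.0)
    if nonlinearity == "leaky_relu":
        return math.sqrt(2.0 / (1.0 + negative_slope ** 2))
    if nonlinearity == "selu":
        return 3.0 / 4.0
    raise ValueError(f"unsupported nonlinearity: {nonlinearity}")


def init_std(fan_in, nonlinearity):
    """Kaiming-style standard deviation: gain / sqrt(fan_in)."""
    return recommended_gain(nonlinearity) / math.sqrt(fan_in)


# A 3x3 convolution with 64 input channels has fan_in = 64 * 3 * 3 = 576.
for act in ("relu", "tanh", "sigmoid"):
    print(f"{act:<7} gain={recommended_gain(act):.3f} std={init_std(576, act):.4f}")
```

For ReLU this reduces to √(2/fan_in), the He rule from earlier in the article.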

Code Snippets for Popular Frameworks (e.g., PyTorch, TensorFlow)

Implementing weight initialization in PyTorch and TensorFlow is straightforward. PyTorch lets you define a custom initialization function and apply it to every layer with net.apply. TensorFlow's Keras API instead takes initializers as layer arguments (kernel_initializer and bias_initializer), and it creates the weights the first time the model is called on data. The table below shows excerpts from both frameworks, with parts of the code elided:

| Framework | Code Snippet | Output |
|---|---|---|
| PyTorch | def init_constant(module): ... ; net.apply(init_constant) ; net[0].weight.data[0] | (tensor([1., 1., 1., 1.]), tensor(0.)) |
| TensorFlow | net = tf.keras.models.Sequential([...]) ; net(X) ; net.weights[0], net.weights[1] | (<tf.Variable 'dense_2/kernel:0' shape=(4, 4) ...> |

These snippets show the mechanics of applying an initializer. A constant initializer like the one in the PyTorch example is useful for inspection, but it gives every unit in a layer the same weights, so real models use schemes such as Xavier or He. PyTorch makes custom initialization functions easy to write, while Keras builds initialization into layer construction through its built-in initializer classes.
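For reference, here is a complete, runnable PyTorch version of this pattern, using a small fully connected network defined only for illustration. Our init_constant has its own body (weights set to 1, biases to 0), written to match the printed output in the table. Module.apply calls the function on every submodule, so each function checks the layer type before touching it. The example then switches to He initialization, because constant weights make every unit in a layer identical.

```python
import torch
from torch import nn

# Small illustrative network: 4 inputs -> 8 hidden ReLU units -> 1 output.
net = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 1))


def init_constant(module):
    """Set every Linear weight to 1 and every bias to 0 (for inspection only)."""
    if isinstance(module, nn.Linear):
        nn.init.constant_(module.weight, 1.0)
        nn.init.zeros_(module.bias)


def init_he(module):
    """He/Kaiming normal weights and zero biases, applied to every Linear layer here for brevity."""
    if isinstance(module, nn.Linear):
        nn.init.kaiming_normal_(module.weight, mode="fan_in", nonlinearity="relu")
        nn.init.zeros_(module.bias)


net.apply(init_constant)
print(net[0].weight.data[0], net[0].bias.data[0])  # tensor([1., 1., 1., 1.]) tensor(0.)

net.apply(init_he)
with torch.no_grad():
    print(net(torch.randn(2, 4)).shape)  # torch.Size([2, 1])
```

In Keras, the equivalent is to pass initializers when constructing a layer, for example tf.keras.layers.Dense(8, activation="relu", kernel_initializer=tf.keras.initializers.HeNormal(), bias_initializer="zeros").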

Debugging and Fine-Tuning Initialization

Debugging weight initialization involves monitoring training metrics such as the loss and the gradient flow through each layer. If your model struggles to converge, re-initializing the weights can resolve the problem. One numerical study reports that its proposed re-initialization method achieved the lowest mean squared error (MSE) and required fewer training epochs than existing methods:

| Method | MSE | Training Epochs |
|---|---|---|
| Proposed Re-initialization | Lowest | Fewest |
| Existing Methods | Higher | More |
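A lightweight way to monitor gradient flow is to record each layer's gradient norm after a backward pass and flag outliers. In PyTorch, the norms could be collected after loss.backward() with {name: p.grad.norm().item() for name, p in model.named_parameters() if p.grad is not None}. The sketch below keeps the checking logic framework-free. The thresholds are illustrative assumptions, not recommended values, and should be calibrated against a run that trains well.

```python
def flag_gradient_norms(grad_norms, low=1e-6, high=1e3):
    """Label each parameter's gradient norm as 'vanishing?', 'exploding?' or 'ok'.

    grad_norms maps parameter names to L2 norms; low/high are illustrative thresholds.
    """
    report = {}
    for name, norm in grad_norms.items():
        if norm < low:
            report[name] = "vanishing?"
        elif norm > high:
            report[name] = "exploding?"
        else:
            report[name] = "ok"
    return report


# Hypothetical norms from one training step of a badly initialized network.
norms = {"conv1.weight": 3e-9, "conv2.weight": 2e-4, "fc.weight": 5e4}
for name, status in flag_gradient_norms(norms).items():
    print(f"{name:<13} {status}")
```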

Fine-tuning initialization means adjusting it to task-specific requirements, for example by rescaling the initial weights of individual layers. In vision systems, the same idea extends to the imaging pipeline, where tuning camera parameters improves image quality and algorithm reliability. Regularly evaluate your model's performance and adjust your initialization strategy to optimize results.

Best Practices for Vision-Specific Applications

When working on vision-specific applications, following best practices for parameter initialization ensures your models perform efficiently and reliably. These practices help you maintain consistency, reduce errors, and achieve better results in tasks like image classification or object detection.

- Establish Clear Workflows: Create a structured initialization process. This ensures consistency across different projects and benchmarking activities. A clear workflow helps you avoid confusion and keeps your team aligned.

- Prioritize Data Integrity: Always verify the accuracy and completeness of your data before initializing parameters. High-quality data leads to better model performance and reduces the risk of errors during training.

- Optimize System Configuration: Adjust your system settings to match the requirements of your vision task. Proper configuration prevents skewed results and ensures your model starts with the right foundation.

- Leverage Automation: Use automated tools to handle initialization tasks. Automation reduces human error and saves time, especially when working with large-scale vision systems.

- Validate Parameters: Regularly check your initialization parameters to ensure they align with your objectives. Validation helps you identify and fix potential issues early in the process.

- Monitor Performance in Real-Time: Keep track of how your initialization impacts training. Real-time monitoring allows you to spot problems quickly and make adjustments as needed.

- Document and Share Practices: Record your initialization methods and share them with your team. Documentation fosters consistency and helps others learn from your experience.

By following these best practices, you can improve the reliability and efficiency of your vision-specific applications. Whether you are working on small projects or large-scale systems, these steps will help you achieve consistent and high-quality results.

Parameter initialization is the cornerstone of effective training in modern vision systems. It influences how quickly your model converges and how well it performs. Studies show that initialization methods directly impact metrics like Peak Signal-to-Noise Ratio (PSNR), highlighting their role in achieving stable and efficient training. The non-convex nature of deep learning models makes this choice even more critical, as it determines whether your model reaches optimal solutions or gets stuck in suboptimal states.

You’ve explored strategies ranging from Xavier and He initialization to advanced techniques like task-specific initialization. Each method offers unique benefits, whether you’re working on shallow networks or deep architectures. Tailoring these strategies to your specific application ensures your machine vision system achieves the best results. Whether you’re denoising medical images or detecting objects in real-time, the right initialization sets your model up for success.

FAQ

What is the best weight initialization method for deep networks?

He initialization works best for deep networks with ReLU activations. It ensures stable gradients and faster convergence. Use it when training models for tasks like object detection or segmentation.

How can I debug weight initialization issues?

Monitor training metrics like loss and gradient flow. If the model struggles to converge, reinitialize weights. Use tools like gradient visualizations to identify problems and adjust initialization strategies accordingly.

Should I always use pretrained weights for vision tasks?

Pretrained weights improve performance for general tasks like image classification. For specialized applications like medical imaging, task-specific initialization often yields better results. Choose based on your task requirements.

Can I mix different initialization strategies in one model?

Yes, you can combine strategies like layer-wise initialization and pretrained weights. Tailor each layer’s initialization to its function. This improves training stability and performance, especially in complex architectures.

How do I implement weight initialization in PyTorch?

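Use PyTorch's torch.nn.init module. For example:

import torch.nn as nn

import torch.nn.init as init

layer = nn.Linear(128, 64)  # example layer; any module with a .weight works

init.xavier_uniform_(layer.weight)

Call it on each layer after the model is built, or wrap the calls in a function and pass it to model.apply.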
