Imagine a superhero who gives machines the power to see and decide. Model training plays that role in a machine vision system, helping machines use AI to spot details, find defects, and make smart choices. With AI, machine vision systems learn from thousands of images, which can raise classification accuracy by about 20% over rule-based methods. Machines using AI spot defects more reliably, cut false positives by 85%, and adapt to new parts with over 95% accuracy.

Machines trained with AI keep learning, improving inspection speed and reducing errors by about 90%. The table below summarizes the key improvements:

| Improvement Aspect | Impact with AI Model Training |
|---|---|
| Classification Accuracy | 20% higher than rule-based systems |
| False Positive Reduction | 85% fewer false alarms in defect detection |
| Adaptability to Part Variations | Over 95% accuracy, even with new or different parts |
| Inspection & Efficiency | Errors reduced by 90%, part-picking efficiency up by 40% |

Machines with AI superpowers now lead the way in smart automation, making every process sharper, faster, and more reliable.

Key Takeaways

- AI-powered model training helps machines learn from images, improving accuracy and reducing errors in detecting defects and objects.

- High-quality data and precise image annotation are essential for training reliable machine vision models that perform well in real-world tasks.

- Using advanced deep learning frameworks and continuous validation ensures models stay accurate and adapt to new challenges after deployment.

- AI in machine vision boosts efficiency and quality across industries like manufacturing, healthcare, and autonomous vehicles.

- Ongoing learning and ethical practices keep machine vision systems fair, safe, and ready for future innovations.

Model Training Machine Vision System

What Is Model Training?

Model training gives a machine vision system the ability to learn from images and data. In computer vision, model training means teaching a machine to recognize patterns, objects, or defects by showing it many labeled images. The machine uses these examples to adjust its internal parameters so that it can make decisions on new images. Unlike traditional systems that rely on fixed, hand-written rules, a trained model can adapt and improve as it sees more data.

The process uses several important components. The table below shows the main parts of model training for computer vision:

| Component | Role in Model Training |
|---|---|
| Large Datasets | Provide many examples for the machine to learn patterns |
| Optimization Techniques | Improve accuracy, speed, and adaptability |
| Adversarial Training | Makes the model more robust against tricky or unusual images |
| Meta-Learning | Helps the machine learn how to learn new tasks faster |
| Quantization & Pruning | Reduce model size and speed up processing |
| Knowledge Distillation | Transfers knowledge from large models to smaller, faster ones |
| Hyperparameter Tuning | Finds the best settings for the highest performance |

Model training in computer vision uses these tools to help machines handle complex tasks and make fewer mistakes.
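As a rough illustration of the quantization row in the table above, the sketch below maps floating-point weights to 8-bit integer codes with a single scale factor and then maps them back. The function names and the symmetric, per-tensor scheme are assumptions chosen for brevity; deep learning frameworks offer more elaborate schemes.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale would work.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights, used only to exercise the two functions.
weights = [0.52, -1.27, 0.003, 0.9]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Rounding error per weight is bounded by half of one quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The integer codes take a quarter of the storage of 32-bit floats, at the cost of the small rounding error measured in `max_error`.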

Why It Matters

Model training changes how machine vision systems see and understand the world. With AI, machines no longer need engineers to write every rule. Instead, they learn from data and can improve as new labeled images are added. This approach makes computer vision systems more flexible and powerful.

The table below compares traditional and AI-powered model training in machine vision:

| Training Aspect | Traditional Rule-Based Machine Vision | AI-Powered Machine Vision (Deep Learning & Edge Learning) |
|---|---|---|
| Training Method | Engineers write rules | Machines learn from labeled images |
| Expertise Required | Needs expert programming | Needs product knowledge and labeled data |
| Data Requirement | No training data | Hundreds of labeled images, sometimes only a few needed |
| Adaptability | Handles simple, repeatable tasks | Handles complex, changing tasks |
| Deployment Speed | Slow, manual rule creation | Fast, uses pre-trained models |
| Use Cases | Consistent products | Complex defect detection, classification |

Deep learning models extract features directly from data instead of relying on hand-crafted features. With AI, computer vision systems can use transfer learning to adapt to new tasks with less data. Progressive learning allows the machine to keep improving, even after deployment. Distributed training spreads the work across many processors to speed up learning, so models can be updated quickly. Together, these advances help machine vision systems get smarter and more reliable as they process more data.

Tip: Early stopping during model training helps prevent overfitting. Training stops once performance on held-out validation images stops improving, which keeps the model accurate on new data and avoids wasted training time.
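The early stopping idea in the tip can be sketched in a few lines. This is a minimal illustration, not any framework's callback: the function name, the `patience` and `min_delta` parameters, and the list of validation losses are all assumptions standing in for a real training loop.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a sequence of validation losses.

    val_losses stands in for the loss measured on held-out images after
    each epoch; a real loop would compute it instead of reading a list.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Validation loss improved: remember this epoch and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Ran out of epochs without hitting the patience limit.
    return len(val_losses) - 1, best_epoch


# Hypothetical loss curve: improves until epoch 3, then drifts upward.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.60]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=3)
```

In practice the weights saved at `best_epoch` are the ones kept, since later epochs only fit the training set more closely.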

AI in Machine Vision

AI-Powered Machine Vision Systems

AI in machine vision gives machines the power to see, understand, and act. These systems use computer vision to process images and videos, allowing machines to perform pattern recognition and object detection. AI-powered machine vision systems help machines learn from data, making them smarter and more adaptable.

Many industries use AI in machine vision for different tasks. Some common applications include:

- Object detection in autonomous vehicles to identify other cars, pedestrians, and road signs.

- Materials inspection in manufacturing, where machines find defects and imperfections.

- Biomedical research, where computer vision automates the analysis of microscopic images.

- Optical character recognition (OCR) to extract text from printed or handwritten documents.

- Signature recognition to prevent fraud by verifying handwriting.

- Object counting to improve productivity and reduce errors in factories.

AI in machine vision relies on convolutional neural networks (CNNs) and CNN-based detectors such as YOLO and Faster R-CNN. These models help machines extract features from images, supporting real-time decision-making. Machines can now detect subtle defects that traditional systems often miss.

AI-powered machine vision systems adapt quickly to new products and environments, making them essential for modern automation.

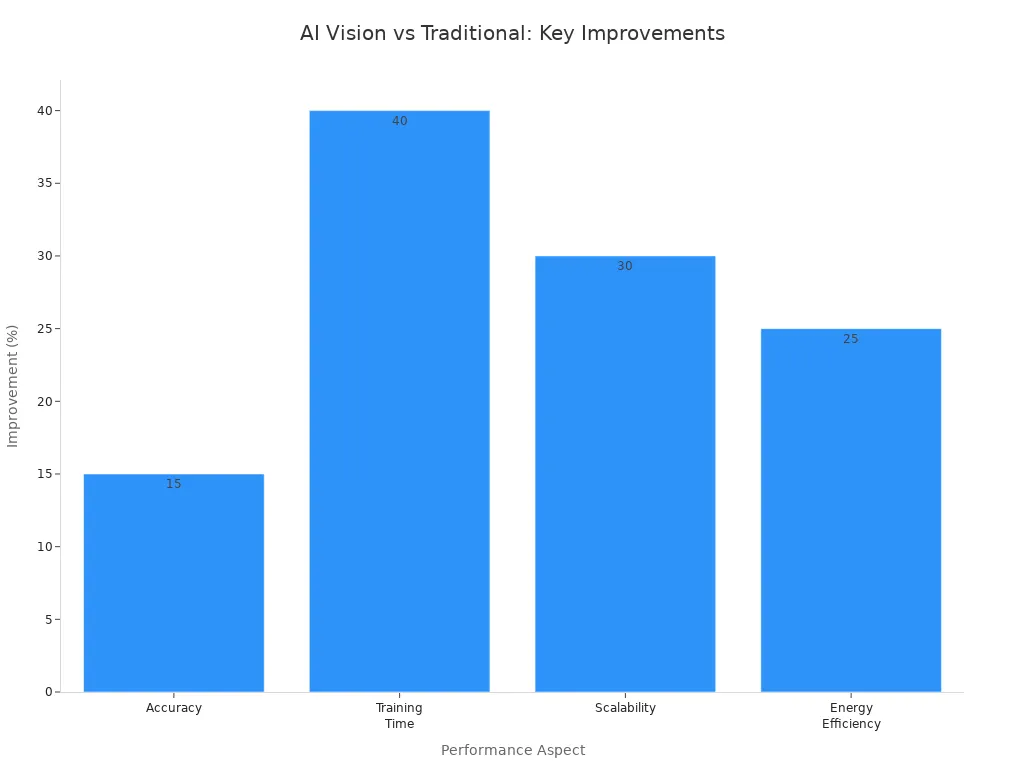

Benefits of AI

AI in machine vision brings many measurable benefits. Machines using computer vision now achieve higher accuracy and faster processing. The table below shows how AI-powered machine vision systems outperform traditional systems:

| Performance Aspect | AI-Powered Machine Vision Improvement Compared to Traditional Systems |
|---|---|
| Accuracy | Up to 15% higher accuracy due to deep learning and advanced algorithms |
| Inference Speed | Faster inference times enabled by models like YOLO and Faster R-CNN |
| Training Time | Reduced by up to 40%, allowing quicker model development and deployment |
| Scalability | Improved by approximately 30%, facilitating real-world application scaling |
| Energy Efficiency | Increased by about 25% through optimized algorithms and hardware |
| False Positives/Negatives | Reduced, enabling detection of subtle defects beyond traditional capabilities |

Organizations report big improvements after adopting AI in machine vision. In manufacturing, machines now inspect parts 25% faster and reach over 99% accuracy in defect detection. Retailers see an 18% drop in shrinkage and a 15% rise in profit margins. Farmers use computer vision for object detection, reducing pest losses by 7% and increasing crop yield by 0.7 tons per acre.

AI in machine vision also saves money. General Motors cut material waste by 30% with AI-powered planning. Schneider Electric saved 20% on energy costs by using AI to optimize power use. Tesla reduced product defects by 90% with AI-driven quality control. Synthetic data helps train computer vision models at a fraction of the cost, making AI in machine vision more affordable for many industries.

AI in machine vision transforms how machines perform pattern recognition and object detection, making every process smarter, faster, and more reliable.

Model Training Process

Data Collection

High-quality data collection forms the backbone of any successful machine vision project. The process starts with capturing clear, detailed images that represent the real-world environment where the machine will operate. Lighting, background, and camera settings play a crucial role in ensuring that images are sharp and free from noise or distortion.

- Use in-house cameras, crowdsourcing, automated systems, or generative AI to gather images.

- Ensure the dataset covers a wide range of scenarios and object types for robust model training.

- Maintain high image quality with proper exposure, color balance, and resolution.

- Align data collection with the intended use case and environment.

- Use the same edge devices for both data collection and inference to streamline the process.

- Validate and test datasets to confirm relevance and accuracy.

- Continuously retrain models to address data drift and changing conditions.

Image quality directly impacts the machine’s ability to perform image classification, object detection, and image segmentation. Poor lighting or motion blur can reduce accuracy, causing the model to misclassify objects or miss defects. Even small changes in pixels can confuse deep neural networks, so maintaining consistent quality is essential for improved accuracy and reliability.

Tip: Always perform quality checks on collected images to prevent errors during model training.
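One way to automate the quality checks mentioned in the tip is to reject images that are too dark, too bright, or too blurry before they reach training. The sketch below works on a grayscale image stored as a list of rows of 0-255 values and uses the variance of a simple Laplacian as a blur indicator. The function names and all thresholds are illustrative assumptions and would need tuning for a real camera setup.

```python
from statistics import mean, pvariance


def mean_brightness(image):
    """Average pixel value of a grayscale image given as a list of rows."""
    return mean(pixel for row in image for pixel in row)


def laplacian_variance(image):
    """Variance of a 4-neighbour Laplacian; low values suggest a blurry image."""
    height, width = len(image), len(image[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                image[y - 1][x] + image[y + 1][x]
                + image[y][x - 1] + image[y][x + 1]
                - 4 * image[y][x]
            )
    return pvariance(responses)


def passes_quality_check(image, min_brightness=40, max_brightness=215,
                         min_sharpness=100.0):
    """Accept an image only if exposure and sharpness are within limits.

    The three thresholds are hypothetical defaults, not recommended values.
    """
    brightness = mean_brightness(image)
    if not min_brightness <= brightness <= max_brightness:
        return False
    return laplacian_variance(image) >= min_sharpness


# Synthetic test images: a high-contrast checkerboard and a featureless frame.
sharp = [[0 if (x + y) % 2 == 0 else 255 for x in range(8)] for y in range(8)]
flat = [[128] * 8 for _ in range(8)]
```

Here `passes_quality_check(sharp)` accepts the checkerboard, while the flat frame is rejected because it contains no edges at all.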

Image Annotation

Data labeling and annotation give meaning to raw images, making them useful for machine learning. Accurate annotation allows the machine to learn how to perform image classification, object detection, and image segmentation tasks. Annotation quality depends on clear guidelines, reliable tools, and regular quality checks.

| Annotation Technique | Description | Advantages | Limitations/Considerations |
|---|---|---|---|
| Manual Annotation | Human experts label images for context and accuracy. | High precision, handles complex cases. | Time-consuming, costly, variable consistency. |
| Semi-Automatic Annotation | Combines software tools with human review. | Efficient, balances speed and quality. | Needs human oversight, tool quality matters. |
| Automatic Annotation | Uses machine learning to label images. | Fast, scalable for large datasets. | May miss nuance, errors with complex images. |
| Crowdsourcing | Distributed workers annotate images. | Scalable, cost-effective, quick for big sets. | Quality control and consistency challenges. |
| 2D Bounding Boxes | Draws rectangles around objects. | Simple, good for object detection. | Less precise for odd shapes. |
| 3D Cuboid Annotation | Adds depth by extending boxes into 3D. | Captures spatial relationships. | More complex, needs extra data. |
| Key Point Annotation | Marks specific points for features. | Useful for facial landmarks, postures. | Needs precision, can be slow. |
| Polygon Annotation | Draws polygons around irregular shapes. | Precise for complex objects. | Labor-intensive. |
| Semantic Segmentation | Labels each pixel by category. | Detailed understanding for image segmentation. | Computationally heavy, needs detailed work. |
| 3D Point Cloud Annotation | Labels 3D data for object recognition. | Useful for robotics, autonomous vehicles. | Needs special tools and skills. |

Accurate data labeling and annotation help the machine learn to recognize objects as humans do. High-quality annotation improves accuracy in image classification, object detection, and image segmentation. Human expertise adds context that automated tools may miss, while quality control reduces errors and bias. Reliable annotation tools and thorough processes lead to faster training cycles and better results.
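A common way to put a number on annotation quality for 2D bounding boxes is intersection over union (IoU) between the boxes two annotators drew for the same object. The sketch below assumes boxes are `(x_min, y_min, x_max, y_max)` tuples in pixels; the 0.5 review threshold and the variable names are illustrative assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Overlap width and height are clamped at zero for disjoint boxes.
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


# Two hypothetical annotations of the same object.
annotator_1 = (10, 10, 50, 50)
annotator_2 = (20, 20, 60, 60)

agreement = iou(annotator_1, annotator_2)
# Flag the pair for expert review when agreement is low (threshold is an assumption).
needs_review = agreement < 0.5
```

Pairs flagged by `needs_review` can be routed back to a senior annotator, which is one way to apply the regular quality checks described above.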

Deep Learning Frameworks

Deep learning and neural networks drive the success of modern machine vision systems. Several frameworks support model training for image classification, object detection, and image segmentation. Each framework offers unique features for building, training, and deploying models.

- TensorFlow: Popular for image recognition, supports CPUs and GPUs, integrates with TensorBoard for visualization.

- PyTorch: Known for flexibility, strong GPU acceleration, and ease of use in vision tasks.

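- Keras: High-level, beginner-friendly API, historically built on TensorFlow; Keras 3 also runs on JAX and PyTorch.

- Caffe: Fast and efficient, used in visual recognition.

- Microsoft Cognitive Toolkit (CNTK): Scalable, supports CNNs and RNNs; no longer actively developed.

- MXNet: Designed for distributed computing, supports multiple languages; retired by the Apache Software Foundation in 2023.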

- JAX: High-performance, supports multi-GPU training.

- PaddlePaddle: Optimized for scaling, used in vision tasks.

- MATLAB: Specialized toolboxes for easy model creation and deployment.

| Framework | Key Features and Usage in Machine Vision Models |
|---|---|
| PyTorch | Python-based, strong GPU acceleration, widely used for vision tasks. |
| TensorFlow | Flexible data flow graphs, supports CPUs/GPUs, popular for image recognition. |
| JAX | High-performance, supports distributed training. |
| PaddlePaddle | Intuitive interface, optimized for scaling training. |
| MATLAB | Easy model creation, automatic CUDA code generation. |

These frameworks help the machine learn complex patterns in images, improving accuracy and efficiency in tasks like image classification, object detection, and image segmentation. Pre-trained models and transfer learning further speed up model training and boost performance.

Training and Validation

Model training involves teaching the machine to recognize patterns in images. The process uses labeled data to help the machine learn how to perform image classification, object detection, and image segmentation. Validation ensures that the model works well on new, unseen data.

- Split data into separate training, validation, and test sets, and keep them strictly disjoint to prevent data leakage.

- Use cross-validation methods like k-fold or stratified k-fold to estimate performance.

- Keep validation data clean and representative of real-world conditions.

- Measure performance using metrics such as accuracy, precision, recall, and F1-score.

- Monitor for bias and fairness across different groups.

- Continuously track model performance in production to detect drift.

- Document the validation process and use version control for reproducibility.

- Automate validation pipelines for consistency and efficiency.

- Use domain expertise to interpret results and identify issues.

- Guard against overfitting with regularization and early stopping, and prevent data leakage by fitting any preprocessing on the training set only.

During training, the model is refined through hyperparameter tuning and feature selection, and its performance is checked with cross-validation. Pre-deployment checks include pass/fail tests, inference time measurement, and robustness checks. After deployment, continuous monitoring and drift detection ensure the model maintains high accuracy and efficiency. Alerts and retraining pipelines keep the machine’s performance at its best.
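Two of the practices listed above, disjoint stratified splits and precision/recall/F1 reporting, can be sketched with the standard library alone. The function names, the 70/15/15 split, and the toy labels are assumptions for illustration; in a real project a library such as scikit-learn would normally handle both steps.

```python
import random
from collections import defaultdict


def stratified_split(labels, percents=(70, 15, 15), seed=0):
    """Return disjoint (train, val, test) index lists with similar class balance."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Integer arithmetic keeps the split sizes exact and reproducible.
        n_train = len(indices) * percents[0] // 100
        n_val = len(indices) * percents[1] // 100
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]
    return train, val, test


def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary precision, recall, and F1 for the chosen positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical dataset: 20 defective parts (1) and 80 good parts (0).
labels = [1] * 20 + [0] * 80
train, val, test = stratified_split(labels)

# Hypothetical predictions on eight held-out images.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
```

Stratifying by class matters most when defects are rare: a purely random split could leave the validation set with almost no defective examples.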

Deployment and Learning

Deploying a trained model brings machine vision to real-world environments. The deployment strategy depends on the use case, such as real-time object detection on edge devices or batch processing in the cloud. Key considerations include inference speed, infrastructure, cost, and security.

- Convert models to formats like TensorFlow Lite or ONNX for compatibility.

- Use containerization (Docker), API serving (FastAPI), and cloud tools for scalable deployment.

- Test models with comprehensive test suites to ensure reliability.

- Plan rollback strategies to revert to stable versions if needed.

- Optimize performance with hardware acceleration and batch processing.

- Secure models with encryption and access controls.

After deployment, the machine continues to learn and adapt. Continuous monitoring tools track performance, detect data drift, and trigger retraining when needed. Feedback loops, such as actor-critic systems, help the machine adjust to new data and changing environments. Regular updates and validation keep the model accurate and efficient, supporting improved accuracy and reliability in image classification, object detection, and image segmentation.
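The monitoring loop described above can be approximated with a rolling window over prediction confidences. This is a deliberately simple sketch: using mean confidence as a drift signal, and the `DriftMonitor` class with its parameters, are assumptions made for illustration, and production systems often compare full input or output distributions instead.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Flag possible drift when recent confidence falls well below a baseline."""

    def __init__(self, baseline_confidence, window_size=50, max_drop=0.10):
        self.baseline = baseline_confidence
        self.window = deque(maxlen=window_size)
        self.max_drop = max_drop

    def observe(self, confidence):
        """Record one prediction confidence; return True if retraining is advised."""
        self.window.append(confidence)
        if len(self.window) < self.window.maxlen:
            # Not enough recent predictions yet to judge.
            return False
        return self.baseline - mean(self.window) > self.max_drop


# Hypothetical stream: confidences drop after a lighting change on the line.
monitor = DriftMonitor(baseline_confidence=0.92, window_size=5, max_drop=0.10)
stream = [0.93, 0.91, 0.90, 0.92, 0.94, 0.70, 0.68, 0.72, 0.65, 0.71]
alerts = [monitor.observe(c) for c in stream]
```

In this example the first alert fires on the eighth prediction, once enough low-confidence results have filled the window; that alert is the point where a retraining pipeline would be triggered.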

Note: Ongoing learning and adaptation ensure that machine vision systems stay effective as data and environments evolve.

Challenges in Model Training

Data Quality

High-quality data is the foundation for any successful machine vision model. Machines need clear, accurate images to learn how to recognize objects and patterns. Many common data quality issues can affect model training:

- Gaps in content or missing rare cases make it hard for the machine to learn all possible scenarios.

- Poor annotation or labeling mistakes reduce accuracy and cause confusion.

- Synthetic datasets may lack realism, which limits the machine’s ability to perform well in real environments.

- Environmental factors like lighting or background changes can introduce noise.

- Collecting ground truth data is often difficult and expensive.

- Noisy or inconsistent data lowers both accuracy and efficiency.

- Large datasets are hard to label at scale, which slows down the process.

Manual labeling gives high precision but takes time and money. Automated labeling is fast and scalable, but it may miss important details. Hybrid approaches try to balance speed and accuracy. AI-driven labeling systems can reduce error rates below 1%, which boosts both accuracy and efficiency. Synthetic data can improve model accuracy by about 10% and cut data collection costs by 40%.

| Data Quality Issue | Impact on Model Training Outcomes |
|---|---|
| Random Disturbances | Moderate decrease in accuracy; machines can handle some noise. |
| Systematic Biases | Large drop in model quality, reducing accuracy and reliability. |
| Missing or Incorrect Data | Bias in scoring data further lowers performance. |
| Insufficient Data Quantity | Fewer examples reduce accuracy; more data improves results. |
| Missing Important Variables | Removing key features decreases accuracy, though some compensation is possible. |

Poor data quality leads to models that overfit noise and fail to generalize. This reduces both accuracy and efficiency, making the machine less reliable in real-world tasks.

Avoiding Bias

Bias in machine vision models can cause unfair or unsafe outcomes. Machines may make mistakes that affect certain groups more than others. For example, a machine might miss pedestrians wearing dark clothing or misidentify people based on race or gender. These errors can lead to safety risks, discrimination, or unreliable results.

To avoid bias, teams use several strategies:

- Generate synthetic data to balance minority classes and improve accuracy.

- Rebalance datasets and use fairness constraints during training.

- Adjust model outputs to ensure equal opportunity for all groups.

- Monitor and audit models for bias over time.

- Increase diversity in data collection and among team members.

- Use advanced tools for continuous bias detection and correction.

- Apply ethical principles and adaptive algorithms to improve fairness.

Machines trained with diverse and representative data show higher accuracy and efficiency. Real-world testing and ongoing monitoring help maintain fairness and reliability.
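Auditing for bias usually starts with breaking a metric down by group. The sketch below computes recall (the true positive rate) per group and the gap between the best and worst group, which is one way to check the equal-opportunity goal mentioned in the list above. The group names, labels, and function name are hypothetical.

```python
from collections import defaultdict


def recall_by_group(y_true, y_pred, groups, positive=1):
    """Recall (true positive rate) computed separately for each group."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        if truth == positive:
            positives[group] += 1
            if prediction == positive:
                hits[group] += 1
    return {group: hits[group] / positives[group] for group in positives}


# Hypothetical pedestrian-detection results, grouped by lighting condition.
y_true = [1, 1, 1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 1, 0, 1]
groups = ["day", "day", "day", "day",
          "night", "night", "night", "night",
          "day", "night"]

recalls = recall_by_group(y_true, y_pred, groups)
# A large gap means the model misses positives far more often in one group.
recall_gap = max(recalls.values()) - min(recalls.values())
```

Here the model finds 75% of pedestrians in daylight but only 50% at night, a gap that would justify collecting more night-time images or rebalancing the dataset.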

Computational Demands

Training machine vision models requires significant computing power. Machines must process large datasets and complex neural networks, which can slow down training and reduce efficiency. Organizations address these demands by:

- Using high-performance servers and storage systems.

- Leveraging cloud platforms to scale resources as needed.

- Applying data augmentation to increase data diversity without extra collection.

- Using regularization to prevent overfitting and improve efficiency.

- Adopting transfer learning to reuse existing models and save time.

- Encouraging teamwork and clear communication to manage resources well.

Cloud solutions like Oracle Cloud Infrastructure provide scalable compute and storage, which supports both accuracy and efficiency. These strategies help machines train faster and perform better, even as data and model complexity grow.
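Data augmentation, listed above, creates extra training examples from images that already exist. A minimal sketch on a grayscale image stored as rows of 0-255 values follows; the function names and the brightness offsets are assumptions, and real pipelines typically use a library's augmentation utilities.

```python
def horizontal_flip(image):
    """Mirror a grayscale image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def adjust_brightness(image, delta):
    """Shift every pixel by delta, clamped to the valid 0-255 range."""
    return [[max(0, min(255, pixel + delta)) for pixel in row] for row in image]


def augment(image):
    """Return the original image plus three simple variants."""
    return [
        image,
        horizontal_flip(image),
        adjust_brightness(image, 30),
        adjust_brightness(image, -30),
    ]


# Tiny hypothetical 2x3 image, just to show the transformations.
image = [[10, 20, 30],
         [40, 50, 60]]
variants = augment(image)
```

Flips are only safe when the label does not depend on orientation, and for object detection any bounding boxes must be mirrored along with the image.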

Real-World Impact

Manufacturing

Manufacturing plants now use AI-powered machine vision to improve quality and speed. Dell and Cognex worked together to bring AI to the factory floor. Their system uses deep learning tools trained on labeled images. The AI checks for defects, reads text, and sorts products. Dell’s NativeEdge platform helps deploy these AI models quickly. The machine vision system finds problems in real time, which boosts accuracy and saves time. Factories also use classical machine learning models such as Gaussian Mixture Models and Support Vector Machines. These models help machines sort fruit by shape or spot flaws in mesh materials. K-Nearest Neighbors models let machines classify objects by their shape. These AI tools make machines smarter and help factories run better.

Healthcare

Hospitals and clinics use AI to help doctors find diseases faster. Machine vision models trained with influence-based data selection pick the most informative images for learning. This method helps AI spot rare diseases and avoid mistakes. Transfer learning lets AI reuse knowledge from other tasks, which can raise diagnostic accuracy by 30%. Human-in-the-loop systems add expert review to AI results. When radiologists check the AI’s low-confidence cases, errors drop by 37%. These AI systems help doctors give better care and make fewer mistakes. The machine vision models also adapt to new diseases, keeping healthcare safe and fair.
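The human-in-the-loop pattern described above comes down to a routing rule: confident predictions pass through, and uncertain ones go to an expert. A minimal sketch follows, with a hypothetical 0.80 threshold, made-up case identifiers, and an assumed `(case_id, label, confidence)` tuple format.

```python
def route_predictions(predictions, confidence_threshold=0.80):
    """Split predictions into auto-accepted results and cases for expert review.

    Each prediction is a (case_id, label, confidence) tuple.
    """
    auto_accepted, needs_review = [], []
    for case_id, label, confidence in predictions:
        if confidence >= confidence_threshold:
            auto_accepted.append((case_id, label))
        else:
            needs_review.append((case_id, label))
    return auto_accepted, needs_review


# Hypothetical model outputs for four scans.
predictions = [
    ("scan-001", "normal", 0.97),
    ("scan-002", "nodule", 0.62),
    ("scan-003", "normal", 0.81),
    ("scan-004", "nodule", 0.79),
]
auto_accepted, needs_review = route_predictions(predictions)
```

Lowering the threshold reduces the review workload but lets more uncertain results through, so the value is a clinical and operational decision rather than a purely technical one.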

Autonomous Vehicles

Self-driving cars rely on AI and machine vision to see the road. Training with large datasets helps AI detect lanes, signs, and people. The machine vision system learns to avoid obstacles and drive safely. Sensor fusion combines data from cameras, radar, and LiDAR. This gives the AI a full view of the world. Machine learning models keep improving as they see more data. They adapt to new roads and weather. Defensive training helps AI spot and resist tricks that could fool the system. These advances make autonomous vehicles safer and more reliable.
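Sensor fusion takes many forms. One of the simplest is inverse-variance weighting, where each sensor's estimate of the same quantity is weighted by how precise that sensor is. The sketch below fuses three distance readings; the numbers, the function name, and the assumption of independent sensor errors are illustrative only, and real vehicles use far richer methods such as Kalman filters.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Assumes the sensors' errors are independent. Returns the fused value
    and the variance of the fused estimate.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical distance-to-obstacle readings in metres: (value, variance).
readings = [
    (25.0, 4.0),    # camera: least precise
    (24.0, 1.0),    # radar
    (24.4, 0.25),   # LiDAR: most precise
]
fused_distance, fused_variance = fuse_estimates(readings)
```

The fused variance is smaller than that of any single sensor, which is the basic reason combining sensors gives a more reliable picture than relying on one.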

Future of AI-Powered Machine Vision Systems

The future of AI in machine vision looks bright. New trends will shape how machines learn and work. Self-supervised learning lets AI learn from unlabeled data, making training faster and cheaper. Vision Transformers help AI see patterns across whole images. 3D vision and depth estimation give machines a better sense of space. Hyperspectral imaging lets AI see details beyond normal sight, which helps in farming and medicine. Edge computing puts AI on devices, so machines can make decisions quickly. Multi-agent AI systems let machines work together to solve big problems. Generative virtual playgrounds help AI train in safe, simulated worlds. Explainable AI makes it easier to trust machine decisions. Ethical AI ensures fairness and safety. These trends will help AI-powered machine vision systems become even more powerful and trusted in every field.

Model training gives every machine the power to see and make smart choices. Like a superhero, this process transforms a simple machine into a tool that solves real problems. AI-powered vision systems now help people in factories, hospitals, and on the road. New advances will let each machine learn faster and adapt to new challenges. The future holds even more possibilities for smart machines.

FAQ

What is the main goal of model training in machine vision?

Model training helps machines learn to recognize objects, patterns, or defects in images. The goal is to improve accuracy and reliability so machines can make smart decisions in real-world tasks.

How does data quality affect machine vision models?

High-quality data leads to better learning and higher accuracy. Poor data, such as blurry images or wrong labels, can confuse the model. Machines need clear and well-labeled images to perform well.

Can machine vision systems keep learning after deployment?

Yes! Many systems use continuous learning. They collect new data, monitor performance, and retrain models. This process helps machines adapt to changes and stay accurate over time.

What industries use AI-powered machine vision the most?

Manufacturing, healthcare, and transportation use machine vision often. Factories inspect products, hospitals analyze medical images, and self-driving cars detect objects on the road. Many other fields also benefit from this technology.
