Selecting the right model for a machine vision system does not have to feel overwhelming. Many tools and metrics now make the process easier.

- Cross-validation methods report clear accuracy scores, such as 84.4%, to compare models directly.

- Packages like scikit-learn and R’s glmnet offer built-in regularization and cross-validation, which help users avoid overfitting.

- Metrics such as Mean Absolute Error and R-squared provide easy ways to judge model performance on measurement-style (regression) tasks.

A step-by-step approach clarifies each stage, from defining the problem to choosing the best model. This structure helps teams align their work with real-world goals. With the right guidance, model selection for a machine vision system becomes manageable for any team.

Key Takeaways

- Choose machine vision models based on the task complexity; simple tasks fit traditional models, complex tasks need deep learning.

- Use good hardware like high-resolution cameras and proper lighting to improve image quality and model accuracy.

- Evaluate models with clear metrics such as accuracy, precision, recall, and F1 score to ensure reliable performance.

- Follow a step-by-step workflow including data quality checks, feature selection, model tuning, and fairness testing for best results.

- Avoid common mistakes like overfitting, ignoring bias, and poor documentation to build trustworthy machine vision systems.

Application Needs

Vision Task

A machine vision system must match its vision task to the needs of the application. In factories, image processing systems help with many jobs. These include sorting parts, guiding robots, counting items, and checking for defects. For example, a system might count screws on a conveyor belt or check if a product has the right label. Some tasks need the system to measure the size of objects or read barcodes. Others require the system to spot small flaws on surfaces or make sure workers wear safety gear.

Industrial image processing systems often use cameras to capture real-time images. The image processing software then checks these images for problems or counts items. Programmable logic controllers can use this information to remove bad products or alert workers. The choice of hardware and software depends on the vision task. For example, surface defect detection needs high-definition cameras and advanced image processing. In contrast, food temperature monitoring uses thermal imaging and deep learning. The table below shows how different tasks need different hardware and software:

| Application Need / Task | Hardware Choice | Software Choice | Reason / Impact |
|---|---|---|---|
| Surface defect detection | High definition cameras | Deep learning, image processing | Detailed inspection, high accuracy |
| Internal composition analysis | Hyperspectral sensors, X-ray | Multispectral image classification | Internal properties, advanced imaging |
| Volume and size measurement | 3D stereo cameras, RGB-D cameras | Depth analysis algorithms | Accurate volume estimation |

Performance Criteria

Performance is key for any image processing system. The system must work fast and give correct results. Common performance measures include accuracy, precision, recall, and F1 score. These help users know if the machine vision system finds defects or counts items correctly. For example, accuracy shows how often the system is right. Precision tells how many detected defects are real. Recall shows how many real defects the system finds. F1 score combines precision and recall for a balanced view.
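The four measures above follow directly from the counts in a confusion matrix. The short sketch below shows the arithmetic. The function name and the inspection counts are hypothetical and chosen only for illustration.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    # Precision: share of flagged parts that are real defects.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of real defects that were flagged.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical inspection run: 1,000 parts, 50 of them truly defective.
metrics = classification_metrics(tp=40, fp=10, fn=10, tn=940)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this made-up run, accuracy looks high because most parts are good, while precision and recall show that one in five defects is still missed. This is why teams rarely rely on accuracy alone when defects are rare.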

Other performance factors include how fast the system works and how much energy it uses. Some systems must process images in real time, so low latency is important. Model size also matters, especially for small devices. Standard datasets, like ImageNet, help compare different systems. Statistical tests and confidence intervals make sure the results are meaningful. Good image information processing depends on both strong hardware and smart software. Specialized hardware, like GPUs, can make some algorithms work better, but may limit others. This shows that application needs shape both hardware and software choices in a machine vision system.
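A confidence interval shows how much an accuracy score could move with a different test set. The sketch below uses the Wilson score interval, which is one common choice and not the only one. The test-set size of 320 images is an assumption made for illustration; 270 correct predictions gives roughly the 84.4% accuracy mentioned earlier.

```python
import math


def wilson_interval(correct, total, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives about 95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = correct / total
    denom = 1 + z ** 2 / total
    center = (p + z ** 2 / (2 * total)) / denom
    half_width = z * math.sqrt(
        p * (1 - p) / total + z ** 2 / (4 * total ** 2)
    ) / denom
    return center - half_width, center + half_width


# Assumed test set: 320 images, 270 classified correctly (about 84.4%).
low, high = wilson_interval(correct=270, total=320)
print(f"accuracy 95% interval: {low:.3f} to {high:.3f}")
```

If the intervals of two models overlap heavily, the difference between their scores may not be meaningful, and a larger test set or a paired statistical test can help decide.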

Model Selection Machine Vision System

Model Types

Model selection for a machine vision system starts with understanding the main types of models. Two major groups exist: traditional machine learning models and deep learning models. Each group has unique strengths and fits different needs in a machine vision system.

The table below compares these two groups:

| Aspect | Traditional Machine Learning (ML) | Deep Learning (DL) |
|---|---|---|
| Training Time | Shorter; seconds to minutes | Longer; needs more time and powerful GPUs |
| Computational Resources | Runs on standard CPUs; cost-effective | Needs GPUs or special hardware |
| Dataset Size Requirement | Works with small datasets | Needs large labeled datasets |
| Model Complexity | Simple models (e.g., decision trees, linear regression) | Complex, multi-layer architectures |
| Scalability | Limited with big data | Improves with more data; highly scalable |
| Interpretability | Easy to understand and debug | Hard to explain; often a "black box" |
| Processing Time | Very fast inference | Slower inference compared to ML |

Traditional models include decision trees, support vector machines, and logistic regression. These models work well when data is limited and the problem is simple. Deep learning models, such as convolutional neural networks (CNNs), handle complex images and large datasets. They learn patterns directly from raw data, making them powerful for tasks like object detection and image classification.

When to Use Each

Model selection for a machine vision system depends on the task and the data. Simple tasks, such as counting objects or reading barcodes, often use traditional models. These models need less data and run on basic hardware. They also give clear reasons for their decisions, which helps users trust the results.

Deep learning models shine in complex tasks. For example, detecting tiny defects on a surface or recognizing faces in a crowd needs deep learning. These models handle many image details and learn from large datasets. They require more computing power, but they can find patterns that traditional models miss.

Researchers found that task complexity affects which strategy works best. By loose analogy, when a machine vision system faces a simple job, it can rely on a simpler approach, like a traditional model. As the task gets harder, the system benefits from a richer approach, such as deep learning. The brain appears to use a similar strategy. It switches to more flexible and explorative methods when tasks become complex. When uncertainty is high, a system may return to simpler models for reliability.

Tip: Always match the model to the task. Simple jobs need simple models. Complex jobs need advanced models.

Model selection also needs strong evaluation. Cross-validation helps teams test models and detect overfitting. Overfitting happens when a model learns the training data too well but fails on new data. Cross-validation splits the data into parts, trains the model on some parts, and tests it on others. This process checks if the model works well on different data.

Common cross-validation strategies and related safeguards include:

- K-Fold cross-validation splits data into K groups and uses each group once as the test set while training on the rest.

- Nested cross-validation helps pick the right model complexity and checks generalization.

- Stratified cross-validation keeps class balance, which is important for rare defects.

- Time series cross-validation respects the order of images in time-based tasks.

- Regularization adds penalties to the model, making it simpler and less likely to overfit.

- Keeping related data together in the same fold avoids data leakage.
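The stratified strategy from the list above can be sketched in a few lines of plain Python. This is a minimal illustration, not a replacement for a library: scikit-learn offers `KFold`, `StratifiedKFold`, and `GroupKFold` for real projects. The function name and the toy labels are hypothetical, and the sketch skips shuffling to stay short.

```python
from collections import defaultdict


def stratified_k_fold(labels, k):
    """Yield (train_idx, test_idx) pairs with similar class ratios per fold."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        # Deal each class's samples round-robin so every fold gets its share.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)

    for i in range(k):
        test_idx = sorted(folds[i])
        train_idx = sorted(
            idx for j, fold in enumerate(folds) if j != i for idx in fold
        )
        yield train_idx, test_idx


# Toy data set: 16 good parts and 4 rare defects.
labels = ["ok"] * 16 + ["defect"] * 4
splits = list(stratified_k_fold(labels, k=4))
for train_idx, test_idx in splits:
    defects_in_test = sum(1 for i in test_idx if labels[i] == "defect")
    print(len(train_idx), len(test_idx), defects_in_test)
```

Each test fold here holds exactly one defect, so no fold is evaluated without the rare class. To avoid leakage, images of the same physical part should share one index before splitting, so they never land on both sides of a fold.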

Model selection becomes easier with these tools. Teams can compare models using average scores from cross-validation, such as accuracy or F1 score for classification tasks, or R² and mean absolute error for measurement tasks. These steps help ensure the chosen model works well in real-world settings.

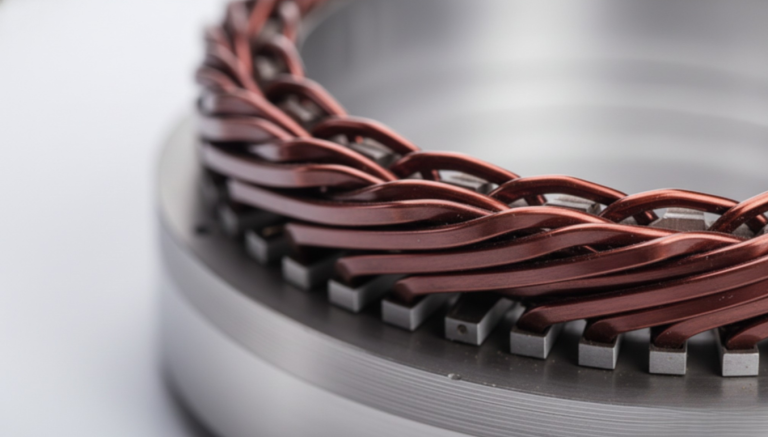

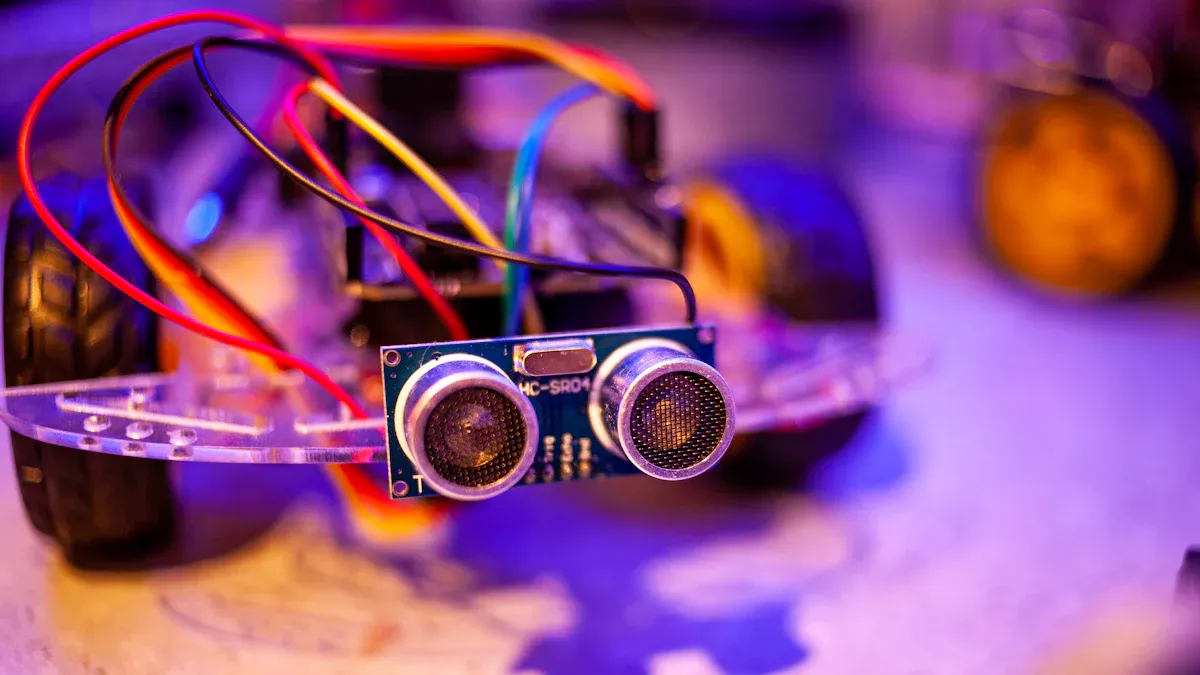

Machine Vision Hardware

Camera and Lens

The camera and lens form the core of any image acquisition unit in machine vision hardware. Their choices shape the quality and usefulness of the captured images. The field of view and resolution set the limits for what the system can see and measure. High-resolution cameras allow the image acquisition unit to spot tiny defects, which boosts performance in tasks like surface inspection. The lens also plays a big role. Different types of lens distortion, such as barrel or pincushion, can change how objects appear. These distortions may cause errors in size estimation, sometimes by as much as 30%. Calibration and correction algorithms help fix these problems and improve measurement accuracy.
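Barrel and pincushion distortion are often described with a radial polynomial model. The sketch below assumes a simple two-coefficient radial model on normalized image coordinates. The coefficient values are hypothetical; in practice they come from a calibration routine, and real lenses may also need tangential terms.

```python
def apply_radial_distortion(x, y, k1, k2=0.0):
    """Map an ideal normalized point to its distorted position.

    Negative k1 pulls points toward the center (barrel distortion);
    positive k1 pushes them outward (pincushion distortion).
    """
    r2 = x * x + y * y
    factor = 1 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor


def undistort_point(xd, yd, k1, k2=0.0, iterations=20):
    """Invert the radial model by fixed-point iteration (small distortions)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1 + k1 * r2 + k2 * r2 * r2
        x, y = xd / factor, yd / factor
    return x, y


# Hypothetical barrel distortion with k1 = -0.1.
xd, yd = apply_radial_distortion(0.5, 0.3, k1=-0.1)
x, y = undistort_point(xd, yd, k1=-0.1)
print(f"distorted: ({xd:.4f}, {yd:.4f})  corrected: ({x:.4f}, {y:.4f})")
```

Because the error grows with distance from the image center, uncorrected distortion affects size estimates most for objects near the edge of the field of view.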

| Lens Distortion Impact | Effect on Machine Vision Performance |
|---|---|
| Maximum distortion up to 1.4 pixels in certain lenses | Causes measurable decreases in measurement accuracy if uncorrected |
| Small distortions statistically significant | Cannot be ignored in practical applications due to impact on reliability |
| Distortion parameters highly significant without overfitting | Indicates consistent influence on system accuracy |

A well-chosen camera and lens combination in the image acquisition unit ensures the machine vision hardware meets the needs of the application. High-speed cameras support real-time inspection, which is important for fast production lines. Optical resolution and magnification help the system see small features clearly.

Sensors and Lighting

Sensors and lighting complete the image acquisition unit and directly affect machine vision hardware performance. Sensor resolution determines how much detail the system can capture. Advanced sensors paired with the right lighting reveal defects that lower-quality setups might miss. Lighting conditions, such as brightness and angle, change how features appear in images. Studies show that optimized lighting improves image clarity, which helps neural network models reach high accuracy. For example, EfficientNet achieved over 98% defect detection accuracy when paired with good lighting and sensor choices.

Environmental factors, including dust and humidity, can also affect sensor performance. Machine vision hardware often uses sensor fusion, combining data from electric current, vibration, and vision. This approach increases model accuracy and helps the system adapt to different tasks. The right combination of sensors and lighting in the image acquisition unit ensures reliable results and supports the best model selection for each application.

Model Evaluation

Comparison Criteria

Teams use clear criteria to compare models in machine vision projects. They look at how well each model solves the image processing task. Common metrics include accuracy, precision, recall, and F1 score. These numbers show if a model finds defects or counts objects correctly. For object detection, teams use Intersection over Union (IoU) and mean Average Precision (mAP). Image segmentation tasks rely on the Dice Coefficient and Jaccard Index. Each metric gives a different view of model performance.

| Task Type | Common Evaluation Metrics | Purpose/Description |
|---|---|---|
| Image Classification | Accuracy, Precision, Recall, F1 Score, Confusion Matrix | Measure classification correctness, balance between precision and recall, and error types |
| Object Detection | Intersection over Union (IoU), mean Average Precision (mAP) | Evaluate localization accuracy and detection precision across classes |
| Image Segmentation | Dice Coefficient, Jaccard Index, Pixel Accuracy | Assess overlap and similarity between predicted and true segmentation masks |
| Image Generation | Inception Score (IS), Frechet Inception Distance (FID) | Quantify quality and diversity of generated images compared to real data |
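The overlap metrics in the table share one idea: compare the region a model predicts with the true region. The sketch below computes IoU for two boxes and the Dice and Jaccard scores for two small binary masks. The box format `(x_min, y_min, x_max, y_max)` and the flat 0/1 mask lists are assumptions made to keep the example short.

```python
def box_iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def dice_and_jaccard(pred_mask, true_mask):
    """Dice coefficient and Jaccard index for flat binary (0/1) masks."""
    inter = sum(1 for p, t in zip(pred_mask, true_mask) if p and t)
    pred_sum, true_sum = sum(pred_mask), sum(true_mask)
    total = pred_sum + true_sum
    dice = 2 * inter / total if total else 1.0
    union = total - inter
    jaccard = inter / union if union else 1.0
    return dice, jaccard


iou = box_iou((0, 0, 10, 10), (5, 5, 15, 15))
dice, jaccard = dice_and_jaccard([1, 1, 1, 0, 0, 0], [0, 1, 1, 1, 0, 0])
print(f"IoU={iou:.3f}  Dice={dice:.3f}  Jaccard={jaccard:.3f}")
```

The Jaccard index is the same quantity as IoU applied to masks, and Dice always scores the same overlap at least as high as Jaccard does, so the two should not be compared against each other directly.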

Some teams use a Multi-Criteria Decision Analysis (MCDA) framework. This method combines several metrics, such as accuracy and computational complexity, to rank models. The Analytic Hierarchy Process (AHP) helps teams decide which criteria matter most. This approach works well for complex image information processing tasks where one metric is not enough.
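A weighted sum is one simple way to combine several criteria into a single ranking. The sketch below is not AHP itself: the weights are supplied directly instead of being derived from pairwise comparisons. All model names, scores, and weights are hypothetical.

```python
def rank_models(candidates, weights, lower_is_better=()):
    """Rank models by a weighted sum of min-max normalized criteria."""
    scores = {name: 0.0 for name in candidates}
    for criterion, weight in weights.items():
        values = [m[criterion] for m in candidates.values()]
        lo, hi = min(values), max(values)
        span = hi - lo
        for name, m in candidates.items():
            if span == 0:
                norm = 1.0  # all models tie on this criterion
            else:
                norm = (m[criterion] - lo) / span
                if criterion in lower_is_better:
                    norm = 1.0 - norm  # e.g. latency: smaller is better
            scores[name] += weight * norm
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical candidates and weights, for illustration only.
candidates = {
    "small_cnn": {"f1": 0.91, "latency_ms": 8.0},
    "large_cnn": {"f1": 0.96, "latency_ms": 40.0},
    "svm_hog": {"f1": 0.85, "latency_ms": 2.0},
}
weights = {"f1": 0.6, "latency_ms": 0.4}
ranking = rank_models(candidates, weights, lower_is_better=("latency_ms",))
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

With these made-up numbers, the mid-sized model ranks first because it gives up little accuracy for a large latency gain. Changing the weights changes the ranking, which is why teams should agree on the weights before looking at the results.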

Teams also use A/B testing to compare model predictions with ground truth. They track model versions and use visual tools like confusion matrices. Custom metrics help when standard ones do not fit the image processing task.

Tools and Benchmarks

Industry uses trusted tools and benchmarks to test machine vision models. MLPerf stands out as a gold standard for image processing and object detection. DAWNBench measures training time and cost across cloud platforms. DeepBench checks hardware performance for image information processing. TensorFlow and Nvidia CUDA benchmarks help teams profile models and optimize GPU use.

- MLPerf: Tests models on many hardware types and image processing tasks.

- DAWNBench: Focuses on speed and cost for training and inference.

- DeepBench: Measures low-level hardware performance.

- TensorFlow benchmark suite: Profiles model performance across devices.

- NVIDIA CUDA benchmarks: Check GPU speed and energy use.

Teams look at latency, throughput, memory use, and energy efficiency. They choose tools that match their image processing needs and hardware. These benchmarks help teams pick the best model for real-world image information processing.
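Before turning to a full benchmark suite, teams can get a rough feel for latency and throughput with a small timing loop. The sketch below uses only the Python standard library. The `fake_predict` function and the synthetic frames are stand-ins for a real model and real images, and the 95th percentile is a simple index-based approximation.

```python
import statistics
import time


def benchmark(predict, inputs, warmup=3):
    """Time a prediction function and report latency and throughput."""
    for item in inputs[:warmup]:
        predict(item)  # warm-up runs are not timed

    latencies = []
    for item in inputs:
        start = time.perf_counter()
        predict(item)
        latencies.append(time.perf_counter() - start)

    total = sum(latencies)
    p95_index = int(0.95 * (len(latencies) - 1))
    return {
        "mean_latency_ms": 1000 * statistics.mean(latencies),
        "p95_latency_ms": 1000 * sorted(latencies)[p95_index],
        "throughput_per_s": len(latencies) / total if total > 0 else float("inf"),
    }


def fake_predict(image):
    """Stand-in for a real model: averages the pixel values."""
    return sum(image) / len(image)


frames = [[i % 256 for i in range(10_000)] for _ in range(50)]
report = benchmark(fake_predict, frames)
print(report)
```

Numbers from a loop like this depend on the machine and on whatever else is running, so they suit quick comparisons on one device more than published claims.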

Selection Workflow

Step-by-Step Process

A clear workflow helps teams choose the best model for a machine vision system. The following checklist guides users through each important step:

- Check Data Quality: Remove low-quality data and samples with missing values. For example, filter out features with a minimum frequency less than 0.01 to keep only reliable information.

- Assess Feature Relevance: Compute correlation coefficients for each feature. This step shows which features matter most for the task.

- Select and Test Features: Train models using different feature subsets. Measure how each set affects accuracy and resource use.

- Split Data Properly: Use separate sets for training, validation, and testing. Always keep the final test set disjoint from the others to avoid bias.

- Tune Model Parameters: Adjust settings like the number of trees in a random forest or the kernel type in an SVM. This step improves model performance.

- Evaluate with Multiple Metrics: Use accuracy, recall, and other metrics. Conduct A/B testing to see how model changes affect business goals.

- Test for Fairness and Bias: Collect data on under-represented groups. Check if any features link to protected categories.

- Check Model Staleness: Compare new models to older ones. Decide how often to retrain based on performance drops.

- Ensure Reproducibility: Minimize randomness in training. Run unit and integration tests on the full pipeline.

- Report Clearly: Follow a structured checklist, such as the 7-item PRIME list, to reduce errors and bias.

Tip: Teams that follow this checklist often see fewer errors and more reliable results.
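Two steps from the checklist, splitting data properly and ensuring reproducibility, fit in one short sketch. It assumes each sample has an ID and that a fixed random seed is acceptable. The function name, the split fractions, and the seed are illustrative choices.

```python
import random


def split_dataset(sample_ids, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle IDs with a fixed seed and cut them into disjoint subsets."""
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)  # fixed seed makes the split repeatable

    n_test = round(len(ids) * test_fraction)
    n_val = round(len(ids) * val_fraction)
    test = ids[:n_test]
    val = ids[n_test:n_test + n_val]
    train = ids[n_test + n_val:]
    return train, val, test


train, val, test = split_dataset(range(200))

# The three subsets must not share any sample.
assert not set(train) & set(val)
assert not set(train) & set(test)
assert not set(val) & set(test)
print(len(train), len(val), len(test))
```

When several images show the same physical part, passing part IDs instead of image IDs keeps all views of one part in the same subset.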

Common Pitfalls

Many teams face similar problems during model selection. Knowing these pitfalls helps avoid costly mistakes:

- Skipping data quality checks can lead to poor results.

- Using overlapping data for training and testing leaks information, inflates test scores, and hides overfitting.

- Ignoring feature relevance wastes resources and lowers accuracy.

- Failing to test for bias may create unfair models.

- Not updating models leads to outdated predictions.

- Missing clear documentation makes results hard to reproduce.

Teams should review each step and avoid shortcuts. Careful planning leads to a successful machine vision system.

Examples

Defect Detection

Machine vision systems help factories find defects in products quickly and accurately. These systems use cameras and smart models to spot problems like cracks, missing parts, or surface flaws. Engineers measure how well these systems work using clear metrics.

| Metric | Definition and Role in Defect Detection |
|---|---|
| Accuracy | Shows how many total predictions are correct, both for defects and non-defects. |
| Precision | Measures how many detected defects are actually real defects. |
| Recall | Tells how many real defects the system finds out of all possible defects. |
| F1-Score | Balances precision and recall, giving a single score for both. |
| ROC Curve | Plots true positive rate against false positive rate, showing how well the model separates defects from good parts. |
| AUC | Gives a single number for the ROC curve, showing the model’s overall ability to tell defects from non-defects. |
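AUC has a useful interpretation: it equals the chance that a randomly chosen defect gets a higher score than a randomly chosen good part. The sketch below computes AUC directly from that definition by comparing every defect with every good part. The labels and scores are made up, and the pairwise loop suits small test sets only.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a defect outscores a good part."""
    positives = [s for label, s in zip(labels, scores) if label == 1]
    negatives = [s for label, s in zip(labels, scores) if label == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one defect and one good part")

    wins = 0.0
    for pos in positives:
        for neg in negatives:
            if pos > neg:
                wins += 1.0
            elif pos == neg:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Hypothetical defect scores: 1 = defect, 0 = good part.
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.35, 0.7, 0.3, 0.2, 0.1]
auc = roc_auc(labels, scores)
print(f"AUC = {auc:.3f}")
```

An AUC of 1.0 means every defect scores above every good part, while 0.5 means the scores carry no more information than a coin flip.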

For example, a ResNet-50 model used for welding defect detection reached an average accuracy of 96.1%. This high score means the system can find most defects and avoid false alarms. Teams also look at precision and recall to make sure the system does not miss real problems or flag too many good parts as bad. These metrics help factories trust their machine vision systems.

Teams often use these metrics to compare different models and pick the best one for their needs.

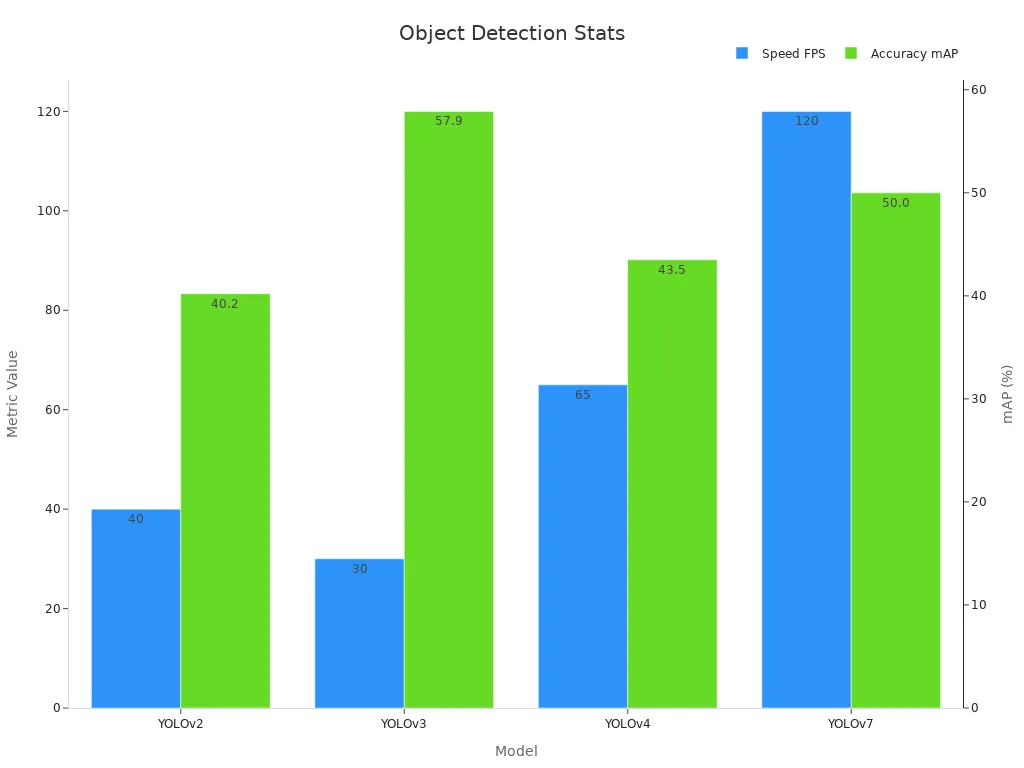

Object Counting

Object counting is another common task for machine vision. Systems count items like bottles on a conveyor belt or cars on a road. Fast and accurate counting helps companies track production and manage inventory.

| Model | Speed (FPS) | Accuracy (mAP) |
|---|---|---|
| YOLOv2 | 40 | 40.2% |
| YOLOv3 | 30 | 57.9% |
| YOLOv4 | 65 | 43.5% |
| YOLOv7 | 120 | 50.0% |

One-stage detectors like YOLOv7 process images very quickly, reaching up to 120 frames per second. This speed allows real-time counting in busy environments. In one vehicle-counting comparison, YOLOv5 reached 98.1% detection accuracy, beating other models such as YOLOv4-CSP and VC-UAV. These models also use less computing power, so factories can run them on smaller devices.

- YOLO models balance speed and accuracy, making them ideal for real-time tasks.

- Companies use these systems in traffic monitoring, packaging, and warehouse management.
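The speed and accuracy figures in the table above can feed a simple selection rule: keep only the models that meet the required frame rate, then pick the most accurate one. The sketch below assumes the table's figures are comparable with each other, which may not hold if they were measured on different hardware or datasets. The function name and the frame-rate budgets are hypothetical.

```python
# Figures copied from the table above.
MODELS = [
    {"name": "YOLOv2", "fps": 40, "map": 40.2},
    {"name": "YOLOv3", "fps": 30, "map": 57.9},
    {"name": "YOLOv4", "fps": 65, "map": 43.5},
    {"name": "YOLOv7", "fps": 120, "map": 50.0},
]


def pick_model(models, min_fps):
    """Return the highest-mAP model that meets the frame-rate budget."""
    eligible = [m for m in models if m["fps"] >= min_fps]
    if not eligible:
        return None  # no model is fast enough for this line speed
    return max(eligible, key=lambda m: m["map"])


fast_line = pick_model(MODELS, min_fps=60)
slow_line = pick_model(MODELS, min_fps=25)
print(fast_line["name"], slow_line["name"])
```

A fast conveyor and a slow one can end up with different models from the same table, which shows why the frame-rate requirement should be fixed before accuracy is compared.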

Machine vision systems with strong counting models help businesses save time and reduce errors.

Model selection in machine vision systems follows clear steps. Teams define the task, choose the right model, and test performance. Proper imaging hardware, such as lighting and cameras, can drive up to 80% of system success. Software algorithms then process pixel-level data for accurate results. Key metrics include:

- Synchronization accuracy for precise timing

- Energy consumption for efficient operation

- Throughput for fast data processing

By following this workflow, teams can build reliable systems. Anyone can achieve strong results with the right approach and tools.

FAQ

What is the most important factor in choosing a machine vision model?

Teams should focus on the task requirements. Simple tasks need basic models. Complex tasks need deep learning. Data quality and hardware also play big roles. Matching the model to the job ensures the best results.

Can traditional machine learning models work for all vision tasks?

Traditional models handle simple jobs well, such as counting or sorting. They struggle with complex images or large datasets. Deep learning models perform better for tasks like defect detection or face recognition.

How does hardware affect model performance?

Hardware, such as cameras and sensors, sets the limits for image quality. High-resolution cameras and good lighting help models find small details. Weak hardware can hide defects or slow down processing.

How can teams avoid overfitting in machine vision systems?

Teams use cross-validation and regularization to prevent overfitting. They split data into training and testing sets. This process checks if the model works well on new images, not just the training data.
