An image sensor is the core of a machine vision system in 2025. It captures light and converts it into digital signals, enabling precise inspection and measurement. The market for image sensors in machine vision continues to expand, driven by rapid adoption in manufacturing, healthcare, and automotive industries. Integrating AI and real-time processing lets these systems analyze images within milliseconds, reducing errors, improving efficiency, and supporting advanced automation across key sectors.

Key Takeaways

- Image sensors convert light into digital signals, forming the core of machine vision systems that improve inspection and automation.

- CMOS sensors lead the market due to their speed, low power use, and flexibility, while CCD sensors, traditionally valued for image quality, cost more and are now used mainly in scientific and high-precision imaging.

- Machine vision systems combine lighting, lenses, cameras, processors, and software to capture and analyze images accurately and quickly.

- Choosing the right sensor depends on factors like resolution, frame rate, sensor type, spectral sensitivity, and environmental conditions.

- New trends in 2025 include AI integration, 3D imaging, ultra-compact sensors, and global shutter technology for better performance and real-time analysis.

Image Sensor Machine Vision System

What Is an Image Sensor?

An image sensor is a semiconductor device at the heart of every machine vision system. It captures light from a scene and converts it into electrical signals, which are then digitized into images that machines can analyze. In 2025, image sensors underpin machine vision tasks such as inspection, measurement, and automation.

The table below shows the main types of image sensors and their primary functions in machine vision systems:

| Image Sensor / Camera Type | Primary Function in Machine Vision Systems | Key Advantages / Characteristics |
|---|---|---|
| CCD (Charge-Coupled Device) | Converts light into electrical signals to capture raw image data | High resolution and excellent light sensitivity; drawbacks include higher power consumption, slower readout, and higher cost |
| CMOS (Complementary Metal-Oxide-Semiconductor) | Converts light into electrical signals, with readout circuitry at each pixel | High sensitivity, fast readout, low power consumption, cost-effective |
| Area Scan Cameras | Capture a full two-dimensional image in a single frame using a CCD or CMOS sensor | Versatile, available from low to high resolution, multiple mounting options; limited field of view and no depth information |
| Line Scan Cameras | Capture images one line at a time, building the image as the object moves | High resolution and excellent for moving objects; higher cost and less versatile |
| Smart Cameras | Integrate camera, processing, and communication in one unit | Compact and simple to deploy; performance is improving, though resolution is sometimes lower than dedicated area or line scan cameras |

CMOS sensors have become the preferred choice for modern imaging because they offer high sensitivity, fast readout, and low power consumption. Silicon CMOS sensors respond to both visible and near-infrared light (up to roughly 1,000 to 1,100 nm), making them suitable for a wide range of imaging tasks in manufacturing and other industries.

How Image Sensors Work

Image sensors in a machine vision system use arrays of tiny photosensitive pixels. Each pixel collects light, or photons, from the scene. The pixel converts this light into an electrical charge. The amount of charge depends on the intensity of the light hitting the pixel.

Imaging in a machine vision system follows these steps:

- Lenses and filters focus incoming light onto the image sensor.

- Each pixel on the sensor collects photons and converts them into electrical charges.

- In CCD sensors, the charge packets are shifted across the chip to a shared output amplifier. In CMOS sensors, each pixel converts its charge to a voltage with its own amplifier, and the sensor reads the pixels out row by row.

- The sensor amplifies and conditions the electrical signals to improve quality.

- An analog-to-digital converter changes the analog signals into digital data.

- The system processes the digital signals to create clear images, using techniques like noise reduction and color correction.

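- Color sensors place a color filter array, typically a Bayer pattern, over the pixels so that each pixel records red, green, or blue light; the missing color values at each pixel are interpolated afterward.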
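The signal chain above can be sketched for a single pixel in Python. This is a simplified model, not any vendor's readout design: the quantum efficiency, full-well capacity, read noise, conversion gain, and 12-bit ADC below are illustrative assumptions, and photon shot noise is approximated with a Gaussian.

```python
import random
from typing import Optional


def simulate_pixel(
    photons: float,
    qe: float = 0.6,              # assumed quantum efficiency (electrons per photon)
    full_well_e: int = 10_000,    # assumed full-well capacity in electrons
    read_noise_e: float = 3.0,    # assumed read noise in electrons (RMS)
    gain_e_per_dn: float = 2.5,   # assumed conversion gain, electrons per digital number
    bit_depth: int = 12,          # assumed ADC resolution
    rng: Optional[random.Random] = None,
) -> int:
    """Return the digital number (DN) one pixel reports for a given photon count."""
    rng = rng if rng is not None else random.Random(0)  # fixed seed for repeatability

    # 1. Photons -> electrons: QE scales the mean signal; shot noise has std sqrt(mean).
    mean_e = photons * qe
    electrons = rng.gauss(mean_e, mean_e ** 0.5) if mean_e > 0 else 0.0

    # 2. The pixel saturates at its full-well capacity.
    electrons = min(max(electrons, 0.0), float(full_well_e))

    # 3. Readout electronics add noise before digitization.
    electrons += rng.gauss(0.0, read_noise_e)

    # 4. Analog-to-digital conversion: scale by gain, round, clip to the ADC range.
    dn = round(electrons / gain_e_per_dn)
    return min(max(dn, 0), 2 ** bit_depth - 1)


if __name__ == "__main__":
    for photons in (0, 1_000, 10_000, 1_000_000):
        print(photons, "photons ->", simulate_pixel(photons), "DN")
```

With these defaults a saturated pixel (10,000 electrons) reads about 4,000 DN, just under the 12-bit ceiling of 4,095, so in this model the full well rather than the ADC limits the brightest signal.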

Note: The number of pixels in an image sensor determines the resolution of the imaging system. More pixels mean higher resolution, but also require more processing power.

Components of a Machine Vision System

A complete machine vision system includes several hardware and software components that work together to capture and process images. Each component has a specific role in the imaging process.

- Lighting/Illumination: Provides consistent light to make object features clear and reduce background noise.

- Staging (Fixture and Sensors): Holds the object in place and signals when it is ready for imaging.

- Lens: Focuses the light onto the image sensor for sharp and bright images.

- Camera (Sensor): Captures the image and converts it into electronic signals.

- Image Acquisition (Frame Grabber): Digitizes the camera’s signals and sends the data to the processor.

- Vision Processor (Processing Engine): Analyzes the digital image using specialized software.

- Processing Software: Extracts features, measures objects, recognizes patterns, and makes decisions.

- Control Unit: Uses the results from the software to control machines or processes.

These components work together so that the machine vision system operates quickly and accurately: lighting prepares the object, the lens focuses the light, the camera captures the image, the frame grabber digitizes the signal, the processor and software analyze the image, and the control unit acts on the results.
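The capture, analyze, and act loop can be illustrated with a toy gauging pipeline in Python. Every function here is a hypothetical stand-in (acquire_line plays the camera and frame grabber, find_edges and measure_width_mm the vision processor and software, decide the control unit), and the threshold, calibration, and tolerance values are assumptions chosen for the example.

```python
from typing import List, Tuple


def acquire_line() -> List[int]:
    """Hypothetical camera + frame grabber: one row of 8-bit pixels.

    A dark background (20) surrounds a brightly lit part (200) on pixels 30-69.
    """
    return [20] * 30 + [200] * 40 + [20] * 30


def find_edges(row: List[int], threshold: int = 128) -> Tuple[int, int]:
    """Vision processor: index of the first and last pixel brighter than threshold."""
    bright = [i for i, value in enumerate(row) if value > threshold]
    if not bright:
        raise ValueError("no part found in the image")
    return bright[0], bright[-1]


def measure_width_mm(row: List[int], mm_per_pixel: float) -> float:
    """Processing software: convert the edge-to-edge span into millimetres."""
    left, right = find_edges(row)
    return (right - left + 1) * mm_per_pixel


def decide(width_mm: float, nominal_mm: float, tolerance_mm: float) -> str:
    """Control unit: accept the part if its width is within tolerance."""
    return "accept" if abs(width_mm - nominal_mm) <= tolerance_mm else "reject"


if __name__ == "__main__":
    width = measure_width_mm(acquire_line(), mm_per_pixel=0.1)  # assumed calibration
    print(f"width = {width:.2f} mm ->", decide(width, nominal_mm=4.0, tolerance_mm=0.2))
```

Run as a script, it measures a 4.00 mm part and accepts it. Keeping each stage a separate function mirrors the hardware split, so a real camera driver or a different measurement algorithm could replace one stage without touching the others.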

Tip: Careful selection and integration of hardware components are essential for reliable imaging. Environmental factors like dust, temperature, and vibrations can affect performance, so robust design is important.

Sensor Types and Technology

CCD vs CMOS

In 2025, CMOS sensors lead the market for industrial imaging. They offer high speed, low power consumption, and easy integration with on-chip processing, including AI in some models. These features make them ideal for most machine vision camera applications. CCD sensors have long been valued for uniform image quality, color accuracy, and low noise, particularly in scientific and low-light imaging, but they cost more and use more power. Most industries now choose CMOS because of its cost, speed, and flexibility. The table below compares the two main sensor types:

| Aspect | CCD Sensors | CMOS Sensors |
|---|---|---|
| Performance | High image quality, excellent color, minimal noise, wide dynamic range; slower readout | Faster readout; much-improved low-light performance and lower noise than earlier generations |
| Cost | Higher manufacturing costs | Cost-effective; made on standard CMOS processes |
| Power Consumption | Higher power use | Much lower power use |
| Integration | Limited; needs separate processing | Highly integrated; supports on-chip processing (including AI) and compact designs |
| Application Suitability | Best suited to scientific and high-precision imaging | Dominant in industrial automation, medical, security, and consumer imaging |

2D and 3D Vision Sensors

Most machine vision systems in 2025 still use 2D area scan and line scan cameras. 2D cameras remain popular because they are affordable, compact, and easy to deploy, and they work well for surface inspection and barcode reading. Line scan cameras capture images one line at a time, which makes them well suited to inspecting moving materials like fabric or paper. 3D vision sensors add depth information. They use techniques such as laser triangulation, structured light, time-of-flight, or stereo vision to measure shapes and positions. 3D imaging helps robots pick objects from bins or measure volume, but 3D systems cost more and need more advanced processing.

- 2D Vision Sensor Advantages:

- Affordable and simple

- Fast and accurate for flat object inspection

- 2D Vision Sensor Disadvantages:

- No depth information

- Sensitive to lighting and object placement

- 3D Vision Sensor Advantages:

- Measures depth and shape

- Works in changing lighting and object positions

- Enables complex tasks like robotic guidance

- 3D Vision Sensor Disadvantages:

- Higher cost and complexity

- Needs more processing power

Some imaging systems combine both 2D and 3D sensors for total quality inspection.
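The depth measurement behind stereo vision and laser time-of-flight sensing reduces to simple geometry. The Python sketch below uses the textbook relations for an ideal, rectified stereo pair (depth = focal length × baseline / disparity) and for direct time-of-flight (distance = speed of light × round-trip time / 2). The camera parameters in the example are assumptions, and real systems also need calibration and, for indirect ToF sensors, a phase-based measurement.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by SI definition


def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair.

    focal_px: focal length expressed in pixels; baseline_m: distance between cameras;
    disparity_px: horizontal shift of the point between the left and right images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


def tof_distance_m(round_trip_s: float) -> float:
    """Distance from a direct time-of-flight measurement (light travels out and back)."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


if __name__ == "__main__":
    # Assumed example: 1,000 px focal length, 10 cm baseline, 50 px disparity -> 2 m.
    print(stereo_depth_m(1_000, 0.10, 50))
    # A 10 ns round trip corresponds to roughly 1.5 m.
    print(tof_distance_m(10e-9))
```

Because disparity falls in proportion to 1 / depth, a one-pixel disparity error costs more depth accuracy at long range, which is one reason stereo rigs use a wider baseline for distant objects.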

Smart Cameras and Embedded Vision

Smart cameras and embedded vision systems have changed industrial imaging. These devices now include powerful processors and AI, allowing real-time analysis at the edge. Smart cameras handle tasks like barcode reading, pattern matching, and object recognition without needing a separate computer. This reduces latency and increases reliability. Modern smart cameras, such as those from Cognex and Luxonis, support both simple and complex imaging tasks. Embedded vision modules use compact camera sensors and efficient processors. They enable imaging in small devices, from factory robots to IoT products. New technologies like event-based cameras and sensor fusion with LiDAR improve perception and accuracy. TinyML vision allows even small microcontrollers to run imaging tasks, making advanced imaging available for more applications.

Note: Compact imaging modules balance cost and performance. They offer easy integration and high-quality imaging for industrial use.

Key Features

Resolution and Pixel Size

Resolution describes how many pixels an image sensor uses to capture a scene. For a given field of view, higher resolution lets the system detect smaller objects or defects; for example, a camera with more pixels can spot tiny cracks or scratches that a lower-resolution camera might miss. The minimum detectable object size depends on the field of view and the number of pixels across it. For a fixed sensor size, smaller pixels increase spatial resolution and capture finer detail, but each pixel collects less light, which can reduce sensitivity in low-light imaging. Larger pixels collect more light, which helps in dark environments but lowers the level of detail. Balancing resolution, pixel size, and field of view is key to accurate inspections and efficient imaging.
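The trade-off above can be turned into a quick sizing calculation. This Python sketch assumes the smallest feature must span a fixed number of pixels; the default of 3 is a common rule of thumb rather than a standard (two pixels per feature is the sampling-theory minimum), and the field-of-view and feature sizes in the example are assumptions.

```python
import math


def required_pixels(fov_mm: float, min_feature_mm: float, pixels_per_feature: float = 3.0) -> int:
    """Pixels needed across the field of view so the smallest feature spans enough pixels."""
    return math.ceil(fov_mm / min_feature_mm * pixels_per_feature)


def pixel_footprint_mm(fov_mm: float, pixels: int) -> float:
    """Size of the scene area imaged by one pixel (object-side resolution)."""
    return fov_mm / pixels


if __name__ == "__main__":
    # Assumed task: 120 mm field of view, 0.25 mm smallest defect.
    n = required_pixels(120, 0.25)
    print(n, "pixels across the FOV")                  # 1440
    print(pixel_footprint_mm(120, n), "mm per pixel")  # about 0.083
```

If the computed count exceeds what a candidate sensor offers along that axis, the options are a higher-resolution sensor, a smaller field of view, or several cameras.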

Sensitivity and Dynamic Range

Sensitivity measures how well an image sensor converts incoming light into signal; high sensitivity helps the system work in low-light conditions. Dynamic range is the ratio between the brightest signal a pixel can record without saturating and the faintest signal it can distinguish from noise. A wide dynamic range preserves detail in both very bright and very dark areas of the same image. Larger pixels often improve both sensitivity and dynamic range because they collect more light and can hold more charge. In fast-moving or low-light scenes, sensors with high sensitivity and dynamic range produce better image quality, helping machine vision systems perform well under challenging lighting.
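Dynamic range is usually quoted as the ratio of full-well capacity to the read-noise floor, expressed in decibels or photographic stops. The Python sketch below applies that definition; the 10,000-electron full well and 2.5-electron read noise are assumed example values, not figures for any particular sensor.

```python
import math


def dynamic_range_db(full_well_e: float, read_noise_e: float) -> float:
    """Dynamic range in decibels: 20 * log10(max signal / noise floor)."""
    return 20.0 * math.log10(full_well_e / read_noise_e)


def dynamic_range_stops(full_well_e: float, read_noise_e: float) -> float:
    """Same ratio expressed in stops (factors of two)."""
    return math.log2(full_well_e / read_noise_e)


if __name__ == "__main__":
    fw, rn = 10_000, 2.5  # assumed example values, in electrons
    print(f"{dynamic_range_db(fw, rn):.1f} dB, {dynamic_range_stops(fw, rn):.1f} stops")
```

The example works out to about 72 dB, or about 12 stops. Doubling the full-well capacity, which larger pixels tend to allow, adds about 6 dB (one stop) if read noise stays the same.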

Shutter Types

Image sensors use either global or rolling shutters. A global shutter exposes every pixel at the same time, so moving objects are captured without skew or distortion, which is important when imaging fast-moving objects. A rolling shutter exposes the image row by row, which can distort fast-moving objects (the "jello effect"). Neither shutter type removes motion blur by itself: blur depends on how far the object moves during the exposure, so fast scenes also need short exposures or strobed lighting. Global shutter sensors cost more and may have lower light sensitivity, but they provide distortion-free images. Rolling shutter sensors are more affordable and work well for static or slow scenes. For high-speed machine vision tasks, global shutters usually deliver the best image quality.
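The difference between motion blur and rolling-shutter skew is easy to quantify. The Python sketch below treats both as the distance, in pixels, that the object's image travels during a time window: the exposure time for blur, and the time to read out the whole frame for rolling-shutter skew (a global shutter makes the second term zero). The conveyor speed, pixel footprint, exposure, and readout time are assumptions for illustration.

```python
def image_speed_px_per_s(object_speed_mm_s: float, mm_per_pixel: float) -> float:
    """How fast the object's image moves across the sensor, in pixels per second."""
    return object_speed_mm_s / mm_per_pixel


def motion_blur_px(object_speed_mm_s: float, mm_per_pixel: float, exposure_s: float) -> float:
    """Smear caused by movement during the exposure (affects both shutter types)."""
    return image_speed_px_per_s(object_speed_mm_s, mm_per_pixel) * exposure_s


def rolling_skew_px(object_speed_mm_s: float, mm_per_pixel: float, frame_readout_s: float) -> float:
    """Offset between the first and last rows of a rolling-shutter frame, for motion along the rows."""
    return image_speed_px_per_s(object_speed_mm_s, mm_per_pixel) * frame_readout_s


if __name__ == "__main__":
    speed, footprint = 500.0, 0.125  # assumed: 500 mm/s conveyor, 0.125 mm per pixel
    print("blur at 100 us exposure:", motion_blur_px(speed, footprint, 100e-6), "px")
    print("rolling skew, 10 ms readout:", rolling_skew_px(speed, footprint, 10e-3), "px")
```

In this example a short exposure keeps blur to about 0.4 px, while a rolling shutter with a 10 ms frame readout would shear the part by about 40 px between the top and bottom rows.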

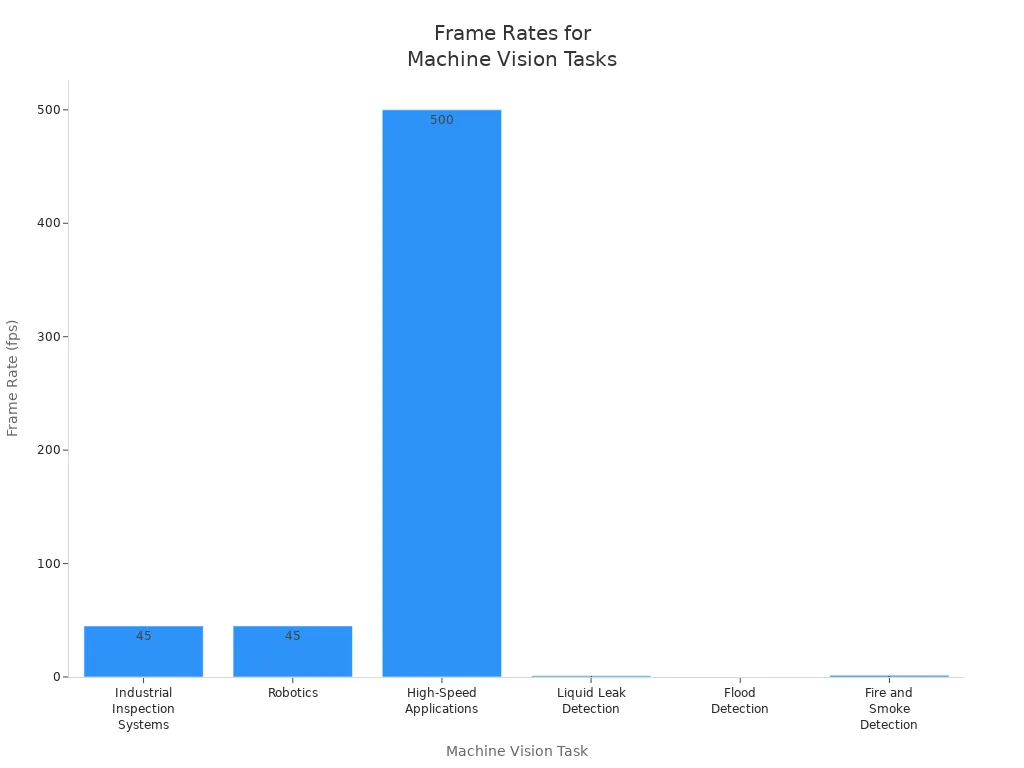

Frame Rate

Frame rate is the number of images a sensor captures each second, and different machine vision tasks need different rates. High-speed applications, such as rapid inspection lines, may require more than 500 frames per second (fps). General inspection and robotics often use 30-60 fps for smooth, timely imaging. Slow-changing scenes need far less: fire and smoke detection may use 1-2 fps, leak detection 1 fps or less, and flood monitoring as little as one frame per minute. Event-based sensors do not capture conventional frames; they report per-pixel brightness changes with a temporal resolution equivalent to 10,000 fps or more, which makes them well suited to tracking fast changes. The right frame rate ensures the system captures all important details without missing events.

| Machine Vision Task | Typical Frame Rate Requirement | Notes |
|---|---|---|
| Industrial Inspection | 30-60 fps | Good for most inspection and robotics tasks |
| Robotics | 30-60 fps | Supports real-time analysis |
| High-Speed Applications | Over 500 fps | Needed for fast-moving objects |
| Liquid Leak Detection | 1 fps or less | For slow or static scenes |
| Flood Detection | 1 frame per minute | For slow environmental changes |
| Fire and Smoke Detection | 1-2 fps | For slow but critical events |
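For parts or material moving past the camera, the minimum frame rate follows from line speed and field of view. The Python sketch below shows the calculation for an area scan camera (with an assumed overlap fraction between consecutive frames) and the equivalent line rate for a line scan camera with square pixels; the speeds and sizes in the example are assumptions.

```python
def area_scan_fps(speed_mm_s: float, fov_along_motion_mm: float, overlap: float = 0.0) -> float:
    """Frames per second so consecutive frames tile the moving surface with the given overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return speed_mm_s / (fov_along_motion_mm * (1.0 - overlap))


def line_scan_rate_hz(speed_mm_s: float, mm_per_line: float) -> float:
    """Lines per second so each line covers one pixel's worth of travel (square pixels)."""
    return speed_mm_s / mm_per_line


if __name__ == "__main__":
    # Assumed web moving at 2 m/s.
    print(area_scan_fps(2_000, fov_along_motion_mm=100, overlap=0.2), "fps")  # about 25 fps
    print(line_scan_rate_hz(2_000, mm_per_line=0.0625), "lines/s")           # 32,000 lines/s
```

The same surface speed that an area scan camera covers at about 25 fps demands a line rate of 32 kHz from a line scan camera at this pixel size, which is why line rate is a headline specification for line scan sensors.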

Spectral Response

Spectral response describes how an image sensor reacts to different wavelengths of light. Some sensors detect visible light, while others can sense infrared or terahertz waves. Using spectral information helps the system find specific materials or defects, even on shiny or complex surfaces. For example, terahertz imaging can reveal hidden flaws inside materials that visible light cannot reach. Combining spectral data with shape information improves defect detection and reduces false alarms. This makes imaging systems more reliable and accurate in manufacturing and inspection.
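One reason different sensors cover different bands is the detector material's bandgap: photons with less energy than the bandgap are not absorbed, which sets a long-wavelength cutoff of roughly 1239.84 / Eg nanometres for a bandgap Eg in electronvolts. The Python sketch below applies that relation; the bandgaps used (about 1.12 eV for silicon and about 0.75 eV for the InGaAs commonly used in SWIR detectors) are approximate room-temperature values.

```python
HC_EV_NM = 1239.84  # Planck constant x speed of light, in eV*nm (approximate)

# Approximate room-temperature bandgaps in eV (illustrative values).
BANDGAP_EV = {
    "silicon (CMOS/CCD)": 1.12,
    "InGaAs (SWIR)": 0.75,
}


def cutoff_wavelength_nm(bandgap_ev: float) -> float:
    """Longest wavelength a photodetector with this bandgap can absorb."""
    if bandgap_ev <= 0:
        raise ValueError("bandgap must be positive")
    return HC_EV_NM / bandgap_ev


if __name__ == "__main__":
    for material, eg in BANDGAP_EV.items():
        print(f"{material}: cutoff ~{cutoff_wavelength_nm(eg):.0f} nm")
```

The results, about 1,100 nm for silicon and about 1,650 nm for InGaAs, explain why ordinary CMOS sensors stop in the near infrared while SWIR inspection needs a different detector material.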

Machine Vision Applications

Industrial Inspection

Manufacturers use image sensors for many visual inspection tasks, such as detecting defects, measuring parts, and guiding robots. Industries such as automotive, electronics, and aerospace rely on machine vision to check gears, inspect battery welds, and verify assembly. In the automotive sector, for example, machine vision software has been reported to detect wrinkles in seat covers with 99% accuracy. The table below shows common uses:

| Application Area | Example Use |
|---|---|
| Automotive | Seat inspection, gear machining |
| Electronics | Stator core inspection |
| Packaging | Flexible plastic packaging checks |
| Medical | Syringe final inspection |

These applications lead to improved product quality and lower production costs by reducing errors and saving materials.

Robotics and Automation

Factory robots use image sensors to see and understand their environment. High-resolution CMOS sensors let robots locate objects, avoid obstacles, and move safely, and miniature cameras fit into small robots and mobile machines. 2D cameras locate and identify parts, while 3D cameras add the depth perception needed for tasks like picking items from bins. Auto exposure keeps images correctly exposed as lighting changes, and global shutters prevent distortion when objects move quickly. These advances make automation more reliable and flexible.

- Robots use vision for:

- Navigation and obstacle avoidance

- Barcode scanning

- Assembly verification

Quality Control

Image sensors play a key role in quality control across many industries. Industrial sensors operate in harsh environments and capture clear images even at high line speeds. Sensors with large pixels and high quantum efficiency detect small defects that human inspectors might miss. Global shutter technology prevents rolling-shutter distortion on fast-moving production lines, while short exposures keep motion blur low. Reliable sensors help companies maintain high standards and avoid costly recalls.

Note: Quality control systems use sensors to check for scratches, missing parts, and incorrect assembly. This ensures only the best products reach customers.
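One simple way to detect scratches or missing features is golden-template comparison: subtract a known-good reference image from the inspected image and flag pixels that differ by more than a threshold. The Python sketch below shows that idea on small grayscale arrays. It assumes the two images are already aligned and equally lit, and the threshold and pass criterion are assumptions; a practical system would also need image registration and noise filtering.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale image as rows of pixel values
Mask = List[List[bool]]


def defect_mask(image: Image, golden: Image, threshold: int = 40) -> Mask:
    """True wherever the inspected image differs from the golden template by > threshold."""
    if len(image) != len(golden) or any(len(a) != len(b) for a, b in zip(image, golden)):
        raise ValueError("image and golden template must have the same shape")
    return [
        [abs(p - g) > threshold for p, g in zip(img_row, gold_row)]
        for img_row, gold_row in zip(image, golden)
    ]


def defect_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """(top, left, bottom, right) of all flagged pixels, or None if nothing is flagged."""
    hits = [(r, c) for r, row in enumerate(mask) for c, flag in enumerate(row) if flag]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows), max(cols)


def passes(image: Image, golden: Image, threshold: int = 40, max_defect_pixels: int = 0) -> bool:
    """Pass the part if no more than max_defect_pixels pixels are flagged."""
    flagged = sum(sum(row) for row in defect_mask(image, golden, threshold))
    return flagged <= max_defect_pixels


if __name__ == "__main__":
    golden = [[100] * 5 for _ in range(4)]   # uniform known-good surface
    part = [row[:] for row in golden]
    part[1][2], part[2][2] = 10, 15          # simulated dark scratch
    print(defect_bbox(defect_mask(part, golden)), passes(part, golden))
```

For the simulated scratch this prints `(1, 2, 2, 2) False`: the bounding box locates the defect and the part is rejected.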

Real-Time Analysis

Real-time analysis allows machine vision applications to make instant decisions. High-speed cameras and smart software process images as soon as they are captured. This immediate feedback helps factories fix problems right away. Real-time systems support tasks like defect detection, barcode reading, and process control. Cloud-based solutions and deep learning algorithms improve accuracy and adapt to new inspection needs.

- Real-time analysis provides:

- Immediate data for process improvements

- Fast detection of defects and hazards

- Support for predictive maintenance

These technologies help companies respond quickly to changes and keep production running smoothly.
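Whether analysis is fast enough to count as real time depends on the production rate. The Python sketch below checks a latency budget: at a given number of parts per minute, each part allows 60,000 / rate milliseconds, and the capture, transfer, processing, and output stages must fit inside it. It assumes the stages run one after another for each part (pipelined systems can overlap them), and the stage timings are hypothetical.

```python
from typing import Dict, Tuple


def time_per_part_ms(parts_per_minute: float) -> float:
    """Time available for each part at the given production rate."""
    if parts_per_minute <= 0:
        raise ValueError("parts_per_minute must be positive")
    return 60_000.0 / parts_per_minute


def check_budget(stage_ms: Dict[str, float], parts_per_minute: float) -> Tuple[bool, float]:
    """Return (fits, slack_ms) for stages executed one after another per part."""
    slack = time_per_part_ms(parts_per_minute) - sum(stage_ms.values())
    return slack >= 0, slack


if __name__ == "__main__":
    # Hypothetical stage timings in milliseconds.
    stages = {"exposure": 1, "transfer": 12, "processing": 35, "decision_and_io": 5}
    print(check_budget(stages, parts_per_minute=600))    # 100 ms per part
    print(check_budget(stages, parts_per_minute=1_500))  # 40 ms per part
```

At 600 parts per minute the hypothetical 53 ms chain fits with 47 ms to spare; at 1,500 parts per minute it overruns by 13 ms, so processing would need to be faster or pipelined.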

Choosing the Right Sensor

Application Requirements

Selecting the right image sensor starts with understanding the specific needs of the application. Each machine vision task has unique requirements that shape the choice of sensor features. Engineers must consider several key factors to ensure the sensor matches the job:

- Resolution: The sensor must capture the smallest feature size needed for inspection. Higher resolution helps detect tiny defects, but it also increases data and processing needs.

- Sensor Technology: The choice between CCD and CMOS, as well as global or rolling shutter, depends on the required image quality, speed, and budget.

- Frame Rate: Fast-moving objects or high-throughput lines need sensors with higher frame rates to avoid missing critical details.

- Spectral Sensitivity: Some applications require sensors that see beyond visible light, such as infrared or ultraviolet, to reveal hidden features.

- Interface and Connectivity: The camera must support a data transfer standard, such as GigE Vision (Ethernet), USB3 Vision, or CoaXPress, with enough bandwidth for smooth integration with processing systems.

- Environmental Conditions: Sensors must withstand temperature changes, dust, vibration, and lighting variations. Outdoor or harsh environments may need extra protection.

- Software Compatibility: The sensor should work seamlessly with the vision software for image analysis and decision-making.
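Resolution, bit depth, and frame rate together determine whether an interface can keep up. The Python sketch below computes the uncompressed data rate and compares it with a link's nominal line rate scaled by a usable fraction. The 0.8 usable fraction is an assumption standing in for protocol overhead, and the nominal rates listed (1 Gb/s for Gigabit Ethernet, 10 Gb/s for 10 Gigabit Ethernet, 12.5 Gb/s for one CoaXPress CXP-12 link) should be checked against the actual hardware.

```python
# Nominal line rates in Gb/s; usable throughput is lower because of protocol overhead.
NOMINAL_LINK_GBPS = {
    "GigE": 1.0,
    "10GigE": 10.0,
    "CXP-12 (one link)": 12.5,
}


def raw_data_rate_gbps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed sensor output in gigabits per second."""
    return width * height * bits_per_pixel * fps / 1e9


def link_fits(width: int, height: int, bits_per_pixel: int, fps: float,
              link: str, usable_fraction: float = 0.8) -> bool:
    """True if the data rate fits within the assumed usable share of the link."""
    rate = raw_data_rate_gbps(width, height, bits_per_pixel, fps)
    return rate <= NOMINAL_LINK_GBPS[link] * usable_fraction


if __name__ == "__main__":
    # Assumed camera: 2048 x 1536 pixels, 8-bit mono, 60 fps (about 1.51 Gb/s).
    for link in NOMINAL_LINK_GBPS:
        print(link, link_fits(2048, 1536, 8, 60, link))
```

Under these assumptions the example camera overwhelms Gigabit Ethernet but fits comfortably on 10 Gigabit Ethernet or a single CXP-12 link; lowering frame rate, bit depth, or region of interest is the usual alternative to a faster interface.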

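Note: The Modulation Transfer Function (MTF) describes how much contrast an optical system preserves at each spatial frequency, whether for the lens, the sensor, or both combined. Choosing a lens that still delivers good contrast at the sensor's Nyquist frequency, which is set by the pixel pitch, ensures sharp, high-contrast images for reliable inspection.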
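The sensor side of the lens-sensor match is set by pixel pitch: a sensor can sample at most one line pair per two pixels, so its Nyquist frequency in line pairs per millimetre is 1000 / (2 × pitch in µm). The Python sketch below computes it so the result can be compared with the MTF curve on a lens datasheet; the pixel pitches and lens limit in the example are assumptions.

```python
def sensor_nyquist_lp_per_mm(pixel_pitch_um: float) -> float:
    """Highest spatial frequency the sensor can sample, in line pairs per mm."""
    if pixel_pitch_um <= 0:
        raise ValueError("pixel pitch must be positive")
    return 1000.0 / (2.0 * pixel_pitch_um)


def smallest_useful_pitch_um(lens_limit_lp_per_mm: float) -> float:
    """Pixel pitch whose Nyquist frequency equals the lens limit.

    Pixels finer than this out-resolve the lens: they add data but little detail.
    """
    if lens_limit_lp_per_mm <= 0:
        raise ValueError("lens limit must be positive")
    return 1000.0 / (2.0 * lens_limit_lp_per_mm)


if __name__ == "__main__":
    for pitch in (3.45, 5.0):  # assumed example pixel pitches in micrometres
        print(f"{pitch} um pixels -> Nyquist {sensor_nyquist_lp_per_mm(pitch):.1f} lp/mm")
    print(smallest_useful_pitch_um(100.0), "um for a lens rated to 100 lp/mm")
```

A 3.45 µm pixel samples up to about 145 lp/mm, so pairing it with a lens whose contrast has collapsed by 100 lp/mm wastes part of the sensor's resolution.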

Different industries prioritize these factors based on their needs. For example, automotive and electronics manufacturers often require high resolution and speed for quality control, while medical imaging may focus on sensitivity and color accuracy. Mobile robots and embedded systems need compact, energy-efficient sensors that fit tight spaces and operate reliably on battery power.

Trends for 2025

Image sensor technology continues to evolve rapidly. In 2025, several trends shape the future of machine vision:

| Manufacturer | Sensor Type | Key Features | Application Areas |
|---|---|---|---|
| Gpixel | Time-of-Flight (ToF) CMOS sensor | 5 µm three-tap iToF pixel, 640×480 resolution, pixel-level stacked backside-illuminated CMOS | 3D imaging |
| Hamamatsu Photonics | 3D ToF back-thinned sensor | High sensitivity in near-infrared, improved background light tolerance | Measurement, hygiene management, social distancing |
| OmniVision Technologies | Ultra-compact medical sensor | 0.55×0.55 mm package, 1.0 µm pixel, 400×400 RGB at 30 fps, 20 mW power | Medical endoscopes, catheters, guidewires |
| On Semiconductor | Global shutter CMOS sensor | 2.3 MP, 1080p video at 120 fps, low noise, extended temperature range | Industrial IoT, outdoor applications |
| Semi Conductor Devices | SWIR detector | High frame rate: 1500 Hz at full VGA, 25 kHz event channel | Advanced machine vision applications |
| Teledyne e2v | Industrial CMOS sensors | 2 MP and 1.5 MP, chip-scale package, optical and mechanical center alignment | Barcode engines, mobile terminals, IoT, robotics |

Manufacturers now offer sensors with advanced features such as:

- Time-of-Flight (ToF) and 3D imaging for depth measurement and object recognition.

- Ultra-compact sensors for medical and embedded applications.

- Global shutter CMOS for high-speed, low-distortion imaging.

- Short-wave infrared (SWIR) detectors for specialized inspection tasks.

- Integrated AI processing within smart cameras for real-time analysis at the edge.

Recent innovations in Contact Image Sensor (CIS) technology allow high-speed, high-resolution imaging in tight spaces. CIS modules combine the camera, lens, and lighting, making them ideal for compact production lines. The trend toward embedding AI directly into vision systems, such as smart cameras, enables faster decision-making and reduces the need for external computers.

Selection Tips

Choosing the best image sensor involves balancing technical needs, budget, and deployment speed. Here are some practical tips for engineers and decision-makers:

- Match Resolution and Field of View: Select a sensor with enough pixels to capture the smallest required detail, but avoid excessive resolution that slows processing.

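- Consider Lighting: Ensure the workspace has bright, stable illumination. For quality control, lighting levels above 1,000 lux help produce clear images, though the right level also depends on exposure time, lens aperture, and sensor sensitivity.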

- Assess Speed and Downtime: Choose sensors that meet the required frame rate and minimize system downtime.

- Evaluate Custom vs. Off-the-Shelf Solutions:

- Custom sensors work best for unique tasks, harsh environments, or when optimizing for performance and security.

- Commercial off-the-shelf (COTS) sensors offer quick deployment and lower cost but may lack customization.

- Check Integration Needs: Make sure the sensor fits with existing hardware, software, and connectivity standards.

- Balance Cost and Features: Focus on essential features that meet the application’s needs without overspending on unnecessary extras.

Tip: Always test the sensor in real-world conditions before final selection. This helps confirm that it meets all operational and environmental requirements.

Engineers should also consider future needs, such as scalability and compatibility with new technologies. Staying updated on the latest sensor trends and innovations ensures the chosen solution remains effective as machine vision systems continue to advance.

Selecting the right image sensor shapes the success of any machine vision system in 2025. Engineers must understand sensor types, features, and application needs to achieve high accuracy and efficiency. Staying informed about new advancements brings many benefits:

- Higher resolution and faster processing improve inspection speed and quality.

- AI-powered sensors enable real-time analysis and better defect detection.

- Miniaturized sensors fit into more devices and environments.

| Resource Type | Description |
|---|---|
| Industry Insights | Trends, articles, and expert advice |
| Webinars | Latest technical updates from industry leaders |
| Certifications | Professional development and skill validation |

Regularly exploring these resources helps organizations stay competitive and make smart decisions in machine vision.

FAQ

What is the main difference between CCD and CMOS sensors?

CCD sensors are traditionally known for high image quality and color accuracy. CMOS sensors provide faster readout, lower power consumption, and lower cost. Most industries now use CMOS sensors for machine vision.

How does pixel size affect image quality?

Larger pixels collect more light, which improves sensitivity in low-light conditions. Smaller pixels capture finer details but may reduce sensitivity. Engineers must balance pixel size and resolution for the best results.

Can image sensors work in harsh environments?

Many industrial image sensors have rugged designs. They resist dust, vibration, and temperature changes. Some models include protective housings for outdoor or extreme settings.

Why is frame rate important in machine vision?

Frame rate is the number of images a sensor captures each second. High frame rates help track fast-moving objects without missing details, while low frame rates suffice for slow or static scenes.
