An image pixel machine vision system uses cameras and sensors to help machines see and understand images, one pixel at a time. These systems often check products for defects, making them important for quality control in factories. The global market for image pixel machine vision system technology reached about $10.75 billion in 2023 and is growing quickly as more industries adopt these systems for tasks like sorting, barcode reading, and process monitoring.

Key Takeaways

- Image pixel machine vision systems help machines see and analyze images by examining each tiny pixel, improving defect detection and quality control.

- High camera resolution and proper lighting are essential for capturing clear, detailed images that allow the system to find small defects accurately.

- These systems use advanced software and AI to process pixel data, making them flexible and able to adapt to new products and changing environments.

- Core components like cameras, sensors, lighting, and processing units work together to capture and analyze images quickly and precisely.

- Machine vision systems are widely used in industries like manufacturing, healthcare, and robotics to improve quality, safety, and efficiency.

Image Pixel Machine Vision System

What It Is

A machine vision system uses cameras and sensors to help machines see and understand images. An image pixel machine vision system focuses on analyzing images at the smallest level: the pixel. Each pixel is a tiny square that captures light and color, forming the building blocks of every digital image. By examining these pixels, the system can find details that might be invisible to the human eye.

Machine vision systems combine hardware and software to capture, process, and analyze images. The main goal is to automate tasks such as inspection, measurement, and guidance in industrial settings. These systems use pixel-level data to identify the position, shape, and features of objects. This process allows for fast, accurate, and repeatable inspection and defect detection.

Note: Machine vision systems do not rely on a single component. Instead, several parts work together:

- Lighting: Different lighting techniques, such as front, back, or diffused light, help the system capture clear images based on the object’s surface.

- Lens: The lens focuses light onto the image sensor, affecting how sharp and detailed the image appears.

- Camera: The camera collects light from the object and converts it into a digital image made up of pixels.

- Image Sensors: CCD and CMOS sensors turn light into electrical signals, creating the pixel data for analysis.

- Processing Units and Software: These parts analyze the pixel-level data using advanced algorithms, including AI, to detect defects and guide decisions.

- Cabling and Interface Peripherals: These connect all the parts and allow data to move between them.

- Computing Platforms: These provide the power needed to process images quickly and accurately.

Machine vision systems come in different types. Some use area scan cameras to capture an entire image at once, while others use line scan cameras to build images one line of pixels at a time. The choice depends on the task and the type of object being inspected.

A key difference between traditional machine vision systems and modern image pixel machine vision systems lies in their technology and flexibility. The table below shows how they compare:

| Feature/Criteria | Traditional Machine Vision Systems | Image Pixel Machine Vision Systems |
|---|---|---|
| Technology | Hardware-driven, rule-based algorithms | AI-driven, deep learning (e.g., CNNs) |
| Operational Environment | Controlled, stable settings | Dynamic, real-world environments |
| Primary Use | Inspection, measurement, quality control | Object identification, classification |
| Adaptability | Limited, manual updates | High, adapts to new products and defects |
| Processing Speed | High speed in stable settings | Moderate, depends on hardware |
| Hardware Requirements | CPUs or embedded processors | GPUs or AI accelerators |
| Error Handling | May miss subtle defects | Detects subtle defects, reduces errors |
| Implementation Complexity | Simpler, rule-based | Complex, needs large datasets and AI |

This comparison shows that image pixel machine vision systems use AI to analyze pixel data for flexible and accurate object recognition, even in complex environments.

Why Pixels Matter

Pixels play a central role in every machine vision system. Each pixel represents a small part of the image, capturing light and color information. The system examines these pixels to find patterns, edges, and differences that reveal important details about the object.

High pixel resolution allows the machine vision system to see fine details. For example, a camera with more pixels can capture tiny scratches or defects that a lower-resolution camera might miss. The system uses this detailed pixel data to perform tasks such as:

- Defect Detection: By analyzing each pixel, the system can spot small defects, such as cracks or spots, that affect product quality.

- Inspection: The system checks every part of the image for differences in color, shape, or texture, ensuring products meet strict standards.

- Measurement: The system measures distances and sizes by counting pixels, allowing for precise, non-contact measurements.

- Positioning: The system finds the exact location of objects by analyzing pixel patterns, helping robots or machines move accurately.
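Measuring by counting pixels can be sketched in a few lines of Python. This is a minimal illustration, assuming a backlit part that appears as a single run of dark pixels on one image row and a scale factor (`mm_per_pixel`) already known from calibration; the threshold, the scale, and the function names are hypothetical.

```python
def object_width_px(row, threshold):
    """Count pixels darker than `threshold` in one grayscale row.
    Assumes a single dark object on a bright (backlit) background."""
    return sum(1 for value in row if value < threshold)


def object_width_mm(row, threshold, mm_per_pixel):
    """Convert the pixel count into a physical width using a calibrated scale."""
    return object_width_px(row, threshold) * mm_per_pixel


# Example row: bright background (~250) with a dark part (~20) in the middle.
row = [250, 248, 252, 21, 19, 22, 20, 18, 251, 249]
print(object_width_px(row, threshold=128))  # 5
print(object_width_mm(row, threshold=128, mm_per_pixel=0.05))
```

Counting every dark pixel only works when the row crosses one object; with several objects, the system would first separate them into regions.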

Pixel-level analysis improves the accuracy of defect detection. The system assigns each pixel to a defect or non-defect class, making it possible to measure the size and shape of defects with high precision. This method is especially important when the shape or area of a defect affects product quality, such as in coatings or microfractures.
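Once each pixel carries a defect or non-defect label, size and shape measurements reduce to simple counting. The sketch below assumes a binary mask (1 = defect) produced by some earlier segmentation step and a known, uniform `mm_per_pixel` scale; both the example mask and the function name `defect_stats` are illustrative.

```python
def defect_stats(mask, mm_per_pixel):
    """Return (area in mm^2, bounding box) for all defect pixels in a binary mask.
    The bounding box is (row_min, col_min, row_max, col_max), or None if empty."""
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return 0.0, None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    # Each defect pixel covers mm_per_pixel x mm_per_pixel of the surface.
    area_mm2 = len(coords) * mm_per_pixel ** 2
    bbox = (min(rows), min(cols), max(rows), max(cols))
    return area_mm2, bbox


mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
area, bbox = defect_stats(mask, mm_per_pixel=0.1)
print(area, bbox)
```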

Modern pixel machine vision systems use deep learning algorithms to analyze pixel-level data. These algorithms can handle challenges like low contrast or weak textures in industrial images. They also adapt to new types of defects and changing production needs, making them more flexible than older systems.

Tip: High image contrast and a high-quality lens help the system capture sharp images. However, the camera’s pixel resolution sets the limit for how much detail the system can see.

Core Components

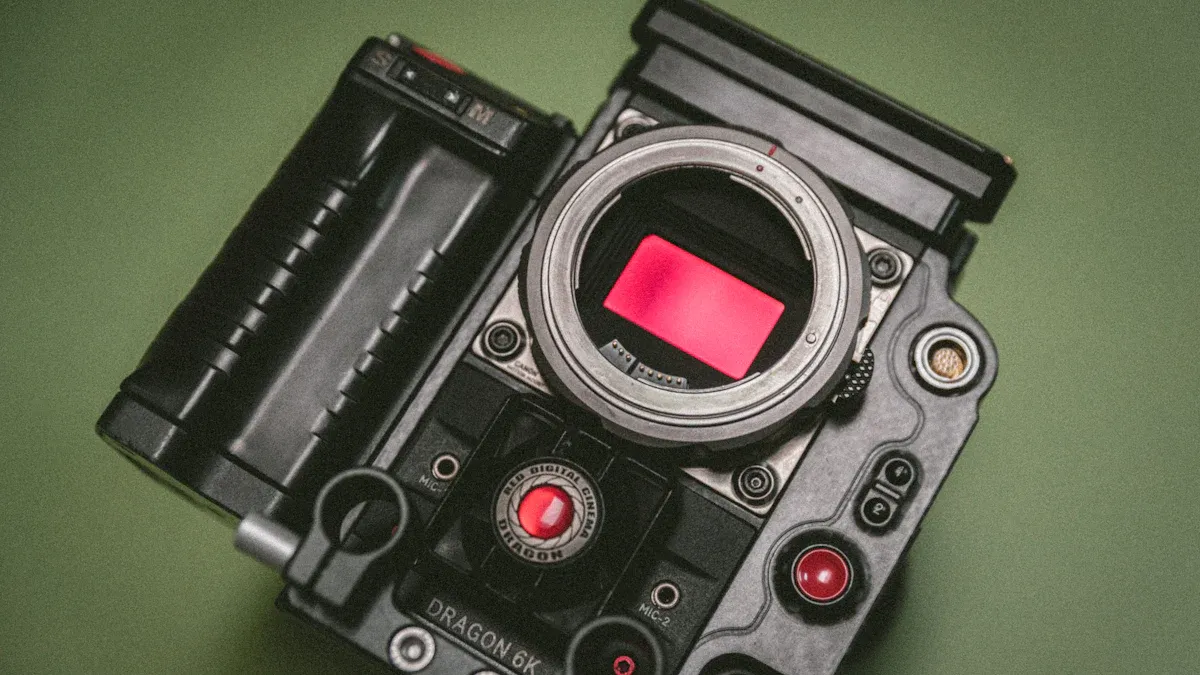

Cameras and Sensors

A machine vision system depends on the right camera and image sensor to capture clear images. The lens focuses light from the object onto the camera's image sensor, which converts this light into digital signals. Two main types of image sensor exist: CCD and CMOS. Each type has strengths and weaknesses, as shown in the table below.

| Image Sensor Type | Advantages | Disadvantages |
|---|---|---|
| CCD | High image quality, excellent light sensitivity, low noise | Higher power consumption, slower readout, more expensive |
| CMOS | Lower power consumption, faster readout, cost-effective | Generally higher noise (improved in modern sensors) |

The choice of camera and image sensor affects how well the system detects small defects. A higher-resolution camera captures more pixels in each image, which helps the system find tiny flaws. For example, with suitable optics and a small enough field of view, high-resolution images can reveal defects as small as 1.5 micrometers. However, higher resolution also means the system must handle more data, which can slow down processing if not managed well.
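The data-volume trade-off can be estimated with simple arithmetic. This sketch assumes uncompressed images and a fixed frame rate; the resolutions and frame rate in the usage lines are example values, not recommendations.

```python
def data_rate_mb_per_s(width_px, height_px, bits_per_pixel, frames_per_s):
    """Uncompressed image data rate in megabytes per second (1 MB = 10**6 bytes)."""
    bytes_per_frame = width_px * height_px * bits_per_pixel / 8
    return bytes_per_frame * frames_per_s / 1e6


# Example values: the same 8-bit, 30 fps setup at two sensor resolutions.
for width, height in [(1280, 1024), (2448, 2048)]:
    rate = data_rate_mb_per_s(width, height, bits_per_pixel=8, frames_per_s=30)
    print(f"{width}x{height}: {rate:.1f} MB/s")
```

In this example, moving to the larger sensor multiplies the data rate by nearly four, and the processing unit must keep up with every extra byte.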

Different camera types suit different tasks. Line scan cameras scan objects one line at a time, making them ideal for moving materials like textiles or paper. Area scan cameras capture the whole image at once, which works best for stationary objects. 3D cameras use special vision sensors to capture depth, helping with tasks like robotic guidance.

No single vision sensor fits every job. The right camera resolution and image sensor depend on the object, the size of defects to detect, and the speed needed.

Processing Units

Processing units analyze the data from the image sensor. The main types include CPUs, GPUs, and VPUs. The table below explains their roles.

| Processing Unit | Role in Machine Vision Systems | Key Characteristics |
|---|---|---|
| CPU | General-purpose processor handling system operations | Sequential task processing, versatile, general use |
| GPU | Specialized in graphics rendering and parallel computing | Excels in image/video data processing, high throughput |
| VPU | Specialized for vision-related tasks in machine vision | Optimized for object detection, facial recognition, real-time analysis, energy efficient, AI integration |

High-performance processing units, such as GPUs, help the system process high-resolution images quickly. They support deep learning models that need lots of computing power. Cloud platforms can also provide extra resources for image analysis, though network latency can limit their use for real-time inspection.

Lighting

Lighting plays a key role in machine vision. Good lighting helps the camera and vision sensor capture sharp, clear images. Different lighting types suit different tasks:

| Lighting Type | Role in Machine Vision Performance | Applications and Benefits |
|---|---|---|
| Backlighting | Creates high-contrast silhouettes to reveal defects and edges | Used for presence/absence detection, edge detection; highlights holes, gaps, missing components |
| Ring Lighting | Provides uniform illumination reducing shadows and glare | Ideal for inspecting small, cylindrical, or symmetrical objects; enhances surface detail inspection |
| Coaxial Lighting | Minimizes glare on reflective surfaces by directing light along camera axis | Best for shiny or polished objects; captures clear images of reflective surfaces |
| Diffuse Lighting | Offers soft, even illumination to reduce shadows and glare | Useful for curved or shiny surfaces; improves visibility of fine surface features and printed codes |
| Dome Lighting | Surrounds object with light for uniform illumination from all angles | Effective for shiny or uneven surfaces; eliminates shadows and reflections, enhancing image clarity |
| Dark Field Lighting | Illuminates at a low angle so smooth surfaces reflect light away from the camera, while scratches, edges, and raised defects scatter light into it | Detects subtle surface flaws like scratches or cracks on smooth surfaces |

Proper lighting increases contrast and reduces noise, making it easier for the system to detect defects. The choice of lighting depends on the object's surface and the type of inspection needed. Good lighting, paired with the right camera resolution and image sensor, ensures the system captures reliable data for analysis.
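One way to compare lighting setups is to measure how strongly a defect stands out from its background. The sketch below uses Michelson contrast, (Imax - Imin) / (Imax + Imin), on the mean gray levels of two regions. The pixel values are invented for illustration, and real systems may use other contrast or signal-to-noise measures.

```python
from statistics import mean


def michelson_contrast(feature_pixels, background_pixels):
    """Contrast between two regions, from 0 (identical) to 1 (maximal)."""
    a, b = mean(feature_pixels), mean(background_pixels)
    i_max, i_min = max(a, b), min(a, b)
    if i_max + i_min == 0:  # both regions completely black
        return 0.0
    return (i_max - i_min) / (i_max + i_min)


# Hypothetical gray levels of a scratch and its surroundings under two setups.
diffuse = michelson_contrast([105, 108, 110], [112, 110, 111])
dark_field = michelson_contrast([190, 205, 200], [18, 22, 20])
print(f"diffuse: {diffuse:.3f}, dark field: {dark_field:.3f}")
```

A higher value means the defect is easier to separate from the background with a simple threshold.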

Pixel Machine Vision and Image Processing

Image Acquisition

Pixel machine vision starts with image acquisition. The process begins by identifying the features or defects to inspect: engineers define what makes a good or bad part and build an image database for evaluation. The next step involves selecting the right lighting and material-handling methods. Proper lighting ensures the camera captures clear images, which is vital for accurate inspection. The choice of optics depends on the camera resolution and the field of view. Monochrome or color cameras, as well as specialized types like line-scan cameras, help capture the best visual data for each task.

The hardware for image acquisition, such as cameras and frame grabbers, must match the frame rate and resolution needs. Integrating image capturing with motion control allows the system to synchronize image capture with object position. Calibration and testing follow, where the team checks lighting uniformity and system parameters. Finally, an operator interface helps with calibration, setup, and retraining for new defect types.
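For a line-scan camera on a conveyor, synchronizing capture with motion usually means triggering one line per fixed distance of travel. This sketch assumes a constant conveyor speed and an incremental encoder with a known travel per pulse; the numbers and function names are hypothetical.

```python
def required_line_rate_hz(conveyor_speed_mm_s, pixel_size_mm):
    """Lines per second so that each captured line covers one pixel of travel."""
    return conveyor_speed_mm_s / pixel_size_mm


def encoder_pulses_per_line(pixel_size_mm, mm_per_encoder_pulse):
    """Trigger one line every N encoder pulses, so capture follows position
    rather than time and tolerates small speed changes."""
    pulses = round(pixel_size_mm / mm_per_encoder_pulse)
    if pulses < 1:
        raise ValueError("encoder resolution is too coarse for this pixel size")
    return pulses


# Hypothetical setup: 500 mm/s conveyor, 0.1 mm pixels along the direction of
# travel, and an encoder that advances 0.025 mm per pulse.
print(required_line_rate_hz(500, 0.1))
print(encoder_pulses_per_line(0.1, 0.025))  # 4
```

Because the pulse count is rounded to a whole number, the actual line spacing is `pulses * mm_per_encoder_pulse`, which may differ slightly from the target pixel size.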

| Camera Type | Signal Quality | Pixel Depth | Frame Rate | Best Use Case |
|---|---|---|---|---|
| Analog | Lower | 8-10 bit | Slower | Basic inspection |
| Digital | Higher | 10-16 bit | Faster | High-speed, high-resolution |
| Progressive-scan | High | 10-16 bit | Fast | Moving objects, less blur |
| Line-scan | Very High | 10-16 bit | Fast | Continuous materials |

Image Processing Steps

After image acquisition, the pixel machine vision system processes the image using several steps. Preprocessing comes first. The system reduces noise and adjusts brightness and contrast to improve image quality. Common techniques include Gaussian filtering, median filtering, and histogram equalization. These methods help the system see small defects and features more clearly.
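Two of the preprocessing techniques named above, median filtering and histogram equalization, can be written directly over a grayscale image stored as a list of rows. This is a teaching sketch in plain Python, assuming 8-bit values (0-255); production systems would typically use an optimized library such as OpenCV. Gaussian filtering works like the median filter's sliding window but replaces each pixel with a weighted average instead of a median.

```python
from statistics import median


def median_filter_3x3(img):
    """Replace each interior pixel with the median of its 3x3 neighbourhood.
    Border pixels are copied unchanged to keep the sketch short."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            window = [img[r + dr][c + dc] for dr in (-1, 0, 1) for dc in (-1, 0, 1)]
            out[r][c] = median(window)
    return out


def equalize_histogram(img, levels=256):
    """Spread gray levels using the cumulative histogram (classic equalization)."""
    flat = [v for row in img for v in row]
    n = len(flat)
    hist = [0] * levels
    for v in flat:
        hist[v] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    if cdf_min == n:  # only one gray level present: nothing to stretch
        return [row[:] for row in img]
    lut = [max(0, round((cdf[v] - cdf_min) / (n - cdf_min) * (levels - 1)))
           for v in range(levels)]
    return [[lut[v] for v in row] for row in img]


# A salt-noise pixel (255) in a flat region is removed by the median filter.
noisy = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
print(median_filter_3x3(noisy)[1][1])            # 10

# A low-contrast patch (values 50-70) is stretched across the full 0-255 range.
print(equalize_histogram([[50, 50], [60, 70]]))  # [[0, 0], [128, 255]]
```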

Next, the system segments the image. Segmentation divides the image into regions, separating objects from the background. The system then extracts features such as shape, texture, and color. Morphological operations like erosion and dilation help refine object boundaries and remove noise. These steps prepare the pixel-level data for accurate inspection and object recognition.

- Preprocessing: Noise reduction, brightness, and contrast adjustment

- Segmentation: Dividing the image into meaningful parts

- Feature Extraction: Identifying shapes, textures, and colors

- Morphological Processing: Refining object boundaries
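Thresholding-based segmentation followed by a morphological opening (erosion, then dilation) can be sketched as follows. It assumes a grayscale image stored as a list of rows, a fixed threshold, and a 3x3 square structuring element; neighbours outside the image are simply ignored, which is one of several possible border conventions.

```python
def threshold(img, t):
    """Segment: pixels at or above t become foreground (1), others background (0)."""
    return [[1 if v >= t else 0 for v in row] for row in img]


def _morph(mask, combine):
    """Apply a 3x3 square structuring element; neighbours outside the image are ignored."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = [mask[rr][cc]
                      for rr in range(r - 1, r + 2)
                      for cc in range(c - 1, c + 2)
                      if 0 <= rr < h and 0 <= cc < w]
            out[r][c] = 1 if combine(window) else 0
    return out


def erode(mask):
    return _morph(mask, all)  # keep a pixel only if its whole neighbourhood is foreground


def dilate(mask):
    return _morph(mask, any)  # set a pixel if any neighbour is foreground


def opening(mask):
    return dilate(erode(mask))  # removes foreground specks that cannot contain the 3x3 element


img = [
    [0,   0,   0,   0, 0,   0],
    [0, 200, 200, 200, 0,   0],
    [0, 200, 200, 200, 0,   0],
    [0, 200, 200, 200, 0,   0],
    [0,   0,   0,   0, 0,   0],
    [0,   0,   0,   0, 0, 200],  # isolated noise pixel
]
cleaned = opening(threshold(img, 128))
for row in cleaned:
    print(row)
```

The 3x3 object survives the opening unchanged, while the single noisy pixel in the corner is removed.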

Algorithms

Image processing algorithms play a key role in pixel machine vision systems. These algorithms interpret patterns of light and dark pixels to find defects and features. Edge detection methods, such as Sobel and Canny filters, highlight boundaries between objects. Thresholding converts grayscale images into black and white, making it easier to spot flaws.
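The Sobel operator estimates the intensity gradient at each pixel; thresholding its magnitude gives a simple edge map. This is a minimal plain-Python sketch, assuming a grayscale list-of-rows image and a hand-picked threshold. It is not the full Canny detector, which adds smoothing, non-maximum suppression, and hysteresis thresholding.

```python
import math

# Sobel kernels for horizontal (x) and vertical (y) intensity changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img):
    """Gradient magnitude at each interior pixel; border pixels are left at 0.
    The kernels are applied without flipping (correlation), which changes only
    the sign of the gradient, not its magnitude."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    v = img[r + i - 1][c + j - 1]
                    gx += SOBEL_X[i][j] * v
                    gy += SOBEL_Y[i][j] * v
            out[r][c] = math.hypot(gx, gy)
    return out


def edge_map(img, t):
    """Mark pixels whose gradient magnitude is at least t as edges."""
    return [[1 if m >= t else 0 for m in row] for row in sobel_magnitude(img)]


# A vertical dark-to-bright step between columns 2 and 3.
step = [[0, 0, 0, 100, 100, 100] for _ in range(5)]
print(edge_map(step, t=200)[2])  # [0, 0, 1, 1, 0, 0]
```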

Many systems use advanced algorithms like YOLO for real-time object recognition. YOLO divides the image into a grid and predicts object locations and classes. Other common algorithms include histogram equalization for contrast, Hough Transform for detecting lines and circles, and convolutional neural networks for deep learning tasks.
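The Hough Transform finds straight lines by letting every edge pixel vote for all lines that could pass through it, parameterized as rho = x·cos(theta) + y·sin(theta). The sketch below assumes a binary edge mask (for example, the output of an edge detector) and a 1-degree angle step; it returns only the strongest line(s) and skips the peak suppression a practical implementation would add.

```python
import math


def hough_lines(mask, n_theta=180, top_k=1):
    """Return the top_k (rho, theta_degrees, votes) lines in a binary edge mask.
    Each foreground pixel (x, y) votes for every line
    rho = x*cos(theta) + y*sin(theta), with theta sampled over [0, 180) degrees
    and rho rounded to the nearest pixel."""
    thetas = [math.pi * k / n_theta for k in range(n_theta)]
    trig = [(math.cos(t), math.sin(t)) for t in thetas]
    votes = {}
    for y, row in enumerate(mask):
        for x, on in enumerate(row):
            if not on:
                continue
            for k, (cos_t, sin_t) in enumerate(trig):
                key = (round(x * cos_t + y * sin_t), k)
                votes[key] = votes.get(key, 0) + 1
    best = sorted(votes.items(), key=lambda item: item[1], reverse=True)[:top_k]
    return [(rho, math.degrees(thetas[k]), count) for (rho, k), count in best]


# A 50x50 edge mask containing one vertical line at x = 3.
mask = [[1 if x == 3 else 0 for x in range(50)] for _ in range(50)]
print(hough_lines(mask))  # [(3, 0.0, 50)]
```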

| Algorithm Category | Examples and Description |
|---|---|
| Point Processing | Thresholding, histogram equalization |
| Local Operators | Mean, median, Sobel, Prewitt, Laplacian filters |
| Global Transforms | Fourier Transform, Discrete Cosine Transform |
| Feature Detection | Hough Transform, template matching, CNNs |

These algorithms help machine vision systems analyze visual data at the pixel level, improving inspection accuracy and supporting reliable object recognition.

System Accuracy

Resolution

Camera resolution plays a major role in the accuracy of machine vision systems. A high-resolution camera captures more pixels, allowing the system to detect smaller defects and features. When the image sensor provides a dense grid of pixels, the system can measure tiny objects with greater precision. For example, detecting a 0.25 mm defect in a 20 mm field of view requires a sensor with enough pixels that the defect covers several of them. Image quality improves as camera resolution increases, but only up to the limits set by the optics and environment: the lens must resolve detail at least as fine as the sensor's pixels for the smallest features to be visible. High resolution also supports subpixel measurement, which means the system can detect changes smaller than the size of one pixel. However, designers must balance camera resolution, speed, and cost for the best results.
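The 0.25 mm example can be turned into a rough sizing calculation. The sketch assumes the common rule of thumb that the smallest feature should span about three pixels (some applications require more); that factor, the 1280-pixel sensor width, and the function names are assumptions, not values from this article.

```python
import math


def required_pixels(fov_mm, smallest_feature_mm, pixels_per_feature=3):
    """Minimum pixels across the field of view so the smallest feature
    covers at least `pixels_per_feature` pixels (one axis only)."""
    return math.ceil(fov_mm / smallest_feature_mm * pixels_per_feature)


def pixels_across_feature(feature_mm, fov_mm, sensor_pixels):
    """How many pixels a feature of the given size covers on a given sensor."""
    mm_per_pixel = fov_mm / sensor_pixels
    return feature_mm / mm_per_pixel


print(required_pixels(20, 0.25))              # 240
print(required_pixels(20, 0.25, 4))           # 320
print(pixels_across_feature(0.25, 20, 1280))  # 16.0
```

The calculation covers one axis; repeating it for the other axis of the field of view gives the full sensor requirement.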

Lighting Effects

Lighting conditions have a direct impact on image quality and system performance. Good lighting helps the image sensor capture clear, sharp images, while poor lighting can cause overexposure or underexposure. The system may struggle to detect features if the lighting is uneven or too dim. Using the right lighting setup, such as ring or diffuse lighting, improves image quality and lets the system make full use of the camera's resolution. Some systems use alternative color spaces or specific color channels to stay accurate under changing lighting. Technologies like wide dynamic range cameras and infrared sensors help the system handle difficult lighting situations. Consistent lighting keeps results reliable from one image to the next.
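Using a color space such as HSV can make a measurement less sensitive to brightness changes. The sketch below uses Python's standard `colorsys` module to show that a pixel's hue stays the same when all three RGB channels are scaled by the same factor, as under a uniform dimming of white light; colored or uneven lighting would still shift it. The pixel values are made up.

```python
import colorsys


def hue_degrees(r, g, b):
    """Hue (0-360 degrees) of an 8-bit RGB pixel."""
    h, _s, _v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h * 360


def value(r, g, b):
    """HSV value (brightness, 0-1) of an 8-bit RGB pixel."""
    return colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[2]


bright = (200, 100, 50)  # an orange label under full lighting
dim = (100, 50, 25)      # the same label with the light halved

print(round(hue_degrees(*bright), 6), round(hue_degrees(*dim), 6))
print(round(value(*bright), 3), round(value(*dim), 3))
```

A color check based on hue would accept both pixels, while a check based on raw RGB values or on brightness would see them as different.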

Calibration

Calibration improves measurement accuracy beyond what camera resolution and image quality alone can achieve. The process corrects lens distortion and compensates for perspective and scale variations across the field of view. Calibration uses reference patterns and subpixel techniques to locate features with high precision. The system relies on calibration to map pixel data to real-world units, ensuring measurements are true and repeatable. Common calibration methods include linear and non-linear models, lens distortion correction, and geometric calibration using checkerboard patterns. Regular calibration keeps measurements accurate, even as conditions change. Subpixel algorithms and high-resolution optics allow the system to measure features smaller than a single pixel, but only if calibration is precise. Sensor quality, stable calibration targets, and good lighting all support accurate results.
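Two calibration ideas from this paragraph can be illustrated together: locating a reference edge to subpixel precision by interpolating between neighboring pixels, and fitting a linear pixel-to-millimetre model from reference marks at known positions. This is a one-dimensional sketch with made-up values; it ignores lens distortion, which full calibration routines (for example OpenCV's `cv2.calibrateCamera` with a checkerboard target) also model.

```python
def subpixel_edge(profile, level):
    """Return the first position where an intensity profile crosses `level`,
    linearly interpolated between the two neighbouring samples."""
    for i in range(len(profile) - 1):
        a, b = profile[i], profile[i + 1]
        if a != b and (a - level) * (b - level) <= 0:
            return i + (level - a) / (b - a)
    return None  # no crossing found


def fit_linear(pixels, millimetres):
    """Least-squares fit of mm = scale * pixel + offset."""
    n = len(pixels)
    mean_px = sum(pixels) / n
    mean_mm = sum(millimetres) / n
    sxx = sum((p - mean_px) ** 2 for p in pixels)
    sxy = sum((p - mean_px) * (m - mean_mm) for p, m in zip(pixels, millimetres))
    scale = sxy / sxx
    offset = mean_mm - scale * mean_px
    return scale, offset


# A dark-to-bright profile: the edge lies between samples 2 and 3,
# and interpolation places it at 2.5.
edge = subpixel_edge([10, 10, 10, 90, 90], level=50)

# Hypothetical reference marks: measured pixel positions and known positions in mm.
scale, offset = fit_linear([100.4, 300.1, 499.8], [0.0, 10.0, 20.0])
print(edge, scale, offset)
print(scale * 250.0 + offset)  # convert a pixel coordinate to mm
```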

Applications

Manufacturing

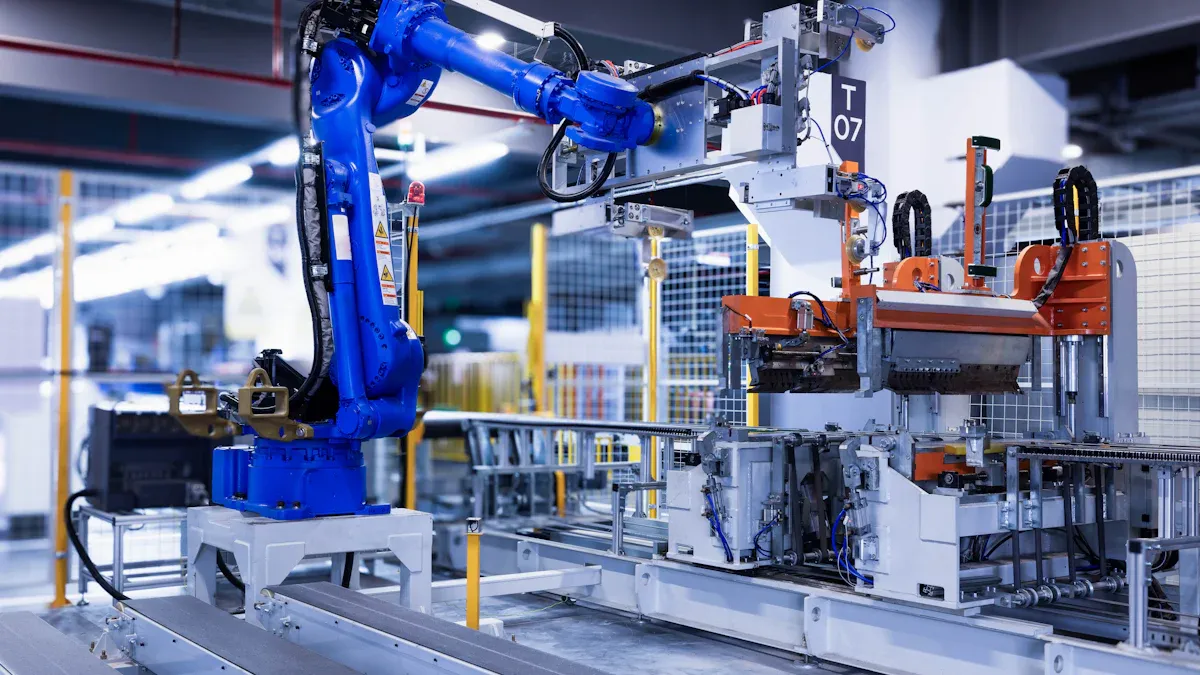

Manufacturing industries rely on machine vision systems for many tasks. These systems use cameras to capture detailed images of products and then analyze each image at the pixel level to detect defects and other issues. The main applications include:

- Quality Control: Automated inspections check for defects, measure dimensions, verify packaging, inspect fill levels, and detect contamination. Machine vision systems also inspect circuit boards and verify codes on products.

- Assembly Automation: Vision-guided robots use image data to pick and place parts, verify component presence, and ensure correct assembly.

- Identification and Tracking: Machine vision systems read barcodes and QR codes, track products, and sort items based on visual features.

- Process Optimization and Predictive Maintenance: Real-time monitoring uses image analysis to assess equipment health and trigger maintenance before failures occur.

- Safety Monitoring: Cameras track movement of people and machines, using detection algorithms to prevent accidents.

Automated inspections improve accuracy and speed. They reduce human error and provide consistent results. Machine vision systems also lower costs by reducing waste and rework.

| Benefit | Impact on Manufacturing |
|---|---|
| Enhanced Accuracy | Detects smaller defects, leading to higher product quality |
| Wide Coverage | Inspects large parts quickly |
| Lower Costs | Reduces waste and manual labor |
| Improved Efficiency | Enables faster, automated workflows |

Healthcare

Healthcare professionals use machine vision systems to analyze medical images from X-rays, CT scans, and MRIs. These systems help with detection of tumors, lesions, and other abnormalities. Object recognition locates misplaced devices and highlights diseased tissue. Semantic segmentation classifies each pixel, helping doctors see the difference between healthy and unhealthy areas. Instance segmentation identifies individual cells or tumor spots. These tools support early detection, improve diagnosis, and help plan treatments. Machine vision systems reduce human error and increase the reliability of medical image analysis.
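The difference between semantic and instance segmentation can be illustrated with a classical stand-in: starting from a semantic mask where every "abnormal" pixel is 1, connected-component labeling gives each separate region its own instance number. This is a simplification under stated assumptions; deep-learning instance segmentation can also separate objects that touch, which this sketch cannot. The mask and the function name `label_components` are illustrative.

```python
from collections import deque


def label_components(mask):
    """4-connected component labelling of a binary mask.
    Returns (labels, count); background stays 0, regions get 1..count."""
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not labels[r][c]:
                count += 1
                labels[r][c] = count
                queue = deque([(r, c)])
                # Breadth-first flood fill of the current region.
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


# Semantic mask: two separate abnormal regions, both labelled 1.
semantic = [
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 1],
]
instances, n = label_components(semantic)
print(n)
for row in instances:
    print(row)
```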

Robotics

Robotics has advanced with the help of machine vision systems. Robots use cameras to capture images and locate objects. Vision processing converts pixel data into real-world coordinates. Early systems required manual programming, but modern machine vision systems use packaged software and AI. Robots now perform complex tasks like bin picking and quality inspection. 3D imaging and improved lighting allow robots to operate with more flexibility and accuracy. Machine vision systems enable robots to adapt to new objects and environments. This technology increases automation, improves detection, and supports intelligent decision-making in many industries.
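When objects lie on a known flat surface, such as a conveyor or table, a 3x3 planar homography is one common way to convert pixel coordinates into real-world coordinates for a robot. The matrix below is a hypothetical example; in practice it would be estimated during calibration, for instance with OpenCV's `cv2.findHomography` from matched image and world points.

```python
def pixel_to_world(H, u, v):
    """Map pixel (u, v) to plane coordinates (x, y) using homography H."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    return x / w, y / w


# Hypothetical calibration result: 0.5 mm per pixel, with the world origin
# at pixel (200, 100). A real homography would also include perspective terms.
H = [
    [0.5, 0.0, -100.0],
    [0.0, 0.5, -50.0],
    [0.0, 0.0, 1.0],
]
print(pixel_to_world(H, 400, 300))  # (100.0, 100.0)
```

This mapping is only valid for points on the calibrated plane; picking objects at different heights needs 3D imaging or a full camera model.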

Image pixel machine vision systems use cameras, sensors, and smart software to inspect images at the pixel level. These systems process pixel data to detect defects and measure objects with high accuracy. Key factors like camera resolution, lighting, and calibration affect results.

- Industries now use AI, edge computing, and advanced lenses for faster, smarter inspections.

- New uses include healthcare, agriculture, and robotics.

Machine vision continues to grow, helping many fields improve quality, safety, and efficiency. 🚀

FAQ

What is the main job of a pixel machine vision system?

A pixel machine vision system checks images for details like defects or measurements. It helps machines "see" by analyzing each pixel. This process improves quality control and speeds up inspections in many industries.

How does camera resolution affect inspection results?

Higher camera resolution means more pixels in each image. More pixels help the system find smaller defects and measure objects more accurately. Low resolution may miss tiny flaws.

Why is lighting important in machine vision?

Lighting helps the camera capture clear images. Good lighting increases contrast and reduces shadows. This makes it easier for the system to spot defects or measure parts.

Can machine vision systems work in different industries?

Yes!

Machine vision systems help in manufacturing, healthcare, robotics, and agriculture. They inspect products, guide robots, and analyze medical images.

Do these systems use artificial intelligence?

Many modern systems use AI.

- AI helps the system learn from examples.

- It can find new types of defects.

- AI improves accuracy and adapts to changes.
