A hallucination machine vision system is an AI system that sometimes "sees" things that do not exist in the real world. Imagine looking at clouds and spotting a dragon or a face: your mind creates images that are not really there. In AI, these hallucinations happen when a machine vision model makes up objects or actions. This can cause problems, especially in safety-critical areas like self-driving cars or robots. Studies, such as the HEAL study, show that hallucination rates in AI can be up to 40 times higher in tricky situations. Even with new fixes, AI models still struggle to reject impossible tasks, which leads to mistakes. The Berkeley article points out real risks, like accidents, when AI in vision systems hallucinates. The Nature article says there is no single way to define hallucination, but it happens often and is hard to stop. Learning about hallucination in machine vision systems helps beginners understand why AI sometimes fails and why researchers keep working to make these systems safer.

Key Takeaways

- Hallucination machine vision systems sometimes see or report things that do not exist, causing errors in AI outputs.

- Poor data quality, model bias, and low-quality input are main causes of AI hallucinations in vision systems.

- Researchers use training methods, detection tools, and human oversight to find and reduce hallucinations.

- AI hallucinations can create serious risks in areas like healthcare, self-driving cars, and finance.

- Improving data, models, and human review helps make AI vision systems safer and more reliable for the future.

Hallucination Machine Vision System

Definition

A hallucination machine vision system is a type of artificial intelligence that sometimes creates images or objects that do not exist in reality. In these systems, AI hallucinations happen when the computer "sees" or reports something that is not present in the real world. This can mean detecting a stop sign where there is none or identifying a person in an empty room. Hallucinations in AI can be positive or negative. In the early 2000s, researchers used the word "hallucination" to describe creative or helpful image generation, such as filling in missing parts of a photo. Today, most people use the term to describe mistakes in AI vision, where the system invents things that are not there. These AI hallucinations can cause confusion and even danger, especially in fields like healthcare or self-driving cars.

Researchers have studied the causes and types of hallucinations in AI for many years. They use large datasets, such as those from Schuhmann et al. (2022), Hudson and Manning (2019), and Mishra et al. (2019), to train and test these systems. Studies by Huang et al. (2023c), Li et al. (2023a), Zhou et al. (2023a), and Liu et al. (2024) show that hallucinations can arise from problems with the data, the model, or the way the AI learns. These studies also show that AI hallucinations can be factual, where the system makes up facts, or cross-modal, where the system mixes up information from different sources.

Note: Hallucinations in AI do not mean the system is "seeing" like a human. Instead, the term means the AI is making a mistake in its output.

History

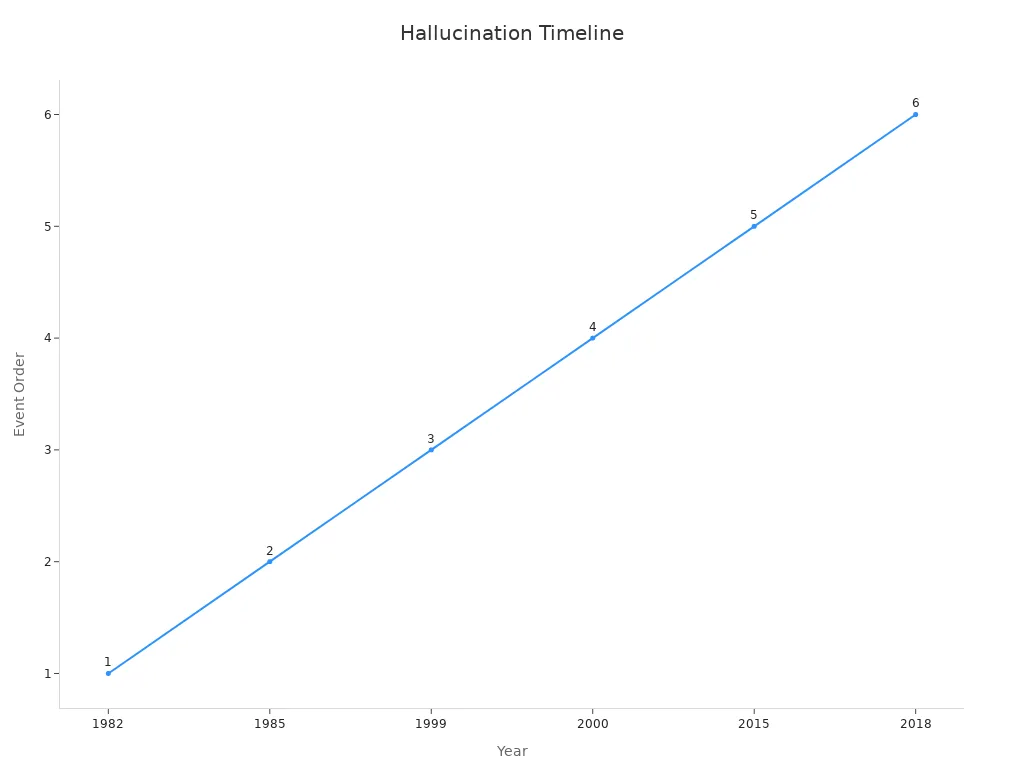

The idea of hallucination in computer vision systems has a long history. Early researchers used the term to describe both errors and creative outputs in artificial intelligence. The table below shows important moments in the history of research on hallucination in machine vision:

| Year | Contributor(s) | Work/Context | Significance/Notes |
|---|---|---|---|
| 1982 | John Irving Tait | Technical report "Automatic Summarising of English texts" | Earliest documented use of ‘hallucination’ in computing discourse, indicating early conceptual use. |
| 1985 | Eric Mjolsness | Thesis "Neural Networks, Pattern Recognition, and Fingerprint Hallucination" | Further early use of the term in pattern recognition and neural networks context. |
| 1999 | Simon Baker & Takeo Kanade | Paper "Hallucinating Faces" | Popularized the term in machine vision, specifically in image generation and face recognition. |
| 2000 | Simon Baker & Takeo Kanade | IEEE Conference presentation "Hallucinating Faces" | Reinforced the term’s use in machine vision research. |
| 2015 | Andrej Karpathy | Blog post on RNN effectiveness | Introduced ‘hallucination’ in natural language processing and generative AI contexts. |
| 2018 | Google AI researchers | Research paper on hallucinations in neural machine translation | Helped frame modern understanding of hallucinations as plausible but incorrect AI outputs. |

Over time, the meaning of hallucination in AI has changed. In the past, some researchers saw AI hallucinations as a way for computers to be creative. Now, most experts focus on the risks and errors that hallucinations cause. This shift in meaning shows how important it is to understand and control AI hallucinations in modern systems.

Types

Hallucination errors can appear in different types of machine vision systems. Each type uses a different way to "see" the world, and each can experience AI hallucinations in its own way.

- 1D Vision Systems: These systems use a single line of data, such as a barcode scanner. Hallucinations in 1D systems might cause the AI to read a barcode that does not exist or misinterpret a line as a code.

- 2D Vision Systems: Most cameras and image recognition tools use 2D vision. In these systems, AI hallucinations can create objects, faces, or text that are not present in the image. For example, a security camera might "see" a person in a photo where there is only a shadow.

- 3D Vision Systems: These systems use depth sensors or multiple cameras to build a 3D model of the world. Hallucinations in 3D systems can cause the AI to invent objects in space, such as a box floating in midair or a wall that is not there.

Researchers have found that AI hallucinations can happen in any of these systems. The causes often relate to the data used to train the AI, the way the model works, or the quality of the input. Some studies, like those by Liu et al. (2023d), Yu et al. (2023b), Wang et al. (2023a), and Yue et al. (2024), show that adding better data and using new training methods can help reduce hallucinations. The POPE benchmark (Li et al., 2023a) helps measure how well these fixes work.

Key points from research:

- Large datasets help train AI, but poor data can increase hallucinations.

- Studies by Huang et al. (2023c) and others show that hallucinations can be factual, faithfulness-related, or cross-modal.

- New methods, such as adding negative examples and rewriting captions, can lower the rate of AI hallucinations.
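As a rough illustration of how a POPE-style benchmark can score a model, the sketch below assumes the model has been asked a series of yes/no questions of the form "Is there a <object> in the image?" and that ground-truth answers are known. The function name `pope_style_scores`, the `PopeScores` container, and the sample data are hypothetical and are not taken from the POPE codebase.

```python
from dataclasses import dataclass


@dataclass
class PopeScores:
    accuracy: float
    precision: float
    recall: float
    f1: float
    yes_ratio: float  # share of questions the model answered "yes"


def pope_style_scores(answers, labels):
    """Score yes/no object-presence answers against ground truth.

    answers, labels: equal-length lists of booleans (True means "yes, present").
    """
    if len(answers) != len(labels) or not answers:
        raise ValueError("answers and labels must be non-empty and equal in length")
    pairs = list(zip(answers, labels))
    tp = sum(1 for a, l in pairs if a and l)          # correctly reported objects
    fp = sum(1 for a, l in pairs if a and not l)      # hallucinated objects
    fn = sum(1 for a, l in pairs if not a and l)      # missed objects
    tn = sum(1 for a, l in pairs if not a and not l)  # correctly rejected objects
    total = len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return PopeScores((tp + tn) / total, precision, recall, f1, (tp + fp) / total)


# Hypothetical run: the model says "yes" to one object that is not in the image.
scores = pope_style_scores(
    answers=[True, True, True, False],
    labels=[True, False, True, False],
)
print(scores)
```

In this framing, a false positive is an object hallucination, so low precision combined with a high yes ratio suggests a model that tends to agree that objects are present regardless of the image.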

A hallucination machine vision system can affect many areas, from self-driving cars to healthcare. Understanding the types and causes of ai hallucinations helps researchers build safer and more reliable ai systems.

Causes of AI Hallucinations

Data Issues

Data issues play a major role in AI hallucinations. When AI learns from flawed training data, it can develop a tendency to see things that are not present. Large datasets help train AI, but if the data contains errors, missing information, or bias, hallucinations become more common. For example, if an AI vision system trains on images with hidden objects or mislabeled items, it may start to invent objects in new images. Researchers have found that overfitting, data noise, and anomalies all contribute to AI hallucinations. Adversarial attacks, such as adding small changes to images, can also trick AI into seeing things that do not exist. Improving data quality is critical for reducing hallucinations in AI, and training on balanced, high-quality datasets helps minimize these errors.

Note: Reinforcement learning and human feedback can help correct AI hallucinations by guiding the model toward more accurate outputs.

Model Bias

Model bias is another key cause of AI hallucinations. When an AI model learns patterns that do not match reality, it may produce hallucinations. Studies show that model bias can cause the system to generate outputs far from the real data. For example, in experiments with synthetic datasets, researchers filtered out up to 96% of hallucinated samples by measuring variance. However, recursively training a model on its own outputs led to more hallucinations and mode collapse. This means that a hallucination-prone AI can drift away from the original data and make mistakes more often. High model complexity and improper training methods increase the risk of hallucinations in AI.
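The variance-based filtering mentioned above can be pictured with a small sketch. It assumes each generated sample comes with a short sequence of intermediate predictions (for instance, one value per generation step) and flags samples whose predictions fluctuate more than a chosen threshold. The function name, the data, and the threshold are illustrative assumptions, not the procedure or values from the cited experiments.

```python
from statistics import pvariance


def flag_high_variance(trajectories, threshold):
    """Return indices of samples whose prediction trajectory varies more than threshold.

    trajectories: list of non-empty lists of floats, one list per generated sample.
    threshold: hypothetical cutoff; in practice it would be tuned on held-out data.
    """
    return [i for i, values in enumerate(trajectories) if pvariance(values) > threshold]


# Hypothetical data: sample 0 is stable across steps, sample 1 swings widely.
trajectories = [
    [0.50, 0.51, 0.49],
    [0.10, 0.90, 0.20],
]
suspect = flag_high_variance(trajectories, threshold=0.01)
kept = [t for i, t in enumerate(trajectories) if i not in suspect]
print(suspect, len(kept))
```

The design choice here is to drop unstable samples before they are reused, which matters most when generated data might feed back into training.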

Input Quality

Input quality affects how often AI hallucinations occur. Low-quality images, blurry photos, or distorted data make it harder for AI to see what is real. Benchmarks like POPE and NOPE show that poor input quality leads to more hallucinations. When AI receives unclear or biased input, it relies on statistical patterns instead of real features. This can cause the system to give consistent but incorrect answers. Improving input quality, such as using higher-resolution images and diverse data, reduces hallucinations. Better alignment between visual and text information also helps lower the hallucination rate. Researchers have found that decoding strategies and enhanced training objectives further decrease hallucinations in AI vision systems.

Key factors that increase AI hallucinations:

- Flawed training data

- Model bias

- Poor input quality

AI in Vision Systems

Detection

Researchers use many methods to detect AI hallucinations in vision systems. Some techniques focus on training, while others work during or after the AI makes predictions. The table below shows several approaches that help spot hallucinations and improve AI reliability:

| Approach Category | Method/Technique | Key Contribution | Benefits/Limitations |
|---|---|---|---|
| Training-based | RLHF (Reinforcement Learning from Human Feedback) | Uses human feedback to guide the AI | Improves accuracy but needs lots of computing power |
| Training-based | Fine-grained AI feedback | Breaks down responses to find hallucinations | Scalable but complex |
| Training-free | OPERA (beam-search variant) | Ranks outputs to avoid over-trust | Slower than other methods |
| Training-free | Contrastive decoding | Compares outputs from different inputs | Reduces hallucinations but increases wait time |
| Inference-only | SPIN (attention head suppression) | Blocks parts of the model that cause hallucinations | Faster and more efficient |
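To make the contrastive decoding row more concrete, here is a minimal sketch of one common formulation: the model scores the next token twice, once with the original image and once with a distorted copy, and tokens that score highly even without clear visual evidence are penalized. The vocabulary, the logits, and the `alpha` weight are made-up values for illustration; real systems obtain the logits from a vision-language model.

```python
def contrastive_logits(logits_clean, logits_distorted, alpha=1.0):
    """Combine per-token logits as (1 + alpha) * clean - alpha * distorted."""
    if len(logits_clean) != len(logits_distorted):
        raise ValueError("both logit lists must cover the same vocabulary")
    return [(1 + alpha) * c - alpha * d for c, d in zip(logits_clean, logits_distorted)]


def argmax(values):
    """Index of the largest value (first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


# Hypothetical three-token vocabulary and logits.
vocab = ["dog", "cat", "frisbee"]
clean = [2.0, 0.5, 2.1]      # with the real image, "frisbee" narrowly wins
distorted = [0.5, 0.5, 2.0]  # with a blurred image, "frisbee" still scores high

adjusted = contrastive_logits(clean, distorted, alpha=1.0)
print(vocab[argmax(clean)], "->", vocab[argmax(adjusted)])
```

Because "frisbee" scores almost as well when the image is unreadable, its score is likely driven by language habits rather than by the picture, so the adjustment favors "dog". The extra forward pass on the distorted input is the source of the added wait time noted in the table.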

Other studies suggest using semantic entropy, which measures uncertainty in AI outputs, to detect hallucinations. This method checks whether the AI gives answers with different meanings to the same question, which helps spot when it invents information.
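The idea behind semantic entropy can be sketched in a few lines: sample several answers to the same question, group answers that mean the same thing, and compute the entropy over the groups. The `same_meaning` function below is a deliberately crude, hypothetical stand-in (case-insensitive string match); published approaches typically use a language model to judge whether two answers entail each other.

```python
import math


def semantic_entropy(answers, same_meaning):
    """Cluster answers by meaning, then compute entropy over the cluster sizes."""
    clusters = []
    for answer in answers:
        for cluster in clusters:
            if same_meaning(answer, cluster[0]):
                cluster.append(answer)
                break
        else:
            # No existing cluster matched, so this answer starts a new one.
            clusters.append([answer])
    total = len(answers)
    return -sum((len(c) / total) * math.log(len(c) / total) for c in clusters)


def same_meaning(a, b):
    """Hypothetical equivalence check: identical text after normalization."""
    return a.strip().lower() == b.strip().lower()


# Hypothetical answers sampled for "What object is on the table?"
consistent = ["a cup", "A cup", "a cup ", "a cup"]
scattered = ["a cup", "a phone", "a cup", "a phone"]
print(semantic_entropy(consistent, same_meaning))
print(semantic_entropy(scattered, same_meaning))
```

Entropy near zero means the sampled answers agree in meaning, while higher values indicate that the model keeps changing its story, which is a signal worth flagging for review.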

Prevention

Preventing AI hallucinations requires both better training and smarter model design. Researchers use methods like annotation enrichment to improve training data and reduce bias. They also use post-processing to filter out outputs that look suspicious. Multimodal alignment helps the AI match images and text more closely, lowering the chance of hallucinations. Some teams use Latent Space Steering, which guides the AI to produce more accurate results by adjusting how it connects images and words. Training-free methods, such as self-feedback correction, let the AI check its own answers and fix mistakes. These strategies, along with regular benchmarking, help keep hallucinations under control.

Tip: Regularly updating datasets and using feedback loops can further reduce AI hallucinations in real-world systems.

Human Oversight

Human oversight remains a key part of managing AI hallucinations. Even with advanced detection and prevention tools, people must review AI outputs, especially in high-stakes fields like healthcare. Studies show that AI can still make mistakes, so experts check results to catch hallucinations that machines miss. Best practices include using multiple models to compare answers, setting clear rules for what counts as a hallucination, and checking AI outputs against real facts. Adding knowledge graphs helps ground AI answers in truth. Human-in-the-loop systems ensure that AI hallucinations do not go unnoticed, keeping vision systems safe and trustworthy.
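One way to combine "multiple models" with human review is a simple agreement rule: if the models disagree too much about an image, the case goes to a person. The sketch below is an assumption about how such a rule might look; the function name, the labels, and the 0.75 agreement threshold are hypothetical.

```python
from collections import Counter


def needs_human_review(model_labels, min_agreement=0.75):
    """Return (majority_label, escalate) for one image labeled by several models.

    escalate is True when the share of models backing the majority label
    falls below min_agreement.
    """
    if not model_labels:
        raise ValueError("at least one model label is required")
    label, votes = Counter(model_labels).most_common(1)[0]
    agreement = votes / len(model_labels)
    return label, agreement < min_agreement


# Hypothetical outputs from four models looking at the same frame.
print(needs_human_review(["stop sign", "stop sign", "stop sign", "billboard"]))
print(needs_human_review(["person", "shadow", "person", "shadow"]))
```

A rule like this does not prove that the majority is right, since several models can share the same bias, so it works best as a filter that decides where human attention goes first.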

Real-World Impact

Risks

AI hallucinations create real risks in many industries. When AI systems make mistakes, people can face serious consequences. By some estimates, chatbots hallucinate about 27% of the time, and 46% of their answers contain factual errors. This leads to confusion and unreliable information. In healthcare, AI sometimes misdiagnoses benign skin lesions as malignant, causing unnecessary treatments and distress for patients. Autonomous vehicles have been involved in accidents because AI vision systems failed to detect objects correctly. In a 2023 promotional demo, Google Bard gave incorrect scientific information about the James Webb Space Telescope, which spread misinformation and hurt public trust. In law and finance, AI hallucinations can cause legal problems and financial losses. The table below shows some real-world cases:

| Industry / Case Study | Incident / Example | Causes of Hallucination | Consequences / Impact | Mitigation Strategies |
|---|---|---|---|---|
| Autonomous Vehicles | 2016 Tesla Autopilot crash: failed to detect white semi-truck | Sensor confusion, overreliance on data | Loss of life, legal issues | Multi-sensor checks, human override |
| Healthcare Diagnostics | 2016 IBM Watson Oncology: incorrect treatment recommendations | Biased data, lack of fact-checking | Patient safety risks, financial damage | Human oversight, diverse training data |
| Legal Research | 2023 ChatGPT: hallucinated legal citations in a brief | Probabilistic models, no fact-checking | Legal repercussions, trust erosion | Human verification, real-time legal databases |
| Military and Defense | False target identification in surveillance | Misclassifications, hallucinated data | Friendly fire, misinformation | Human oversight, multi-source verification |
| Financial Markets | 2012 Knight Capital: trading algorithm malfunction | Algorithm errors, lack of safeguards | $440 million loss, market disruption | Enhanced testing, human monitoring |

AI hallucinations erode trust in both the technology and the organizations that use it. Improving data quality, model validation, and human oversight helps reduce these risks.

Applications

AI vision systems support many applications, but hallucinations can limit their reliability. In healthcare, AI assists with diagnosis and treatment planning. In finance, AI analyzes market trends and detects fraud. In law, AI reviews documents and suggests legal strategies. Military and defense organizations use AI for surveillance and threat detection. Machine vision has also been applied to hallucinations in people rather than in models: AI-based systems have achieved about 80% accuracy in predicting brain activity patterns before hallucinations occur, which helps with early detection and therapy in mental health. Findings from that line of work include:

- Machine vision systems with spatial regularization improve accuracy and balance sensitivity.

- Predictive models work better for auditory hallucinations due to more data in this area.

- Advanced models identify key brain regions, supporting clinical use.

- Combining supervised and unsupervised learning helps understand and predict hallucinations.

- Unsupervised analysis reveals important patterns, even with low explained variance.

Future Trends

Researchers continue to improve AI vision systems to reduce hallucinations. They focus on better data, smarter models, and stronger human oversight. New training methods, such as adversarial training and feedback loops, help AI learn from mistakes. Developers use multi-modal systems that combine images, text, and other data to make AI more reliable. In the future, AI will play a bigger role in healthcare, transportation, and security. Reducing hallucinations will make AI safer and more trustworthy for everyone.

Hallucination in machine vision systems remains a challenge. Researchers continue to study why AI sometimes produces these errors, and because many industries rely on AI, understanding its reliability matters to everyone. Experts recommend checking AI results often, and companies test AI before deploying it. Teachers can explain how AI works in simple terms, students can ask questions about AI safety, and readers can follow new studies to see how AI improves. Staying alert to AI mistakes protects users, and everyone benefits when AI becomes safer.

FAQ

What is a hallucination in a machine vision system?

A hallucination in a machine vision system happens when the system reports something that does not exist. The system might see an object or person that is not present. This can cause confusion or errors in real-world tasks.

Why do AI vision systems make mistakes?

AI vision systems make mistakes because of poor data, model bias, or low-quality input. These problems can lead the system to invent things that are not real. Researchers work to reduce these errors with better training and oversight.

How can people reduce hallucinations in AI vision systems?

People can reduce hallucinations by using high-quality data and regular model checks. Human oversight helps catch mistakes. Teams also use new training methods and feedback loops to improve accuracy.

Are AI hallucinations dangerous?

AI hallucinations can be dangerous in some cases. For example, self-driving cars or medical AI systems might make wrong decisions. These mistakes can lead to accidents or harm. Careful testing and human review help lower the risks.

Can AI vision systems learn from their mistakes?

Yes, AI vision systems can learn from mistakes. Researchers use feedback and new data to help the system improve. Over time, the system becomes more reliable and makes fewer errors.
