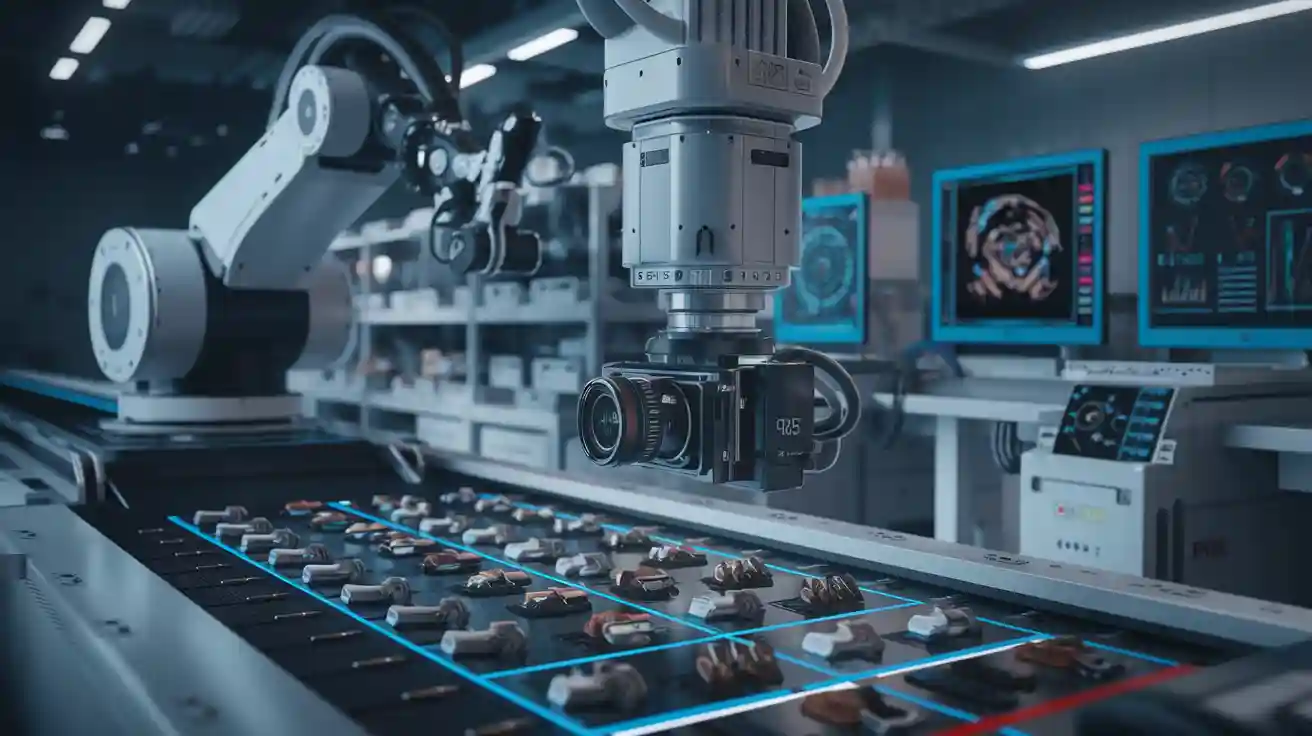

A grid search machine vision system optimizes a vision model by tuning its hyperparameters. It evaluates every combination within a predefined search space to identify the best configuration. Combined with k-fold cross-validation, grid search checks that each configuration performs well across different data subsets rather than on one lucky split. This systematic approach reduces the risk of overfitting and can improve precision, which is crucial in tasks like object detection and image classification.

Key Takeaways

- Grid search tries different hyperparameter combinations to find the best ones for machine vision models.

- Using cross-validation during grid search reduces the risk of overfitting and gives a better estimate of how the model will perform on new data.

- Tuning hyperparameters with grid search can boost model accuracy, which is important for tasks like sorting images or finding objects.

Understanding Grid Search in Machine Vision

What is Grid Search?

Grid search is a systematic method for hyperparameter tuning that helps you find the best combination of hyperparameters for machine learning models. It involves creating a grid of possible parameter values and evaluating each combination to identify the optimal configuration. Because every combination in the grid is tested, no candidate on the grid is overlooked, which makes it a reliable optimization technique. Values that fall between the grid points, however, are never tried.

Historically, grid search emerged as a brute-force method to explore parameter spaces in optimization algorithms. Researchers defined lower and upper bounds for each parameter and divided these intervals into equally spaced points. Because it calculated likelihood values for every combination instead of following a local search path, grid search could not get trapped in a local maximum, although its accuracy was limited by the grid spacing. Its main drawback is cost: the number of combinations grows exponentially with the number of parameters, which makes high-dimensional spaces expensive. Despite this, grid search remains a cornerstone of hyperparameter tuning in machine vision systems.
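To make the cost concrete: a grid with v values for each of d hyperparameters contains v^d candidates, and k-fold cross-validation trains each candidate k times. The sketch below uses an illustrative Random Forest grid (the values are assumptions, not recommendations) and scikit-learn's ParameterGrid, which enumerates the same combinations a grid search would try.

```python
from math import prod

from sklearn.model_selection import ParameterGrid

# Illustrative grid: three values for each of three hyperparameters
param_grid = {
    "n_estimators": [50, 100, 150],
    "max_depth": [10, 20, 30],
    "min_samples_split": [2, 5, 10],
}

# The number of candidates is the product of the per-parameter grid sizes (3 * 3 * 3)
n_candidates = prod(len(values) for values in param_grid.values())

# ParameterGrid enumerates exactly these combinations
assert n_candidates == len(ParameterGrid(param_grid))

n_folds = 5
n_fits = n_candidates * n_folds  # every candidate is trained once per fold
print(n_candidates, n_fits)  # 27 135
```

Adding a fourth hyperparameter with three values would triple both numbers, which is why the grid should stay small.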

How Does Grid Search Work?

Grid search operates through a structured process that ensures thorough exploration of the hyperparameter space:

- Define a hyperparameter grid: You start by creating a dictionary of parameters and their possible values. For example, in a Random Forest model, you might specify the number of trees and maximum depth as parameters.

- Model training and evaluation: For each combination, grid search trains the model and scores it with cross-validation across multiple data subsets. This step reduces the risk of choosing parameters that only work well on one particular split and gives a better estimate of generalization.

- Retrieve the best parameters: After evaluating all combinations, grid search identifies the configuration that delivers the highest model performance. You can access these optimal parameters through the best_params_ attribute in tools like GridSearchCV.

For instance, in one predictive maintenance project, grid search selected a Random Forest model with 100 trees and a maximum depth of 20 as the configuration with the best prediction accuracy. Similarly, tuning a gradient boosting model by evaluating six configurations led to improved performance metrics.

Benefits of Grid Search for Model Performance

Grid search offers several advantages that directly enhance model performance:

- Improved accuracy: By systematically exploring all parameter combinations in the grid, grid search finds the configuration with the highest cross-validated accuracy among the values you supplied. For example, in skin lesion analysis, grid search optimization significantly improved classification results across multiple CNN models.

- Reduced overfitting: Cross-validation, integrated into grid search, evaluates model performance on different data subsets. This reduces the risk of overfitting the hyperparameters to a single split and helps your model generalize to unseen data.

- Enhanced optimization: Grid search provides a structured framework for tuning hyperparameters, making it easier to identify the best combination of hyperparameters for your machine vision system.

In addition to these benefits, grid search has been instrumental in applications like credit scoring and customer spending predictions. By refining hyperparameters, it improves metrics such as precision, recall, and AUC, demonstrating its versatility across various domains.
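When precision and recall matter as much as accuracy, GridSearchCV can score every candidate on several metrics at once and choose the winner by one of them. The sketch below assumes the digits dataset, an SVC classifier and a small hypothetical grid; macro averaging weights the ten digit classes equally.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)

# Score every candidate on several metrics
scoring = ["accuracy", "precision_macro", "recall_macro"]

search = GridSearchCV(
    SVC(),
    param_grid={"C": [0.1, 1, 10], "gamma": ["scale", 0.001]},
    scoring=scoring,
    refit="recall_macro",  # select and refit the candidate with the best mean macro recall
    cv=5,
)
search.fit(X, y)

print("best params by recall:", search.best_params_)
for name in scoring:
    # cv_results_ holds one "mean_test_<metric>" array per scorer, indexed by candidate
    print(name, round(search.cv_results_[f"mean_test_{name}"][search.best_index_], 3))
```

Choosing the refit metric is a design decision: a defect-detection system that must not miss faults might refit on recall, while one that must avoid false alarms might refit on precision.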

Applications of Grid Search in Machine Vision Systems

Optimizing Image Classification Models

Grid search plays a vital role in improving image classification models by identifying the best combination of hyperparameters. When you apply grid search to a convolutional neural network (CNN), it systematically evaluates parameters like learning rate, batch size, and the number of filters. This process helps the model reach the best accuracy the grid allows while reducing the risk of overfitting. For example, using stratified k-fold cross-validation during tuning keeps the class proportions similar in every fold, so the selected configuration is more likely to generalize to unseen data. By fine-tuning these parameters, you can significantly enhance model performance, making it more reliable for tasks like facial recognition or medical image analysis.
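A runnable sketch of this workflow needs no deep learning framework if a small network stands in for the CNN. The example below assumes scikit-learn's MLPClassifier (a fully connected network, not a CNN) on the digits images, tunes learning rate and batch size, and uses StratifiedKFold; a real CNN would add parameters such as the number of filters and train in a framework of your choice.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_digits(return_X_y=True)

# MLPClassifier stands in for a CNN here; scaling the pixels helps it converge
pipeline = make_pipeline(
    StandardScaler(),
    MLPClassifier(hidden_layer_sizes=(64,), max_iter=300, random_state=0),
)

# Pipeline parameters use scikit-learn's "<step name>__<parameter>" convention
param_grid = {
    "mlpclassifier__learning_rate_init": [0.001, 0.01],
    "mlpclassifier__batch_size": [32, 128],
}

# Stratified folds keep the proportions of the 10 digit classes similar in every split
cv = StratifiedKFold(n_splits=3, shuffle=True, random_state=0)

search = GridSearchCV(pipeline, param_grid, cv=cv, scoring="accuracy")
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Putting the scaler inside the pipeline means it is refit on each training fold, so no statistics from the validation fold leak into training.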

Enhancing Object Detection Algorithms

Object detection algorithms benefit greatly from grid search optimization. Tools like GridSearchCV evaluate every parameter combination in the grid, so the model can be fine-tuned for a specific task. Because each combination is scored with cross-validation, this approach improves accuracy while reducing the risk of overfitting. For instance, when tuning parameters for YOLO (You Only Look Once) or Faster R-CNN, grid search finds the best-performing configuration in the grid by testing configurations systematically.

Example in Practice:

GridSearchCV has been shown to enhance object detection outcomes by identifying optimal settings. This process involves hyperparameter tuning, model evaluation, and selecting the best configuration.

| Process | Description |
|---|---|
| Hyperparameter Tuning | GridSearchCV identifies optimal parameters for machine learning models. |
| Model Evaluation | It tests each combination on different cross-validation folds of the dataset to assess accuracy. |
| Optimal Settings | It provides the best parameter combination for enhanced model performance. |
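Detectors such as YOLO or Faster R-CNN are usually not scikit-learn estimators, so their post-processing settings are often tuned with a plain loop instead. The sketch below grid-searches two common detector settings, the confidence threshold and the non-maximum suppression (NMS) IoU threshold, on hypothetical toy detections for one image. It scores each pair with F1 at IoU 0.5 as a simple stand-in for mAP; a real project would use a validation set and its detector's own evaluation tooling.

```python
from itertools import product

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(dets, iou_thr):
    """Greedy NMS: keep boxes in score order, dropping any that overlap a kept box above iou_thr."""
    kept = []
    for box, score in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(box, k) <= iou_thr for k, _ in kept):
            kept.append((box, score))
    return kept


def f1(preds, truths, match_thr=0.5):
    """Greedily match predictions to ground truth at IoU >= match_thr and return F1."""
    unmatched = list(truths)
    tp = 0
    for box, _ in preds:
        hit = next((t for t in unmatched if iou(box, t) >= match_thr), None)
        if hit is not None:
            unmatched.remove(hit)
            tp += 1
    fp, fn = len(preds) - tp, len(unmatched)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


# Hypothetical ground truth and (box, confidence) detections for a single image
ground_truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [
    ((0, 0, 10, 10), 0.9),    # correct detection of the first object
    ((1, 1, 11, 11), 0.8),    # duplicate of the first object (IoU about 0.68)
    ((20, 20, 30, 30), 0.6),  # correct detection of the second object
    ((50, 50, 60, 60), 0.4),  # false positive
]


def evaluate(score_thr, iou_thr):
    kept = nms([d for d in detections if d[1] >= score_thr], iou_thr)
    return f1(kept, ground_truth)


# Exhaustively score every (confidence threshold, NMS IoU threshold) pair
scores = {combo: evaluate(*combo) for combo in product([0.3, 0.5, 0.7], [0.3, 0.5, 0.7])}
best_params = max(scores, key=scores.get)
print(best_params, scores[best_params])  # (0.5, 0.3) 1.0
```

The grid exposes the trade-off directly: a low confidence threshold admits the false positive, while a loose NMS threshold keeps the duplicate box.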

Improving Feature Extraction Techniques

Feature extraction, a critical step in machine vision, also benefits from grid search. By optimizing parameters, you can improve the accuracy of extracted features, which directly affects the model's ability to classify or detect objects. For example, in one benchmark test, grid search improved recall from 87% to 95% and raised average accuracy to 96.53% on 300 samples. These results suggest that grid search can improve both precision and generalization in feature extraction tasks.

When you use grid search for feature extraction, you can tune the feature-extraction parameters together with the model, so the selected features are the ones that best support the final task. This optimization reduces errors and enhances the overall performance of the machine vision system. Whether you're working with edge detection or texture analysis, grid search helps you find the best-performing settings among those you test.
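One way to tune a feature extractor and a classifier together is to put both in a scikit-learn Pipeline and grid-search them jointly. The sketch below assumes PCA as the feature-extraction step because it ships with scikit-learn; an edge or texture descriptor wrapped as a transformer would slot into the same position. The grid values are illustrative.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)

# Feature extraction (PCA) followed by classification (SVC)
pipe = Pipeline([("pca", PCA(random_state=0)), ("clf", SVC())])

param_grid = {
    "pca__n_components": [10, 20, 40],  # how many extracted features to keep
    "clf__C": [1, 10],
}

# PCA is refit inside each training fold, so validation images never shape the features
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```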

Practical Implementation of Grid Search for Precision

Code Example for Grid Search in Machine Vision

Grid search simplifies hyperparameter tuning for machine learning models by automating the process of testing parameter combinations. Below is an example of how you can implement grid search using scikit-learn's GridSearchCV in a machine vision project:

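```python
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Load dataset: 8x8 handwritten-digit images flattened into 64 pixel features
data = load_digits()
X, y = data.data, data.target

# Hold out a test set that the grid search never sees
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# Define the model and hyperparameter grid (3 x 3 x 3 = 27 combinations)
model = RandomForestClassifier(random_state=42)
param_grid = {
    'n_estimators': [50, 100, 150],
    'max_depth': [10, 20, 30],
    'min_samples_split': [2, 5, 10]
}

# Perform grid search with 5-fold cross-validation on the training set only
grid_search = GridSearchCV(estimator=model, param_grid=param_grid, cv=5, scoring='accuracy', n_jobs=-1)
grid_search.fit(X_train, y_train)

# Retrieve the best parameters; best_estimator_ is refit on the full training set
best_params = grid_search.best_params_
best_model = grid_search.best_estimator_

print("Best Parameters:", best_params)
print("Mean CV Accuracy:", grid_search.best_score_)
# Evaluate on held-out data: accuracy on the training images would be optimistically biased
print("Test Accuracy:", accuracy_score(y_test, best_model.predict(X_test)))
```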

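This code demonstrates how grid search systematically evaluates hyperparameter combinations using cross-validation. It identifies the best-scoring configuration within the grid; to estimate how that configuration will perform in your machine vision system, evaluate it on images that were not used during the search, because accuracy measured on the training data is optimistically biased.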

Hyperparameter Tuning Strategies

Effective hyperparameter tuning requires a structured approach. Grid search excels in this area by ensuring exhaustive exploration of parameter combinations. Here are some strategies reported in industry case studies:

- Learning Rate Adjustment: Fine-tuning the learning rate can significantly improve training efficiency and model performance. For example, reducing the learning rate by 0.01 improved accuracy by 16.7% in a CNN-based image classification project.

- Dropout Rate Refinement: Adjusting dropout rates enhances generalization and reduces overfitting. In one case study, refining dropout rates led to a model with 94.3% accuracy.

- Convolutional Layer Configuration: Experimenting with layer depths and kernel sizes can reveal non-intuitive configurations that boost performance.

Other advanced methods, such as random search and Bayesian optimization, offer alternatives to grid search. Random search is computationally efficient in high-dimensional spaces, while Bayesian optimization uses probabilistic models to guide the search, reducing the number of evaluations. Tools like Optuna combine these techniques for intelligent hyperparameter tuning.
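For comparison, scikit-learn's RandomizedSearchCV implements random search: it samples a fixed number of candidates from distributions instead of enumerating a grid. The sketch below assumes an SVC on the digits dataset and log-uniform ranges from SciPy (scipy.stats.loguniform, available in recent SciPy releases); Optuna and Bayesian optimization are not shown.

```python
from scipy.stats import loguniform
from sklearn.datasets import load_digits
from sklearn.model_selection import RandomizedSearchCV
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)

# Continuous log-uniform ranges replace a fixed list of grid values
param_distributions = {
    "C": loguniform(1e-2, 1e2),
    "gamma": loguniform(1e-4, 1e-1),
}

# n_iter caps the budget at 10 sampled candidates, however large the space is
search = RandomizedSearchCV(SVC(), param_distributions, n_iter=10, cv=3, random_state=0)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

The budget stays at 10 candidates times 3 folds even if more hyperparameters are added, whereas a grid's cost multiplies with each new parameter.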

Evaluation Methods for Model Performance

Evaluating model performance is crucial to ensure the effectiveness of grid search. Several methods can make this evaluation more efficient or more statistically rigorous:

| Method | Description |
|---|---|
| Racing Methods | Evaluate models on an initial subset of resamples, discarding poorly performing parameter sets early. |
| ANOVA | Conduct statistical significance testing for different model configurations, for example with tune_race_anova() from the R finetune package. |
| Futility Analysis | Run interim analyses to discard poor parameter settings, similar to clinical trial methods. |
| Bradley-Terry Model | Measure the winning ability of parameter settings by treating their comparisons on resamples as a competition. |
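Python users can get a related early-elimination effect from scikit-learn's HalvingGridSearchCV, which uses successive halving rather than the ANOVA-based racing of tune_race_anova(): every candidate starts on a small sample of the data, and only the best fraction advances to rounds with more samples. The estimator is marked experimental and must be enabled with a special import; the SVC grid below is an illustrative assumption.

```python
from sklearn.datasets import load_digits
from sklearn.experimental import enable_halving_search_cv  # noqa: F401 (enables HalvingGridSearchCV)
from sklearn.model_selection import HalvingGridSearchCV
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)

param_grid = {"C": [0.1, 1, 10, 100], "gamma": [1e-4, 1e-3, 1e-2]}

# Each round keeps roughly the top 1/factor candidates and gives them factor times more samples
search = HalvingGridSearchCV(SVC(), param_grid, factor=3, cv=5, random_state=0)
search.fit(X, y)

print("candidates per round:", search.n_candidates_)
print("samples per round:", search.n_resources_)
print("best:", search.best_params_)
```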

These methods help your model achieve strong performance while reducing the risk of overfitting. Cross-validation remains a cornerstone of evaluation, providing insights into how well your model generalizes across different data subsets. By combining these techniques, you can refine your machine vision system for real-world applications, such as low-visibility scenarios where precision is critical for safety.

Grid search plays a pivotal role in achieving precision in machine vision systems. It systematically explores hyperparameter combinations and finds the best configuration within the grid, which enhances accuracy and reduces overfitting. Statistical significance tests during tuning reduce the risk of selecting a configuration whose advantage is only noise, which improves generalization and helps maintain a balance between bias and variance.

Best Practices for Grid Search

- Use small search spaces for computational efficiency.

- Incorporate cross-validation to ensure robust model evaluation.

- Apply significance tests to confirm performance improvements.
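A simple significance check compares two candidate configurations on identical cross-validation splits with a paired t-test. The sketch below assumes two hypothetical SVC settings on the digits dataset and SciPy's ttest_rel. Fold scores share training data and are not independent, so this test tends to be overconfident; treat the p-value as a rough guide, or use a corrected resampled t-test.

```python
from scipy.stats import ttest_rel
from sklearn.datasets import load_digits
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)

# A seeded splitter yields identical folds for both configurations (a paired design)
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=2, random_state=0)

scores_a = cross_val_score(SVC(C=0.1, gamma=0.001), X, y, cv=cv)
scores_b = cross_val_score(SVC(C=10, gamma=0.001), X, y, cv=cv)

# Paired t-test on the per-fold accuracy differences
t_stat, p_value = ttest_rel(scores_b, scores_a)
print(f"mean diff: {(scores_b - scores_a).mean():.4f}, p = {p_value:.3f}")
```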

Tips to Avoid Common Pitfalls

| Aspect | Grid Search | Random Search |
|---|---|---|
| Simplicity | Easy to understand and implement. | More complex due to randomness. |
| Computational Cost | High for large search spaces. | Generally lower, especially in large spaces. |
| Exploration | Exhaustive; guarantees every combination in the grid is tested. | Randomly samples, may miss some combinations. |
| Best Use Case | Small search spaces. | Large search spaces with few critical hyperparameters. |

Focus on tuning critical parameters like learning rate, tree depth, and dropout rates. Balance computational efficiency with precision to optimize your machine vision system effectively.

FAQ

What is the difference between grid search and random search?

Grid search evaluates all combinations in a parameter grid. Random search samples combinations randomly, making it faster for large spaces.

How does cross-validation improve model performance?

Cross-validation tests your model on multiple data subsets. This gives a more reliable estimate of performance than a single split, reduces the risk of overfitting the hyperparameters, and leads to better generalization to unseen data.

Can grid search be used for automated machine learning?

Yes, grid search integrates well with automated machine learning. It systematically tunes hyperparameters to achieve optimal performance in machine learning models.
