Fine-tuning turns pre-trained machine vision models into tools for specific tasks. By adjusting an existing model, you reuse what it has already learned to improve accuracy and adapt it to your requirements, which is especially valuable when labeled data is limited. Reported results suggest that fine-tuning can cut training time by up to 90% and improve task performance by 10-20%. Whether you are detecting fruit with YOLOv8n or diagnosing skin cancer with a CNN, fine-tuning saves resources and produces solutions tailored to complex vision problems.

Key Takeaways

- Fine-tuning adapts pre-trained models to perform better on specific tasks.

- It needs less data and time than training from scratch.

- Picking the right pre-trained model is very important.

- The pre-trained model should match the task you want to solve.

- Evaluating results with metrics like accuracy confirms that the model works well.

- Fine-tuning helps create custom solutions for many industries.

- It improves work in areas like healthcare and manufacturing.

Understanding Fine-Tuning in Machine Vision

What Is Fine-Tuning?

Fine-tuning is a process where you adapt a pre-trained model to perform a specific task. Instead of training a model from scratch, you start with one that has already learned general features from a large dataset. For example, a pre-trained model trained on millions of images can recognize basic patterns like edges, shapes, and textures. You then refine this model using a smaller dataset tailored to your specific task, such as identifying rare plant species or detecting defects in manufacturing.

This approach preserves the general capabilities of the pre-trained model while specializing it for your needs, which is especially useful when data or compute is limited. A fine-tuned model can also outperform the original model on the target task. The table below summarizes the key aspects of fine-tuning:

| Aspect | Description |
|---|---|
| Definition | Fine-tuning is the process of adapting a pretrained model to a specific task using a smaller dataset. |
| Purpose | To maintain original capabilities while specializing the model for targeted use cases. |
| Benefits | Enables efficient model creation, especially when resources are limited or data is scarce. |
| Performance | Fine-tuned models can outperform original models on specific tasks. |
| Example | Training a language model on specific company data to improve customer support responses. |
| Starting Point | Begins with a model trained on a large, diverse dataset, learning a wide range of features. |

By leveraging fine-tuning, you can save time, reduce costs, and achieve higher accuracy for your specific vision tasks.

Why Fine-Tuning Is Essential for Vision Tasks

Fine-tuning plays a critical role in enhancing the performance of machine vision systems. It allows you to adapt models to specific tasks, ensuring they deliver accurate results. For instance, a general-purpose image classification model may struggle with niche tasks like identifying rare diseases in medical imaging. Fine-tuning helps bridge this gap by tailoring the model to your unique requirements.

Empirical studies highlight the importance of fine-tuning for vision tasks. One study on Parameter Efficient Fine-Tuning (PEFT) found that increasing the number of fine-tunable parameters improves performance, especially when tasks differ from the original pre-training. Another study revealed that fine-tuning is particularly effective when the dataset size is small, as it allows the model to focus on task-specific features without requiring extensive data.

| Study Focus | Findings | Consistency with Pre-training |
|---|---|---|
| Parameter Efficient Fine-Tuning (PEFT) | Performance is influenced by data size and fine-tunable parameters | Consistent tasks show different performance trends compared to inconsistent tasks |
| Downstream Tasks | Increasing fine-tunable parameters benefits performance if inconsistent | Data size has no effect if tasks are consistent with pre-training |

Fine-tuning ensures that your model not only learns from the data but also adapts to the nuances of your specific task. This makes it an indispensable technique in machine vision.

Fine-Tuning vs. Training from Scratch

When building a machine vision model, you have two main options: fine-tuning a pre-trained model or training a new model from scratch. Each approach has its advantages and limitations, but fine-tuning often stands out as the more practical choice for most applications.

Fine-tuning requires significantly less data compared to training from scratch. While training a model from scratch might need hundreds of thousands of labeled examples, fine-tuning can work with just a few hundred or thousand examples. This makes it ideal for tasks where data is scarce or expensive to collect. Additionally, fine-tuning is faster and more cost-effective. You can achieve results in hours or days, whereas training from scratch might take weeks or even months.

The table below compares the two approaches:

| Aspect | Fine-Tuning | Training from Scratch |
|---|---|---|
| Data Requirements | Less data required (100s to 1000s of examples) | Large datasets required (100,000s+ examples) |
| Implementation Time | Rapid (hours to days) | Slow (weeks to months) |
| Compute Required | Low | High |
| Model Customization | Constrained architecture | Full customization |
| Performance Potential | Lower ceiling | Higher ceiling |
| Problem Similarity | Excels on similar domains | Better on dissimilar domains |

Fine-tuning is particularly effective when your task is similar to the one the pre-trained model was originally trained on. For example, if you’re working on image classification tasks, a pre-trained model like ResNet or EfficientNet can provide a strong starting point. On the other hand, training from scratch might be necessary for entirely new tasks or when you need complete control over the model architecture.

By choosing fine-tuning, you can leverage the power of transfer learning to achieve high accuracy and performance without the need for extensive resources.

How Fine-Tuning Works in Machine Vision Systems

Choosing a Pre-Trained Model

Selecting the right pre-trained model is the first step in fine-tuning. A pre-trained model serves as a foundation, offering a head start by leveraging knowledge from large datasets. For example, models like ResNet, EfficientNet, and YOLO are widely used in computer vision tasks such as image classification, object detection, and image segmentation. These models already understand general features like edges, textures, and shapes, which you can adapt to your specific task.

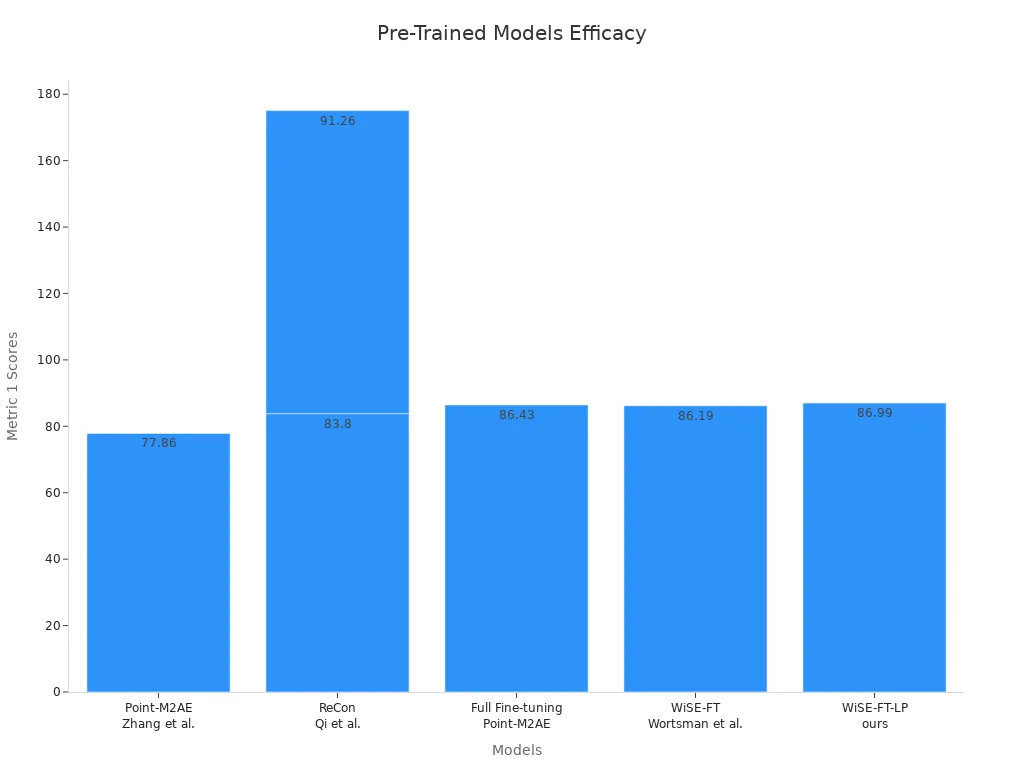

When choosing a pre-trained model, consider how similar your task is to the data the model was originally trained on. For medical imaging, a model pre-trained on X-ray datasets will likely perform better than one trained on natural images. The table below comes from a study of 3D point-cloud models (the source does not name the metric columns) and compares pre-trained baselines with several fine-tuning strategies:

| Model | Metric 1 | Metric 2 | Metric 3 | Metric 4 |
|---|---|---|---|---|
| Point-M2AE (Zhang et al., 2022a) | 77.86 | 86.06 | 84.85 | 92.9 |
| ReCon (Qi et al., 2023) | 83.80 | 90.71 | 90.62 | 92.34 |
| Full Fine-tuning: Point-M2AE | 86.43 | N/A | N/A | 89.59 |
| Full Fine-tuning: ReCon | 91.26 | N/A | N/A | 91.82 |
| WiSE-FT (Wortsman et al., 2022) | 86.19 | N/A | N/A | 89.71 |
| WiSE-FT-LP (proposed in the source study) | 86.99 | N/A | N/A | 90.68 |

In the first metric column, full fine-tuning lifts Point-M2AE from 77.86 to 86.43 and ReCon from 83.80 to 91.26, although the last column drops slightly (92.9 to 89.59 and 92.34 to 91.82). A strong pre-trained starting point helps, but the choice of fine-tuning strategy also affects which metrics improve.
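WiSE-FT, listed in the table, builds a weight-space ensemble. It linearly interpolates between the pre-trained weights theta_0 and the fine-tuned weights theta_1 as (1 - alpha) * theta_0 + alpha * theta_1. The sketch below works on state-dict-like mappings and assumes both models share the same architecture. Toy NumPy arrays stand in for real tensors, and the same function should also work on PyTorch state dicts whose entries are floating-point tensors.

```python
import numpy as np


def wise_ft(pretrained: dict, finetuned: dict, alpha: float) -> dict:
    """Weight-space ensemble: (1 - alpha) * pretrained + alpha * finetuned."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if pretrained.keys() != finetuned.keys():
        raise ValueError("both models must share the same parameter names")
    # alpha = 0 recovers the pre-trained model, alpha = 1 the fine-tuned one.
    return {name: (1.0 - alpha) * pretrained[name] + alpha * finetuned[name]
            for name in pretrained}


# Toy "state dicts" with one weight array each (illustrative values only).
theta_0 = {"w": np.array([0.0, 2.0])}
theta_1 = {"w": np.array([1.0, 4.0])}
print(wise_ft(theta_0, theta_1, alpha=0.5)["w"])  # → [0.5 3. ]
```

Sweeping alpha on a validation set is one way to pick the trade-off between the two models.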

Freezing and Unfreezing Layers

Freezing and unfreezing layers is a critical technique in fine-tuning. It allows you to control which parts of the model get updated during training. Early layers in a model typically capture general features like edges and textures. These layers are often well-trained and do not require further adjustments. Later layers, however, focus on task-specific features and need more training to adapt to your dataset.

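To freeze layers, set their requires_grad attribute to False. This prevents them from being updated during backpropagation. For example, torchvision's MobileNetV2 (assumed here) stores its convolutional layers in model.features:

```python
from torchvision import models
model = models.mobilenet_v2(weights="DEFAULT")  # downloads ImageNet weights
for param in model.features.parameters():
    param.requires_grad = False  # frozen: excluded from gradient updates
```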

Once the new top layers are trained, you can unfreeze some earlier layers and continue training, working back toward the full model. This gradual approach reduces the risk of overfitting and uses computational resources efficiently.

Key points about freezing and unfreezing layers include:

- Freezing layers reduces the number of trainable parameters, simplifying the model.

- Early layers are frozen to retain general features, while later layers are fine-tuned for specific tasks.

- Gradually unfreezing layers allows the model to adapt without losing its pre-trained knowledge.

This strategy is especially useful for tasks like object detection or segmentation, where the model needs to learn domain-specific features while retaining its foundational understanding.
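One way to implement gradual unfreezing in PyTorch is sketched below. It assumes the network can be treated as an nn.Sequential of stages followed by a head. The helper names unfreeze_last and count_trainable are hypothetical, and a small stack of linear layers stands in for a real backbone so the example runs without downloading weights.

```python
import torch.nn as nn


def count_trainable(model: nn.Module) -> int:
    """Number of parameters that will receive gradient updates."""
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


def unfreeze_last(blocks: nn.Sequential, n: int) -> None:
    """Freeze every block, then re-enable gradients for the last `n` blocks."""
    for p in blocks.parameters():
        p.requires_grad = False
    if n > 0:  # guard: list[-0:] would select every block
        for block in list(blocks)[-n:]:
            for p in block.parameters():
                p.requires_grad = True


# Toy stand-in: three "backbone stages" plus a two-class head.
model = nn.Sequential(nn.Linear(8, 8), nn.Linear(8, 8), nn.Linear(8, 8), nn.Linear(8, 2))
for stage, n in enumerate([1, 2, 4]):  # head only, then head + last stage, then all
    unfreeze_last(model, n)
    print(f"stage {stage}: {count_trainable(model)} trainable parameters")
# stage 0: 18 trainable parameters
# stage 1: 90 trainable parameters
# stage 2: 234 trainable parameters
```

When earlier layers are unfrozen, a lower learning rate is commonly used so the pre-trained features change gradually.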

Adjusting Model Parameters

Fine-tuning also involves adjusting model parameters to optimize performance. Start with sensible default parameters provided by frameworks like TensorFlow or PyTorch. These defaults offer a strong baseline for most tasks. Next, test different configurations using small subsets of your data. This approach saves time and helps you identify the best settings quickly.

Here are some guidelines for parameter tuning:

- Employ Hyperband and Successive Halving: These methods allocate resources dynamically, focusing on promising trials.

- Leverage Bayesian Optimization Tools: Use probabilistic models to guide your search for optimal parameters.

- Balance Manual and Automated Tuning: Combine automated tools with your intuition for better results.

For large-scale tuning, consider parallelizing searches across multiple cores or using cloud services. This strategy speeds up the process and ensures efficient resource management. By fine-tuning parameters, you can enhance accuracy and tailor the model to your specific needs.
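Successive halving, which Hyperband builds on, can be sketched in plain Python. Each round evaluates every surviving configuration on a small budget, keeps the best 1/eta, and gives the survivors a larger budget. The evaluate callable is a hypothetical stand-in for "train for `budget` epochs and return validation accuracy", and the toy scoring rule is invented purely to make the example runnable.

```python
import math


def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Keep the top 1/eta of configurations each round, multiplying the budget by eta."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Rank survivors by their score at the current budget (higher is better).
        ranked = sorted(survivors, key=lambda cfg: evaluate(cfg, budget), reverse=True)
        survivors = ranked[: max(1, len(ranked) // eta)]
        budget *= eta  # promising configs get more training in the next round
    return survivors[0]


def toy_evaluate(cfg, budget):
    # Hypothetical objective: "accuracy" peaks at lr = 1e-3 and grows with budget.
    return 0.9 - 0.1 * abs(math.log10(cfg["lr"]) + 3) + 0.01 * budget


configs = [{"lr": lr} for lr in (1e-1, 1e-2, 1e-3, 1e-4, 1e-5, 1e-6)]
best = successive_halving(configs, toy_evaluate)
print(best)  # → {'lr': 0.001}
```

Hyperband runs several such brackets, each trading off the number of starting configurations against the initial budget.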

Validating and Evaluating Results

After fine-tuning your machine vision model, validating and evaluating its performance ensures it meets your task’s requirements. This step helps you measure how well the model performs and identify areas for improvement. By using the right metrics and methods, you can gain valuable insights into your model’s strengths and weaknesses.

Key Metrics for Evaluation

To evaluate your fine-tuned model, you can rely on several benchmark metrics. These metrics provide a clear picture of your model’s performance across different tasks:

- Accuracy: Measures the percentage of correct predictions. It is a straightforward way to assess overall performance.

- Precision and Recall: Precision is the percentage of true positives among all positive predictions, while recall is the percentage of true positives among all actual positives. These metrics matter most when classes are imbalanced or when false positives and false negatives have different costs.

- F1-score: Combines precision and recall into a single value by calculating their harmonic mean. It is especially useful when you need a balance between the two.

- Intersection over Union (IoU): Used in object detection and segmentation, this metric divides the area of overlap between a predicted and a ground-truth bounding box (or mask) by the area of their union.

- Mean Average Precision (mAP): Averages the per-class average precision (AP); COCO-style mAP also averages over IoU thresholds from 0.50 to 0.95. It is a popular choice for object detection tasks.

- Mean Intersection over Union (mIoU): Calculates the average IoU across multiple classes, often used in semantic segmentation.

These metrics allow you to quantify your model’s performance and compare it against benchmarks or other models.
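The definitions above translate directly into code. The sketch below computes precision, recall, and F1 from raw counts, plus IoU for two axis-aligned boxes. The (x1, y1, x2, y2) box format is an assumption; some tools use center, width, and height instead. mAP and mIoU build on these quantities by averaging across classes (and, for mAP, IoU thresholds).

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall, and their harmonic mean from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def box_iou(a, b) -> float:
    """IoU of two boxes given as (x1, y1, x2, y2) with x2 > x1 and y2 > y1."""
    # Corners of the intersection rectangle (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


print(precision_recall_f1(tp=80, fp=20, fn=40))  # precision 0.8, recall ~0.667
print(box_iou((0, 0, 10, 10), (5, 5, 15, 15)))    # 25 / 175, about 0.143
```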

Advanced Methods for Validation

To validate your fine-tuned model effectively, follow these steps:

- Load your fine-tuned model along with a held-out validation dataset.

- Assess its performance using the metrics mentioned above.

- Use visualization tools like confusion matrices or ROC curves to interpret the results.

- Identify areas where the model underperforms, such as specific classes or conditions.

- Make adjustments to the model or training process and re-validate.

You can also implement observability tools to monitor key metrics like precision, recall, and detection accuracy. Using separate datasets for training and validation prevents overfitting and ensures reliable results. Stress-testing your model with datasets that include variations in lighting, angles, or other conditions can help you evaluate its robustness.
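A lighting stress test can be sketched with NumPy: scale image brightness by several factors and measure accuracy at each level. Here predict is a hypothetical callable that maps a batch of images to class labels. The toy thresholding predictor in the usage below exists only to make the example runnable, and real tests would add variations such as angle or blur.

```python
import numpy as np


def brightness_stress_test(predict, images, labels, factors=(0.5, 1.0, 1.5)):
    """Accuracy of `predict` after scaling every image by each brightness factor.

    images: float array in [0, 1] with shape (N, H, W, C); labels: shape (N,).
    """
    results = {}
    for factor in factors:
        # Simulate darker (<1) or brighter (>1) lighting, keeping pixels in range.
        perturbed = np.clip(images * factor, 0.0, 1.0)
        preds = predict(perturbed)
        results[factor] = float(np.mean(preds == labels))
    return results


# Toy data: one dark image (label 0) and one bright image (label 1).
images = np.stack([np.full((4, 4, 3), 0.4), np.full((4, 4, 3), 0.8)])
labels = np.array([0, 1])
toy_predict = lambda batch: (batch.mean(axis=(1, 2, 3)) > 0.5).astype(int)
results = brightness_stress_test(toy_predict, images, labels)
print(results)  # → {0.5: 0.5, 1.0: 1.0, 1.5: 0.5}
```

A model that is accurate only at the original brightness, like this toy predictor, is a signal to add lighting variation to training or augmentation.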

Tools for Visualization and Analysis

Visualization tools play a vital role in understanding your model’s performance. For example, confusion matrices show how well your model distinguishes between classes. ROC curves help you analyze the trade-off between true positive and false positive rates. These tools make it easier to pinpoint weaknesses and guide your next steps in fine-tuning.
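A confusion matrix is simple to compute before plotting it. The NumPy sketch below uses rows for true classes and columns for predicted classes; the labels are toy values for illustration. Libraries such as scikit-learn also provide ready-made functions (sklearn.metrics.confusion_matrix and sklearn.metrics.roc_curve).

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes: int) -> np.ndarray:
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    # np.add.at increments correctly even when (true, pred) pairs repeat.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
print(cm)
# Per-class recall: correct predictions on the diagonal over each row's total.
per_class_recall = cm.diagonal() / cm.sum(axis=1)
```

In this toy example class 1 is always recognized, while one class-0 image is predicted as class 1 and one class-2 image as class 0, giving per-class recall of 0.5, 1.0, and 0.5.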

By combining metrics, advanced methods, and visualization tools, you can ensure your fine-tuned model performs optimally for your specific task. This process not only validates your model but also provides actionable insights for further improvement.

Benefits of Fine-Tuning Machine Vision Models

Enhanced Accuracy and Performance

Fine-tuning significantly improves the accuracy of machine vision models by adapting them to specific tasks. When you fine-tune a pre-trained model, it retains its foundational knowledge while learning task-specific features. This dual advantage enhances precision and recall, which are critical for many applications.

- High precision means few false positives, which is vital for tasks like email spam detection or fraud prevention.

- Recall measures the ability to identify all positive cases, crucial in areas like disease screening.

Reported figures suggest that fine-tuning can reduce training time by up to 90% and improve task-specific performance by 10-20%. In high-stakes scenarios like medical imaging or fraud detection, gains of this size make a significant difference. Whether you're working on image classification or object detection, fine-tuning helps your model deliver reliable results.

Tip: Use fine-tuning techniques to maximize accuracy without requiring extensive datasets or computational resources.

Faster Training and Lower Costs

Fine-tuning accelerates the training process and reduces costs, making it an efficient choice for machine vision applications. Instead of training a model from scratch, you start with a pre-trained model, which already understands general features. This approach saves time and resources.

- Fine-tuning achieves good performance with less data and fewer processing resources.

- In one reported document-processing workflow (mortgage application automation), fine-tuning sped up approval times by 20x and cut costs by 80% per document.

By leveraging pre-trained models, you can focus on refining task-specific features, reducing the need for extensive computational power. This efficiency makes fine-tuning ideal for industries where time and cost savings are critical.

Tailored Solutions for Specific Domains

Fine-tuning allows you to create models tailored to specific domains, unlocking unique solutions for specialized applications. By adapting pre-trained models with domain-specific data, you can address challenges in fields like agriculture, manufacturing, and law.

- In agriculture, companies like Bayer use fine-tuned AI models to enhance crop protection and agronomy.

- In manufacturing, researchers refine models to improve code generation and query understanding.

- In legal fields, firms customize AI tools to analyze large datasets, gaining a competitive edge in healthcare private equity deals.

These tailored solutions demonstrate the versatility of fine-tuning. Whether you’re optimizing image classification for niche industries or enhancing decision-making in complex workflows, fine-tuning ensures your model meets the demands of your domain.

Challenges in Fine-Tuning Machine Vision Systems

Overfitting Risks

Overfitting is a common challenge when fine-tuning machine vision models. It occurs when your model learns patterns specific to the training data but fails to generalize to new, unseen data. This issue becomes more pronounced when working with small datasets. For example, studies show that fine-tuning pre-trained models on limited data can lead to overfitting, reducing their performance on out-of-distribution tasks. Larger neural networks, while powerful, are particularly vulnerable to this problem.

Fine-tuning on generated data carries its own risks. One study found that fine-tuning Pythia language models on synthetic data raised the likelihood of extracting sensitive information by 20%. Findings like this underline the need for careful validation and testing to confirm that your model performs well across diverse scenarios. To mitigate overfitting, use techniques like data augmentation, dropout layers, and early stopping during training.
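Early stopping can be sketched as a small helper that watches validation loss. The class name EarlyStopping and its patience and min_delta parameters are illustrative choices, not a specific library's API. In a real training loop you would also save a checkpoint whenever the best loss improves.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta  # minimum decrease that counts as improvement
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss  # improvement: remember it and reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate([0.9, 0.7, 0.65, 0.66, 0.67, 0.68, 0.5]):
    if stopper.step(loss):
        print(f"stopping at epoch {epoch}, best loss {stopper.best}")
        # → stopping at epoch 5, best loss 0.65
        break
```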

Computational Limitations

Fine-tuning machine vision models often demands significant computational resources. Training deep learning models, especially large ones, can take considerable time and hardware power. Benchmarks like MLPerf Training v4.0 emphasize the need for efficient methods, such as LoRA fine-tuning, which optimizes resource usage while maintaining high performance. However, even with these advancements, hardware limitations can restrict your ability to fine-tune models effectively.

For instance, fine-tuning large models such as LLMs requires high-end GPUs or TPUs, which may not be accessible to everyone. This limitation can slow down your workflow and increase costs. To address it, you can explore cloud-based solutions or lightweight fine-tuning techniques that reduce computational demands with little loss of accuracy. The table below gives a qualitative comparison of a fully fine-tuned small language model (SLM) and a LoRA fine-tuned large language model (LLM):

| Method | Accuracy (ACC) | F1 Score | Matthews Correlation (MCC) |
|---|---|---|---|
| Full Fine-tuned SLM | High | High | High |
| LoRA Fine-tuned LLM | Slightly Higher | Higher | Higher |
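LoRA, mentioned above, freezes a pre-trained weight matrix W and learns a low-rank update. The layer then computes W x + (alpha / r) * B A x, with B initialized to zero so training starts from the original model (the formulation in Hu et al., 2021). The sketch below wraps a single nn.Linear. The class name LoRALinear and the defaults r=4 and alpha=8 are illustrative assumptions, and libraries such as Hugging Face PEFT provide maintained implementations. In vision transformers, the attention projections are a common place to apply it.

```python
import torch
import torch.nn as nn


class LoRALinear(nn.Module):
    """Frozen linear layer plus a trainable low-rank update: W x + (alpha / r) * B A x."""

    def __init__(self, base: nn.Linear, r: int = 4, alpha: float = 8.0):
        super().__init__()
        self.base = base
        for p in self.base.parameters():
            p.requires_grad = False  # the pre-trained weights stay fixed
        # A projects down to rank r; B projects back up. B starts at zero,
        # so the wrapped layer initially behaves exactly like the base layer.
        self.lora_a = nn.Parameter(torch.randn(r, base.in_features) * 0.01)
        self.lora_b = nn.Parameter(torch.zeros(base.out_features, r))
        self.scale = alpha / r

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.base(x) + self.scale * (x @ self.lora_a.T @ self.lora_b.T)


layer = LoRALinear(nn.Linear(512, 512), r=4)
trainable = sum(p.numel() for p in layer.parameters() if p.requires_grad)
print(trainable)  # → 4096
```

Only the two small matrices are trained: for this 512 x 512 layer that is 4,096 parameters, compared with 262,656 in the frozen base layer.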

Selecting the Right Pre-Trained Model

Choosing the right pre-trained model is crucial for successful fine-tuning. The model's architecture, pre-training data, and performance metrics should align with your specific task. For example, if your task involves medical imaging, selecting a model pre-trained on similar data will usually yield better results.

You should also consider the size of your dataset and available computational resources. A smaller dataset may benefit from a lightweight model, while a larger dataset might require a more complex architecture. Additionally, the desired level of performance plays a role. Fine-tuning pre-trained models tailored to your domain can improve efficiency, accuracy, and time to market. By carefully evaluating these factors, you can minimize risks and maximize the benefits of fine-tuning.

Real-World Applications of Fine-Tuning in Vision

Image Classification in Niche Industries

Fine-tuning has revolutionized image classification in specialized industries by enabling models to adapt to domain-specific requirements. You can use this technique to train models on smaller datasets while achieving high accuracy. For example, in legal tech, fine-tuning helps models understand complex terminology unique to legal documents. In healthcare, it ensures models recognize visual patterns in medical images, such as identifying abnormalities in X-rays or MRIs.

This approach also offers significant cost savings and improved performance. Startups and small businesses benefit from fine-tuning because it reduces the need for extensive data collection and computational resources. Whether you’re working in agriculture, manufacturing, or law, fine-tuning provides practical solutions for image classification challenges in niche industries.

Object Detection for Real-Time Use Cases

Fine-tuning enhances object detection models for real-time applications, making them faster and more accurate. Models like YOLOv8 excel in scenarios requiring quick inference speeds, such as autonomous vehicles or surveillance systems. By refining pre-trained models, you can achieve high precision and speed, even in resource-constrained environments.

For example, D-FINE uses Fine-grained Distribution Refinement (FDR), which iteratively refines probability distributions over bounding-box edges, to improve localization accuracy. This makes it well suited to tasks like monitoring traffic or detecting defects in manufacturing. The table below highlights the performance benchmarks of popular object detection models:

| Model | Dataset Size | Average Precision | Inference Speed | Comments |
|---|---|---|---|---|
| YOLOv8 | 1500, 2500, 6500 | High | Fast | Best overall performance |
| Faster R-CNN | 1500, 2500, 6500 | Competitive | Moderate | Strong on PlastOPol dataset |
| DETR | 1500, 2500, 6500 | Lower | Slow | Needs further development |

By fine-tuning models like YOLOv8, you can optimize object detection for real-time use cases, ensuring reliable performance across diverse scenarios.

Medical Imaging and Diagnostics

Fine-tuning has transformed medical imaging by improving diagnostic accuracy and efficiency. You can use this technique to train models on specialized datasets, enabling them to detect diseases with high precision. For instance, hyperparameter tuning and transfer learning have enhanced models for kidney condition detection, achieving precision and recall metrics close to 99%. This capability allows healthcare professionals to identify cysts and tumors accurately.

Research also shows that fine-tuning reduces reading time for radiologists and improves diagnostic sensitivity. Radiology residents experienced a 14% decrease in reading time, while radiologists saw a 12% reduction. Macro sensitivity improved to 0.935, highlighting the effectiveness of fine-tuned models in clinical settings. The table below summarizes performance improvements across various medical domains:

| Fine-Tuning Strategy | Performance Improvement | Medical Domain |
|---|---|---|
| Auto-RGN | Up to 11% | Various |
| LP-FT | Notable improvements in over 50% of cases | Various |
| Standard Techniques | Varies by architecture | Various |

By leveraging fine-tuning, you can enhance diagnostic workflows, reduce errors, and improve patient outcomes in medical imaging and diagnostics.

Autonomous Systems and Robotics

Fine-tuning plays a vital role in advancing autonomous systems and robotics. It allows these systems to adapt to specific tasks and environments, improving their reliability and efficiency. By refining pre-trained models, you can enhance the performance of robots in real-world applications, from industrial automation to scientific research.

In robotics, fine-tuning helps systems maintain high accuracy over time. For example, the AutoEval system demonstrated resilience to aging effects: its reset policy and success classifiers kept a consistent accuracy of 96% over two months of continuous operation. This highlights how fine-tuning can enhance the longevity and reliability of robotic systems.

Fine-tuning also optimizes autonomous systems for scientific research. The autonomous lab system (ANL) used fine-tuning techniques to improve biotechnological experiments. By combining modular devices with Bayesian optimization algorithms, the ANL enhanced the performance of a recombinant Escherichia coli strain. This led to better cell growth rates and maximum cell growth. These results show how fine-tuning can drive innovation in scientific fields.

Robotic manipulation policies also benefit from fine-tuning. Whether you are working on robotic arms for manufacturing or autonomous drones for delivery, fine-tuning helps them handle real-world challenges reliably.

By leveraging fine-tuning, you can unlock the full potential of autonomous systems and robotics. This approach not only improves accuracy but also ensures adaptability, making it a cornerstone of modern robotics.

Fine-tuning in machine vision systems offers a transformative approach to adapting models for specialized tasks. By leveraging pre-trained models, you can achieve enhanced accuracy, faster training times, and tailored solutions for niche applications. Metrics like precision, recall, and F1 score help quantify the impact of fine-tuning, while techniques such as data preprocessing and feature engineering optimize model performance.

- Benefits of Fine-Tuning:

  - Improves accuracy by focusing on task-specific features.

  - Reduces training costs and time, making it accessible for smaller datasets.

  - Enables customized outputs aligned with industry-specific needs.

The growing number of enterprises seeking tailored AI solutions underscores the value of experimenting with fine-tuning techniques. Advanced approaches such as Mixture of Experts (MoE) architectures can further improve reliability. Cases such as Air Canada's chatbot failure show the risks of deploying AI systems without adequate adaptation and validation.

By exploring pre-trained models and refining them for your domain, you can unlock their full potential and create solutions that meet your unique requirements. Fine-tuning empowers you to innovate while maintaining efficiency and precision.

FAQ

What is the difference between fine-tuning and transfer learning?

Transfer learning is the broader idea of reusing knowledge from a model trained on one task for another task. Fine-tuning is one way to do it: you update some or all of the pre-trained model's parameters on your data. Another common approach, feature extraction, keeps the pre-trained weights frozen and trains only a new output layer. It is faster but offers less customization.

How much data do I need for fine-tuning?

You need less data than you would to train from scratch. A few hundred to a few thousand labeled examples often suffice. The exact amount depends on your task and the complexity of the pre-trained model.

Can fine-tuning work with limited computational resources?

Yes, fine-tuning is resource-efficient. Techniques like freezing layers and lightweight fine-tuning methods reduce computational demands. Cloud-based services or smaller pre-trained models can also help if hardware is limited.

How do I avoid overfitting during fine-tuning?

Use data augmentation, dropout layers, and early stopping to prevent overfitting. Validate your model on diverse datasets to ensure it generalizes well. Gradually unfreeze layers to retain foundational knowledge while adapting to your task.

Which pre-trained model should I choose for my task?

Choose a model trained on data similar to your task. For medical imaging, select models pre-trained on healthcare datasets. For general vision tasks, models like ResNet or YOLO work well. Match the model’s architecture to your dataset size and computational capacity.
