An ensemble machine vision system uses several machine learning models together to solve vision problems. Imagine a group of doctors discussing a complex case; their combined opinions often lead to better decisions. In the same way, ensemble methods help AI systems become more reliable. Machine vision with these methods can spot objects and patterns more accurately. Reported gains vary by task: some studies find that random forests reduce misclassification errors by up to 30% compared to single trees, and that boosting can raise accuracy by 10–20% over a weak baseline. For beginners, ensemble methods are a practical way to make AI models more accurate and robust.

Key Takeaways

- Ensemble methods combine multiple machine learning models to improve accuracy and reliability in machine vision tasks.

- Using ensembles reduces errors, lowers overfitting risk, and makes systems more robust against noisy or complex data.

- Popular ensemble techniques include bagging (like random forests), boosting, stacking, and voting, each improving results in different ways.

- Beginners can start with simple ensembles using tools like Scikit-learn, TensorFlow, and OpenCV, following clear steps from data preparation to model tuning.

- While ensembles boost performance, they can increase complexity and cost; strategies like model distillation and careful tuning help manage these challenges.

Ensemble Methods Overview

What Are Ensemble Methods?

Ensemble methods combine several models to solve a problem in a machine learning or machine vision system. Each model, called a base learner, makes its own prediction. The system then combines these predictions to make a final decision. This approach helps the system become more accurate and reliable than using just one model.

In machine vision, ensemble methods play a key role. They help AI systems recognize objects, classify images, and detect patterns. These methods use different types of models or the same model with different settings. When the system combines their outputs, it reduces the chance of mistakes.

There are two main types of ensembles:

- Homogeneous ensembles use the same type of model, such as several decision trees in a random forest.

- Heterogeneous ensembles use different types of models, like combining a neural network, a support vector machine, and a decision tree.

Tip: Homogeneous ensembles often use the same algorithm but change the data or settings. Heterogeneous ensembles mix different algorithms to increase diversity.

Ensemble learning has become popular in machine learning and vision because it improves predictive performance. Researchers have shown that ensemble methods can boost accuracy and make systems more robust. For example, stacking, bagging, and boosting are common ensemble learning techniques. Each one uses a different way to combine models and improve results.

Why Use Ensemble Methods?

Ensemble methods offer a machine vision system many benefits. By combining multiple models, the system can:

- Increase accuracy by averaging predictions and reducing errors.

- Lower the risk of overfitting, which happens when a model learns noise instead of useful patterns.

- Improve robustness, making the system less sensitive to noisy data or outliers.

- Balance the bias-variance trade-off, leading to more stable predictions.

Recent studies highlight the advantages of ensemble learning in machine vision. For example:

- Ensemble methods improve accuracy by averaging multiple predictors, which reduces individual model errors.

- When the models' errors are uncorrelated, averaging lowers variance by a factor of about 1/n, where n is the number of models. Correlated models gain less.

- Ensembles show strong resilience to noisy data and outliers, which is important in image recognition tasks with changing lighting or occlusions.

- Model diversity within ensembles helps cancel out errors and improves predictive performance.
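
The variance claim in the list above can be made precise under a simplifying assumption. Suppose each of the n models has the same prediction variance σ² and every pair of models has the same error correlation ρ (both symbols are introduced here for illustration and do not come from the studies cited). Then the variance of the averaged prediction is roughly:

```latex
\operatorname{Var}\!\left(\frac{1}{n}\sum_{i=1}^{n} f_i(x)\right)
  = \rho\,\sigma^2 + \frac{1-\rho}{n}\,\sigma^2
```

With ρ = 0 this reduces to σ²/n, the 1/n factor. With ρ = 1 the models are identical and averaging gives no reduction, which is why model diversity matters.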

A study compared ensemble models to single models in machine vision tasks. The results showed that ensemble approaches, such as majority voting classifiers, improved prediction accuracy by about 6.9% and sensitivity by 14.5%. A separate comparison on ImageNet also weighed accuracy against computational cost, as the table below shows:

| Aspect | Ensemble Models | Single Models |

|---|---|---|

| Accuracy (ImageNet) | Ensembles of smaller models match or exceed accuracy of larger single models | Single large models achieve high accuracy but with higher computational cost |

| Computational Cost (FLOPS) | ~50% fewer FLOPS than comparable single large models | |

| Training Cost | Lower total TPU days (e.g., 96 TPU days for two B5 models vs. 160 TPU days for one B7) | |

| Inference Latency (TPUv3) | Up to 5.5x speedup with cascades compared to single models with similar accuracy | |

| Efficiency Regime | Ensembles outperform single models especially in large computation regimes (>5B FLOPS) | |

In this comparison, ensembles of smaller models improved accuracy while also reducing computational cost and latency. This makes them practical for real-world AI applications.

Researchers have also reported that stacking, a type of ensemble learning, can reach up to 100% accuracy on some datasets, although results that high are specific to the dataset tested. Bagging methods like random forests and boosting methods such as XGBoost and LightGBM also perform well, and stacking often matches or exceeds them. These findings support the use of ensemble methods to enhance predictive performance in machine vision.

Note: While ensemble methods increase accuracy and robustness, they can also make the system more complex and resource-intensive. Techniques like model distillation can help reduce this complexity for deployment.

Ensemble methods stand out as a powerful approach for machine vision systems. By combining the strengths of different models, these methods help machine learning systems achieve higher accuracy, better reliability, and improved predictive performance in vision tasks.

Types of Ensemble Learning

Ensemble learning techniques help machine vision systems become more accurate and reliable. These methods combine several models to solve complex problems. Each technique uses a different way to bring models together and improve results.

Bagging and Random Forests

Bagging, or bootstrap aggregating, creates many versions of a model by training each one on a different random sample of the data. The system then averages or votes on their predictions. This approach reduces errors and helps with large datasets.

- Bagging improves prediction accuracy for multiple regression models, especially when the dataset is large and has many features.

- It can lessen the impact of the ‘curse of dimensionality’ in large, high-dimensional datasets.

- Random forests use bagging with decision trees. They often rank high in accuracy for large datasets.

- An improved random forest model with bagging reached an average accuracy of 90.20%, higher than other models that scored between 84.41% and 88.33%. In vegetation classification, this model achieved user accuracy up to 0.97, mapping accuracy of 0.97, and a Kappa coefficient of 0.81.
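
To make the bagging mechanism concrete, here is a minimal sketch that builds the bootstrap samples and the majority vote by hand. It assumes scikit-learn's small 8x8 digits dataset as a stand-in for real images, and the choice of 25 trees is arbitrary. It is an illustration, not the improved random forest model from the study above.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Stand-in data (an assumption): 8x8 grayscale digit images, labels 0-9.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

rng = np.random.default_rng(0)
n_models = 25
all_preds = []
for _ in range(n_models):
    # Bootstrap: draw as many training rows as we have, with replacement.
    idx = rng.integers(0, len(X_train), size=len(X_train))
    tree = DecisionTreeClassifier(random_state=0).fit(X_train[idx], y_train[idx])
    all_preds.append(tree.predict(X_test))

all_preds = np.array(all_preds)  # shape: (n_models, n_test_images)

# Aggregate: majority vote per test image across all trees.
bagged_pred = np.array(
    [np.bincount(votes, minlength=10).argmax() for votes in all_preds.T]
)

single_acc = (
    DecisionTreeClassifier(random_state=0).fit(X_train, y_train).score(X_test, y_test)
)
bagged_acc = (bagged_pred == y_test).mean()
print(f"single tree:  {single_acc:.3f}")
print(f"bagged trees: {bagged_acc:.3f}")
```

In practice, `sklearn.ensemble.BaggingClassifier` or `RandomForestClassifier` does the same work in one call. A random forest also samples a random subset of features at each split, which makes the trees more diverse.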

Boosting

Boosting builds models one after another. Each new model tries to fix the mistakes of the previous ones. This method works well for image recognition tasks.

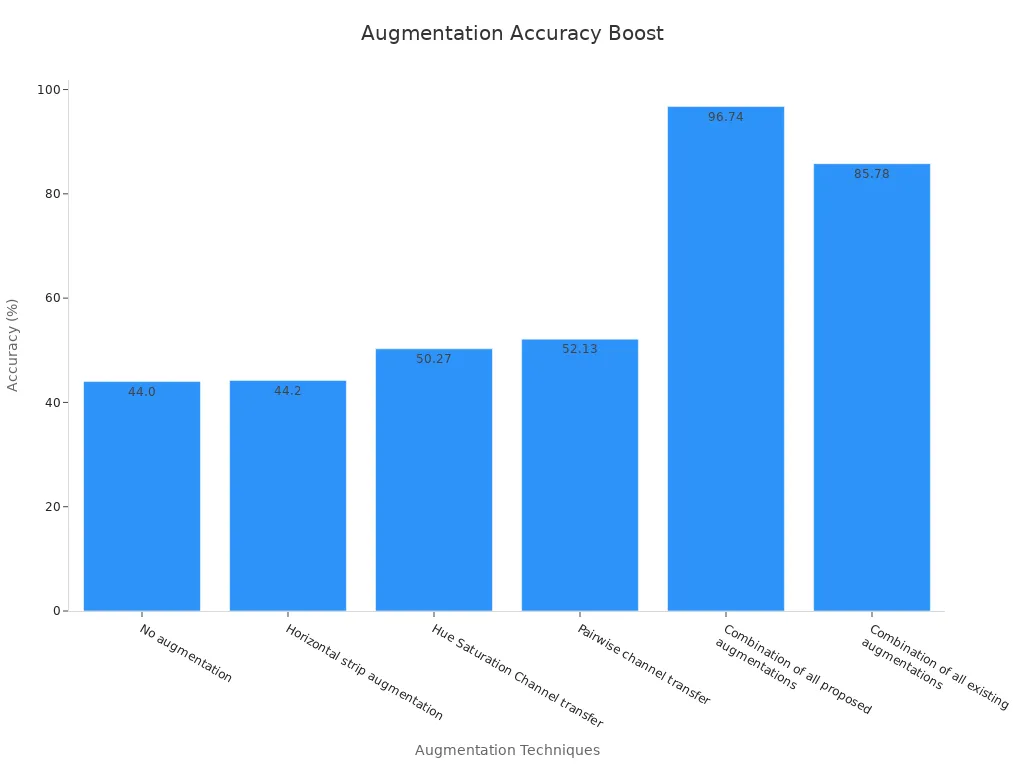

| Augmentation Technique | Accuracy Improvement Example |

|---|---|

| No augmentation | Baseline accuracy at 44.0% (EfficientNet_b0) |

| Horizontal strip augmentation | Slight increase to 44.20% |

| Hue Saturation Channel transfer | Increased accuracy to 50.27% |

| Pairwise channel transfer | Further increase to 52.13% |

| Combination of all proposed augmentations | Dramatic boost to 96.74% accuracy |

| Combination of all existing augmentations | Accuracy at 85.78% |

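In the example above, combining data augmentation techniques raised accuracy from 44.0% to 96.74%. The gain in this table comes from augmentation, which shows how much the training data matters when boosting or any other method is applied to machine vision tasks.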
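
The sequential nature of boosting can be seen by tracking accuracy after each round. The sketch below uses scikit-learn's `GradientBoostingClassifier`, which is one boosting variant among several, on the digits dataset as a stand-in for real images. The settings (50 rounds, depth-2 trees) are illustrative assumptions, not tuned values.

```python
from sklearn.datasets import load_digits
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Stand-in data (an assumption): 8x8 grayscale digit images.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Each round adds shallow trees that correct the errors left by earlier rounds.
booster = GradientBoostingClassifier(n_estimators=50, max_depth=2, random_state=0)
booster.fit(X_train, y_train)

# staged_predict yields the ensemble's predictions after every boosting round.
staged_acc = [accuracy_score(y_test, pred) for pred in booster.staged_predict(X_test)]
print(f"after 1 round:   {staged_acc[0]:.3f}")
print(f"after 50 rounds: {staged_acc[-1]:.3f}")
```

Accuracy usually climbs quickly in the first rounds and then flattens, which is why `n_estimators` and `learning_rate` are tuned together.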

Stacking

Stacking combines different types of models. A new model, called a meta-model, learns how to best mix the predictions from the base models. This technique works well when the base models are diverse.

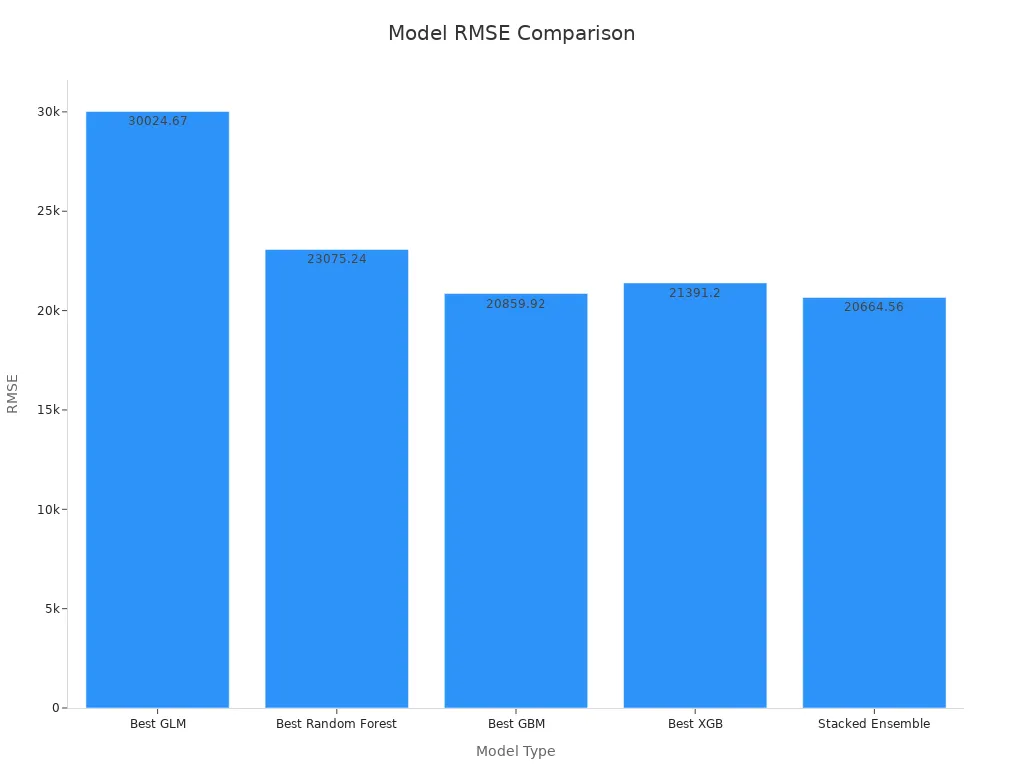

| Model Type | RMSE | MSE | MAE | RMSLE |

|---|---|---|---|---|

| Best GLM | 30024.67 | N/A | N/A | N/A |

| Best Random Forest | 23075.24 | N/A | N/A | N/A |

| Best GBM | 20859.92 | N/A | N/A | N/A |

| Best XGB | 21391.20 | N/A | N/A | N/A |

| Stacked Ensemble | 20664.56 | 469579433 | 13499.93 | 0.1061244 |

Stacking reduces bias and variance, leading to better accuracy. It also increases robustness by using different algorithms and settings.
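
A minimal stacking sketch with scikit-learn's `StackingClassifier` is shown below. The base models, the logistic regression meta-model, and the digits dataset are assumptions chosen for illustration. They are not the models behind the table above, which reports a regression task.

```python
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Stand-in data (an assumption): 8x8 grayscale digit images.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

stack = StackingClassifier(
    estimators=[
        ("forest", RandomForestClassifier(n_estimators=50, random_state=0)),
        ("knn", KNeighborsClassifier()),
    ],
    # The meta-model learns how to weigh the base models' predictions.
    final_estimator=LogisticRegression(max_iter=1000),
    # Base-model predictions for the meta-model come from 5-fold cross-validation,
    # so the meta-model never sees predictions made on a model's own training rows.
    cv=5,
)
stack.fit(X_train, y_train)
stack_acc = stack.score(X_test, y_test)
print(f"stacked ensemble accuracy: {stack_acc:.3f}")
```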

Voting and Averaging

Voting and averaging methods combine predictions from several models to reach a consensus. In voting, each model casts a "vote" for its prediction, and the most common answer wins. Averaging takes the mean of all predictions. These methods work well for noisy or complex data.

A study showed that voting and averaging, especially with dynamic time warped-space averaging, produced accurate consensus signals from noisy data. The results matched the gold standard more closely, showing improved accuracy and repeatability in machine vision tasks.

Tip: Weighted voting gives more importance to stronger models, while unweighted voting treats all models equally.
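
The difference between the two voting schemes can be shown in a few lines of NumPy. The predictions and weights below are made-up values for illustration. In a real system the weights might come from each model's validation accuracy.

```python
import numpy as np

# Hypothetical class predictions (0, 1, or 2) from three models on four images.
predictions = np.array([
    [0, 1, 2, 1],  # model A
    [0, 2, 2, 0],  # model B
    [1, 2, 1, 0],  # model C
])

def vote(predictions, weights=None, n_classes=3):
    """Return the winning class per image; by default every model gets one vote."""
    if weights is None:
        weights = np.ones(len(predictions))
    winners = []
    for image_votes in predictions.T:
        # Sum the weight behind each class, then pick the heaviest class.
        totals = np.bincount(image_votes, weights=weights, minlength=n_classes)
        winners.append(int(totals.argmax()))
    return winners

unweighted = vote(predictions)
weighted = vote(predictions, weights=[0.6, 0.2, 0.2])  # model A is trusted most
print(unweighted)  # [0, 2, 2, 0]
print(weighted)    # [0, 1, 2, 1]
```

On the second and fourth images, models B and C outvote model A in the unweighted case, while the weighted vote follows model A because its weight exceeds the other two combined.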

Ensemble learning differs from hybrid methods. Ensemble methods combine several models of the same or different types to improve performance. Hybrid methods mix different algorithms or approaches, such as combining machine learning with rule-based systems, to solve a problem.

Applications in Machine Vision Systems

Object Detection

Object detection helps machines find and label objects in images or videos. Many industries use this computer vision task to improve safety and quality. For example, factories use object detection to spot defects in products. In a real-world case, engineers used an ensemble method called Selective Box Fusion (SBF) to detect defects in Electron Beam Selective Melting (EBSM) components. SBF combines results from several detection models, using a voting strategy and weighted fusion of bounding boxes. This approach improved detection performance by 1% to 3% compared to single models. The system also kept fast runtime and low memory use.

| Ensemble Method | Average Runtime (ms/image) | Average RAM Usage (MiB) |

|---|---|---|

| WBF | 3.43 | 432 |

| SBF | 3.66 | 433 |

SBF and Weighted Boxes Fusion (WBF) both show strong predictive performance. These methods help increase accuracy and reliability in object detection, even when the data is noisy or complex. Many companies now use ensemble methods for face detection and segmentation tasks, making their vision systems more robust.
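
The core idea behind fusing bounding boxes can be sketched as follows: group boxes that overlap strongly, then average each group's coordinates using confidence scores as weights. This is a deliberately simplified illustration, not the published WBF or SBF algorithm. The function names, the 0.5 IoU threshold, and the sample boxes are all assumptions.

```python
import numpy as np

def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    x2, y2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)

def fuse_boxes(boxes, scores, iou_threshold=0.5):
    """Greedily cluster overlapping boxes, then take a score-weighted average."""
    order = np.argsort(scores)[::-1]  # highest confidence first
    clusters = []  # each cluster is a list of indices into `boxes`
    for i in order:
        for cluster in clusters:
            # Compare against the cluster's most confident box.
            if iou(boxes[cluster[0]], boxes[i]) >= iou_threshold:
                cluster.append(i)
                break
        else:
            clusters.append([i])
    fused = []
    for cluster in clusters:
        w = scores[cluster]
        box = (boxes[cluster] * w[:, None]).sum(axis=0) / w.sum()
        fused.append((box, w.mean()))
    return fused

# Hypothetical detections from two models for the same image.
boxes = np.array([
    [10, 10, 50, 50],      # model A, first defect
    [12, 12, 52, 52],      # model B, first defect
    [100, 100, 140, 140],  # model A, second defect
], dtype=float)
scores = np.array([0.9, 0.6, 0.8])

fused = fuse_boxes(boxes, scores)
for box, score in fused:
    print(np.round(box, 1), round(float(score), 2))
```

The two overlapping detections merge into one box pulled toward the more confident prediction, while the isolated detection passes through unchanged.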

Image Classification

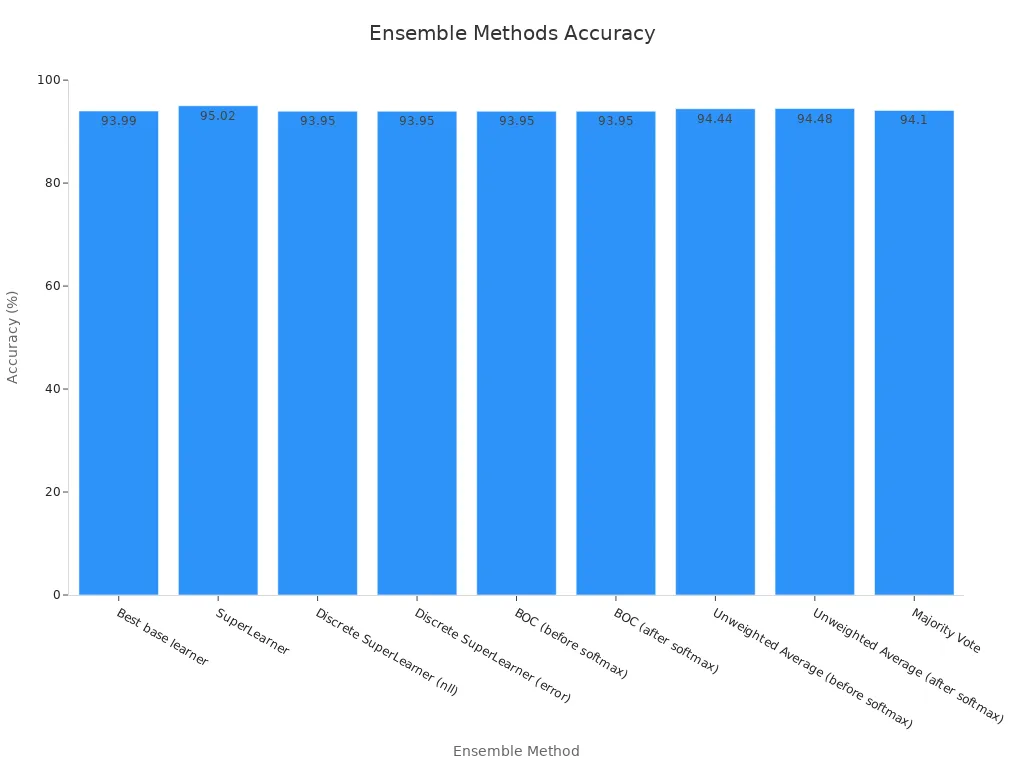

Image classification lets machines sort images into categories. This task is common in healthcare, security, and social media. Ensemble methods boost predictive accuracy by combining different models. In one study, researchers tested several ensemble methods on the CIFAR-10 dataset. The SuperLearner ensemble reached 95.02% accuracy, while the best single model had 93.99%. Other methods, like unweighted averaging and majority vote, also improved classification results.

| Ensemble Method | Accuracy (%) |

|---|---|

| Best base learner | 93.99 |

| SuperLearner | 95.02 |

| Unweighted Average | 94.44 |

| Majority Vote | 94.10 |

Researchers also found that combining outputs from different neural networks and statistical features improved classification accuracy across ten datasets. These results show that ensemble methods make vision systems more reliable for tasks like face detection and image classification. By using ensembles, engineers can achieve better predictive results in many computer vision tasks.
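
Unweighted averaging and majority vote score differently in the table above because they can disagree on individual images. The sketch below uses made-up softmax outputs from three hypothetical networks to show one such case.

```python
import numpy as np

# Hypothetical class probabilities from three networks for one image, two classes.
probs = np.array([
    [0.51, 0.49],  # network A: barely prefers class 0
    [0.51, 0.49],  # network B: barely prefers class 0
    [0.05, 0.95],  # network C: strongly prefers class 1
])

# Majority vote: each network votes for its top class.
votes = probs.argmax(axis=1)
majority_class = int(np.bincount(votes, minlength=2).argmax())

# Unweighted average: mean probability per class, then pick the largest.
mean_probs = probs.mean(axis=0)
average_class = int(mean_probs.argmax())

print(f"majority vote picks class {majority_class}")  # class 0
print(f"averaging picks class {average_class}")       # class 1
```

Averaging keeps each network's confidence, so one very sure model can outweigh two hesitant ones. Majority vote discards that information, which can help when some models are overconfident.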

Getting Started with Ensemble Methods

Popular Algorithms and Tools

Many tools help beginners use ensemble methods in machine vision. These libraries make it easier to build, test, and deploy models. The table below lists some of the most popular options:

| Library/Tool | Description | Relevance to Ensemble Methods and Machine Vision |

|---|---|---|

| OpenCV | Open-source library with 2500+ algorithms for image processing, face detection, object identification, and 3D model extraction. Widely adopted with multi-language support. | Provides foundational image processing capabilities essential for machine vision projects that can be combined with ensemble learning frameworks. |

| TensorFlow | Leading open-source ML platform with tools for model training and deployment, including TensorFlow Lite for edge devices. | Supports development of complex models including ensemble architectures; widely used in vision tasks. |

| Keras | Python-based high-level API for TensorFlow, beginner-friendly for neural network development. | Facilitates quick prototyping of ensemble models in vision projects due to ease of use and backend support. |

| Caffe | Deep learning framework optimized for image classification and segmentation with good speed. | Suitable for vision tasks and can be integrated into ensemble pipelines. |

| OpenVINO | Intel’s cross-platform toolkit for vision applications. | Enhances deployment of vision models, potentially including ensemble models, on various hardware. |

| Viso Suite | Enterprise platform integrating OpenCV, TensorFlow, OpenVINO, and others for end-to-end vision application development. | Supports modular integration of ensemble methods within vision workflows. |

Python’s Scikit-learn package also supports many ensemble algorithms, such as random forest, stacking, and boosting. Libraries like LightGBM and CatBoost offer advanced options for gradient boosting, which can work well in image classification tasks, typically on features extracted from the images.

Step-by-Step Guide

Beginners can follow these steps to use ensemble methods in a machine learning project for vision:

1. Prepare the Data: Collect and clean images. Use OpenCV for basic image processing.

2. Select Base Models: Choose different models, such as decision trees, neural networks, or support vector machines.

3. Train Models: Use Scikit-learn, TensorFlow, or Keras to train each model on the training data.

4. Combine Models: Apply ensemble techniques like bagging, boosting, or stacking. For example, use random forest or combine models with majority voting.

5. Evaluate Performance: Test the ensemble on new images. Compare results with single models.

6. Tune and Optimize: Adjust model settings. Use cross-validation to avoid overfitting and improve accuracy.

Tip: Start with simple ensembles, such as bagging or voting, before trying advanced methods.
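
The steps above can be sketched end to end with Scikit-learn. This is a minimal example under stated assumptions: the built-in digits dataset replaces a real image collection (so the OpenCV preprocessing step is skipped), and the three base models and their settings are illustrative choices rather than recommendations.

```python
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Prepare the data: flattened 8x8 digit images, split into train and test sets.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Select base models of different types.
base_models = [
    ("forest", RandomForestClassifier(n_estimators=100, random_state=0)),
    ("svm", make_pipeline(StandardScaler(), SVC(probability=True, random_state=0))),
    ("logreg", make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))),
]

# Train and combine: soft voting averages the models' class probabilities.
ensemble = VotingClassifier(estimators=base_models, voting="soft")
ensemble.fit(X_train, y_train)

# Evaluate on held-out images and compare with each single model.
# (Labels here are already 0-9, so the fitted base models score directly.)
results = {"ensemble": ensemble.score(X_test, y_test)}
for name, fitted in ensemble.named_estimators_.items():
    results[name] = fitted.score(X_test, y_test)
for name, acc in results.items():
    print(f"{name:>8}: {acc:.3f}")

# Tune and validate: 5-fold cross-validation on the training set.
cv_scores = cross_val_score(ensemble, X_train, y_train, cv=5)
print(f"cross-validated accuracy: {cv_scores.mean():.3f} +/- {cv_scores.std():.3f}")
```

Comparing the ensemble's score with each base model's score shows whether the combination is worth its extra cost on a given dataset.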

Tips for Beginners

- Test each model before adding it to the ensemble. Poor models can lower overall performance.

- Use cross-validation and early stopping to prevent overfitting.

- Try different ensemble methods to see which works best for the task.

- Remember that ensembles often reduce prediction errors and improve results, but they do not always beat the best single model.

- Many AI competitions, like ILSVRC, have shown that ensembles of neural networks achieve top results in machine vision.

| Evidence Aspect | Description |

|---|---|

| Competition Success | Ensembles of residual networks achieved a 3.57% top-5 error rate on the ImageNet test set, winning 1st place in the ILSVRC 2015 classification task. |

| Theoretical Basis | Ensembles reduce variance in prediction errors, improving average performance over individual models. |

| Practical Advice | Testing and tuning ensembles is essential. Poor models can degrade performance. |

| Complementary Techniques | Cross-validation and early stopping help prevent overfitting. |

Note: Practice and experimentation help beginners learn which ensemble methods work best for their machine learning projects.

Challenges and Solutions

Computational Cost

Ensemble methods often require running several models for each image. This increases the time and resources needed. Many machine vision systems struggle with high computational costs, especially when using large ensembles. For example, running multiple models at once can slow down predictions and use more memory. Some methods, like SPIREL, help by picking only the most useful models for each image. This approach can cut running time by about 80% and only lose a small amount of accuracy. Pretrained models also help reduce costs. Fine-tuning a pretrained model can save up to 60% of development time. Model distillation can shrink model size by 70%, making predictions faster and cheaper. Serverless systems can adjust resources as needed, lowering costs by 40%. These strategies make it easier to use ensembles in real-world projects.
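
One simple way to cut inference cost is a cascade: run a cheap model first and call the expensive one only when the cheap model is unsure. The sketch below illustrates that idea only. It is not SPIREL or model distillation, and the two models, the 0.9 confidence threshold, and the digits dataset are assumptions.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Stand-in data (an assumption): 8x8 grayscale digit images.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

cheap = LogisticRegression(max_iter=5000).fit(X_train, y_train)
expensive = RandomForestClassifier(n_estimators=300, random_state=0).fit(
    X_train, y_train
)

def cascade_predict(X, threshold=0.9):
    """Keep the cheap model's answer when it is confident; otherwise defer."""
    probs = cheap.predict_proba(X)
    preds = cheap.classes_[probs.argmax(axis=1)]
    uncertain = probs.max(axis=1) < threshold
    if uncertain.any():
        # Only the uncertain images pay the cost of the larger model.
        preds[uncertain] = expensive.predict(X[uncertain])
    return preds, uncertain

preds, deferred = cascade_predict(X_test)
cascade_acc = (preds == y_test).mean()
deferred_fraction = deferred.mean()
print(f"cascade accuracy: {cascade_acc:.3f}")
print(f"images sent to the expensive model: {deferred_fraction:.1%}")
```

Raising the threshold sends more images to the expensive model, trading speed for accuracy.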

Interpretability

Understanding how an ensemble makes decisions can be hard. Random forests and other ensembles often give better accuracy than simple models, but they are harder to explain. Studies show that more information does not always help people understand model decisions. Local explanation tools, like LIME and SHAP, can show why a model made a choice for one image. However, these tools do not explain the whole model and can sometimes be tricked. There are few good ways to measure how easy a model is to understand. Developers often find it hard to judge if users will trust or understand complex ensembles. This makes it important to balance accuracy with the need for clear explanations.
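
For tree ensembles, a quick first look at what the model relies on is the built-in feature importance, which here gives one value per pixel. This is a global summary rather than a local explanation like LIME or SHAP, and it is offered only as a simple starting point. The digits dataset is an assumption standing in for real images.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier

# Stand-in data (an assumption): each of the 64 features is one pixel of an 8x8 image.
X, y = load_digits(return_X_y=True)
forest = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Importances sum to 1; reshape them onto the 8x8 image grid.
importance_map = forest.feature_importances_.reshape(8, 8)
row, col = np.unravel_index(importance_map.argmax(), importance_map.shape)

print(np.round(importance_map, 3))
print(f"most influential pixel: row {row}, column {col}")
```

Pixels along the image border typically receive almost no importance, since they are blank in most digit images.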

Best Practices

The following table lists best practice guidelines for popular ensemble methods in machine vision:

| Ensemble Method | Best Practice Guidelines | Key Hyperparameters | Benefits / Challenges Addressed |

|---|---|---|---|

| Random Forest | Use bootstrap sampling; tune max_depth and max_leaf_nodes; set random_state. | max_depth, bootstrap, max_leaf_nodes | Reduces overfitting; robust; handles high-dimensional data. |

| Gradient Boosting | Tune n_estimators and learning_rate; use min_samples_leaf and subsample. | n_estimators, learning_rate, subsample | High accuracy; controls overfitting; manages computational cost. |

| AdaBoost | Use weak learners; tune n_estimators and learning_rate. | n_estimators, learning_rate | Efficient; simple; focuses on hard cases; controls overfitting. |

| XGBoost/LightGBM | Optimize n_estimators, learning_rate, max_depth, and feature/bagging fractions. | n_estimators, learning_rate, max_depth | Fast; scalable; balances accuracy and efficiency. |

Tip: Tuning model settings and using efficient tools can help overcome many challenges in ensemble machine vision projects.
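
The random forest row of the table can be turned into a small grid search. The sketch below uses scikit-learn's `GridSearchCV`. The candidate values and the digits dataset are illustrative assumptions, and a real project would search a grid suited to its own data.

```python
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Stand-in data (an assumption): 8x8 grayscale digit images.
X, y = load_digits(return_X_y=True)

# Candidate values for two of the hyperparameters named in the table.
param_grid = {
    "max_depth": [5, 10, None],
    "max_leaf_nodes": [50, None],
}

search = GridSearchCV(
    # Fixing random_state makes the comparison between settings repeatable.
    RandomForestClassifier(n_estimators=50, bootstrap=True, random_state=0),
    param_grid,
    cv=3,  # 3-fold cross-validation for every combination
)
search.fit(X, y)

print("best settings:", search.best_params_)
print(f"best cross-validated accuracy: {search.best_score_:.3f}")
```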

Ensemble methods help machine vision systems become more accurate and reliable. They use strong statistical tools and resource-aware strategies to improve results. Beginners can try these methods to boost their own projects.

- Multiple metrics like R², RMSE, and MAPE show how well ensembles work.

- Data Envelopment Analysis helps compare models by accuracy and resource use.

- Weighted voting that sets model weights from these evaluations often beats unweighted approaches.
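
For regression-style ensembles, the three metrics named above can be computed with Scikit-learn. The target and prediction values below are made up for illustration.

```python
import numpy as np
from sklearn.metrics import (
    mean_absolute_percentage_error,
    mean_squared_error,
    r2_score,
)

# Hypothetical true values and ensemble predictions.
y_true = np.array([100.0, 200.0, 300.0, 400.0])
y_pred = np.array([110.0, 190.0, 330.0, 380.0])

r2 = r2_score(y_true, y_pred)                          # 1.0 is a perfect fit
rmse = np.sqrt(mean_squared_error(y_true, y_pred))     # same units as the target
mape = mean_absolute_percentage_error(y_true, y_pred)  # returned as a fraction

print(f"R2:   {r2:.3f}")    # 0.970
print(f"RMSE: {rmse:.2f}")  # 19.36
print(f"MAPE: {mape:.1%}")  # 7.5%
```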

For more learning, explore tutorials on Scikit-learn, TensorFlow, and OpenCV.

FAQ

What is the main benefit of using ensemble methods in machine vision?

Ensemble methods help machine vision systems make more accurate predictions. They combine the strengths of different models. This reduces errors and increases reliability.

Can beginners use ensemble methods without advanced coding skills?

Many libraries like Scikit-learn and Keras offer simple tools for building ensembles. Beginners can use these tools with basic Python knowledge. Tutorials and guides help users get started quickly.

Do ensemble methods always improve results over single models?

Ensemble methods often improve accuracy, but not always. Sometimes a single strong model performs better. Testing both approaches helps find the best solution for each task.

How does someone combine model predictions in an ensemble?

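A common approach is majority voting: each model predicts a class, and the most frequent prediction wins. Scikit-learn's VotingClassifier handles this:

```python
# Example: majority voting in Python
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier, VotingClassifier
from sklearn.svm import SVC

X_train, y_train = load_digits(return_X_y=True)  # stand-in data; use your own images
rf, svc = RandomForestClassifier(random_state=0), SVC()
ensemble = VotingClassifier(estimators=[('rf', rf), ('svc', svc)], voting='hard')
ensemble.fit(X_train, y_train)
```

This code combines a random forest and a support vector classifier using majority (hard) voting.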
