You might wonder how machines interpret the world with such precision. Conditional GANs (Generative Adversarial Networks) play a pivotal role in this transformation. These models generate highly realistic visual data by learning from specific conditions or labels. For example, they can create lifelike images of objects based on textual descriptions or sketches.

Their significance in AI-driven technologies is hard to overstate. One study reported that Conditional GANs improve model performance for underrepresented classes, which supports fairer outcomes across diverse groups. They can also synthesize realistic datasets to fill gaps in real-world data, which helps when privacy concerns limit what can be collected. Machine vision systems built on Conditional GANs are well suited to these scenarios, which is why they matter so much for modern AI.

Key Takeaways

- Conditional GANs create lifelike pictures using given labels. This helps machine vision work better.

- These models make tasks more accurate by adding fake data to real datasets. This improves jobs like finding objects in pictures.

- Conditional GANs are great at changing one type of image into another. For example, they can turn drawings into realistic photos.

- They give more control and flexibility but need powerful computers. Using them also needs careful thought about right and wrong.

Understanding Conditional Generative Adversarial Networks

The Basics of Generative Adversarial Networks

A generative adversarial network, or GAN, is a type of deep learning model that consists of two neural networks working together in a competitive setup. One network, called the generator, creates synthetic data, such as images, while the other, known as the discriminator, evaluates whether the data is real or fake.

The training process is adversarial, meaning the generator tries to fool the discriminator by producing realistic outputs, while the discriminator improves its ability to distinguish between real and fake data. This dynamic helps both networks improve over time. For example:

- The generator minimizes the chance of the discriminator identifying its outputs as fake.

- The discriminator maximizes its accuracy in detecting fake data.

This back-and-forth process allows GANs to generate highly realistic outputs, making them a cornerstone of modern machine vision systems.
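To make the two competing goals concrete, here is a minimal sketch in plain Python. It assumes the discriminator outputs a probability between 0 and 1 that a sample is real. The function names `discriminator_loss` and `generator_loss` are illustrative, not taken from any library, and the generator loss uses the common non-saturating form, which is only one of several options.

```python
import math

EPS = 1e-12  # small constant to avoid log(0)

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy the discriminator minimizes.

    d_real: discriminator probabilities for real samples (should approach 1)
    d_fake: discriminator probabilities for generated samples (should approach 0)
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)

# A confident discriminator has a lower loss than one that guesses 0.5.
confident = discriminator_loss([0.9, 0.8], [0.1, 0.2])
guessing = discriminator_loss([0.5, 0.5], [0.5, 0.5])

# The generator is rewarded when its outputs fool the discriminator.
fooled = generator_loss([0.9])
caught = generator_loss([0.1])
```

In a real training loop these two losses are minimized in alternation, one update for the discriminator and one for the generator.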

Conditional GANs: How They Work

A conditional generative adversarial network builds on the foundation of a traditional GAN by introducing conditional inputs. These inputs guide the generator and discriminator during the training process, ensuring the generated outputs align with specific labels or conditions. For instance, if you provide a label like "cat," the generator will create an image of a cat, and the discriminator will verify if the output matches the label.

Conditional GANs achieve this by modifying the mathematical structure of the GAN to include conditional probabilities. This adjustment allows both networks to incorporate labeled data during training. Unlike standard GANs, which operate without context, cGANs require labeled datasets to function effectively. This makes them particularly useful for tasks where precision and context are critical, such as generating labeled datasets for underrepresented categories.
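One common way to write this down, following the original conditional GAN formulation, is the objective below. Here $x$ is a real sample, $y$ is its label or condition, $z$ is random noise, and $D$ and $G$ are the discriminator and generator. Treat it as a reference form, since specific models often add extra loss terms.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x, y \sim p_{\text{data}}}\big[\log D(x \mid y)\big] + \mathbb{E}_{z \sim p_z,\, y \sim p_y}\big[\log\big(1 - D(G(z \mid y) \mid y)\big)\big]
$$

The only change from the unconditional objective is that both $D$ and $G$ receive $y$ as an additional input.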

Key Components: Generator and Discriminator

The generator and discriminator in a conditional GAN work together to produce high-quality outputs that meet specific conditions. Here’s how they interact:

- Generator: This network uses the conditional input to create data that matches the given label. For example, if the condition is "dog," the generator will produce an image of a dog. The generator’s goal is to make its outputs indistinguishable from real data.

- Discriminator: This network evaluates the generator’s output against the real data and the provided condition. It checks whether the generated data is authentic and whether it aligns with the specified label.

The conditional information plays a crucial role in this process. It not only helps the generator produce accurate outputs but also stabilizes the training process. Some advanced models, like the Auxiliary Classifier GAN (AC-GAN), even modify the discriminator to predict class labels in addition to assessing authenticity. This dual role enhances the interaction between the generator and discriminator, leading to better results.
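A simple way to feed the condition to both networks is to append an encoded label to their inputs. The sketch below shows that idea in plain Python with flat lists standing in for tensors. The helper names (`one_hot`, `generator_input`, `discriminator_input`) are hypothetical, and concatenation is only one conditioning strategy; many models use learned embeddings instead.

```python
import random

def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot list."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def generator_input(noise_dim, label, num_classes, rng):
    """Noise vector followed by the label encoding: what the generator sees."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return noise + one_hot(label, num_classes)

def discriminator_input(sample, label, num_classes):
    """A sample (real or generated) followed by the same label encoding."""
    return list(sample) + one_hot(label, num_classes)

rng = random.Random(0)  # seeded so the sketch is repeatable
g_in = generator_input(noise_dim=8, label=2, num_classes=3, rng=rng)
d_in = discriminator_input([0.1, 0.7, 0.3, 0.9], label=2, num_classes=3)
```

Because the discriminator sees the label too, it can reject a realistic image that does not match the requested class.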

Tip: Conditional GANs are particularly effective in applications like image-to-image translation, where the goal is to transform one type of image into another while preserving specific features.

Conditional GANs vs. Traditional GANs

When comparing a conditional generative adversarial network (cGAN) to a traditional generative adversarial network (GAN), you notice significant differences in how they handle machine vision tasks. Both models share the same foundational structure of a generator and a discriminator, but their capabilities diverge due to the inclusion of conditional inputs in cGANs.

Traditional GANs operate without context. They generate data based solely on random noise, which limits their ability to produce outputs tailored to specific requirements. For instance, if you wanted a GAN to create an image of a dog, it would lack the contextual guidance to ensure the output matches your request. This lack of control often results in less precise and less diverse outputs.

In contrast, a conditional GAN introduces labeled data as a guiding factor. This allows you to specify the type of output you need, such as an image of a dog or a cat. By incorporating this conditional input, cGANs provide greater control over the generated data. This makes them ideal for tasks requiring precision, such as image-to-image translation or generating datasets for specific categories.

The table below highlights the key differences between these two models:

| Feature | Conditional GANs (cGANs) | Traditional GANs |
|---|---|---|
| Customization | Allows control over output characteristics through labels | Limited customization options |
| Data Diversity | Generates a wide range of variations | Less diversity in generated data |
| Convergence Speed | Faster convergence due to pattern learning | Slower convergence |
| Output Control | Precise control over generated data | Less control over outputs |

You can see how cGANs outperform traditional GANs in terms of customization, diversity, and control. Their ability to learn patterns based on labeled data also enables faster convergence during training. This efficiency makes cGANs a preferred choice for modern machine vision systems.

Note: While cGANs offer more advantages, they require labeled datasets, which can be resource-intensive to create. Traditional GANs, on the other hand, work with unlabeled data, making them easier to implement in scenarios where labeled data is unavailable.

By understanding these differences, you can choose the right model for your specific needs. Whether you prioritize precision or simplicity, both models have their place in advancing machine vision technologies.

Applications in Conditional GAN Machine Vision Systems

Conditional GANs have revolutionized how machines interpret and process visual data. Their ability to generate realistic outputs tailored to specific conditions makes them invaluable in various machine vision applications. Let’s explore some of the most impactful use cases.

Image-to-Image Translation

Image-to-image translation is one of the most exciting applications of conditional GANs. This process involves transforming one type of image into another while preserving key features. For example, you can convert a black-and-white photo into a colorized version or turn a sketch into a photorealistic image.

Conditional GANs excel in this area because they use labeled data to guide the transformation. By providing specific conditions, such as "daytime" or "nighttime," you can control the output with remarkable precision. This capability has practical uses in fields like:

- Medical Imaging: Enhancing X-rays or MRI scans for better diagnosis.

- Urban Planning: Converting satellite images into detailed maps.

- Creative Design: Generating artistic styles from simple sketches.
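For paired image-to-image translation, a widely used recipe (popularized by pix2pix) combines the adversarial loss with a pixel-wise L1 term that keeps the output close to the target image. The sketch below assumes that recipe, uses flat lists of pixel values in place of image tensors, and uses hypothetical function names. The weight of 100 is a commonly cited default, not a value from this article.

```python
import math

EPS = 1e-12  # small constant to avoid log(0)

def l1_distance(generated, target):
    """Mean absolute difference between two equally sized pixel lists."""
    return sum(abs(g - t) for g, t in zip(generated, target)) / len(target)

def translation_generator_loss(d_fake_score, generated, target, l1_weight=100.0):
    """Adversarial term plus weighted L1 reconstruction term.

    d_fake_score: discriminator probability that the generated image is real
    """
    adversarial = -math.log(d_fake_score + EPS)
    return adversarial + l1_weight * l1_distance(generated, target)

target = [0.2, 0.4, 0.6, 0.8]
close_output = [0.21, 0.39, 0.61, 0.79]
far_output = [0.9, 0.1, 0.9, 0.1]

# With the same discriminator score, the output nearer the target costs less.
loss_close = translation_generator_loss(0.7, close_output, target)
loss_far = translation_generator_loss(0.7, far_output, target)
```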

Fun Fact: Did you know that some video game developers use image-to-image translation to create realistic textures from hand-drawn designs? This saves time and enhances creativity.

Object Detection and Recognition

Conditional GANs also play a crucial role in object detection and recognition. These tasks involve identifying and classifying objects within an image or video. Unlike traditional methods, which rely on hand-engineered features and fixed rules, conditional GANs learn directly from labeled datasets. This allows them to adapt to complex scenarios and improve accuracy.

For instance, in autonomous vehicles, conditional GANs help detect pedestrians, traffic signs, and other vehicles. They analyze visual data in real time, ensuring safe navigation. In security systems, they enhance facial recognition by generating high-quality images from low-resolution inputs.

You can also use conditional GANs to create synthetic datasets for training object detection models. This is especially useful when real-world data is scarce or difficult to collect. By generating diverse and realistic images, you can improve the performance of your machine vision system.
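Before generating synthetic images, it helps to know how many you need for each class. The sketch below is one simple planning rule, assumed for illustration: top up every class to the size of the largest one. The function name and the example labels are hypothetical.

```python
from collections import Counter

def synthetic_samples_needed(labels):
    """Return how many synthetic samples each class needs to match the largest class."""
    counts = Counter(labels)
    target = max(counts.values())
    return {cls: target - n for cls, n in counts.items()}

# Example: an imbalanced detection dataset.
labels = ["car"] * 5 + ["pedestrian"] * 2 + ["sign"] * 1
plan = synthetic_samples_needed(labels)
```

Each entry in `plan` can then be used as the number of images to request from the generator with that class label as the condition.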

Video Generation and Prediction

Video generation and prediction represent another groundbreaking application of conditional GANs. These tasks involve creating realistic video sequences or predicting future frames based on existing ones. For example, you can generate a video of a moving car from a single image or predict how a scene will evolve over time.

Conditional GANs achieve this by learning temporal patterns in video data. They use conditional inputs, such as the starting frame or motion trajectory, to guide the generation process. This makes them ideal for applications like:

- Surveillance: Predicting suspicious activities in real time.

- Entertainment: Creating realistic animations or special effects.

- Sports Analysis: Simulating player movements for strategy planning.
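For frame prediction, the condition is usually a short window of earlier frames and the target is the frame that follows. The sketch below shows one way to build those training pairs from a sequence. The function name and the string placeholders for frames are assumptions made for illustration.

```python
def make_prediction_pairs(frames, context_len):
    """Slide a window over the sequence.

    Each pair is (context frames used as the condition, next frame to predict).
    """
    pairs = []
    for t in range(context_len, len(frames)):
        context = frames[t - context_len:t]
        pairs.append((context, frames[t]))
    return pairs

# Frames are placeholders here; in practice each would be an image array.
frames = ["f0", "f1", "f2", "f3"]
pairs = make_prediction_pairs(frames, context_len=2)
```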

Tip: When using conditional GANs for video generation, ensure your dataset includes diverse scenarios. This helps the model learn a wide range of patterns and improves its predictive accuracy.

By leveraging the power of conditional GANs, you can unlock new possibilities in machine vision. Whether you’re transforming images, detecting objects, or generating videos, these models offer unparalleled flexibility and precision.

Style Transfer and Image Enhancement

Style transfer and image enhancement are two transformative applications of conditional GANs. These techniques allow you to modify images by applying specific styles or improving their quality while preserving essential details. Whether you are an artist, a designer, or a researcher, these capabilities can open up new possibilities in your work.

Style Transfer: Adding Artistic Flair to Images

Style transfer involves applying the visual characteristics of one image, such as a painting, to another image. For example, you can transform a photograph into a Van Gogh-inspired masterpiece. Conditional GANs excel in this area because they use labeled data to guide the transformation process. This ensures that the output retains the content of the original image while adopting the desired style.

You might find this particularly useful in:

- Art and Design: Creating unique artwork or enhancing creative projects.

- Marketing: Generating visually appealing advertisements.

- Entertainment: Developing game textures or movie effects.

Tip: When using conditional GANs for style transfer, ensure your dataset includes diverse styles. This helps the model learn a wide range of artistic patterns.

Image Enhancement: Improving Visual Quality

Image enhancement focuses on improving the quality of images by removing noise, increasing resolution, or adjusting colors. Conditional GANs outperform traditional methods in this domain because they can learn complex patterns from labeled datasets. For instance, they can upscale a low-resolution image to a high-resolution version without losing important details.

Here’s how conditional GANs enhance images:

- Noise Reduction: Removes unwanted artifacts while preserving clarity.

- Super-Resolution: Converts pixelated images into sharp, high-resolution outputs.

- Color Correction: Adjusts tones and hues for a more natural look.
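To check whether an enhanced image is actually closer to a clean reference, a standard measure is peak signal-to-noise ratio (PSNR), where higher is better. The sketch below computes it for flat lists of 8-bit pixel values. The function name is illustrative, and PSNR is only one of several quality measures you might use.

```python
import math

def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in decibels between two equally sized pixel lists."""
    mse = sum((r - s) ** 2 for r, s in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

reference = [100, 100, 100, 100]
noisy = [120, 80, 120, 80]       # larger errors
denoised = [105, 95, 105, 95]    # smaller errors after enhancement

psnr_noisy = psnr(reference, noisy)
psnr_denoised = psnr(reference, denoised)
```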

These capabilities are invaluable in fields like medical imaging, where clarity and accuracy are critical. For example, conditional GANs can enhance MRI scans, making it easier for doctors to identify abnormalities.

Comparing Conditional GANs with Conventional Methods

Conditional GANs offer significant advantages over conventional methods for style transfer and image enhancement. The table below highlights some key metrics:

| Metric | Conditional GANs (1024×1024) | Conventional Methods |
|---|---|---|
| FID Score | Better than whole slice view | N/A |
| LPIPS Score | Optimized model shows best | N/A |
| Image Quality | High detail preservation | Lower detail |
| Context Awareness | Effective with whole images | Limited with small crops |

As you can see, conditional GANs provide superior results in terms of detail preservation and context awareness. This makes them a preferred choice for tasks requiring high-quality outputs.
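For reference, FID stands for Fréchet Inception Distance. Its standard definition compares the mean and covariance of feature embeddings from real images ($\mu_r$, $\Sigma_r$) with those from generated images ($\mu_g$, $\Sigma_g$), and lower values mean the two sets are statistically closer. The formula below is the general definition, not a result from the comparison above.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\!\left(\Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2}\right)
$$

LPIPS, by contrast, is a learned perceptual distance between pairs of images, and lower values again indicate greater similarity.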

Unlocking New Possibilities

By leveraging conditional GANs, you can achieve remarkable results in style transfer and image enhancement. These models combine the power of deep learning with the flexibility of labeled data, enabling you to create visually stunning and highly accurate outputs. Whether you are enhancing photos, creating art, or improving medical images, conditional GANs can help you push the boundaries of what’s possible in machine vision.

Advantages of Conditional GANs in Machine Vision

Enhanced Control Over Generated Outputs

Conditional GANs give you fine-grained control over the outputs they generate. By incorporating labeled data, these models let you specify the characteristics of the desired output. For instance, in geotechnical subsurface schematization, a model called schemaGAN has demonstrated its ability to produce highly accurate representations of soil layers. This model, trained on 24,000 synthetic geotechnical cross-sections, outperformed traditional interpolation methods by delivering clear layer boundaries and an accurate representation of anisotropy.

| Aspect | Details |
|---|---|
| Model | schemaGAN |
| Application | Geotechnical subsurface schematization |
| Training Data | 24,000 synthetic geotechnical cross-sections with corresponding Cone Penetration Test (CPT) data |
| Performance Comparison | Outperformed several interpolation methods |
| Key Features | Clear layer boundaries, accurate representation of anisotropy |
| Validation | Confirmed through a blind survey and two real case studies in the Netherlands |

This level of control makes Conditional GANs ideal for applications where precision is critical, such as medical imaging or urban planning.

Improved Accuracy in Visual Data Processing

Conditional GANs significantly enhance the accuracy of visual data processing. By augmenting datasets with realistic synthetic data, these models improve the performance of machine learning systems. For example:

- Classification accuracy reached 96.67% with real data alone and rose further when the training set was augmented with generated data.

- In NIRS-based systems, Conditional GANs improved the classification of brain activation patterns, demonstrating their effectiveness in complex tasks.

These improvements highlight the power of Conditional GANs in refining deep learning models. Whether you’re working with images, videos, or other visual data, these models can help you achieve superior results.

Versatility Across Machine Vision Applications

The versatility of Conditional GANs makes them a cornerstone of modern machine vision systems. They excel in diverse applications, including medical imaging, video generation, and image enhancement. For example:

- In medical imaging, Conditional GANs segment retinal vessels from fundus photographs and enhance diagnostic clarity.

- They transform low-resolution inputs into high-resolution outputs, improving the quality of visual data.

- By generating diverse training datasets, they enable the development of robust diagnostic algorithms.

This adaptability ensures that Conditional GANs remain relevant across various fields, from healthcare to entertainment. Their ability to tackle multiple challenges with precision and efficiency sets them apart from traditional methods.

Challenges in Implementing Conditional GANs

Computational Complexity and Resource Demands

Implementing Conditional GANs requires significant computational resources. These models demand high processing power due to their complex architecture and large datasets. For example, training a Conditional GAN for machine vision tasks can involve trillions of floating-point operations (FLOPs) and tens of millions of trainable parameters. The table below highlights key metrics:

| Metric | Value |
|---|---|
| FLOPs | 35.98 TeraFLOPs |
| Trainable Parameters | 54.4 million |
| Memory Usage | 207.62 MB |
| Inference Time | 0.2912 s for batch of 32 |

These requirements can strain hardware, especially when working with limited resources. You might need advanced GPUs or cloud-based solutions to handle the workload effectively. Optimizing the model architecture and reducing memory usage can help mitigate these challenges, but they often come at the cost of performance.
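A quick back-of-the-envelope check can tell you whether a model of this size fits your hardware. The sketch below uses the parameter count and inference time from the table and assumes 32-bit floating-point weights (4 bytes each), which the source does not state. The function names are illustrative.

```python
def parameter_memory_mib(num_params, bytes_per_param=4):
    """Memory needed to store the weights alone, in mebibytes."""
    return num_params * bytes_per_param / (1024 ** 2)

def per_image_latency_ms(batch_seconds, batch_size):
    """Average inference time per image in milliseconds."""
    return batch_seconds / batch_size * 1000.0

params_mib = parameter_memory_mib(54.4e6)       # about 207.5 MiB of weights
latency_ms = per_image_latency_ms(0.2912, 32)   # about 9.1 ms per image
images_per_second = 1000.0 / latency_ms         # roughly 110 images per second
```

Under the float32 assumption, the weights alone come to about 207.5 MiB, which is close to the memory figure in the table. Training needs considerably more, because gradients, optimizer state, and activations must also be stored.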

Training Instability and Mode Collapse

Training Conditional GANs can be unstable. You may encounter mode collapse, where the generator produces repetitive outputs instead of diverse ones. This issue prevents the model from representing the full data distribution, reducing its effectiveness.

Several strategies can address these challenges:

- Add constraints to strengthen the relationship between input and output.

- Augment the generator to diversify outputs.

- Modify loss functions to better measure discrepancies.

- Impose gradient penalties to stabilize training.
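As an illustration of the last strategy, the gradient penalty used in Wasserstein GANs (WGAN-GP) can be written for a conditional critic as shown below. The notation is an assumption for this article: $x$ is a real sample, $\tilde{x}$ a generated one, $y$ the condition, $\hat{x}$ a random interpolation between the two, and $\lambda$ a penalty weight (10 is a common choice).

$$
L_D = \mathbb{E}\big[D(\tilde{x} \mid y)\big] - \mathbb{E}\big[D(x \mid y)\big] + \lambda\, \mathbb{E}\Big[\big(\lVert \nabla_{\hat{x}} D(\hat{x} \mid y) \rVert_2 - 1\big)^2\Big], \qquad \hat{x} = \epsilon x + (1 - \epsilon)\tilde{x},\ \ \epsilon \sim U[0, 1]
$$

The penalty term pushes the critic's gradient norm toward 1, which tends to keep the training signal for the generator well behaved.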

Researchers have proposed solutions like the Auto-Encoding Generative Adversarial Network (AE-GAN). This approach uses multiple generators and clustering algorithms to maintain sample distribution consistency. By implementing these techniques, you can improve the stability and reliability of your Conditional GAN system.

Ethical Considerations in Visual Data Generation

Conditional GANs can generate highly realistic visual data, which raises ethical concerns. You must consider the implications of creating synthetic images that could be misused. For instance, generating fake images of individuals or altering visual content can lead to misinformation or privacy violations.

To address these concerns, you should establish clear guidelines for using Conditional GANs responsibly. Transparency in data generation and labeling can help build trust. Additionally, implementing safeguards to prevent misuse, such as watermarking generated images, can reduce ethical risks. By prioritizing ethical practices, you can ensure that Conditional GANs contribute positively to machine vision advancements.
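As a toy illustration of watermarking, the sketch below hides a short bit pattern in the least significant bit of the first few pixel values and reads it back. This is an assumption-laden example with hypothetical function names. Least-significant-bit marks are easy to destroy through compression or resizing, so production systems rely on more robust schemes.

```python
def embed_watermark(pixels, bits):
    """Write each watermark bit into the lowest bit of the corresponding pixel."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | bit  # clear the lowest bit, then set it
    return marked

def extract_watermark(pixels, length):
    """Read the lowest bit of the first `length` pixels."""
    return [p & 1 for p in pixels[:length]]

pixels = [200, 13, 77, 54, 91]   # 8-bit grayscale values
signature = [1, 0, 1, 1]         # hypothetical "generated image" marker
marked = embed_watermark(pixels, signature)
recovered = extract_watermark(marked, len(signature))
```

Each pixel changes by at most one intensity level, so the mark is invisible to the eye while remaining machine-readable.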

Future Potential of Conditional GANs in Machine Vision

Emerging Trends in Conditional GAN Technology

Conditional GAN technology continues to evolve, addressing challenges like data imbalance and improving performance in machine vision tasks. Researchers have developed innovative models to tackle these issues. For example, Wasserstein conditional GANs (WCGAN-GP) enhance detection rates while reducing false positives. Federated generative models, such as HT-Fed-GAN, balance multimodal and categorical distributions, making them ideal for privacy-preserving datasets.

The table below highlights some of the latest advancements:

| Study | Methodology | Focus | Results |
|---|---|---|---|
| WCGAN-GP | Wasserstein conditional GAN | Generating synthetic NIDS tabular data | Improves detection rates, minimizes false positives |
| HT-Fed-GAN | Federated generative model | Balancing multimodal distributions | Addresses data imbalance in privacy-preserving datasets |
| MCGAN | Modified conditional GAN | Class imbalance in intrusion detection | Enhances predictive performance |
| CTGAN | Conditional tabular GAN | Minority class generation | Combats skewed class distributions |

These trends demonstrate how conditional GANs are becoming more versatile and effective in solving real-world problems. You can expect these advancements to further enhance conditional GAN machine vision systems.

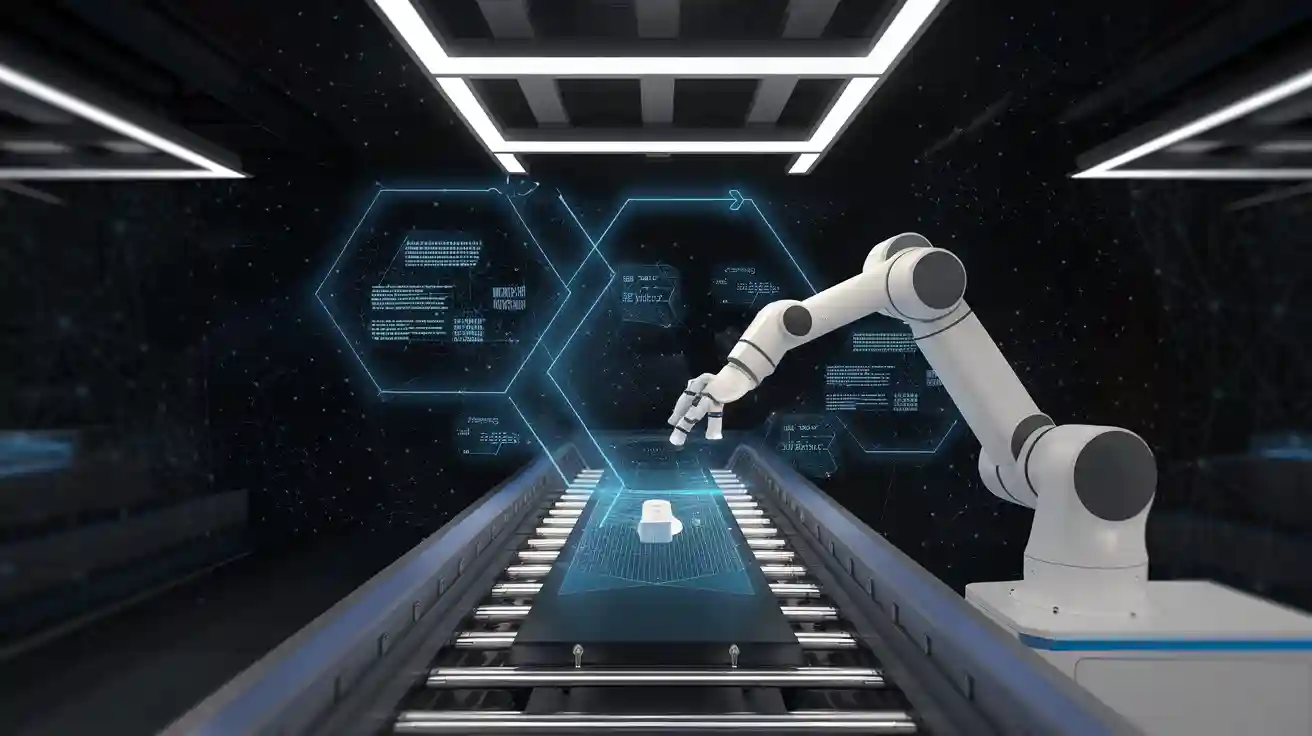

Real-Time Applications in Machine Vision

Conditional GANs are paving the way for real-time applications in machine vision. These models process visual data quickly, enabling tasks like real-time video enhancement and object detection. For instance, in autonomous vehicles, GANs analyze live video feeds to identify obstacles and predict motion paths. This ensures safer navigation.

In surveillance, conditional GANs predict suspicious activities by analyzing live footage. They also enhance low-quality video streams, making it easier to identify critical details. These real-time capabilities make GANs indispensable for applications requiring immediate decision-making.

Integration with Advanced AI Systems

Conditional GANs are increasingly integrated with advanced AI systems to unlock new possibilities. By combining GANs with reinforcement learning, you can create models that adapt to dynamic environments. For example, in robotics, this integration allows machines to learn from visual data and improve their performance over time.

Additionally, conditional GANs complement natural language processing systems. They generate images based on textual descriptions, bridging the gap between visual and linguistic data. This integration enhances applications like virtual assistants and content creation tools.

As AI systems become more sophisticated, conditional GANs will play a crucial role in shaping the future of machine vision.

Conditional GANs have revolutionized machine vision by enabling precise, context-aware visual data generation. You can leverage their advantages, such as enhanced control and versatility, to tackle complex tasks like image translation and object detection. However, challenges like computational demands and ethical concerns require careful consideration.

Looking Ahead: Conditional GANs hold immense potential to shape AI-driven technologies. As advancements continue, you can expect real-time applications and seamless integration with other AI systems to redefine what’s possible in machine vision.

By understanding their capabilities and limitations, you can unlock new opportunities in this transformative field.

FAQ

What makes Conditional GANs different from traditional GANs?

Conditional GANs use labeled data to guide the generation process. This allows you to control the output based on specific conditions, like generating an image of a cat when given the label "cat." Traditional GANs lack this contextual guidance.

Can Conditional GANs work with small datasets?

Yes, but small datasets may limit the model’s ability to generalize. You can use techniques like data augmentation or transfer learning to improve performance. These methods help the model learn patterns more effectively, even with limited data.

How do Conditional GANs improve image quality?

Conditional GANs enhance image quality by learning from labeled datasets. They can remove noise, increase resolution, and adjust colors. For example, they can upscale a blurry image into a sharp, high-resolution version while preserving important details.

Are Conditional GANs suitable for real-time applications?

Yes, Conditional GANs can handle real-time tasks like video enhancement and object detection. However, you need powerful hardware, such as GPUs, to process data quickly. Optimizing the model architecture can also improve speed and efficiency.

What are the ethical concerns with Conditional GANs?

Conditional GANs can generate realistic fake images, which might lead to misuse, such as spreading misinformation. To address this, you should implement safeguards like watermarking and ensure transparency in data generation practices.
