Automation is transforming factories across the globe, and machine vision systems now play a vital role in making production faster and more reliable. Recent projections show the machine vision market will reach $9.3 billion by 2028, driven by industries that demand high accuracy and speed. A backbone machine vision system acts like the spinal cord in a body: it supports every movement and decision, allowing the system to quickly locate and identify each object on a production line. Companies using backbone machine vision systems report fewer errors and faster inspections, which demonstrates their value in boosting industrial performance and innovation.

Key Takeaways

- Backbone machine vision systems act as the core of industrial automation, helping machines see and understand objects quickly and accurately.

- These systems use deep learning models like ResNet and DenseNet to improve defect detection, speed up inspections, and reduce errors by over 90%.

- Efficient hardware combined with strong backbones enables real-time processing, making factories faster and more reliable.

- Industries such as automotive, electronics, and food processing benefit from backbone vision systems through better quality control and lower costs.

- Future trends like edge AI and hybrid models will make these systems smarter, more flexible, and easier to customize for specific tasks.

What Is a Backbone Machine Vision System?

A backbone machine vision system forms the core of modern industrial automation. This system uses advanced neural networks to help machines see and understand their environment. In factories, these systems guide robots to spot defects, sort products, and keep production lines running smoothly. The backbone acts as the main structure, supporting all deep learning processes in machine vision. It works much like a strong spine, holding up the rest of the system and making sure every part works together.

Backbone in Deep Learning

Deep learning has changed how machines process images. In a backbone machine vision system, the backbone is a special neural network that learns to find important patterns in pictures. These patterns help machines recognize objects, read labels, and check for errors. Convolutional neural networks play a big role in this process. They scan images in layers, picking out shapes, edges, and colors. Early layers respond to simple features such as edges and color changes, while deeper layers combine them into more complex patterns such as shapes and object parts.
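To make the layer-by-layer idea concrete, here is a minimal sketch of what a single convolutional layer computes, written in plain Python. The tiny image, the hand-picked vertical-edge kernel, and the helper names `conv2d` and `relu` are illustrative assumptions. In a real backbone, the kernel weights are learned from data rather than chosen by hand.

```python
# Minimal sketch of one convolutional layer: slide a 3x3 kernel over a
# grayscale image (list of rows) and record the weighted sum at each position.
# The kernel is hand-picked for illustration; trained backbones learn theirs.

def conv2d(image, kernel):
    """'Valid' 2D cross-correlation (no padding), the operation CNN layers use."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out

def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]

# Dark left half (0) and bright right half (9): one vertical edge.
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]

# Responds where intensity increases from left to right.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

features = relu(conv2d(image, vertical_edge))
for row in features:
    print(row)
```

The response is non-zero only where the dark-to-bright edge falls inside the 3×3 window. A deeper layer would apply further kernels to feature maps like this one, which is how more complex patterns are built up.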

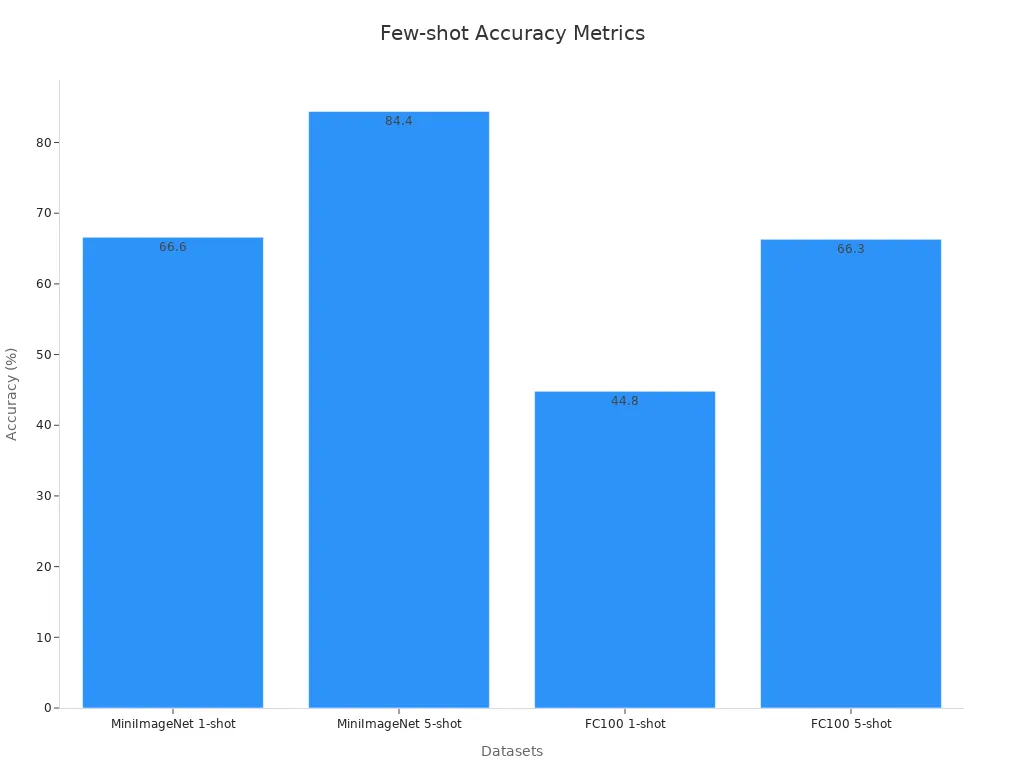

Researchers have tested many backbone models for computer vision tasks. Models like VGG, ResNet, and DenseNet have become popular because they work well in deep learning. These backbones help machines learn from just a few examples, which is important in factories where new products appear often. The chart above shows how well these backbones perform in few-shot learning tasks, where the system must learn from very little data.
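One common way to use a pretrained backbone for few-shot learning is nearest-prototype classification, the idea behind prototypical networks: average the backbone embeddings of the few labelled examples in each class, then assign a new image to the closest average. The sketch below assumes the embeddings have already been computed by a backbone; the two-dimensional vectors and the class names "ok" and "scratch" are hypothetical, and the studies above may have used a different few-shot method.

```python
import math

# Hypothetical backbone embeddings for a 2-class, 3-shot support set.
support = {
    "ok":      [[0.9, 0.1], [1.0, 0.2], [0.8, 0.0]],
    "scratch": [[0.1, 0.9], [0.2, 1.0], [0.0, 0.8]],
}

def mean(vectors):
    """Element-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(v[d] for v in vectors) / n for d in range(len(vectors[0]))]

def prototypes(support_set):
    """One prototype (mean embedding) per class."""
    return {label: mean(vecs) for label, vecs in support_set.items()}

def classify(embedding, protos):
    """Return the label of the nearest prototype by Euclidean distance."""
    return min(protos, key=lambda label: math.dist(embedding, protos[label]))

protos = prototypes(support)
print(classify([0.85, 0.15], protos))
```

Adding a new product class only requires a few labelled images and one more prototype; the backbone itself is not retrained.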

Studies show that using a strong backbone in deep learning helps machines adapt quickly to new tasks. For example, in plant disease detection, these systems reach over 90% accuracy even with few samples. This makes backbone machine vision systems very useful in industries that need fast and reliable results.

A recent industry report highlights the growing use of these systems. The market for pipeline machine vision systems is expected to grow from $12.9 billion in 2024 to $20.8 billion by 2030. Companies using backbone machine vision systems have reduced inspection costs by up to 30% and cut human error rates from 25% to under 2%. These systems also speed up inspections, with some factories checking one part every two seconds.

| Metric | Result/Impact |
|---|---|
| Inspection Cost Reduction | 20-30% lower |
| Human Error Rate | Reduced from 25% to under 2% |
| Inspection Speed | 1 part every 2 seconds |
| Unplanned Downtime | 25% reduction |
| Customer Retention | 23% improvement |
| Market Share | 17% increase |

Deep learning backbones also support real-time data processing and predictive maintenance. This means machines can spot problems before they cause delays, keeping factories running smoothly.

Feature Extraction Role

Feature extraction is the heart of every backbone machine vision system. The backbone learns to pick out the most important details from images. This process helps machines focus on what matters, like finding cracks in metal or reading barcodes. Convolutional networks do this by breaking down images into small parts and learning which features are useful for each task.

Traditional methods, such as Histogram of Oriented Gradients and Local Binary Patterns, needed people to design features by hand. These older methods struggled with complex computer vision tasks like object detection and image classification. Deep learning changed this by letting neural networks learn features directly from raw data. Convolutional neural networks now handle tasks like object localization and segmentation with much higher accuracy.

- Deep learning models like YOLO and Faster R-CNN learn features automatically, making them more flexible.

- Convolutional networks can adapt to new tasks without manual changes.

- Deep neural networks achieve better results in complex computer vision tasks than older methods.

- Support Vector Machines work well for simple tasks, but deep learning backbones perform better in real-world settings.
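To see what a hand-designed feature looks like, here is a minimal sketch of the basic 8-neighbour Local Binary Pattern (LBP) code mentioned above. The clockwise neighbour order and bit numbering are one common convention among several, and `lbp_code` is a hypothetical helper name. The point is that every step is a fixed rule written by a person, whereas a CNN backbone learns its filters from data.

```python
# Basic 8-neighbour LBP code for the centre pixel of a 3x3 patch.
# Each neighbour >= centre contributes a 1 bit; neighbours are read clockwise
# from the top-left corner (one of several conventions in use).

def lbp_code(patch):
    center = patch[1][1]
    coords = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]
    code = 0
    for bit, (r, c) in enumerate(coords):
        if patch[r][c] >= center:
            code |= 1 << bit
    return code

patch = [[5, 9, 1],
         [4, 6, 7],
         [2, 3, 8]]
print(lbp_code(patch))
```

A full LBP descriptor would compute this code at every pixel and build a histogram of codes; the thresholding rule never changes, no matter which defects the factory cares about.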

A case study outside machine vision, in financial distress detection, showed that using too many features without proper extraction can hurt accuracy. Using deep learning for feature extraction made the model more accurate and robust. The same principle explains why backbone machine vision systems need strong feature extraction to work well.

Recent studies confirm that backbone neural networks, especially convolutional neural networks, serve as the foundation for industrial feature extraction. These networks show strong accuracy in both image classification and object detection, even when data is limited. Transfer learning, where a backbone trained on one task is used for another, helps machines learn faster and with better results.
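The transfer learning pattern can be sketched as "frozen backbone, new trainable head". In the minimal example below, `frozen_backbone` is a hypothetical stand-in for a network pretrained on another task: it maps an image to two fixed features and is never updated. Only a small logistic-regression head is trained on the new defect data. The dataset, learning rate, and epoch count are assumptions chosen for illustration.

```python
import math

# Hypothetical stand-in for a pretrained backbone. It has no trainable
# parameters here, mimicking a frozen backbone during fine-tuning.
def frozen_backbone(image):
    """Map a flat list of pixel values in [0, 1] to [mean brightness, contrast]."""
    mean = sum(image) / len(image)
    contrast = max(image) - min(image)
    return [mean, contrast]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(images, labels, lr=0.5, epochs=500):
    """Fit only a new logistic-regression head on frozen features (SGD on log loss)."""
    feats = [frozen_backbone(img) for img in images]  # computed once: backbone is frozen
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [w[0] - lr * err * x[0], w[1] - lr * err * x[1]]
            b -= lr * err
    return w, b

def predict(image, w, b):
    """1 = defect, 0 = good (logit >= 0 is equivalent to probability >= 0.5)."""
    x = frozen_backbone(image)
    return 1 if w[0] * x[0] + w[1] * x[1] + b >= 0 else 0

# Tiny hypothetical dataset: label 1 = bright scratch on a dark part.
images = [
    [0.1, 0.1, 0.1, 0.1],
    [0.2, 0.2, 0.2, 0.2],
    [0.1, 0.1, 0.1, 0.9],
    [0.2, 0.2, 0.9, 0.2],
]
labels = [0, 0, 1, 1]

w, b = train_head(images, labels)
print(predict([0.15, 0.15, 0.15, 0.9], w, b))
```

Because the backbone is frozen, its features are computed once and only three numbers are learned, which is why transfer learning can work with very little labelled data.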

The VIBES study and the "Battle of the Backbones" benchmark both show that choosing the right backbone for each task is important. Some backbones work better for certain computer vision tasks, so picking the best one can improve performance. New research also shows that combining strong backbones with good data can help simple learning methods beat more complex ones.

Note: Effective feature extraction in backbone machine vision systems leads to better accuracy, faster learning, and more reliable results in industrial settings.

Key Functions and Advantages

Performance and Accuracy

Backbone machine vision systems deliver high performance in industrial settings. These systems use advanced neural networks to improve object detection, recognition, and segmentation. Factories rely on these systems to spot defects, sort objects, and ensure quality. The backbone helps machines reach high accuracy, often above 98%. Machines can inspect parts much faster than humans, sometimes 80 times quicker. This speed means factories can check thousands of objects every hour.

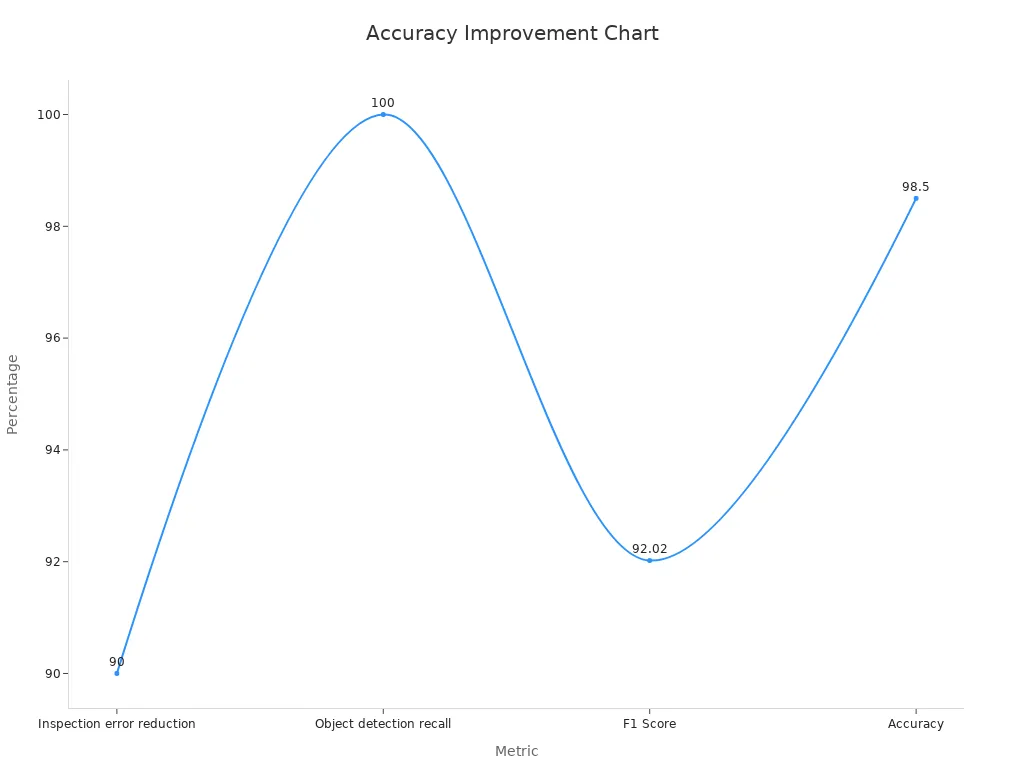

A strong backbone reduces inspection errors by over 90% and can cut the number of defective products by up to 99%. Machines using these systems show better precision and recall than human inspectors. For example, the F1 score, the harmonic mean of precision and recall, often exceeds 90%. The table below shows how backbone machine vision systems compare to traditional methods:

| Metric | Traditional Methods | AI-Driven Systems |
|---|---|---|
| Accuracy | 85-90% | Over 99.5% |
| Speed | 2-3 seconds per unit | 0.2 seconds per unit |
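The per-unit times in the comparison table translate directly into hourly throughput. The sketch below assumes a single inspection station that handles one unit at a time with no overlap between image capture and processing; pipelined stations would achieve more. `units_per_hour` is a hypothetical helper name.

```python
SECONDS_PER_HOUR = 3600

def units_per_hour(seconds_per_unit):
    """Hourly throughput of one station processing units sequentially."""
    return round(SECONDS_PER_HOUR / seconds_per_unit)

traditional_range = (units_per_hour(3.0), units_per_hour(2.0))
ai_driven = units_per_hour(0.2)
speedup_range = (round(2.0 / 0.2, 1), round(3.0 / 0.2, 1))

print(traditional_range, ai_driven, speedup_range)
```

At 2 to 3 seconds per unit, one station handles 1,200 to 1,800 units per hour; at 0.2 seconds it handles 18,000, a 10 to 15 times speedup. This is the arithmetic behind "thousands of objects every hour".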

Factories using backbone vision systems see fewer errors, faster inspections, and higher product quality. The chart above highlights improvements in key metrics.

Note: High accuracy and speed in object detection and recognition help companies reduce waste and improve customer satisfaction.
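Precision, recall, and the F1 score are easy to compute from the counts an inspection run produces. In the minimal sketch below, the counts are hypothetical, and a "positive" means "flagged as defective". Precision is the share of flagged parts that were really defective, recall is the share of real defects that were caught, and F1 is their harmonic mean.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 (harmonic mean of the two) from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Hypothetical run: 95 defects caught (true positives),
# 5 good parts wrongly rejected (false positives), 10 defects missed (false negatives).
p, r, f1 = precision_recall_f1(tp=95, fp=5, fn=10)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

Because F1 is a harmonic mean, it stays low unless both precision and recall are high, which is why it is a useful single number for defect inspection.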

Hardware Efficiency

Hardware efficiency plays a key role in backbone machine vision systems. The right hardware speeds up detection and recognition tasks. Processors, GPUs, and memory all affect how fast and accurately a system works. For example, high-speed processors and large memory allow machines to handle complex object detection and semantic segmentation tasks in real time.

Research from NVIDIA shows that new backbone designs, like hybrid Mamba-Transformer models, boost both speed and accuracy. These models perform well in image classification, object detection, and segmentation. They also use less power and run faster, making them ideal for factories that need reliable and quick vision systems.

The table below explains how different hardware parts impact machine vision performance:

| Hardware Component | Impact on Machine Vision Performance Metrics |
|---|---|
| Processor type and speed | Reduces execution time and speeds up detection |
| GPUs/TPUs | Handles complex models and improves training efficiency |
| Memory capacity and type | Supports large datasets, boosts accuracy and throughput |
| Storage type and capacity | Speeds up data access, lowers system latency |

Efficient hardware, paired with a strong backbone, ensures that vision systems can process images quickly and accurately. This leads to better detection, faster inspections, and more reliable results in industrial environments.
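Whether a given hardware and backbone combination is fast enough is ultimately a measurement question. The sketch below times a per-frame function with `time.perf_counter` and compares the worst-case latency against a budget. The 200 ms budget echoes the 0.2 seconds per unit quoted earlier; `measure_latency`, `toy_process_frame`, and the synthetic frames are hypothetical, and real numbers should come from running the actual model on the target hardware.

```python
import time

def measure_latency(process_frame, frames, budget_ms):
    """Time a per-frame function and check the worst case against a latency budget."""
    latencies = []
    for frame in frames:
        start = time.perf_counter()
        process_frame(frame)
        latencies.append((time.perf_counter() - start) * 1000.0)
    mean_ms = sum(latencies) / len(latencies)
    worst_ms = max(latencies)
    return {
        "mean_ms": mean_ms,
        "fps": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
        "worst_ms": worst_ms,
        "meets_budget": worst_ms <= budget_ms,
    }

def toy_process_frame(frame):
    # Hypothetical workload standing in for backbone inference: sum pixel values.
    return sum(frame)

frames = [[i % 256 for i in range(640 * 480 // 16)] for _ in range(20)]
stats = measure_latency(toy_process_frame, frames, budget_ms=200.0)
print(stats)
```

Checking the worst case rather than the average matters on a production line, because a single slow frame can cause a missed part.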

Industrial Applications

Object Detection in Manufacturing

Factories use object detection to find and track items on assembly lines. This technology helps machines spot defects, sort products, and guide robots. AI-powered computer vision, combining image classification and object detection, has reached over 95% accuracy in finding tiny defects in brake parts. The automotive industry uses object detection for assembly line automation and defect detection, which leads to higher product quality and faster cycles. Food factories use it for inspection and packaging, which improves safety and lowers contamination risks. Logistics companies use drones with object detection to count inventory quickly and save costs. Lightweight variants of YOLOv7 have been reported to process images at up to 286 frames per second on a high-end GPU, making real-time detection possible in high-speed environments. Detectors mark each object with a bounding box, and some models extend this to instance segmentation, which outlines each object at the pixel level for more detailed analysis.
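Bounding boxes are usually compared using intersection over union (IoU): the overlap area divided by the combined area. A minimal sketch follows, assuming boxes are given as `(x1, y1, x2, y2)` corner coordinates. The box values are hypothetical. An IoU of at least 0.5 is a common, but not universal, threshold for counting a detection as correct.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

predicted = (0, 0, 10, 10)     # hypothetical detector output
ground_truth = (5, 5, 15, 15)  # hypothetical labelled defect
print(round(iou(predicted, ground_truth), 3))
```

Here the boxes overlap in a 5×5 square, so IoU = 25 / (100 + 100 − 25), or about 0.14, which would not count as a match at the 0.5 threshold.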

Tip: Combining object detection with semantic segmentation allows factories to separate objects from backgrounds and understand their shapes, which improves sorting and quality checks.

Quality Control

Quality control depends on accurate detection and recognition of defects. Backbone machine vision systems use image classification, object detection, and segmentation to check products for errors. Statistical methods, such as regression and time series analysis, help track quality over time, and multivariate analysis examines many variables at once for better predictions. Confusion matrices and classification accuracy rates show how well these systems work. In one case, a convolutional neural network separated defective and non-defective samples with high accuracy. Another deep network model for test tube inspection showed strong generalization while using fewer computing resources. These results show that backbone machine vision systems improve quality control by making fast, reliable decisions. Companies report lower quality control costs and a higher ROI.
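A confusion matrix simply counts how often each actual class was predicted as each class. The minimal sketch below builds one from two label lists and also computes classification accuracy. The labels are hypothetical, and the layout (rows = actual class, columns = predicted class) is a convention; some tools transpose it.

```python
from collections import Counter

def confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes, in `labels` order."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]

def accuracy(actual, predicted):
    """Share of items whose predicted label matches the actual label."""
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)

labels = ["ok", "defect"]
actual    = ["ok", "ok", "ok",     "defect", "defect", "ok", "defect", "ok"]
predicted = ["ok", "ok", "defect", "defect", "defect", "ok", "ok",     "ok"]

print(confusion_matrix(actual, predicted, labels))
print(accuracy(actual, predicted))
```

The off-diagonal cells separate the two kinds of mistakes, such as a good part rejected or a defect missed. Accuracy alone hides this difference, and on a production line the two mistakes usually have very different costs.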

Wafer Inspection

Wafer inspection in electronics uses backbone machine vision for defect detection and recognition. Researchers tested convolutional neural networks on over 1,000 real wafer maps and found high accuracy in classifying defects. Another study used a deep layered CNN on a dataset with more than 800,000 wafer maps, showing strong performance in object detection and image classification. One system reached 90.5% accuracy in defect detection using manifold learning. These results show that backbone machine vision systems outperform traditional methods in wafer inspection. They support image recognition, semantic segmentation, and other computer vision tasks, making them essential for modern electronics manufacturing.

Backbone Architectures in AI Vision

Popular Models (ResNet, VGG, DenseNet)

Many industries rely on deep learning to power their machine vision systems. The backbone of these systems often uses well-known neural networks. ResNet, VGG, and DenseNet stand out as popular choices. Each model brings unique strengths to deep learning tasks.

ResNet uses residual (shortcut) connections that add a block's input to its output. These connections let gradients flow through very deep networks, so the network can learn deep features without training becoming unstable. VGG stacks simple 3×3 filters, making it easy to understand and a good starting point for deep learning projects. DenseNet connects each layer to every later layer within a dense block by concatenating their feature maps, which helps the network learn deep and complex patterns with fewer parameters.
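The difference between ResNet and DenseNet connections comes down to "add" versus "concatenate". The sketch below uses plain lists as stand-ins for feature maps. `toy_layer` and `make_dense_layer` are hypothetical placeholders for real convolution layers, and the growth rate of 2 is an arbitrary choice for illustration.

```python
def toy_layer(x):
    """Hypothetical stand-in for a conv layer that keeps the feature size unchanged."""
    return [max(0.0, 0.5 * v - 0.1) for v in x]

def residual_block(x, layer):
    """ResNet-style: output = F(x) + x, an identity shortcut with element-wise addition."""
    fx = layer(x)
    return [a + b for a, b in zip(fx, x)]

def make_dense_layer(growth_rate):
    """Hypothetical layer that produces `growth_rate` new features from all its inputs."""
    def layer(features):
        s = sum(features)
        return [max(0.0, 0.1 * s)] * growth_rate
    return layer

def dense_block(x, layers):
    """DenseNet-style: each layer sees the concatenation of all earlier outputs."""
    features = list(x)
    for layer in layers:
        features = features + layer(features)  # concatenate, do not add
    return features

res_out = residual_block([1.0, 2.0], toy_layer)
dense_out = dense_block([1.0, 2.0], [make_dense_layer(2), make_dense_layer(2)])
print(res_out, len(dense_out))
```

The residual block keeps the feature size fixed and passes the input through unchanged alongside the layer's output. The dense block grows by the growth rate at every layer, so later layers can reuse every earlier feature directly.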

The table below compares several backbone architectures and their key features:

| Backbone Architecture | Key Innovation / Feature | Reference Paper | Benefits / Differences |
|---|---|---|---|
| ResNet | Residual connections to address vanishing gradients | arXiv:1512.03385 | Enables training of very deep networks, improving accuracy and stability |
| VGG | Simple, uniform 3×3 convolutional filters | arXiv:1409.1556 | Straightforward architecture, good baseline for feature extraction |
| MobileNet | Designed for mobile/embedded devices focusing on efficiency and low latency | arXiv:1704.04861 | Lightweight, fast inference suitable for resource-constrained environments |
| EfficientNet | Compound scaling of depth, width, and resolution for optimal efficiency | arXiv:1905.11946 | Balances accuracy and computational cost effectively |
| Vision Transformers (ViT) | Applies Transformer architecture to image patches | arXiv:2010.11929 | Leverages attention mechanisms, competitive with CNNs on large datasets |
| CSPDarknet | Incorporates Cross Stage Partial networks; used in YOLOv4, YOLOv5, and later | Ultralytics YOLOv5 docs | Balances speed and accuracy for real-time detection tasks |
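EfficientNet's compound scaling can be written as a depth multiplier α^φ, a width multiplier β^φ, and a resolution multiplier γ^φ, constrained so that α·β²·γ² ≈ 2. As a result, each step up in φ roughly doubles the FLOPs. The constants below (α = 1.2, β = 1.1, γ = 1.15, base resolution 224) are the values reported in the EfficientNet paper for its B0 baseline. The released B1 to B7 models use rounded, hand-adjusted settings, so this sketch is illustrative rather than an exact reproduction.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution bases (EfficientNet paper)

def compound_scale(phi, base_resolution=224):
    """Multipliers for depth and width, the scaled input size, and the FLOPs factor."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = round(base_resolution * GAMMA ** phi)
    # FLOPs grow with depth * width^2 * resolution^2.
    flops_factor = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return depth, width, resolution, flops_factor

for phi in range(4):
    d, w, r, f = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, input {r}px, FLOPs x{f:.2f}")
```

Scaling all three dimensions together, instead of only making the network deeper, is what lets EfficientNet balance accuracy against compute.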

Performance metrics show that DenseNet-121 can boost accuracy from 85.4% to 93.2% and F1-score from 82.8% to 92.2% when using data augmentation. VGG16 also improves with data augmentation, reaching 92.6% accuracy. These results highlight the power of deep learning and the importance of choosing the right backbone for each task.
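Data augmentation creates extra training images by applying label-preserving transformations to the ones you already have. The sketch below uses horizontal flips and 90-degree rotations on a small grid. The chosen transformations and the helper names are assumptions, not the augmentation used in the cited results, and they are only safe when a defect's label does not depend on its orientation.

```python
def hflip(image):
    """Mirror an image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]

def rotate90(image):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

def augment(image):
    """Return the original plus a flipped copy and three rotations (hypothetical set)."""
    variants = [image, hflip(image)]
    rotated = image
    for _ in range(3):
        rotated = rotate90(rotated)
        variants.append(rotated)
    return variants

img = [[1, 2],
       [3, 4]]
for variant in augment(img):
    print(variant)
```

Each original image now yields five training samples, which helps a backbone such as DenseNet-121 or VGG16 generalize when labelled defect images are scarce.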

Note: Increasing the depth of a neural network does not always mean better results. Sometimes, smaller models like ResNet18 can outperform deeper ones like ResNet50 on certain datasets.

Hybrid and Transformer-Based Backbones

Recent advances in deep learning have led to hybrid and transformer-based backbones. These models combine the strengths of convolutional neural networks and transformers. Hybrid models use CNNs to extract local features and transformers to capture global patterns. This approach helps the backbone learn both fine details and big-picture context.
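Before a transformer can process an image, the image has to become a sequence of tokens. A Vision Transformer cuts the raw image into fixed-size patches and flattens each one. Hybrid variants apply the same flattening step to a CNN feature map instead of raw pixels, so the CNN supplies local detail and the transformer then relates the tokens globally. The sketch assumes a single-channel grid whose sides are divisible by the patch size; `patchify` and `num_tokens` are hypothetical helper names.

```python
def patchify(image, patch):
    """Split an H x W grid into non-overlapping patch x patch tiles, each flattened row-major.
    Assumes H and W are divisible by `patch`."""
    h, w = len(image), len(image[0])
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            tokens.append([image[r][c]
                           for r in range(top, top + patch)
                           for c in range(left, left + patch)])
    return tokens

def num_tokens(height, width, patch):
    """Number of patch tokens produced for an image of the given size."""
    return (height // patch) * (width // patch)

image = [[r * 4 + c for c in range(4)] for r in range(4)]  # 4 x 4 grid of values 0..15
tokens = patchify(image, 2)
print(tokens)
print(num_tokens(224, 224, 16))  # a 224 x 224 image with 16 x 16 patches
```

A 224 × 224 image with 16 × 16 patches becomes a sequence of 196 tokens, and the transformer's cost grows with that sequence length.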

The MambaVision hybrid backbone from NVIDIA combines redesigned Mamba blocks with transformer (self-attention) layers. It reports top accuracy and fast image throughput on large datasets such as ImageNet, and its authors report that it outperforms comparably sized pure CNN and pure transformer backbones in object detection, segmentation, and other deep learning tasks. Their studies show that adding self-attention blocks at later stages helps the model understand long-range relationships in images.
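Self-attention is what lets these later-stage blocks capture long-range relationships. Every token scores every other token, however far apart they are in the image, and then mixes their values according to those scores. The minimal single-head sketch below uses identity query/key/value projections for brevity. This is a simplifying assumption, since real transformer layers learn separate projection matrices.

```python
import math

def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Score this token against every token, regardless of position.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        # Weighted mix of all token values.
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(v, 3) for v in row])
```

A convolution only sees a small neighbourhood per layer, whereas each attention output above already depends on all tokens. Hybrid backbones add attention at later stages for exactly this reason.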

| Model Type | Industrial Application | Key Advantages | Performance Highlights |
|---|---|---|---|
| Vision Transformers (ViTs) | Vascular Biometric Recognition | Pre-training on large datasets enables robust feature extraction | Accuracy between 96% and 99.86% across multiple datasets |
| Hybrid CNN + ViT (e.g., R50+ViT-B/16) | Image Retrieval | Combines CNN local feature extraction with Transformer global encoding | Achieved 88.3% and 91.9% accuracy on ROxf and RPar datasets respectively |
| ViTAE and ViTAEv2 (Transformer-based) | Object Detection, Segmentation, Pose Estimation | Incorporate inductive biases and multi-stage design for local and global feature extraction | Achieved 88.5% accuracy, improved computational efficiency |

Hybrid backbones like CoAtNet and CvT show that combining deep learning methods leads to better accuracy and efficiency. These models help factories and industries solve complex vision problems faster and with fewer errors.

Tip: Hybrid and transformer-based backbones allow deep learning systems to adapt quickly to new tasks and changing environments.

Future Trends in Backbone Machine Vision

AI and Edge Computing

AI and edge computing are shaping the next wave of backbone machine vision systems. Companies now use deep learning and reinforcement learning to process data right where it is collected. This approach reduces delays and improves decision-making. For example, factories use edge AI to predict machine problems before they happen. This helps prevent downtime and saves money. In healthcare, edge AI speeds up medical decisions and improves accuracy. Self-driving cars rely on edge AI to process sensor data quickly, making roads safer.

Many industries use reinforcement learning to teach machines how to react to new situations. Robots in factories learn to sort items and spot defects using deep learning and reinforcement learning, and these systems can adapt to changes on the production line. Real-world AI applications show that edge computing helps keep data secure by processing it locally, which also saves network bandwidth and protects privacy.
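The bandwidth and privacy benefit of edge processing comes from sending results instead of raw images. The sketch below runs a detector next to the camera and returns only small alert records; it then compares the raw image bytes with the size of the JSON payload that would actually be transmitted. `process_on_edge`, `toy_detect`, the frame sizes, and the 0.5 threshold are all hypothetical.

```python
import json

def process_on_edge(frames, detect, threshold=0.5):
    """Run detection locally and keep only compact alert records."""
    alerts = []
    for index, frame in enumerate(frames):
        score = detect(frame)
        if score >= threshold:
            alerts.append({"frame": index, "score": round(score, 3)})
    return alerts

def toy_detect(frame):
    # Hypothetical detector: brightest pixel scaled to [0, 1].
    return max(frame) / 255

# 100 hypothetical 64 x 64 grayscale frames; two contain a bright spot.
frames = [bytes(64 * 64) for _ in range(100)]
for hit in (10, 42):
    frames[hit] = bytes([255]) + bytes(64 * 64 - 1)

alerts = process_on_edge(frames, toy_detect)
raw_bytes = sum(len(f) for f in frames)
sent_bytes = len(json.dumps(alerts).encode("utf-8"))
print(alerts)
print(raw_bytes, sent_bytes)
```

Only a few dozen bytes leave the device instead of about 400 KB of images, and the images themselves never leave the factory floor.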

The table below highlights key trends in backbone machine vision:

| Trend | Impact |
|---|---|
| 3D Vision | Robots see depth and space, improving accuracy |
| Predictive Maintenance | AI detects wear early, reducing downtime |
| Edge AI | Real-time processing, lower latency, better security |
| Human-Robot Collaboration | Machines understand gestures and commands |
| Energy Efficiency | Systems use less power, saving costs |

Tip: Edge AI and reinforcement learning make backbone machine vision systems faster, smarter, and more secure for real-world AI applications.

Customization and Fine-Tuning

Customization and fine-tuning help backbone machine vision systems work better in different industries. Deep learning models can be adjusted for specific tasks using reinforcement learning. This means companies can train their systems to spot unique defects or handle new products. Smaller deep learning models are easier to fine-tune and cost less to run. Research shows that using large batch sizes and lower learning rates improves model performance. Early training results can predict how well a model will work, so companies can stop training early if needed.
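One widely used way to act on early training signals is early stopping: training halts once a validation metric has stopped improving for a set number of epochs. The source does not say which method it has in mind, so treat this as a swapped-in, commonly used technique. The sketch replays a hypothetical list of validation losses; in practice each value would come from evaluating the model after an epoch. `early_stop` and `patience` are hypothetical names.

```python
def early_stop(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Stops once the loss has failed to improve by more than `min_delta`
    for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Hypothetical validation losses recorded after each epoch.
history = [0.90, 0.70, 0.60, 0.58, 0.59, 0.60, 0.61, 0.50]
print(early_stop(history, patience=3))
```

Training stops at epoch 6 and keeps the weights from epoch 3, saving the remaining compute. A larger `patience` would have waited long enough to reach the late improvement at epoch 7, which shows the trade-off this setting controls.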

Reinforcement learning and deep learning allow for quick updates and changes. Companies often reuse existing models and fine-tune them with high-quality data. This saves time and money. Parameter-efficient fine-tuning methods, like adapter tuning and LoRA, let engineers adjust only a small part of the model. These methods use less memory and still give strong results. For example, delta-tuning can cut memory use by up to 75% for small batches.
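LoRA keeps a pretrained weight matrix W (size d_out × d_in) frozen and learns a low-rank update ΔW = (α / r)·B·A, where B is d_out × r and A is r × d_in. The trainable parameter count therefore drops from d_out·d_in to r·(d_out + d_in). The sketch below computes both counts for a 768 × 768 layer with rank 8 (hypothetical but typical sizes, 768 being the hidden width of ViT-Base) and builds ΔW for a tiny example. The helper names are hypothetical.

```python
def lora_param_counts(d_out, d_in, rank):
    """Trainable parameters for a full update of W versus a rank-r LoRA update."""
    full = d_out * d_in            # every entry of W is trained
    lora = rank * (d_out + d_in)   # B is d_out x rank, A is rank x d_in
    return full, lora, lora / full

def matmul(a, b):
    """Plain-Python product of an m x k and a k x n matrix (lists of rows)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def lora_delta(B, A, alpha, rank):
    """Low-rank weight update (alpha / rank) * B @ A; the frozen W is not modified."""
    scale = alpha / rank
    return [[scale * v for v in row] for row in matmul(B, A)]

full, lora, ratio = lora_param_counts(768, 768, 8)
print(full, lora, f"{ratio:.1%}")

# Tiny example: a rank-1 update to a 2 x 2 weight matrix.
print(lora_delta([[1.0], [2.0]], [[3.0, 4.0]], alpha=1, rank=1))
```

Training about 2% of the layer's parameters means far fewer gradients and optimizer states to store, which is where the memory savings of parameter-efficient fine-tuning come from.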

Best practices for customization and fine-tuning include:

- Use high-quality data for training and reinforcement learning.

- Choose the right deep learning backbone for each task.

- Apply parameter-efficient fine-tuning to save resources.

- Monitor early training to predict final results.

- Use cloud platforms for scalable and cost-effective deployment.

Note: Customization and reinforcement learning make backbone machine vision systems flexible and ready for new challenges in industrial settings.

Backbone machine vision systems drive modern industry by boosting efficiency, accuracy, and adaptability. Companies see real benefits, such as reduced errors and better quality control. The table below shows strong market growth:

| Metric | Value |
|---|---|
| Market Size (2024) | USD 11.92 Billion |
| Projected CAGR (2026-2033) | 7.3% |
| Market Size Forecast (2033) | USD 21.72 Billion |

- Real-time decision-making and advanced AI help factories work faster.

- New AI models and 3D imaging improve safety and flexibility.

- Future trends point to even smarter, easier-to-use vision systems.
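Market forecasts like the one in the table use the compound annual growth rate, CAGR = (end / start)^(1 / years) − 1. Applied to the table's 2024 and 2033 figures, which are nine years apart, the formula gives an implied rate of roughly 6.9% per year. The quoted 7.3% covers the 2026 to 2033 window, so the two figures are not expected to match exactly. The helper names below are hypothetical.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value, rate, years):
    """Value after compounding `rate` for `years` years."""
    return start_value * (1 + rate) ** years

implied = cagr(11.92, 21.72, 2033 - 2024)  # USD billions, from the table
print(f"implied 2024-2033 CAGR: {implied:.1%}")
```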

FAQ

What is a backbone machine vision system?

A backbone machine vision system uses deep learning to help machines see and understand images. It acts as the main structure for processing visual data in factories and other industries.

How do backbone systems improve quality control?

These systems find defects and sort products quickly. They help companies reduce errors and improve product quality. Machines can check thousands of items every hour with high accuracy.

Which industries use backbone machine vision systems?

Many industries use these systems. Examples include automotive, electronics, food processing, and logistics. Each industry benefits from faster inspections and better accuracy.

Are backbone machine vision systems expensive to install?

The initial cost can be high. However, companies save money over time by reducing errors, lowering labor costs, and improving efficiency. Many see a return on investment within a few years.
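A quick way to estimate the payback period is to divide the upfront cost by the net yearly savings, meaning the savings minus any running costs. All figures below are purely hypothetical, and a full analysis would also account for financing, downtime during installation, and maintenance.

```python
def payback_years(upfront_cost, annual_savings, annual_running_cost=0.0):
    """Simple payback period in years; infinite if the system never pays for itself."""
    net = annual_savings - annual_running_cost
    if net <= 0:
        return float("inf")
    return upfront_cost / net

# Hypothetical: 250,000 to install, 120,000 saved per year, 20,000 per year to run.
years = payback_years(250_000, 120_000, 20_000)
print(f"payback in {years:.1f} years")
```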

Can backbone machine vision systems work with existing factory equipment?

Yes, most systems can connect to current machines. Engineers often update software or add cameras to improve performance without replacing all equipment.

Tip: Companies should consult with experts before upgrading to ensure the best fit for their needs.
