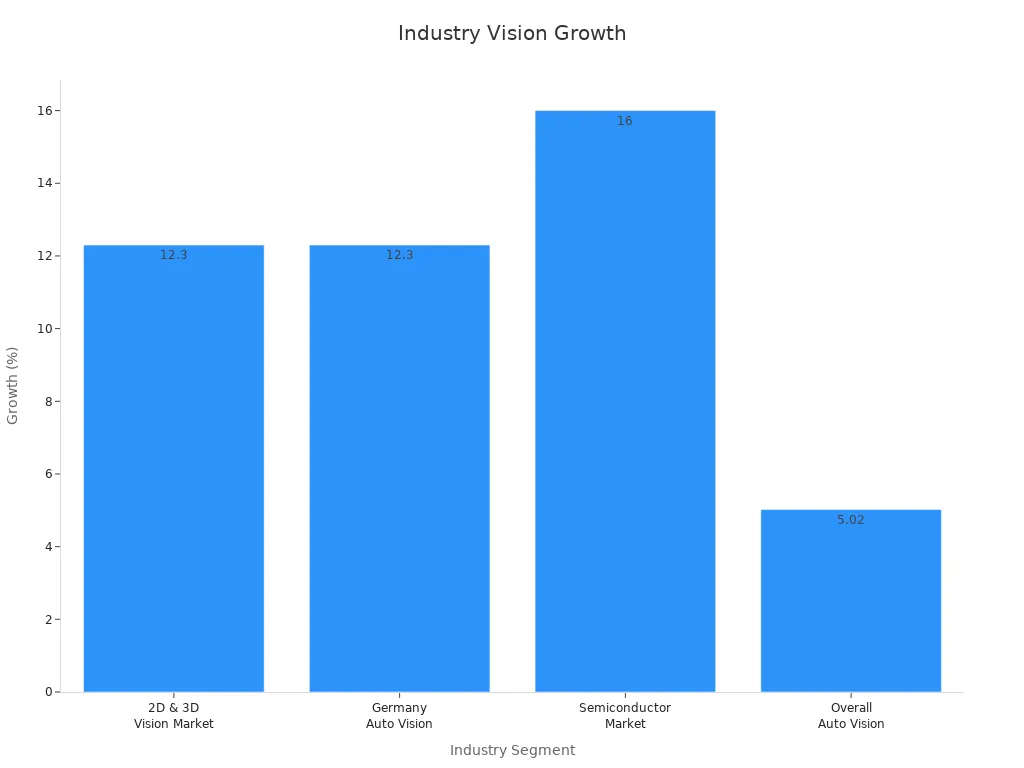

An algorithm machine vision system uses advanced computer programs to help machines see and understand the world through images and videos. These systems can spot objects with up to 99.9% accuracy in controlled settings, which makes them valuable in many industries. Algorithms select the most important parts of an image, often reducing thousands of features to just a few hundred while keeping accuracy high. The global market for this technology is also growing fast.

Readers will find this guide clear and free from confusing terms.

Key Takeaways

- Algorithm machine vision systems help machines see and understand images to perform tasks like sorting and inspection with high accuracy.

- These systems use different types of cameras and algorithms to capture and analyze images, making them useful in industries like manufacturing, healthcare, and autonomous vehicles.

- High-quality cameras, lenses, and precise hardware improve image clarity and system accuracy, reducing errors and increasing efficiency.

- Popular computer vision algorithms include edge detection, feature detection, segmentation, and object detection, many powered by deep learning for better results.

- Beginners can start learning machine vision using open-source tools like OpenCV and Scikit-image, building skills through simple projects and tutorials.

Algorithm Machine Vision System Basics

What Is It?

An algorithm machine vision system uses computer vision to help machines understand what they see. These systems use algorithms to process an image or a series of images. The main goal is to extract useful information from each image. For example, a factory robot can use a computer vision system to check if a product looks correct. The system takes an image, runs it through a set of rules, and then decides if the product passes inspection. Computer vision makes it possible for machines to perform tasks that need sight, such as sorting objects or reading labels.
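The capture, rule, and decision loop described above can be sketched in a few lines. This is a minimal illustration, not a production inspection routine: the function name `inspect`, the thresholds, and the synthetic images are all assumptions made for the example.

```python
import numpy as np

def inspect(image: np.ndarray, dark_threshold: int = 50, max_dark_pixels: int = 20) -> bool:
    """Return True if the part passes: few enough dark (possibly defective) pixels.

    Both thresholds are hypothetical values chosen for this example.
    """
    dark_pixels = int(np.count_nonzero(image < dark_threshold))
    return dark_pixels <= max_dark_pixels

# Synthetic 100x100 grayscale images stand in for real camera frames.
good_part = np.full((100, 100), 200, dtype=np.uint8)
bad_part = good_part.copy()
bad_part[40:50, 40:50] = 10  # a 10x10 dark blemish (100 dark pixels)

print(inspect(good_part))  # True
print(inspect(bad_part))   # False
```

A real system would replace the single rule with whatever checks the product requires, but the structure (image in, rule applied, pass or fail out) stays the same.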

Human vs. Machine Vision

Human vision and machine vision work in different ways. The human brain uses complex circuits to process what the eyes see. Neuroscientific studies show that the human ventral visual stream uses recurrent circuits for object recognition. This means the brain looks at an image many times, making sense of it over time. In contrast, most computer vision systems use feedforward methods. They process an image in one pass, without going back and forth.

Humans can often understand images that confuse computer vision systems. For example, people can recognize objects in tricky or blurry images, while machines may struggle. Deep neural networks, which power many computer vision systems, can be fooled by images that look normal to humans. This shows that humans and machines use different methods to understand images.

Key differences between human and machine vision:

- Humans use dynamic, recurrent processing.

- Machines often use simple, feedforward steps.

- People can handle confusing or tricky images better.

- Computer vision systems may miss details or get fooled by odd images.

Types of Systems

Algorithm machine vision systems come in several types. Each type works best for certain tasks:

- 1D Systems: These systems scan images in one line. They work well for tasks like reading barcodes.

- 2D Area Scan Systems: These systems capture a flat image, like a photo. They are common in quality checks and object sorting.

- 2D Line Scan Systems: These systems build an image one line at a time. They are useful for inspecting items on moving belts.

- 3D Systems: These systems create a three-dimensional view of an object. They help machines measure shapes and sizes, which is important in robotics and packaging.

Each type of computer vision system uses images in a special way. The choice depends on what the machine needs to see and do.
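To make the line scan idea concrete, the sketch below stacks one-dimensional scan lines into a two-dimensional image, one row per belt step. The generator `fake_sensor` is a hypothetical stand-in for a real line scan camera, and the pixel values are made up.

```python
import numpy as np

def assemble_line_scan(lines):
    """Stack 1D scan lines (one per belt step) into a 2D image."""
    return np.vstack([np.asarray(line, dtype=np.uint8) for line in lines])

def fake_sensor(num_lines, width):
    """Hypothetical sensor: yields one bright line per step with a single dark pixel."""
    for row in range(num_lines):
        line = np.full(width, 180, dtype=np.uint8)
        line[row % width] = 0  # a dark mark that drifts across the belt
        yield line

image = assemble_line_scan(fake_sensor(num_lines=5, width=8))
print(image.shape)  # (5, 8)
```

An area scan camera would deliver the whole array in one exposure; a line scan setup only gets a full image once the object has moved past the sensor.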

Core Components and Workflow

Image Acquisition

Every algorithm machine vision system starts with image acquisition. The system uses a camera or sensor to capture an image of the target object or scene. The quality of this first image shapes the entire process. If the camera captures a blurry or dark image, the system may struggle to find important details. High-quality cameras and sensors help the system see small features and differences. For example, a factory robot might use a camera to take an image of a product moving on a conveyor belt. The system needs a clear image to check for defects or missing parts.

Optics and Hardware

Optics and hardware play a key role in machine vision. Lenses focus light onto the camera sensor, creating a sharp image. Advanced optics, such as infrared lenses, can capture images even in low light or harsh environments. Studies show that retinal imaging with infrared lenses scored 8.25 out of 10 for image quality. Machine vision systems with precise optics and high-quality hardware can reduce inspection errors by over 90% and lower defect rates by up to 80%. Proper calibration, using methods like the Zhang algorithm, ensures the system measures objects accurately. Vision-guided robots with advanced optics increased production throughput by 27% and reduced waste by 34%. These results highlight the importance of investing in good hardware for reliable image analysis.

Image Processing

After capturing the image, the system begins image processing. This step uses algorithms to improve the image and find useful information. The system might adjust brightness, remove noise, or sharpen edges. Next, it looks for patterns, shapes, or colors that match what it needs to find. For example, the system can spot a scratch on a metal part or read a printed code. A convolutional neural network can predict image quality with a mean absolute error of only 0.9, showing how precise image processing can be with the right tools.
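Two of the clean-up steps mentioned above, adjusting brightness and removing noise, can be sketched with NumPy alone. The helper names `stretch_contrast` and `mean_filter_3x3` are illustrative assumptions; libraries such as OpenCV and scikit-image provide their own, faster versions of these operations.

```python
import numpy as np

def stretch_contrast(image):
    """Linearly rescale pixel values so they span the full 0-255 range."""
    img = image.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:  # flat image: nothing to stretch
        return image.copy()
    return ((img - lo) / (hi - lo) * 255).round().astype(np.uint8)

def mean_filter_3x3(image):
    """Reduce noise by averaging each pixel with its 3x3 neighborhood."""
    padded = np.pad(image.astype(np.float64), 1, mode="edge")
    h, w = image.shape
    total = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            total += padded[dy:dy + h, dx:dx + w]
    return (total / 9).round().astype(np.uint8)

# A dim, low-contrast test image with one noisy pixel.
dim = np.full((5, 5), 100, dtype=np.uint8)
dim[2, 2] = 120

stretched = stretch_contrast(dim)  # 100 maps to 0, 120 maps to 255
smoothed = mean_filter_3x3(dim)    # the spike at (2, 2) is pulled toward its neighbors
print(stretched.min(), stretched.max())  # 0 255
print(smoothed[2, 2])                    # 102
```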

Output and Decisions

The final step is output and decisions. The system uses the processed image data to make a choice or send a signal. It might sort a product, trigger an alarm, or guide a robot arm. Error detection and correction features help the system work faster and more accurately. For instance, these features can reduce operational time by 30% on the first day and by 23% on the second day. The system can also decrease the time needed to switch tasks by about 70 milliseconds. Reliable output depends on every step, from capturing the first image to making the final decision.

Tip: High-quality images and precise hardware make every step of the workflow more accurate and efficient.

Computer Vision Algorithms

Modern computer vision relies on a wide range of computer vision algorithms. These algorithms help machines find important features, separate objects, and make sense of images. Each algorithm has a special role in tasks like image segmentation, object detection, and feature matching. Some algorithms work best for finding edges, while others focus on recognizing objects or understanding the whole scene. Deep learning has changed the field by making computer vision systems more accurate and flexible.

Edge Detection

Edge detection helps computer vision systems find the boundaries of objects in an image. The algorithm looks for sudden changes in brightness or color. These changes often mark the edges of shapes or features. Edge detection is important for tasks like segmentation, feature detection, and object recognition. Traditional edge detectors, such as the Canny or Sobel operators, use simple rules to find edges. Newer methods use deep learning and convolutional neural networks to improve accuracy.

Some studies report that deep learning-based edge detectors, such as the Pixel Difference Network, match or exceed human-level scores on standard edge detection benchmarks. Deeper architectures, such as ResNet, help extract better features and improve results.

Edge detection makes it easier for computer vision algorithms to find features and match them across images. This step is often the first part of more complex tasks, such as object detection and image segmentation.
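As a rough illustration of how a traditional detector finds "sudden changes in brightness", the sketch below applies the two 3x3 Sobel kernels and combines them into a gradient magnitude. It is written in plain NumPy for clarity; the helper names are assumptions, and a full Canny detector adds smoothing, thinning, and thresholding steps that are not shown here.

```python
import numpy as np

# Standard Sobel kernels for horizontal and vertical brightness changes.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def filter3x3(image, kernel):
    """Cross-correlate a 2D image with a 3x3 kernel (edge-padded, same-size output)."""
    padded = np.pad(image.astype(np.float64), 1, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def sobel_magnitude(image):
    """Gradient magnitude: large values mark likely edges."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    return np.hypot(gx, gy)

# Left half dark, right half bright: a single vertical edge.
image = np.zeros((6, 6), dtype=np.uint8)
image[:, 3:] = 255

edges = sobel_magnitude(image)
print(edges[2])  # zero in flat regions, 1020 in the two columns next to the edge
```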

Feature Detection (SIFT)

Feature detection finds key points in an image that stand out from their surroundings. These points, called features, help with tasks like feature matching, object recognition, and image classification. The Scale-Invariant Feature Transform (SIFT) is a popular algorithm for feature detection. SIFT finds features that do not change when the image is rotated, scaled, or slightly changed in brightness.

SIFT works by looking for areas in the image with strong changes in intensity. It then describes each feature with a vector, which helps with feature matching between images. SIFT is robust and works well for object recognition and 3D reconstruction. However, SIFT can be slow because it creates high-dimensional feature descriptors. It also struggles with large changes in lighting.

| Algorithm | Strengths | Weaknesses |
|---|---|---|
| SIFT | Robust to scale and rotation, good for feature matching and recognition | Slow, less reliable with big lighting changes |

Feature detection and feature matching are key steps in many computer vision applications, such as motion tracking and robotic navigation.
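Once each feature is described by a vector, matching comes down to comparing vectors. The sketch below shows nearest-neighbor matching with a ratio test, an idea from Lowe's SIFT paper that is not described above and is included here as an assumption about how matches are typically filtered. The descriptors are toy 4-dimensional vectors; real SIFT descriptors have 128 dimensions.

```python
import numpy as np

def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Match rows of desc_a to rows of desc_b by Euclidean distance.

    A match is kept only if the best distance is clearly smaller than the
    second best (ratio test). desc_b needs at least two rows.
    """
    matches = []
    for i, d in enumerate(desc_a):
        dists = np.linalg.norm(desc_b - d, axis=1)
        order = np.argsort(dists)
        best, second = order[0], order[1]
        if dists[best] < ratio * dists[second]:
            matches.append((i, int(best)))
    return matches

# Toy descriptors (made up for the example).
desc_a = np.array([[1.0, 0.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0, 0.0],
                   [0.5, 0.5, 0.0, 0.0]])  # ambiguous: equally close to two candidates
desc_b = np.array([[0.0, 1.0, 0.0, 0.0],
                   [1.0, 0.0, 0.0, 0.0],
                   [0.0, 0.0, 1.0, 0.0]])

matches = match_descriptors(desc_a, desc_b)
print(matches)  # [(0, 1), (1, 0)]
```

The third descriptor is rejected because its two closest candidates are equally far away, which is the kind of ambiguous match the ratio test is meant to discard.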

Segmentation

Segmentation divides an image into different parts, making it easier to analyze. This process helps computer vision systems separate objects from the background or from each other. There are two main types: semantic segmentation and instance segmentation.

- Semantic segmentation labels each pixel in the image with a class, such as "car" or "road."

- Instance segmentation goes further by separating each object, even if they belong to the same class.

Image segmentation helps with object detection, feature extraction, and localization. For example, in medical imaging, segmentation can highlight tumors or organs. In self-driving cars, segmentation helps the system understand where the road, cars, and pedestrians are.

Many computer vision algorithms use segmentation as a key step. Deep learning models, especially convolutional neural networks, have improved segmentation accuracy. These models can learn complex features and handle difficult images.

Segmentation is important for tasks that need precise localization and recognition. It also helps with feature matching and object detection in crowded scenes.
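The difference between the two segmentation types can be shown with a classical stand-in. In the sketch below, a brightness threshold plays the role of semantic segmentation (every pixel is "object" or "background"), and connected-component labeling plays the role of instance segmentation (each separate object gets its own id). Modern systems usually use deep networks for both; the threshold value and the function name `label_instances` are assumptions made for the example.

```python
from collections import deque

import numpy as np

def label_instances(mask):
    """Give each 4-connected blob in a boolean mask its own integer label."""
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=np.int32)
    count = 0
    for y in range(h):
        for x in range(w):
            if mask[y, x] and labels[y, x] == 0:
                count += 1
                labels[y, x] = count
                queue = deque([(y, x)])
                while queue:  # breadth-first flood fill
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and labels[ny, nx] == 0:
                            labels[ny, nx] = count
                            queue.append((ny, nx))
    return labels, count

# Two bright objects on a dark background.
image = np.zeros((6, 8), dtype=np.uint8)
image[1:3, 1:3] = 220
image[3:5, 5:7] = 240

semantic_mask = image > 128                          # one class: "object"
labels, num_objects = label_instances(semantic_mask)  # one id per object
print(num_objects)  # 2
```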

Object Detection

Object detection finds and locates objects in an image. The algorithm draws a box around each object and labels it. Object detection combines feature detection, segmentation, and localization. It is used in many areas, such as security cameras, self-driving cars, and industrial inspection.

Popular object detection algorithms include YOLO, SSD, and Faster R-CNN. Each algorithm balances speed, accuracy, and computational cost. For example, YOLOv3 has been reported to run faster and more efficiently than SSD and Faster R-CNN on the Microsoft COCO dataset. YOLO suits real-time applications because it predicts boxes and labels in a single pass over the image while keeping precision and recall high and false positives low.

| Algorithm | Speed | Accuracy | Best Use Case |
|---|---|---|---|
| YOLO | High | High | Real-time detection |
| SSD | Medium | Medium | Multi-scale detection |
| Faster R-CNN | Low | High | High-precision tasks |

Metrics such as mean Average Precision (mAP), Intersection over Union (IoU), and Detection Error Rate (DER) help measure how well object detection algorithms work. Lower error rates and higher IoU scores mean better localization and recognition.
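Intersection over Union is simple enough to compute by hand. The sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)` tuples, which is one common convention but not the only one; the function name `iou` is chosen for the example.

```python
def iou(box_a, box_b):
    """Intersection over Union for two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if there is one.
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])

    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

predicted = (0, 0, 10, 10)
ground_truth = (5, 5, 15, 15)
print(round(iou(predicted, ground_truth), 3))  # 0.143 (overlap 25, union 175)
```

A detection is usually counted as correct when its IoU with a ground-truth box exceeds a chosen threshold, and mAP is then built on top of those counts.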

Deep Learning

Deep learning has transformed computer vision. Deep learning models, especially convolutional neural networks, can learn features directly from raw images. These models handle complex tasks like segmentation, object detection, and feature extraction with high accuracy.

Deep learning-based computer vision algorithms outperform traditional methods in many areas. For example, deep edge detection models beat older algorithms and even human vision in some tests. Deep learning also powers advanced segmentation, object detection, and feature matching systems.

Deep learning models, such as YOLOv5 and YOLOX, run on edge devices like NVIDIA Jetson Nano and Google Coral Dev Board. These models balance accuracy, speed, and power use, making them practical for real-world computer vision applications.

Deep learning continues to push the limits of what computer vision systems can do. It improves feature detection, segmentation, and object recognition in many fields.

Implementation in Practice

Software Tools (OpenCV, Scikit-image)

Many developers use OpenCV and Scikit-image for embedded vision projects. OpenCV stands out because it uses optimized C++ code and supports hardware acceleration. This makes it fast and suitable for real-time computer vision tasks. OpenCV also works well with multi-core processors and has a large community for support. Scikit-image, on the other hand, is a Python-only library built on top of NumPy. It offers a simple interface and easy installation, which helps beginners get started quickly. However, Scikit-image may run slower than OpenCV, especially for large or complex tasks. It focuses on high-quality image processing algorithms but has fewer features and less third-party support.

- OpenCV runs faster and supports real-time applications.

- Scikit-image is easier to use but may have performance overhead.

- OpenCV has more functions and better community support.

- Scikit-image offers high-quality algorithms for image processing.

Both libraries help users build AI-powered embedded vision solutions. The choice depends on the project’s needs and the user’s experience.

Programming Languages

Python and C++ are the most popular languages for embedded vision. Python is easy to learn and read. Many beginners choose Python because it works well with libraries like Scikit-image and OpenCV. C++ gives more control and speed, which helps with AI optimization in computer vision. Developers often use C++ for real-time or resource-limited embedded vision systems. Some projects use both languages together, combining Python’s simplicity with C++’s power.

Getting Started

Beginners can start with simple projects, such as detecting shapes or colors in images. They can install OpenCV or Scikit-image using pip or conda. Many online tutorials and guides show step-by-step instructions. A basic example in Python looks like this:

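```python
import cv2

# Load the image from disk (cv2.imread returns None if the file cannot be read).
image = cv2.imread('sample.jpg')
if image is None:
    raise FileNotFoundError('Could not read sample.jpg')

# Convert from OpenCV's default BGR color order to grayscale.
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

# Show the result until any key is pressed, then close the window.
cv2.imshow('Gray Image', gray)
cv2.waitKey(0)
cv2.destroyAllWindows()
```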

Tip: Start with small projects and build up skills. Try using both OpenCV and Scikit-image to see which fits best for different embedded vision tasks.
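A first color-detection project might look like the sketch below. It uses only NumPy on a synthetic image so that it runs without a camera or image file. The channel thresholds and the function name `find_red_region` are assumptions; with OpenCV installed, `cv2.inRange` can build a similar mask from lower and upper color bounds.

```python
import numpy as np

def find_red_region(image_bgr):
    """Return the bounding box (x_min, y_min, x_max, y_max) of strongly red pixels, or None.

    Expects channels in BGR order, the order OpenCV uses by default.
    """
    b = image_bgr[..., 0].astype(int)
    g = image_bgr[..., 1].astype(int)
    r = image_bgr[..., 2].astype(int)
    mask = (r > 150) & (g < 100) & (b < 100)  # hypothetical thresholds for "red"
    if not mask.any():
        return None
    ys, xs = np.nonzero(mask)
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())

# Black 50x50 image with a red square (BGR = 0, 0, 255).
image = np.zeros((50, 50, 3), dtype=np.uint8)
image[10:20, 30:40] = (0, 0, 255)

print(find_red_region(image))  # (30, 10, 39, 19)
```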

With practice, anyone can create computer vision applications for embedded vision. These skills open doors to AI-powered embedded vision solutions in many fields.

Applications

Industrial Automation

Industrial automation uses algorithm machine vision systems to improve precision and quality control. These systems analyze each image from assembly lines to detect defects and sort objects. Factories use segmentation to separate products from the background. Machine vision systems use feature matching to compare each object with a standard model. This process reduces waste and errors. Robotics with embedded vision increases assembly line speed and accuracy. AI and machine learning help with predictive maintenance by finding features that signal equipment problems. High-quality lenses capture clear images, making feature extraction and matching more reliable. The rise of electric vehicles creates new needs for inspection and battery monitoring, where embedded vision supports both object recognition and localization.

Machine vision systems in industrial automation help companies save money and improve safety.
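Comparing each object with a standard model can be approximated, in its simplest form, by pixel differencing against a known-good reference image. Note that this swaps in plain image subtraction rather than the feature matching described above, and it assumes the part is already aligned with the reference. The tolerances and the name `differs_from_reference` are made up for the example.

```python
import numpy as np

def differs_from_reference(image, reference, pixel_tol=30, max_bad_fraction=0.01):
    """Flag a part whose image deviates too much from a known-good reference.

    Returns (is_defective, bad_fraction). Both tolerances are hypothetical.
    """
    diff = np.abs(image.astype(np.int16) - reference.astype(np.int16))
    bad_fraction = float(np.mean(diff > pixel_tol))
    return bad_fraction > max_bad_fraction, bad_fraction

reference = np.full((50, 50), 128, dtype=np.uint8)

okay_part = reference + 5          # slight, uniform lighting change
scratched_part = reference.copy()
scratched_part[20:30, 20:30] = 255  # 100 of 2500 pixels changed

print(differs_from_reference(okay_part, reference))       # (False, 0.0)
print(differs_from_reference(scratched_part, reference))  # (True, 0.04)
```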

Healthcare

Healthcare relies on machine vision for medical imaging, surgical assistance, and patient monitoring. Hospitals use segmentation to highlight features in MRI and CT scans. Algorithms detect illness signals in real time by analyzing image features. Robotic surgery systems use embedded vision to guide precise movements. Patient monitoring systems track changes in features such as skin color or movement, alerting staff to early signs of trouble. The demand for automation in healthcare grows as more clinics adopt cloud-based solutions and smart cameras. Machine vision improves diagnosis accuracy and speeds up treatment by matching features in medical images to known patterns.

Autonomous Vehicles

Autonomous vehicles depend on machine vision for safe navigation. These vehicles use segmentation to separate lanes, cars, and pedestrians in each image. Feature detection and matching help the system recognize objects and track their movement. Embedded vision hardware processes images quickly, supporting real-time decisions. Studies show that computer vision techniques like edge detection and feature matching enable lane detection and steering control. Deep learning and LiDAR sensors improve feature extraction, but even simple algorithms can support effective autonomous driving. Machine vision reduces accidents and helps people with disabilities by providing reliable object recognition and localization.

Consumer Uses

Consumers benefit from machine vision in many devices. Smartphones use embedded vision for face recognition and photo enhancement. Home security cameras use segmentation and feature matching to detect objects and alert users. Smart appliances use image analysis to identify features like food freshness or object presence. Gaming systems use feature detection and matching for motion tracking. These applications rely on fast, accurate image processing and robust feature extraction. Embedded vision makes these features possible in small, affordable devices.

Algorithm machine vision systems help machines see and understand images. These systems drive automation and quality checks in many industries. The global market reached $13.89 billion in 2024 and could grow to $22.42 billion by 2029. 2D and 3D vision systems, along with AI, make factories smarter and reduce errors. Beginners can start with open-source tools like OpenCV. Online courses and tutorials offer easy ways to learn. Machine vision will shape the future of robotics, healthcare, and daily life.

FAQ

What is the main purpose of an algorithm machine vision system?

An algorithm machine vision system helps machines see and understand images. It uses computer programs to find important details in pictures or videos. These systems support tasks like sorting, inspecting, and recognizing objects.

Can beginners use machine vision tools without coding experience?

Many beginners start with simple tools and tutorials. Open-source libraries like OpenCV offer step-by-step guides. Some platforms provide drag-and-drop interfaces. Anyone can experiment with basic projects and learn as they go.

How do machine vision systems differ from regular cameras?

A regular camera only captures images. A machine vision system analyzes those images using algorithms. It can detect objects, measure sizes, and make decisions based on what it sees.

What industries use machine vision the most?

Factories, hospitals, car makers, and electronics companies use machine vision. These systems help with quality checks, medical imaging, self-driving cars, and smart devices.

Are machine vision systems expensive to set up?

Costs vary. Some systems use affordable cameras and open-source software. Large factories may invest in advanced hardware. Beginners can start with low-cost kits and free tools.
