Adversarial training strengthens a machine vision system by preparing it for adversarial attacks. During training, you expose the system to adversarial examples, which are inputs deliberately crafted to deceive a model, so that it learns to recognize and resist such manipulations. The result is a system that behaves more reliably under attack and in other challenging conditions. Adversarial machine learning, the discipline that studies these attacks and defenses, plays a critical role in improving the security and robustness of AI technologies.

Key Takeaways

- Adversarial training helps machine vision systems by showing them tricky examples. This makes them better at handling attacks.

- Adding tricky examples to training makes models stronger. This helps them work well in real-life tasks like healthcare and self-driving cars.

- Adversarial training makes systems tougher, but it takes more time and resources and can slightly lower accuracy on clean inputs. It’s important to balance robustness against training cost and clean accuracy.

- Generative adversarial networks (GANs) can create extra data for training. This can improve accuracy, which matters for high-stakes tasks.

- Researchers continue to study adversarial training to counter new threats. This helps keep AI safe and reliable for everyone.

Adversarial Examples in Machine Vision

Definition of Adversarial Examples

Adversarial examples are inputs intentionally crafted to mislead machine learning models. These inputs often appear normal to humans but cause significant errors in machine vision systems. For instance, an image of a stop sign might be altered slightly so that a machine vision system misclassifies it as a speed limit sign. This discrepancy arises because machines process visual data differently than humans. Adversarial examples exploit this gap, making them a critical focus in adversarial machine learning.

Adversarial images fool machine classifiers while remaining easy for humans to interpret, which highlights how differently humans and machines perceive images. That gap is exactly what makes adversarial examples a serious concern in practical applications.
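To make the stop-sign intuition concrete, the sketch below applies the Fast Gradient Sign Method (FGSM), a standard one-step attack that the text above does not name, to a toy logistic-regression classifier. The four-pixel "image", the weights, and the budget `eps=0.2` are hypothetical values chosen for illustration. A real vision model would obtain the input gradient through automatic differentiation rather than the closed form used here.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a toy logistic-regression classifier."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: one step of size eps along sign(dLoss/dx)."""
    p = predict(w, b, x)
    # For binary cross-entropy on a linear logit, dLoss/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    # Move every pixel by at most eps, then clip to the valid range [0, 1].
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]


# Hypothetical weights and a four-pixel "image" of class 1.
w, b = [2.0, -1.0, 1.5, -0.5], -0.5
x, y = [0.6, 0.4, 0.5, 0.3], 1

x_adv = fgsm(w, b, x, y, eps=0.2)
print(round(predict(w, b, x), 3), round(predict(w, b, x_adv), 3))  # 0.711 0.475
```

Each pixel moves by at most 0.2, yet the predicted probability of class 1 drops from about 0.71 to about 0.48, so the label flips.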

Exploiting Vulnerabilities in Machine Vision Systems

Adversarial attacks exploit weaknesses in machine vision systems by targeting their decision-making processes, and they often need only minimal resources: a small, carefully chosen change to the input can be enough. Researchers described ℓp-norm adversarial examples, whose perturbations are bounded in an ℓp norm, as early as 2013, and models remain vulnerable to them today. Physical adversarial attacks, such as placing stickers on road signs, show how attackers can exploit these systems in real-world scenarios. Even naturally occurring, unmodified images can confuse models, revealing how widespread these vulnerabilities are.
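An ℓp-norm adversarial example is an input x' whose perturbation δ = x' − x satisfies ‖δ‖p ≤ ε for a small budget ε. The minimal sketch below (plain Python, hypothetical helper names) computes the three norms used most often, ℓ1, ℓ2, and ℓ∞, and shows how an attacker keeps a candidate inside an ℓ∞ budget by clipping each pixel to [x − ε, x + ε] and to the valid range [0, 1], assuming pixels are scaled to that range.

```python
import math


def lp_norm(delta, p):
    """ℓp norm of a perturbation; pass p=math.inf for the ℓ∞ (max-abs) norm."""
    if math.isinf(p):
        return max(abs(d) for d in delta)
    return sum(abs(d) ** p for d in delta) ** (1.0 / p)


def project_linf(x_adv, x, eps):
    """Clip x_adv into the ℓ∞ ball of radius eps around x, then into [0, 1]."""
    return [min(1.0, max(0.0, min(xi + eps, max(xi - eps, ai))))
            for ai, xi in zip(x_adv, x)]


delta = [0.03, -0.01, 0.0, 0.02]
for p in (1, 2, math.inf):
    print(p, round(lp_norm(delta, p), 4))

x = [0.5, 0.5, 0.5, 0.98]
print(project_linf([0.6, 0.45, 0.5, 1.2], x, eps=0.05))
```

The same perturbation can be "small" under one norm and larger under another, which is why papers always state both the norm and the budget.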

Adversarial machine learning research has shown that these vulnerabilities extend beyond vision systems. Similar issues have been identified in language models, robotic policies, and even superhuman Go programs. This underscores the importance of addressing these weaknesses to improve the reliability of machine vision systems.

Real-World Examples of Adversarial Attacks

Adversarial attacks have real-world implications across many domains. In medical imaging, researchers have studied factors that affect adversarial vulnerability in ophthalmology, radiology, and pathology. One key finding is that pre-training on ImageNet significantly increases the transferability of adversarial examples, even across different model architectures. This result highlights the need for robust defenses in critical applications like healthcare.

| Focus Area | Methodology | Key Findings |
|---|---|---|
| Medical Image Analysis | Study of unexplored factors affecting adversarial attack vulnerability in medical image analysis (MedIA) systems across three domains: ophthalmology, radiology, and pathology. | Pre-training on ImageNet significantly increases the transferability of adversarial examples, even with different model architectures. |

Adversarial machine learning research continues to address these challenges, with the goal of building systems that can withstand attacks and operate securely in real-world environments.

Mechanisms of Adversarial Training

Generating Adversarial Examples

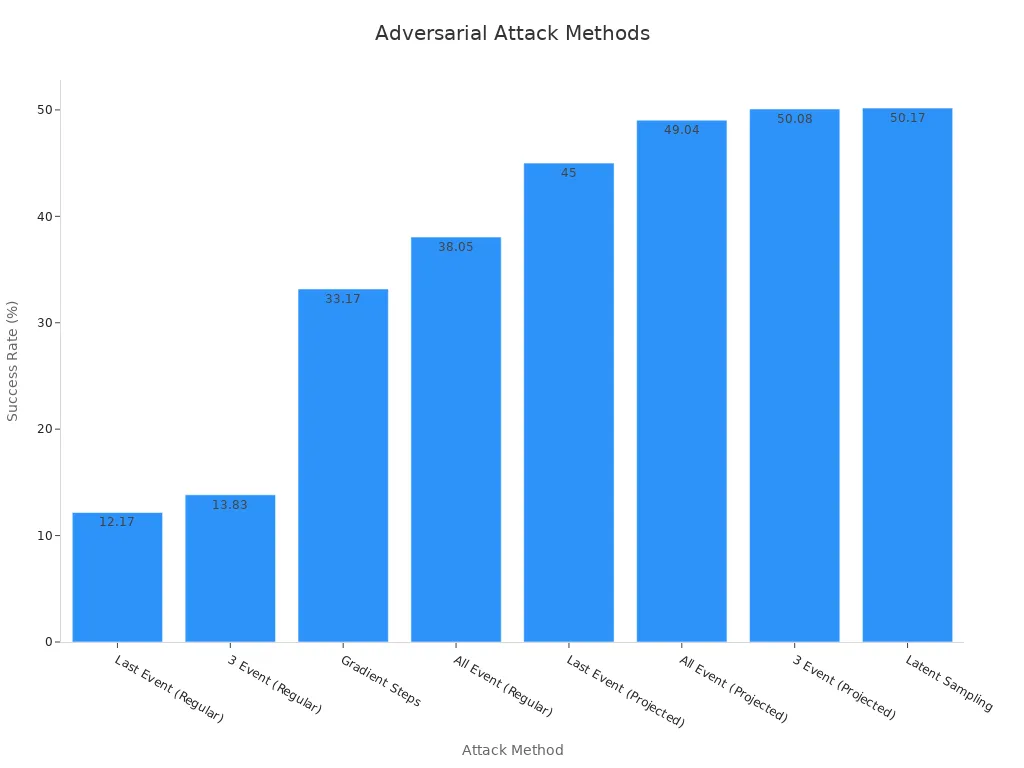

Adversarial examples are the foundation of adversarial training. They are crafted to exploit weaknesses in machine learning models, forcing them to make incorrect predictions. You can generate them with several methods, which differ in how they search for a perturbation and in how often they succeed. Regular attacks perturb the input space directly, whereas projected attacks and latent sampling operate in a model's latent space. Studies report that latent-space perturbations often yield more natural-looking adversarial examples because they preserve the structural relationships within the data.

| Attack Method | Attack Success Rate |
|---|---|
| Last Event (Regular) | 12.17% |
| 3 Event (Regular) | 13.83% |
| Gradient Steps | 33.17% |
| All Event (Regular) | 38.05% |
| Last Event (Projected) | 45% |
| All Event (Projected) | 49.04% |
| 3 Event (Projected) | 50.08% |
| Latent Sampling | 50.17% |

These results highlight the importance of choosing the right generation method: the projected and latent-sampling approaches reach roughly 45-50% success, compared with about 12-38% for the regular attacks and 33% for plain gradient steps. Introducing noise in the latent space can produce examples that are harder for models to detect, which makes them useful for testing and improving adversarial training in machine vision systems.
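The sketch below illustrates the "gradient steps" idea in the input space with a generic iterative attack, projected gradient descent (PGD) under an ℓ∞ budget, applied to a toy logistic-regression model. It is an illustration under stated assumptions, not any of the event-based or latent-space methods in the table. In PGD, "projected" refers to clipping back into the ε-ball, which may not match the meaning of the table's "Projected" rows. The weights, inputs, and step sizes are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def pgd_linf(w, b, x, y, eps, alpha, steps):
    """Iterative ℓ∞ attack: repeated signed gradient ascent on the loss."""
    x_adv = list(x)
    for _ in range(steps):
        p = predict(w, b, x_adv)
        grad = [(p - y) * wi for wi in w]  # dLoss/dx for binary cross-entropy
        # Small signed step that increases the loss ...
        x_adv = [a + alpha * ((g > 0) - (g < 0)) for a, g in zip(x_adv, grad)]
        # ... then project back into the eps-ball around x and into [0, 1].
        x_adv = [min(1.0, max(0.0, min(xi + eps, max(xi - eps, a))))
                 for a, xi in zip(x_adv, x)]
    return x_adv


w, b = [2.0, -1.0, 1.5, -0.5], -0.5  # hypothetical toy model
x, y = [0.6, 0.4, 0.5, 0.3], 1
one_step = pgd_linf(w, b, x, y, eps=0.2, alpha=0.05, steps=1)
ten_steps = pgd_linf(w, b, x, y, eps=0.2, alpha=0.05, steps=10)
print(predict(w, b, one_step) > 0.5, predict(w, b, ten_steps) > 0.5)  # True False
```

With the same budget, a single small step does not change the prediction, while repeated steps reach the edge of the ε-ball and flip it. This is why multi-step attacks are typically stronger, and more expensive, than one-step ones.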

Integrating Adversarial Examples into Training

Once adversarial examples are generated, they must be integrated into the training process. Typically, this means training (or retraining) the model on a mix of clean and adversarial samples, so that it sees inputs that target its own weaknesses and learns to resist them. Retraining classifiers with adversarial examples has been shown to significantly improve their robustness against perturbations and experimental noise.
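A minimal sketch of this mixing, assuming a toy logistic-regression model and full-batch gradient descent: at every epoch, each training input gets a fresh one-step (FGSM-style) adversarial counterpart computed against the current weights, and the update minimizes a weighted sum of clean and adversarial cross-entropy. The data, the 50/50 weighting (`adv_weight`), and the function names are hypothetical. The toy features are centered at zero, so no [0, 1] pixel clipping is applied.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """One-step sign-gradient attack on the cross-entropy loss."""
    err = predict(w, b, x) - y  # dLoss/dlogit
    return [xi + eps * ((err * wi > 0) - (err * wi < 0)) for xi, wi in zip(x, w)]


def adversarial_train(data, eps, lr=0.5, epochs=200, adv_weight=0.5):
    """Full-batch gradient descent on a mix of clean and FGSM examples.

    Per-example loss: (1 - adv_weight) * CE(x, y) + adv_weight * CE(x_adv, y),
    with x_adv regenerated against the current weights at every epoch and
    treated as a constant when differentiating with respect to the weights.
    """
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * dim, 0.0
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)
            for xs, weight in ((x, 1.0 - adv_weight), (x_adv, adv_weight)):
                err = predict(w, b, xs) - y
                gw = [g + weight * err * xi for g, xi in zip(gw, xs)]
                gb += weight * err
        w = [wi - lr * g / len(data) for wi, g in zip(w, gw)]
        b -= lr * gb / len(data)
    return w, b


# Hypothetical 2-D toy data: class 1 in the positive quadrant, class 0 mirrored.
pos = [[0.5, 0.3], [0.4, 0.6], [0.7, 0.2]]
data = [(x, 1) for x in pos] + [([-v for v in x], 0) for x in pos]
w, b = adversarial_train(data, eps=0.15)

clean_acc = sum((predict(w, b, x) > 0.5) == y for x, y in data) / len(data)
robust_acc = sum((predict(w, b, fgsm(w, b, x, y, 0.15)) > 0.5) == y
                 for x, y in data) / len(data)
print(clean_acc, robust_acc)
```

Regenerating the adversarial examples at every epoch matters: examples crafted against an earlier version of the model tend to lose their adversarial effect once the weights change.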

- Using generative adversarial networks (GANs) to produce additional training data increases its diversity. This is particularly useful in applications like autonomous driving, where diverse datasets improve model performance.

- Synthetic data generated by GANs also boosts the quality of datasets, aiding tasks such as defect detection and object recognition.

However, this process is not without challenges. For example, near phase transition points, flattening of the weight parameters can slightly decrease performance. Even so, in critical applications such as medical imaging and autonomous vehicles, the benefits of integrating adversarial examples generally outweigh these drawbacks.

Enhancing Model Robustness Through Adversarial Training

Adversarial training strengthens the robustness of machine vision systems by teaching them to handle adversarial examples effectively. Experimental results support this approach. In one tumor-classification study, models trained with adversarial examples showed significant gains in precision, recall, and F1-score, particularly for challenging classes such as "Pituitary" and "No Tumor." Confidence bar plots further showed that these models assigned lower, more balanced confidence to misclassified adversarial examples, suggesting more cautious behavior.
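Precision, recall, and F1-score are computed per class from true positives (TP), false positives (FP), and false negatives (FN): precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2 · precision · recall / (precision + recall). The short sketch below computes them for one class. The labels and predictions are made up and do not come from the study described above.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Per-class precision, recall, and F1 for one 'positive' label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Made-up labels for illustration only.
y_true = ["Pituitary", "Pituitary", "No Tumor", "Pituitary", "No Tumor"]
y_pred = ["Pituitary", "No Tumor", "No Tumor", "Pituitary", "Pituitary"]
print(precision_recall_f1(y_true, y_pred, "Pituitary"))
```

For "Pituitary" in this toy example, TP = 2, FP = 1, and FN = 1, so all three metrics equal 2/3.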

| Model | Attack Success Rate (ASR) | Adversarial Robustness |
|---|---|---|
| CLIP (clean) | 100% | Vulnerable |
| TeCoA2, FARE2, TGA2, AdPO2 | Varying degrees | More robust |
| ϵ=4/255 versions | Significantly higher | More robust |
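Attack success rate is usually the fraction of attacked inputs whose prediction the attack manages to change. Definitions differ between papers; the sketch below assumes an untargeted attack and counts only inputs the model classified correctly before the attack. Under such a definition, the 100% reported for clean CLIP would mean that every correctly classified input was flipped. The function name and the sample predictions are hypothetical.

```python
def attack_success_rate(y_true, clean_pred, adv_pred):
    """Untargeted attack success rate (ASR), in percent.

    Convention assumed here: only inputs the model classified correctly before
    the attack count, and an attack succeeds if it changes that prediction to
    a wrong label.
    """
    attacked = [(t, a) for t, c, a in zip(y_true, clean_pred, adv_pred) if c == t]
    if not attacked:
        return 0.0
    return 100.0 * sum(a != t for t, a in attacked) / len(attacked)


# Made-up predictions for four inputs; the last one was already misclassified.
print(attack_success_rate([0, 1, 2, 3], [0, 1, 2, 0], [1, 1, 0, 0]))
```

Here three inputs are attacked and two are flipped, giving an ASR of about 66.7%.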

| Attack Type | CLEVER Metric Improvement |
|---|---|
| Combined PGD/EAD/HSJA | Higher average lower bounds |
| PGD only | Lower average lower bounds |

Benchmark datasets such as CIFAR10, SVHN, and Tiny ImageNet are commonly used to measure the performance gains of adversarial training:

| Dataset | Description |
|---|---|
| CIFAR10 | 60,000 32×32 color images in 10 classes; widely used for evaluating image classification and adversarial robustness. |
| SVHN | Street View House Numbers: cropped digit images taken from Google Street View, commonly used for digit recognition tasks. |
| Tiny ImageNet | A smaller version of ImageNet (200 classes, 64×64 images), used for benchmarking in various machine learning tasks. |

By leveraging these datasets and integrating adversarial examples into the training process, you can build machine vision systems that are not only more robust but also more reliable in real-world applications.

Benefits and Challenges of Adversarial Training

Advantages of Adversarial Training in Machine Vision

Adversarial training offers several advantages that make it a valuable defense technique for machine learning systems. By exposing models to adversarial examples during training, you can significantly improve their ability to withstand adversarial attacks, which helps machine vision systems perform reliably even under adverse conditions. For instance, empirical studies report that adversarial training can improve object recognition accuracy by 40% when models encounter challenging scenarios.

Another key benefit is the ability to use generative adversarial networks for data augmentation. These networks generate synthetic adversarial examples, enriching the training dataset and improving model generalization. This technique is particularly useful in applications like autonomous driving, where diverse datasets are critical for accurate object detection. Additionally, adversarial training strengthens decision boundaries, reducing the likelihood of misclassification and improving overall performance metrics.

Tip: Incorporating adversarial examples into training not only boosts robustness but also prepares models to handle real-world threats effectively.

Limitations and Challenges in Implementation

Despite its advantages, adversarial training comes with notable challenges. One major issue is the computational overhead associated with generating adversarial examples and retraining models. Studies reveal that adversarial training increases training time by 200-500%, requiring advanced GPU clusters and higher energy consumption. This economic impact can be a barrier for organizations with limited resources.

| Factor | Reported Impact |
|---|---|
| Computational Overhead | Increases training time by 200-500% |
| Resource Intensity | Requires advanced GPU clusters |
| Economic Impact | Higher energy consumption and costs |
| Improvement in Object Recognition (benefit) | 40% improvement under adverse conditions |
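A back-of-the-envelope cost model shows where an overhead of this size can come from. Assuming (crudely) that each attack step costs about one forward and backward pass, the same as the weight update, K-step PGD adversarial training costs about K + 1 passes per batch instead of 1. The hypothetical helper below encodes that assumption; real overheads depend on the model, hardware, and implementation.

```python
def pgd_training_overhead(attack_steps: int) -> float:
    """Rough % increase in training time of K-step PGD adversarial training.

    Crude model (an assumption): each attack step and the weight update each
    cost about one forward+backward pass; data loading etc. are ignored.
    """
    standard_cost = 1                     # one pass per batch without attacks
    adversarial_cost = attack_steps + 1   # K attack passes + 1 weight update
    return 100.0 * (adversarial_cost - standard_cost) / standard_cost


for k in (1, 2, 5, 10):
    print(k, pgd_training_overhead(k))  # 100.0, 200.0, 500.0, 1000.0 (%)
```

Under this model, an increase of 200-500% corresponds to roughly two to five attack steps per batch, and each additional attack step adds roughly another 100%.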

Another limitation is the risk of overfitting when training with one-step attack methods such as FGSM. Large datasets like ImageNet exacerbate this issue, which can leave models vulnerable to black-box attacks. Generating strong adversarial examples, on the other hand, incurs high computational costs that can make adversarial training impractical for large-scale applications. Furthermore, adversarial training often increases margins along decision boundaries, which can reduce accuracy on clean inputs. Balancing robust accuracy and clean accuracy remains a significant challenge.

Researchers have proposed mitigation strategies to address these limitations. For example, Shafahi et al. developed a low-cost variant, often called "free" adversarial training, that reuses the gradient computed for each model update to also update the adversarial perturbation, so generating the attack adds little extra computation. Similarly, Zhang et al. suggested searching for the least adversarial examples among those that are confidently misclassified, which makes training more efficient.
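The gradient-reuse idea attributed to Shafahi et al. can be sketched as follows. This is a simplified, hypothetical rendering of the "free" adversarial training recipe (replay each minibatch several times and update both the weights and the perturbation from the same gradient) on a toy logistic-regression model, with the whole dataset treated as one minibatch. It is not the authors' code; the data, step sizes, and function names are illustrative assumptions.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def free_adversarial_train(data, eps, replays=4, epochs=50, lr=0.5):
    """Sketch of 'free' adversarial training for logistic regression.

    Each gradient computation is used twice: its weight part updates the model
    and its input part updates the perturbation. The whole dataset is treated
    as one minibatch that is replayed `replays` times per epoch, and each
    example keeps (warm-starts) its perturbation across replays and epochs.
    """
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    deltas = [[0.0] * dim for _ in data]
    for _ in range(epochs):
        for _ in range(replays):
            gw, gb, new_deltas = [0.0] * dim, 0.0, []
            for (x, y), d in zip(data, deltas):
                xa = [xi + di for xi, di in zip(x, d)]
                err = sigmoid(sum(wi * xi for wi, xi in zip(w, xa)) + b) - y
                # One backward pass gives dL/dw = err * xa and dL/dx = err * w.
                gw = [g + err * xi for g, xi in zip(gw, xa)]
                gb += err
                new_deltas.append([max(-eps, min(eps, di + eps * sign(err * wi)))
                                   for di, wi in zip(d, w)])
            w = [wi - lr * g / len(data) for wi, g in zip(w, gw)]
            b -= lr * gb / len(data)
            deltas = new_deltas
    return w, b


# Hypothetical toy data: class 1 in the positive quadrant, class 0 mirrored.
pos = [[0.5, 0.3], [0.4, 0.6], [0.7, 0.2]]
data = [(x, 1) for x in pos] + [([-v for v in x], 0) for x in pos]
w, b = free_adversarial_train(data, eps=0.15)
# For a linear model with positive weights, x - eps is the worst case in the eps-ball.
worst_case_logits = [sum(wi * (xi - 0.15) for wi, xi in zip(w, x)) + b for x in pos]
print(all(z > 0 for z in worst_case_logits))
```

In the original recipe, the number of epochs is divided by the number of replays so that the total cost roughly matches standard training; here `epochs=50` and `replays=4` simply give 200 updates.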

Balancing Robustness and Performance in Machine Vision Models

Balancing robustness and performance is a critical aspect of adversarial training. While adversarial training enhances model resilience against adversarial attacks, it can sometimes compromise accuracy on clean data. You need to carefully manage this trade-off to ensure machine learning systems perform well across diverse scenarios.

Empirical research highlights various techniques for achieving this balance. For instance, the Delayed Adversarial Training with Non-Sequential Adversarial Epochs (DATNS) method optimizes the trade-offs between efficiency and robustness. This approach allows models to maintain high performance metrics while improving their ability to resist adversarial threats.

Quantitative assessments further illustrate the impact of adversarial training on model performance. For example, studies comparing LeNet-5 and AlexNet-8 show that adversarial training maintains high accuracy on clean datasets while improving robustness against perturbations. However, performance can drop or fluctuate at higher levels of adversarial noise, particularly for the smaller LeNet-5.

| Metric | LeNet-5 Performance | AlexNet-8 Performance |
|---|---|---|
| Training Set Accuracy | Close to 1.0 with small perturbations; drops below 0.7 with perturbations of 9.0 and 11.0 | Consistently close to 1.0 |
| MNIST-Test Accuracy | Remains close to 1.0 with perturbations below 9.0; fluctuates with higher perturbations | Consistently close to 1.0 |
| MNIST-C Random Robustness | Slight variations around 0.9 with small perturbations; decreases with higher perturbations | High level with perturbations < 3.5; decreases with higher perturbations but remains > 0.8 |

To further enhance this balance, researchers suggest differentiating between misclassified and correctly classified inputs during adversarial training. This approach minimizes the impact of adversarial examples on clean data accuracy while improving robustness. By adopting these strategies, you can build machine vision systems that are both resilient and high-performing.
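One simple way to treat misclassified and correctly classified inputs differently is to weight a robustness term by how wrong the clean prediction is. The sketch below does this for a binary classifier: it adds to the adversarial cross-entropy a KL-divergence term between the clean and adversarial predictions, scaled by 1 − p_y(x), the model's missing confidence in the true class on the clean input. This mirrors, in simplified form, the idea behind MART (Wang et al., 2020), but it is not that method's exact loss; the function names and `lam=1.0` are hypothetical.

```python
import math


def bernoulli_kl(p: float, q: float, tiny: float = 1e-12) -> float:
    """KL(Bern(p) || Bern(q)), with probabilities clamped away from 0 and 1."""
    p = min(max(p, tiny), 1 - tiny)
    q = min(max(q, tiny), 1 - tiny)
    return p * math.log(p / q) + (1 - p) * math.log((1 - p) / (1 - q))


def misclassification_aware_loss(p_clean, p_adv, y, lam=1.0):
    """Adversarial cross-entropy plus a consistency term that is weighted
    up for inputs the model gets wrong on clean data.

    p_clean / p_adv: model probability of class 1 on the clean / adversarial input.
    """
    p_true_clean = p_clean if y == 1 else 1.0 - p_clean
    p_true_adv = p_adv if y == 1 else 1.0 - p_adv
    weight = 1.0 - p_true_clean  # small if confidently correct, large if misclassified
    adv_ce = -math.log(max(p_true_adv, 1e-12))
    return adv_ce + lam * weight * bernoulli_kl(p_clean, p_adv)


# Same adversarial prediction, different clean correctness (hypothetical values).
correct = misclassification_aware_loss(p_clean=0.9, p_adv=0.6, y=1)
wrong = misclassification_aware_loss(p_clean=0.3, p_adv=0.6, y=1)
print(round(correct, 3), round(wrong, 3))
```

The misclassified input incurs the larger penalty even though its KL term is smaller, because its weight is 0.7 instead of 0.1.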

Note: Balancing robustness and performance requires careful consideration of training methods and datasets. Leveraging advanced techniques can help you achieve optimal results.

Applications of Adversarial Training in Machine Vision Systems

Securing Facial Recognition Systems

Facial recognition systems play a vital role in security and authentication. However, they are vulnerable to adversarial attacks, which can manipulate images to deceive these systems. Adversarial training helps you secure these systems by exposing them to adversarial examples during the learning process. This approach strengthens their ability to identify manipulated inputs and maintain accuracy. For instance, adversarial training can improve the system’s resilience against spoofing attempts, such as altered facial images or masks. By enhancing robustness, you help facial recognition systems remain reliable in real-world scenarios.

Enhancing Object Detection in Autonomous Vehicles

Object detection is critical for the safe operation of autonomous vehicles. Adversarial training can significantly improve detection systems by preparing them to handle adversarial perturbations. One proposed robust training framework, for instance, enhances the resilience of LiDAR-based detectors such as PointPillars against attacks. It uses a novel method to generate diverse adversarial examples, which boosts both detection accuracy and robustness, and the improvements can transfer to other detectors. By adopting adversarial training, you can help autonomous vehicles detect objects accurately, even in challenging conditions.

Key advancements include:

- Enhanced resilience of PointPillars against adversarial attacks.

- Improved detection accuracy through diverse adversarial examples.

- Potential transferability of robustness to other detection models.

Improving Accuracy in Medical Imaging

Medical imaging systems require high accuracy to support critical diagnoses. Adversarial training addresses vulnerabilities in these systems by improving their performance under adversarial conditions. For example, a base model might achieve less than 20% accuracy under attacks like PGD or FGSM. With adversarial training, the same model can maintain up to 80% accuracy under these attacks at a perturbation budget of ϵ=1/255. Even at a stronger budget of ϵ=3/255, the adversarially trained model retains about 40% accuracy, while models trained without adversarial examples collapse to zero. These gains help medical imaging systems remain reliable even when adversarial inputs are present.
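The budgets ϵ=1/255 and ϵ=3/255 are easiest to read on the 8-bit scale, assuming, as the /255 suggests, that pixel values are normalized to [0, 1] by dividing by 255. Then ϵ=1/255 lets each pixel move by at most one intensity level, and ϵ=3/255 by at most three. The hypothetical helper below applies a clipped perturbation and converts the result back to 8-bit values.

```python
def perturb_8bit(pixels, delta, eps):
    """Apply an ℓ∞-bounded perturbation to 8-bit pixels rescaled to [0, 1]."""
    x = [p / 255.0 for p in pixels]
    d = [max(-eps, min(eps, di)) for di in delta]          # enforce |delta_i| <= eps
    x_adv = [min(1.0, max(0.0, xi + di)) for xi, di in zip(x, d)]
    return [round(v * 255) for v in x_adv]                 # back to integer levels


pixels = [0, 128, 255, 60]
print(perturb_8bit(pixels, [0.5, -0.5, 0.5, 0.002], eps=3 / 255))  # [3, 125, 255, 61]
```

With `eps=3/255`, the requested changes of ±0.5 are clipped to three intensity levels, the saturated pixel stays at 255, and the small 0.002 change (about half a level) rounds up by one level.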

Adversarial training also enhances image generation techniques, which are essential for creating synthetic medical datasets. These datasets improve the training of machine learning models, leading to better diagnostic outcomes. By adopting adversarial training, you can build medical imaging systems that are both accurate and resilient.

Adversarial training plays a vital role in strengthening machine vision systems against adversarial attacks. By enhancing resilience, it helps these systems remain reliable in real-world applications. Recent studies highlight its effectiveness; in one intrusion detection task, for example, F1 scores reached 0.941. Ongoing innovation is still essential. Some research shows that adversarial training can improve robustness with little or no loss of accuracy, which becomes more important as AI applications expand. Future work, such as that explored at the AdvML-Frontiers workshop, aims to address emerging threats and ethical challenges. These efforts will shape the future of secure and trustworthy AI technologies.

FAQ

What are adversarial attacks in machine vision systems?

Adversarial attacks involve manipulating input data to deceive machine vision models. These attacks exploit weaknesses in the model’s decision-making process, causing it to misclassify images or make incorrect predictions. For example, a slightly altered stop sign image might trick a model into identifying it as a speed limit sign.

How does adversarial training improve model robustness?

Adversarial training exposes your model to adversarial examples during the learning process. This helps the model recognize and resist such manipulations. By learning from these examples, the system becomes more resilient to attacks and performs reliably in real-world scenarios.

Is adversarial training suitable for all machine vision applications?

Adversarial training benefits applications requiring high reliability, like autonomous vehicles, facial recognition, and medical imaging. However, its computational demands and potential trade-offs in accuracy make it less practical for resource-constrained or less critical systems.

Can adversarial training prevent all types of attacks?

No, adversarial training improves resilience but cannot eliminate all vulnerabilities. Attackers continuously develop new techniques. You should combine adversarial training with other security measures, like anomaly detection and model monitoring, for comprehensive protection.

Does adversarial training affect model accuracy on clean data?

Adversarial training can slightly reduce accuracy on clean data due to the trade-off between robustness and performance. However, advanced techniques, like balancing adversarial and clean examples during training, help minimize this impact while maintaining strong defenses against attacks.

See Also

Understanding Few-Shot And Active Learning In Machine Vision

Investigating The Role Of Synthetic Data In Machine Vision

Comparing Firmware-Based Machine Vision With Traditional Approaches

Grasping Object Detection Techniques In Contemporary Machine Vision