A depth map machine vision system gives robots the power to see and understand the world in three dimensions. Depth maps allow robots to estimate the location and orientation of objects, even in cluttered spaces, which helps with precise 3D vision and depth perception. Robots use 3D vision for accurate navigation and safe object manipulation. Machine vision with depth perception enables robots to recognize, segment, and interact with objects in real time. The table below shows how 3D vision systems now lead the machine vision market, making depth map technology key in robotics.

| Metric | Value/Description |
|---|---|
| 3-D Vision System Market Share | Approximately 63% of the machine vision market in 2023, indicating dominant adoption over other vision technologies |
| Machine Vision Market Size | USD 11.79 billion in 2023; projected to reach USD 23.78 billion by 2032 |
| CAGR | 6.97% to 8.11% growth rate forecasted from 2024 to 2033 |
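As a quick sanity check on the table, the growth rate implied by the two market-size figures can be computed directly. This is a minimal sketch; the function name `cagr` is introduced here for illustration, and the nine-year span is taken from the 2023 and 2032 dates in the market-size row.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.08 means 8% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market-size figures from the table, in USD billions.
implied_rate = cagr(11.79, 23.78, years=2032 - 2023)
print(f"Implied CAGR 2023-2032: {implied_rate:.2%}")
```

The result comes out at roughly 8.1% per year, in line with the upper end of the CAGR range quoted in the table.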

Robots rely on depth map machine vision systems for accurate 3D scene understanding, which supports safe movement and reliable object handling.

Key Takeaways

- Depth map machine vision systems give robots a 3D view, helping them understand object positions and distances accurately.

- Robots use depth maps for safe navigation, avoiding obstacles, and moving precisely in complex environments.

- Depth perception improves robots’ ability to pick up, move, and handle objects with greater accuracy and efficiency.

- These systems enhance safety by detecting people and hazards, allowing robots to react quickly and prevent accidents.

- Despite technical and cost challenges, advances in AI and sensors are making depth map vision more accessible and powerful for robotics.

Depth Map Machine Vision System

What Is a Depth Map

A depth map is a special image that shows how far objects are from a camera or sensor. Each pixel in a depth map holds a value that tells the distance between the sensor and a point in the scene. This information helps robots understand the shape and position of objects in three dimensions. In a depth map machine vision system, the depth map acts as a guide for robots to move, pick up items, or avoid obstacles. Unlike regular images, which only show color and brightness, depth maps add a third dimension—distance. This makes them essential for tasks that need precise measurements and spatial awareness.
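To make "each pixel holds a distance" concrete, the sketch below turns a depth map into 3D points using the standard pinhole camera model. It assumes the depth values are distances along the optical axis in meters and that the camera intrinsics (`fx`, `fy`, `cx`, `cy`) are known from calibration; the numbers used are illustrative, not from any particular sensor.

```python
import numpy as np


def depth_to_points(depth_m: np.ndarray, fx: float, fy: float,
                    cx: float, cy: float) -> np.ndarray:
    """Back-project an HxW depth map into an HxWx3 array of XYZ points (meters)."""
    h, w = depth_m.shape
    # u is the column index and v is the row index of every pixel.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return np.stack([x, y, depth_m], axis=-1)


# Toy example: a flat wall 2 m away seen by a tiny 4x6 sensor.
depth = np.full((4, 6), 2.0)
points = depth_to_points(depth, fx=500.0, fy=500.0, cx=2.5, cy=1.5)
print(points.shape)   # (4, 6, 3)
print(points[0, 0])   # XYZ of the top-left pixel
```

Each pixel becomes a point in the camera's coordinate frame, which is the form most navigation and grasping code works with.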

Depth map machine vision systems use different sensors and cameras to create these maps. Some common types include stereo vision cameras, structured light cameras, and time-of-flight sensors. These devices work together with software to turn raw data into high-resolution depth maps. The result is a detailed 3D view that robots can use for navigation, inspection, and manipulation.

How It Works

A depth map machine vision system can create depth maps in several ways. Stereo vision uses two cameras placed side by side. The system compares the two images and measures how far each point shifts between them; that shift is used to calculate depth, much as human eyes do. Monocular depth estimation uses only one camera. It relies on machine learning models that infer the distance of objects from patterns and features in a single image. Monocular depth estimation is useful when space or cost limits the use of multiple cameras.

Other depth estimation techniques include structured light and time-of-flight. Structured light projects a pattern onto objects and measures how the pattern changes. Time-of-flight sensors send out light and measure how long it takes to bounce back. These methods allow for real-time depth mapping, which is important for robots that need to react quickly.
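The time-of-flight principle reduces to one line of arithmetic: light travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below assumes an idealized direct time measurement; the function names are illustrative, and real sensors often measure phase shift instead of raw time.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target given the measured round-trip time of a light pulse."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Inverse relation: how long light takes to reach the target and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


t = round_trip_time_s(1.5)
print(f"Round trip for 1.5 m: {t * 1e9:.2f} ns")
print(f"Recovered distance: {tof_distance_m(t):.3f} m")
```

A target 1.5 m away returns light in about 10 nanoseconds, which shows why time-of-flight sensors need very precise timing electronics.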

| Technique | Process Description | Application Examples |
|---|---|---|
| Stereo Vision | Uses two cameras to compare images and calculate depth | Precise object placement |
| Monocular Depth Estimation | Uses one camera and AI to estimate depth from a single image | Mobile robots, drones |
| Structured Light | Projects patterns and analyzes distortions to infer depth | Industrial inspection |
| Time-of-Flight | Measures light travel time to create 3D maps | Dynamic object tracking |

A depth map machine vision system combines these methods and sensors to give robots a complete 3D view. This helps with depth estimation, object detection, and safe movement in complex environments.

Depth Estimation in Robotics

Stereo Vision

Stereo vision uses two cameras to capture images from slightly different angles. The system compares these images to find matching points and calculates the difference, called disparity. This process helps the robot create a depth map of the scene. Stereo vision provides accurate depth estimation when lighting is good and surfaces have enough texture. Robots use stereo vision for tasks like pick and place, navigation, and real-time manipulation.

- Stereo vision offers geometrically grounded depth estimation, making it reliable for precise tasks.

- The method requires careful camera calibration and works best in controlled environments.

- Stereo vision struggles with low-texture surfaces, poor lighting, and dynamic scenes.

- Hardware costs and setup complexity can limit its use in some robots.

- Depth estimation error increases with distance, so stereo vision works best at short to medium ranges.
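The link between disparity, depth, and range-dependent error can be sketched with the standard rectified-stereo relations: depth is `Z = f * B / d`, and a small disparity error `Δd` produces a depth error of roughly `Z² / (f * B) * Δd`. The focal length, baseline, and quarter-pixel matching error below are assumed values chosen for illustration.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  disparity_error_px: float = 0.25) -> float:
    """First-order depth error caused by a given disparity (matching) error."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


FOCAL_PX = 700.0     # assumed focal length in pixels
BASELINE_M = 0.12    # assumed distance between the two cameras

print(depth_from_disparity(FOCAL_PX, BASELINE_M, 42.0))  # 2.0 m
for z in (1.0, 2.0, 4.0):
    err_mm = depth_error_m(FOCAL_PX, BASELINE_M, z) * 1000
    print(f"depth {z:.0f} m -> approx. error {err_mm:.1f} mm")
```

Because the error grows with the square of the distance, doubling the range quadruples the expected error, which is why stereo works best at short to medium ranges. A longer baseline or focal length reduces the error at every distance.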

| Use Case | Typical Stereo Vision Tasks |
|---|---|
| Manufacturing | Quality inspection, automation |
| Autonomous Vehicles | Obstacle detection, navigation |
| Robotics Automation | Pick and place, manipulation |

Monocular Depth Estimation

Monocular depth estimation uses a single camera to predict depth from one image. Deep learning models, such as convolutional neural networks and multi-scale vision transformer architectures, have made monocular depth estimation more accurate. These models learn to infer depth by analyzing patterns and features in images. Monocular depth estimation is flexible and cost-effective, making it suitable for dynamic and unstructured environments.

- Monocular depth estimation faces challenges like scale ambiguity and limited information.

- Recent advances in deep learning, including dense prediction and transformer models, have improved accuracy.

- Monocular depth estimation adapts well to mobile robots and drones.

- The method is less precise than stereo vision but offers greater adaptability.

Monocular depth estimation now supports real-time applications, such as robot navigation and 3D reconstruction. High-resolution monocular metric depth estimation helps robots grasp objects and move safely.
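Scale ambiguity means a monocular model may get the relative ordering of depths right while being wrong about absolute distances. One common workaround, sketched here as an assumption and not as the method of any specific model, is to fit a scale and shift that align the prediction with a few trusted metric measurements (for example from a range sensor). The function name and the synthetic data are illustrative.

```python
import numpy as np


def align_scale_shift(relative_depth: np.ndarray, metric_depth: np.ndarray,
                      mask: np.ndarray) -> tuple[float, float]:
    """Least-squares fit of metric ~= scale * relative + shift over masked pixels."""
    x = relative_depth[mask]
    y = metric_depth[mask]
    design = np.stack([x, np.ones_like(x)], axis=1)
    (scale, shift), *_ = np.linalg.lstsq(design, y, rcond=None)
    return float(scale), float(shift)


# Synthetic example: the "true" depth is 3 * relative + 0.5 meters.
rng = np.random.default_rng(0)
relative = rng.random((8, 8))
metric = 3.0 * relative + 0.5

# Pretend only a sparse grid of pixels has a trusted metric measurement.
known = np.zeros((8, 8), dtype=bool)
known[::2, ::2] = True

scale, shift = align_scale_shift(relative, metric, known)
metric_estimate = scale * relative + shift
print(f"scale={scale:.3f}, shift={shift:.3f}")
```

With noise-free synthetic data the fit recovers the scale and shift used to generate it; with real predictions the alignment is only approximate.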

Depth Estimation Model

A depth estimation model processes visual data to create a depth map. In robotics, depth estimation models use supervised, semi-supervised, or self-supervised learning. These models include encoder-decoder networks, transformers, and hybrid approaches. Some models, like KineDepth, combine monocular depth estimation with robot kinematics for improved accuracy.

- Depth estimation models often use large datasets for training and adapt online to new environments.

- Recent models treat depth estimation as both classification and regression, improving precision.

- Dense prediction and multi-scale vision transformer models help robots achieve better depth estimation.

- Depth estimation models support tasks like object manipulation, navigation, and safety.
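One way to read "both classification and regression" is the following sketch: the network classifies each pixel into a set of depth bins, and the final depth is the probability-weighted average of the bin centers, which yields a continuous value. This is an illustrative assumption about how the two can be combined, not a description of a specific published model; the logits here are hand-made stand-ins for network output.

```python
import numpy as np


def depth_from_bin_logits(logits: np.ndarray, bin_centers_m: np.ndarray) -> np.ndarray:
    """Convert HxWxK per-bin scores into an HxW depth map in meters.

    A softmax turns the scores into per-pixel probabilities (classification);
    the expected value over the bin centers gives a continuous depth (regression).
    """
    shifted = logits - logits.max(axis=-1, keepdims=True)  # numerical stability
    probs = np.exp(shifted)
    probs /= probs.sum(axis=-1, keepdims=True)
    return probs @ bin_centers_m


bin_centers = np.array([1.0, 2.0, 3.0])

# Equal scores for every bin: the expected depth is the mean of the centers.
uniform_depth = depth_from_bin_logits(np.zeros((2, 2, 3)), bin_centers)

# A strong score for the last bin pulls the estimate toward 3 m.
peaked = np.zeros((2, 2, 3))
peaked[..., 2] = 50.0
peaked_depth = depth_from_bin_logits(peaked, bin_centers)
print(uniform_depth[0, 0], peaked_depth[0, 0])
```

The weighted average lets the output fall between bin centers, so the depth map is smoother than a pure per-pixel classification would be.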

Robots benefit from depth estimation models that deliver accurate, real-time depth maps. These models enable robots to operate in complex, changing environments.

Key Benefits

3D Perception

Robots need to see the world in three dimensions to work safely and efficiently. Depth maps give robots this ability by showing the distance to every visible point in a scene. With 3D vision, robots can understand the shape, size, and position of objects. This helps them recognize objects even when they overlap or sit in cluttered spaces. Depth perception lets robots tell which objects are closer or farther away, making their actions more accurate.

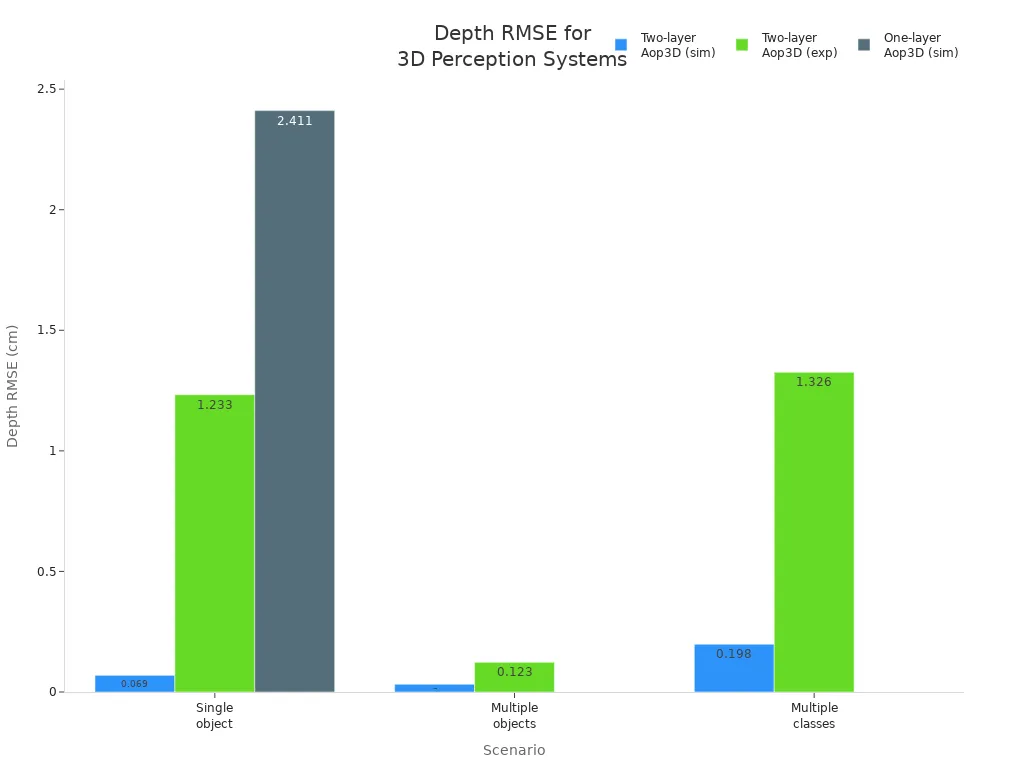

A recent study compared different 3D vision systems. The results showed that systems using depth encoding, like the two-layer Aop3D, preserved object shapes and edges much better than systems without it. The table below shows how well these systems performed in different scenarios, in simulation (sim) and in physical experiments (exp):

| Scenario | System Type | Depth RMSE (cm) | % of Depth Range | Notes |
|---|---|---|---|---|
| Single object (character “H”) | Two-layer Aop3D | 0.069 (sim) | 0.35% | Accurate shape and edge preservation; intensity linearly related to depth |
| Single object (character “H”) | Two-layer Aop3D | 1.233 (exp) | 6.17% | Experimental validation with diffractive surfaces |
| Single object (character “H”) | One-layer Aop3D | 2.411 (sim) | 12.06% | Without depth encoding, depth images are less smooth and intensity not monotonic |
| Multiple objects (toy buildings, truck) | Two-layer Aop3D with spatial multiplexing | 0.032 (sim) | 1.07% | Millimeter-level spatial and depth resolution; complex shapes accurately reproduced |
| Multiple objects (toy buildings, truck) | Two-layer Aop3D with spatial multiplexing | 0.123 (exp) | 4.09% | Experimental results confirm high accuracy in multi-object scenarios |
| Multiple object classes (“T”, “H”, “U”) | Two-layer Aop3D | 0.198 (sim) | 0.99% | Consistent depth estimation across different object shapes |
| Multiple object classes (“T”, “H”, “U”) | Two-layer Aop3D | 1.326 (exp) | 6.63% | Experimental results compatible with single-object setup |

Depth maps help robots generalize across many object types and scenes. Because distance is measured directly, they can also reduce the processing needed to infer 3D structure from 2D images alone. With depth perception, robots achieve better 3D recognition and can work in more complex environments.
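The two accuracy columns in the table above are related by simple arithmetic: the RMSE is the root of the mean squared depth error, and the percentage divides that RMSE by the depth range of the scene. The sketch below shows both steps. The 20 cm range is an assumption inferred from the ratio of the two columns in several rows; the source does not state it.

```python
import math


def depth_rmse(predicted_cm: list[float], actual_cm: list[float]) -> float:
    """Root-mean-square error between predicted and true depths (same units)."""
    if not predicted_cm or len(predicted_cm) != len(actual_cm):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predicted_cm, actual_cm)]
    return math.sqrt(sum(squared) / len(squared))


def percent_of_range(rmse_cm: float, depth_range_cm: float) -> float:
    """Express an RMSE as a percentage of the scene's depth range."""
    return 100.0 * rmse_cm / depth_range_cm


ASSUMED_RANGE_CM = 20.0  # assumption: inferred from the table, not stated in it

example_rmse = depth_rmse([10.0, 12.0], [10.0, 14.0])
table_percent = percent_of_range(1.326, ASSUMED_RANGE_CM)
print(f"example RMSE: {example_rmse:.3f} cm")
print(f"1.326 cm over a {ASSUMED_RANGE_CM:.0f} cm range: {table_percent:.2f}%")
```

Reporting the error as a share of the depth range makes results from scenes of different sizes easier to compare.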

Navigation

Robots must move safely and avoid obstacles. 3D vision systems use depth maps to help robots see their surroundings in detail. Stereo cameras and monocular depth estimation both create depth maps that show the distance to objects. This information helps robots plan paths and avoid collisions.

- Robots use computer vision to process images from cameras or depth sensors.

- Stereo cameras estimate distances by comparing two images, creating a 3D map.

- Robots use these maps to detect obstacles and plan safe routes.

- Techniques like SLAM (Simultaneous Localization and Mapping) help robots build maps and find their location at the same time.

- Robots combine depth data with other sensors, such as lidar or IMUs, for better navigation.

- These systems work well even when GPS signals are weak or blocked.

Robots with 3D vision can move through busy or changing environments. They adjust their paths in real time, even on narrow or uneven surfaces. Depth perception gives robots the spatial awareness needed for safe and reliable navigation.
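As a rough illustration of how a depth map feeds path planning, the sketch below projects depth pixels onto a top-down occupancy grid that a planner could search. It is a simplified assumption-laden example: every valid pixel is treated as an obstacle, so a real system would first discard floor and ceiling points, and the grid size, cell size, and intrinsics (`fx`, `cx`) are made-up values.

```python
import numpy as np


def occupancy_grid(depth_m: np.ndarray, fx: float, cx: float,
                   cell_m: float = 0.1, width_m: float = 4.0,
                   range_m: float = 5.0) -> np.ndarray:
    """Mark grid cells (rows = forward distance, cols = lateral offset) that contain points."""
    h, w = depth_m.shape
    u = np.tile(np.arange(w), (h, 1))                 # column index of every pixel
    valid = np.isfinite(depth_m) & (depth_m > 0) & (depth_m < range_m)
    z = depth_m[valid]                                # forward distance
    x = (u[valid] - cx) * z / fx                      # lateral offset (pinhole model)

    n_rows = int(round(range_m / cell_m))
    n_cols = int(round(width_m / cell_m))
    rows = np.floor(z / cell_m).astype(int)
    cols = np.floor((x + width_m / 2.0) / cell_m).astype(int)
    inside = (rows >= 0) & (rows < n_rows) & (cols >= 0) & (cols < n_cols)

    grid = np.zeros((n_rows, n_cols), dtype=bool)
    grid[rows[inside], cols[inside]] = True
    return grid


# Toy scene: nothing visible except a thin post about 2 m straight ahead.
depth = np.full((4, 6), np.inf)
depth[:, 3] = 2.05
grid = occupancy_grid(depth, fx=500.0, cx=2.5)
print(grid.shape, int(grid.sum()))
```

A planner can then treat occupied cells as blocked and search the remaining free cells for a route.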

Object Manipulation

Robots often need to pick up, move, or assemble objects. 3D vision and depth perception make these tasks much easier. Depth maps show the position and shape of objects, so robots can plan how to grasp or move them. Depth estimation models, including those using monocular depth estimation, help robots understand the 3D structure of their workspace.

- Researchers developed a depth-aware pretraining method that uses depth maps to improve how robots see and handle objects.

- Robots trained with this method completed more manipulation tasks successfully than those using only color images.

- The robots focused on important areas, such as tables, showing that depth perception helps them pay attention to the right spots.

- Real-world tests with a Franka Emika Panda robot proved that these methods work outside the lab.

- Adding information about the robot’s own movements made manipulation even more accurate.

- Adjusting the resolution of depth maps helped robots handle small objects better.

- Vision-based deep learning helps robots grasp and move objects.

- Eye-in-hand cameras give real-time feedback for precise manipulation.

- 3D vision systems improve pose estimation, making object handling more reliable.

With accurate depth estimation, robots can perform complex tasks, such as sorting, stacking, or assembling, with high efficiency.
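A very simple form of depth-guided picking can be sketched as follows: with a camera looking straight down at a table of known distance, any pixel noticeably closer than the table belongs to an object, and the centroid of those pixels is a candidate pick location. The top-down setup, the known table depth, and the function name are all assumptions for illustration; practical systems add segmentation and full pose estimation.

```python
import numpy as np


def find_pick_target(depth_m: np.ndarray, table_depth_m: float,
                     min_height_m: float = 0.01):
    """Return (row, col, height_m) of the object centroid, or None if the table is empty."""
    height = table_depth_m - depth_m                  # height of each pixel above the table
    mask = np.isfinite(depth_m) & (height > min_height_m)
    if not mask.any():
        return None
    rows, cols = np.nonzero(mask)
    return float(rows.mean()), float(cols.mean()), float(height[mask].max())


# Toy scene: table 0.80 m below the camera with a 5 cm tall box on it.
scene = np.full((5, 5), 0.80)
scene[1:4, 2:4] = 0.75
target = find_pick_target(scene, table_depth_m=0.80)
empty = find_pick_target(np.full((5, 5), 0.80), table_depth_m=0.80)
print(target, empty)
```

The pixel centroid can be converted to a 3D position with the camera intrinsics, and the height tells the gripper how far to descend.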

Safety

Safety is a top priority in robotics. Depth map machine vision systems help robots create safety zones and detect moving objects, including people. These systems use depth perception to measure how close objects or people are to the robot. If something gets too close, the robot can slow down or stop to prevent accidents.
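The slow-down-or-stop behavior can be sketched as a speed limit that depends on the nearest measured distance. The zone radii and the linear ramp below are illustrative assumptions, not values from any safety standard; a certified system would derive them from a formal risk assessment.

```python
def nearest_valid_distance(distances_m: list[float]) -> float:
    """Smallest positive reading; zero or negative values are treated as invalid pixels."""
    valid = [d for d in distances_m if d > 0]
    return min(valid) if valid else float("inf")


def speed_scale(nearest_m: float, stop_m: float = 0.5, slow_m: float = 2.0) -> float:
    """Fraction of full speed allowed: 0 inside the stop zone, 1 beyond the slow zone."""
    if nearest_m <= stop_m:
        return 0.0
    if nearest_m >= slow_m:
        return 1.0
    return (nearest_m - stop_m) / (slow_m - stop_m)  # linear ramp between the zones


readings = [3.2, 0.0, 1.25, 4.0]          # 0.0 marks a pixel with no depth reading
nearest = nearest_valid_distance(readings)
print(nearest, speed_scale(nearest))      # 1.25 0.5
```

Ramping the speed instead of switching straight from full speed to a stop keeps motion smooth while still guaranteeing a halt inside the inner zone.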

Depth cameras and real-time location systems work together to tell the difference between people and harmless objects, like carts. This reduces unnecessary stops and keeps work running smoothly. Lowering the camera resolution can speed up processing, which shortens reaction times and allows smaller safety zones.

Vision-guided robots with 3D vision monitor their environment continuously. They spot hazards, enforce safety rules, and adapt to changes quickly. For example, one computer vision system raised safety compliance from under 25% to over 90% and cut unsafe incidents to zero. Robots with depth perception can track moving objects, predict obstacles, and avoid accidents in places like factories, warehouses, and hospitals.

Robots with depth maps and 3D vision protect people and property by reacting quickly to danger and following safety protocols.

Applications

Autonomous Robots

Autonomous robots use depth map machine vision systems to understand their environment in 3D. These robots rely on depth maps for tasks such as navigation, obstacle detection, and scene reconstruction. Autonomous vehicles, including delivery robots and warehouse autonomous mobile robots (AMRs), use 3D vision to move safely and avoid collisions with other vehicles or objects. They perform SLAM (Simultaneous Localization and Mapping) to build maps and plan routes. The scene reconstruction module helps these robots create accurate 3D models of their surroundings, which improves decision-making and efficiency.

- Autonomous vehicles use depth maps for real-time obstacle detection.

- Robots perform scene reconstruction to update their navigation paths.

- AMRs automate material transport and increase productivity in warehouses.

Depth map systems allow autonomous vehicles to operate in busy environments without special infrastructure. They adapt to changing scenes and maintain high safety standards.

Industrial Automation

Industrial automation depends on depth map machine vision for quality control, assembly, and logistics. Factories use 3D reconstruction to inspect products, detect defects, and guide robotic arms. Pick and place robots use depth maps to recognize, locate, and manipulate objects with high accuracy. These robots handle tasks such as palletization, bin picking, and assembly.

| Industry | Application Example | Benefit |
|---|---|---|
| Automotive | 3D inspection of vehicle parts | Improved accuracy |
| Electronics | Defect detection using 3D vision | Higher quality control |
| Logistics | Automated sorting with scene reconstruction | Faster processing |
| Food & Beverage | Packaging inspection | Reduced waste |

North America leads in adopting advanced machine vision systems, with products like the Keyence WM-6000 and Cognex DataMan 380 supporting high-speed, accurate measurement and inspection. These systems help factories meet strict quality and safety standards while reducing costs.

Human-Robot Interaction

Depth map machine vision systems improve how robots interact with people. These systems capture 3D spatial information, allowing robots to recognize gestures, body movements, and facial expressions. Robots use this data to understand user intent and respond naturally. In homes and workplaces, robots use scene reconstruction to identify objects and follow pointing gestures.

- Robots detect both static and dynamic gestures for better communication.

- Depth maps help robots personalize responses and adapt to different users.

- 3D reconstruction supports safe collaboration in shared spaces.

Robots in assistive roles use depth maps to help elderly or disabled users. They interpret gestures for commands like picking up objects or moving to a location. Machine learning improves gesture recognition and makes human-robot interaction more intuitive and safe.

Challenges

Technical Limits

Depth map machine vision systems bring many technical challenges to robotics. Engineers must synchronize stereo cameras tightly to avoid depth errors. When the scene or the robot is moving, even a small timing mistake, such as a 1-millisecond sync error, shifts objects between the two images and can cause large mistakes in measuring distance. The system’s depth accuracy also depends on the distance between the cameras (the baseline) and the quality of the lenses. A longer baseline improves depth resolution, but it also makes the setup more complex.
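The effect of a sync error can be estimated to first order. If an object moves sideways (parallel to the baseline) between the two exposures, it shifts by a few pixels in one image; that shift looks like extra disparity and turns into a depth error. The sketch below assumes rectified stereo and purely lateral motion, and all numeric values are illustrative.

```python
def sync_pixel_shift(focal_px: float, speed_m_s: float,
                     sync_error_s: float, depth_m: float) -> float:
    """Apparent image shift (pixels) of an object that moves sideways during the sync error."""
    return focal_px * speed_m_s * sync_error_s / depth_m


def sync_depth_error_m(focal_px: float, baseline_m: float, speed_m_s: float,
                       sync_error_s: float, depth_m: float) -> float:
    """First-order depth error when that shift is mistaken for disparity."""
    shift_px = sync_pixel_shift(focal_px, speed_m_s, sync_error_s, depth_m)
    return depth_m ** 2 / (focal_px * baseline_m) * shift_px


# Assumed setup: 700 px focal length, 10 cm baseline, object 2 m away moving at 2 m/s.
shift = sync_pixel_shift(700.0, 2.0, 0.001, 2.0)
error = sync_depth_error_m(700.0, 0.10, 2.0, 0.001, 2.0)
print(f"shift: {shift:.2f} px, depth error: {error * 100:.1f} cm")
```

Under these assumptions a 1-millisecond offset already produces an error of a few centimeters at 2 m, and the error grows with object speed and distance and shrinks with a longer baseline.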

- Synchronization: Cameras must capture images at the exact same time.

- Resolution and Depth Accuracy: Higher image resolution and better algorithms improve results.

- Camera Overlap and Lens Selection: Proper overlap and lens choice are necessary for accurate stereo vision.

- Calibration and Rigidity: The camera setup must stay stable and calibrated.

- Hardware Synchronization: Some camera interfaces, such as FireWire, can make it easier to synchronize multiple cameras than USB or GigE, which often rely on external hardware triggers.

- Lens Compatibility: Not all lenses fit every camera, and lens quality affects performance.

Lighting conditions and surface properties also affect depth measurement. Shiny or transparent surfaces can confuse the system. Advanced cameras, such as MotionCam-3D Color, improve scanning of difficult surfaces, but these solutions add cost and complexity. Robots need more computing power to process 3D data, which increases system demands.

Note: Depth map systems must balance accuracy, speed, and reliability to work well in real-world environments.

Cost and Integration

Cost and integration present major barriers for many robotics projects. Depth-enabled sensors cost much more than standard cameras. The initial investment for hardware and software can be high. Installation often requires experts or special training, which adds to expenses.

- High computational demands make real-time processing difficult on many robots.

- Environmental changes, such as shadows or glare, reduce depth accuracy.

- Calibration can drift over time due to vibration or temperature changes.

- Integrating new systems with existing hardware and software is complex.

- Deep learning methods need more data and processing power, which may not fit on smaller robots.

Depth-enabled sensors remain less common and more expensive than regular RGB cameras. However, new deep learning techniques, such as using Pix2Pix networks to estimate depth from single images, offer hope for reducing costs. These advances may help smaller businesses adopt 3D vision without large investments.

Robots must overcome both technical and financial challenges to use depth map machine vision systems effectively.

Depth map machine vision systems give robots the ability to see and understand the world in 3D. These systems improve navigation, object handling, and safety in many industries. New trends include AI-driven 3D vision, edge computing for real-time processing, and advanced sensors like LiDAR and event-based cameras.

- Robots now use deep learning for better accuracy and faster decisions.

- Experts expect smarter, more energy-efficient robots that work well in changing environments.

Depth map technology will shape the future of robotics, making advanced solutions possible for many fields.

FAQ

What is the main advantage of using depth maps in robotics?

Depth maps give robots a 3D view of their environment. This helps robots understand where objects are located. Robots can move safely and handle objects more accurately.

Can depth map systems work in low light conditions?

Some depth map systems, like time-of-flight sensors, work well in low light because they emit their own illumination. Others, such as passive stereo vision, may struggle when lighting is poor. Choosing the right sensor is important for each environment.

How do depth maps improve robot safety?

Depth maps help robots detect people and obstacles. Robots use this information to avoid collisions. Safety zones can be set up so robots stop or slow down when something gets too close.

Are depth map machine vision systems expensive?

Depth map systems usually cost more than regular cameras. The price depends on the type of sensor and the features needed. New technology and AI models may help lower costs in the future.
