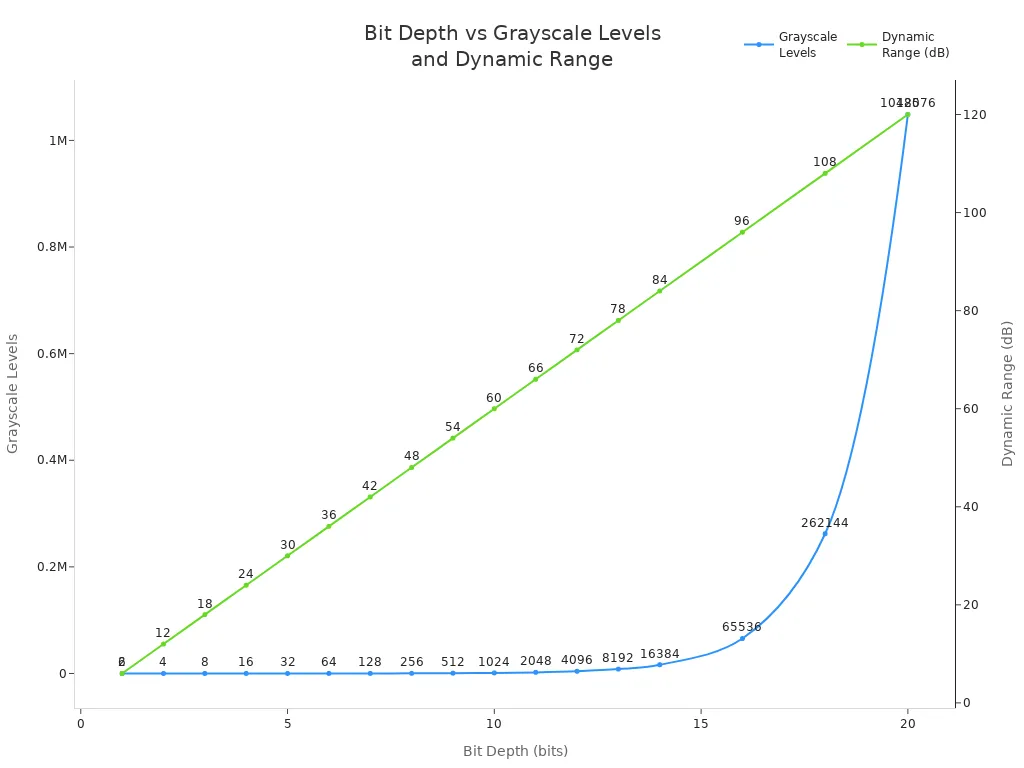

Pixel bit depth plays a key role in any machine vision system because it controls how much detail and accuracy each pixel can show. Bit depth is the number of binary digits used to represent the intensity of each pixel in a digital image. A higher bit depth lets a system capture finer differences in brightness and color, which leads to better image analysis and fewer visible artifacts. For example, an 8-bit machine vision system can represent 256 grayscale levels, while a 16-bit system can represent 65,536 levels, as shown below:

| Bit Depth (bits) | Number of Grayscale Levels | Theoretical Maximum Dynamic Range (dB) |
|---|---|---|
| 8 | 256 | 48 |
| 16 | 65,536 | 96 |

A machine vision system with a higher bit depth can capture subtle details that lower bit depths miss, improving image quality and inspection accuracy, provided the sensor itself can resolve those details.
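The level counts and decibel values in the table follow from two formulas: an N-bit pixel has 2^N levels, and the theoretical range in decibels is 20·log10(2^N), about 6.02 dB per bit. The Python sketch below reproduces them. The function names are illustrative, and the dB figure is a limit set by digitization, not a measured property of any particular sensor.

```python
import math

def gray_levels(bits: int) -> int:
    """Number of distinct codes an N-bit pixel can hold (2**N)."""
    return 2 ** bits

def quantization_range_db(bits: int) -> float:
    """Theoretical range representable by N bits, in dB: 20*log10(2**N)."""
    return 20 * math.log10(gray_levels(bits))

for bits in (8, 12, 16):
    print(f"{bits:>2}-bit: {gray_levels(bits):>6} levels, "
          f"{quantization_range_db(bits):.1f} dB")
```

Running it prints 48.2 dB for 8 bits, 72.2 dB for 12 bits, and 96.3 dB for 16 bits, which round to the table's values.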

Key Takeaways

- Pixel bit depth controls how much detail each pixel can show, with higher bit depths capturing finer differences in brightness and color.

- Choosing the right bit depth improves image quality and helps detect subtle defects, but an unnecessarily high bit depth wastes storage and slows processing.

- Higher bit depth increases image data size, which affects data transfer speed and system performance, so balance is key.

- Matching bit depth to the sensor’s capabilities and application needs ensures efficient and accurate machine vision results.

- Environmental factors and hardware limits influence the best bit depth choice, making calibration and system design important.

Pixel Bit Depth in Machine Vision Systems

What Is Bit Depth

Bit depth, sometimes called color depth, describes the number of bits that represent the color or intensity of each pixel in an image. In a machine vision system, bit depth determines how much information each pixel can store, and therefore how many distinct colors or grayscale levels the final image can contain. For example, an 8-bit image sensor can show 256 different intensity levels for each pixel. Scientific cameras often use higher bit depths to capture more subtle differences in light and color.

The image sensor in a camera collects light and converts it into an electrical signal. The analog-to-digital converter (ADC) then converts this signal into digital values. The bit depth sets how many steps the ADC uses to divide the signal: an N-bit ADC divides its input range into 2^N steps. More bits mean the camera can record finer changes in brightness or color. This is crucial for applications that need high accuracy, such as scientific research or industrial inspection.
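The conversion step can be illustrated with a model of an ideal ADC. This is a minimal sketch under simplifying assumptions (a perfectly linear converter, a hypothetical 1.0 V full-scale input, truncation rather than rounding); real camera ADCs also add offset, gain error, and noise. The function name `adc_code` is illustrative.

```python
def adc_code(voltage: float, full_scale: float, bits: int) -> int:
    """Ideal N-bit ADC: map 0..full_scale volts onto integer codes 0..2**N - 1.

    Uses truncation and clips out-of-range inputs to the valid code range.
    """
    levels = 2 ** bits
    code = int(voltage / full_scale * levels)  # index of the step the signal falls in
    return max(0, min(code, levels - 1))       # clip to 0..2**N - 1

# The same analog signal digitized at two bit depths (hypothetical 1.0 V full scale).
signal = 0.3  # volts
print(adc_code(signal, 1.0, 8))   # 76   (step size 1.0 / 256  = ~3.9 mV)
print(adc_code(signal, 1.0, 12))  # 1228 (step size 1.0 / 4096 = ~0.24 mV)
```

With 12 bits, each step is 16 times smaller than with 8 bits, so a smaller change in voltage moves the output to a different code.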

Bit depth does not increase the dynamic range of the sensor itself. Instead, it slices the available signal into smaller steps. For example, a sensor with a dynamic range of 4000:1 matches well with a 12-bit ADC, which provides 4096 levels. If the bit depth is too high for the sensor's range, the extra levels do not add useful information. Matching the bit depth to the sensor's capabilities keeps the machine vision system efficient.
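The matching rule in the example can be written as "the smallest N for which 2^N covers the sensor's dynamic range ratio." This is a rule-of-thumb sketch, assuming dynamic range is given as a ratio of the largest signal to the noise floor; the function name is illustrative.

```python
import math

def min_adc_bits(dynamic_range_ratio: float) -> int:
    """Smallest N such that 2**N levels cover the given dynamic range ratio."""
    return math.ceil(math.log2(dynamic_range_ratio))

sensor_dr = 4000  # 4000:1, the example ratio from the text
needed = min_adc_bits(sensor_dr)
print(needed, 2 ** needed)                              # 12 4096
print("surplus bits in a 16-bit mode:", 16 - needed)    # 4
```

The four surplus bits in a 16-bit mode subdivide a range the sensor cannot actually resolve, which is why they add data without adding information.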

Note: Bit depth measures the precision of color or intensity per pixel, not the range of colors the camera can detect (color gamut).

Typical Bit Depth Values

Most industrial and scientific cameras use standard bit depths. The most common values are 8, 10, and 12 bits. Each increase in bit depth doubles the number of intensity levels the camera can record. The number of levels is calculated as 2 raised to the power of the bit depth. For example, an 8-bit camera records 256 levels (2^8), while a 12-bit camera records 4096 levels (2^12). This exponential growth allows scientific cameras to capture very fine differences in light and color.

| Bit Depth | Value Range | Typical Applications |
|---|---|---|
| 8 bits | 0 to 255 | Visualization, fast previews, simple inspections |
| 10 bits | 0 to 1023 | Commercial image processing, analytics, post-processing |
| 12 bits | 0 to 4095 | Industrial image processing, analytics (most common) |

- 8-bit cameras: These cameras provide 256 gray levels. They work well for simple inspections, barcode reading, and high-speed tasks where fast processing is important.

- 12-bit cameras: These cameras offer 4096 gray levels. Most industrial applications use this bit depth because it balances detail and processing speed.

- 16-bit cameras: These cameras deliver 65,536 gray levels. Scientific cameras use this bit depth for high dynamic range scenes, precise measurements, and research.

The image sensor and ADC together set the bit depth for each camera. Scientific cameras often allow users to select the bit depth based on the needs of the application. For example, a machine vision system may use 8 bits for fast, simple tasks or 12 bits for detailed analysis. The sensor resolution and bit depth together define the total amount of data the camera produces, so higher bit depths increase data size, which can affect processing speed and storage needs.
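When 12-bit data must be reduced to 8 bits on the host for a fast task, one simple approach is to drop the four least significant bits. This is a minimal sketch of that approach, not a description of how any particular camera switches modes; real pipelines may instead round, apply a lookup table, or use a gain curve. The function name `to_8bit` is hypothetical.

```python
def to_8bit(value: int, source_bits: int) -> int:
    """Reduce an unsigned pixel value to 8 bits by keeping its 8 most significant bits."""
    if source_bits < 8:
        raise ValueError("source bit depth must be at least 8")
    if not 0 <= value < 2 ** source_bits:
        raise ValueError("value out of range for source bit depth")
    return value >> (source_bits - 8)  # discard the low-order bits

row_12bit = [0, 15, 16, 2048, 4095]
print([to_8bit(v, 12) for v in row_12bit])  # [0, 0, 1, 128, 255]
```

Every group of 16 adjacent 12-bit values collapses to one 8-bit value, which is exactly the fine detail an 8-bit image gives up in exchange for smaller, faster data.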

A machine vision system must match the bit depth to the signal level and the sensor's capabilities. For low-light tasks such as fluorescence imaging, where the weak signal occupies only part of the sensor's range, scientific cameras may use 8 or 12 bits. For brightfield imaging or scenes with high dynamic range, 16-bit scientific cameras provide the necessary detail. Choosing the right bit depth ensures the system captures all important information without wasting resources.

Bit Depth and Image Quality

Dynamic Range

Dynamic range describes the ratio between the brightest and darkest signals a camera can detect. In machine vision, dynamic range determines how well a system can capture details in both shadows and highlights. Bit depth plays a key role in how the camera represents this range. Each increase in bit depth allows the analog-to-digital converter to divide the signal into more discrete grayscale levels. For example, an 8-bit system provides 256 levels, while a 12-bit system offers 4096 levels. A 16-bit system can show 65,536 levels. This fine quantization helps the camera record subtle changes in brightness within the sensor’s dynamic range.

However, bit depth does not increase the actual dynamic range of the sensor. The sensor's physical properties and noise set the true limits. Even if a camera uses an 18-bit converter, noise may limit the measurable signal to about 1 part in 100,000. Increasing bit depth therefore refines how signals within the sensor's range are represented only down to the noise floor; beyond that point, the extra levels mostly record noise. For example, a camera with a dynamic range of 4000:1 matches well with a 12-bit mode. Using a higher bit depth, such as 16 bits, would not add useful information and could waste storage space.
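A common approximation is that the number of useful bits is log2 of the noise-limited dynamic range. The sketch below applies it to the text's 18-bit example and to a hypothetical sensor with a 20,000 e- full-well capacity and 5 e- read noise; those sensor values are assumptions chosen for illustration, and the model ignores shot noise at high signal levels.

```python
import math

def dynamic_range(full_well_e: float, read_noise_e: float) -> float:
    """Noise-limited dynamic range as a ratio: largest signal / noise floor (electrons)."""
    return full_well_e / read_noise_e

def effective_bits(dr_ratio: float) -> float:
    """Bits that carry information above the noise floor, approximated as log2(DR)."""
    return math.log2(dr_ratio)

# The text's example: an 18-bit converter limited by noise to ~1 part in 100,000.
adc_bits = 18
useful = effective_bits(100_000)
print(f"useful bits: {useful:.1f}, bits mostly recording noise: {adc_bits - useful:.1f}")

# Hypothetical sensor: 20,000 e- full well and 5 e- read noise give 4000:1.
dr = dynamic_range(20_000, 5)
print(dr, f"{effective_bits(dr):.1f} bits")
```

The first line reports about 16.6 useful bits, leaving roughly 1.4 bits of the 18-bit output below the noise floor; the hypothetical sensor needs about 12 bits, matching the 12-bit mode in the text.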

A higher dynamic range in machine vision systems brings several benefits:

- The system records usable detail in both the shadows and the highlights of the same scene.

- It detects subtle and low-contrast defects that standard systems might miss.

- Automated inspections become more consistent and repeatable, reducing human error.

- The system supports continuous operation, improving production efficiency.

- Quantitative data from these systems helps improve manufacturing processes over time.

Tip: Matching bit depth to the sensor’s dynamic range ensures efficient use of data and resources in a machine vision system.

Subtle Differences

Bit depth also affects the ability of a machine vision system to detect subtle differences in image intensity. Higher bit depth increases the number of intensity values each pixel can represent. This allows the camera to capture more detailed variations in brightness and contrast. For example, moving from 8 bits (256 levels) to 12 bits (4096 levels) lets the system detect much finer differences in pixel intensity.
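The effect can be shown with two hypothetical intensities that differ by 0.2% of full scale, passed through an ideal, noise-free quantizer. This is a sketch under that noise-free assumption; in a real camera the sensor noise must also be smaller than the difference for it to be detectable.

```python
def code(fraction_of_full_scale: float, bits: int) -> int:
    """Ideal truncating quantizer for a signal given as a fraction (0..1) of full scale."""
    levels = 2 ** bits
    return min(int(fraction_of_full_scale * levels), levels - 1)

background, defect = 0.500, 0.502  # hypothetical intensities, 0.2% of full scale apart
for bits in (8, 12):
    a, b = code(background, bits), code(defect, bits)
    verdict = "distinguishable" if a != b else "identical"
    print(f"{bits}-bit: {a} vs {b} -> {verdict}")
```

At 8 bits both intensities land on code 128, so the defect disappears; at 12 bits they map to 2048 and 2056 and remain separable.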

This improvement is critical for quality control tasks. In industrial inspection, higher bit depth helps reveal low-contrast defects that might go unnoticed with lower bit depth. In medical imaging, such as CT or MRI scans, higher bit depth enhances the visibility of small changes in tissue density, which supports more accurate diagnoses. The larger number of intensity values also reduces quantization noise and helps avoid loss of detail and artifacts such as banding.

Some key advantages of higher bit depth for detecting subtle differences include:

- More intensity values per pixel, capturing finer variations in the image.

- Finer intensity resolution and better contrast discrimination, which are vital for quality control.

- Reduced quantization noise, leading to cleaner and more accurate images.

- Enhanced ability to detect small defects or features, such as cracks or inclusions.

- Better support for advanced analysis, such as defect grading or tumor characterization.
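The reduction in quantization noise can be estimated with the standard model for an ideal uniform quantizer, in which the rounding error is spread evenly across one step (1 LSB), giving an RMS error of LSB/sqrt(12). The model assumes the signal varies over many steps; the sketch below (function name illustrative) shows that each extra bit halves the quantization noise, so 12 bits give one-sixteenth of the 8-bit value.

```python
import math

def quantization_noise_rms(full_scale: float, bits: int) -> float:
    """RMS error of an ideal uniform quantizer: one step (LSB) divided by sqrt(12)."""
    lsb = full_scale / 2 ** bits
    return lsb / math.sqrt(12)

for bits in (8, 12, 16):
    # Express the noise as a percentage of full scale.
    print(f"{bits:>2}-bit: {100 * quantization_noise_rms(1.0, bits):.4f} % of full scale")
```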

A machine vision system with the right bit depth can distinguish between very similar shades or intensities. This precision enables reliable detection of defects, supports automated grading, and improves overall inspection quality.

Data and Performance Impact

Data Size

Increasing bit depth in a machine vision system significantly raises image data transfer requirements. Data size grows linearly with bit depth: each additional bit adds one bit for every pixel in the image. For example, a 25-megapixel image (here 25 × 1024 × 1024 = 26,214,400 pixels) at 8-bit depth produces about 210 million bits per image. At 10 bits, the data size rises to about 262 million bits. This 25% increase directly affects image data transport and the system's data rate.

| Pixel Bit Depth | Data Size per 25 MP Image (bits) | Interface Data Rate Available (Gbit/s) | Max Frame Rate Supported by Interface (fps) |
|---|---|---|---|
| 8 bit | 209,715,200 | 19.2 | 91.6 |
| 10 bit | 262,144,000 | 19.2 | 73.2 |

A higher bit depth means more data per frame. The available data rate of the interface, such as CoaXPress, limits how fast the system can move this data: the maximum frame rate is the interface data rate divided by the number of bits per frame. As the data size grows, the maximum frame rate drops. For instance, moving from 8-bit to 10-bit depth reduces the frame rate from 91.6 fps to 73.2 fps. This relationship shows why engineers must consider both image data transport and data rate when designing high-performance systems.
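The figures in the table can be reproduced with a multiplication and a division. This sketch assumes that 25 MP means 25 × 1024 × 1024 pixels (the count implied by the table's 8-bit row), that pixels are tightly packed with no padding, and that protocol overhead is ignored; the function names are illustrative.

```python
def bits_per_frame(pixels: int, bit_depth: int) -> int:
    """Raw payload per image, assuming tightly packed pixels with no padding."""
    return pixels * bit_depth

def max_frame_rate(link_gbit_s: float, pixels: int, bit_depth: int) -> float:
    """Upper bound on frames per second a link can carry (ignores protocol overhead)."""
    return link_gbit_s * 1e9 / bits_per_frame(pixels, bit_depth)

PIXELS_25MP = 25 * 1024 * 1024  # 26,214,400 pixels
for depth in (8, 10):
    print(depth, bits_per_frame(PIXELS_25MP, depth),
          round(max_frame_rate(19.2, PIXELS_25MP, depth), 1))
# 8 209715200 91.6
# 10 262144000 73.2
```

If a camera pads 10-bit pixels into 16-bit words instead of packing them, the payload per frame grows to 16 bits per pixel and the achievable frame rate falls further.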

Processing and Storage

Larger image data transfer volumes require more powerful processing and greater storage capacity. High bit depth images contain more detail, but they also slow down data transfer and increase the load on processors. This can affect real-time performance, especially in applications that demand fast decisions.

Key trade-offs include:

- Higher bit depth improves image quality and detail, which helps in tasks like defect detection.

- Larger files from higher bit depth slow down image data transfer and processing.

- Lower bit depth speeds up data transfer and reduces storage needs, but may lose important details.

- The system’s data rate and storage must match the chosen bit depth to avoid bottlenecks.

Advances in compression technology help manage these challenges. Adaptive compression algorithms and machine learning models can reduce file sizes while keeping important details. Lossless options such as TIFF with LZW or Deflate compression, or PNG, can store 16-bit images in smaller files without discarding any pixel values. These methods reduce storage and transfer load and make higher bit depths more practical for modern machine vision.
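PNG and TIFF's Deflate option both build on the DEFLATE algorithm, which Python's standard `zlib` module implements. The sketch below compresses a hypothetical 16-bit, 512 × 512 gradient and confirms that every value survives the round trip. A synthetic, noise-free gradient compresses far better than real camera images, whose sensor noise limits lossless compression ratios.

```python
import array
import zlib

# Hypothetical 16-bit image: a smooth horizontal gradient, 512 x 512 pixels.
width, height = 512, 512
pixels = array.array("H", (x * 128 for _ in range(height) for x in range(width)))
raw = pixels.tobytes()

compressed = zlib.compress(raw, level=9)   # DEFLATE, lossless
restored = array.array("H")
restored.frombytes(zlib.decompress(compressed))

print(len(raw), len(compressed))   # original vs. compressed size in bytes
print(restored == pixels)          # True: every 16-bit value is recovered exactly
```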

Tip: Always balance bit depth, image data transfer needs, and data rate limits to achieve the best system performance.

Choosing Bit Depth for Applications

Application Needs

Selecting the right bit depth for a machine vision system depends on the specific needs of the application, and it interacts with other sensor choices such as pixel size. Inspection tasks often require a sensor with small pixels to detect fine defects. Measurement applications need the pixel size to be about one-tenth of the required tolerance to ensure accurate results. Robotics may use adaptive resolution techniques that concentrate detail where it matters most. Bit depth determines how well the camera captures subtle differences in brightness, which is critical for tasks like photogrammetry or precise industrial measurement. In high-speed imaging, bit depth must be weighed against frame rate, because every extra bit raises the data rate.
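The one-tenth rule translates directly into a pixel count across the field of view. This sketch assumes the rule refers to the pixel's footprint on the object and ignores lens resolution and subpixel algorithms; the 50 mm field of view and 0.1 mm tolerance are hypothetical values, and the function name is illustrative.

```python
import math

def required_pixels(field_of_view_mm: float, tolerance_mm: float, ratio: float = 10.0) -> int:
    """Pixels needed across the field of view if each pixel covers tolerance/ratio of the object."""
    pixel_size_mm = tolerance_mm / ratio  # "about one-tenth of the required tolerance"
    return math.ceil(field_of_view_mm / pixel_size_mm)

# Hypothetical part: 50 mm field of view, 0.1 mm tolerance.
print(required_pixels(50.0, 0.1))  # 5000
```

Under these assumptions the sensor would need at least 5000 pixels along the measured axis, before bit depth is even considered.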

| Factor | Effect of Smaller Pixels | Effect of Larger Pixels | Application Considerations and Techniques |
|---|---|---|---|
| Pixel Size | Higher spatial resolution; detects finer details | Increased sensitivity by capturing more light | Inspection requires small pixels for detecting defects; measurement needs pixel size about one-tenth of tolerance; robotics may use adaptive resolution techniques |
| Sensitivity | Reduced due to fewer photons collected per pixel | Increased due to larger photon collection | Proper lighting and lens quality improve sensitivity across applications; robotics benefits from multi-layered tactile sensors for sensitivity enhancement |
| Resolution | Higher spatial resolution, better detail detection | Lower spatial resolution due to larger pixel size | Inspection and measurement demand high resolution; robotics may use adaptive foveation to focus resolution where needed |
| Environmental | N/A | N/A | Temperature and vibration affect sensor noise and image quality; cooling and vibration-dampening mounts mitigate these issues across all applications |
| Calibration | N/A | N/A | Regular calibration (e.g., Zhang's method) is critical for maintaining accuracy, especially in dynamic systems like robotics |
| Sensor Type | N/A | N/A | Area scan for static inspection; line scan for continuous measurement; 3D sensors for robotics and depth sensing |

Environmental factors such as temperature and vibration can increase sensor noise and blur images. Cooling systems and vibration-dampening mounts help keep the sensor stable. Regular calibration ensures the camera provides accurate data, which is especially important in robotics.

Hardware Limits

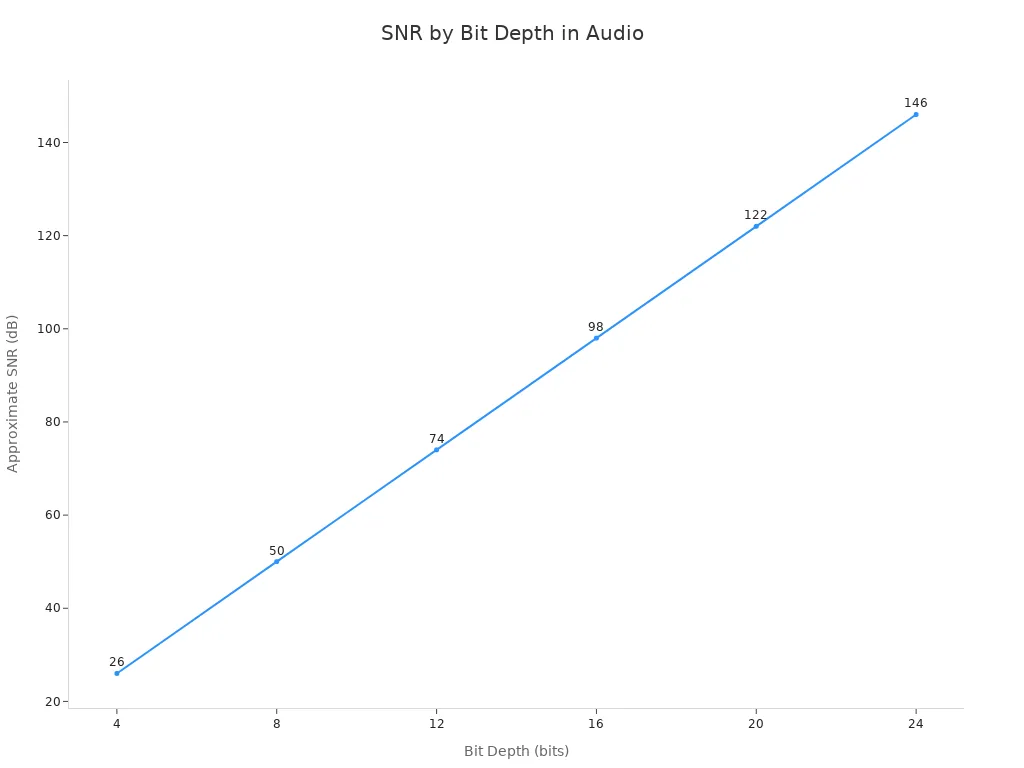

Hardware sets practical limits on bit depth. The sensor and camera electronics determine the maximum bit depth and data rate the system can handle. Higher bit depths increase the data rate, which can slow down high-speed imaging or overload storage. The bit depth of the analog-to-digital converter also caps the signal-to-noise ratio: quantization alone limits an ideal N-bit converter to about 6.02N + 1.76 dB, so a 16-bit system offers at most about 98 dB SNR. Real-world hardware rarely reaches this level because sensor and readout noise dominate.

| Bit Depth (bits) | Ideal Quantization SNR (dB) | Quantization Step (% of Full Scale) | Number of Possible Values |
|---|---|---|---|
| 8 | ~50 | ~0.39 | 256 |
| 12 | ~74 | ~0.024 | 4,096 |
| 16 | ~98 | ~0.0015 | 65,536 |
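The SNR values follow the standard ideal-ADC result for a full-scale sine wave, 20·log10(2^N) + 10·log10(1.5), which is the familiar 6.02N + 1.76 dB. The sketch below computes it alongside the size of one quantization step; both are theoretical limits of the converter, not measurements of a real camera, and the function names are illustrative.

```python
import math

def ideal_quantization_snr_db(bits: int) -> float:
    """SNR of an ideal N-bit ADC for a full-scale sine wave (about 6.02*N + 1.76 dB)."""
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)

def step_percent_of_full_scale(bits: int) -> float:
    """Size of one quantization step (1 LSB) as a percentage of full scale."""
    return 100 / 2 ** bits

for bits in (8, 12, 16):
    print(f"{bits:>2}-bit: ~{ideal_quantization_snr_db(bits):.0f} dB, "
          f"step {step_percent_of_full_scale(bits):.4f} % of full scale")
```

It prints approximately 50, 74, and 98 dB. Comparing these ceilings with a sensor's measured noise shows how many of the converter's bits carry real information.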

Applications that demand high accuracy, such as scientific measurement or advanced inspection, benefit from higher bit depth. However, for many outdoor or simple inspection tasks, 8-bit depth is often enough. When the sensor’s noise or the camera’s data rate cannot support higher bit depths, increasing bit depth adds little value and may waste resources. Engineers must balance the need for detail with the limits of the sensor, camera, and data rate to achieve the best results.

Selecting the right pixel bit depth ensures a machine vision system delivers accurate results without wasting resources. Higher bit depths improve image quality and color accuracy, but they also increase file size and system demands. Users can balance these trade-offs by choosing suitable image formats and bit depths for their needs:

- JPEG typically stores 8 bits per color channel and uses lossy compression, giving smaller files with some quality loss.

- PNG supports up to 16 bits per channel with lossless compression, preserving image quality at the cost of larger files.

Careful evaluation of application requirements leads to optimal performance and efficiency.

FAQ

What does pixel bit depth mean in a machine vision system?

Pixel bit depth defines how many intensity levels each pixel can represent. A higher bit depth lets the camera capture more detail, which improves the system's ability to see subtle differences in brightness and color.

How does bit depth affect dynamic range and image quality?

Bit depth controls how finely the camera divides the sensor's signal. More levels produce smoother tonal gradations within the sensor's dynamic range, but they do not extend that range, which is set by the sensor's physical properties and noise. Scientific cameras use higher bit depth to capture small changes in brightness, which helps in quality control and measurement.

Why does increasing bit depth impact image data transfer and storage?

A higher bit depth increases the amount of data each image holds. This raises image data transfer and storage needs. The data rate of the system must support this extra load, especially in high-speed imaging or when using full sensor resolution.

When should engineers choose higher bit depth for a machine vision application?

Engineers select higher bit depth when the application needs to detect small differences in brightness or color. Tasks like scientific measurement, defect detection, or high-resolution inspection benefit from more levels. For simple tasks, lower bit depth often works well.

What hardware factors limit the choice of bit depth?

The image sensor, camera electronics, and data rate set the maximum bit depth. If the sensor’s noise is high, extra bit depth adds little value. Engineers must match bit depth to the sensor’s performance and the system’s image data transport capacity.
