Sensor pixel characteristics play a critical role in every machine vision system. Small changes in pixel size, quality, or arrangement can shift measurement accuracy by microns or even nanometers. In manufacturing, a 1,000 x 1,000 pixel camera viewing a 1 m field can resolve distances to about 1 mm, while in healthcare, pixel-level precision enables capillary tube measurements with a resolution of 5 nanometers.

| Measurement Type | Resolution Achieved | Accuracy Achieved | Notes |
|---|---|---|---|
| Distance measurement (1 m field) | 1 mm | 500 times pixel | Using a 1,000 x 1,000 pixel camera |
| Capillary tube opening measurement | 5 nm | 12 µm opening | Achieved with light wavelength of 500 nm |
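The 1 mm figure in the first row follows from dividing the field of view by the number of pixels across it. A minimal sketch of that arithmetic, assuming an ideal lens and ignoring sub-pixel techniques (the function name is illustrative, not from any library):

```python
def object_space_resolution_mm(field_of_view_mm: float, pixels_across: int) -> float:
    """Return the width of the scene covered by one pixel, in millimetres."""
    if pixels_across <= 0:
        raise ValueError("pixels_across must be positive")
    return field_of_view_mm / pixels_across


# A 1 m (1,000 mm) field of view imaged across 1,000 pixels.
print(object_space_resolution_mm(1000.0, 1000))  # 1.0
```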

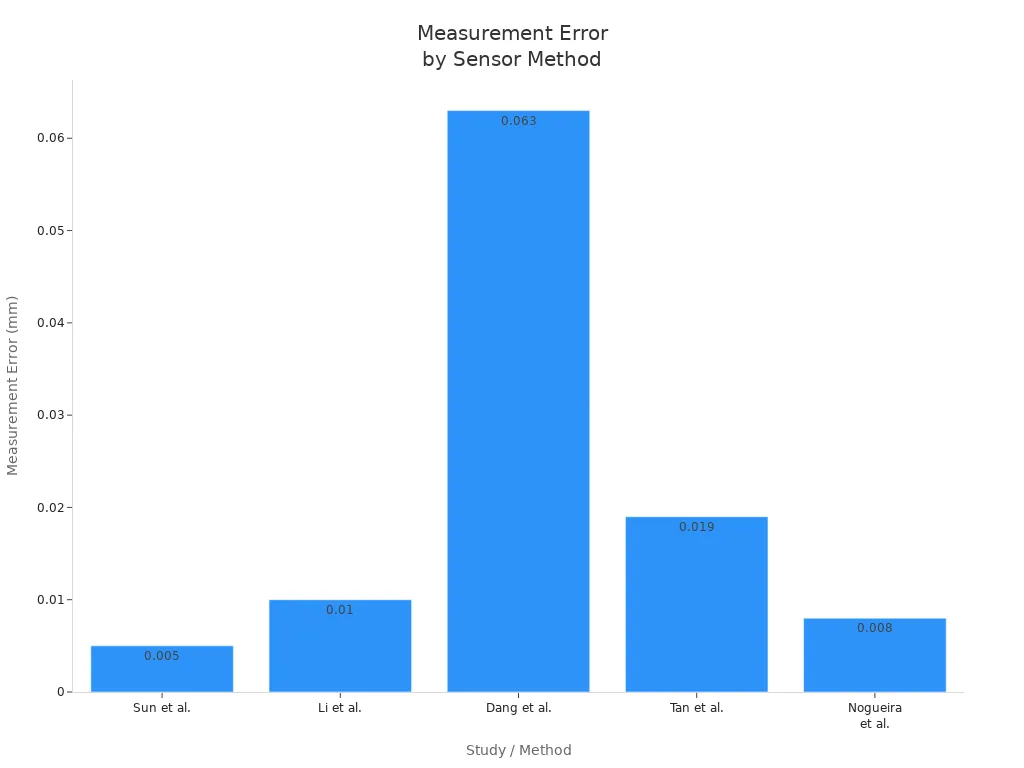

Machine vision accuracy depends on more than just the number of sensor pixels. High-resolution sensors, careful calibration, and quality optics reduce error and reveal fine details, and improvements in machine vision methods lead to lower measurement errors.

A well-designed machine vision system uses these details to boost accuracy, speed, and reliability for complex tasks in automation and robotics.

Key Takeaways

- Sensor pixel size and arrangement directly affect the accuracy and detail a machine vision system can capture.

- Choosing the right sensor type and matching the lens to the sensor ensures sharp, clear images and better measurement results.

- Larger pixels improve sensitivity and image quality in low light, while smaller pixels provide higher resolution for fine details.

- Regular calibration and controlling lighting and environmental factors help maintain reliable and precise machine vision performance.

- Machine vision systems use different sensor types and pixel designs to meet the needs of industries like manufacturing, healthcare, and robotics.

Sensor Pixels in Machine Vision

What Are Sensor Pixels?

A sensor pixel forms the smallest unit of an image in a machine vision system. Each pixel collects light from a tiny area of the scene. The image sensor, such as a CCD or CMOS chip, contains thousands or millions of these pixels. Together, they capture the full image. The pixel’s job is to measure the brightness of the light that hits it. The more pixels an image sensor has, the higher the resolution. This means the machine vision system can detect smaller features and finer details.

A typical machine vision system uses several key components:

- A lens focuses light onto the image sensor.

- The image sensor, made up of many pixels, converts light into digital signals.

- Processing tools analyze the image for features like brightness, contrast, and edges.

- Lighting ensures the object is visible and clear.

- Communication interfaces send image data to computers for further image processing.

Note: High spatial resolution and dynamic range help the sensor capture detailed images, even in challenging lighting conditions.

How Pixels Convert Light

Pixels in an image sensor turn light into digital information through a series of steps:

- Photons from the scene strike each pixel’s photodetector.

- The photodetector generates electrons in proportion to the amount of light. The fraction of photons converted to electrons is the quantum efficiency.

- These electrons collect in a small well inside the pixel.

- The sensor converts the collected charge into an analog voltage.

- An analog-to-digital converter (ADC) changes this voltage into a digital value.

- The machine vision system uses these digital values to create an image for analysis.

Each pixel’s digital value shows how much light it received. Image processing software then uses these values to measure, inspect, or classify objects. The quality of the sensor, the arrangement of pixels, and the effectiveness of image processing all affect the accuracy of machine vision tasks.
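The conversion steps above can be sketched as a simple model. This is an idealized illustration only: it ignores noise, and the quantum efficiency, full-well capacity, and ADC bit depth are assumed example values, not figures from any particular sensor.

```python
def pixel_to_digital(photons: float,
                     quantum_efficiency: float = 0.6,    # assumed example value
                     full_well_electrons: int = 10_000,  # assumed example value
                     adc_bits: int = 8) -> int:
    """Idealized photon-to-digital-number chain for a single pixel."""
    # Photons generate electrons; the well saturates at its full capacity.
    electrons = min(photons * quantum_efficiency, full_well_electrons)
    # The collected charge becomes an analog level, normalized here to 0..1.
    analog_level = electrons / full_well_electrons
    # The ADC quantizes the analog level to an integer code.
    max_code = (1 << adc_bits) - 1
    return int(analog_level * max_code)


print(pixel_to_digital(0))        # dark pixel
print(pixel_to_digital(5_000))    # mid-range exposure
print(pixel_to_digital(100_000))  # saturated pixel
```

Once the well is full, extra photons no longer change the output, which is why over-exposed regions lose detail.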

Pixel Size and Arrangement

Impact on Resolution

Pixel size and arrangement form the foundation of resolution in any machine vision system. The number of pixels and how they are arranged on the image sensor determine the smallest object the system can detect. Each pixel represents a tiny area of the scene, and more pixels mean the system can capture finer details. When pixels are arranged in a tight, regular grid, the system can resolve edges and small features with greater accuracy.

Spatial resolution describes the ability of a sensor to distinguish objects that are close together. In a machine vision system, higher spatial resolution means more pixels per unit area. This allows the system to capture high-resolution images and detect small defects or features. The arrangement of pixels also affects how well the system can find edges and measure objects. Sub-pixel techniques use the grayscale values of neighboring pixels to locate edges more precisely than the pixel grid alone. This improves measurement accuracy in machine vision tasks.
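One simple way to locate an edge between pixel centers is to interpolate linearly where a grayscale profile crosses a threshold. The sketch below shows that idea only. Production systems often use more robust methods such as gradient or moment fitting, and the function name and threshold choice here are illustrative assumptions.

```python
def subpixel_edge(profile, threshold):
    """Return the fractional index where `profile` first crosses `threshold`.

    Uses linear interpolation between the two neighboring pixels that
    straddle the threshold. Returns None if there is no crossing.
    """
    for i in range(len(profile) - 1):
        a, b = profile[i], profile[i + 1]
        # A crossing exists when the two samples lie on opposite sides.
        if a != b and (a - threshold) * (b - threshold) <= 0:
            return i + (threshold - a) / (b - a)
    return None


# Dark background (10) to bright object (110); the edge falls inside pixel 2-3.
profile = [10, 10, 40, 110, 110]
print(subpixel_edge(profile, threshold=60))  # about 2.29
```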

Note: The lens must match the resolving power of the camera sensor. If the lens cannot resolve details as small as the pixel size, the image will appear blurry, and the system will lose accuracy. A mismatch between lens and sensor can also cause artifacts like aliasing or vignetting, which reduce the effectiveness of image processing.

The field of view, magnification, and working distance all depend on the relationship between the camera sensor and the lens. Proper alignment ensures that the system captures sharp, distortion-free images. When the sensor and lens work together, the system achieves the highest possible spatial resolution and accuracy.

Sensitivity and Image Quality

Pixel size also affects sensitivity and image quality. Larger pixels collect more photons because they have a bigger surface area. This increases the sensor’s sensitivity and improves the signal-to-noise ratio. As a result, the machine vision system can detect faint signals and produce clearer images, even in low light.

Smaller pixels allow for higher resolution on the same sensor size, but they collect fewer photons. This can reduce sensitivity and make the image noisier. In some cases, small pixels may cause blooming or crosstalk, which lowers contrast and makes it harder for image processing software to detect fine details. The choice between large and small pixels depends on the needs of the application. For example, inspecting tiny electronic components may require small pixels for high resolution, while detecting faint marks on a surface may need larger pixels for better sensitivity.

| Pixel Size | Sensitivity | Resolution | Typical Use Case |
|---|---|---|---|
| Large | High | Lower | Low-light inspection, general detection |
| Small | Lower | High | Fine detail inspection, high-resolution images |
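The sensitivity trade-off can be made concrete with a shot-noise model. Photon arrival follows Poisson statistics, so when shot noise dominates, the signal-to-noise ratio equals the square root of the collected electrons. The sketch below assumes uniform illumination, square pixels, and an example quantum efficiency, and it ignores read noise and dark current.

```python
import math


def shot_noise_snr(pixel_pitch_um: float,
                   photons_per_um2: float,
                   quantum_efficiency: float = 0.6) -> float:  # assumed example value
    """Shot-noise-limited SNR for a square pixel under uniform illumination."""
    pixel_area_um2 = pixel_pitch_um ** 2
    signal_electrons = photons_per_um2 * pixel_area_um2 * quantum_efficiency
    # Poisson statistics: noise = sqrt(signal), so SNR = sqrt(signal).
    return math.sqrt(signal_electrons)


small = shot_noise_snr(pixel_pitch_um=2.0, photons_per_um2=100)
large = shot_noise_snr(pixel_pitch_um=4.0, photons_per_um2=100)
print(large / small)  # doubling the pitch roughly doubles the SNR
```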

Sensor-shift, or pixel shifting, technology can further improve effective resolution. This method moves the sensor in tiny steps to capture multiple images, then combines them into a single image with much higher resolution. Pixel shifting increases color accuracy, reduces noise, and minimizes artifacts like moiré patterns. However, this technique works best with static scenes, because objects that move between exposures cause blurring and other artifacts.
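As a rough illustration of how shifted captures combine, the sketch below interleaves four frames onto a grid with twice the sampling density. It assumes the frames were taken at exact half-pixel offsets (none, right, down, and diagonal) and skips the registration and filtering a real pipeline would need. The function and argument names are hypothetical.

```python
def interleave_shifted_frames(f00, f01, f10, f11):
    """Interleave four half-pixel-shifted frames into a 2x denser grid.

    f00: no shift, f01: shifted right, f10: shifted down, f11: shifted both.
    Each frame is a list of rows with identical dimensions.
    """
    rows, cols = len(f00), len(f00[0])
    out = [[0] * (2 * cols) for _ in range(2 * rows)]
    for r in range(rows):
        for c in range(cols):
            out[2 * r][2 * c] = f00[r][c]
            out[2 * r][2 * c + 1] = f01[r][c]
            out[2 * r + 1][2 * c] = f10[r][c]
            out[2 * r + 1][2 * c + 1] = f11[r][c]
    return out


# Four 1x2 frames combine into one 2x4 image.
print(interleave_shifted_frames([[1, 5]], [[2, 6]], [[3, 7]], [[4, 8]]))
```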

Tip: Pixel shifting can replace multiple cameras in some machine vision setups, saving cost and simplifying calibration. It also allows the use of lenses with a larger field of view without losing detail.

To achieve the best results, the camera sensor and lens must be carefully matched. The lens should cover the entire sensor area to avoid shading and ensure even illumination. The focal length and f-number must fit the application’s needs for field of view and depth of field. High-quality lenses improve focus and sharpness, which helps the system deliver accurate, reliable results.

Camera Sensor Technologies

CCD vs. CMOS

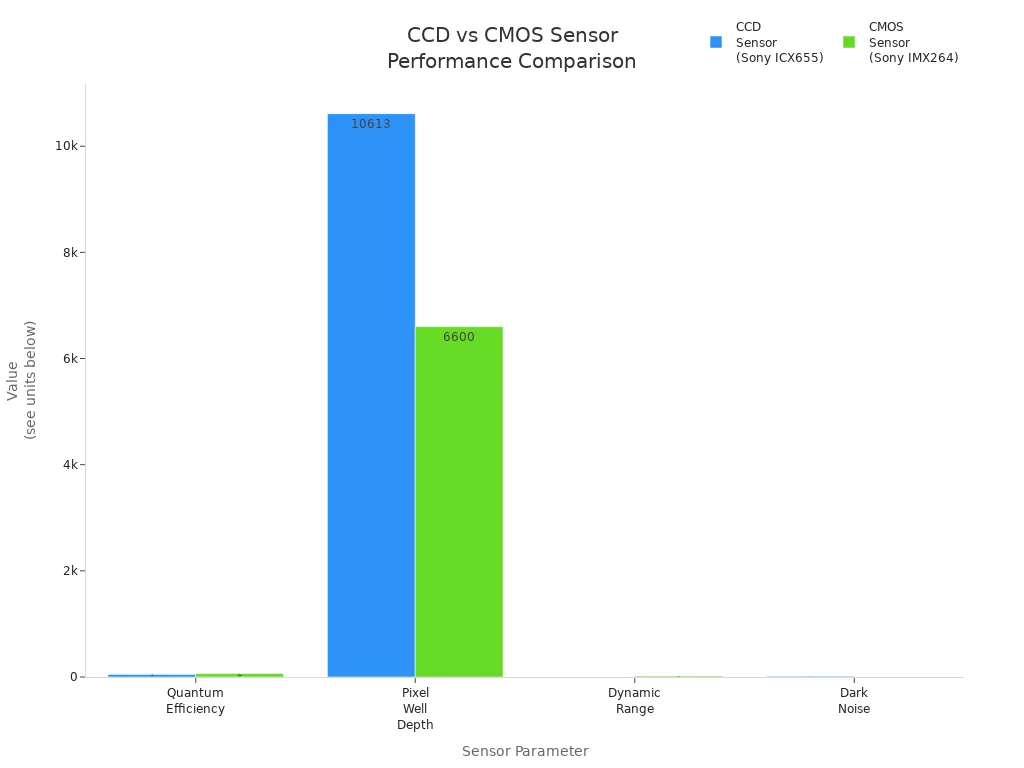

A camera sensor is the heart of any machine vision system. Two main types exist: CCD (Charge-Coupled Device) and CMOS (Complementary Metal-Oxide-Semiconductor). Both types convert light into electrical signals, but they work in different ways. CCD sensors move electrical charges across the chip and read them at one corner. This process gives high image quality and low noise. CMOS sensors have amplifiers at each pixel, allowing them to read signals in parallel. This design makes CMOS sensors faster and more power-efficient.

Recent improvements in CMOS technology have closed the gap in image quality. CMOS sensors now offer better low-light and near-infrared performance. Manufacturers prefer CMOS sensors because they cost less, use less power, and fit well into modern devices. The industry now focuses on CMOS development, while CCD sensors become less common.

| Performance Aspect | CCD Sensors | CMOS Sensors |
|---|---|---|
| Image Quality | Higher image quality, especially in low-light conditions | Slightly lower image quality but improving with BSI technology |
| Dynamic Range | Typically higher dynamic range | Slightly lower, but improving |
| Noise Levels | Lower noise due to serial charge transfer | Higher noise, but gap narrowing |
| Power Consumption | Higher power consumption | Lower power consumption |
| Speed | Slower readout speeds | Faster readout speeds |
| Integration | Limited on-chip integration | High on-chip integration |
| Cost | More expensive | Cheaper to manufacture |

CMOS sensors now match or outperform CCDs in speed and power use for most machine vision applications, and the remaining gap in noise continues to narrow. For example, a CMOS image sensor can deliver higher frame rates and, in recent designs, strong dynamic range. This helps a machine vision camera capture fast-moving objects with less blur. The chart below shows how CMOS sensors compare to CCDs in key areas like quantum efficiency and noise.

CCD sensors still serve in scientific imaging and astronomy, where the highest image quality matters most. However, CMOS sensors dominate industrial machine vision because they support real-time image processing and cost less to operate.

Sensor Types for Applications

A machine vision system uses different camera sensor types for different tasks. The three main types are area scan, line scan, and 3D sensors. Each type has a unique pixel arrangement and fits specific applications.

- Area scan cameras use a 2D array of pixels. They capture full images in one shot. These sensors work best for inspecting static objects or reading barcodes.

- Line scan cameras use a single row of pixels. They build images line by line as objects move past. This design suits tasks like checking fabric or paper on a conveyor belt.

- 3D sensors add depth information. They use special pixels to measure distance, often with lasers or time-of-flight technology. These sensors help robots pick up items or inspect surfaces in three dimensions.

| Sensor Type | Pixel Arrangement | Suitable Applications |
|---|---|---|
| Area Scan | 2D array (matrix) | Captures entire image at once; ideal for static scenes and objects |
| Line Scan | Single line (1D array) | Captures images line-by-line; ideal for continuous or high-speed tasks like printing and conveyor belt monitoring |
| 3D Sensor | Depth-sensing pixels | Captures spatial depth; ideal for robotic guidance and surface inspection |

A machine vision camera may also use special image sensors for tasks beyond visible light. For example, InGaAs sensors detect short-wave infrared, and microbolometer arrays sense heat. These options expand what a machine vision system can do, such as seeing through fog or checking temperatures.

Tip: Choosing the right camera sensor type and pixel arrangement helps a machine vision system achieve the best results for each application.

Practical Factors in Machine Vision Accuracy

Lighting and Environment

Lighting conditions shape the performance of every machine vision system. When lighting is too bright or too dim, sensor pixels can become over- or under-exposed. Over-exposure leads to saturated images, which disrupts image processing and reduces accuracy. Under-exposure hides important details, making it hard for the system to detect features. In real-world applications, such as autonomous vehicles, poor lighting can distort object detection and 3D modeling. New exposure control methods, like neuromorphic exposure control, help sensors adjust quickly to changing light. These systems reduce saturation and improve the accuracy of machine vision tasks.

Environmental factors, such as temperature and vibration, also affect sensor performance. High temperatures increase sensor noise, which lowers image quality. Vibration causes blurring and misalignment, especially in 2D systems. In production environments, vibration can move objects and change how pixels capture details. 3D smart sensors use built-in correction tools to remove vibration effects, resulting in cleaner data. Protective enclosures, cooling systems, and vibration-dampening mounts help maintain stable camera sensor operation.

| Environmental Factor | Impact on Sensor Performance | Mitigation Strategy |
|---|---|---|
| Temperature | Increases sensor noise, degrading image quality | Use cooling systems to maintain stable camera performance |
| Vibration | Causes image blurring and distortion | Employ vibration-dampening platforms and mounts to stabilize cameras |

Lighting components also face challenges. Heat can reduce the lifespan of LEDs, while vibration may damage fixtures. Using lighting with thermal management and vibration-resistant mounts ensures reliable operation. High-quality machine vision lenses further improve image clarity, supporting better quality control and image processing.

Calibration and Trade-Offs

Calibration ensures that a machine vision system delivers accurate measurements. Classical calibration methods, such as direct linear transformation and Zhang’s method, estimate camera parameters and correct lens distortions. Intelligent optimization algorithms, like genetic algorithms and particle swarm optimization, can further improve calibration accuracy. Advanced calibration can reduce measurement errors to less than 0.5% and lower computation time. Real-time calibration is important for dynamic systems, such as robotic arms, to maintain accuracy during operation. Regular validation and recalibration help address hardware wear and environmental changes.
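Full camera calibration with direct linear transformation or Zhang’s method is beyond a short example. The sketch below shows a much simpler stand-in: fitting a single millimetres-per-pixel scale factor from reference features of known size, using least squares through the origin. It assumes a distortion-free image and a flat target perpendicular to the optical axis, and the function name and sample values are hypothetical.

```python
def fit_mm_per_pixel(pixel_lengths, true_lengths_mm):
    """Least-squares scale factor (mm per pixel) for the model mm = scale * px."""
    if not pixel_lengths or len(pixel_lengths) != len(true_lengths_mm):
        raise ValueError("need two non-empty sequences of equal length")
    numerator = sum(p * m for p, m in zip(pixel_lengths, true_lengths_mm))
    denominator = sum(p * p for p in pixel_lengths)
    if denominator == 0:
        raise ValueError("pixel lengths must not all be zero")
    return numerator / denominator


# Hypothetical reference gauge: measured lengths in pixels vs. certified lengths in mm.
scale = fit_mm_per_pixel([100, 200, 300], [10.0, 20.0, 30.0])
print(scale)  # about 0.1 mm per pixel
```

Repeating the fit on a schedule and comparing the result with the previous value is one simple way to detect drift that calls for recalibration.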

Trade-offs exist between pixel size, sensitivity, and resolution. Smaller pixels increase resolution but collect less light, reducing sensitivity. Larger pixels improve sensitivity but lower spatial resolution. The right balance depends on the application. For example, optical character recognition needs high resolution, while barcode scanning works with lower resolution. Higher resolution increases processing load and storage needs, but regions of interest (ROI) can help focus image processing and maintain frame rates.

| Aspect | Effect of Smaller Pixels | Effect of Larger Pixels | Additional Techniques and Considerations |
|---|---|---|---|
| Pixel Size | Improves spatial resolution; detects finer details | Increases sensitivity by capturing more light | Pixel binning combines pixels to improve sensitivity but reduces resolution |
| Sensitivity | Reduced due to fewer photons collected per pixel | Increased due to larger photon collection | Proper lighting and high-quality lenses improve image clarity |
| Resolution | Higher spatial resolution, better detail detection | Lower spatial resolution due to larger pixel size | Nyquist criterion requires at least two pixels per smallest feature to avoid aliasing |
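Pixel binning, mentioned in the table, can be illustrated in a few lines. This minimal sketch sums each 2x2 block of a grayscale image stored as a list of rows. It is a software analogue only, since many sensors bin charge on-chip, and it drops a trailing odd row or column.

```python
def bin_2x2(image):
    """Sum each 2x2 block of pixels, halving resolution in both directions."""
    rows, cols = len(image), len(image[0])
    return [
        [
            image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(bin_2x2(image))  # [[14, 22], [46, 54]]
```

Each output pixel carries the signal of four input pixels, which is the sensitivity gain, while the image shrinks from 4 x 4 to 2 x 2, which is the resolution cost.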

Proper calibration, environmental control, and careful selection of sensor and lens components help optimize machine vision for quality control and reliable image processing.

Real-World Machine Vision Applications

Manufacturing

Manufacturing relies on machine vision for precise quality control and defect detection. The choice of sensor pixel size and arrangement directly affects measurement accuracy.

- In visual inspection, the pixel size determines the smallest feature the system can measure. For example, if the pixel is too large, the system may miss small defects or fail to meet tight tolerances.

- A common rule states that the pixel size should be about one tenth of the required measurement tolerance. This ensures reliable and repeatable automated inspections.

- High-resolution cameras and telecentric lenses help maintain image quality as pixel counts increase.

- Sub-pixel measurement techniques can further improve accuracy, but engineers must validate these methods with real parts.

- For very small fields of view, specialized optics designed for machine vision applications provide better results than standard lenses.
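The one-tenth rule above translates directly into a pixel count for a given field of view. A minimal sketch of that calculation, assuming the rule is applied along one axis and ignoring any gain from sub-pixel techniques (the function name and default are illustrative):

```python
import math


def required_pixels(field_of_view_mm: float,
                    tolerance_mm: float,
                    pixels_per_tolerance: int = 10) -> int:
    """Pixels needed across the field so one pixel spans tolerance / pixels_per_tolerance."""
    if field_of_view_mm <= 0 or tolerance_mm <= 0:
        raise ValueError("field of view and tolerance must be positive")
    # Equivalent to field_of_view / (tolerance / pixels_per_tolerance).
    return math.ceil(field_of_view_mm * pixels_per_tolerance / tolerance_mm)


# A 100 mm wide part with a 0.5 mm tolerance needs 0.05 mm pixels.
print(required_pixels(100.0, 0.5))  # 2000
```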

Defect detection rates improve when manufacturers use high-resolution cameras and advanced lighting setups. Proper sensor positioning and image processing algorithms allow systems to find subtle defects that humans might miss. Techniques like line scan and multi-spectral imaging help detect microscopic and subsurface flaws. As a result, manufacturers experience fewer inspection failures and higher product quality.

Healthcare

Healthcare machine vision systems depend on sensor pixel quality for accurate diagnostics and monitoring.

- RGB-IR cameras, which combine visible and infrared pixels, allow clinicians to see details in tissues and blood flow that standard imaging misses.

- In ophthalmology, these sensors help detect subtle changes in the eye, improving diagnostic accuracy without causing discomfort.

- During surgery, RGB-IR imaging reveals tumor boundaries and tissue oxygenation, supporting critical decisions.

- For remote monitoring, these cameras provide continuous data by switching between visible and infrared imaging.

High spatial resolution is essential for monitoring subcellular features in medical images. Superresolution methods can enhance this further, helping pathologists identify tissue changes that affect diagnosis. Accurate pixel registration and robust image processing reduce noise and improve reliability. Advances in sensor technology now allow sub-micron precision, supporting long-term monitoring and flexible camera positioning.

Robotics

Robotics uses machine vision for navigation, manipulation, and quality control.

- Robots equipped with high-resolution cameras and depth sensors can map environments and avoid obstacles with greater accuracy.

- Adaptive foveation methods focus sensor resolution on important areas, making data collection more efficient.

- Neural network-based algorithms use pixel-level data for fast and precise grasping, improving manipulation tasks.

- Event-based cameras capture rapid changes, allowing robots to react in real time.

Optimized sensor pixel characteristics improve tactile feedback and measurement range in robotic applications. Multi-layered vision-based tactile sensors increase sensitivity, helping robots perform delicate tasks. These advancements lead to more reliable automated inspections and better defect inspection in manufacturing robots.

Tip: As sensor pixel technology advances, machine vision applications become more scalable and flexible, supporting industries from electronics to automotive production.

Sensor pixel characteristics directly shape the accuracy of a machine vision system. Research shows that image quality and pixel design drive reliable defect detection and classification. To optimize machine vision for quality control, engineers should match pixel resolution to inspection needs, select the right sensor type, and calibrate systems regularly. They must consider both technical details and real-world conditions.

Advances in sensor pixel technology, such as higher pixel density and AI-driven processing, promise even greater accuracy for future machine vision applications.

FAQ

What is the main job of a sensor pixel in machine vision?

A sensor pixel collects light from a small part of the scene. It turns this light into a digital value. The machine vision system uses these values to create images and measure objects.

How does pixel size affect image quality?

Larger pixels collect more light, which makes images clearer and less noisy. Smaller pixels capture finer details but may create more noise. The right pixel size depends on the task.

Why do lens and sensor need to match?

A lens must focus light sharply onto the sensor. If the lens cannot resolve details as small as the sensor pixels, the image will look blurry. Matching both ensures the system captures sharp, accurate images.

Can machine vision work in low light?

Yes, but the system needs sensitive pixels and good lighting. Larger pixels help in low light. Special lighting or infrared sensors can also improve performance in dark areas.

What is pixel shifting in machine vision?

Pixel shifting moves the sensor in tiny steps to capture several images. The system combines these images to create one with higher resolution and better color. This method works best with still objects.
