A pose estimation machine vision system uses computer vision to find the position and orientation of objects or people in images or video. Pose estimation in computer vision helps machines understand movement and body positions. Human pose estimation plays a key role in tracking joint locations for tasks like fitness and safety. Recent transformer-based models have improved pose estimation accuracy, especially in 3D human pose estimation. Many industries now use pose estimation to automate inspections, measure angles, and boost productivity.

- Real-time pose estimation gives fast feedback and reduces errors.

- A pose estimation machine vision system supports quality control and process optimization.

Key Takeaways

- Pose estimation systems use cameras and deep learning to find the position and movement of people or objects in images and videos.

- These systems work in real time to provide fast feedback, helping in fitness, healthcare, robotics, and safety monitoring.

- Advanced models improve accuracy even in difficult conditions like occlusion, poor lighting, and complex movements.

- Pose estimation supports many industries by improving quality control, reducing errors, and increasing productivity.

- Challenges include handling hidden body parts, high computing needs, and adapting to different environments, but ongoing research is making systems better and faster.

Pose Estimation Machine Vision System

What Is Pose Estimation

Pose estimation is a process in computer vision that finds the position and orientation of objects or people in images or videos. In computer vision systems, pose estimation helps machines understand how a body or object moves. This technology detects and locates key points, such as joints on a human body, and connects them to form a skeleton. Pose estimation in computer vision can work in both 2D and 3D, allowing for single-person or multi-person scenarios. Accurate joint positioning is important for many applications, including sports analysis, robotics, and safety monitoring.

- Pose estimation models often use deep learning to identify key points like ankles, knees, shoulders, and wrists.

- These models face challenges such as occlusion, changes in lighting, and different clothing styles.

- In robotics, pose estimation means finding the six degrees of freedom (DOF) transformation between a sensor and a reference frame, which includes both position and orientation.
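To make the six degrees of freedom concrete, here is a minimal sketch that applies a pose (three rotation angles plus a three-axis translation) to a point measured in the sensor frame. The function names and the roll-pitch-yaw convention are assumptions chosen for illustration; real systems may use quaternions or other rotation conventions.

```python
import math

def rotation_zyx(roll, pitch, yaw):
    """3x3 rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def apply_pose(pose, point):
    """Map a 3D point from the sensor frame into the reference frame.

    pose is (roll, pitch, yaw, tx, ty, tz): three rotational and
    three translational degrees of freedom.
    """
    roll, pitch, yaw, tx, ty, tz = pose
    rot = rotation_zyx(roll, pitch, yaw)
    rotated = [sum(rot[i][j] * point[j] for j in range(3)) for i in range(3)]
    return [rotated[0] + tx, rotated[1] + ty, rotated[2] + tz]

# Hypothetical pose: sensor rotated 90 degrees about z and shifted 1 unit along x.
pose = (0.0, 0.0, math.pi / 2, 1.0, 0.0, 0.0)
point_in_reference = apply_pose(pose, [1.0, 0.0, 0.0])
print(point_in_reference)  # approximately [1, 1, 0]
```

Estimating a 6-DoF pose amounts to recovering these six numbers from sensor data; the sketch only shows what they mean once known.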

Pose estimation in computer vision enables automated understanding of human motion in videos, supporting tasks from virtual trainers to clinical assessments.

Empirical studies show that pose estimation systems track 2D and 3D keypoints from video data. They allow for planar 2D kinematics and 3D reconstruction using multiple cameras. These systems have proven useful in clinical settings, such as measuring motor symptoms in Parkinson’s disease, where they can achieve high accuracy and sometimes outperform standard clinical assessments.

Key Components

A pose estimation machine vision system relies on several critical components to function effectively. Each part plays a unique role in capturing, processing, and interpreting visual data.

| Component Type | Description & Role |
|---|---|
| Sensor Integration | Uses monocular, stereo, depth, and RGB-D cameras to capture spatial and depth information. Each type offers specific advantages for accuracy and robustness. |
| Network Architectures | Advanced models like Spatial-Temporal Graph Convolutional Networks (STGCN) extract features from skeletal data, improving accuracy in dynamic scenes. |
| Attention Mechanisms | Dynamically focus on important joints to enhance robustness in complex environments. |
| Pose Refinement Modules | Apply symmetry constraints to ensure realistic and accurate predictions. |
| Multi-source Data Fusion | Combine thermal, depth, and color data to improve detection under challenging conditions. |
| Lightweight Model Designs | Create efficient architectures for real-time mobile applications. |
| Feature Extraction Methods | Use appearance and local features to handle occlusions and lighting changes. |

Recent technical studies highlight the importance of integrating multi-source data, such as thermal and depth images, to improve pose estimation accuracy. Lightweight network designs help these systems run in real time, even on mobile devices. Deep learning plays a key role in building these advanced network architectures and feature extraction methods.

How It Works

A pose estimation machine vision system follows a clear process to analyze images or videos and estimate poses. The process starts with data input, where the system receives images or video frames. Deep learning models, especially convolutional neural networks (CNNs), process these inputs by extracting features through multiple layers. These features include body part patches, geometry descriptors, and motion features like optical flow.

The system uses encoder-decoder architectures. The encoder processes the input image, and the decoder generates heatmaps that show the likelihood of each joint’s location. The system then selects the coordinates with the highest likelihood for each joint. This approach supports both 2D and 3D pose estimation.
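The heatmap decoding step can be sketched as follows. This is a minimal illustration that assumes each joint's heatmap arrives as a plain 2D list of scores; the function name `decode_heatmaps` and the toy data are hypothetical, and production decoders usually add sub-pixel refinement.

```python
def decode_heatmaps(heatmaps):
    """Return (x, y, score) for the highest-scoring cell of each joint heatmap.

    heatmaps: one 2D list (rows of floats) per joint.
    """
    keypoints = []
    for heatmap in heatmaps:
        best_score, best_x, best_y = float("-inf"), 0, 0
        for y, row in enumerate(heatmap):
            for x, score in enumerate(row):
                if score > best_score:
                    best_score, best_x, best_y = score, x, y
        keypoints.append((best_x, best_y, best_score))
    return keypoints

# Two toy 3x3 heatmaps, standing in for decoder output for two joints.
toy_heatmaps = [
    [[0.0, 0.1, 0.0], [0.2, 0.9, 0.1], [0.0, 0.1, 0.0]],
    [[0.0, 0.0, 0.7], [0.0, 0.1, 0.2], [0.0, 0.0, 0.0]],
]
print(decode_heatmaps(toy_heatmaps))  # [(1, 1, 0.9), (2, 0, 0.7)]
```

The returned score can double as a confidence value, which is useful for discarding joints the model is unsure about.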

Pose estimation models operate in two main ways:

- Bottom-up approach: Detects individual keypoints first, then groups them by person or object.

- Top-down approach: Detects objects first, then locates keypoints within each object.
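In the top-down approach, keypoints are predicted inside a resized crop of each detected box and then have to be mapped back to full-image coordinates. The sketch below shows only that mapping step; the box format `(x, y, width, height)` and the function name are assumptions for illustration, not any particular library's API.

```python
def crop_to_image(keypoints_crop, box, crop_size):
    """Map keypoints from crop pixel coordinates back to image coordinates.

    keypoints_crop: list of (x, y) in the resized crop.
    box: (x, y, width, height) of the detection in the full image.
    crop_size: (width, height) the crop was resized to before inference.
    """
    box_x, box_y, box_w, box_h = box
    crop_w, crop_h = crop_size
    scale_x = box_w / crop_w
    scale_y = box_h / crop_h
    return [(box_x + kx * scale_x, box_y + ky * scale_y) for kx, ky in keypoints_crop]

# A person box at (100, 50) of size 200x400, resized to a 100x200 crop.
print(crop_to_image([(50, 100)], (100, 50, 200, 400), (100, 200)))  # [(200.0, 250.0)]
```

A bottom-up pipeline skips this step because it predicts keypoints directly in image coordinates, but it must then group them by person.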

Training these models requires large labeled datasets. Transfer learning from pre-trained models, such as those trained on COCO-Pose, helps improve performance. Fine-tuning with task-specific images allows the system to adapt to specialized uses.

Empirical studies validate pose estimation machine vision systems by comparing their outputs to ground truth measurements, such as joint kinematics with multiple degrees of freedom. Validation datasets often include complex joint motions and real-world challenges like occlusion and lighting changes. Performance metrics focus on joint kinematic errors, accuracy differences between lab and outdoor settings, and computational cost per frame.

Recent findings show that transformer-based models, such as ViTAE and ViTAEv2, report high accuracy (about 88.5%) along with improved computational efficiency. In inspection settings, pose estimation systems are reported to cut human error rates from 25% to under 2%, lower inspection costs by up to 30%, and increase inspection speed. The market for pose estimation technology continues to grow, with a projected value of over USD 21 billion by 2033.

Tip: Open-source libraries like OpenPose, AlphaPose, and DensePose offer practical implementations of pose estimation algorithms, supporting real-time multi-person tracking in both research and industry.

Pose estimation in computer vision uses deep learning to extract meaningful features from images and videos. These features help the system understand spatial and temporal relationships, making pose estimation models effective for a wide range of applications.

Human Pose Estimation and Tracking

Human Pose Estimation

Human pose estimation helps computers find and track the positions of body joints in images or videos. This process uses computer vision to detect keypoints like elbows, knees, and shoulders. Accurate joint detection supports many activities, such as fitness, rehab, and coaching. In fitness, trainers use pose estimation to check posture and give real-time feedback. Rehab specialists rely on these systems to monitor patient progress and correct movements. Coaches use pose estimation to improve athletic performance by analyzing posture and motion.

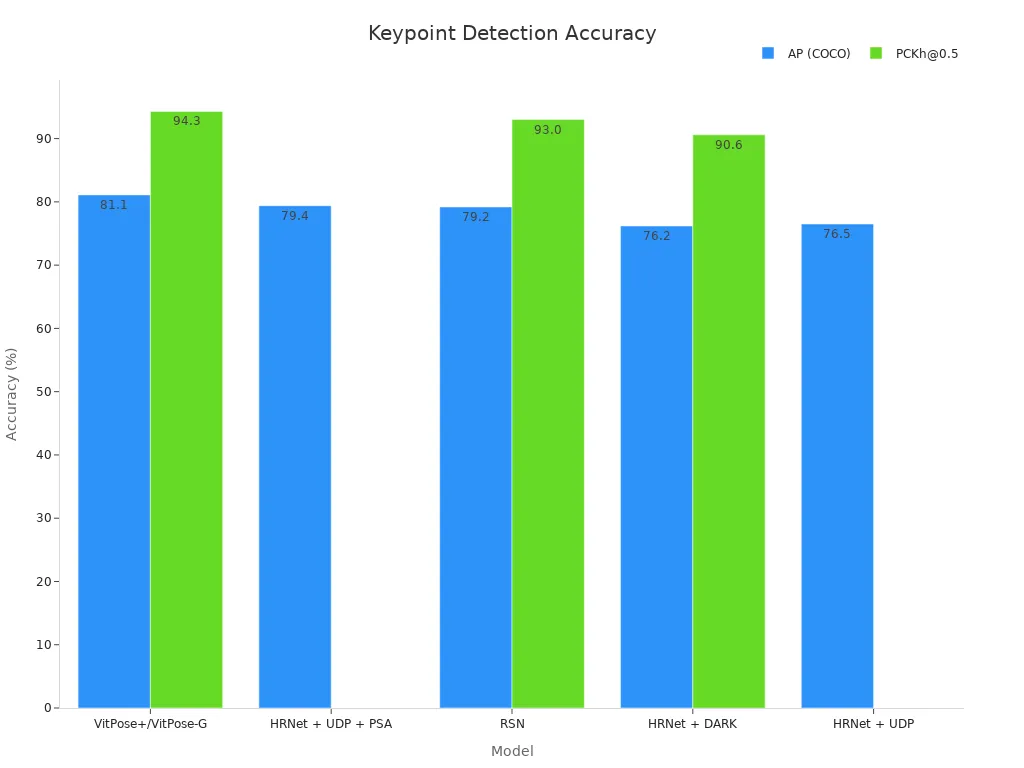

Researchers measure the accuracy of human pose estimation using standard datasets. The table below shows how different models perform in detecting keypoints:

| Model | AP (COCO test-dev) | PCKh@0.5 (MPII) |
|---|---|---|
| ViTPose+/ViTPose-G | 81.1% | 94.3% |
| HRNet + UDP + PSA | 79.4% | N/A |
| RSN | 79.2% | 93.0% |
| HRNet + DARK | 76.2% | 90.6% |
| HRNet + UDP | 76.5% | N/A |

These results show that modern human pose estimation models can detect joints with high accuracy. In real-world fitness and rehab, this means better posture correction and safer movement.
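To show what a PCKh@0.5 score measures, here is a minimal sketch: a predicted keypoint counts as correct when it lies within 0.5 times the head size of the ground-truth location. The function name and the toy coordinates are illustrative, and the head size is simply passed in by the caller rather than derived from an annotation.

```python
import math

def pckh(predicted, ground_truth, head_size, threshold=0.5):
    """Fraction of keypoints within threshold * head_size of the ground truth.

    predicted, ground_truth: lists of (x, y) pixel coordinates in the same order.
    head_size: reference head length in pixels for this person (supplied by caller).
    """
    correct = 0
    for (px, py), (gx, gy) in zip(predicted, ground_truth):
        if math.hypot(px - gx, py - gy) <= threshold * head_size:
            correct += 1
    return correct / len(ground_truth)

truth = [(10, 10), (20, 20), (30, 30), (40, 40)]
guess = [(11, 10), (20, 26), (30, 31), (40, 40)]
# With head_size=10 the tolerance is 5 px; the second keypoint is 6 px off.
print(pckh(guess, truth, head_size=10))  # 0.75
```

Because the tolerance scales with head size, the metric stays comparable between people who appear large and small in the image.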

Pose Tracking Methods

Pose tracking follows the movement of joints over time. This method allows for automatic human motion tracking in videos, which is important for fitness, rehab, and coaching. Pose tracking systems use deep learning to connect keypoints across frames, creating a smooth record of posture and motion. Some methods, like OpenPose and AlphaPose, show high accuracy and low error rates. The table below compares different pose tracking methods:

| Pose Tracking Method | Systematic Differences (mm) | Random Errors (mm) | Performance Notes |
|---|---|---|---|
| OpenPose | ~1–5 | ~1–3 | High accuracy, good for walking and running |
| AlphaPose | ~1–5 | ~1–3 | Similar to OpenPose, robust in dynamic scenes |
| DeepLabCut | Larger | Larger | Lower performance, best for single-person tracking |

Pose tracking helps with monitoring posture changes during fitness routines and rehab exercises. Studies show that pose tracking can measure joint angles with errors as low as 9.9 degrees, making it useful for real-time coaching and movement analysis.
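A joint angle can be computed directly from three tracked keypoints. The sketch below is a minimal 2D example; the function name and the hip, knee, and ankle coordinates are made up for illustration.

```python
import math

def joint_angle(a, b, c):
    """Angle at joint b, in degrees, between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must not coincide with the joint")
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))

hip, knee, ankle = (0.0, 0.0), (0.0, 1.0), (1.0, 1.0)
print(joint_angle(hip, knee, ankle))  # 90.0
```

Running this per frame on tracked keypoints yields an angle time series that a coaching or rehab application can compare against a target range.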

Marker-less Systems

Marker-less systems use cameras and computer vision to track posture without attaching markers to the body. These systems make fitness, rehab, and coaching easier because users do not need special suits or sensors. Marker-less pose estimation works well for motion tracking in gyms, clinics, and sports fields.

Comparisons between marker-less and marker-based systems show that marker-less systems can reach similar accuracy. For example, marker-less systems detect lower body joints with a standard deviation between 9.6 and 23.7 mm, and 80% of their joint position errors fall under 30 mm. For some joint angles, the two systems differ by less than 0.5°. Marker-less systems also reduce setup time and improve comfort during monitoring.
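Figures like these come from comparing marker-less joint positions against a marker-based reference, joint by joint. The following sketch shows one way such a summary could be computed; the function name, the 30 mm default, and the sample coordinates are illustrative and are not data from the studies cited above.

```python
import math
import statistics

def error_summary(markerless, reference, limit_mm=30.0):
    """Summarize 3D position errors (in mm) between two sets of joint positions."""
    errors = [math.dist(m, r) for m, r in zip(markerless, reference)]
    return {
        "mean_mm": statistics.mean(errors),
        "std_mm": statistics.stdev(errors),  # sample standard deviation
        "share_under_limit": sum(e < limit_mm for e in errors) / len(errors),
    }

# Made-up positions in mm: five samples of one joint against a reference at the origin.
reference = [(0, 0, 0)] * 5
markerless = [(10, 0, 0), (0, 20, 0), (0, 0, 25), (3, 4, 0), (0, 0, 40)]
summary = error_summary(markerless, reference)
print(summary["mean_mm"], summary["share_under_limit"])  # 20.0 0.8
```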

Note: Marker-less pose estimation supports real-time feedback in fitness and rehab, making posture correction and coaching more accessible.

Marker-less systems now play a key role in fitness, rehab, and surveillance. They allow for continuous monitoring of posture and movement, helping people stay safe and improve performance.

3D Human Pose Estimation

3D Pose Estimation Techniques

3D human pose estimation finds the position and orientation of body joints in three-dimensional space. This process uses advanced pose estimation techniques that combine data from multiple cameras or sensors. Deep learning models, such as fusion transformers, help the system merge information from different views and time frames. These models reduce errors in depth and improve accuracy, even when some joints are hard to see.

Sensor fusion plays a key role in modern pose estimation. By combining data from RGB cameras, depth sensors, and other sources, the system can handle occlusions and changes in lighting. Deep learning networks learn to recognize patterns and features in large datasets. They use self-supervised training, which means the system can improve itself by checking if its predictions match across different camera angles. This approach makes the models more adaptable and accurate.
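The cross-view consistency idea can be illustrated with a reprojection check: project an estimated 3D joint into each camera and measure how far it lands from that camera's 2D detection. This is a minimal sketch assuming simple pinhole cameras without lens distortion; the camera dictionaries, field names, and numbers are hypothetical.

```python
import math

def project(point, camera):
    """Pinhole projection of a 3D world point into pixel coordinates.

    Assumes the point lies in front of the camera (positive depth).
    """
    rot, trans = camera["rotation"], camera["translation"]
    cam = [sum(rot[i][j] * point[j] for j in range(3)) + trans[i] for i in range(3)]
    u = camera["focal"] * cam[0] / cam[2] + camera["center"][0]
    v = camera["focal"] * cam[1] / cam[2] + camera["center"][1]
    return (u, v)

def reprojection_error(point, cameras, detections):
    """Mean pixel distance between projected and detected 2D joint positions."""
    errors = [math.dist(project(point, cam), det) for cam, det in zip(cameras, detections)]
    return sum(errors) / len(errors)

IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
cam_a = {"rotation": IDENTITY, "translation": [0, 0, 0], "focal": 1000, "center": (640, 360)}
cam_b = {"rotation": IDENTITY, "translation": [-1, 0, 0], "focal": 1000, "center": (640, 360)}

joint_3d = [0.5, 0.0, 4.0]
detections = [(768.0, 364.0), (515.0, 360.0)]  # camera A is 5 px off, camera B matches
print(reprojection_error(joint_3d, [cam_a, cam_b], detections))  # 2.5
```

A self-supervised training loop could use an error of this kind as a loss term, since it needs only the 2D detections and the camera calibration rather than 3D labels.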

Some systems use end-to-end networks that estimate 3D poses directly from images. These networks do not need extra geometric calculations. They also work faster and handle complex scenes better than older methods. Real-time human pose estimation becomes possible with these advances, allowing quick feedback in dynamic environments.

Tip: Combining sensor fusion with deep learning helps pose estimation technology work well in crowded or changing scenes.

Applications in Industry

Industries use 3D human pose estimation for robotics, automation, and safety monitoring. Robots need to know the exact position and orientation of objects or people to move safely and complete tasks. In factories, pose estimation helps machines track workers’ movements and avoid accidents. Automation systems use 3D pose data to guide robotic arms and inspect products.

Many industrial tasks require tracking six degrees of freedom (6-DoF), which means knowing both the position and rotation of objects. Sensor fusion improves accuracy in these tasks. For example, using synchronized multi-view cameras can raise acceptance rates for joint detection up to 91.4%. The table below shows how different sensors perform in industrial environments:

| Sensor Type | Acceptance Rate (%) | Key Observations |
|---|---|---|
| RGB Camera | 56 | Useful for assessing wearable robot impact but less accurate than OTS; affected by occlusions and camera angles |
| Depth Camera | 22 | Lower acceptance due to issues like reflective tape on exoskeletons; affected by occlusions |
| Optical Tracking System (OTS) | 78 | Reference system with highest accuracy; performance decreases with marker occlusion by exoskeleton |

Real-time pose estimation helps factories respond quickly to changes and keep workers safe. Pose estimation technology continues to improve, making it more reliable for complex industrial tasks.

Applications

Robotics and Automation

Robots use pose estimation to improve accuracy and safety in factories and workshops. These systems help machines track the position and orientation of objects and tools. In robotic machining, vision-based pose estimation with LSTM RNN reduces path tracking errors from 0.744 mm to 0.014 mm for straight lines. Aircraft assembly uses laser trackers in closed-loop feedback systems, lowering pose errors to less than 0.2 mm and 1°. Dynamic path correction relies on position-based visual servoing and photogrammetry sensors, achieving tracking accuracy of ±0.20 mm in position and ±0.1° in orientation. The table below shows real-world applications of pose estimation in robotics:

| Application Area | Method/Technology Used | Measurable Outcome / Accuracy Achieved |
|---|---|---|
| Robotic machining | Vision-based pose estimation with LSTM RNN | Path tracking error reduced to 0.014 mm (straight line) |
| Aircraft assembly | Laser tracker in closed-loop feedback system | Pose errors < 0.2 mm and 1° |
| Dynamic path correction | PBVS with C-track 780 and Kalman filter | ±0.20 mm (position), ±0.1° (orientation) |
| Robotic milling | Nikon K-CMM photogrammetric sensor | Accuracy of 0.2 mm |
| Industrial milling robot | AICON MoveInspect HR stereo camera system | Positioning errors < 0.3 mm |

Pose estimation in these settings also handles non-cooperative targets, such as moving parts or tools, and can integrate radio signals for better tracking. Autonomous vehicles also use pose estimation for navigation and obstacle avoidance.

Healthcare and Sports

Healthcare and sports benefit from human pose estimation by enabling precise posture analysis and continuous monitoring. The MediaPipe framework increases accuracy by 20% and reduces processing time by 30%, making real-time feedback possible in rehabilitation and sports analytics. AI fitness coaching uses 4D depth cameras to capture joint data during treadmill workouts. This technology provides biomechanical data, such as stride length and joint angles, which helps create custom training programs. AI Dual Anti-Fall Design monitors user stability, adjusting treadmill speed or stopping to prevent falls. These features support safer rehabilitation for stroke patients and seniors, improving confidence and fitness outcomes.

- AI-driven real-time posture correction detects and fixes postural issues early.

- Injury assessment uses movement pattern analysis and joint tracking for early intervention.

- Standardized movement tracking helps physiotherapists compare patient movements to ideal standards.

- AI-powered virtual trainers offer personalized feedback for remote physiotherapy and fitness training.

- In sports, pose estimation enables biomechanical analysis, injury risk identification, and tailored coaching programs.

Popular algorithms like OpenPose and DensePose improve accuracy and real-time tracking, making AI fitness applications more effective for both healthcare and sports.

Security and AR/VR

Security systems use pose estimation to detect suspicious behavior and monitor crowds in real time. Human pose estimation helps identify abnormal posture or movement, supporting early intervention and safety. In augmented reality (AR) and virtual reality (VR), pose estimation tracks body joints to create realistic avatars and immersive experiences. These systems enable AI coaching and corrective feedback in virtual environments, helping users improve posture and movement during fitness or training sessions.

Real-world applications of pose estimation in AR/VR include interactive games, remote fitness classes, and virtual physiotherapy. Continuous monitoring of posture and movement ensures safety and enhances user experience. Human-computer interaction improves as systems respond to natural body movements, making technology more accessible and engaging.

Challenges

Occlusion and Data Quality

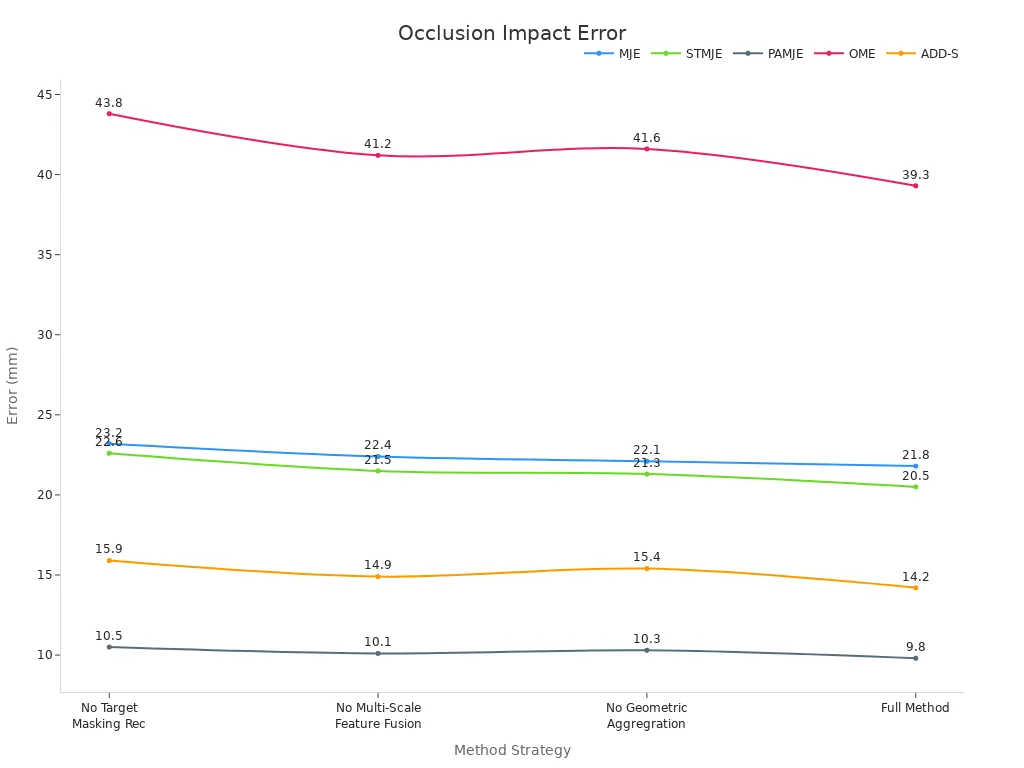

Pose estimation systems often struggle when objects or body parts become hidden, or when image quality drops. Occlusion happens when something blocks a joint or limb, like a barbell covering an athlete’s arm. This can cause the system to place keypoints incorrectly, leading to errors in 3D pose estimation. Data quality also matters. Blurry images or missing details make it hard for deep learning models to find the right posture. Studies show that removing occlusion-aware features or using poor masking strategies increases errors across several metrics. The table below shows how different methods affect accuracy on the HO3Dv2 dataset:

| Condition / Masking Strategy | MJE | STMJE | PAMJE | OME | ADD-S |
|---|---|---|---|---|---|
| Without Target-Focused Masking and Image Reconstruction | 23.2 | 22.6 | 10.5 | 43.8 | 15.9 |
| Without Multi-Scale Feature Fusion for SDF Regression | 22.4 | 21.5 | 10.1 | 41.2 | 14.9 |
| Without Implicit and Explicit Geometric Aggregation | 22.1 | 21.3 | 10.3 | 41.6 | 15.4 |
| Full Method (with all occlusion-aware components) | 21.8 | 20.5 | 9.8 | 39.3 | 14.2 |

Large datasets help deep learning models learn to handle occlusion, but many datasets lack images of fast or complex movements. For example, the Human3.6M dataset only includes indoor scenes, which limits how well models work in real life.

Computational Demands

Real-time pose estimation requires fast and efficient processing. Deep learning models must analyze many images per second, especially in safety-critical tasks. Systems need to keep latency low and throughput high. The table below lists important benchmarks for machine vision:

| Benchmark Category | Key Metrics and Examples |
|---|---|
| Latency and Tail Latency | Mean latency per request; tail latency percentiles (p95, p99, p99.9) critical for real-time responsiveness |
| Throughput and Efficiency | Queries per second (QPS), frames per second (FPS), batch throughput measuring system capacity |
| Numerical Precision Impact | Accuracy trade-offs between FP32, FP16, INT8; speed gains from reduced precision |
| Memory Footprint | Model size, RAM usage, memory bandwidth utilization affecting deployment feasibility |
| Cold-Start Performance | Model load time and first inference latency impacting system readiness |
| Scalability | Ability to handle concurrent workloads and scale with additional resources |
| Power Consumption and Energy | Joules per inference, queries per second per watt (QPS/W) measuring energy efficiency |

Lightweight models, such as those using MobileNet or DenseNet, help reduce memory and power use. However, these models may lose some accuracy, which can affect posture tracking in fitness apps.
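When comparing a lightweight model against a larger one, the latency and throughput metrics from the table above can be measured with a short timing loop. The sketch below is a minimal example; `benchmark`, `percentile`, and the stand-in inference function are hypothetical names, and a real measurement would also include warm-up runs and hardware-specific synchronization.

```python
import math
import time

def percentile(sorted_values, fraction):
    """Nearest-rank percentile of a list sorted in ascending order."""
    rank = max(1, math.ceil(fraction * len(sorted_values)))
    return sorted_values[rank - 1]

def benchmark(infer, frames):
    """Time infer(frame) for every frame and report latency and throughput."""
    latencies = []
    for frame in frames:
        start = time.perf_counter()
        infer(frame)
        latencies.append(time.perf_counter() - start)
    latencies.sort()
    total = sum(latencies)
    return {
        "mean_ms": 1000 * total / len(latencies),
        "p95_ms": 1000 * percentile(latencies, 0.95),
        "p99_ms": 1000 * percentile(latencies, 0.99),
        "fps": len(latencies) / total if total > 0 else float("inf"),
    }

def fake_infer(frame):
    """Stand-in for a pose model; does a little arithmetic per frame."""
    return sum(i * i for i in range(2000))

report = benchmark(fake_infer, range(50))
print(report)
```

Tail percentiles matter more than the mean for safety-critical use, because a single slow frame can delay a stop signal even when average latency looks fine.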

Environmental Factors

Environmental conditions, like camera height and angle, can change how well pose estimation works. A low camera height often gives better accuracy because it reduces occlusion and distortion. High camera angles or unusual views can make it harder for deep learning systems to estimate joint positions. The table below shows how different factors affect accuracy:

| Environmental Factor | Condition | Effect on Accuracy | Effect on Average Euclidean Distance | Explanation |
|---|---|---|---|---|
| Camera Height | Low (1 m) | Higher accuracy | Lower error | Lower camera height reduces occlusion and projection distortion |
| Camera Height | High (2.3 m) | Lower accuracy | Higher error | Higher camera height causes more part occlusion problems |
| Camera Angle/View | Coronal view | Lower accuracy | Higher error | 3D-to-2D projection limitations affect joint angle estimation |
| Environmental Heterogeneity | External validation | Slight decrease in accuracy | Increased error | Real-world video recording conditions introduce variability affecting model performance |

Lighting, background clutter, and movement speed also play a role. Fast leg movements, like kicks, can blur images and confuse the system. Ongoing research aims to improve deep learning models so they can adapt to these changing conditions and provide reliable posture analysis in any setting.

Pose estimation machine vision systems have transformed how machines understand movement. These systems use deep learning and advanced models to achieve high accuracy in both 2D and 3D tasks.

- Advantages:

  - Robustness to occlusions and extreme poses

  - Effective for single and multi-person scenarios

  - Applications in healthcare, robotics, and sports

- Limitations:

  - Occlusion and data quality issues

  - High computational demands

  - Limited annotated data

Future research will focus on improving real-time performance and integrating more data types. These technologies will shape many industries and open new opportunities.

FAQ

What is the main purpose of pose estimation in machine vision?

Pose estimation helps machines find the position and orientation of objects or people. This process allows robots and computers to understand movement and interact safely with their environment.

How does a pose estimation system detect body joints?

The system uses cameras and deep learning models. These models find keypoints like elbows and knees in images or videos. The system then connects these points to create a digital skeleton.

Can pose estimation work in real time?

Yes, many modern systems process images quickly. Real-time pose estimation gives instant feedback. This feature helps in fitness coaching, safety monitoring, and robotics.

What are the main challenges for pose estimation systems?

Occlusion, poor image quality, and fast movements can cause errors. Systems also need powerful computers to process data quickly.

Where do people use pose estimation technology?

People use pose estimation in robotics, healthcare, sports, and security. It helps with posture correction, injury prevention, and safe machine operation.
