A flatten machine vision system in 2025 uses advanced imaging, lighting, and artificial intelligence to produce highly accurate, flattened representations of complex visual scenes for industrial analysis. Unlike earlier versions, the 2025 system integrates attention-centric neural networks and real-time context awareness, enabling precise detection even in challenging environments. One comprehensive study found that such systems can explain most of the variance in human emotional and aesthetic responses to images, suggesting strong impact and reliability in practical tasks.

Recent YOLO object detectors illustrate the attention-centric models these systems build on:

| YOLO Version | Key Advances | Performance Impact |
|---|---|---|
| YOLOv9-12 (2024-2025) | Programmable Gradient Information, Area Attention, Residual Efficient Layer Aggregation | State-of-the-art accuracy, faster inference, better detection of small and overlapping objects |
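Detectors such as YOLO are usually scored by how well a predicted bounding box overlaps the ground-truth box, measured as intersection over union (IoU); this is also how "overlapping objects" are quantified. The sketch below is a generic implementation of the metric, not code taken from any YOLO release, and it assumes axis-aligned boxes given as `(x1, y1, x2, y2)` pixel coordinates.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    # Corners of the overlapping rectangle (it may be empty).
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 10x10 boxes that share half their width.
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))  # about 0.333
```

A value of 1.0 means a perfect match and 0.0 means no overlap. Evaluation pipelines typically count a detection as correct only above a chosen IoU threshold.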

The flatten machine vision system now plays a critical role in quality control, robotics, and automated inspection, making it a cornerstone of modern industry.

Key Takeaways

- Flatten machine vision systems in 2025 use advanced lighting, optics, and AI to capture clear, flat images for precise industrial analysis.

- Flat dome lighting and improved lenses help these systems detect small defects and details even in challenging environments.

- AI-powered software boosts accuracy and speed, reducing errors and improving quality control across many industries.

- These systems support diverse applications like automated inspection, robot guidance, medical imaging, and inventory management.

- Adopting flatten machine vision technology leads to higher productivity, better product quality, and smarter automation.

Overview

What Is a Flatten Machine Vision System

A flatten machine vision system in 2025 combines advanced hardware and software to capture, process, and analyze visual data from industrial environments. This system uses a combination of flat dome lighting and digital image flattening techniques. Flat dome lighting creates uniform illumination, reducing shadows and reflections on surfaces. The digital image flattening process converts complex visual scenes into simplified, two-dimensional representations. This approach allows the system to extract features and patterns from images with high accuracy.

Machine vision systems integrate several core components. These include lighting, lenses, cameras, cabling, interface peripherals, computing platforms, and software. Each part plays a specific role in capturing and processing images. Lighting ensures clear image details. Lenses focus and magnify the scene. Cameras capture the digital image. Cabling connects all components. Interface peripherals enable communication between the camera and computing platforms. Computing platforms process the data. Software handles image analysis, decision-making, and communication with other systems.

| Component Category | Description & Role |
|---|---|
| Lighting | Essential for capturing image details; includes various techniques based on position, angle, reflectivity, pattern, and spectrum (e.g., front/back lighting, directed/diffused, UV, NIR). |
| Lens | Focuses light to magnify the scene; key terms include focus, angle of view, field of view, focal length, aperture. |
| Camera | The core image-capture device, whose image sensor converts light into digital signals. |
| Cabling | Connects components, ensuring data and power transmission. |
| Interface Peripherals | Devices that facilitate communication between camera and computing platforms. |
| Computing Platforms | Includes Industrial PCs, Vision Controllers, Embedded Systems, Workstation PCs, Enterprise Servers, and Cloud-based Systems, each with specific roles in processing and integration. |
| Software | Responsible for camera interfacing, image processing, analysis, decision-making, and communication; includes Camera Viewer, Comprehensive Software, and SDKs supporting multiple programming languages. |

This multidisciplinary approach allows the flatten machine vision system to automate inspection tasks, providing consistent and objective results.

Key Features in 2025

In 2025, flatten machine vision systems offer several advanced features. Artificial intelligence drives much of the progress. These systems use attention-centric neural networks to focus on important regions within an image. Real-time context awareness enables the system to adapt to changing environments. Improved flat dome lighting ensures even illumination, which enhances image quality and reduces errors.
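To make "focus on important regions" concrete, the core of an attention layer can be sketched as scaled dot-product attention. Each image patch receives a weight equal to the softmax of its similarity to a query, and the output is the weighted mix of the patches. This is a minimal pure-Python sketch that assumes the patches are already embedded as short vectors. The embeddings are hypothetical, and real attention-centric networks add learned projections, multiple heads, and other refinements.

```python
import math


def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention of one query over a set of patch embeddings."""
    d = len(query)
    # Similarity of the query to each patch, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Weighted sum of the value vectors, one output dimension at a time.
    dim = len(values[0])
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return weights, output


# Hypothetical 2-D embeddings for three image patches; the second matches the query best.
patches = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]
weights, context = attend([1.0, 0.0], patches, patches)
print([round(w, 3) for w in weights])
```

The patch most similar to the query gets the largest weight, which is the mechanism behind "focusing" on relevant image regions.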

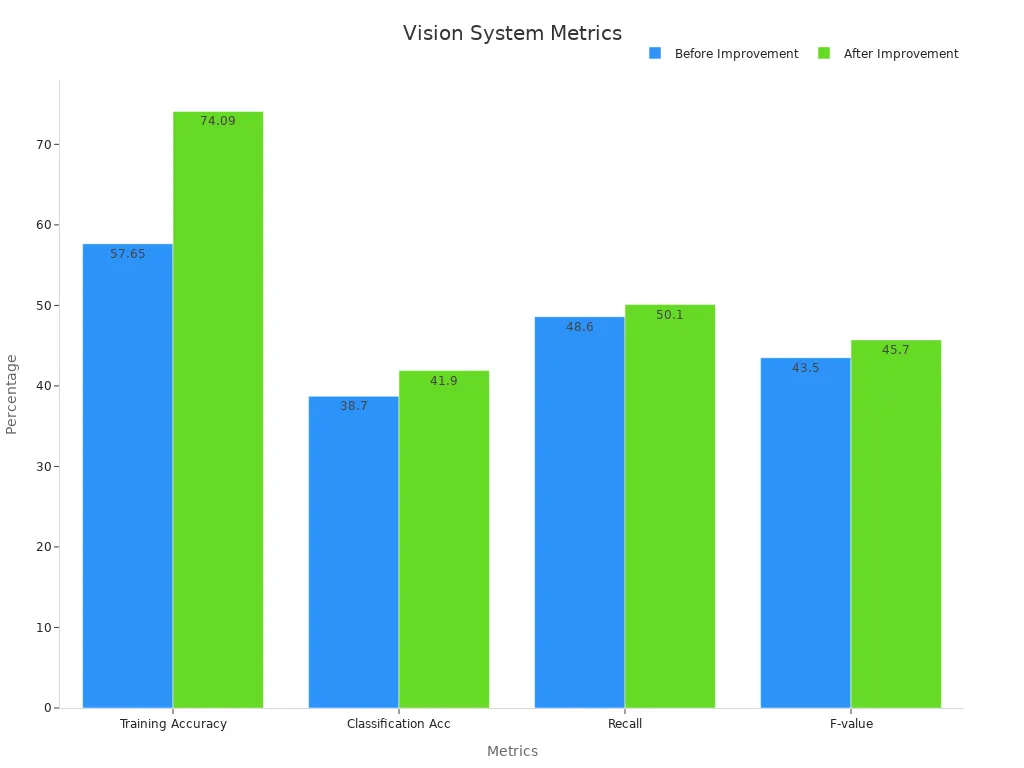

Reported performance metrics show clear gains over the baseline model. The mean square error (MSE) for image analysis dropped from 0.02 to 0.005, indicating better image quality and feature extraction. Training accuracy increased from 57.65% to 74.09%, and classification accuracy rose from 38.7% to 41.9%. Recall and F-value also improved, reflecting better detection and a better balance between precision and recall. The parameter count fell by 23% (from 4.8 million to 3.7 million), which speeds up training and reduces resource use. Training time and calculation speed both improved, making the system more efficient.

| Metric Description | Before Improvement | After Improvement | Improvement Detail |
|---|---|---|---|
| Mean Square Error (MSE) | 0.02 | 0.005 | Enhanced image quality and feature extraction |
| Training Accuracy (batch size) | 57.65% | 74.09% | Faster and more effective training |
| Classification Accuracy | 38.7% | 41.9% | Accuracy uplift due to improved feature extraction |
| Recall | 48.6% | 50.1% | Improved model detection performance |
| F-value | 43.5% | 45.7% | Balanced precision and recall improvements |
| Parameter Count | 4.8 million | 3.7 million | 23% reduction, speeding training |
| Average Classification Accuracy | N/A | +5% | Overall accuracy improvement |
| Training Time and Calculation Speed | N/A | Faster | Reduced time consumption |
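The headline figures in the table follow standard definitions, and a few lines of code make them easy to check. This sketch assumes images are stored as nested lists of pixel values. It also assumes the F-value is the usual F1 score (the harmonic mean of precision and recall), since the source does not say which F-measure was used.

```python
def mse(a, b):
    """Mean square error between two equally sized grayscale images (nested lists)."""
    diffs = [(x - y) ** 2 for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


def f_value(precision, recall):
    """F1 score: harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def relative_reduction(before, after):
    """Fractional decrease from `before` to `after`."""
    return (before - after) / before


# Parameter count from the table: 4.8 million -> 3.7 million.
print(round(relative_reduction(4.8e6, 3.7e6), 3))  # 0.229, i.e. the ~23% reduction
```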

These features make the flatten machine vision system more reliable and efficient for industrial use.

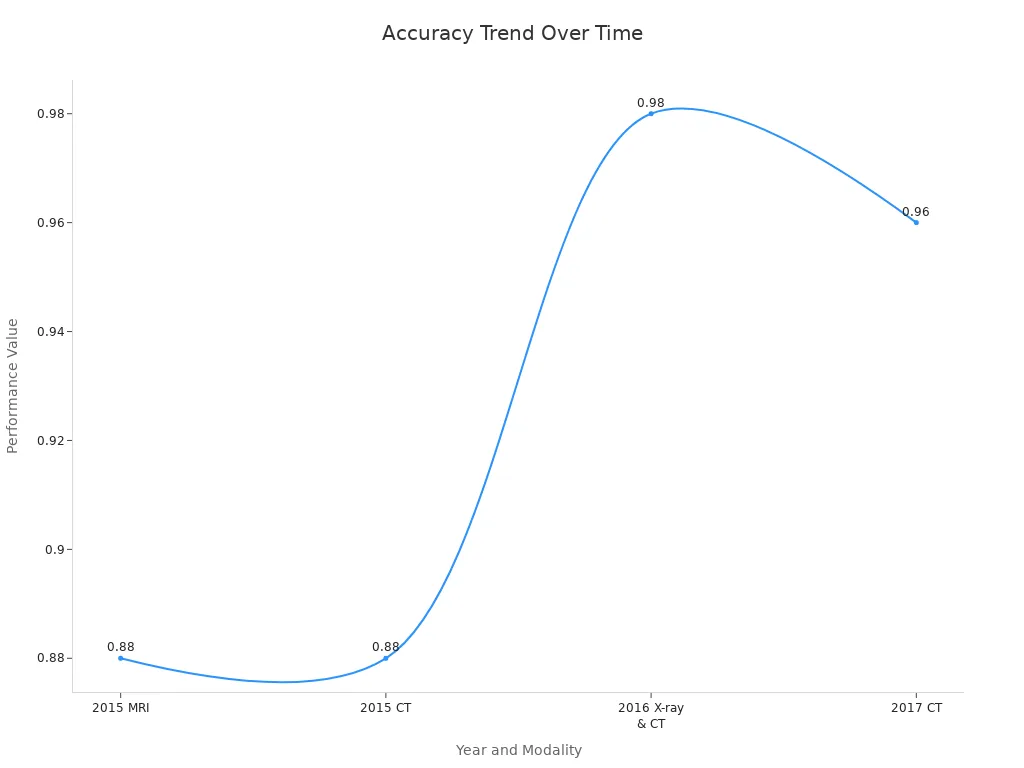

Applications

Flatten machine vision systems support a wide range of industrial applications. They perform automated quality control on assembly lines, detecting defects such as scratches and dents. In healthcare, they analyze medical images like X-rays and MRIs, helping doctors make early diagnoses. Autonomous vehicles use these systems for real-time object detection and navigation, processing camera feeds to identify pedestrians, road signs, and obstacles. Agriculture benefits from drone-based crop monitoring, while retail stores use these systems for automated checkout without barcodes.

| Application Area | Description | Quantitative Example or Detail |
|---|---|---|
| Visual Inspection | Automated checking of products for defects and quality control. | Real-time defect detection ensuring only compliant products proceed. |
| Component Sorting | Identification and categorization of parts by size, shape, and color to streamline assembly. | Automated sorting reduces manual labor and errors. |
| Robot Guidance | Vision-guided robots locate and orient parts for assembly without manual positioning. | Enables flexible automation across different products. |
| Part Counting | Automated tallying of components during manufacturing and packaging. | Example: PCB component counts such as 7 ICs, 12 connectors, 10 capacitors, etc. |
| Defect Detection | Identification of surface imperfections and anomalies during production. | Ensures high product quality by detecting defects missed by conventional methods. |
| Specification Accuracy | Verification of product dimensions and shapes against design standards. | Dimension inspection to guarantee compliance and reduce malfunctions. |
| Protective Gear Check | Monitoring employee compliance with PPE usage for safety. | Real-time alerts for missing hard hats, gloves, or safety glasses. |
| Access Control | Identification and authorization verification for restricted area entry. | Enhances security by preventing unauthorized access. |
| Maintenance Monitoring | Detection of wear, tear, and unusual equipment patterns to schedule proactive maintenance. | Early detection reduces downtime and extends equipment lifespan. |
| Barcode Scanning | Automated identification and logging of products in inventory management. | Speeds processing and reduces human error in supply chain tracking. |
| Storage Optimization | Analysis of warehouse layouts and item dimensions for optimal space utilization. | Improves retrieval times and operational efficiency. |
| Package Inspection | Verification of package sealing, labeling, and integrity before shipment. | Reduces shipping errors and returns. |
| Pallet Tracking | Real-time monitoring of pallet location and routing in warehouses and transit. | Enhances logistical efficiency and supply chain management. |

Example: A flatten machine vision system can count PCB components with high accuracy. For instance, it can detect 7 integrated circuits, 12 connectors, 2 clocks, 1 button, 2 electrolytic capacitors, 4 LEDs, 2 diodes, and 10 capacitors in a single digital image. This level of detail supports precise inventory management and quality assurance.
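Once a detector has labeled each component, counting and checking a board is simple bookkeeping. This sketch assumes a hypothetical detector output (one class label per detected part) and a hypothetical expected bill of materials that matches the counts above. It reports any part whose detected count differs from the expected count, including parts that were not expected at all.

```python
from collections import Counter

# Hypothetical detector output: one class label per detected component.
detections = (
    ["integrated_circuit"] * 7 + ["connector"] * 12 + ["clock"] * 2 + ["button"]
    + ["electrolytic_capacitor"] * 2 + ["led"] * 4 + ["diode"] * 2 + ["capacitor"] * 10
)

# Hypothetical bill of materials the board is supposed to match.
expected = {
    "integrated_circuit": 7, "connector": 12, "clock": 2, "button": 1,
    "electrolytic_capacitor": 2, "led": 4, "diode": 2, "capacitor": 10,
}


def check_counts(labels, expected):
    """Return {part: (detected, expected)} for every part whose count is off."""
    counts = Counter(labels)
    parts = set(counts) | set(expected)
    return {
        part: (counts[part], expected.get(part, 0))
        for part in parts
        if counts[part] != expected.get(part, 0)
    }


mismatches = check_counts(detections, expected)
print("PASS" if not mismatches else f"FAIL: {mismatches}")
```

With one capacitor missing from the detections, the check would report `{'capacitor': (9, 10)}` instead of passing.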

These applications show the versatility and value of flatten machine vision systems in modern industry.

Lighting

Lighting plays a crucial role in the performance of flatten machine vision systems. Proper illumination ensures that the image sensor captures clear and accurate details, which is essential for reliable analysis. Without the right lighting, even the most advanced image sensor cannot deliver consistent results. Lighting affects how the sensor detects features, edges, and defects on different surfaces.

Flat Dome Lights

Flat dome lights have become the preferred choice for many flatten machine vision systems in 2025. Their design creates uniform, shadow-free illumination across flat and slightly curved surfaces. This even lighting helps the image sensor pick up small details that other lighting types might miss.

- In practical tests, flat dome lights outperformed high-angle ring lights, coaxial lights, and dark field ring lights.

- High-angle ring and coaxial lights produced poor contrast, making it hard for the sensor to distinguish features.

- Dark field ring lights improved contrast but cast unwanted shadows that obscured features in the image.

- Diffuse dome lights gave even contrast but did not highlight features well enough.

- Flat dome lights provided the best results, offering even contrast without shadows or vignetting. They also do not need to be mounted close to the part, giving more flexibility in system setup.

This advantage makes flat dome lights ideal for reading small codes or inspecting fine details, especially when the sensor must work with challenging surfaces.
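One way to compare lighting options like those above is to image a blank, matte, flat target under each light and measure how even the result is. This minimal sketch assumes 8-bit grayscale pixel values in nested lists. The two metrics shown, the min/max ratio and the coefficient of variation, are common choices, but they are not necessarily the ones used in the tests described above.

```python
def uniformity(image):
    """Return (min/max ratio, coefficient of variation) for a flat-field image.

    A perfectly even light gives (1.0, 0.0); shadows or hot spots lower the
    ratio and raise the coefficient of variation.
    """
    pixels = [p for row in image for p in row]
    if max(pixels) == 0:
        raise ValueError("image is completely dark")
    mean = sum(pixels) / len(pixels)
    variance = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    return min(pixels) / max(pixels), variance ** 0.5 / mean


even = [[200, 200], [200, 200]]      # hypothetical flat dome result
shadowed = [[200, 200], [100, 200]]  # hypothetical ring light with a shadow
print(uniformity(even), uniformity(shadowed))
```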

Lighting Types

Machine vision systems use several lighting types, each with unique strengths:

- Fluorescent lighting: Efficient and affordable, provides diffuse light for non-reflective surfaces, but offers limited intensity.

- Quartz halogen lighting: Delivers high intensity and bright continuous light, suitable for precise inspections, but generates heat.

- LED lighting: Offers long life, energy efficiency, and color options. It is stable and flexible, though less cost-effective for large areas.

- Metal halide lighting: Features discrete wavelength peaks, useful for microscopy and fluorescence studies.

- Xenon lighting: Produces intense, bright light, excellent for strobe lighting in high-speed inspections, but uses more energy.

Performance metrics for these lighting types include intensity, spectral content, stability, and energy efficiency. The choice depends on the sensor’s spectral sensitivity, the inspection environment, and the object’s size and geometry.
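Matching a light source to the sensor's spectral sensitivity can be checked by testing whether the source's emission band overlaps the band the sensor responds to. The wavelength ranges below are illustrative assumptions, not datasheet values. Silicon sensors are generally sensitive from the visible into the near-infrared, which is why the SWIR source scores zero here. A real selection would also weigh the sensor's quantum-efficiency curve and the source's spectral power, which this sketch ignores.

```python
def band_overlap(source, sensor):
    """Width in nm of the overlap between an emission band and a sensitivity band."""
    lo = max(source[0], sensor[0])
    hi = min(source[1], sensor[1])
    return max(0, hi - lo)


# Hypothetical bands in nanometres (illustrative values only).
sensor_band = (400, 1000)
lights = {
    "white LED": (420, 700),
    "NIR LED": (820, 880),
    "SWIR source": (1200, 1600),
}

usable = {name: band_overlap(band, sensor_band) for name, band in lights.items()}
print(usable)
```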

Lighting Integration

Integrating lighting into flatten machine vision systems requires careful planning. Researchers highlight the need to evaluate and select lighting schemes that provide controlled, uniform illumination. This approach helps the image sensor capture high-quality images that reveal even the smallest defects and subtle color gradients. Modern systems combine lighting with high-resolution cameras and advanced sensors to minimize operator intervention and keep images consistent. The integration process often starts with assessing camera and lens options, followed by lighting evaluation to optimize the system’s performance. Proper lighting integration lets the sensor deliver reliable results across a wide range of environments.

Optics and Imaging

Lenses

Lenses play a vital role in flatten machine vision systems. They focus light onto the image sensor so that it can capture sharp, detailed images. Modern lens technology has advanced rapidly. Autofocus lenses now adjust automatically, making setup faster and more precise. P-Iris technology gives the system better control over the aperture, which improves image clarity and depth of field. Ruggedized lenses withstand harsh industrial conditions, so they maintain performance even in tough environments.

Short-Wave Infrared (SWIR) lenses help the sensor see details that visible light cannot reveal. These lenses improve image clarity and precision, especially when inspecting materials that standard lenses cannot handle. Accessories such as polarizers and cylindrical lenses reduce glare and enhance contrast, which helps the sensor detect fine features. Adjustable lens positions allow the system to change the field of view for different tasks.
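Choosing a lens for a given field of view usually starts with a focal-length estimate. Under the thin-lens model, the magnification is m = sensor width / field-of-view width, and combining 1/f = 1/d_o + 1/d_i with d_i = m·d_o gives f = d_o·m / (1 + m), where d_o is the working distance. The sketch below applies that formula. The 8.8 mm sensor width (roughly that of a 2/3-inch sensor) and the other numbers are hypothetical, and real lens selection should rely on the manufacturer's data.

```python
def focal_length_mm(sensor_width_mm, fov_width_mm, working_distance_mm):
    """Thin-lens estimate of the focal length that images the field of view onto the sensor.

    working_distance_mm is measured from the lens to the object.
    """
    m = sensor_width_mm / fov_width_mm         # optical magnification
    return working_distance_mm * m / (1 + m)   # from 1/f = 1/d_o + 1/d_i, d_i = m * d_o


# Hypothetical setup: 8.8 mm wide sensor, 88 mm wide field of view, 500 mm away.
print(round(focal_length_mm(8.8, 88.0, 500.0), 1))  # 45.5
```

In practice you would pick the nearest stock focal length and then adjust the working distance or accept a slightly different field of view.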

Sharper optics give downstream models cleaner input, which can improve accuracy and speed up training in machine vision. Alongside these gains, automated feature processing and model selection are reported to support data-driven decisions in 80% of tasks.

Cameras

Cameras serve as the eyes of the flatten machine vision system. The image sensor inside the camera converts light into digital signals, which the system then processes. Recent improvements in camera sensitivity and image resolution have transformed industrial inspection. InGaAs cameras, for example, detect near-infrared wavelengths, which helps the sensor find defects invisible to standard cameras. These advances lower production costs and make high-sensitivity cameras more accessible.

Smart cameras now include AI-powered algorithms and 3D vision. These features allow the sensor to analyze images in real time and spot tiny defects. Super-resolution techniques, such as deep learning models, boost image resolution and clarity. Tests show that segmentation precision can improve by nearly 20%, with Dice Similarity Coefficient scores reaching 0.91. This level of performance ensures that the sensor delivers reliable results in demanding applications.
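The Dice Similarity Coefficient quoted above compares a predicted segmentation mask with a reference mask: Dice = 2|A ∩ B| / (|A| + |B|), so 1.0 is a perfect match and 0.0 is no overlap. This minimal sketch assumes binary masks stored as nested lists of 0/1 values. Treating two empty masks as a perfect match is a common convention, and it is an assumption here.

```python
def dice(mask_a, mask_b):
    """Dice Similarity Coefficient between two binary masks of equal shape."""
    a = [p for row in mask_a for p in row]
    b = [p for row in mask_b for p in row]
    intersection = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(1 for x in a if x) + sum(1 for y in b if y)
    # Two empty masks are treated as identical.
    return 2 * intersection / total if total else 1.0


predicted = [[1, 1, 0], [0, 1, 0]]  # hypothetical model output
reference = [[1, 1, 0], [0, 0, 0]]  # hypothetical ground truth
print(dice(predicted, reference))   # 0.8
```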

Cabling

Cabling connects all components in the machine vision system. Reliable cables ensure that the camera receives power and transmits data without loss. CAT5e cables can carry 10GigE traffic up to 30 meters, while CAT6A cables offer better shielding, support longer runs, and work well in environments with strong electromagnetic interference. Proper cable management prevents signal loss and keeps the sensor working at peak performance.

| Aspect | Details |
|---|---|
| Cable Coiling | Excessive coiling can cause interference, leading to connectivity issues or speed reduction. |
| Cable Types | CAT5e supports 10GigE up to 30m; CAT6A recommended beyond 30m due to better shielding. |
| Shielding | CAT6A has more robust shielding, improving performance in EMI-prone environments. |
| Cable Bends | Tight bends in CAT5e cables may degrade signal quality. |
| RJ45 Couplers | Should be avoided to maintain signal integrity and 10GigE link speeds. |
| Impact on Reliability | Proper cabling practices directly influence system performance and reliability in machine vision setups. |

Note: Good cabling practices help the sensor maintain high data rates and stable connections, which are essential for accurate image analysis.
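The cable-type guidance in the table can be encoded as a simple planning rule. This sketch uses the 30 m and EMI criteria from the table. It adds the 100 m maximum link length of 10GBASE-T copper as an upper bound. The function name and the fiber suggestion are illustrative assumptions, not part of any vendor's tooling.

```python
def recommend_cable(length_m, high_emi=False):
    """Suggest a copper cable category for a 10GigE camera link.

    Rules: CAT5e is acceptable up to 30 m in a clean environment;
    CAT6A is used beyond 30 m or wherever EMI is strong.
    """
    if length_m > 100:
        # 10GBASE-T copper links top out at 100 m.
        raise ValueError("run exceeds 100 m; consider a fiber link instead")
    if length_m > 30 or high_emi:
        return "CAT6A"
    return "CAT5e"


print(recommend_cable(12), recommend_cable(45), recommend_cable(12, high_emi=True))
```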

Processing and Software

Image Flattening Process

The flatten machine vision system uses a specialized image flattening process to convert complex visual data into a simplified format. This process takes the feature maps generated by neural networks and transforms them into vectors. By flattening the image data, the system can analyze patterns and features more efficiently. The digital image becomes easier to process, which improves the speed and accuracy of defect detection. This method supports high-volume industrial tasks where rapid and reliable analysis is essential. The process also helps the system handle images with varying lighting and surface conditions, ensuring consistent results.
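The flattening step described above is the same operation a "flatten" layer performs in a convolutional network: a stack of C feature maps, each H × W, becomes a single vector of length C·H·W that a fully connected classifier can consume. This pure-Python sketch uses channel-major (row-major) order, which matches `reshape(-1)` on a C-ordered NumPy array. The feature-map values are hypothetical.

```python
def flatten_feature_maps(feature_maps):
    """Flatten a C x H x W stack of feature maps into one vector, channel by channel."""
    return [value for channel in feature_maps for row in channel for value in row]


# Two hypothetical 2x2 feature maps, e.g. the output of a small convolutional layer.
maps = [
    [[0.1, 0.2], [0.3, 0.4]],
    [[0.5, 0.6], [0.7, 0.8]],
]
vector = flatten_feature_maps(maps)
print(len(vector))  # 8 = 2 channels x 2 x 2
print(vector)       # [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
```

The order matters only in that training and inference must use the same convention; the classifier weights are learned against a fixed layout.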

Machine Vision System Workflow

A typical machine vision system follows a structured workflow to analyze images. The process begins with image capture, where cameras or sensors acquire the digital image from different angles. Next, the system performs preprocessing to correct distortion, adjust lighting, and remove noise. Feature extraction follows, using algorithms to identify edges, shapes, and regions within the image. Pattern recognition comes next, where machine learning models classify objects or detect defects. Finally, the system makes decisions, such as stopping production or sorting items.
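The workflow above can be sketched as a chain of small functions, one per stage. This is a toy illustration under explicit assumptions. The captured frame is passed in as an 8-bit grayscale nested list. Preprocessing is a constant background subtraction. The "feature" is a count of bright pixels. A fixed threshold stands in for the trained pattern-recognition model a real system would use. All function names and parameter values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class InspectionResult:
    defect_pixels: int
    label: str
    action: str


def preprocess(image, background=20):
    """Subtract a constant background level and clip to 0..255 (stand-in for noise/lighting correction)."""
    return [[min(255, max(0, p - background)) for p in row] for row in image]


def extract_features(image, threshold=128):
    """Toy feature: number of pixels brighter than the threshold, e.g. a reflective scratch."""
    return sum(1 for row in image for p in row if p > threshold)


def recognize(defect_pixels, max_allowed=2):
    """Stand-in for a trained classifier: label the part from its feature value."""
    return "defective" if defect_pixels > max_allowed else "good"


def decide(label):
    """Map the label to a line action such as sorting."""
    return "divert to reject bin" if label == "defective" else "pass downstream"


def inspect(image):
    """Run one captured frame through preprocess -> features -> recognition -> decision."""
    clean = preprocess(image)
    defect_pixels = extract_features(clean)
    label = recognize(defect_pixels)
    return InspectionResult(defect_pixels, label, decide(label))


frame = [[30, 30, 30], [30, 250, 30], [30, 240, 230]]  # hypothetical captured frame
print(inspect(frame))
```

Keeping each stage separate mirrors the workflow and makes it easy to swap the toy recognizer for a real model without touching capture or decision logic.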

| Diagram Type | Purpose and Use Case | Relevance to Machine Vision Systems |
|---|---|---|
| Workflow Diagram | Visualizes structured processes, task sequences, decisions, and interactions to improve efficiency | Maps out machine vision processing steps and team collaboration |
| Data Flow Diagram (DFD) | Shows how data moves through a system, focusing on data sources, processing, and storage | Useful for understanding image and data flow in vision systems |
| EPC Diagram | Models business processes with events, tasks, and decision points for process improvement | Helps optimize workflows in manufacturing and automation |
| SDL Diagram | Models complex real-time system interactions, states, and transitions | Useful for system design and troubleshooting in embedded vision systems |
| Process Map | Provides detailed step-by-step process documentation and analysis | Maps detailed machine vision workflows and quality control steps |
| Process Flow Diagram | Visualizes high-level process flows and key equipment in industrial processes | Represents overall machine vision system operations in manufacturing |

The workflow supports integration with AI and edge computing, which enhances real-time decision-making and operational efficiency.

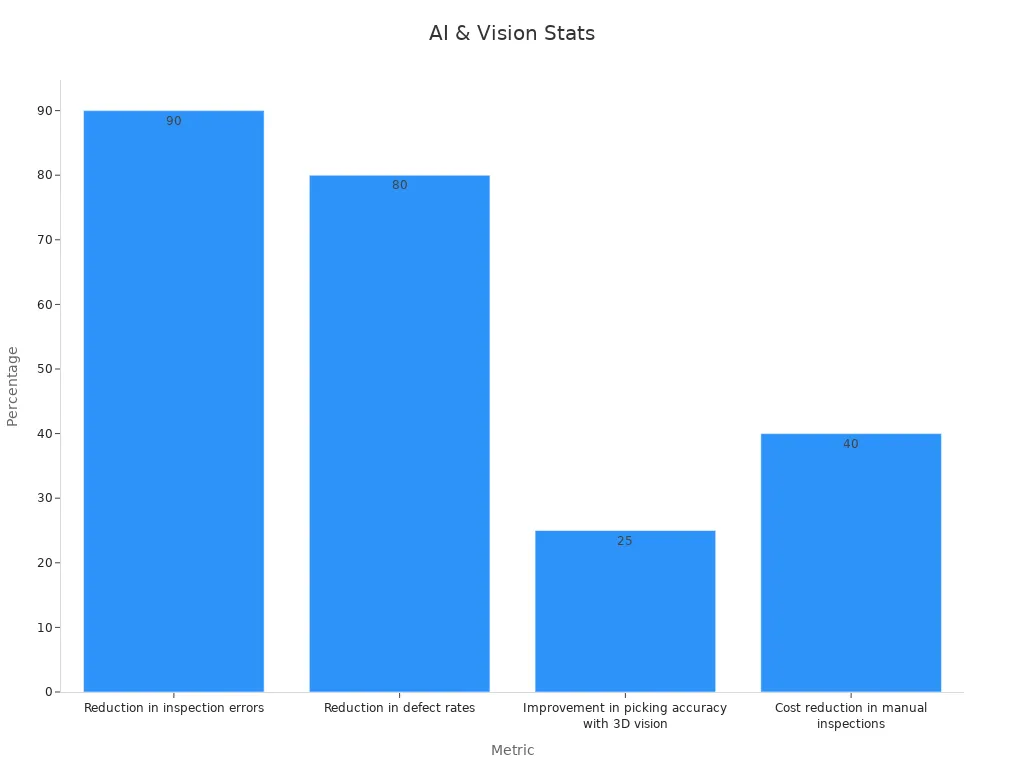

AI and Software Advances

Software and AI advancements in 2025 have transformed how machine vision systems process images. AI-powered algorithms now deliver real-time analysis with high accuracy. Recent studies report that inspection errors fall by over 90% compared with manual inspection and that defect rates drop by up to 80%. Picking accuracy with 3D vision has improved by 25%. Real-time control latency using middleware such as ROS 2 now measures below one millisecond. These improvements have led to significant cost and energy savings in industries such as automotive and pharmaceuticals.

The global AI market continues to grow rapidly. Projections estimate the generative AI market will reach $1.3 trillion by 2032. AI tools are expected to reach over 700 million users by 2030. Over 95% of executives believe generative AI will revolutionize applications, including digital image processing. Manufacturing, healthcare, and software development lead in AI adoption, driving productivity and automation. These trends suggest that the flatten machine vision system will remain at the forefront of industrial innovation.

Flatten machine vision system technology in 2025 stands out for its advanced lighting, precise optics, and powerful software. These systems deliver fast, accurate results across many industries.

- Lighting and optics improvements help systems capture clear images across a wide range of environments.

- Software and AI advancements make systems smarter and more reliable.

Companies adopting these systems can expect better quality control and higher productivity. Future systems will likely become even more adaptable and accessible. Every industry should explore how these systems can transform their operations.

FAQ

What makes a flatten machine vision system different from traditional systems?

A flatten machine vision system uses advanced lighting and AI to create clear, flat images. This approach helps the system find small defects and patterns that older systems might miss.

Can a flatten machine vision system work in low-light environments?

Yes. The system supplies its own illumination with flat dome lights and uses sensitive cameras, so it can capture clear images even when ambient lighting is poor.

How does AI improve the accuracy of these systems?

AI helps the system focus on important image areas. It learns from data and adapts to new situations. This process leads to better detection and fewer errors.

What industries benefit most from flatten machine vision systems?

Industries such as manufacturing, healthcare, logistics, and agriculture use these systems. They help with quality control, inspection, and automation tasks.
