Common loss functions in machine vision include mean squared error (MSE), cross-entropy, Dice loss, and L1/L2 losses. A loss function measures the difference between a model’s predictions and the ground truth in computer vision tasks. Minimizing the loss during training pushes the model toward the image features that matter for the task, which improves accuracy and performance. In classification, cross-entropy loss often leads to better results, while MSE is a common choice for regression. The loss function in a machine vision system directly affects how well a model learns and adapts to image data.

Key Takeaways

- Loss functions measure how well a machine vision model predicts and help it learn by reducing errors.

- Different loss functions suit different tasks: cross-entropy for classification, MSE or MAE for regression, and Dice loss for segmentation.

- Choosing the right loss function improves model accuracy, handles challenges like outliers and class imbalance, and speeds up training.

- Advanced models combine multiple loss functions to create realistic images and keep important details.

- Testing and adapting loss functions to your data and task leads to better machine vision results.

Loss Function Basics

What Is a Loss Function?

A loss function measures how well a machine learning model predicts the correct answer. In computer vision, the loss function compares the model’s output to the true label or value. The model uses this information to adjust its parameters during training. Neural networks rely on loss functions to guide them toward better predictions. The process of minimization helps the model reduce the difference between its predictions and the actual results.
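To make the idea of minimization concrete, here is a minimal, framework-free sketch of gradient descent on a one-parameter model `y_hat = w * x`. The toy data, the learning rate, and the function names are illustrative assumptions and do not come from any particular library.

```python
# Toy data generated by y = 3 * x (illustrative values only).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]


def mse(w):
    """Mean squared error of the model y_hat = w * x on the toy data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w):
    """Derivative of the MSE with respect to the parameter w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


w = 0.0               # initial parameter guess
learning_rate = 0.05  # step size (assumed; real problems need tuning)
history = []          # loss value recorded before each update
for step in range(100):
    history.append(mse(w))
    w -= learning_rate * mse_grad(w)  # step against the gradient

print(f"learned w = {w:.4f}, final loss = {mse(w):.6f}")
```

The loss drops at every step and `w` approaches the value that generated the data. Real vision models follow the same loop with millions of parameters and automatic differentiation.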

The terms loss function and cost function are often used interchangeably. Strictly speaking, the loss is computed for a single example, while the cost function represents the total or average error across all examples in the dataset. For example, mean squared error (MSE) and mean absolute error (MAE) are common choices for regression tasks. MSE penalizes large errors more heavily, while MAE is more robust to outliers.

| Loss Function Name | Mathematical Definition | Empirical Data Relevance in Machine Vision Systems |

|---|---|---|

| Mean Squared Error (MSE) | \( \text{MSE} = \frac{1}{N} \sum_{i=1}^{N} (y_i - \hat{y}_i)^2 \) | Assumes Gaussian noise in regression tasks; emphasizes larger errors by squaring. |

| Mean Absolute Error (MAE) | \( \text{MAE} = \frac{1}{N} \sum_{i=1}^{N} \lvert y_i - \hat{y}_i \rvert \) | Robust to outliers; used when data noise is non-Gaussian or heavy-tailed. |

| Binary Cross-Entropy Loss | \( L_{BCE} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log (1 - \hat{y}_i) \right] \) | Models probabilistic outputs in binary classification; aligns with empirical label distributions. |

| Categorical Cross-Entropy | \( L_{CCE} = -\sum_{i=1}^{C} y_i \log \hat{y}_i \) | Used in multi-class classification; aligns with empirical class probability distributions. |

These loss functions are shaped by the data and noise found in machine vision tasks. They help neural networks focus on reducing prediction error and improving performance.

Why Loss Functions Matter

Loss functions play a key role in machine learning and computer vision. They guide neural networks during training by showing how far the model’s predictions are from the correct answers. When a model uses the right loss function, it learns important features in images and improves its accuracy.

Training objectives often also include regularization terms, such as L1 and L2 penalties on the weights. These terms help control the complexity of neural networks and reduce overfitting. By discouraging very large weights, they also tend to keep training stable and help the model generalize better to new data.
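How a penalty term enters the training objective can be sketched as follows. This is a minimal illustration, assuming the data loss has already been computed. The function names, the example weights, and the penalty strengths `l1` and `l2` are hypothetical.

```python
def l1_penalty(weights):
    """Sum of absolute weight values (encourages sparse weights)."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """Sum of squared weight values (discourages large weights)."""
    return sum(w * w for w in weights)


def regularized_loss(data_loss, weights, l1=0.0, l2=0.0):
    """Data loss plus weighted L1 and L2 penalties on the model weights."""
    return data_loss + l1 * l1_penalty(weights) + l2 * l2_penalty(weights)


weights = [0.5, -1.5, 2.0]  # illustrative model weights
total = regularized_loss(0.8, weights, l1=0.01, l2=0.001)
print(total)
```

The penalty strengths trade off fitting the training data against keeping the weights small, so they are usually tuned on a validation set.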

In computer vision models, the choice of loss function affects how quickly and accurately the model learns. For example, weighted pixel-wise cross-entropy loss helps with class imbalance in image segmentation. Perceptual loss allows neural networks to capture high-level features, not just pixel differences. Cycle consistency loss improves image translation quality by making sure generated images can return to their original form.
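As a rough illustration of weighted pixel-wise cross-entropy, the sketch below treats an image as a flat list of pixels, each with a predicted class distribution. The function name, the example probabilities, and the class weights are assumptions. Normalizing by the sum of the weights is one common convention, not the only one.

```python
import math


def weighted_pixel_cross_entropy(probs, labels, class_weights):
    """Weighted mean of per-pixel cross-entropy.

    probs: one list of class probabilities per pixel.
    labels: the true class index of each pixel.
    class_weights: one weight per class; rare classes get larger weights.
    """
    total = 0.0
    weight_sum = 0.0
    for pixel_probs, label in zip(probs, labels):
        weight = class_weights[label]
        # Clamp to avoid log(0) for extremely confident wrong predictions.
        total += -weight * math.log(max(pixel_probs[label], 1e-12))
        weight_sum += weight
    return total / weight_sum


# Three background pixels (class 0) and one foreground pixel (class 1).
probs = [[0.9, 0.1], [0.8, 0.2], [0.9, 0.1], [0.6, 0.4]]
labels = [0, 0, 0, 1]

uniform = weighted_pixel_cross_entropy(probs, labels, [1.0, 1.0])
weighted = weighted_pixel_cross_entropy(probs, labels, [1.0, 3.0])
print(uniform, weighted)
```

With the larger foreground weight, the poorly predicted foreground pixel dominates the loss, which is the effect that helps with class imbalance.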

Studies show that using adaptive loss functions can lower error rates in deep learning models. For instance, crowd counting models using a composite loss function saw mean absolute error drop by up to 12.2% on some datasets. This suggests that the right loss function can make a big difference in model performance.

Loss Functions in Computer Vision

Classification Loss Functions

Classification tasks in computer vision require models to assign labels to images or objects. Neural networks use loss functions to measure how well their predictions match the true class. The most common loss function for image classification is cross-entropy loss. This loss function compares the predicted probability distribution with the actual class labels.

- Binary Cross-Entropy

Binary cross-entropy is used when there are only two classes. The formula is:

\[
L_{BCE} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log (1 - \hat{y}_i) \right]
\]

Neural networks use binary cross-entropy to learn from each image and reduce prediction error. This loss function works well for tasks like detecting if an object is present or absent.

- Categorical Cross-Entropy

Categorical cross-entropy is used for multi-class classification. The formula is:

\[
L_{CCE} = -\sum_{i=1}^{C} y_i \log \hat{y}_i
\]

Neural networks use categorical cross-entropy to compare the predicted class probabilities with the true class. This loss function helps models learn to classify images into many categories, such as animals or vehicles.

- Hinge Loss

Hinge loss is often used in support vector machines for classification. It encourages the model to create a margin between classes. Hinge loss can improve margin-based classification, but it may not provide probabilistic outputs like cross-entropy.
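The three classification losses above can be sketched in plain Python. This is a minimal illustration, not a production implementation. The function names and the clamping constant `EPS` are assumptions, and the hinge loss assumes labels encoded as -1 or +1 with raw (non-probabilistic) scores.

```python
import math

EPS = 1e-12  # guards against log(0)


def binary_cross_entropy(y_true, y_prob):
    """Mean binary cross-entropy; y_true in {0, 1}, y_prob in (0, 1)."""
    total = sum(
        y * math.log(max(p, EPS)) + (1 - y) * math.log(max(1 - p, EPS))
        for y, p in zip(y_true, y_prob)
    )
    return -total / len(y_true)


def categorical_cross_entropy(one_hot, probs):
    """Cross-entropy for one example with a one-hot label vector."""
    return -sum(y * math.log(max(p, EPS)) for y, p in zip(one_hot, probs))


def hinge_loss(y_true, scores):
    """Mean hinge loss; y_true in {-1, +1}, scores are raw model outputs."""
    return sum(max(0.0, 1.0 - y * s) for y, s in zip(y_true, scores)) / len(y_true)


print(binary_cross_entropy([1, 0], [0.9, 0.1]))
print(categorical_cross_entropy([0, 1, 0], [0.2, 0.7, 0.1]))
print(hinge_loss([1, -1], [2.0, 0.5]))
```

In the hinge example, the first score is correct with a margin above 1 and contributes nothing, while the second is on the wrong side of the boundary and is penalized.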

Cross-entropy loss is popular in computer vision because it provides clear gradients for neural networks during training. However, it weights every example equally, which can cause problems when some classes appear far more often than others. Focal loss can help by down-weighting easy, well-classified examples (which usually come from the common classes) so that training focuses on hard and rare ones.
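A binary focal loss can be sketched as cross-entropy scaled by a factor of `(1 - p_t) ** gamma`, where `p_t` is the probability the model assigns to the true class. The defaults `gamma=2.0` and `alpha=0.25` follow the values commonly quoted for focal loss. The function name and everything else here are illustrative assumptions.

```python
import math


def binary_focal_loss(y_true, y_prob, gamma=2.0, alpha=0.25):
    """Mean binary focal loss; y_true in {0, 1}, y_prob = P(class 1)."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p_t = p if y == 1 else 1.0 - p              # probability of the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha  # class balancing factor
        modulating = (1.0 - p_t) ** gamma           # near 0 for easy examples
        total += -alpha_t * modulating * math.log(max(p_t, 1e-12))
    return total / len(y_true)


easy = binary_focal_loss([1], [0.95])  # confident and correct
hard = binary_focal_loss([1], [0.30])  # wrong side of 0.5
print(easy, hard)
```

Setting `gamma=0` removes the modulating factor and leaves an alpha-weighted cross-entropy, which is a quick way to check an implementation.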

The table below compares the performance of different classification loss functions on popular datasets:

| Loss Function Type | Loss Functions Compared | Datasets Used | Performance Metrics | Key Observations |

|---|---|---|---|---|

| Classification Losses | Binary Cross-Entropy, Hinge Loss, Categorical Cross-Entropy, Multiclass Hinge Loss | MNIST, CIFAR-10 | Accuracy, F1-score, ROC curves | Binary cross-entropy offers probabilistic interpretability. Hinge loss can improve margin-based classification. Trade-offs exist in optimization stability. |

Neural networks trained with cross-entropy loss often achieve high accuracy in image classification and object detection tasks. However, class imbalance can reduce performance. Researchers have developed new loss functions and ensemble methods to improve results, especially in medical image classification.

Regression Loss Functions

Regression tasks in computer vision predict continuous values, such as the location of an object in an image. Neural networks use loss functions to measure prediction error between the predicted and actual values.

- Mean Squared Error (MSE)

Mean squared error is the most common loss function for regression. The formula is:

\[
\text{MSE} = \frac{1}{N} \sum_{i=1}^{N} (y_i - \hat{y}_i)^2
\]

Neural networks use mean squared error to penalize large prediction errors. This loss function assumes the noise in the data follows a Gaussian distribution. MSE is sensitive to outliers because it squares the error, making large errors even larger.

- Mean Absolute Error (MAE)

Mean absolute error is another popular loss function for regression. The formula is:

\[
\text{MAE} = \frac{1}{N} \sum_{i=1}^{N} \lvert y_i - \hat{y}_i \rvert
\]

MAE penalizes errors linearly, making it more robust to outliers. Neural networks use MAE when the data contains noise or outliers that could affect training.

- L1 and L2 Losses

L1 loss is the same as mean absolute error. L2 loss is another name for mean squared error. Both help neural networks learn by minimizing prediction error, but they respond differently to outliers.

A comparison of regression loss functions shows important differences:

- Mean squared error provides smooth gradients for neural networks, which helps with training.

- Mean absolute error is less sensitive to outliers, making it useful for noisy image data.

- The choice between MSE and MAE depends on the data and the goal of the model.
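The different reactions of MSE and MAE to a single outlier can be seen in a small numeric sketch. The target values and predictions below are made up for illustration.

```python
def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


y_true = [10.0, 12.0, 11.0, 13.0]
clean = [10.5, 11.5, 11.5, 12.5]         # every prediction is off by 0.5
with_outlier = [10.5, 11.5, 11.5, 33.0]  # last prediction is off by 20

print("clean:  ", mse(y_true, clean), mae(y_true, clean))
print("outlier:", mse(y_true, with_outlier), mae(y_true, with_outlier))
```

One bad prediction multiplies the MSE by a factor of about 400 here, while the MAE grows by a factor of about 11. That gap is why MAE is often preferred for noisy data.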

The table below summarizes findings from studies comparing regression loss functions:

| Loss Function Type | Loss Functions Compared | Datasets Used | Performance Metrics | Key Observations |

|---|---|---|---|---|

| Regression Losses | MAE vs. MSE | MNIST, CIFAR-10 | Accuracy, F1-score, AUC | MAE shows robustness to outliers. MSE provides smoother gradients but is sensitive to large errors. |

Researchers found that mean squared error works best when the noise in the image data is Gaussian. However, mean absolute error performs better when outliers are present. Cross-validation helps select the best loss function for a given task.
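One way to see why the two losses behave differently is to ask which constant prediction minimizes each. The sketch below uses a simple grid search over made-up data. It illustrates the well-known result that MSE is minimized by the mean and MAE by the median. The variable names and the candidate grid are assumptions.

```python
import statistics

data = [1.0, 2.0, 3.0, 4.0, 100.0]  # four typical values and one outlier


def mse_of_constant(c):
    """MSE when every prediction is the constant c."""
    return sum((x - c) ** 2 for x in data) / len(data)


def mae_of_constant(c):
    """MAE when every prediction is the constant c."""
    return sum(abs(x - c) for x in data) / len(data)


candidates = [i * 0.5 for i in range(201)]  # 0.0, 0.5, ..., 100.0
best_for_mse = min(candidates, key=mse_of_constant)
best_for_mae = min(candidates, key=mae_of_constant)

print("MSE minimizer:", best_for_mse, "mean:", statistics.mean(data))
print("MAE minimizer:", best_for_mae, "median:", statistics.median(data))
```

The outlier drags the MSE-optimal prediction far from the typical values, while the MAE-optimal prediction stays with the majority of the data.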

- Ordinary least squares minimizes mean squared error and works well when data has no outliers.

- Ridge regression can outperform ordinary least squares in terms of mean squared error when data has multicollinearity.

- The choice of loss function affects how well the model handles noise and prediction error in images.

Segmentation Loss Functions

Semantic segmentation tasks require models to label each pixel in an image. Neural networks use specialized loss functions to measure how well their predictions match the true segmentation.

- Dice Loss

Dice loss measures the overlap between the predicted and true segmentation masks. It is based on the Dice coefficient:

\[
\text{Dice} = \frac{2 \sum_{i=1}^{N} y_i \hat{y}_i}{\sum_{i=1}^{N} y_i + \sum_{i=1}^{N} \hat{y}_i}
\]

The loss is \( 1 - \text{Dice} \), so a perfect overlap gives a loss of zero. Neural networks use Dice loss to improve segmentation accuracy, especially when the target regions are small or imbalanced.

- Intersection over Union (IoU) Loss

IoU loss, also called Jaccard loss, is based on the intersection divided by the union of the predicted and true masks, and is typically defined as one minus that ratio. This loss function helps neural networks focus on the correct boundaries in images.

- Cross-Entropy Loss for Segmentation

Cross-entropy loss is also used in segmentation tasks. Neural networks use pixel-wise cross-entropy to compare each predicted pixel with the true label. Weighted cross-entropy can help with class imbalance.
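The overlap-based losses can be sketched on flattened masks. Dice loss is usually taken as one minus the Dice coefficient, and IoU loss as one minus the IoU. The function names and the small `smooth` constant (which avoids division by zero on empty masks) are assumptions for this illustration.

```python
def dice_coefficient(y_true, y_pred, smooth=1e-6):
    """Dice overlap between two flattened masks with values in [0, 1]."""
    intersection = sum(t * p for t, p in zip(y_true, y_pred))
    return (2.0 * intersection + smooth) / (sum(y_true) + sum(y_pred) + smooth)


def dice_loss(y_true, y_pred, smooth=1e-6):
    """One minus the Dice coefficient; 0 means perfect overlap."""
    return 1.0 - dice_coefficient(y_true, y_pred, smooth)


def iou_loss(y_true, y_pred, smooth=1e-6):
    """One minus intersection over union (Jaccard loss)."""
    intersection = sum(t * p for t, p in zip(y_true, y_pred))
    union = sum(y_true) + sum(y_pred) - intersection
    return 1.0 - (intersection + smooth) / (union + smooth)


y_true = [1, 1, 0, 0]  # two foreground pixels
y_pred = [1, 0, 0, 0]  # the model finds only one of them

print(dice_loss(y_true, y_pred), iou_loss(y_true, y_pred))
```

Because both functions accept values between 0 and 1, the same code works with soft predictions (probabilities), which is what makes these losses usable for gradient-based training.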

Studies show that Dice loss outperforms cross-entropy loss by 1 to 6 percentage points in segmentation accuracy across datasets. Modified loss functions can improve segmentation scores by 5% to 15% for small lesions. Assigning zero weight to background pixels can increase accuracy by 6 percentage points in eye fundus images.

The table below summarizes improvements in segmentation tasks using specialized loss functions:

| Dataset / Task | Loss Function / Variation | Improvement Quantified |

|---|---|---|

| Small lesions segmentation | Modified loss functions | 5% to 15% increase in segmentation scores |

| Across datasets | Dice loss vs Cross Entropy | Dice outperforms by 1 to 6 percentage points |

| MRI segmentation | Weighting false positives vs false negatives | Up to 12 percentage points improvement |

| Eye fundus images (EFI) | Assigning zero weight to background | 6 percentage points improvement |

| Difficult small targets (EFI) | Loss modifications | 6 to 9 percentage points improvement |

| Network architectures | DeepLabV3 vs UNet and FCN | DeepLabV3 outperforms in all datasets |

A recent study on medical image segmentation found that common metrics like Dice overlap may not always reflect clinical relevance. The study suggests that new evaluation metrics and loss functions are needed for reliable results in challenging cases.

Neural networks trained with segmentation loss functions like Dice and IoU achieve better results in semantic segmentation and object detection. These loss functions help models learn to identify boundaries and regions in complex images.

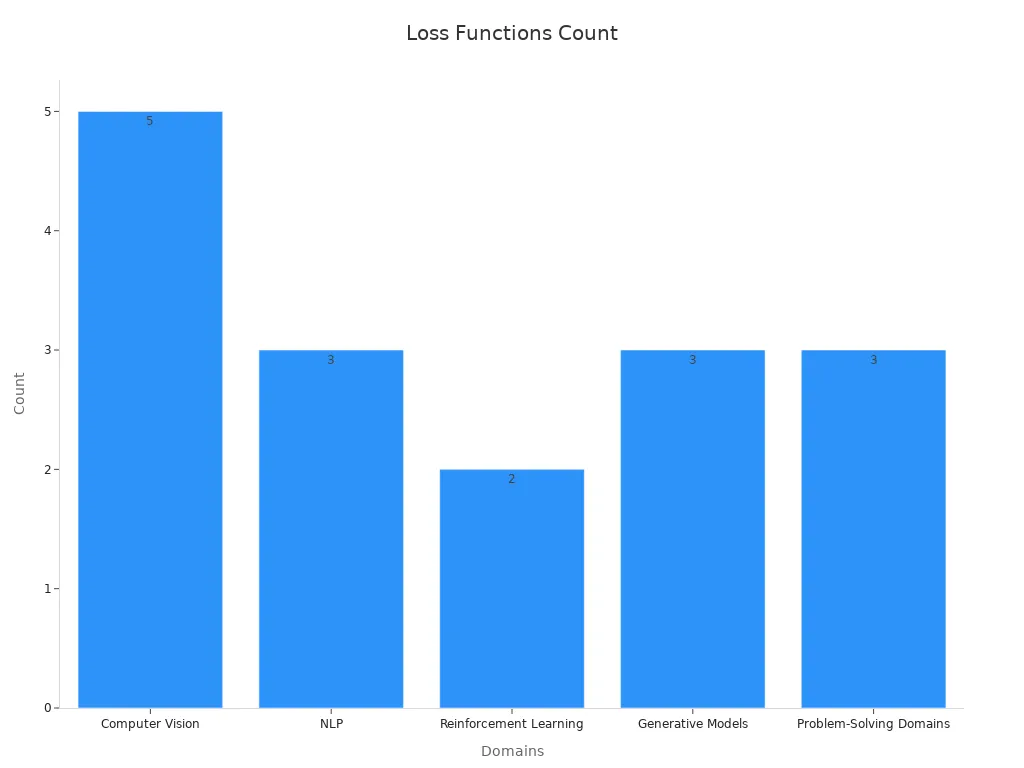

Below is a chart showing the use of loss functions across different domains:

Loss Functions in a Machine Vision System

Impact on Model Performance

The loss function in a machine vision system shapes how neural networks learn from images. The choice of loss function affects model performance in many ways. For example, a study on wildlife re-identification compared triplet loss and Proxy-NCA loss. Models trained with triplet loss reached higher Recall@1 scores, which means better identification accuracy. Proxy-NCA loss made training easier but did not improve results much. This shows that the loss function can change key metrics like mean average precision and recall.
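For reference, triplet loss can be sketched as follows: it pulls an anchor embedding toward a positive example (same identity) and pushes it away from a negative one (different identity) until the gap exceeds a margin. The two-dimensional embeddings, the margin of 0.2, and the function names are illustrative assumptions, not values from the study mentioned above.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Zero once the negative is farther than the positive by at least the margin."""
    gap = euclidean(anchor, positive) - euclidean(anchor, negative)
    return max(0.0, gap + margin)


anchor = [0.0, 0.0]
positive = [0.1, 0.0]       # same identity, close to the anchor
far_negative = [1.0, 0.0]   # different identity, already far away
near_negative = [0.2, 0.0]  # different identity, too close

print(triplet_loss(anchor, positive, far_negative))
print(triplet_loss(anchor, positive, near_negative))
```

Only triplets that violate the margin produce a non-zero loss, which is why the way triplets are selected matters so much in practice.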

Loss functions guide neural networks during training by measuring error. They help the model update its parameters to reduce mistakes. Some loss functions, like mean squared error, penalize large errors more. Others, like mean absolute error, handle outliers better. The choice of loss function also affects computational efficiency: some loss functions need more calculations, which can slow down training. The cost function, which aggregates the error over all training examples, is the quantity the optimizer actually minimizes.

The table below shows how specific loss functions improve performance in real tasks:

| Vision Task | Loss Function | Quantified Benefit |

|---|---|---|

| Object Detection | Focal Loss | ~5% increase in accuracy |

| Medical Image Segmentation | Dice Loss | Up to 10% increase in Dice coefficient |

Choosing the Right Loss Function

Selecting the best loss function for a machine vision system requires careful thought. The choice must account for outliers, class imbalance, and computational limits. For classification, cross-entropy loss works well because it compares predicted probabilities with true labels. For regression, mean squared error or mean absolute error may fit better, depending on the data.

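Custom loss functions help when standard ones do not solve the problem. For example, in TensorFlow, developers can combine losses for special tasks, such as weighting two loss terms of a variational autoencoder (VAE):

    def vae_loss(y_true, y_pred):
        # vae_kl_loss and vae_rc_loss are helper losses assumed to be
        # defined elsewhere (by their names, a KL-divergence term and a
        # reconstruction term).
        kl_loss = vae_kl_loss(y_true, y_pred)
        rc_loss = vae_rc_loss(y_true, y_pred)
        kl_weight_const = 0.01  # relative weight of the KL term
        return kl_weight_const * kl_loss + rc_loss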
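For readers who want to see what the two terms might compute, here is a framework-free sketch. It assumes a mean-squared reconstruction error and the closed-form KL divergence between a diagonal Gaussian and a standard normal. The helper names and their signatures are hypothetical and are not the `vae_kl_loss` and `vae_rc_loss` functions referenced above.

```python
import math


def reconstruction_loss(x, x_hat):
    """Mean squared error between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def kl_divergence(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(
        1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var)
    )


def vae_total_loss(x, x_hat, mu, log_var, kl_weight=0.01):
    """Reconstruction term plus a down-weighted KL term."""
    return reconstruction_loss(x, x_hat) + kl_weight * kl_divergence(mu, log_var)


x = [0.2, 0.8, 0.5]       # illustrative pixel values
x_hat = [0.25, 0.7, 0.5]  # illustrative reconstruction
mu, log_var = [1.0, 0.0], [0.0, 0.0]

print(vae_total_loss(x, x_hat, mu, log_var))
```

A small KL weight, such as the 0.01 used here, favors sharp reconstructions over a well-structured latent space. The right balance depends on the task.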

When choosing a loss function, consider these tips:

- Check if the data has outliers. Use mean absolute error if it does.

- Look for class imbalance. Try Dice loss or focal loss for better accuracy.

- Think about training speed. Some loss functions need more time to compute.

- Test different loss functions to see which gives the best model improvement.

The loss function plays a key role in the accuracy of a machine vision system. Machine learning experts often try several options before finding the best fit for their image task.

Advanced Loss Functions

Generative Models

Generative models create new images that look real. These models use special loss functions to help them learn. One important loss function is the adversarial loss. This loss comes from Generative Adversarial Networks (GANs). In a GAN, two networks compete. One network tries to make fake images. The other network tries to tell real images from fake ones. The adversarial loss helps both networks get better.

Researchers use advanced statistical ideas like Maximum Likelihood Estimation and adversarial training. These methods help the model learn the true data distribution. For example, the Wasserstein loss uses a special distance to improve training stability. Relativistic adversarial loss compares real and fake images directly. This leads to better results and more stable training. A study tested a new loss called RMCosGAN on datasets like CIFAR-10 and MNIST. The results showed higher image quality and better training stability than older loss functions.

Adversarial losses help models create images with more detail and fewer mistakes. They also make training more stable.
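The adversarial objectives can be sketched as below, given only the discriminator's outputs for a batch. This is a minimal illustration, assuming the standard GAN discriminator loss, the commonly used non-saturating generator loss, and the Wasserstein critic loss. The function names are hypothetical, and the Lipschitz constraint that Wasserstein training also needs (for example weight clipping or a gradient penalty) is omitted.

```python
import math

EPS = 1e-12  # guards against log(0)


def discriminator_loss(d_real, d_fake):
    """d_real and d_fake hold the discriminator's P(real) for each sample."""
    real_term = -sum(math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: reward fakes that look real."""
    return -sum(math.log(max(p, EPS)) for p in d_fake) / len(d_fake)


def wasserstein_critic_loss(c_real, c_fake):
    """Critic scores are unbounded real numbers, not probabilities."""
    return sum(c_fake) / len(c_fake) - sum(c_real) / len(c_real)


print(discriminator_loss([0.9, 0.8], [0.1, 0.3]))
print(generator_loss([0.1, 0.3]))
print(wasserstein_critic_loss([1.0, 3.0], [0.0, 1.0]))
```

When the discriminator outputs 0.5 for every sample, it cannot tell real from fake, and its loss equals `2 * log(2)`.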

Combined Losses

Some tasks need more than one loss function. Combined losses mix several loss types to guide the model. For example, image-to-image translation uses adversarial loss, cycle-consistency loss, and perceptual loss together. Each loss has a job. Adversarial loss makes images look real. Cycle-consistency loss checks if the image can change back to its original form. Perceptual loss helps the model focus on important features, not just pixels.

Combined loss functions help models:

- Create images that look real and clear

- Keep important details in the image

- Learn from both pixel-level and high-level features
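A combined loss is usually a weighted sum of its parts. The sketch below assumes that the adversarial and perceptual terms have been computed elsewhere and shows a cycle-consistency term as an L1 distance between an image and its round-trip reconstruction. The weights are illustrative assumptions (a cycle weight of 10 is a common choice in image translation work), as are the function names and values.

```python
def l1_distance(a, b):
    """Mean absolute difference between two flattened images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def combined_loss(adv, cycle, perceptual, w_adv=1.0, w_cycle=10.0, w_perc=1.0):
    """Weighted sum of adversarial, cycle-consistency, and perceptual terms."""
    return w_adv * adv + w_cycle * cycle + w_perc * perceptual


original = [0.2, 0.4, 0.6]         # flattened input image (toy values)
reconstructed = [0.25, 0.35, 0.6]  # image after translating there and back

cycle_term = l1_distance(original, reconstructed)
total = combined_loss(adv=0.7, cycle=cycle_term, perceptual=0.2)
print(cycle_term, total)
```

The weights decide which objective dominates, so they are typically tuned alongside the other hyperparameters.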

The table below shows how different loss functions work together in advanced models:

| Model Type | Loss Functions Used | Benefit |

|---|---|---|

| GANs | Adversarial, Wasserstein, Relativistic | Stable training, better image quality |

| Image Translation | Adversarial, Cycle-consistency, Perceptual | Realistic images, detail preservation |

| Inpainting | Adversarial, Pixel-wise, Perceptual | Fills missing parts, keeps structure |

Modern models use these combined losses to solve hard image problems. They move beyond simple cross-entropy or mean squared error. This helps them create images that look real and keep important features.

Loss functions play a vital role in machine vision by guiding learning and improving results. They help compare predictions to real answers, making models better over time.

- Loss functions can be tailored for tasks like classification or segmentation.

- They guide optimization and provide feedback for better results.

| Loss Function | Use Case | Benefit |

|---|---|---|

| Cross-Entropy | Image classification | Handles probabilities well |

| Dice Loss | Medical segmentation | Addresses class imbalance |

For further reading, resources like the Ultralytics documentation and Neptune.ai offer practical guides on loss functions.

FAQ

What is the main purpose of a loss function in machine vision?

A loss function helps a model learn by showing how far its predictions are from the correct answers. The model uses this feedback to improve its accuracy during training.

How does the choice of loss function affect model results?

The loss function changes how a model learns. Some loss functions work better for certain tasks. For example, Dice loss helps with segmentation, while cross-entropy works well for classification.

Can a model use more than one loss function at the same time?

Yes, some models combine several loss functions. This helps the model learn different things, like making images look real and keeping important details. Combined losses often improve results.

Which loss function should someone use for image segmentation?

Most experts use Dice loss or cross-entropy loss for image segmentation. Dice loss works well when the target area is small or classes are imbalanced. Cross-entropy helps with pixel-level accuracy.
