A fitting machine vision system stands out for its ability to combine advanced sensors, real-time processing, and adaptability. These features deliver precise fitting and seamless integration in industrial environments. High-speed interfaces, GPU-accelerated processing, and smart sensors such as event-based cameras enable quick, low-latency decisions. Companies report up to 70% faster inspection times and 20% to 30% more equipment uptime when using a machine vision system. This level of intelligence makes accurate fitting and component selection possible.

Key Takeaways

- Fitting machine vision systems use advanced sensors and smart algorithms to deliver fast, accurate inspections that improve product quality and reduce errors.

- High-quality cameras, lighting, and sensors work together to capture detailed images, enabling precise fitting and defect detection even in complex industrial environments.

- Different system types, including 1D, 2D, and 3D vision, suit various tasks; 3D systems offer the highest detail and are essential for precision industries like aerospace and automotive.

- Adaptive AI-powered algorithms help these systems adjust to changing conditions and new products, boosting defect detection rates and operational flexibility.

- Seamless integration, user-friendly interfaces, and customizable solutions make machine vision systems easy to adopt and maintain, supporting higher productivity and cost savings.

Fitting Machine Vision System

Key Components

A fitting machine vision system depends on several critical components to achieve high accuracy and reliability. Cameras, lenses, lighting, and sensors work together to capture and process images for precise fitting and inspection. Modern systems use CCD and CMOS sensors to convert light into electrical signals, which forms the foundation for image quality. Autofocus and liquid lenses allow the system to adapt quickly, maintaining sharp focus even when objects move or change position. LED lighting provides consistent and energy-efficient illumination, which is essential for reliable performance in industrial environments.

Optimized lighting can improve machine vision accuracy by over 12%, with systems reaching up to 95% accuracy under proper lighting conditions. High-quality lenses detect micron-level defects, which is vital for quality assurance in manufacturing.

Advanced sensors and imaging components play a crucial role in system performance. Research shows that integrating these elements can boost model accuracy by 17%, especially when combining imaging tools with complementary sensors. The diversity of sensor inputs, such as 3D cameras, radar, LiDAR, and laser scanners, enables the fitting machine vision system to perform faster and more accurate inspections. This fusion of data sources leads to better AI decision-making, faster inspection speeds, and lower manufacturing costs.

A bin-picking solution often relies on a 3D camera or depth camera to guide a robot arm in identifying and picking parts from a bin. The synergy between cameras, lighting, and sensors ensures the system can handle complex tasks with high precision.

Accurate Fitting

Accurate fitting stands at the heart of every fitting machine vision system. The system must identify, measure, and position components with extreme precision. Metrics such as precision, recall, and F1 score help quantify the system’s ability to detect defects and minimize errors. Gauge Repeatability and Reproducibility (Gauge R&R) analysis evaluates how consistently the system measures parts, aiming for less than 10% variability due to measurement error.

Sub-pixel processing techniques allow the system to locate features more finely than the pixel grid, typically to about one-tenth of a pixel. Proper calibration aligns the system with measurement standards, keeping measurement error within one-third of the tolerance band. Pixel resolution also has to satisfy the Nyquist-Shannon sampling theorem: the smallest feature of interest should span at least two pixels, otherwise fine details can be missed.
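The sizing rules above can be turned into quick arithmetic. The sketch below is illustrative only: the function names, the millimetre units, and the default factors (two pixels per feature, one-tenth-pixel localization, one-third of the tolerance band) are assumptions taken from the rules of thumb in this section, not from any particular vendor tool.

```python
import math


def required_pixels(field_of_view_mm: float, smallest_feature_mm: float,
                    pixels_per_feature: float = 2.0) -> int:
    """Minimum pixel count along one axis so the smallest feature spans
    at least `pixels_per_feature` pixels (2 is the Nyquist minimum)."""
    if field_of_view_mm <= 0 or smallest_feature_mm <= 0:
        raise ValueError("dimensions must be positive")
    return math.ceil(field_of_view_mm * pixels_per_feature / smallest_feature_mm)


def subpixel_resolution_mm(field_of_view_mm: float, pixel_count: int,
                           subpixel_factor: float = 10.0) -> float:
    """Approximate localization resolution when edges are found to
    1/subpixel_factor of a pixel (assumed factor: 10)."""
    if pixel_count <= 0 or subpixel_factor <= 0:
        raise ValueError("pixel_count and subpixel_factor must be positive")
    return field_of_view_mm / pixel_count / subpixel_factor


def within_calibration_budget(measurement_error_mm: float,
                              tolerance_band_mm: float) -> bool:
    """True when the measurement error stays within one-third of the
    tolerance band, the target quoted in the text."""
    return measurement_error_mm <= tolerance_band_mm / 3.0


if __name__ == "__main__":
    pixels = required_pixels(field_of_view_mm=100.0, smallest_feature_mm=0.5)
    print("pixels needed along the axis:", pixels)
    print("sub-pixel resolution (mm):", subpixel_resolution_mm(100.0, pixels))
    print("error within budget:", within_calibration_budget(0.01, 0.06))
```

In practice many integrators plan for three or more pixels per feature to leave margin for blur and noise; raising `pixels_per_feature` models that choice.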

- Vision systems enable 100% inline inspection, eliminating manual variability and increasing throughput.

- High-speed cameras and image processors capture multiple images under different lighting conditions, improving defect detection.

- Vision-guided robotics, powered by a 3D or depth camera, automates coordinate detection for the robot arm, reducing manual programming time.

Environmental factors, such as stable lighting and controlled conditions, further enhance measurement precision. The use of industrial 3D cameras and advanced calibration techniques ensures the system maintains high accuracy across diverse applications.

System Types

Fitting machine vision systems come in several types, each suited for specific tasks and environments. The four basic types include 1D, 2D area, 2D line scan, and 3D machine vision systems.

| System Type | Operational Principle | Typical Applications | Advantages | Limitations/Notes |
|---|---|---|---|---|
| 1D Vision System | Scans line-by-line, often using laser triangulation | Continuous motion inspection, barcode scanning, unwrapping cylindrical objects | Suitable for moving objects, cost-effective | Less detailed and precise than 2D/3D systems |
| 2D Area Scan | Captures full 2D image snapshot of stationary objects | Inspection of discrete parts, defect detection, label verification | Provides full image, suitable for stationary items | No depth information, limited light sensitivity |
| 2D Line Scan | Builds image line-by-line requiring motion and intense illumination | High-speed inspection, cylindrical objects, continuous motion | High resolution, less expensive than area scan | Requires motion and encoder feedback, complex integration |
| 3D Vision System | Uses stereo vision, structured light, fringe pattern, or time-of-flight to capture depth and volume | Depth measurement, volume, surface angles, robotic guidance | Provides detailed 3D data, critical for precision industries | Higher cost, complexity, and maintenance |

A 1D system works well for simple, continuous inspections but offers less detail. A 2D area scan system captures images of stationary objects, making it ideal for defect detection and label verification. The 2D line scan system builds images line-by-line, which suits high-speed inspection tasks. The 3D vision system, often equipped with a 3D camera, adds depth data for automation, object recognition, and detailed dimensional measurements. This system is essential for industries like aerospace and automotive, where precision is critical.

A bin-picking solution typically uses a 3D camera and robot arm to identify and pick parts from a bin, relying on the depth information provided by the 3D camera. The robot arm can then position and assemble components with high accuracy, guided by the fitting machine vision system.
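To make the role of depth information concrete, here is a minimal sketch of how a depth pixel can be turned into a 3D point using the standard pinhole camera model. The `Intrinsics` class, the `deproject` function, and the example focal lengths and principal point are hypothetical; real values come from the camera's calibration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera parameters in pixels (illustrative values only)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def deproject(u: float, v: float, depth_m: float, k: Intrinsics):
    """Convert pixel (u, v) with depth in metres to a point (x, y, z)
    expressed in the camera coordinate frame."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


if __name__ == "__main__":
    camera = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    # A part detected at pixel (920, 240), 1 m away from the camera.
    print(deproject(920.0, 240.0, 1.0, camera))
```

The result is in the camera frame. Before the robot arm can move to the part, the point still has to be transformed into the robot's base frame using a hand-eye calibration, which this sketch does not cover.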

The choice of system depends on the required accuracy, speed, and complexity of the application. 3D systems provide the highest level of detail but require more investment and maintenance.

Image Processing

High-Precision Sensors

High-precision sensors form the backbone of modern image processing in machine vision systems. Sensors paired with FPGA-based processing, along with neuromorphic (event-based) sensors, deliver exceptional accuracy and real-time performance. FPGA-based processing runs optimized algorithms in parallel directly on the image stream. This approach reduces manual errors and resource waste. It also improves detection reliability and supports intelligent automation in industrial inspection.

Neuromorphic sensors stand out for their high temporal precision, reaching the low microsecond range. They detect even the smallest changes and enable early fault detection, which is critical for quality assurance. These sensors work well under different lighting conditions and capture high-frequency movements. This capability makes them ideal for rapid fault detection and process monitoring.

| Application/Technology | Accuracy/Efficiency Rate |
|---|---|
| Defect detection (machine vision) | Over 99% |
| Object detection | 98.5% |
| Optical Character Recognition (OCR) | Over 99.5% |
| AI-powered defect detection | 95–98% |
| Automated visual inspection | Up to 80% defect rate reduction |
| Inspection error reduction | Over 90% compared to manual |
| 3D stereo vision (depth estimation) | Over 90% |

Lighting & Optics

Lighting and optics play a vital role in enhancing image quality. Geometry, structure, wavelength, and filters each influence image contrast and clarity. Adjusting the spatial relationship among the object, light, and camera changes how surface details appear. For example, coaxial and off-axis lighting geometries affect specular reflections and image contrast.

Optical filters, such as bandpass and polarizing types, block unwanted ambient light and improve contrast. Experiments show that monochrome vision systems with blue bandpass filters achieve up to 90% efficiency in blue fluorescence imaging, compared to only 25% for color systems.

Advanced optical microscopy methods, including lateral shearing and dual-wavelength phase retrieval, further enhance imaging quality. These techniques improve image stability, resolution, and phase estimation accuracy, especially in challenging environments.

Robust Acquisition

Robust image acquisition ensures reliable inspection and reduces errors. Machine vision systems that use advanced acquisition techniques have demonstrated a 50% reduction in defect rates through early detection. These systems also drastically lower false rejects. For instance, one system reduced weekly false rejects from 12,000 to just 246 units, saving over $18 million per production line each year.

| Technique / Methodology | Description / Impact on Error Reduction and Reliability |
|---|---|
| Pixel Intensity Analysis with Histograms | Detects and classifies noise types, improving image quality and reliability. |
| Size Distribution Estimation with Error Classification | Identifies and manages errors, separating true defects from artifacts. |
| Machine Learning Model Adaptation | Updates models after each cycle, improving future detection. |
| Data Drift Detection | Monitors data changes to catch anomalies early and maintain quality. |
| Reduction in False Rejects | Significantly lowers false rejects, leading to cost savings and reliability. |
| Consistent Inspection Quality | Ensures robust, reliable acquisition and defect detection. |

These methods help maintain consistent inspection quality, even in dynamic industrial environments. Reliable image acquisition forms the foundation for smart, efficient machine vision systems.
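Two of the techniques in the table, pixel intensity histograms and data drift detection, combine naturally. The sketch below compares the intensity histogram of a new image against a reference and flags drift when they diverge. The function names, the 16-bin histogram, the total variation distance, and the 0.2 threshold are all illustrative assumptions rather than a standard recipe.

```python
def intensity_histogram(pixels, bins=16, max_value=255):
    """Normalized histogram of integer gray levels in [0, max_value]."""
    if not pixels:
        raise ValueError("pixels must not be empty")
    counts = [0] * bins
    for p in pixels:
        if not 0 <= p <= max_value:
            raise ValueError(f"pixel value {p} out of range")
        counts[p * bins // (max_value + 1)] += 1
    total = len(pixels)
    return [c / total for c in counts]


def total_variation(p, q):
    """Total variation distance between two histograms (0 = identical,
    1 = no overlap)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def has_drifted(reference_pixels, current_pixels, threshold=0.2):
    """Flag drift when the histograms differ by more than `threshold`
    (an assumed value that would need tuning on real data)."""
    ref = intensity_histogram(reference_pixels)
    cur = intensity_histogram(current_pixels)
    return total_variation(ref, cur) > threshold


if __name__ == "__main__":
    reference = [100] * 50 + [150] * 50   # flattened reference image
    dimmed = [10] * 100                   # e.g. a failing light source
    print("same image drifted:", has_drifted(reference, reference))
    print("dimmed image drifted:", has_drifted(reference, dimmed))
```

A production system would typically track this distance over many frames rather than reacting to a single image.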

Algorithms & AI

Pattern Recognition

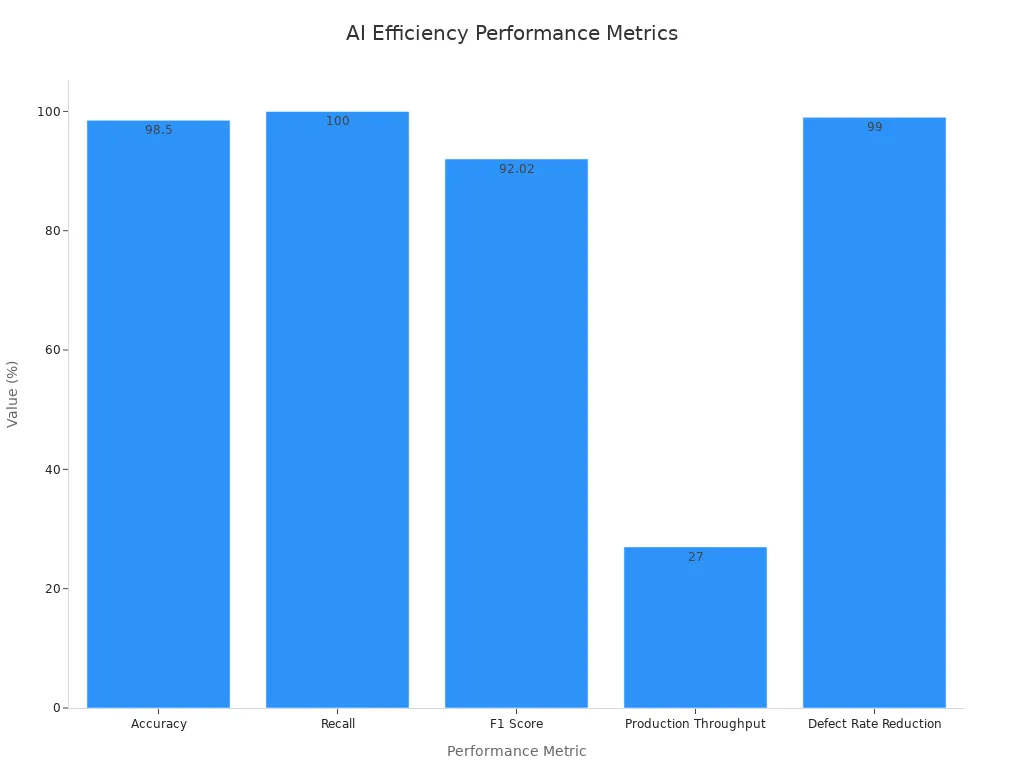

Pattern recognition forms the core of smart machine vision systems. These systems use advanced algorithms to identify shapes, textures, and patterns in images. They rely on key performance metrics such as accuracy, precision, recall, and F1 score to measure their effectiveness. Accuracy shows how often the system makes correct identifications. Precision checks if positive predictions are correct, while recall measures the ability to find all relevant items. F1 score balances both precision and recall. Execution time and inference latency reveal how quickly the system processes images, which is vital for real-time tasks. Gauge R&R ensures the system stays consistent over time and across different operators.
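As a concrete illustration of these definitions, the following sketch computes accuracy, precision, recall, and F1 score from confusion-matrix counts. The function name and the example counts are made up for illustration.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Compute standard metrics from confusion-matrix counts.

    tp: defects correctly flagged      fp: good parts wrongly flagged
    fn: defects missed                 tn: good parts correctly passed
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Hypothetical inspection run: 100 parts, 10 of them defective.
    for name, value in classification_metrics(tp=8, fp=2, fn=2, tn=88).items():
        print(f"{name}: {value:.3f}")
```

Note how accuracy can look high when defects are rare, which is why precision and recall are reported alongside it.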

| Performance Metric | Numerical Evidence | Impact on Validation of Algorithm Efficiency |
|---|---|---|
| Accuracy | Up to 98.5% accuracy | Demonstrates high correctness in object identification and defect detection |
| Recall | 100% recall rate | Ensures all relevant instances are detected, minimizing missed defects |
| F1 Score | Above 90% (e.g., 92.02%) | Balances precision and recall, indicating reliable prediction quality |
| Precision | Around 83.7% (machine) vs 79% (human) | Shows correctness of positive predictions, outperforming human inspection |
| Mean Intersection over Union (IoU) | Improved from 0.68 to 0.83 | Indicates better alignment between predicted and actual object locations |
| Execution/Inference Time | Machines inspect 80+ times faster than humans | Validates processing speed and throughput efficiency |
| Defect Detection Improvement | 25% to 34% increase with AI integration | Empirical evidence of AI enhancing defect detection accuracy |
| Defect Rate Reduction | Up to 99% reduction in defective products | Confirms significant quality improvement and waste reduction |
| Production Throughput | 27% increase in throughput | Shows enhanced operational efficiency due to improved algorithm performance |
| Error Rate Reduction | Over 90% decrease compared to manual inspection | Validates reliability and trustworthiness of automated systems |

Optimizing these metrics leads to better defect detection, fewer errors, and higher throughput in industrial settings.
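Intersection over Union, listed in the table above, is simple to compute for axis-aligned bounding boxes. This is a minimal sketch; the `(x_min, y_min, x_max, y_max)` box layout and the function name are assumptions chosen for the example.

```python
def iou(box_a, box_b) -> float:
    """Intersection over Union of two axis-aligned boxes given as
    (x_min, y_min, x_max, y_max). Returns a value in [0, 1]."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    # Width and height of the overlap, clamped at zero when disjoint.
    overlap_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    overlap_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    intersection = overlap_w * overlap_h
    area_a = (ax1 - ax0) * (ay1 - ay0)
    area_b = (bx1 - bx0) * (by1 - by0)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


if __name__ == "__main__":
    predicted = (0, 0, 2, 2)
    actual = (1, 1, 3, 3)
    print(f"IoU: {iou(predicted, actual):.3f}")
```

A mean IoU is then just the average of this value over all matched predictions in a test set.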

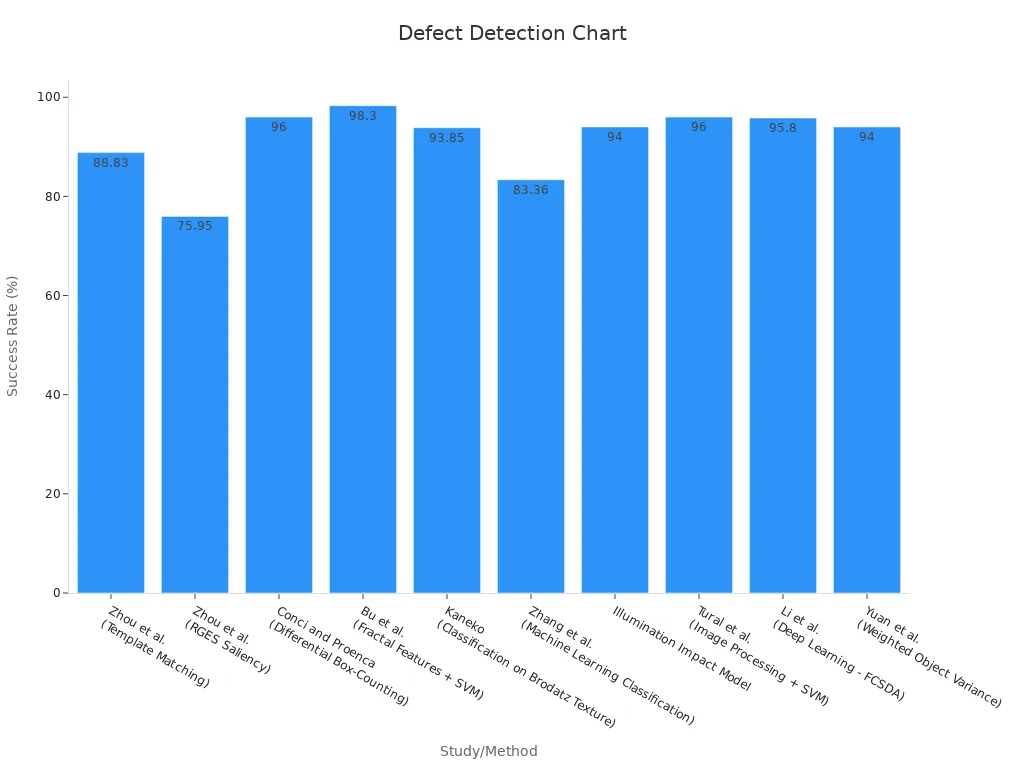

Defect Detection

Defect detection algorithms help machine vision systems spot flaws and irregularities in products. These algorithms use machine learning and deep learning to analyze images and classify defects. Recent industrial studies show high success rates for different methods. For example, template matching achieves 88.83% success, while fractal features with SVM reach 98.3%. Deep learning models like FCSDA report 95.8% accuracy with low false alarms. These results prove that AI-powered systems can outperform traditional inspection methods.

| Study / Method | Defect Detection Success Rate | Sample Size / Dataset | Notes |
|---|---|---|---|
| Zhou et al. (Template Matching) | 88.83% | N/A | Template matching with multiscale mean filtering |
| Zhou et al. (RGES Saliency) | 75.95% | N/A | Region growing Euclidean saliency method |
| Conci and Proenca (Differential Box-Counting) | 96% | 80 defect-free / 75 defective samples | Non-overlapping image copies |
| Bu et al. (Fractal Features + SVM) | 98.3% | 14,378 defect-free / 3,222 defect samples | High accuracy on large dataset |
| Kaneko (Classification on Brodatz Texture) | 93.85% | 65 samples | Computationally heavier method |
| Zhang et al. (Machine Learning Classification) | 83.36% | N/A | 13 base classifiers and ensemble methods |
| Illumination Impact Model | 94% | 1,865 samples | Simple classifier with illumination modeling |
| Tural et al. (Image Processing + SVM) | 96% | Real-time production environment | Bullet shell defect detection |
| Li et al. (Deep Learning – FCSDA) | 95.8% | 600 samples | Patterned fabric defect detection with 2.5% false alarms |
| Yuan et al. (Weighted Object Variance) | 94% | N/A | Improved Otsu thresholding with 8.4% false alarm rate |
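Several of the methods above build on classic thresholding. For orientation, here is a minimal sketch of the standard Otsu method, which picks the gray level that maximizes between-class variance. It is the textbook baseline, not the weighted object variance variant attributed to Yuan et al., and the function name and toy data are illustrative.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that best separates pixels <= t from
    pixels > t by maximizing between-class variance (standard Otsu)."""
    if not pixels:
        raise ValueError("pixels must not be empty")
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_score = 0, -1.0
    weight_bg, sum_bg = 0, 0
    for t in range(levels):
        weight_bg += hist[t]
        sum_bg += t * hist[t]
        if weight_bg == 0:
            continue              # no background pixels yet
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break                 # every pixel is already background
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        # Proportional to between-class variance; the constant factor
        # does not change which t wins.
        score = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


if __name__ == "__main__":
    # Toy image: dark background (10) with a bright defect region (200).
    image = [10] * 50 + [200] * 50
    t = otsu_threshold(image)
    defect_pixels = sum(1 for p in image if p > t)
    print("threshold:", t, "defect pixels:", defect_pixels)
```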

Adaptive Processing

Adaptive processing allows machine vision systems to adjust to new data and changing environments. These systems use real-time feedback to update their algorithms and maintain high inspection quality. For example, a YOLOv7 model achieved a mean average precision of 0.94 and reduced inspection time by nearly 47%. In more than half of the test scenarios, the model reached a precision above 0.99. Adaptive systems also keep false-positive rates low, around 0.05, and outperform older models by 24% in detection accuracy.

- Adaptive inspection systems can improve defect identification rates by up to 35% compared to static systems.

- These systems dynamically change their algorithms based on real-time data, which enhances decision-making and reduces false positives.

- Operational flexibility increases by about 30% when machine vision systems are integrated.

- Adaptive processing supports high inspection quality even when lighting or background conditions change.

- Techniques like image augmentation and normalization help the system stay robust in different environments.

Real-time adaptive processing, powered by edge computing and advanced AI, enables faster and more efficient inspections across industries.
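The normalization mentioned in the list above is one of the simplest ways to stay robust when lighting changes. The sketch below standardizes pixel intensities to zero mean and unit variance, which cancels a uniform change in gain and offset. The function name and sample values are illustrative, and real lighting changes are rarely this uniform.

```python
import statistics


def normalize(pixels):
    """Standardize intensities to zero mean and unit variance.
    A flat image (zero variance) maps to all zeros."""
    if not pixels:
        raise ValueError("pixels must not be empty")
    mean = statistics.fmean(pixels)
    std = statistics.pstdev(pixels)
    if std == 0:
        return [0.0] * len(pixels)
    return [(p - mean) / std for p in pixels]


if __name__ == "__main__":
    original = [10, 20, 30, 40]
    # Same scene under brighter lighting: gain of 2 and offset of 50.
    brighter = [2 * p + 50 for p in original]
    print(normalize(original))
    print(normalize(brighter))
```

Both printed lists should match up to floating-point rounding, so a downstream model sees the same input under either lighting condition.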

3D Machine Vision Sensor

Mount Your 3D Machine Vision Sensor

Proper mounting forms the foundation for reliable performance in any 3D machine vision sensor application. Engineers use secure brackets and mounting solutions to keep the 3D camera stable during operation. This stability allows the sensor to capture accurate point cloud data, which is essential for tasks like bin-picking or guiding a robot arm. Secure mounting improves translation accuracy threefold and rotational calibration fivefold compared to less stable setups. Stationary mounting lets the system capture and process a new point cloud as soon as the robot arm moves out of view. On-arm mounting offers flexibility, letting the 3D camera collect data from multiple angles, but it requires careful planning for weight and cabling. The choice of mounting directly affects cycle times, data quality, and the effectiveness of the 3D machine vision sensor in industrial automation.

- Proper mounting reduces negative impacts on robot maneuverability and payload.

- Well-planned mounting supports high-quality, true-to-reality point cloud data for inspection and assembly.

Fixturing & Positioning

Fixturing and positioning equipment play a critical role in maximizing the precision of a 3D camera. Consistent and precise positioning allows the system to capture detailed images from multiple viewpoints, reducing inspection errors by over 90% compared to manual methods. Operators rely on advanced fixturing to achieve sub-micron accuracy, with some equipment reaching ±0.1 µm. This level of precision ensures the system can verify component dimensions and detect even the smallest defects. Environmental factors like temperature and vibration can affect accuracy, but controlled fixturing helps maintain stable measurements. Reliable positioning also improves the consistency of point cloud data, which supports high-quality analysis and defect detection.

Training operators to trust and work alongside these systems further enhances reliability and measurement precision.

Validation & Simulation

Validation and simulation ensure that a 3D machine vision sensor performs as expected before full deployment. Engineers use structured frameworks like the V-model to link development phases with verification activities. This approach includes Installation Qualification, Operational Qualification, and Performance Qualification, each using metrics such as accuracy, precision, recall, and mean squared error. Simulation tools help optimize system performance and identify weaknesses before live operation. In real-world applications, metrics like Intersection over Union and mean Average Precision measure how well the 3D camera detects and segments objects. Medical imaging studies show accuracy rates of 87.6% and specificity of 94.8%, confirming the effectiveness of these validation methods. Continuous monitoring and operator training help maintain system reliability and address data drift over time.

| Evidence Type | Details |
|---|---|
| Technological Advancement | 3D systems capture full spatial data (length, width, depth) enabling complex object handling. |
| Advanced Technologies | Use of time-of-flight cameras and time-coded structured light techniques achieving up to 100x better accuracy than traditional methods. |
| Case Studies | DS Smith automated pallet dimensioning improving quality; Kawasaki Robotics improved precision and reduced cycle times in assembly. |
| Quantitative Metrics | Cycle time reduced by 26 seconds; 97% consistency in assembly; reduction of operators from 3 to 1; elimination of work-in-progress stations. |
| Industry Applications | Aerospace, medical devices, robotics where precision is critical. |
| AI Integration | Use of convolutional neural networks enhances object recognition and handling, improving automation and reducing errors. |

Integration & Usability

System Compatibility

System compatibility stands as a cornerstone for integrating machine vision systems into modern factories. Manufacturers now demand cameras that support advanced sensor capabilities, such as near-infrared, hyperspectral, and thermal imaging. These cameras must also withstand harsh environments, meeting IP67 ratings and resisting vibration and electromagnetic interference. Regulatory compliance, like FDA and HACCP standards, shapes both hardware and software design. Companies such as Cognex and Keyence deliver turnkey solutions that combine cameras, processing, and software, reducing integration complexity. Modular designs allow seamless connection to existing automation platforms, supporting dozens or even hundreds of cameras across multiple servers. Plug-ins and software support, like eCapture Pro, streamline image acquisition and processing. Open standards and middleware enable smooth integration with factory systems, including PLCs, MES, SCADA, and ERP.

| Benchmark Category | Evidence of Improvement |
|---|---|
| Productivity & Speed | Inspection speeds up to 2,400 parts per minute; throughput in tens of thousands of parts per hour. |
| Error Reduction | Error rates reduced by up to 90%. |
| Hardware Advances | Use of high-resolution cameras, multi-spectrum lighting, AI-driven self-training systems. |
| Integration Compatibility | Seamless integration with factory systems via open standards and middleware. |
| Usability Enhancements | Intuitive interfaces, fast setup, reduced need for specialized personnel, strong vendor support. |
| Cost Reduction | High-end system costs reduced from $500,000-$1,000,000 to $50,000-$100,000. |
| Quality Control | Shift toward 100% quality inspection at every production stage, enabling early defect detection and waste reduction. |

Customization

Customization allows machine vision systems to adapt to unique industry needs. In retail, Amazon Go uses tailored vision systems to automate checkout, improving productivity and customer satisfaction. Logistics companies like Amazon and DHL process millions of packages daily, using vision systems for defect detection and real-time inventory updates. In healthcare, remote patient monitoring with vision systems has reduced hospital admissions and improved outcomes. Manufacturers benefit from real-time defect detection, which enhances quality control and reduces errors. Technology providers such as Cognex and Landing.ai offer scalable, customizable solutions that fit diverse applications. Modular platforms, like Siemens’ Industrial Edge, show how integrating machine vision systems can improve operations and adapt to changing requirements. Customized AI-powered systems also automate hazardous tasks, improving workplace safety and reducing human error.

User Interfaces

User interfaces play a vital role in the usability of machine vision systems. Modern interfaces focus on effectiveness, efficiency, and user satisfaction. Studies using ISO 9241-11 standards show that well-designed interfaces increase task completion rates and reduce user fatigue. Companies now prioritize intuitive layouts, clear instructions, and fast setup processes. Improved user interfaces help operators complete tasks faster and with fewer errors, which can improve operations and boost productivity. Continuous feedback and iterative design further enhance usability, leading to higher user satisfaction and better adoption rates. Even a small improvement in interface design can lead to significant gains in customer retention and profits, making usability a key factor in successful machine vision deployments.

Fitting machine vision systems stand apart through advanced sensors, intelligent algorithms, and seamless integration. These features help manufacturers meet quality standards and ensure package integrity across industries. The systems deliver high accuracy, efficiency, and adaptability, supporting product integrity and reliable inspection.

The future of machine vision looks promising, with rapid growth and new trends shaping the industry:

- The global market may reach $35 billion by 2032, driven by automation and AI.

- IoT-enabled 3D vision systems and edge computing are improving real-time decision-making.

- Predictive analytics and open-source platforms are making technology more accessible.

- North America, Europe, and Asia Pacific each focus on unique applications and standards.

Machine vision will continue to transform how companies maintain quality and integrity in every process.

FAQ

What industries use fitting machine vision systems?

Manufacturers in automotive, electronics, food and beverage, and pharmaceuticals use these systems. They help automate inspection, improve quality, and reduce errors. Many factories rely on machine vision to boost productivity and maintain high standards.

How does a 3D camera improve inspection accuracy?

A 3D camera captures depth and surface details. This data helps the system measure objects more precisely. Engineers use 3D cameras to detect small defects and ensure correct assembly. The result is higher accuracy and fewer mistakes.

Can machine vision systems adapt to new products?

Yes. Modern systems use AI and adaptive algorithms. These features allow quick adjustments for new shapes, sizes, or materials. Operators can retrain the system with new data, making it flexible for changing production needs.

What maintenance do machine vision systems require?

Operators clean lenses and sensors regularly. They check for software updates and recalibrate the system as needed. Routine maintenance ensures reliable performance and extends the system’s lifespan.
