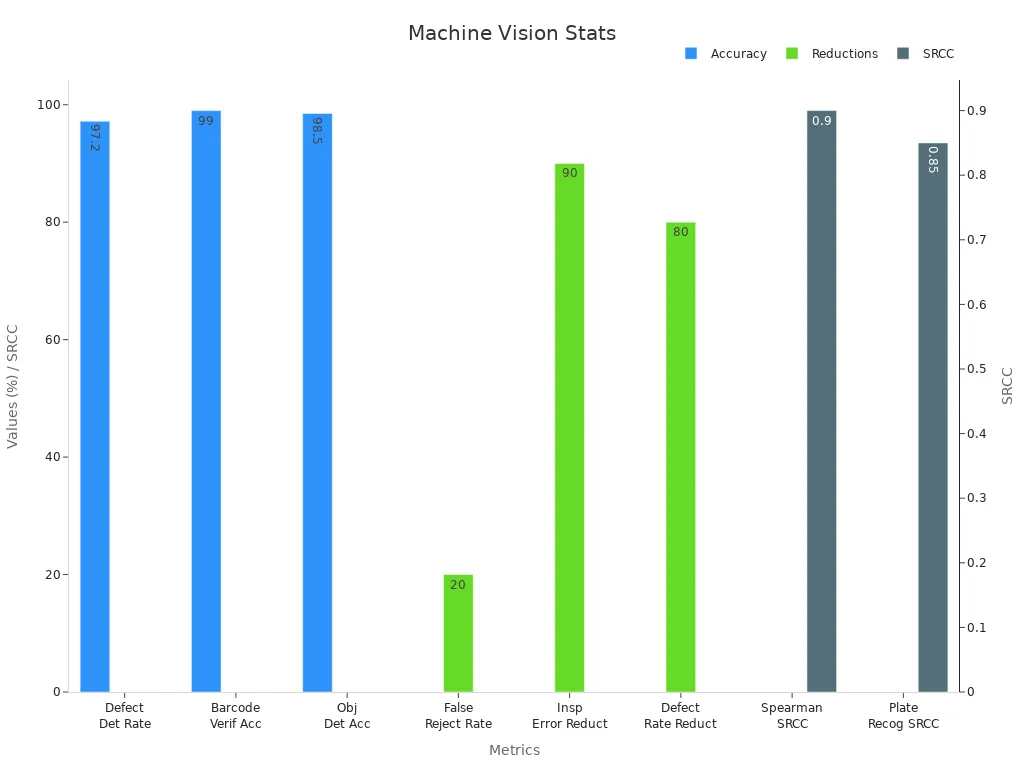

A robust machine vision system uses careful model design and data strategies to maintain high performance under challenging conditions. Robustness means the model can handle unexpected data and environmental changes. In real-world settings, it protects production quality assurance and prevents costly errors. Reliable validation and strong security measures reinforce robustness, which raises defect detection rates and reduces errors. The following table highlights the impact of robustness on model reliability, data accuracy, and system performance:

| Metric / Statistic | Value / Improvement | Significance / Impact Description |
|---|---|---|
| Defect Detection Rate | Improved from 93.5% to 97.2% | Statistically significant improvement, showing enhanced defect detection accuracy. |
| Barcode Verification Accuracy | Over 99% | Ensures high-quality barcode production and compliance. |
| Object Detection Accuracy | 98.5% | High accuracy in identifying objects, critical for reliable inspections. |
| False Reject Rate Reduction | 20% reduction | Decrease in false rejects in pharmaceutical inspections, improving reliability. |
| False Eject Detection by Deep Learning Model | Improved from 8/37 to 24/37 cases | 65% success rate after expanding training data, reducing errors. |
| Inspection Error Reduction | Over 90% reduction | Validation reduces errors dramatically, enhancing system reliability. |
| Defect Rate Reduction | Up to 80% reduction | Validation cuts defect rates, supporting product quality and compliance. |
| Spearman Rank Correlation Coefficients | Range 0.29 to 0.9 | Quantifies detection accuracy and impact of skipping validation. |
| License Plate Recognition Metric | SRCC 0.85 | High correlation with recognition quality, emphasizing validation’s role. |

Key Takeaways

- A robust machine vision system keeps working well even with noisy, corrupted, or unexpected data, ensuring reliable inspections and quality control.

- High data quality and continuous validation are essential to maintain model accuracy and adapt to changing environments over time.

- Techniques like adversarial training, domain adaptation, and regularization help models resist attacks, handle new data types, and avoid overfitting.

- Explainability and strong security measures build user trust by making model decisions clear and protecting against errors or attacks.

- Regular maintenance and monitoring extend system life, reduce downtime, and keep machine vision systems performing at their best.

Robustness in Machine Vision Systems

Definition

Robustness describes how well a machine vision system maintains performance when faced with unexpected challenges. In this context, model robustness means the model can handle errors, corrupted data, or sudden changes in the environment. A robust machine vision system does not fail when it receives noisy data or distorted images. Instead, the model continues to deliver accurate results. This ability protects production lines and ensures that inspections remain reliable. Model robustness also helps the system adapt to new types of data without losing accuracy.

Key Features

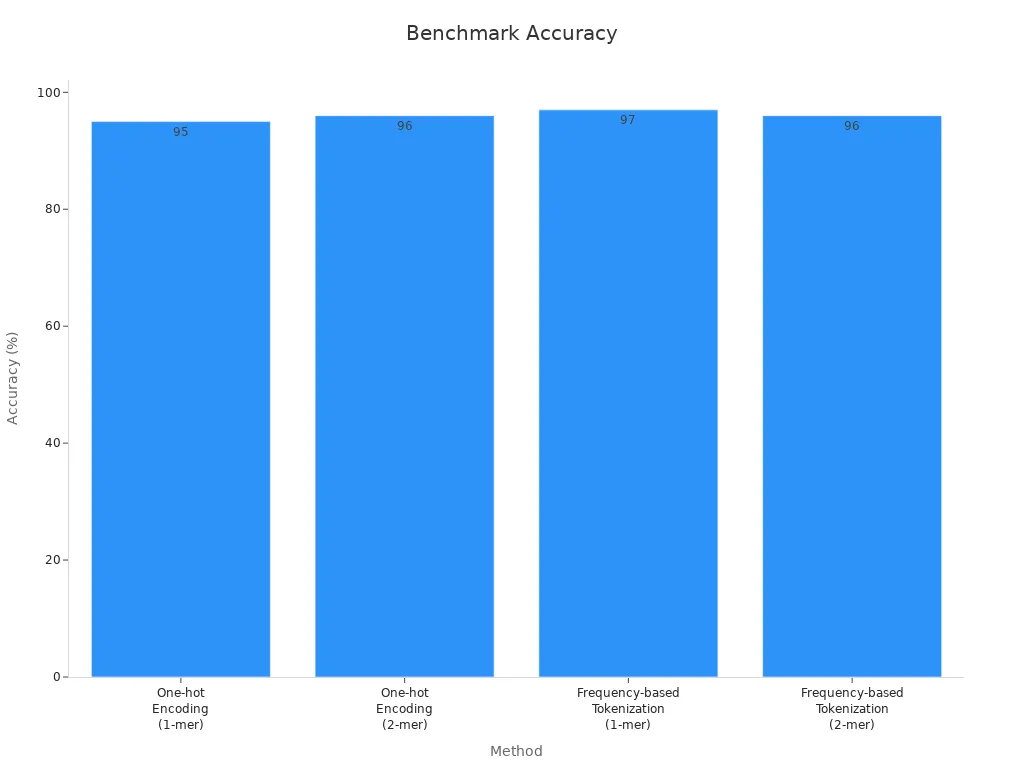

A robust machine vision system includes several important features. First, the model must process large amounts of data and filter out irrelevant information. Feature selection methods, such as Hybrid V-WSP-PSO, can reduce the number of features from tens of thousands to just over a hundred, making the model more efficient. The table below shows how different encoding and feature selection methods improve model robustness and accuracy:

| Method / Encoding Type | Feature Reduction | Accuracy / Performance Metric |
|---|---|---|
| Hybrid V-WSP-PSO Feature Selection | Reduced features from 27,620 to 114 | RMSECV = 0.4013 MJ/kg, R²CV = 0.9908 (high predictive performance) |
| One-hot Encoding (1-mer) | N/A | Accuracy ~95% |
| One-hot Encoding (2-mer) | N/A | Accuracy ~96% |
| Frequency-based Tokenization (1-mer) | N/A | Accuracy ~97% |
| Frequency-based Tokenization (2-mer) | N/A | Accuracy ~96% |

Statistical tests, such as chi-square and ANOVA, confirm that these features improve model robustness. Validation after installation ensures the model continues to perform well as new data arrives.

Corruption Robustness

Corruption robustness measures how well the model handles damaged or altered data. In industrial environments, dust, lighting changes, or camera faults can corrupt images. A robust machine vision system must detect and correct these issues. The model uses error handling to identify corrupted data and prevent mistakes. Regular validation checks help maintain model robustness over time. Ruggedizing the hardware also supports robustness by protecting the system from harsh conditions. Together, these steps ensure the model delivers reliable results, even when the data is not perfect.
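One simple form of this error handling is a sanity check that runs before inference. The sketch below is illustrative only: the function name `frame_quality_issues` and all thresholds are assumptions, not values from any particular system, and a grayscale frame is represented as a plain list of rows.

```python
from statistics import mean, pstdev


def frame_quality_issues(pixels, dark=10.0, bright=245.0, min_std=2.0):
    """Return a list of suspected problems for a grayscale frame.

    `pixels` is a list of rows of 0-255 intensities. The thresholds are
    illustrative assumptions and would need tuning per camera and scene.
    """
    flat = [float(v) for row in pixels for v in row]
    if not flat:
        return ["empty frame"]
    issues = []
    avg = mean(flat)
    if avg < dark:
        issues.append("underexposed")
    elif avg > bright:
        issues.append("overexposed")
    # A nearly constant image often indicates a blocked lens or dead sensor.
    if pstdev(flat) < min_std:
        issues.append("low contrast")
    return issues


good_frame = [[0, 128], [255, 64]]
black_frame = [[0, 0], [0, 0]]
print(frame_quality_issues(good_frame))
print(frame_quality_issues(black_frame))
```

Frames that return a non-empty list could be routed to a retry or to manual review instead of being passed to the model.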

Why Robustness Matters

Reliability

Robustness stands at the core of every dependable machine vision system. When a model faces unexpected data, it must continue to deliver consistent results. High robustness means the model can process data with noise, distortion, or missing elements. This ability ensures the model does not break down during real-world operations. Precision, recall, F1 score, and AUC serve as key metrics for evaluating reliability. These metrics help engineers understand how well the model handles data and maintains accuracy. The table below summarizes their roles:

| Metric | Purpose | Ideal Value | Importance |
|---|---|---|---|
| Precision | Measures accuracy of positive predictions | High | Crucial when minimizing false positives or false detections is important. |
| Recall | Measures ability to identify all positives | High | Essential when missing positive cases is costly or full detection is required. |
| F1 Score | Balances Precision and Recall | High | Useful for imbalanced datasets and when both false positives and false negatives matter. |
| AUC | Overall classification performance | High | Important for evaluating performance across thresholds and comparing models. |
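As a minimal sketch of how the first three metrics relate, the function below computes them from raw counts of true positives, false positives, and false negatives. The function name and the example counts are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical inspection run: 90 defects caught, 10 false alarms, 30 missed.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

In this made-up run, precision is high but recall is noticeably lower, so the F1 score sits between the two and flags the missed defects that precision alone would hide.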

Intersection over Union (IoU) also plays a vital role. It measures the overlap between a predicted object region and the actual object region, as the ratio of their intersection area to their union area. High IoU values show that the model can locate objects with great accuracy, even when data changes.
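For axis-aligned bounding boxes, IoU can be computed in a few lines. This is a sketch that assumes boxes are given as `(x1, y1, x2, y2)` tuples with `x1 < x2` and `y1 < y2`; other box formats would need conversion first.

```python
def iou(box_a, box_b):
    """Intersection over Union for two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap rectangle, clamped at zero.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


predicted = (0, 0, 2, 2)
ground_truth = (1, 1, 3, 3)
print(iou(predicted, ground_truth))  # overlap 1, union 7, so about 0.143
```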

Security

Robustness protects machine vision systems from attacks and errors. When a model receives corrupted data, it must detect and handle these issues. Adversarial attacks try to fool the model by changing data in subtle ways. A robust model resists these attacks and keeps its predictions accurate. Security features include regular validation, error detection, and strong data handling. These steps help the model avoid mistakes that could lead to safety risks or production losses. By focusing on robustness, engineers create systems that guard against both accidental and intentional data problems.

Trust

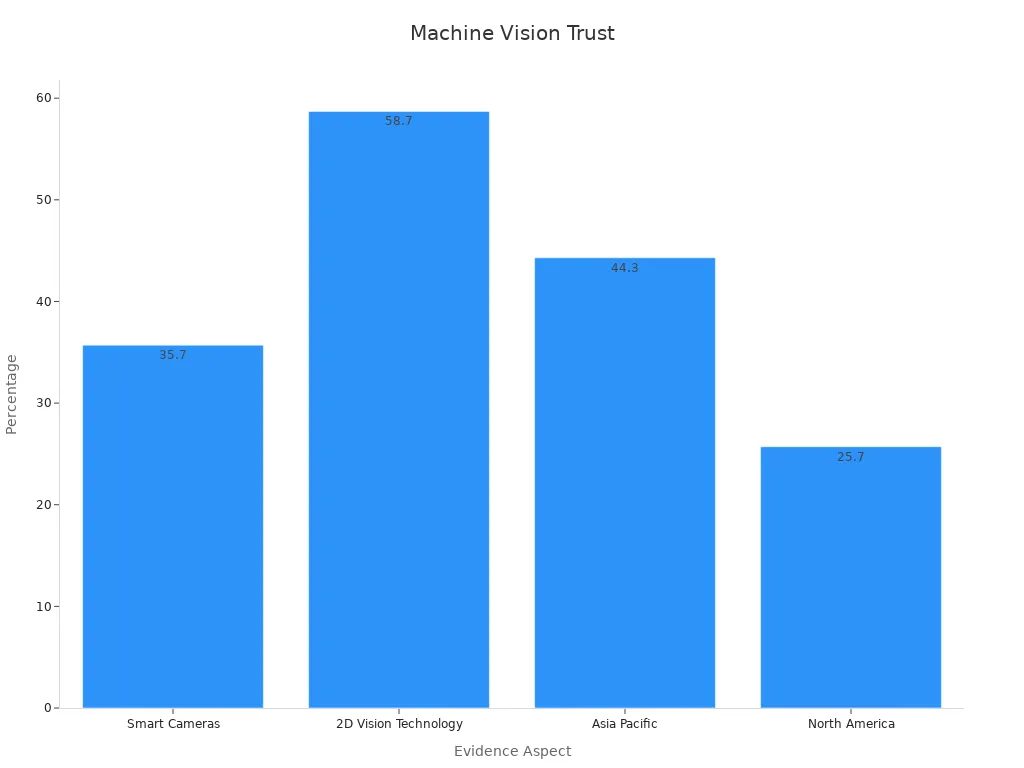

Trust in machine vision systems grows when users see consistent performance. Robustness builds this trust by ensuring the model works well with new data and in changing environments. Market research shows strong adoption of robust systems. For example, the global machine vision market is projected to reach USD 13.52 billion in 2025 and to grow to USD 23.63 billion by 2032. Companies like BMW, Toyota, and Amazon rely on robust models for quality control and automation. The chart below highlights market share and trust in these technologies:

Smart cameras and 2D vision technology lead the market, showing that users trust robust models for accurate inspections. As robustness improves, more industries adopt these systems, knowing they can trust the model to handle diverse data and maintain high accuracy.

Achieving a Robust Machine Vision System

Data Quality

High data quality forms the foundation of model robustness in machine vision. Reliable data ensures that AI systems can learn accurate patterns and make correct predictions. Engineers use several metrics to measure data quality and its impact on model performance. These metrics include accuracy, precision, recall, F1-score, and mean Intersection over Union (IoU). Error rates, inference time, and throughput also play a role in evaluating data reliability. The table below summarizes key data quality benchmarks:

| Metric / Benchmark | Description / Impact on Reliability |
|---|---|
| Accuracy | Measures correct predictions; key for evaluating dataset reliability |
| Precision | Indicates correctness of positive predictions |
| Recall | Measures ability to find all relevant instances |
| F1-Score | Balances false positives and negatives |
| Mean Intersection over Union (IoU) | Evaluates overlap between predicted and true object locations |
| Error Rates | Lower rates improve trust and system reliability |
| Inference Time | Time to process images; critical for real-time applications |
| Throughput | Number of images processed per second; higher means efficiency |
| Downtime | Less downtime means higher availability |
| Annotation Time | Reduced time improves dataset quality and efficiency |
| Defect Rate Reduction | Up to 80% fewer defects observed |
| Inspection Error Reduction | Over 90% decrease compared to manual inspection |
| Production Cycle Time | Up to 20% faster cycles |

Continuous monitoring for data drift and real-time validation help maintain high data quality. Diverse dataset collection ensures that robust models can handle a wide range of scenarios. Engineers often use Gaussian data augmentation to simulate real-world noise and improve model robustness.
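Gaussian augmentation can be sketched with the standard library alone. The example below assumes a grayscale image stored as a list of rows of 0-255 integers; the function name `add_gaussian_noise` and the default `sigma` are illustrative choices, and a real pipeline would more likely operate on arrays.

```python
import random


def add_gaussian_noise(pixels, sigma=8.0, rng=None):
    """Return a copy of the image with zero-mean Gaussian noise added.

    Values are rounded and clipped back into the valid 0-255 range.
    """
    rng = rng or random.Random()
    return [
        [min(255, max(0, round(v + rng.gauss(0.0, sigma)))) for v in row]
        for row in pixels
    ]


image = [[0, 128], [255, 64]]
noisy = add_gaussian_noise(image, sigma=8.0, rng=random.Random(42))
print(noisy)
```

Passing a seeded `random.Random` keeps the augmentation reproducible, which helps when comparing training runs.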

Adversarial Training

Adversarial training strengthens model robustness by exposing AI systems to challenging inputs during learning. This technique helps models resist attacks and unexpected data changes. Research shows that adversarial training maintains high accuracy on clean data and improves robustness against adversarial perturbations. For example, models trained with adversarial methods achieve nearly perfect accuracy on standard datasets, even when facing small perturbations. In medical imaging, adversarial training can boost accuracy from less than 20% to 80% under attack conditions. Even with stronger attacks, robust models maintain about 40% accuracy, while models trained without adversarial examples fail. Studies on benchmark datasets like CIFAR10 and Tiny ImageNet confirm that adversarial training leads to a 40% improvement in object recognition accuracy under adverse conditions. Attack success rates drop, and robustness metrics rise, making adversarial training a proven method for enhancing model robustness.
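To make the idea concrete, here is a toy sketch using the fast gradient sign method (FGSM) on a logistic regression model. FGSM is one common way to generate adversarial inputs and is an assumption here, since no specific attack is named above. The function names, step sizes, and two-point dataset are all hypothetical, and real vision models would use a deep learning framework instead.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, w, b):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm_example(x, y, w, b, eps):
    """Shift x by eps per feature in the direction that increases the loss."""
    p = predict(x, w, b)
    grad_x = [(p - y) * wi for wi in w]  # gradient of cross-entropy w.r.t. x
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad_x)]


def adversarial_training_step(batch, w, b, eps=0.1, lr=0.5):
    """One SGD pass over each clean sample and its adversarial copy."""
    for x, y in batch:
        for sample in (x, fgsm_example(x, y, w, b, eps)):
            err = predict(sample, w, b) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, sample)]
            b -= lr * err
    return w, b


batch = [([1.0, 1.0], 1), ([-1.0, -1.0], 0)]
w, b = [0.0, 0.0], 0.0
for _ in range(50):
    w, b = adversarial_training_step(batch, w, b)
print(predict([1.0, 1.0], w, b), predict([-1.0, -1.0], w, b))
```

The model sees both the original and the perturbed version of every sample, so it learns a decision boundary with some margin around the training points.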

Domain Adaptation

Domain adaptation allows AI models to perform well when data changes between training and deployment environments. This process helps robust models adjust to new lighting, backgrounds, or camera types. Engineers use techniques such as transfer learning and feature alignment to bridge the gap between different domains. Domain adaptation ensures that model performance remains high, even when the data distribution shifts. This approach supports model robustness by preparing the system for real-world variability. Regular domain adaptation updates keep the model reliable as new data arrives.
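A very simple form of feature alignment matches the per-feature mean and standard deviation of the new (target) domain to those of the training (source) domain. The sketch below is a deliberately simplified illustration of that idea, not a full domain adaptation method; the function name and sample values are hypothetical.

```python
from statistics import mean, pstdev


def align_to_source(target_rows, source_rows):
    """Rescale each target feature to the source mean and standard deviation."""
    aligned_cols = []
    for t_col, s_col in zip(zip(*target_rows), zip(*source_rows)):
        mu_t, sd_t = mean(t_col), pstdev(t_col)
        mu_s, sd_s = mean(s_col), pstdev(s_col)
        # Leave constant features unscaled to avoid dividing by zero.
        scale = sd_s / sd_t if sd_t > 0 else 1.0
        aligned_cols.append([(v - mu_t) * scale + mu_s for v in t_col])
    return [list(row) for row in zip(*aligned_cols)]


# Hypothetical brightness features: the new camera reads much higher values.
source = [[0.0], [2.0]]
target = [[10.0], [14.0]]
print(align_to_source(target, source))
```

After alignment, the target features occupy the same range the model saw during training, which can reduce the effect of a uniform shift such as a brighter light source.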

Regularization

Regularization techniques control model complexity and prevent overfitting, which improves model robustness. Methods like dropout, batch normalization, and noise injection force the model to generalize better. For example, random cropping increases accuracy from 72.88% to 80.14%, while noise injection boosts accuracy from 44.0% to 96.74%. Dropout and batch normalization further improve test accuracy and reduce loss. Engineers use cross-validation accuracy, mean squared error, and area under the curve to evaluate the impact of regularization. These methods help robust models maintain high performance on unseen data and noisy environments.
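Dropout is easy to sketch in isolation. The version below is the common "inverted dropout" variant, which rescales the surviving activations during training so that no change is needed at inference time. The function name and the default rate are assumptions for illustration.

```python
import random


def dropout(activations, p=0.5, training=True, rng=None):
    """Zero each activation with probability p and rescale the survivors."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # dropout is disabled at inference time
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


layer_output = [1.0, 2.0, 3.0, 4.0]
print(dropout(layer_output, p=0.5, rng=random.Random(0)))
print(dropout(layer_output, training=False))
```

Because a different random subset of units is silenced on each pass, the model cannot rely on any single feature, which is the mechanism behind the better generalization described above.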

Explainability

Explainability builds trust in AI systems by making model decisions transparent. Engineers use visualization tools and feature importance scores to understand how the model makes predictions. Explainable models help identify errors and improve model robustness. When users can see why a model made a decision, they feel more confident in its reliability. Explainability also supports model evaluation by highlighting areas where the model may need improvement. This transparency is essential for regulated industries and safety-critical applications.

Validation

Validation confirms that a machine vision system meets performance and reliability standards. Engineers use Gage Repeatability and Reproducibility (Gage R&R) studies, bias and linearity tests, and statistical comparisons to evaluate model robustness. Key indicators include %R&R values under 10%, acceptable %P/T values, and measurement differences within 0.01 mm. High appraiser agreement percentages show strong repeatability and reproducibility. Statistical tests reveal no significant bias or linearity issues, except for minor average bias that may need further review. The vision system’s measurement resolution matches standard calibrated systems, supporting its use for in-process inspection. These results confirm that robust models can replace traditional destructive testing with 100% non-destructive inspection. Continuous validation ensures that model performance remains high as new data and conditions arise.
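Two of these checks can be sketched directly. The example below compares vision measurements against reference values using the 0.01 mm limit mentioned above, and computes a precision-to-tolerance ratio. The %P/T formula assumes the common convention of six standard deviations of repeat readings divided by the tolerance width; some standards use a different multiplier, and a full Gage R&R study involves more than this. All names and sample readings are hypothetical.

```python
from statistics import mean, stdev


def measurement_agreement(vision_mm, reference_mm, max_diff_mm=0.01):
    """Summarize the differences between vision and reference measurements."""
    diffs = [v - r for v, r in zip(vision_mm, reference_mm)]
    return {
        "mean_bias_mm": mean(diffs),
        "max_abs_diff_mm": max(abs(d) for d in diffs),
        "within_limit": all(abs(d) <= max_diff_mm for d in diffs),
    }


def percent_p_over_t(repeat_readings_mm, lsl_mm, usl_mm, k=6.0):
    """Precision-to-tolerance ratio in percent (k = 6 is an assumed convention)."""
    return 100.0 * k * stdev(repeat_readings_mm) / (usl_mm - lsl_mm)


vision = [10.004, 9.998, 10.001]
reference = [10.000, 10.000, 10.000]
print(measurement_agreement(vision, reference))
print(percent_p_over_t([10.0, 10.002, 9.998], lsl_mm=9.9, usl_mm=10.1))
```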

Tip: Integrating hardware improvements, advanced algorithms, and ongoing validation creates a robust machine vision system. Neuromorphic exposure control and interdisciplinary collaboration further enhance model robustness and system reliability.

Model Robustness Challenges

Noisy Data

Noisy data presents a major challenge for model robustness in machine vision systems. Engineers often see a drop in model performance when data contains random errors or distortions. For example, accuracy can fall to 44.0% with noisy data, but noise injection can boost it to 96.74%. Random cropping increases accuracy from 72.88% to 80.14%. Dropout and batch normalization also improve test accuracy and reduce loss. L2 regularization helps models handle noisy data by reducing overfitting. The total error in a machine vision system includes bias squared, variance, and irreducible error. Bias comes from incorrect model assumptions, while variance results from sensitivity to noisy data. Irreducible error is noise that no model can remove. Engineers use regularization and data augmentation to balance bias and variance, improving model robustness and overall performance.
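The error decomposition described above can be written out explicitly. Assuming the standard setup where observations follow $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat{f}$ is the model learned from a random training set, the expected squared error at a point $x$ is:

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible error}}
$$

Regularization typically lowers the variance term at the cost of a small increase in bias, while the $\sigma^2$ term stays fixed regardless of the model.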

Environmental Variability

Environmental variability affects model robustness by introducing unpredictable changes. Factors such as lighting, temperature, dust, humidity, vibration, and power supply fluctuations can degrade data quality and model performance. For instance, environmental light adds noise, but optical filters can help. Temperature increases sensor noise, so cooling systems are used. Dust reduces image clarity, but sealed enclosures prevent buildup. Humidity causes condensation, which dehumidifiers address. Vibration blurs images, so vibration-dampening platforms stabilize cameras. Power supply fluctuations and electromagnetic interference also impact model robustness. Studies show that models like the Segment Anything Model (SAM) lose performance when images are perturbed. Tailored prompting strategies and dataset-centric analysis can improve robustness against these challenges.

Overfitting

Overfitting occurs when a model learns patterns specific to the training data but fails to generalize to new data. This reduces model robustness and leads to poor performance in real-world applications. Engineers use regularization, dropout, and data augmentation to prevent overfitting. These techniques help the model focus on relevant features and ignore noise. Adversarial training also improves model robustness by exposing the model to challenging inputs. Case studies show that adversarial attacks can trick models, causing misclassification in autonomous vehicles and facial recognition systems. Defense strategies such as ensemble learning and gradient masking help maintain robustness and fairness.

Maintenance

Ongoing maintenance is essential for model robustness and production quality assurance. Regular hardware checks, software updates, calibration, and functional testing keep the model and AI systems reliable. Predictive maintenance can reduce costs by up to 40% and downtime by 20-50%. In one automotive industry example, predictive maintenance saved $20 million annually and reduced downtime by 15%. AI and IoT enable real-time monitoring, allowing early detection of anomalies. Maintenance activities restore optimal performance and extend the lifespan of machine vision systems. Industries such as healthcare, manufacturing, and energy benefit from improved equipment reliability and reduced failures.
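Early anomaly detection can be as simple as comparing each new reading to a rolling baseline. The sketch below flags values that deviate strongly from recent history using a z-score. The class name, window size, and threshold are illustrative assumptions, and the monitored value could be any health signal such as a detection rate or mean image brightness.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyMonitor:
    """Flag readings that deviate strongly from a rolling baseline."""

    def __init__(self, window=20, z_threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def update(self, value):
        """Return True if `value` looks anomalous against recent history."""
        is_anomaly = False
        if len(self.history) >= self.min_samples:
            mu, sd = mean(self.history), pstdev(self.history)
            if sd > 0 and abs(value - mu) / sd > self.z_threshold:
                is_anomaly = True
        # Keep anomalies out of the baseline so they do not mask later ones.
        if not is_anomaly:
            self.history.append(value)
        return is_anomaly


monitor = RollingAnomalyMonitor()
for rate in [0.97, 0.98, 0.96, 0.97, 0.98, 0.97, 0.60]:
    print(rate, monitor.update(rate))
```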

A robust machine vision system helps industries keep inspections accurate and reliable. Engineers build a strong model by using high-quality data, regularization, and adversarial training. They also use domain adaptation and explainability to improve the model. Teams should monitor data, update the model, and validate results often. A recent review shows that only a few studies focus on continuous validation after deployment. Using failure monitors, safety channels, redundancy, voting, and input/output restrictions keeps the model dependable. Ongoing adaptation and validation protect the model from new data and changing environments. These steps help the model stay robust and effective.
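Redundancy with voting can be illustrated in a few lines. In this sketch, several independent models or cameras each return a label, and the system accepts a result only when a strict majority agrees; otherwise it falls back to a safe default. The function name and the `manual_review` fallback label are hypothetical.

```python
from collections import Counter


def vote(predictions, fallback="manual_review"):
    """Return the strict-majority label, or the fallback if none exists."""
    if not predictions:
        return fallback
    label, count = Counter(predictions).most_common(1)[0]
    # Require more than half of the voters to agree; ties go to the fallback.
    return label if count * 2 > len(predictions) else fallback


print(vote(["ok", "ok", "defect"]))
print(vote(["ok", "defect", "scratch"]))
print(vote(["ok", "ok", "defect", "defect"]))
```

Sending disagreements to a fallback acts as a basic failure monitor, since a single faulty model or camera cannot decide the outcome on its own.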

FAQ

What makes a machine vision model robust?

A robust model maintains high accuracy when it processes new or unexpected data. Engineers design the model to handle noise, corrupted images, and environmental changes. This approach ensures the model supports production quality assurance and delivers reliable results in real-world applications.

How does data quality affect model performance?

High-quality data helps the model learn correct patterns. Clean, diverse data reduces errors and improves accuracy. Engineers monitor data for drift and validate the model regularly. Reliable data supports production quality assurance and keeps the model effective in changing environments.

Why is validation important for a machine vision model?

Validation checks if the model meets performance standards. Engineers use validation to confirm the model works with new data. Regular validation helps the model adapt to changes and supports production quality assurance. This process keeps the model accurate and reliable.

How do engineers protect a model from noisy data?

Engineers use data augmentation and regularization to help the model handle noise. They add random changes to data during training. This process teaches the model to ignore irrelevant information. The model then performs well, even when it receives noisy or corrupted data.

Can a model adapt to new environments or data types?

Yes. Engineers use domain adaptation to help the model adjust to new data or environments. The model learns from different data sources and stays accurate. This flexibility supports production quality assurance and ensures the model remains reliable as conditions change.