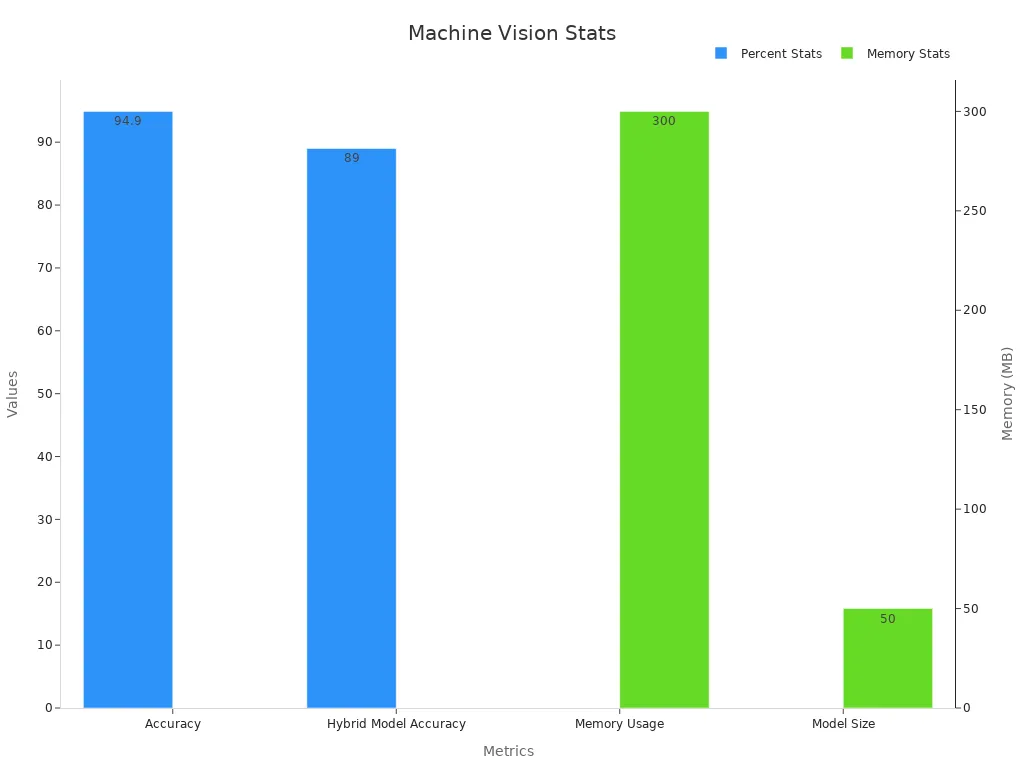

An embedded machine vision system combines compact hardware and smart algorithms to analyze visual data directly within a device. Unlike traditional machine vision, embedded vision systems integrate sensing and processing in one unit, enabling real-time decisions with minimal delay. Reported benchmarks show accuracy of up to 94.9%, together with low latency and efficient memory use, which makes embedded machine vision well suited to fast, reliable results in practical settings.

Decision-makers benefit from understanding these key features, as embedded vision systems deliver high adaptability, cost efficiency, and scalability. The table below outlines measurable criteria for evaluating system performance and business value, comparing traditional rule-based vision systems with AI-based ones.

| Key Metric / Feature | Quantitative Evidence / Description |
|---|---|
| Processing Speed | Ranges from milliseconds to minutes depending on task; traditional systems can achieve 60+ FPS in stable settings, AI-based systems around 30+ FPS depending on hardware and model. |
| Accuracy | Traditional systems achieve 98-99% accuracy in controlled environments; AI-based systems maintain 94%+ accuracy in variable conditions when properly trained. |
| Error Rates | Metrics include false negatives and false positives; reducing these improves system reliability. |
| Hardware Requirements | Traditional systems typically use CPUs or embedded processors; AI-based systems require GPUs or AI accelerators. |
| Cost vs Performance | Trade-offs exist; traditional systems have lower upfront costs but may incur higher labor/maintenance; AI systems have higher initial investment but reduce long-term expenses. |
| Adaptability & Scalability | Traditional systems excel in stable, repetitive tasks; AI-based systems adapt to changing environments and scale to multiple tasks. |
| Feature Extraction | Traditional uses hand-engineered rules; AI uses deep learning for automatic feature extraction. |
| Implementation Complexity | Traditional systems require manual tuning; AI systems need large labeled datasets and retraining. |
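To make the error-rate row concrete, here is a minimal Python sketch of how false positives and false negatives translate into the usual rates. It assumes a binary defective/good inspection task; the `ConfusionCounts` type, the `error_metrics` helper, and the counts are hypothetical illustrations, not figures from the table.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts from comparing inspection results against ground truth."""
    tp: int  # defective parts correctly flagged
    fp: int  # good parts wrongly flagged (false positives)
    fn: int  # defective parts missed (false negatives)
    tn: int  # good parts correctly passed


def error_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Return common rates; each is 0.0 if its denominator is zero."""
    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    total = c.tp + c.fp + c.fn + c.tn
    return {
        "accuracy": ratio(c.tp + c.tn, total),
        "precision": ratio(c.tp, c.tp + c.fp),
        "recall": ratio(c.tp, c.tp + c.fn),
        "false_positive_rate": ratio(c.fp, c.fp + c.tn),
        "false_negative_rate": ratio(c.fn, c.fn + c.tp),
    }


# Hypothetical batch of 1,000 inspected parts
counts = ConfusionCounts(tp=45, fp=10, fn=5, tn=940)
for name, value in error_metrics(counts).items():
    print(f"{name}: {value:.3f}")
```

With these hypothetical counts, accuracy is 98.5% while one defect in ten is still missed (recall 0.9), which is why false negatives and false positives are worth tracking separately from overall accuracy.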

Key Takeaways

- Embedded machine vision systems combine compact hardware and smart software to analyze images directly on devices, enabling fast and accurate decisions.

- These systems offer real-time processing with low power use, making them ideal for applications like industrial automation, robotics, and consumer electronics.

- Processing data locally lowers latency and reduces costs by minimizing the need for expensive external computers or cloud services.

- Key features include compact design, integrated hardware and software, energy efficiency, and adaptability to various tasks and environments.

- Industries benefit from embedded vision through improved quality inspection, faster production, enhanced safety, and smarter consumer devices.

What Is Embedded Vision?

Embedded Machine Vision Defined

Embedded vision describes the integration of image capture and processing directly into compact devices. These systems combine sensors, processors, and software to analyze visual data without relying on external computers. Industry leaders define embedded machine vision as a technology that embeds image processing at the hardware level, often using systems-on-chip (SoCs), single-board computers, or specialized modules. This approach allows devices to perform complex computer vision tasks, such as object detection or pattern recognition, within a small footprint.

Embedded machine vision systems use advanced hardware such as GPUs and FPGAs to deliver real-time performance. These components enable efficient image processing and support AI-driven applications. For example, a camera with onboard processing can identify objects or track movement without sending images to an external computer. This capability makes embedded vision systems well suited to robotics, autonomous vehicles, and smart consumer devices.

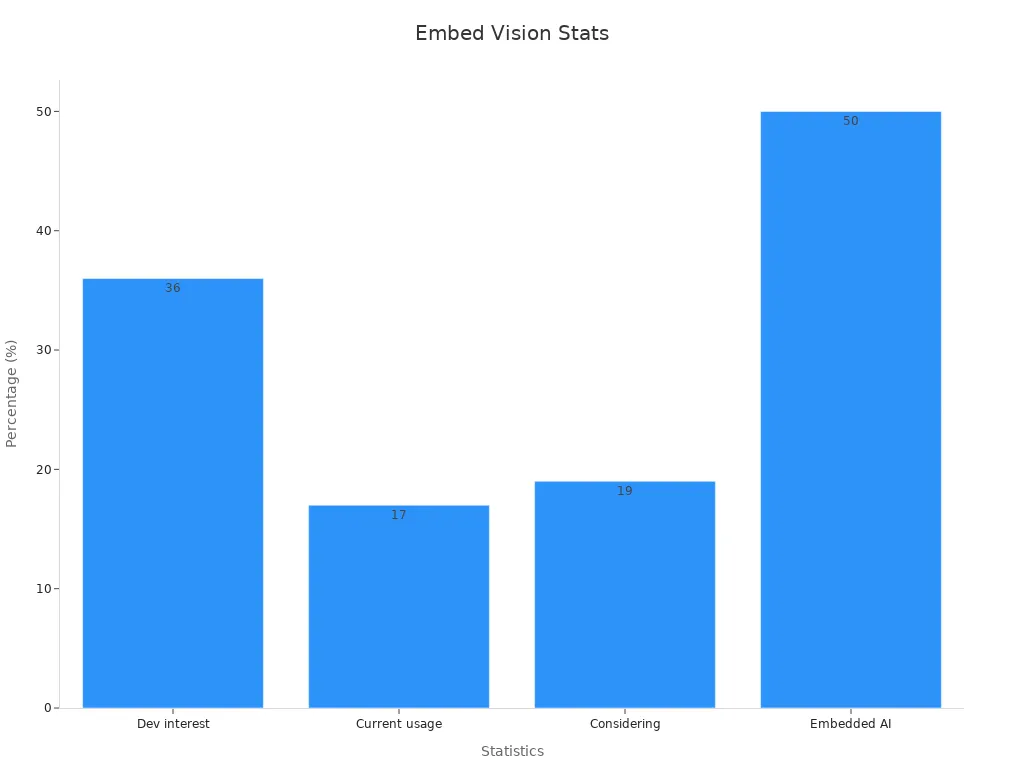

The growing interest in embedded vision reflects its expanding role in modern technology. The table below highlights key industry statistics:

| Statistic Description | Value / Detail |
|---|---|
| Developer interest in Embedded Vision | 36% |
| Developers currently using Embedded Vision | 17% |
| Developers considering Embedded Vision | 19% |
| Embedded AI interest among advanced tech | 50% |
| Embedded Systems market size (2024) | US$178.15 billion |
| Projected market size (2034) | US$283.90 billion |
| CAGR (2024-2034) | 4.77% |
| Leading application fields | Industrial control & automation (29%), IoT (24%), Communications (21%), Automotive (19%) |
| Regional market share | North America (51%), Europe (24%), Asia Pacific (16%) |
| Popular embedded platforms and tools | Raspberry Pi (39%), Arduino, GNU GCC, CMake |
| Widely used OS | Embedded Linux (44%), FreeRTOS (44%) |
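The market figures in the table are internally consistent, which can be checked with the standard compound-growth formula, CAGR = (end / start)^(1 / years) - 1. A short Python sketch, using only the table's own 2024 and 2034 values:

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures from the table: US$178.15 billion (2024) to US$283.90 billion (2034)
rate = cagr(178.15, 283.90, 2034 - 2024)
print(f"Implied CAGR: {rate:.2%}")
print(f"Projected 2034 size at 4.77%: {project(178.15, 0.0477, 10):.2f} billion")
```

The implied rate comes out at about 4.77%, matching the table.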

Core Functions

Embedded vision systems perform several essential functions that drive innovation across industries. These systems capture images, process visual data, and make decisions in real time. In material recovery facilities, for example, embedded machine vision automates the identification and sorting of recyclable materials. The system uses AI and deep learning models to classify objects on conveyor belts, improving efficiency and reducing manual labor.

Embedded machine vision systems also support continuous software updates and data-driven business models. Companies use these systems to collect diagnostic and performance data, which they can share with suppliers or use to enhance product quality. Embedded vision enables real-time monitoring, quality assurance, and predictive maintenance in industrial automation.

The core functions of embedded vision include:

- Image acquisition and processing for object detection and classification

- Real-time decision-making using AI and machine learning

- Data generation for analytics and business insights

- Integration with IoT devices and cloud platforms
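Acquisition, processing, decision-making, and data generation form a simple per-frame loop. The sketch below is a toy illustration under stated assumptions: frames are short lists of pixel intensities, `classify` is a brightness threshold standing in for a trained model, and the diverter decision is hypothetical. Cloud and IoT integration is left out; the returned `Counter` stands in for the statistics a real system would forward.

```python
from collections import Counter
from collections.abc import Iterable, Iterator


def acquire_frames() -> Iterator[list[int]]:
    """Stand-in for a camera driver: yields tiny grayscale 'frames' (hypothetical)."""
    yield [12, 15, 200, 210, 14]   # small bright object on the belt
    yield [10, 11, 12, 13, 9]      # empty belt
    yield [220, 230, 215, 225, 9]  # large bright object


def classify(frame: list[int], threshold: int = 128) -> str:
    """Toy classifier based on the fraction of bright pixels (placeholder for a model)."""
    bright = sum(1 for p in frame if p > threshold) / len(frame)
    if bright == 0:
        return "empty"
    return "large" if bright > 0.5 else "small"


def decide(label: str) -> str:
    """Real-time decision rule (hypothetical): divert large objects."""
    return "divert" if label == "large" else "pass"


def run_pipeline(frames: Iterable[list[int]]) -> Counter:
    stats: Counter = Counter()       # data generated for analytics
    for frame in frames:             # 1. image acquisition
        label = classify(frame)      # 2. processing and classification
        action = decide(label)       # 3. real-time decision
        stats[label] += 1            # 4. data generation
        stats[action] += 1
    return stats


print(run_pipeline(acquire_frames()))
```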

Embedded vision systems rely on robust embedded systems architectures, such as SoCs and FPGAs, to deliver reliable performance. These systems support a wide range of applications, from robotics and healthcare to consumer electronics and automotive safety. As embedded machine vision systems continue to evolve, they will play a central role in the future of computer vision and AI-powered automation.

Embedded vs. Traditional Vision

System Structure

Embedded vision and traditional machine vision systems use different structures to process visual information. Embedded vision places the processing unit, sensors, and software inside a single compact device. This design allows the system to analyze images and make decisions directly on the device. In contrast, traditional machine vision systems separate the camera from the processing hardware. The camera sends images to an external computer, which then performs analysis and detection tasks.

Embedded vision systems often use dedicated processors like NVIDIA Jetson or NXP chips. These processors handle computer vision tasks on the device, reducing the need for data transfer. Traditional machine vision systems rely on powerful PCs or cloud servers for image processing and detection. This structure can increase latency and slow down decision-making.
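The latency difference comes from the extra stages a remote architecture adds. The sketch below sums hypothetical per-stage timings for one frame and checks them against a 30 FPS deadline; every number is an assumption chosen for illustration, not a measurement of any Jetson, NXP, or PC-based system.

```python
# Hypothetical per-frame stage timings in milliseconds (illustrative only).
ON_DEVICE = {"capture": 5.0, "inference": 20.0}
REMOTE = {
    "capture": 5.0,
    "encode": 4.0,               # compress the frame for transfer
    "network_round_trip": 30.0,  # send the frame, receive the result
    "inference": 8.0,            # assumed faster server-class hardware
}


def total_latency(stages: dict[str, float]) -> float:
    """End-to-end latency when stages run one after another for a frame."""
    return sum(stages.values())


def meets_deadline(stages: dict[str, float], deadline_ms: float) -> bool:
    return total_latency(stages) <= deadline_ms


deadline = 1000.0 / 30  # one frame period at 30 FPS, about 33.3 ms
for name, stages in (("on-device", ON_DEVICE), ("remote", REMOTE)):
    print(f"{name}: {total_latency(stages):.1f} ms, "
          f"meets 30 FPS deadline: {meets_deadline(stages, deadline)}")
```

Under these assumptions the remote path (47 ms) misses the deadline even with faster server inference, because the network round trip consumes most of the frame budget. With a faster link or a heavier model, the balance can shift.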

The compact design of embedded vision makes it suitable for environments with limited space. Traditional machine vision systems, with their separate components, often require more room and complex setup.

Key Differences

Several key differences set embedded vision apart from traditional machine vision. The table below highlights these differences:

| Feature/Aspect | Embedded Vision Systems | Traditional Machine Vision Systems |
|---|---|---|
| Processing Location | On-device (inside camera hardware) | External computers |
| Latency | Low; enables real-time detection | Higher; data transfer increases delay |
| Speed | Fast; ideal for high-speed manufacturing | Slower with large datasets |
| Cost | Lower total ownership cost | Higher upfront and maintenance costs |
| Energy Efficiency | High; fewer components | Lower; more components |
| Flexibility | Limited; hardware-centric | High; software and hardware upgrades possible |
| Scalability | Limited; hardware changes needed | Easily scalable by adding components |
| Setup and Maintenance | Simple; plug-and-play | Complex; requires expertise |
| Application Suitability | Best for real-time, speed-critical tasks | Best for complex, evolving tasks |
| Size | Compact | Bulkier |
| Ease of Integration | Needs engineering expertise | Easier with standard interfaces |
| Speed of Decision-Making | Excels in real-time | Needs fast hardware/software for near real-time |

Embedded vision excels at real-time detection where speed and efficiency matter. Traditional machine vision systems offer more flexibility and scalability for complex applications. In short, embedded vision works best for specific, high-speed detection needs, while traditional machine vision systems handle broader, evolving computer vision challenges.

Key Features of Embedded Vision Systems

Compact Design

Embedded vision systems stand out for their compact design. Engineers integrate sensors, processors, and software into a single small device, so embedded machine vision systems fit into tight spaces such as drones, smart cameras, or portable medical tools. Designers characterize the processors in these devices with metrics such as power consumption in milliwatts and computational throughput in multiply-accumulate operations per second (MAC/s). For example, some embedded vision processors deliver hundreds of GMAC/s (billions of MACs per second) while consuming only a few hundred milliwatts. This efficiency is critical for battery-powered devices and IoT applications. These processors also scale: manufacturers can add more CNN engines to tailor performance to specific needs. Compact design enables deployment where traditional machine vision systems would not fit, such as wearable devices or autonomous robots.
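Throughput figures in GMAC/s become meaningful once compared with a model's workload. For a standard convolution layer, the multiply-accumulate count is H_out × W_out × C_out × (K × K × C_in); multiplying the per-frame total by the frame rate gives the sustained throughput required. The layer dimensions and the 500 GMAC/s at 0.3 W processor below are hypothetical.

```python
def conv2d_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """Multiply-accumulates for one standard (dense) k x k convolution layer."""
    return h_out * w_out * c_out * (k * k * c_in)


def required_gmacs(macs_per_frame: int, fps: float) -> float:
    """Sustained throughput (GMAC/s) needed to keep up with the frame rate."""
    return macs_per_frame * fps / 1e9


# Hypothetical layer: 112x112 output, 32 -> 64 channels, 3x3 kernel
layer = conv2d_macs(112, 112, 32, 64, 3)
print(f"{layer / 1e6:.1f} MMAC per frame")
print(f"{required_gmacs(layer, 30):.2f} GMAC/s at 30 FPS")

# Efficiency of a hypothetical processor: 500 GMAC/s at 0.3 W
gmacs, watts = 500.0, 0.3
print(f"{gmacs / watts:.0f} GMAC/s per W")
```

A full network sums this count over every layer, and real accelerators rarely reach full utilization, so designers typically leave headroom above the computed requirement.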

Real-Time Processing

Real-time processing is a core feature of embedded vision. These systems analyze images and make decisions on the device, with minimal delay, without sending data to external computers or the cloud. This capability is essential for applications like industrial automation, robotics, and automotive safety. The table below compares real-time performance between embedded vision and traditional architectures:

| Aspect | Embedded Vision System (FRYOLO) | Traditional Cloud Computing / Two-Stage Models |
|---|---|---|
| Latency | Significantly reduced due to edge processing | Higher due to data transmission to cloud |
| Detection Performance | Recall: 84.7%, Precision: 92.5%, mAP: 89.0% | Generally lower real-time suitability |
| Frame Rate (FPS) | Up to 33 FPS, meeting real-time requirements | Lower FPS in two-stage models |
| Power Consumption | Lower in single-stage models | Higher in two-stage models |
| Memory Usage | Balanced with performance | Higher memory usage |
| System Response Speed | Improved due to local processing | Slower due to network latency |
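Two quick derivations help read the FRYOLO row. The F1 score, 2PR / (P + R), combines the reported precision and recall into one number, and the frame rate sets the per-frame time budget (1000 / FPS milliseconds). A minimal Python sketch using the table's values:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def frame_budget_ms(fps: float) -> float:
    """Time available per frame if every frame must be processed."""
    return 1000.0 / fps


# Values reported in the table for the embedded (FRYOLO) system
print(f"F1: {f1_score(0.925, 0.847):.3f}")
print(f"Budget at 33 FPS: {frame_budget_ms(33):.1f} ms")
```

This gives an F1 of about 0.884 and a budget of about 30.3 ms per frame at 33 FPS. mAP cannot be derived this way, because it averages precision over recall levels (and, typically, object classes).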

Embedded vision systems deliver fast, reliable results. They support real-time analysis in environments where delays could cause safety risks or production losses. This advantage makes embedded machine vision systems ideal for tasks that require immediate feedback, such as quality inspection or autonomous navigation.

Low Power Use

Low power use is another key benefit of embedded vision. Designers use several strategies to reduce energy consumption:

- Sensor standby and idle modes lower power use to a fraction of normal operation.

- Advanced lithography nodes, such as moving from 180-nm to 65-nm technology, reduce operating voltages and cut dynamic power.

- Image sensors with lower noise allow for reduced LED illumination, saving energy in active lighting applications.

- Switching from rolling shutter to global shutter sensors decreases LED ON time, further reducing power needs.
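The global-shutter point can be quantified. With a rolling shutter, rows start exposing one after another, so a strobed LED must stay on for roughly the exposure time plus the readout time to light every row; with a global shutter, the exposure time alone suffices. The sketch below also computes the average power of a sensor that sits in standby most of the time. All power and timing values are hypothetical.

```python
def led_on_time_ms(exposure_ms: float, readout_ms: float, global_shutter: bool) -> float:
    """LED on-time per frame needed to light every row during its exposure.

    Rolling shutter: row exposures are staggered, so the LED must cover the
    exposure plus the row-to-row offset (approximated by the readout time).
    Global shutter: all rows expose together, so the exposure time suffices.
    """
    return exposure_ms if global_shutter else exposure_ms + readout_ms


def led_energy_mj(led_power_w: float, on_time_ms: float) -> float:
    """Energy per frame in millijoules (W x ms = mJ)."""
    return led_power_w * on_time_ms


def average_power_mw(active_mw: float, standby_mw: float, duty_cycle: float) -> float:
    """Mean power when the sensor is active only a fraction of the time."""
    return active_mw * duty_cycle + standby_mw * (1.0 - duty_cycle)


# Hypothetical values: 2 W LED, 1 ms exposure, 10 ms readout
for gs in (False, True):
    t = led_on_time_ms(1.0, 10.0, gs)
    print(f"global_shutter={gs}: LED on {t} ms, {led_energy_mj(2.0, t):.1f} mJ/frame")

# Hypothetical sensor: 150 mW active, 1 mW standby, active 5% of the time
print(f"Average sensor power: {average_power_mw(150.0, 1.0, 0.05):.2f} mW")
```

With these assumed numbers, the global shutter cuts LED energy per frame from 22 mJ to 2 mJ, and a 5% duty cycle brings average sensor power down to about 8.45 mW.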

These features help embedded vision systems operate efficiently in battery-powered and portable devices. Low power use extends device life and supports deployment in remote or mobile environments. Applications like wearable health monitors, smart home devices, and portable scanners benefit from this energy efficiency.

Integrated Hardware & Software

Embedded vision systems combine hardware and software in a tightly integrated package. Dedicated processors, such as NVIDIA Jetson or NXP i.MX, handle image processing directly on the device. This integration enables near real-time decision-making and eliminates the need for data transfer to external computers. Key advantages include:

- On-device processing for fast, autonomous decisions.

- Compact and flexible design for use in drones, vehicles, and IoT devices.

- Real-time data capture and processing, with dynamic adjustment of parameters.

- High-speed cameras and powerful GPUs ensure smooth operation.

- Optimization algorithms used during model training, such as Adam, help improve model accuracy and reduce errors.

This level of integration boosts operational efficiency and adaptability. Embedded vision systems can handle complex computer vision tasks, such as face detection, with high accuracy and speed.

Note: Integrated hardware and software allow embedded vision systems to deliver precise, energy-efficient, and responsive performance across a wide range of machine vision applications.

Cost Efficiency

Cost efficiency drives the adoption of embedded vision systems in industry. Processing data locally on edge devices reduces latency, communication costs, and privacy risks. Running AI inference on embedded vision systems is more economical for scalable, low-latency, or mission-critical applications than relying on cloud-based APIs. Key cost-saving strategies include:

- Lightweight AI models, deployed with frameworks such as TensorFlow Lite, lower computing resource needs and total costs.

- Efficient application design balances frame rates and hardware capabilities, reducing the need for expensive processors.

- Cross-platform hardware and software prevent vendor lock-in and lower long-term expenses.

- Edge processing reduces cloud data handling and compliance costs.
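Whether edge inference is cheaper than a cloud API depends on volume. A simple break-even model divides the up-front device cost by the per-image saving. The prices below are hypothetical placeholders, not quotes from any provider, and the model ignores bandwidth, compliance, and engineering costs.

```python
import math


def cloud_cost(images: int, price_per_1000: float) -> float:
    """Pay-per-call cloud inference cost (hypothetical pricing model)."""
    return images / 1000 * price_per_1000


def edge_cost(images: int, device_cost: float, cost_per_1000: float) -> float:
    """Up-front device cost plus per-image running cost (power, upkeep)."""
    return device_cost + images / 1000 * cost_per_1000


def break_even_images(device_cost: float, cloud_per_1000: float,
                      edge_per_1000: float) -> float:
    """Image count after which edge is cheaper; inf if it never is."""
    saving_per_1000 = cloud_per_1000 - edge_per_1000
    if saving_per_1000 <= 0:
        return math.inf
    return device_cost / saving_per_1000 * 1000


# Hypothetical: US$400 device, US$1.50 vs US$0.05 per 1,000 images
n = break_even_images(400.0, 1.50, 0.05)
images_per_day = 1 * 8 * 3600  # one camera at 1 FPS for an 8-hour shift
print(f"Break-even after {n:,.0f} images (~{n / images_per_day:.1f} days)")
print(f"Year-one cost, cloud: {cloud_cost(images_per_day * 365, 1.50):,.0f}")
print(f"Year-one cost, edge:  {edge_cost(images_per_day * 365, 400.0, 0.05):,.0f}")
```

At higher frame rates the break-even point arrives sooner; at very low volumes a cloud API can remain the cheaper option.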

The embedded vision systems market is growing rapidly, with a projected compound annual growth rate of 10.75%. By 2031, the market could reach USD 14.46 billion, reflecting strong economic incentives for businesses. Cost efficiency makes embedded machine vision systems attractive for industrial automation, quality inspection, and other machine vision applications.

Embedded vision systems enable deployment in diverse environments, from factory floors to smart cities, by combining compactness, real-time analysis, low power, integration, and cost savings. These features support the growing demand for advanced machine vision and AI-driven computer vision solutions.

Embedded Machine Vision: Components

Image Sensors

Image sensors are the foundation of machine vision systems. They convert light into electrical signals, enabling cameras to capture detailed images for further image processing and image analysis. The two main sensor types are CCD and CMOS. CMOS sensors have become popular in embedded systems because they offer high speed and lower costs, while CCD sensors have traditionally been associated with superior image quality. Shutter type also matters. A rolling shutter exposes the sensor row by row, which can cause distortion in fast-moving scenes. A global shutter exposes all pixels at once, avoiding this distortion and improving accuracy in machine vision tasks.

Key sensor specifications include resolution, pixel size, frame rate, sensitivity, and dynamic range. Higher resolution captures more detail, and a higher frame rate captures more images per second, which helps with fast-moving scenes. Quantum efficiency, for example, measures how well a sensor converts incoming light into usable signal, which is vital in low-light conditions. The table below summarizes important sensor parameters:

| Parameter | Unit | Definition | Impact on Machine Vision Systems |
|---|---|---|---|
| Quantum Efficiency (QE) | Percent (%) | Light-to-electron conversion rate | Higher QE improves low-light performance |
| Temporal Dark Noise | Electrons (e-) | Noise with no signal present | Lower noise means clearer images |
| Saturation Capacity | Electrons (e-) | Maximum charge per pixel | Higher capacity increases dynamic range |
| Signal to Noise Ratio | Decibels (dB) | Signal strength versus noise | Higher SNR gives better image clarity |
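The parameters in the table combine into two headline figures. The sketch below uses a simplified form of the EMVA 1288 noise model with quantization noise ignored: SNR = QE·μp / sqrt(σd² + QE·μp), where μp is the mean photon count per pixel and σd the temporal dark noise in electrons; dynamic range is approximated as saturation capacity divided by dark noise. The sensor values are hypothetical.

```python
import math


def snr(photons: float, qe: float, dark_noise_e: float) -> float:
    """Simplified EMVA 1288-style SNR, ignoring quantization noise.

    Signal = QE * photons (in electrons). Noise combines dark noise and
    photon shot noise, whose variance equals the signal in electrons.
    """
    signal_e = qe * photons
    return signal_e / math.sqrt(dark_noise_e ** 2 + signal_e)


def to_db(ratio: float) -> float:
    return 20 * math.log10(ratio)


def dynamic_range_db(saturation_e: float, dark_noise_e: float) -> float:
    """Approximate ratio of the largest to the smallest usable signal, in dB."""
    return to_db(saturation_e / dark_noise_e)


# Hypothetical sensor: QE 70%, 2.5 e- dark noise, 10,000 e- saturation
for photons in (100, 1000, 10000):
    print(f"{photons} photons -> SNR {to_db(snr(photons, 0.70, 2.5)):.1f} dB")
print(f"Dynamic range: {dynamic_range_db(10000, 2.5):.1f} dB")
```

Raising QE increases the collected signal, so SNR improves, and proportionally most at low photon counts where dark noise dominates, which matches the table's note on low-light performance.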

Processing Units

Processing units handle the core image processing and image analysis tasks in embedded machine vision. These units range from simple microcontrollers to advanced GPUs and AI accelerators. Inference latency benchmarks, such as those using ResNet-50 or EfficientDet models, help measure how quickly a processor can complete image recognition and deep learning tasks. EEMBC benchmarks also provide industry-standard ways to compare processor performance in real-world scenarios.

Many embedded systems use System-on-Chip (SoC) designs, which combine processors, graphics, and interfaces into one compact board. This integration reduces system size, power consumption, and cost. Specialized benchmarks like ADASMark and MLMark test how well these processors handle machine vision workloads, including pattern recognition and real-time image capture and processing.
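Inference latency benchmarks share a common shape: warm-up runs, many timed runs, then a median and a tail percentile. The harness below is a minimal sketch of that pattern in Python, not the EEMBC methodology; `dummy_inference` is a hypothetical placeholder for a real model call such as a ResNet-50 forward pass.

```python
import statistics
import time
from collections.abc import Callable


def benchmark(fn: Callable[[], object], warmup: int = 5, runs: int = 50) -> dict[str, float]:
    """Time repeated calls of `fn` and return latency statistics in milliseconds."""
    for _ in range(warmup):  # let caches, clock scaling, and lazy init settle
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "median_ms": statistics.median(samples),
        # 19 cut points split the data into 20 groups; the last one is p95
        "p95_ms": statistics.quantiles(samples, n=20, method="inclusive")[-1],
        "max_ms": max(samples),
    }


def dummy_inference() -> int:
    """Hypothetical placeholder workload standing in for a real model call."""
    return sum(i * i for i in range(20_000))


print(benchmark(dummy_inference))
```

Reporting the 95th percentile alongside the median matters for real-time systems, because deadlines are missed by the slow frames, not the typical ones.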

Software Algorithms

Software algorithms drive the intelligence behind machine vision. These algorithms perform tasks such as object detection, classification, and pattern recognition. Deep neural networks, especially convolutional neural networks (CNNs), and transfer learning techniques have shown high accuracy in industrial inspection, agricultural robotics, and process control. Hardware acceleration also matters: FPGA-based acceleration, for example, can speed up image processing enough to enable real-time inspection in manufacturing.
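At the core of a CNN is a small kernel slid across the image. In classical pipelines that kernel is hand-engineered; in a CNN its weights are learned from data. The pure-Python sketch below applies a fixed Sobel kernel to a tiny synthetic image to show the operation itself; it is illustrative only and far too slow for production use.

```python
Image = list[list[float]]


def conv2d_valid(image: Image, kernel: Image) -> Image:
    """2D 'valid' cross-correlation, the operation a CNN layer applies per channel.

    Deep learning frameworks call this convolution even though the kernel
    is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for y in range(out_h):
        for x in range(out_w):
            out[y][x] = sum(
                image[y + i][x + j] * kernel[i][j]
                for i in range(kh) for j in range(kw)
            )
    return out


# Hand-engineered vertical-edge kernel (Sobel x); a CNN would learn its own weights
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# 4x5 image: dark left side, bright right side (a vertical edge)
img = [[0, 0, 10, 10, 10] for _ in range(4)]
for row in conv2d_valid(img, SOBEL_X):
    print(row)
```

The response is strong (40) where the window spans the dark-to-bright edge and zero in the uniform bright region.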

Some studies report that machine learning methods such as Random Forest and Support Vector Machines outperform traditional rule-based methods in predictive tasks. The table below highlights how different algorithms perform in various application domains:

| Application Domain | Algorithm/Technique | Empirical Findings / Statistical Evidence |
|---|---|---|
| Industrial Inspection | Deep learning, CNNs, MobileNets | High accuracy, efficient automated inspection, importance of tuning |
| Agricultural Robotics | Machine vision with machine learning | Enables harvesting, weed identification, improves crop quality |
| Assembly Process Inspection | FPGA-accelerated vision algorithms | Real-time, accurate geometric inspection |

Communication Interfaces

Communication interfaces connect cameras and processing units, allowing fast data transfer for image processing and image analysis. Common interfaces include Ethernet (GigE Vision), USB, Camera Link, and CoaXPress. Each interface offers different benefits. For example, GigE Vision supports high-speed transfers over long distances, while USB 3.0 works well for short-range, high-resolution imaging. Camera Link and CoaXPress provide low latency and ultra-high-speed data transfer, which are critical for demanding machine vision applications.

Performance metrics for communication interfaces include frames per second (FPS), bandwidth, power consumption, and energy efficiency. High bandwidth and low latency ensure that cameras can send images quickly for real-time analysis. Efficient interfaces help embedded systems maintain small size and low power use, supporting deployment in diverse environments.
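Choosing an interface starts with the raw bandwidth a camera produces: width × height × bits per pixel × frame rate. The sketch below checks a hypothetical 1920 × 1200, 8-bit, 60 FPS stream against nominal link rates. The usable fractions (0.9 for GigE, 0.6 for USB 3.0) are rough assumptions for protocol overhead, not figures from the GigE Vision or USB3 Vision standards.

```python
def stream_bandwidth_mbps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Raw (uncompressed) video bandwidth in megabits per second."""
    return width * height * bits_per_pixel * fps / 1e6


def fits_link(required_mbps: float, link_mbps: float, usable_fraction: float) -> bool:
    """True if the stream fits within the assumed usable share of the link."""
    return required_mbps <= link_mbps * usable_fraction


# Hypothetical camera: 1920 x 1200, 8-bit mono, 60 FPS
need = stream_bandwidth_mbps(1920, 1200, 8, 60)
print(f"Required: {need:.0f} Mbit/s")
print("Fits 1 Gbit/s GigE link:", fits_link(need, 1000, 0.9))    # assumed 90% usable
print("Fits 5 Gbit/s USB 3.0 link:", fits_link(need, 5000, 0.6))  # assumed 60% usable
```

At about 1.1 Gbit/s, this stream exceeds a single GigE link unless resolution, frame rate, or bit depth is reduced, while it fits within USB 3.0 under the assumed overhead.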

Tip: Choosing the right communication interface can improve system responsiveness and reliability, especially in industrial and automotive machine vision.

Applications of Embedded Vision

Industrial Automation

Embedded vision systems transform manufacturing by enabling real-time machine vision on the factory floor. Smart camera-based systems operate in space-constrained environments, performing decentralized visual inspection, label verification, and simple part sorting. AI-powered vision systems in the semiconductor and automotive industries handle defect detection and pattern recognition, adapting to complex surfaces and unstructured data. Companies like BMW use AI-driven machine vision for assembly verification, reducing manual errors and improving throughput. Robots equipped with embedded vision guide pick-and-place tasks, ensuring precise alignment and tracking. The table below highlights common applications of embedded machine vision systems in industrial automation:

| Application Area | Industry Examples | Use Cases and Benefits |
|---|---|---|
| Smart Camera-Based Systems | Space-constrained environments | Decentralized inspection, label verification, part sorting |
| AI-Powered Vision Systems | Semiconductor, Automotive, Fabric | Defect detection, anomaly detection, pattern recognition |
| Assembly Verification | Automotive, Aerospace, Consumer Electronics | Component placement, alignment, securing, reducing failures |
| Robot Guidance and Part Localization | Electronics, Automotive, Logistics | Robotic arms for pick-and-place, alignment, sorting |
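For robot guidance and part localization, a classic technique computes a part's position and orientation from image moments of a segmented (binary) blob: the centroid from the first-order moments and the principal-axis angle from the central second-order moments. The sketch below is a minimal pure-Python version; it assumes segmentation is already done and leaves out the camera-to-robot calibration a real system needs.

```python
import math


def centroid_and_angle(mask: list[list[int]]) -> tuple[float, float, float]:
    """Centroid (x, y) and principal-axis angle (degrees) of a binary blob.

    Raw moments give the centroid; central second moments give the
    orientation: theta = 0.5 * atan2(2 * mu11, mu20 - mu02).
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        raise ValueError("mask contains no foreground pixels")
    m00 = len(pts)
    cx = sum(x for x, _ in pts) / m00
    cy = sum(y for _, y in pts) / m00
    mu20 = sum((x - cx) ** 2 for x, _ in pts)
    mu02 = sum((y - cy) ** 2 for _, y in pts)
    mu11 = sum((x - cx) * (y - cy) for x, y in pts)
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return cx, cy, math.degrees(theta)


# A diagonal part in a 4x4 mask
diag = [[1 if x == y else 0 for x in range(4)] for y in range(4)]
print(centroid_and_angle(diag))  # centroid (1.5, 1.5); angle about 45 degrees
```

Angles follow image coordinates, where y grows downward, so a positive angle appears clockwise on screen.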

Darwin Edge’s deep learning-based defect detection system processes over 5,000 images on edge devices, enabling real-time defect detection and immediate alerts. This reduces manual inspection errors and supports manufacturing quality control.

Quality Inspection

Manufacturing relies on embedded vision for visual inspection and defect detection. Reported results show inspection accuracy improving from 63% to 97%, with issues caught earlier and fewer defective products. Automated inspection increases production speed and lowers labor costs. Samsung uses AI vision for PCB defect detection, while pharmaceutical companies inspect vials for cracks or missing caps. Integration with quality management systems allows real-time monitoring, instant alerts, and automated reporting. Some manufacturers report up to 20% fewer incidents and 30% lower inspection costs. Visual inspection raises product quality and customer satisfaction, minimizes recalls, and supports manufacturing quality control.
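Real-time monitoring with instant alerts can be as simple as a rolling defect rate. The sketch below keeps the last N pass/fail results and signals an alert once the window is full and the rate exceeds a limit. `DefectRateMonitor`, the window size, and the threshold are hypothetical; a real deployment would forward the alert to its quality management system rather than return a flag.

```python
from collections import deque


class DefectRateMonitor:
    """Signal an alert when the defect rate over the last N parts exceeds a limit.

    Window size and threshold are hypothetical tuning parameters.
    """

    def __init__(self, window: int = 100, max_rate: float = 0.05) -> None:
        self.results: deque[bool] = deque(maxlen=window)
        self.max_rate = max_rate

    def record(self, defective: bool) -> bool:
        """Add one inspection result; return True if an alert should fire."""
        self.results.append(defective)
        rate = sum(self.results) / len(self.results)
        full = len(self.results) == self.results.maxlen  # skip start-up noise
        return full and rate > self.max_rate


monitor = DefectRateMonitor(window=10, max_rate=0.1)
for i, defective in enumerate([False] * 8 + [True, True]):
    if monitor.record(defective):
        print(f"Alert after part {i + 1}: defect rate above 10%")
```

Waiting for a full window avoids alerts triggered by the first few parts, at the cost of a short blind period after start-up.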

Robotics & Autonomous Systems

Robotics and autonomous systems depend on embedded vision for object detection, tracking, and real-time decision-making. Reported figures include detection accuracy of up to 99.9% for robotic vision systems in controlled environments, a 35% increase in manufacturing line efficiency and a reduction of over 90% in inspection errors with vision-guided robots, and an 88.4% obstacle-avoidance success rate for drones using machine vision and AI. Automotive suppliers report a 40% reduction in manual inspection costs after adopting embedded vision, and aerospace assembly lines use vision-guided robots to reduce cycle time and achieve 97% consistency in part assembly. Industrial vision systems enable real-time defect detection and motion tracking, improving safety and productivity.
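Tracking links detections across frames so that each object keeps a stable ID. The sketch below is a minimal greedy nearest-neighbour centroid tracker, one of the simplest approaches, written under the assumption that a detector already supplies object centre points; `CentroidTracker` and its `max_distance` gate are hypothetical names and values.

```python
import math
from itertools import count


class CentroidTracker:
    """Greedy nearest-neighbour tracker that keeps object IDs across frames.

    `max_distance` (pixels) is a hypothetical gating threshold.
    """

    def __init__(self, max_distance: float = 50.0) -> None:
        self.max_distance = max_distance
        self.tracks: dict[int, tuple[float, float]] = {}
        self._ids = count()

    def update(self, detections: list[tuple[float, float]]) -> dict[int, tuple[float, float]]:
        # Candidate (distance, track_id, detection_index) pairs, closest first
        pairs = sorted(
            (math.dist(pos, det), tid, i)
            for tid, pos in self.tracks.items()
            for i, det in enumerate(detections)
        )
        matched_tracks, matched_dets, updated = set(), set(), {}
        for d, tid, i in pairs:
            if d > self.max_distance:
                break  # all remaining pairs are even farther apart
            if tid in matched_tracks or i in matched_dets:
                continue
            updated[tid] = detections[i]
            matched_tracks.add(tid)
            matched_dets.add(i)
        # Unmatched detections start new tracks; unmatched tracks are dropped
        for i, det in enumerate(detections):
            if i not in matched_dets:
                updated[next(self._ids)] = det
        self.tracks = updated
        return dict(updated)


tracker = CentroidTracker(max_distance=50.0)
print(tracker.update([(10, 10), (100, 100)]))  # two new IDs
print(tracker.update([(105, 98), (14, 12)]))   # same IDs, matched by distance
```

Production trackers usually add a motion model (for example, a Kalman filter) and keep unmatched tracks alive for a few frames to ride out missed detections.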

Consumer Devices

Embedded vision brings machine vision to everyday technology. Devices like Arduino Nicla Vision and OpenMV Cam perform real-time vision tasks such as motion detection and face recognition with low latency and power use. TinyML enables vision AI on microcontrollers, powering battery-operated security cameras and smart doorbells. Smart embedded vision cameras with onboard AI chips handle object detection, quality inspection, and tracking without bulky computers. Consumer products, including AR glasses, appliances, and wildlife cameras, use embedded vision for enhanced features. Software frameworks like TensorFlow Lite support scalable, energy-efficient deployment of AI vision models in consumer devices.
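TinyML deployments typically store model tensors as 8-bit integers. TensorFlow Lite's int8 scheme is affine: real_value = scale × (q - zero_point); it uses this asymmetric form for activations, while weights use a symmetric variant with a zero point of 0. The sketch below derives a scale and zero point from a value range, as for an activation tensor, and round-trips a few example values. It is a simplified per-tensor illustration, not the TensorFlow Lite converter, and the values are made up.

```python
def quantize_params(x_min: float, x_max: float,
                    qmin: int = -128, qmax: int = 127) -> tuple[float, int]:
    """Scale and zero point for asymmetric (affine) int8 quantization."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # range must include 0.0
    scale = (x_max - x_min) / (qmax - qmin) or 1.0   # avoid a zero scale
    zero_point = round(qmin - x_min / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(x: float, scale: float, zero_point: int,
             qmin: int = -128, qmax: int = 127) -> int:
    """Map a real value to the nearest int8 code, clamped to the valid range."""
    return max(qmin, min(qmax, round(x / scale) + zero_point))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    return scale * (q - zero_point)


values = [-0.62, -0.1, 0.0, 0.35, 0.9]  # hypothetical activation values
scale, zp = quantize_params(min(values), max(values))
q = [quantize(v, scale, zp) for v in values]
restored = [dequantize(v, scale, zp) for v in q]
print(q)
print([round(r, 3) for r in restored])
```

The zero point is chosen so that 0.0 maps exactly to an integer code, which matters for zero padding, and each restored value lies within half a quantization step of the original.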

Embedded vision systems deliver real-time, intelligent solutions across manufacturing, robotics, and consumer technology. These common applications of embedded machine vision systems drive efficiency, accuracy, and innovation in diverse industries.

An embedded machine vision system combines compact hardware, real-time processing, and low power use. These systems deliver fast, accurate results across many industries, and companies use them for automation, inspection, and robotics. Key features include integrated hardware and software, cost efficiency, and adaptability.

Decision-makers can unlock new value by exploring embedded vision for their own projects.

FAQ

What industries use embedded machine vision systems?

Manufacturers, automotive companies, electronics firms, and consumer device makers use embedded machine vision. These systems also support robotics, healthcare, and smart home products. Many industries choose embedded vision for real-time analysis and automation.

How do embedded vision systems save energy?

Engineers design embedded vision systems with low-power processors and efficient sensors. These systems use standby modes and advanced chip technology. Devices run longer on batteries and reduce energy costs.

Can embedded vision systems work with AI?

Yes. Embedded vision systems often use AI models for object detection, classification, and pattern recognition. AI improves accuracy and enables smart automation in real time.

What is the main advantage over traditional machine vision?

Embedded vision systems process images directly on the device. This reduces latency and eliminates the need for external computers. Users see faster results and lower costs.

Are embedded vision systems easy to integrate?

Many embedded vision systems offer plug-and-play designs. Engineers can quickly add them to robots, cameras, or IoT devices. Some systems require basic programming or configuration.
