A few-shot learning machine vision system allows machines to recognize and understand new objects using only a few examples. In 2025, this technology matters because it lets computer vision systems work well when data is scarce. Researchers see major changes in machine learning, as models now focus on learning similarities and differences between objects instead of just memorizing.

- Few-shot learning helps models generalize from small data samples.

- This approach supports important tasks like image recognition, robotics, and natural language processing.

- Models can adapt and perform well, even with limited training data, making them valuable in real-world settings.

Key Takeaways

- Few-shot learning lets machines recognize new objects using only a few examples, saving time and data.

- This technology helps in many fields like healthcare, robotics, e-commerce, and industrial automation by improving accuracy with limited data.

- Key methods include meta-learning, transfer learning, data augmentation, and prototypical networks, which make models adapt quickly and perform well.

- Few-shot learning uses episodic training: in each episode the model learns from a small support set and is then evaluated on a query set.

- Despite challenges like data scarcity and costs, few-shot learning opens new opportunities for smarter, faster, and more flexible AI systems.

Few-shot Learning Machine Vision System

Core Concepts

Few-shot learning machine vision systems help computers recognize new objects or categories using only a few examples. In 2025, these systems play a key role in computer vision because they solve problems where data is limited. Traditional machine learning needs thousands of images to learn a new object. Few-shot learning can work with just a handful.

Researchers define few-shot learning as building a function for a task using a small supervised dataset and an extra dataset that is not related to the main task. The goal is to map inputs to the right outputs with very little supervision. Scholars often describe this as an N-way K-shot problem. Here, N is the number of categories, and K is the number of examples per category. For example, a 5-way 1-shot task means the system learns to recognize five categories with only one example for each.
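
The N-way K-shot notation above fixes how much labeled data a task provides. The short Python sketch below only does that bookkeeping. The `FewShotTask` class and its `n_query` field are hypothetical names introduced for illustration; the number of query examples per class is an assumption, since N and K alone do not determine it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FewShotTask:
    """Hypothetical container describing an N-way K-shot task."""
    n_way: int    # N: number of categories in the task
    k_shot: int   # K: labeled examples per category
    n_query: int  # query examples per category (assumed; not fixed by N or K)

    @property
    def support_size(self) -> int:
        # Total number of labeled examples the model may learn from.
        return self.n_way * self.k_shot

    @property
    def query_size(self) -> int:
        # Total number of examples used to score the model.
        return self.n_way * self.n_query

    @property
    def chance_accuracy(self) -> float:
        # Accuracy of uniform random guessing when classes are balanced.
        return 1.0 / self.n_way


task = FewShotTask(n_way=5, k_shot=1, n_query=15)
print(task.support_size, task.query_size, task.chance_accuracy)  # 5 75 0.2
```

A 5-way 1-shot task therefore gives the model only five labeled images, and any reported accuracy should be read against a 20% random-guess baseline.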

Few-shot learning methods fall into four main groups: data augmentation, metric learning, external memory, and parameter optimization. These strategies help models learn faster and adapt better.

Many studies have tested few-shot learning machine vision systems on real-world tasks. Researchers use benchmarks like Caltech-UCSD Birds-200-2011 for fine-grained image classification, ISIC for skin lesion analysis, and ChestX-ray8 for medical imaging. Methods such as Matching Networks, MAML, and manifold mixup have shown strong results on these benchmarks. The field has grown quickly, with over 30 theoretical and 20 applied studies confirming the value of these systems.

| Study / Benchmark | Description |
|---|---|
| Lake et al. (2015) | Human-level concept learning through probabilistic program induction, foundational for few-shot learning theory. |
| Vinyals et al. (2016) | Matching Networks for one-shot learning, a seminal method in few-shot machine vision. |
| Finn et al. (2017) | Model-Agnostic Meta-Learning (MAML), enabling fast adaptation in few-shot scenarios. |
| Meta-Dataset (Triantafillou et al., 2020) | A comprehensive dataset combining multiple datasets to benchmark few-shot learning methods. |
| Caltech-UCSD Birds-200-2011 | A fine-grained image classification dataset used for few-shot learning evaluation. |
| ChestX-ray8 | Medical imaging dataset used to test few-shot learning in healthcare applications. |
| ISIC Skin Lesion Analysis | Benchmark for few-shot learning in dermatology image classification. |
| Incremental Few-Shot Instance Segmentation (Ganea et al., 2021) | Demonstrates few-shot learning applied to object detection and segmentation tasks. |

Few-shot learning machine vision systems have shown impressive improvements in accuracy and speed. For example, in image recognition, cross-domain transfer with domain adaptation led to a reported 27% increase in accuracy. In medical imaging diagnosis, fine-tuning with progressive layer unfreezing improved accuracy by a reported 30%. These results suggest that few-shot learning can outperform older methods, especially when data is scarce.

Episode Structure

Few-shot learning uses a special training method called episodic training. Each episode acts like a mini-task. The system receives a small set of labeled examples and a set of new, unlabeled examples to test its learning. This setup mimics real-world situations where new categories appear, and only a few samples are available.

Researchers design episodes with two main parts: the support set and the query set. During meta-training, the support set helps the model learn, while the query set checks if the model can apply what it learned. In meta-testing, the model uses a new support set to adapt to new categories and a new query set to test its final performance.

- Each episode helps the model practice learning from few examples.

- The model learns to generalize, not just memorize.

- This approach reduces overfitting and helps the system adapt to new tasks quickly.
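
The episode structure described above can be sketched as a small sampler. This is a minimal illustration using only the Python standard library, not the procedure of any particular paper: `sample_episode` and its parameters are hypothetical names, and the toy label list stands in for a real dataset. The query labels are returned so the episode can be scored, but a model would not see them while predicting.

```python
import random
from collections import defaultdict


def sample_episode(labels, n_way, k_shot, n_query, rng):
    """Return (support, query) lists of (example_index, episode_label) pairs.

    `labels[i]` is the class of example i in the full dataset.
    """
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    # Only classes with enough examples for both sets are eligible.
    eligible = sorted(c for c, idxs in by_class.items() if len(idxs) >= k_shot + n_query)
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query examples")

    support, query = [], []
    # Classes are relabeled 0..n_way-1 inside the episode.
    for episode_label, cls in enumerate(rng.sample(eligible, n_way)):
        chosen = rng.sample(by_class[cls], k_shot + n_query)
        support += [(i, episode_label) for i in chosen[:k_shot]]
        query += [(i, episode_label) for i in chosen[k_shot:]]
    return support, query


# Toy dataset: 10 classes with 20 examples each.
toy_labels = [c for c in range(10) for _ in range(20)]
support, query = sample_episode(toy_labels, n_way=5, k_shot=1, n_query=3, rng=random.Random(0))
print(len(support), len(query))  # 5 15
```

Because the support and query examples of a class are drawn without overlap, the query score measures generalization within the episode rather than recall of the support images.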

Studies show that this episode structure helps few-shot learning machine vision systems avoid forgetting old knowledge and overfitting to small datasets. The system becomes more robust and flexible, which is important for computer vision tasks in changing environments.

Support and Query Sets

Support and query sets form the backbone of few-shot learning. The support set contains a few labeled examples from each category. The query set has new, unlabeled examples that the model must classify using what it learned from the support set.

| Concept | Description |
|---|---|
| Support Set | A small number of labeled examples per class, acting as a mini-training set from which the model learns key features. |
| Query Set | Examples whose labels are hidden from the model, used to test its ability to classify based on the support set. |
| Benchmark Example | ‘5-way 1-shot’ task: model classifies among 5 classes with only 1 labeled example per class in the support set. |
| Purpose | Standard evaluation method to benchmark and compare few-shot learning algorithms under controlled conditions. |

Meta-learning algorithms like MAML and Prototypical Networks use this setup to train models that can quickly adapt to new classes. For example, in a ‘3-way 1-shot’ task, the model learns from one example in each of three classes and then predicts the class of new images. This method has improved generalization and adaptability in many studies.

A recent study found that reducing the difference between the support and query sets improves how well the model learns. When the data in both sets is similar, the model performs better on new tasks. Researchers have even created new normalization methods based on this idea, making few-shot learning machine vision systems even stronger.

Tip: Support and query sets help few-shot learning classifiers learn quickly and adapt to new categories, even when only a few examples are available.

Approaches in Few-shot Learning

Meta-learning

Meta-learning teaches models how to learn new tasks quickly. In few-shot learning, meta-learning helps a system adapt to new categories with only a few examples. Researchers have developed several meta-learning strategies. Early work used LSTM-based optimizers to update model weights, which added complexity but showed new ways to train models. Later, the Model-Agnostic Meta-Learning (MAML) framework simplified this process. MAML learns a shared initialization from which the model can adapt to a new task with just a few gradient updates. It adds no extra learned parameters and works with standard gradient-based optimizers such as Adam. Because it only assumes a model trained by gradient descent, MAML applies across many models and tasks. Studies show that MAML performs well in both computer vision and natural language processing. The SNAIL algorithm also appeared as a "black-box" meta-learning method, but MAML remains popular because of its wide use and strong results.

Meta-learning gives few-shot learning systems the ability to generalize from small datasets, making them more useful in real-world computer vision tasks.
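
To make the MAML idea concrete, here is a toy sketch in plain Python. It is an illustration under strong assumptions, not a vision model: the "model" is a single weight `w` in `y_hat = w * x`, the task family (`sample_task`, slopes drawn from [1, 3]) is hypothetical, and the learning rates are arbitrary. The model is small enough that the gradient through the inner adaptation step can be written out exactly by hand.

```python
import random


def mse_grad(w, xs, ys):
    """Gradient of mean squared error for the model y_hat = w * x."""
    return 2.0 * sum(x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def mse_hessian(xs):
    """Second derivative of that loss with respect to w (constant in w)."""
    return 2.0 * sum(x * x for x in xs) / len(xs)


def adapt(w, xs, ys, inner_lr):
    """Inner loop: one gradient step on a task's support set."""
    return w - inner_lr * mse_grad(w, xs, ys)


def maml_meta_gradient(w, support, query, inner_lr):
    """Exact gradient of the post-adaptation query loss with respect to w."""
    xs_s, ys_s = support
    xs_q, ys_q = query
    w_adapted = adapt(w, xs_s, ys_s, inner_lr)
    # Chain rule through the inner step: d(w_adapted)/dw = 1 - inner_lr * Hessian.
    return mse_grad(w_adapted, xs_q, ys_q) * (1.0 - inner_lr * mse_hessian(xs_s))


def sample_task(rng, k_shot=3, n_query=5):
    """Hypothetical task family: y = slope * x with slope drawn from [1, 3]."""
    slope = rng.uniform(1.0, 3.0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(k_shot + n_query)]
    ys = [slope * x for x in xs]
    return (xs[:k_shot], ys[:k_shot]), (xs[k_shot:], ys[k_shot:])


rng = random.Random(0)
w = -5.0  # deliberately poor starting point
for _ in range(500):
    tasks = [sample_task(rng) for _ in range(4)]
    grads = [maml_meta_gradient(w, s, q, inner_lr=0.1) for s, q in tasks]
    w -= 0.05 * sum(grads) / len(grads)  # outer loop: update the shared initialization

print(round(w, 2))
```

The learned `w` should settle near the middle of the slope range, which is the starting point from which one support-set step gets closest to any task in the family. Real MAML implementations rely on automatic differentiation for the second-order term that is computed analytically here.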

Transfer Learning

Transfer learning uses knowledge from one task to help solve another. In few-shot learning, transfer learning often starts with a model trained on a large dataset, such as ImageNet. The model then fine-tunes its knowledge using only a few new examples. This approach saves time and improves accuracy, especially when labeled data is limited.
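
The simplest form of this recipe keeps the pretrained feature extractor frozen and trains only a small classification head on the few new examples. The sketch below shows that pattern in plain Python. Everything in it is a stand-in: `frozen_backbone` is a hand-written function playing the role of a pretrained network, the images are tiny nested lists, and the head is an ordinary logistic regression. Fine-tuning deeper layers, as many real pipelines do, is not shown.

```python
import math


def frozen_backbone(image):
    """Stand-in for a pretrained feature extractor; it is never updated.

    Hypothetical features: mean brightness of the left and right image halves.
    """
    half = len(image[0]) // 2
    left = sum(sum(row[:half]) for row in image) / (len(image) * half)
    right = sum(sum(row[half:]) for row in image) / (len(image) * (len(image[0]) - half))
    return [left, right]


def train_head(features, labels, lr=0.5, epochs=200):
    """Fit a logistic-regression head on frozen features with gradient descent."""
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            logit = sum(w * v for w, v in zip(weights, x)) + bias
            p = 1.0 / (1.0 + math.exp(-logit))
            err = p - y  # gradient of the log loss with respect to the logit
            weights = [w - lr * err * v for w, v in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def predict(image, weights, bias):
    """Classify an image using the frozen backbone and the trained head."""
    x = frozen_backbone(image)
    return 1 if sum(w * v for w, v in zip(weights, x)) + bias > 0 else 0


# Two labeled 2x4 "images" per class: class 0 is bright on the left, class 1 on the right.
train_images = [
    [[1, 1, 0, 0], [1, 1, 0, 0]],
    [[1, 0.8, 0.1, 0], [0.9, 1, 0, 0.2]],
    [[0, 0, 1, 1], [0, 0, 1, 1]],
    [[0.1, 0, 0.9, 1], [0, 0.2, 1, 0.8]],
]
train_labels = [0, 0, 1, 1]

head_w, head_b = train_head([frozen_backbone(im) for im in train_images], train_labels)
print(predict([[0.9, 0.9, 0.1, 0.1], [1, 1, 0, 0]], head_w, head_b))
```

Only three numbers are trained here (two weights and a bias), which is why four labeled examples are enough. The same reasoning explains why a frozen backbone is a common first choice when labeled data is scarce.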

A study on transcriptome data showed that transfer learning reached over 94% accuracy with only 15 samples per class. This result is close to the 95.6% accuracy of models trained on much larger datasets. Transfer learning also leads to faster training and better precision and recall compared to training from scratch.

| Metric | Transfer Learning (ImageNet weights) | Training from Scratch |
|---|---|---|
| Initial Training Loss | 0.56 ± 0.02 | Higher (not specified) |
| Initial Validation Loss | 0.52 ± 0.3 | Higher (not specified) |
| Average Epochs to Converge | 26 ± 3 | 50 ± 12 |
| Precision (class-weighted) | Improved over scratch model | Lower |
| Recall (class-weighted) | Improved over scratch model | Lower |

Transfer learning also supports few-shot class-incremental learning. The TLCE method, which combines robust hyperdimensional networks and transferable knowledge networks, improves accuracy for both new and old classes. This method does not need extra training for new classes and outperforms other few-shot learning approaches. In medical imaging, transfer learning helps segment new structures with only a few labeled images, showing strong results even when the data comes from different sources.

Data Augmentation

Data augmentation increases the variety of training data by creating new samples from existing ones. In few-shot learning, this technique helps models avoid overfitting and improves their ability to generalize. Recent methods use reinforcement learning to find and transform samples that are likely to cause overfitting. For example, the ReAugment method adapts time series data to improve forecasting accuracy in few-shot scenarios.
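
As a baseline for comparison with the learned methods mentioned above, classic augmentation simply applies label-preserving transforms to each support image. The sketch below is a minimal plain-Python version, assuming grayscale images stored as nested lists with pixel values in [0, 1]. The function names and the specific transforms (flip, one-pixel shift, small noise) are illustrative choices, not taken from ReAugment or any other cited method.

```python
import random


def horizontal_flip(image):
    """Mirror each row of a 2D image stored as a list of lists."""
    return [row[::-1] for row in image]


def shift_right(image, pixels, fill=0.0):
    """Shift the image right by `pixels` columns, padding the left edge with `fill`."""
    width = len(image[0])
    pixels = min(pixels, width)
    return [[fill] * pixels + row[: width - pixels] for row in image]


def add_noise(image, scale, rng):
    """Add small uniform noise to every pixel, clipped to the [0, 1] range."""
    return [[min(1.0, max(0.0, p + rng.uniform(-scale, scale))) for p in row] for row in image]


def augment_support_set(support, copies, rng):
    """Return the original (image, label) pairs plus `copies` variants of each."""
    augmented = list(support)
    for image, label in support:
        for _ in range(copies):
            variant = image
            if rng.random() < 0.5:
                variant = horizontal_flip(variant)
            variant = shift_right(variant, rng.randint(0, 1))
            variant = add_noise(variant, 0.05, rng)
            augmented.append((variant, label))  # the label is unchanged
    return augmented


rng = random.Random(0)
support = [([[0.0, 1.0], [1.0, 0.0]], "scratch"), ([[1.0, 1.0], [0.0, 0.0]], "dent")]
augmented = augment_support_set(support, copies=3, rng=rng)
print(len(augmented))  # 2 originals + 2 * 3 variants = 8
```

Whether a transform preserves the label depends on the task. A horizontal flip is harmless for most natural objects but changes the meaning of text or of orientation-sensitive parts, so the transform list should be chosen per dataset.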

Researchers have also developed new ways to match synthetic images with real data. By using inversion and distribution matching, they make synthetic data more useful for few-shot classification. Studies show that these methods improve accuracy and help models learn better from limited data.

- EEG-AUGMENTATION-BENCHMARK-2022 compares data augmentation techniques for EEG data in few-shot learning.

- Variational Autoencoders generate synthetic EEG signals to improve classification.

- TorchEEG combines modeling, augmentation, and transfer learning for EEG research.

- The Reptile algorithm supports few-shot EEG classification.

- Contrastive learning enhances feature extraction for few-shot image tasks.

Hybrid data augmentation methods now use prompt engineering and retrieval modules to make datasets more diverse. These techniques help few-shot learning systems become more robust and accurate.

A study by Paci et al. tested new augmentation methods using Discrete Wavelet Transform and Constant-Q Gabor Transform. These methods, when used with pretrained ResNet50 networks, achieved top accuracy scores on several benchmark datasets. Ensemble models that combine different augmentation techniques reached state-of-the-art results, proving that data augmentation is key for few-shot learning in computer vision.

Prototypical Networks

Prototypical networks offer a simple yet powerful approach for few-shot learning. These networks create a "prototype" for each class by averaging the features of the support set examples. When the model sees a new query image, it compares its features to each prototype and assigns the label of the closest one.

This method works well because it reduces the need for complex training and adapts quickly to new classes. Prototypical networks have become popular in computer vision because they handle new categories with very little data. They also support fast inference, which is important for real-time applications.

Prototypical networks help few-shot learning systems classify new objects with high accuracy, even when only a few labeled examples are available.
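
The classification rule described above (average the support features per class, then pick the nearest prototype) fits in a few lines. The sketch below assumes the feature vectors have already been produced by some embedding network, which is not shown; the two-dimensional features and the class names are made up for illustration. It uses Euclidean distance, which selects the same nearest prototype as squared Euclidean distance.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of equally sized feature vectors."""
    return [sum(values) / len(vectors) for values in zip(*vectors)]


def build_prototypes(support):
    """Map each class label to the mean of its support-set feature vectors."""
    grouped = {}
    for features, label in support:
        grouped.setdefault(label, []).append(features)
    return {label: mean_vector(vectors) for label, vectors in grouped.items()}


def classify(query_features, prototypes):
    """Return the label whose prototype is closest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(query_features, prototypes[label]))


# A 2-way 2-shot episode with made-up 2D features.
support = [
    ([0.0, 0.0], "cat"),
    ([0.2, 0.0], "cat"),
    ([1.0, 1.0], "dog"),
    ([1.0, 0.8], "dog"),
]
prototypes = build_prototypes(support)
print(classify([0.3, 0.1], prototypes))  # cat
```

Adding a new class at inference time only requires computing one more mean vector, with no gradient updates, which is where the fast adaptation and fast inference come from.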

In 2025, the four approaches covered here (meta-learning, transfer learning, data augmentation, and prototypical networks) form the backbone of few-shot learning in computer vision. Researchers continue to improve these methods, making machine learning systems more flexible and efficient in handling limited data.

Few-shot Classification and Object Detection

Few-shot Classification

Few-shot learning allows computers to perform image classification with only a few labeled examples. In 2025, this approach helps systems recognize new categories quickly, even when data is scarce. Researchers have developed models that use prompt-based meta-learning to improve few-shot classification, and these models show strong accuracy and adapt well to new tasks. The idea is not limited to images: one prompt-based meta-learning model achieved state-of-the-art results on four text classification datasets and also worked well in real-world situations, such as event detection in social networks. The model used attention-guided prototypes to handle new classes with very little labeled data, which helps when annotation is costly and when data shifts over time.

Performance metrics show the strength of few-shot learning in image classification. On the MiniImageNet dataset, a 1-shot setting reached 66.57% accuracy, while a 5-shot setting achieved 84.42%. On the FC100 dataset, the 1-shot setting scored 44.78%, and the 5-shot setting reached 66.27%. These results highlight the ability of few-shot classification to generalize and adapt across different domains, including medical imaging.

| Dataset | Setting | Accuracy (%) | Notes |
|---|---|---|---|
| MiniImageNet | 1-shot | 66.57 | Effective learning from limited data. |
| MiniImageNet | 5-shot | 84.42 | Strong few-shot learning capabilities. |
| FC100 | 1-shot | 44.78 | Outperforms previous methods. |
| FC100 | 5-shot | 66.27 | Robust and transferable representations. |
| Cross-domain | 5-way 5-shot | Matches baselines | Adapts to medical imaging datasets. |
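
Few-shot accuracy figures are commonly reported as the mean over many sampled test episodes, together with a 95% confidence interval. The source does not state how the numbers above were computed, so the sketch below is only an illustration of that common convention. `summarize_episodes` is a hypothetical helper, the interval uses a normal approximation, and the per-episode accuracies are made-up values.

```python
import math
import statistics


def summarize_episodes(accuracies):
    """Return (mean, half_width) of a normal-approximation 95% confidence interval."""
    mean = statistics.fmean(accuracies)
    if len(accuracies) < 2:
        return mean, float("nan")  # the spread is undefined for a single episode
    std_err = statistics.stdev(accuracies) / math.sqrt(len(accuracies))
    return mean, 1.96 * std_err


episode_accuracies = [0.6, 0.8, 0.7, 0.9, 0.5]  # made-up values for illustration
mean_acc, half_width = summarize_episodes(episode_accuracies)
print(f"{100 * mean_acc:.2f} ± {100 * half_width:.2f}")
```

With only a handful of episodes the interval is wide, which is why evaluations typically average over hundreds or thousands of episodes before comparing methods.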

Few-shot Object Detection

Few-shot object detection helps systems find and classify objects in images using only a few examples per class. In 2025, this method has become essential for tasks where collecting large datasets is difficult. Researchers have created models like FM-FSOD, which combines foundation models and large language models. FM-FSOD uses a vision-only contrastive pre-trained backbone and contextualized learning to classify proposals. This model achieved state-of-the-art results on several few-shot object detection benchmarks.

A global domain adaptation strategy also improved few-shot object detection by enhancing gradient propagation and classification. Experiments on PASCAL VOC and MS COCO datasets showed significant gains. For example, the method increased mean average precision (mAP) by up to 5% over previous approaches. Training with only 1 to 10 shots per class led to steady improvements, reaching top performance after about 2000 iterations for 1-shot training.
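
Since the results above are stated in mean average precision, a small sketch of the metric may help. This is a simplified, non-interpolated version in plain Python. It assumes each detection has already been marked as a true or false positive by matching it to a ground-truth box, a step that is not shown. The PASCAL VOC and MS COCO evaluation protocols use interpolated variants with specific overlap thresholds, so this code will not reproduce their official numbers.

```python
def average_precision(detections, num_ground_truth):
    """Non-interpolated AP for one class.

    `detections` is a list of (confidence, is_true_positive) pairs.
    """
    if num_ground_truth == 0:
        return 0.0
    true_positives = 0
    precision_sum = 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        if is_tp:
            true_positives += 1
            precision_sum += true_positives / rank  # precision at this recall step
    return precision_sum / num_ground_truth


def mean_average_precision(per_class):
    """Average AP over classes; `per_class` maps name -> (detections, num_ground_truth)."""
    aps = [average_precision(d, n) for d, n in per_class.values()]
    return sum(aps) / len(aps)


# Made-up detections for two novel classes, each with two ground-truth objects.
per_class = {
    "tennis court": ([(0.9, True), (0.8, False), (0.7, True)], 2),
    "baseball field": ([(0.6, True)], 2),
}
print(round(mean_average_precision(per_class), 4))
```

In this toy case the first class scores (1 + 2/3) / 2 and the second scores 1/2 because one of its two objects is never detected, so missed objects lower AP just as false positives do.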

Recent research divides few-shot object detection into two main approaches:

- Episode-task-based methods use episodic tasks to help models adapt quickly.

- Single-task-based methods transfer knowledge from base classes and fine-tune on new classes.

These strategies allow systems to maintain or improve detection accuracy, even with limited data. In smart farming, few-shot object detection achieved over 90% accuracy in plant disease detection and 96.2% in pest detection. Animal detection accuracy improved from 55.61% (1-shot) to 71.03% (5-shot). These results show that few-shot object detection boosts performance on small datasets and supports real-world applications.

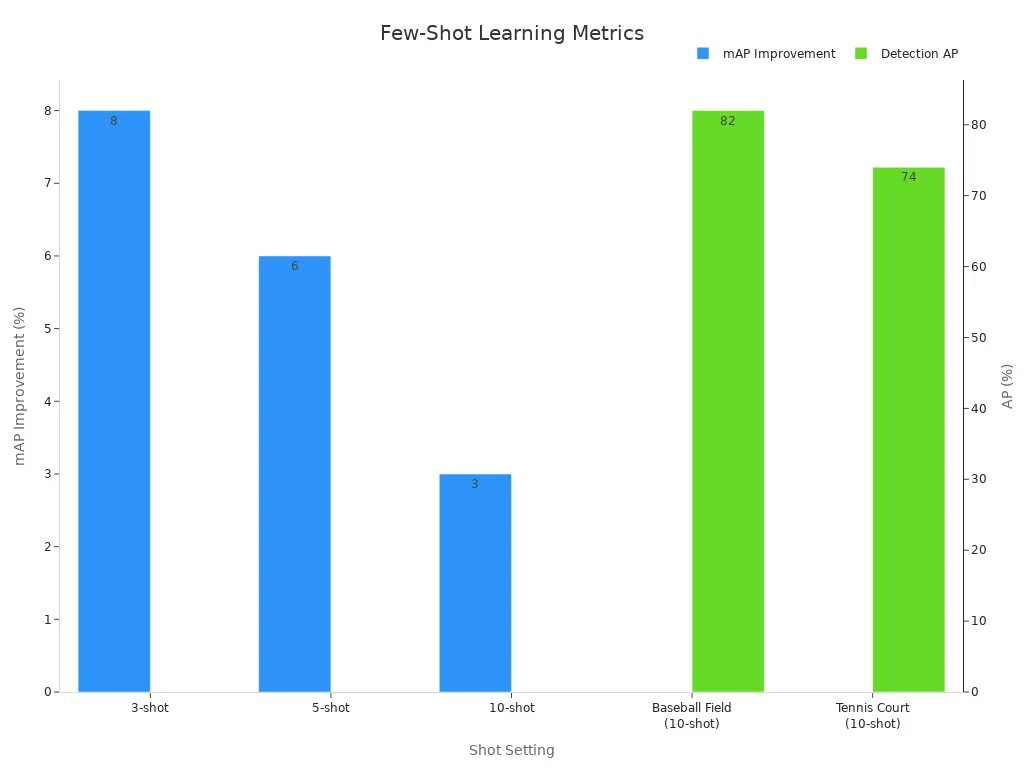

| Setting | GC-IKDH Result | Notes |
|---|---|---|
| 3-shot | +8% mAP over TSF-RGR | Large improvement in low-shot settings. |
| 5-shot | +6% mAP over TSF-RGR | Superior detection with few samples. |
| 10-shot | +3% mAP over TSF-RGR | Maintains advantage as samples grow. |
| Baseball Field (10-shot) | 82% AP | High accuracy on specific classes. |
| Tennis Court (10-shot) | 74% AP | Strong results on challenging objects. |

Few-shot object detection in 2025 enables rapid adaptation to new categories, making it valuable for image recognition, image classification, and object detection tasks in dynamic environments.

Applications in 2025

Healthcare

Few-shot learning machine vision systems have changed healthcare in 2025. Doctors use these systems to analyze medical images, such as X-rays and skin lesions, even when only a few labeled examples exist. Hospitals can now detect rare diseases faster because the models learn from small datasets. This helps doctors make better decisions and improves patient care. Machine vision also supports early diagnosis by finding patterns in images that humans might miss. As a result, healthcare providers can offer more accurate and timely treatments.

Robotics

Robotics has seen rapid progress with few-shot learning. Robots now learn new tasks with very little training data. Frameworks built on large language models, such as SayCan and Instruct2Act, help robots understand commands and perform complex actions. These frameworks use both vision and language to guide robots. The REFLECT framework lets robots explain and fix their mistakes, which increases success rates. Vision-language models and Socratic Models allow robots to handle new tasks without extra training. MetaMorph and Cap frameworks help robots adapt to different shapes and jobs. These advances mean robots can work in more places, from homes to factories, with less setup time.

Robots in 2025 use few-shot learning to improve perception, decision-making, and control. They adapt quickly to new environments and tasks, making them more useful in daily life.

E-commerce

E-commerce companies use few-shot learning to improve product search and recommendation. These systems recognize new products with only a few images. Shoppers find what they want faster because the models handle new categories quickly. Retailers save time and money since they do not need large labeled datasets. Few-shot learning also helps with image classification, making it easier to organize and display products online.

Industrial Automation

Industrial automation benefits from machine vision powered by few-shot learning. Factories use these systems for quality control and defect detection, even when only a few examples of faulty products are available. The demand for adaptable AI models has grown as industries seek smarter automation. Edge AI allows real-time processing, which reduces delays and keeps data private. Companies in manufacturing, logistics, and automotive sectors now rely on these systems to boost efficiency and safety.

Future Trends

Innovations

Few-shot learning machine vision systems continue to evolve in 2025. Researchers focus on making these systems more efficient and adaptable. The Mosaic Data Science blog points out that collecting large labeled datasets remains costly and difficult. Few-shot learning helps by allowing models to learn from only a handful of examples. This reduces barriers for many industries. New models, such as the gene selection forecasting (GSF) approach, bring a fresh perspective. GSF breaks down data into smaller parts, selects the best patterns, and combines them to predict future trends. This method does not depend on large datasets. Instead, it finds patterns within the data itself. The gene evolution tree (GET) model improves both accuracy and interpretability. These advances show a shift toward discovering how data changes over time, rather than just matching inputs to outputs. Such innovations inspire new ways to build flexible and powerful machine vision systems.

Challenges

Despite progress, few-shot learning faces several challenges. Data scarcity still limits model performance in some fields. Inference latency can slow down real-time applications. High computational costs and API pricing make it hard for smaller companies to use advanced models. Model biases can affect fairness and accuracy. Researchers work to solve these problems by developing better algorithms and more efficient training methods. They also look for ways to reduce bias and improve reliability. These challenges drive ongoing research and keep the field moving forward.

Opportunities

Few-shot learning opens many doors for business and research. Companies and researchers see strong results and new possibilities:

- GPT-4 achieves 78% accuracy on the FinQA dataset and 76% on ConvFinQA, showing strong performance in financial reasoning.

- These results are reported to surpass average human scores, which points to the value of few-shot learning in real-world tasks.

- Opportunities include interactive chatbots, payment assistance, financial education, and trading advice.

- Application areas cover customer service, text summarization, auto-filling forms, risk management, investment trading, and document processing.

- Ongoing research aims to address data scarcity, reduce latency, lower costs, and minimize bias.

Few-shot learning in machine vision continues to create new opportunities for smarter, faster, and more accessible AI solutions across many industries.

Few-shot learning machine vision systems in 2025 show strong progress. These systems use task-specific feature embeddings and flexible similarity measures, which help them avoid overfitting and perform well on benchmark datasets. Researchers highlight the value of fine-grained image classification in real-world tasks, such as healthcare and wildlife monitoring. Recent breakthroughs include self-supervised and hybrid methods that boost accuracy. However, challenges remain, especially with out-of-domain tasks. The table below shows both achievements and areas for growth. Future research will focus on robust optimization and flexible models.

| Aspect | Description | Example |
|---|---|---|
| Progress | Improved accuracy on standard benchmarks | Simple CNAPS + FETI: 90.3% on mini-ImageNet |
| Challenge | Drop in accuracy on medical datasets | 30% lower on BCCD, HEp-2 |
| Future | New optimization algorithms | Monarch Butterfly, Harris Hawks |

Few-shot learning will continue to shape the future of AI, making machine vision smarter and more adaptable.

FAQ

What is few-shot learning in machine vision?

Few-shot learning in machine vision lets computers recognize new objects using only a few examples. This method helps machines learn quickly and adapt to new tasks without needing thousands of images.

How does few-shot learning help in real-world applications?

Few-shot learning helps doctors, engineers, and retailers solve problems when they have little data. For example, it can detect rare diseases, guide robots, or find product defects with just a few samples.

What are support and query sets?

A support set gives the model a few labeled examples to learn from. A query set tests the model with new, unlabeled examples. This setup helps the system practice learning and testing, just like in real life.

Can few-shot learning work with different types of data?

Yes. Few-shot learning works with images, text, and even sound. Researchers use it in healthcare, robotics, and e-commerce to solve many problems with limited data.

Why is few-shot learning important in 2025?

Few-shot learning matters in 2025 because it saves time and resources. Companies and researchers can build smart systems faster, even when they cannot collect large datasets. This makes AI more useful and flexible.
