The Turing Test in machine vision systems evaluates whether a computer can interpret images as a human does. The Visual Turing Test adapts this idea by comparing machine and human answers to image-based questions. Large datasets such as ImageNet, with over one million images, play a key role in this process. Major companies invest millions to improve these systems, aiming for high performance on the Visual Turing Test. For example, Facebook’s platform M describes images for the visually impaired.

Machines now reach about 80% accuracy in finding cars in natural scenes, while humans reach 93%. In some inspection tasks, machine recall and precision even outperform human results, and machines work over 80 times faster. Applying the Turing Test to a machine vision system highlights these differences and drives progress. The Turing Test remains a vital benchmark, showing where machine vision systems succeed and where they need improvement.

Key Takeaways

- The Turing Test checks if machines can think and understand images like humans by comparing their answers to human responses.

- The Visual Turing Test uses simple yes/no questions about images to measure how well AI systems see and interpret visual content.

- AI systems must meet key criteria like accuracy, consistency, human-like answers, and the ability to admit when unsure to pass the Turing Test.

- Current AI faces challenges like bias, poor handling of new situations, and difficulty understanding complex scenes, which slow progress.

- Real-world tests show AI still struggles with long conversations and fairness, but the Turing Test guides improvements in smarter, fairer systems.

Turing Test Basics

Turing’s Concept

The Turing Test asks whether a machine can act like a human. Alan Turing proposed the test in 1950 as a way to explore whether machines can think. In the test, a person converses with both a machine and a human without knowing which is which. If the machine can fool the person into thinking it is human, it passes. Turing reasoned that a machine able to answer questions in natural language as a person would could be said to show real intelligence. The test does not focus on how the machine works inside. Instead, it looks at results, using simple questions and answers that make it easy to compare machines and humans. Many experts use the Turing Test to measure progress in AI and to check whether AI can match human skills. It also guides how people build new artificial systems. Turing’s concept remains important for both natural language processing and machine vision.

Artificial Intelligence Goals

Artificial intelligence aims to create machines that solve problems, learn, and understand the world. The Turing Test sets a clear goal for AI: act like a human in real tasks. In machine vision, AI tries to see and understand images as people do. Turing’s ideas help set targets for artificial systems. To measure success, experts use technical metrics like precision, recall, and accuracy. These show how well AI models predict or classify images. However, these numbers do not always show the full value of AI. Business metrics such as revenue, profit, cost savings, and customer acquisition also matter. They show whether artificial intelligence helps a company reach its goals.
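As a rough illustration of the technical metrics named above, the sketch below computes accuracy, precision, and recall for a binary task such as "is there a car in this image?". The function name `classification_metrics` and the sample data are hypothetical and only serve the example.

```python
def classification_metrics(predictions, labels):
    """Return accuracy, precision, and recall for binary predictions."""
    pairs = list(zip(predictions, labels))
    tp = sum(1 for p, y in pairs if p and y)          # true positives
    fp = sum(1 for p, y in pairs if p and not y)      # false positives
    fn = sum(1 for p, y in pairs if not p and y)      # false negatives
    tn = sum(1 for p, y in pairs if not p and not y)  # true negatives
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical data: did the model report a car in each of six images?
predicted = [True, True, False, True, False, False]
actual = [True, False, False, True, True, False]
metrics = classification_metrics(predicted, actual)
print(metrics)
```

Precision answers "how many reported cars were real?", while recall answers "how many real cars were found?", which is why the two can differ from accuracy on unbalanced data.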

Experts use more than just accuracy to judge AI. They look at:

- Fairness and bias testing metrics to make sure AI treats all groups equally.

- Statistical hypothesis testing to check if improvements are real and not random.

- Effect size measures to see if changes make a big difference.

- Guardrail metrics to watch for problems like longer inspection times.

- Psychometric validation to ensure results stay reliable over time.
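Two items in the list above, hypothesis testing and effect size, can be sketched with the standard library alone. The example assumes two models are compared by their number of correct answers on independent test sets; it uses a two-proportion z-test and Cohen's h as one possible choice among many. The counts are hypothetical.

```python
import math


def two_proportion_z_test(correct_a, n_a, correct_b, n_b):
    """Two-sided z-test for a difference between two accuracy rates."""
    p_a = correct_a / n_a
    p_b = correct_b / n_b
    pooled = (correct_a + correct_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # identical, degenerate rates: no evidence of a difference
    z = (p_a - p_b) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def cohens_h(p_a, p_b):
    """Effect size for the difference between two proportions."""
    return 2 * math.asin(math.sqrt(p_a)) - 2 * math.asin(math.sqrt(p_b))


# Hypothetical counts: model A gets 850 of 1000 right, model B gets 800 of 1000.
z, p_value = two_proportion_z_test(850, 1000, 800, 1000)
h = cohens_h(0.85, 0.80)
print(f"z={z:.2f}, p={p_value:.4f}, h={h:.3f}")
```

A small p-value suggests the gap is unlikely to be random, while the effect size indicates whether the gap is large enough to matter in practice.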

The Turing Test pushes AI to improve. Turing’s vision helps guide artificial intelligence toward smarter, fairer, and more useful systems.

Visual Turing Test

Binary Question Method

The Visual Turing Test adapts the Turing Test for images and videos. In this method, a system receives a set of questions about visual content. These questions often use a binary format, such as "Is there a dog in the picture?" The system answers "yes," "no," or "unable to respond." This approach makes it easy to compare answers from humans and machines and to check whether AI can match human performance in understanding images.

Researchers use large datasets to test the binary question method. They ask many questions about objects, actions, and scenes. In one evaluation, an independent group assessed 1,160 queries. The results show how well AI systems perform compared to humans. The table below shows some key findings:

| Metric | Details / Results |
|---|---|
| Total queries | 1,160 queries evaluated by an independent third party |
| Object definition queries | 243 queries, with 81% successfully detected |
| Non-definition queries | Answered in binary (true/false) or "unable to respond" |
| Accuracy calculation | Based on correctly answered non-definition queries only (object definition queries excluded) |
| Accuracy by predicate count | Accuracy decreases as number of predicates in query increases (1 to 3 predicates) |
| Accuracy by category | Good performance on detection, parts, actions, behaviors; challenges remain in spatial reasoning and human-object interactions |
| Response rates | Range from 52.2% to 79.5% across different video datasets |
| Accuracy rates | Range from 58.6% to 78.5% across different video datasets |

These results show that the binary question method gives clear, measurable data that can be used to track progress in AI. When accuracy drops as questions get more complex, researchers see where AI needs improvement. This method helps in evaluating machine intelligence by showing strengths and weaknesses in visual understanding.

Note: The binary question method allows for fair comparison between human and machine answers. It also highlights areas where AI still struggles, such as spatial reasoning and understanding human-object interactions.
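The response rate and accuracy figures used in the binary question method can be sketched as follows. This is a minimal example that assumes accuracy is computed only over the queries the system chose to answer; the source does not spell out the exact denominator, and the function name and sample answers are hypothetical.

```python
def score_binary_answers(answers, ground_truth):
    """Score "yes"/"no"/"unable" answers against "yes"/"no" ground truth.

    Returns (response_rate, accuracy). Accuracy is measured over answered
    queries only, which is an assumption made for this sketch.
    """
    answered = [(a, t) for a, t in zip(answers, ground_truth) if a != "unable"]
    response_rate = len(answered) / len(answers) if answers else 0.0
    correct = sum(1 for a, t in answered if a == t)
    accuracy = correct / len(answered) if answered else 0.0
    return response_rate, accuracy


# Hypothetical system answers to five binary queries about one video.
system_answers = ["yes", "no", "unable", "yes", "no"]
truth = ["yes", "yes", "no", "yes", "no"]
response_rate, accuracy = score_binary_answers(system_answers, truth)
print(response_rate, accuracy)
```

Reporting both numbers together matters: a system can raise its accuracy simply by declining hard questions, and the response rate makes that trade-off visible.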

Human-Like Understanding

The Turing Test measures more than just right or wrong answers. It checks whether AI can understand images like a person. The Visual Turing Test uses different types of questions to test this skill. Some questions ask about objects, while others focus on actions, intentions, or relationships in a scene. The goal is to see whether AI can show human-like understanding.

Researchers use several benchmarks to measure visual intelligence in artificial systems. They look at how well AI matches human perception. A vision-science-based checklist helps compare artificial networks to the way the human eye and brain work. This checklist checks spatio-temporal and color features, just like the human visual system. It helps scientists see whether AI models process images in a human-like way.

The table below shows how the Visual Turing Test benchmarks visual intelligence:

| Benchmark Aspect | Description |
|---|---|
| Question-Answering (QA) Tasks | Use of single-turn QA tasks with multiple-choice and open-ended questions to assess video comprehension. |
| Question Types | Multiple-choice questions preferred over Boolean to reduce guessing; open-ended answers emulate human replies. |
| Cognitive Components Evaluated | Visual understanding, intention/context comprehension, commonsense reasoning included beyond linguistic ability. |
| Accuracy Metrics | AI accuracy measured on questions of varying difficulty levels. |
| Human-Likeness Evaluation | Judged by multiple human evaluators comparing AI answers to those of humans of various ages. |
| Story Element Analysis | Questions designed with intentions based on story elements to evaluate video understanding intelligence. |
| Comparison with Human Responses | Direct comparison with answers from humans of different ages to assess human-likeness and intelligence level. |
| Multi-Interrogator Evaluation | Multiple human interrogators used to reduce subjectivity in judging AI performance. |
| Objective Over Subjective Measures | Emphasis on quantitative measures rather than subjective judgment to overcome limitations of original Turing Test. |

The Turing Test uses these benchmarks to push AI toward more human-like performance. By comparing AI answers to those from people of different ages, researchers can see how close machine intelligence comes to human intelligence. Using multiple judges also makes the results fairer and more objective.
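One simple way to combine verdicts from several judges is a majority vote per answer. The sketch below is an assumption about how such aggregation could work, not the procedure of any specific benchmark; the labels "human" and "machine" and the sample votes are hypothetical.

```python
from collections import Counter


def majority_verdict(votes):
    """Return the most common vote, or "tie" if there is no single winner."""
    ranked = Counter(votes).most_common()
    if not ranked:
        return "tie"
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return "tie"
    return ranked[0][0]


def human_likeness_rate(votes_per_answer):
    """Fraction of answers that a majority of judges labeled "human"."""
    verdicts = [majority_verdict(votes) for votes in votes_per_answer]
    return sum(1 for v in verdicts if v == "human") / len(verdicts)


# Hypothetical votes from judges on three machine-generated answers.
votes = [
    ["human", "human", "machine"],
    ["machine", "machine", "human"],
    ["human", "machine"],
]
rate = human_likeness_rate(votes)
print(rate)
```

Ties are counted as not human-like here, which is a conservative choice; an odd number of judges avoids ties when only two labels are used.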

Artificial intelligence systems improve when they learn from these tests. The Turing Test helps guide the design of better artificial networks. It also shows where AI needs more training or new methods. The Visual Turing Test remains a key tool for evaluating machine intelligence and tracking progress in artificial intelligence.

Turing Test Machine Vision System

Core Components

A machine vision system built for the Turing Test uses several important parts to work well. Each part helps the system see and understand images like a person. The Turing Test checks whether these parts work together to create real machine intelligence.

- Image Input Module: This part collects pictures or video frames. It sends the visual data to the next part of the system.

- Preprocessing Unit: The system cleans and prepares the images. It may change the size, remove noise, or adjust colors. This step helps the system focus on important details.

- Feature Extraction Engine: The system must find key features in each image. This engine looks for shapes, edges, colors, and patterns. It turns the image into numbers the computer can use.

- Object Detection and Recognition: The system uses models to find and name objects in the image. It may use deep learning or other AI methods. To pass the Turing Test, the system must spot objects as well as a person can.

- Reasoning and Decision Module: This part answers questions about the image. It uses logic and learned rules. The Turing Test checks whether the system can reason like a human.

- Output Interface: The system gives answers in a way people can understand. It may use text, speech, or signals. The Turing Test compares these answers to human answers.

Note: Each part of the system must work well for the whole system to pass the Turing Test. Weakness in one part can lower the system’s score.
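The way these components hand data to one another can be sketched as a chain of small functions. Everything below is a toy stand-in: the function names are hypothetical, the "detector" is a single brightness rule instead of a trained model, and images are plain nested lists of 0-255 grayscale values.

```python
def preprocess(image):
    """Preprocessing unit: scale 0-255 pixel values to the 0.0-1.0 range."""
    return [[pixel / 255 for pixel in row] for row in image]


def extract_features(image):
    """Feature extraction: reduce the image to a few numbers."""
    pixels = [pixel for row in image for pixel in row]
    return {"mean_brightness": sum(pixels) / len(pixels)}


def detect_objects(features):
    """Object detection: a toy rule standing in for a trained model."""
    return {"lamp"} if features["mean_brightness"] > 0.5 else set()


def answer_question(objects, target):
    """Reasoning module: answer 'Is there a <target> in the picture?'."""
    return "yes" if target in objects else "no"


def run_pipeline(image, target):
    """Run input -> preprocessing -> features -> detection -> answer."""
    features = extract_features(preprocess(image))
    objects = detect_objects(features)
    return answer_question(objects, target)


# Hypothetical 2x2 grayscale image with bright pixels.
bright_image = [[200, 220], [240, 180]]
lamp_answer = run_pipeline(bright_image, "lamp")
dog_answer = run_pipeline(bright_image, "dog")
print(lamp_answer, dog_answer)
```

Because each stage only consumes the previous stage's output, a weak stage degrades everything downstream, which mirrors the note above about one weak part lowering the overall score.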

Evaluation Criteria

The Turing Test uses clear rules to judge whether a machine vision system acts like a person. These rules help experts see whether the system shows true machine intelligence.

Key evaluation criteria include:

- Accuracy: How often the system gives the right answer. High accuracy means the system understands images well.

- Consistency: The system must give the same answer to the same question every time. The test checks for steady performance.

- Human-Likeness: The system’s answers are compared to those from people. If the answers match, the system shows human-like thinking.

- Response Time: How fast the system answers. Quick answers show strong AI, but the system must not rush and make mistakes.

- Handling Uncertainty: Sometimes, the image is not clear. The test checks whether the system can say “I don’t know” or “unable to respond” when needed.

- Generalization: The test asks new questions or shows new images. The system must still perform well. This shows the system can learn and adapt.

- Robustness: The test checks whether the system works with different image types, lighting, and backgrounds. A strong system does not fail when things change.

| Evaluation Criteria | What It Measures | Why It Matters for the Turing Test |
|---|---|---|
| Accuracy | Correct answers | Shows understanding |
| Consistency | Same answer each time | Proves reliability |
| Human-Likeness | Similarity to human answers | Tests human-like thinking |
| Response Time | Speed of answers | Shows efficiency |
| Handling Uncertainty | Admitting when unsure | Avoids false claims |
| Generalization | Success with new data | Shows learning ability |
| Robustness | Performance in tough conditions | Proves real-world strength |

Experts use these criteria to judge whether a machine vision system has reached human-level performance on the Turing Test. The test helps guide AI research and shows where systems need to improve.
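Two of these criteria, handling uncertainty and consistency, lend themselves to a short sketch. The example assumes the system exposes a probability that the answer is "yes"; the 0.75 threshold, the function names, and the sample questions are all hypothetical.

```python
def answer_with_abstention(p_yes, threshold=0.75):
    """Answer only when confident; otherwise admit uncertainty."""
    if p_yes >= threshold:
        return "yes"
    if p_yes <= 1 - threshold:
        return "no"
    return "unable to respond"


def consistency_rate(answer_fn, questions, repeats=3):
    """Fraction of questions that get the same answer on every repeat."""
    consistent = 0
    for question in questions:
        answers = {answer_fn(question) for _ in range(repeats)}
        if len(answers) == 1:
            consistent += 1
    return consistent / len(questions)


# Hypothetical confidence scores for three questions about one image.
scores = {"Is there a dog?": 0.9, "Is it raining?": 0.5, "Is there a car?": 0.1}
answers = {q: answer_with_abstention(p) for q, p in scores.items()}
rate = consistency_rate(lambda q: answer_with_abstention(scores[q]), list(scores))
print(answers, rate)
```

A deterministic system like this toy one is always consistent; the check becomes informative for systems with sampling or other sources of randomness in their answers.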

The Turing Test remains a key tool for building better AI. It pushes teams to create systems that see, think, and answer like people. When a machine vision system meets these criteria, it moves closer to true machine intelligence.

Challenges and Implications

Technical Barriers

The Turing Test for machine vision faces many technical barriers. Systems often show high scores but do not truly understand images. Dataset bias lets algorithms exploit patterns that do not reflect real-world understanding. Lack of robustness appears when systems fail on odd or tricky questions. Poor compositional generalization means systems struggle with new combinations, like a green dog or a square apple. Fragility and weak uncertainty handling cause systems to break or guess when unsure. Many systems lack true integration of vision and language, so they guess instead of understanding. The table below shows these barriers and how experts measure them:

| Technical Barrier / Challenge | Description | Performance Metrics / Evaluation Approaches |
|---|---|---|
| Dataset Bias | Algorithms exploit spurious correlations in datasets | Use metrics that account for bias; measure core abilities like counting, object detection |
| Lack of Robustness | Systems fail on semantically identical or absurd queries | Test for robustness to identical and ‘bad’ queries; evaluate on clear questions |
| Poor Compositional Generalization | Performance drops on new concept combinations | Evaluate on novel combinations not seen in training; measure compositional reasoning |
| Fragility and Uncertainty Handling | Systems break easily; lack ways to express uncertainty | Include prediction confidence; allow ‘I don’t know’ responses |
| Lack of Integrated Vision & Language | Systems succeed by shallow guessing, not true understanding | Test by withholding key info; assess across tasks for generalization and positive transfer |

Technical barriers slow progress toward passing the Turing Test. Overcoming these challenges will help artificial intelligence reach true machine intelligence.
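Evaluating compositional generalization usually means holding out specific combinations, such as "green dog", while keeping each part ("green", "dog") visible during training. The sketch below shows one way to build such a split; the sample records and field names are hypothetical.

```python
def compositional_split(samples, held_out_pairs):
    """Put samples whose (color, object) pair is held out into the test set."""
    train, test = [], []
    for sample in samples:
        pair = (sample["color"], sample["object"])
        (test if pair in held_out_pairs else train).append(sample)
    return train, test


# Hypothetical annotated samples.
samples = [
    {"color": "brown", "object": "dog"},
    {"color": "green", "object": "apple"},
    {"color": "green", "object": "dog"},
    {"color": "red", "object": "apple"},
]
train, test = compositional_split(samples, {("green", "dog")})

# Each part of a held-out pair should still appear somewhere in training,
# so the test measures new combinations and not unseen concepts.
seen_colors = {s["color"] for s in train}
seen_objects = {s["object"] for s in train}
parts_seen = all(s["color"] in seen_colors and s["object"] in seen_objects for s in test)
print(len(train), len(test), parts_seen)
```

If accuracy is high on the training combinations but drops sharply on the held-out ones, the system has likely memorized pairings instead of learning the concepts separately.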

Ethical Issues

The Turing Test in machine vision raises important ethical questions. Systems that pass the Turing Test may still show bias or unfairness. If a system learns from biased data, it can make unfair decisions. Privacy concerns grow when artificial systems analyze personal images. People may not know how their data is used. Transparency matters because users need to trust artificial intelligence. If a system cannot explain its answers, people may not accept its results. Passing the Turing Test does not mean a system acts ethically. Developers must design artificial systems that respect fairness, privacy, and transparency.

Future of Artificial Intelligence

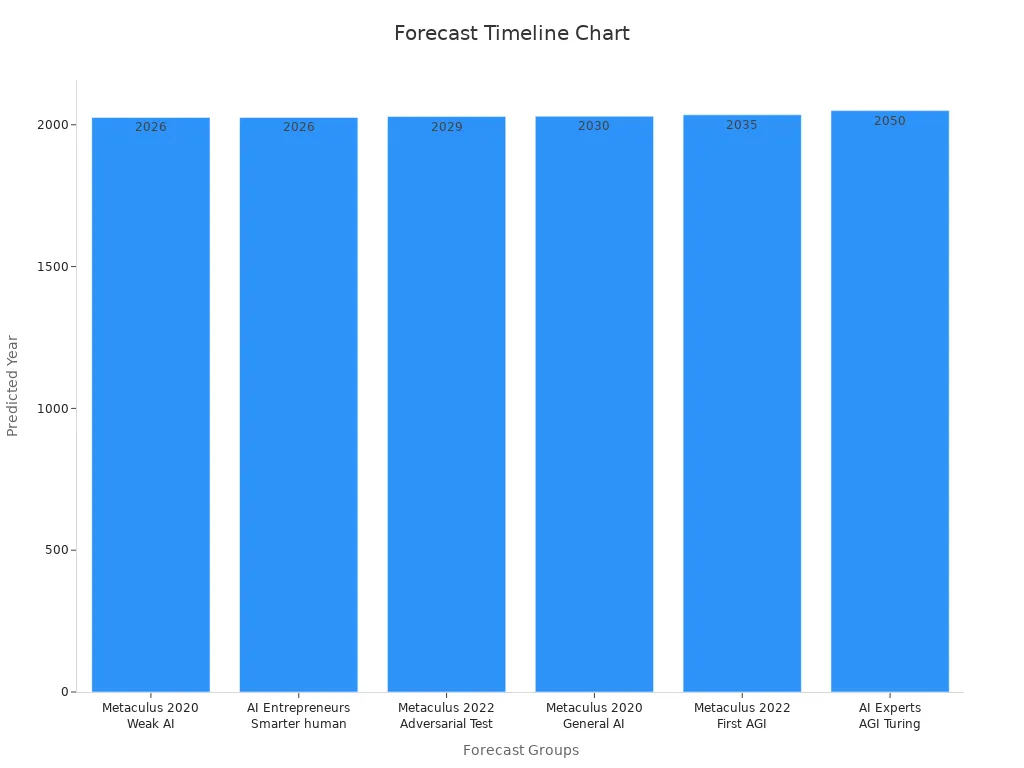

The future of artificial intelligence depends on progress in the turing test. New testing methods use adversarial and statistical protocols to check for real understanding. These methods help find weak spots in machine intelligence. Experts predict that passing the turing test will mark a big step for artificial intelligence. Surveys show that many believe artificial systems could pass the turing test between 2026 and 2050. The chart below shows these forecasts:

If artificial intelligence passes the Turing Test, society will see new opportunities and risks. Success could bring smarter machines that help in health, safety, and daily life. Failure to pass the Turing Test shows that machine intelligence still needs improvement. The Turing Test remains a key goal for artificial intelligence and a guide for future research.

Real-World Cases

Industry Examples

Many companies use Turing Test principles to check how well their machine vision systems and chatbots work. For example, some tech firms test chatbots by having them answer questions about images or videos. These chatbots must give answers that sound like a real person. In one case, researchers used a Self-Directed Turing Test to see if chatbots could keep up a human-like conversation about pictures. They found that longer talks made it harder for chatbots to stay consistent and natural.

Some companies use the X-Turn Pass-Rate metric to measure how often chatbots can fool human judges over many questions. GPT-4, a popular chatbot, did better than others in keeping a human-like style, but it still could not trick people all the time. Many chatbots start to agree with the user instead of giving deep answers when the conversation gets longer. This shows that even advanced chatbots have trouble acting like humans for a long time.

Note: Human judges sometimes show bias when they decide if a chatbot or machine vision system is acting like a person. This makes it hard to get fair results.

Lessons Learned

Real-world tests of chatbots and machine vision systems have taught experts many lessons:

- Dialogue length matters. Chatbots lose their human-like touch in longer talks.

- Human judgment can be biased. This affects how well chatbots and machine vision systems score on the Turing Test.

- Good data is important. Companies must collect lots of data and make sure it matches the real world.

- Dividing data into training and test sets helps measure true performance.

- The classic Turing Test works best for language. For machine vision, experts now use new tests that include seeing and acting, not just talking.

- Modern tests, like the Total Turing Test, check if chatbots and machine vision systems can handle both words and images.

These lessons show that testing chatbots and machine vision systems is complex. Experts need better ways to judge if these systems really act like people.
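The lesson about dividing data into training and test sets can be sketched with the standard library. The function name `split_train_test`, the 20% test fraction, and the fixed seed are assumptions made for the example.

```python
import random


def split_train_test(items, test_fraction=0.2, seed=0):
    """Shuffle a copy of the items and split it into (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical dataset of ten image IDs.
image_ids = list(range(10))
train_ids, test_ids = split_train_test(image_ids, test_fraction=0.2, seed=42)
print(len(train_ids), len(test_ids))
```

Keeping the test items out of training is what makes the measured score an estimate of performance on new images instead of a measure of memorization.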

The Turing Test helps experts see whether machine vision systems can think like people. The Visual Turing Test uses clear questions to measure progress. These benchmarks show where artificial intelligence works well and where it needs more learning. The Turing Test guides teams to build smarter systems. Many researchers now ask, "Will the Turing Test always show true intelligence?"

The Turing Test will keep shaping the future of artificial intelligence as new challenges appear.

FAQ

What is the main goal of the Visual Turing Test?

The Visual Turing Test checks if a machine can understand images like a person. It compares answers from machines and humans to see if they match.

How do experts measure if a machine vision system passes the Turing Test?

Experts look at accuracy, speed, and how close the machine’s answers are to human answers. They also check if the system can handle new images and say “I don’t know” when unsure.

Why do some machine vision systems fail the Turing Test?

Some systems fail because they guess instead of understanding. They may also struggle with new or tricky questions. Bias in training data can cause mistakes.

Can a machine vision system be perfect?

No system is perfect. Even the best systems make mistakes. Machines can work faster than people, but they still need more learning to match human understanding.

Where do people use the Turing Test in real life?

Many companies use the Turing Test to check chatbots, security cameras, and self-driving cars. This helps them see if the systems act and think like people.
