Ground truth in machine vision means the correct labels or answers for each image or video frame. These labels help systems learn what is right or wrong. High-quality ground truth plays a key role in measuring accuracy, precision, recall, and balanced accuracy. Metrics like Intersection over Union and mean Average Precision depend on ground truth to check if predictions match reality. A ground truth machine vision system uses these trusted labels to train, validate, and test models, making sure results stay reliable in real-world tasks.

Key Takeaways

- Ground truth means the correct labels or answers for images and videos that help machine vision systems learn and improve.

- High-quality ground truth data is essential for training, validating, and testing models to ensure accurate and reliable results.

- Combining human expertise with AI tools speeds up labeling and improves the quality of ground truth data.

- Clear guidelines, regular checks, and strong quality control keep ground truth data consistent and trustworthy.

- Using diverse and well-checked ground truth data helps build fair, safe, and effective machine vision systems.

Ground Truth in Machine Vision

Definition

Ground truth describes the correct answers or labels for each image, video frame, or data point in machine vision tasks. In machine learning, ground truth data serves as the reference that algorithms use to learn and improve. Experts or trained annotators usually create these labels by carefully examining images from cameras or other sensors. They might draw boxes around objects, mark regions for text recognition, or assign categories to each part of an image.

Ground truth data can include bounding boxes, segmentation masks, or even detailed outlines of objects. For example, in text recognition, annotators highlight the exact location of words or letters in a photo. This process often uses annotation platforms like Labelbox or Clarifai, which help manage and organize the labeling work. The accuracy of ground truth depends on clear objectives, careful labeling, and strong quality checks.

The table below shows common types of ground truth data and their uses:

| Type of Ground Truth Data | Example Use Case | Description |
|---|---|---|
| Bounding Boxes | Object detection in cameras | Draw rectangles around cars, people, or animals |
| Segmentation Masks | Medical imaging, agriculture | Mark each pixel as part of a tumor or a plant |
| Text Annotations | Text recognition in documents | Highlight words or letters in scanned images |
| Binary Labels | Quality control in factories | Mark items as "defective" or "good" |
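As a minimal sketch of how the four label types in the table might be stored for one image, the Python dataclasses below use hypothetical names (`GroundTruth`, `Box`, `Word`). They are not taken from Labelbox, Clarifai, or any other platform; real annotation tools each define their own export format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Box:
    # Object-detection label: class name plus pixel corners
    label: str
    x_min: int
    y_min: int
    x_max: int
    y_max: int


@dataclass
class Word:
    # Text-recognition label: the transcription and the box that locates it
    text: str
    box: Box


@dataclass
class GroundTruth:
    # All reference labels for one image; a given task usually fills only some fields
    image_id: str
    boxes: List[Box] = field(default_factory=list)
    mask: Optional[List[List[int]]] = None  # one class id per pixel, 0 = background
    words: List[Word] = field(default_factory=list)
    is_defective: Optional[bool] = None  # binary quality-control label


# Hypothetical factory image: one scratch box, a tiny 2x3 mask, and a "defective" verdict
gt = GroundTruth(
    image_id="board_0001.png",
    boxes=[Box("scratch", 120, 40, 180, 65)],
    mask=[[0, 0, 1], [0, 1, 1]],
    is_defective=True,
)
```

Keeping every label type optional lets one record serve detection, segmentation, text, and pass/fail tasks without forcing empty placeholders into each annotation.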

Importance

Ground truth plays a central role in building and testing machine vision systems. Without accurate ground truth data, models cannot learn to recognize objects, read text, or make reliable predictions. High-quality ground truth helps measure how well a system works and guides improvements.

- Machine learning models use ground truth data as training data. This allows them to learn the difference between correct and incorrect answers.

- In text recognition, ground truth labels show exactly where each word appears. This helps the system learn to read new images from cameras.

- Ground truth data supports key metrics like accuracy, precision, recall, and F1-score. These metrics help teams compare different models and choose the best one.
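To make these metric definitions concrete, here is a minimal plain-Python sketch that compares binary predictions with ground-truth labels (1 = positive, for example "defective"). The function name and the toy labels are illustrative assumptions, not part of any system mentioned in this article. It also reports balanced accuracy, the mean of recall and specificity.

```python
def classification_metrics(y_true, y_pred):
    """Compare binary predictions (1 = positive) with ground-truth labels (0 or 1)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly found positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # correctly rejected negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # true positive rate
    specificity = tn / (tn + fp) if tn + fp else 0.0  # true negative rate
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "balanced_accuracy": (recall + specificity) / 2,
    }


# Ground truth: 3 of 10 parts are defective (1); the model misses one and raises one false alarm
truth = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
preds = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
metrics = classification_metrics(truth, preds)
```

In this toy example accuracy is 0.8, precision and recall are both about 0.67, and balanced accuracy is about 0.76, which shows how the single accuracy number can look better than the model's performance on the rare defective class.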

A recent study illustrated how ground truth supports the evaluation of machine vision improvements in real-world settings:

- Benchmark datasets like MNIST and CIFAR-10 use ground truth labels to compare system outputs with correct answers.

- Metrics such as accuracy, precision, recall, and AUC measure how much a system improves.

- A/B testing splits data into two groups to test new systems fairly.

- Statistical tools, such as p-values and confidence intervals, check whether improvements are real rather than the result of chance.

- Effect size metrics, such as Cohen’s d, show the practical impact of changes.

- An electronics manufacturer improved defect detection from 93.5% to 97.2% using ground truth data, with no negative effects on other operations.

- The experiment used identical conditions and large sample sizes to ensure reliable results.

- Ground truth data defined success, prepared fair test groups, and validated improvements.
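The statistical checks above can be illustrated with a two-proportion z-test and Cohen's h (the effect-size counterpart of Cohen's d for proportions) applied to the manufacturer's defect-detection rates. The source does not give sample sizes, so the 2,000 ground-truth defective parts per group below is a hypothetical assumption; different counts would change the z-score and p-value.

```python
import math


def two_proportion_z_test(hits_a, n_a, hits_b, n_b):
    """Two-sided z-test for a difference between two detection rates (pooled variance)."""
    p_a, p_b = hits_a / n_a, hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return z, p_value


def cohens_h(p_a, p_b):
    """Effect size for two proportions: 2*asin(sqrt(p_b)) - 2*asin(sqrt(p_a))."""
    return 2 * math.asin(math.sqrt(p_b)) - 2 * math.asin(math.sqrt(p_a))


# Hypothetical: 2,000 ground-truth defective parts inspected by each system (93.5% vs 97.2%)
z, p = two_proportion_z_test(hits_a=1870, n_a=2000, hits_b=1944, n_b=2000)
h = cohens_h(0.935, 0.972)
print(f"z = {z:.2f}, p = {p:.1e}, Cohen's h = {h:.2f}")
```

Under these assumed counts, z is about 5.6 and Cohen's h is about 0.18, at the small end of Cohen's conventional scale: the improvement is very unlikely to be chance, while the effect-size metric keeps its practical size in perspective.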

The table below highlights how ground truth supports different evaluation metrics:

| Metric | Role in Evaluation | Example in Practice |
|---|---|---|
| Accuracy | Fraction of all predictions that are correct | Easy to read, but can mislead when classes are imbalanced |
| Precision | Share of predicted positives that are true positives | High precision reduces false alarms |
| Recall | Share of actual positives that the model finds | High recall catches more real cases |
| F1-score | Harmonic mean of precision and recall | Useful for imbalanced data |
| Intersection over Union | Overlap between predicted and true regions, divided by their union | Important for object detection with cameras |
| mean Average Precision | Average precision (area under the precision-recall curve) per class, averaged over classes and, in some benchmarks, over IoU thresholds | Used in text recognition and object detection |
| Dice Coefficient | Twice the overlap divided by the combined size of both masks | Common in medical imaging |
| Jaccard Index | Overlap divided by union of two masks (the same quantity as IoU) | Used for quality checks in agriculture |
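The overlap metrics in the table reduce to a few lines of arithmetic. This is a minimal sketch, assuming boxes are `(x_min, y_min, x_max, y_max)` tuples in pixels and masks are equal-sized nested lists of 0/1; production code would typically use NumPy arrays instead.

```python
def box_iou(a, b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def mask_dice_jaccard(pred, truth):
    """Dice coefficient and Jaccard index for two same-shaped binary masks (nested 0/1 lists)."""
    p = [v for row in pred for v in row]
    t = [v for row in truth for v in row]
    inter = sum(1 for x, y in zip(p, t) if x and y)
    size_p, size_t = sum(1 for x in p if x), sum(1 for y in t if y)
    if size_p + size_t == 0:
        return 1.0, 1.0  # both masks empty: treated as perfect agreement (a convention)
    dice = 2 * inter / (size_p + size_t)
    jaccard = inter / (size_p + size_t - inter)  # union = |P| + |T| - |P and T|
    return dice, jaccard


iou = box_iou((0, 0, 10, 10), (5, 5, 15, 15))
dice, jaccard = mask_dice_jaccard([[1, 1, 0], [0, 1, 0]], [[1, 0, 0], [0, 1, 1]])
```

For two 10×10 boxes offset by 5 pixels in each direction, IoU is 25 / 175 ≈ 0.14. The example masks give Dice ≈ 0.67 and Jaccard = 0.5, consistent with the identity Dice = 2J / (1 + J).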

- Teams use strict labeling methods, such as double-pass labeling and expert review, to improve ground truth accuracy.

- Crowdsourcing with expert validation increases data quality for tasks like text recognition.

- Annotation platforms help monitor the process and catch mistakes early.
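Double-pass labeling can be sketched as two independent passes over the same items, with any disagreement routed to expert review. The function and the toy labels below are hypothetical and assume one class label per item.

```python
def reconcile_double_pass(first_pass, second_pass):
    """Merge two independent labeling passes (dicts of item id -> label).

    Items both passes labeled identically are accepted; everything else,
    including items only one pass labeled, is queued for expert review.
    """
    accepted, needs_review = {}, []
    for item_id in sorted(first_pass.keys() | second_pass.keys()):
        a, b = first_pass.get(item_id), second_pass.get(item_id)
        if a is not None and a == b:
            accepted[item_id] = a
        else:
            needs_review.append(item_id)
    return accepted, needs_review


first = {"img1": "good", "img2": "defective", "img3": "good"}
second = {"img1": "good", "img2": "good", "img3": "good", "img4": "defective"}
accepted, needs_review = reconcile_double_pass(first, second)
```

Here `img2` (conflicting labels) and `img4` (labeled in only one pass) go to the expert queue, so reviewers spend their time only where the two passes disagree.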

Ground truth data also helps reduce bias by including images from different groups, such as various ages or backgrounds. It ensures that models work well for everyone and can handle rare but important cases. In text recognition, clear ground truth labels make it easier to understand why a model made a certain decision.
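Including diverse groups in the ground truth only pays off if results are also reported per group. A minimal sketch, assuming each evaluation record carries a hypothetical group tag (here, age group for pedestrian detection) next to its binary ground-truth and predicted labels:

```python
from collections import defaultdict


def recall_by_group(records):
    """Recall per group from (group, y_true, y_pred) records with binary labels.

    Groups with no ground-truth positives are left out of the result.
    """
    positives = defaultdict(int)  # ground-truth positives per group
    hits = defaultdict(int)  # positives the model also predicted
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                hits[group] += 1
    return {group: hits[group] / positives[group] for group in positives}


# Hypothetical pedestrian-detection results tagged by age group
records = [
    ("adult", 1, 1), ("adult", 1, 1), ("adult", 1, 1), ("adult", 1, 0),
    ("child", 1, 1), ("child", 1, 0), ("child", 1, 0), ("child", 0, 0),
]
per_group = recall_by_group(records)
```

Overall recall in this toy data is 4/7 ≈ 0.57, which hides the gap between adults (0.75) and children (about 0.33).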

Role in Model Development

Training and Validation

Ground truth plays a vital role in the development of any ground truth machine vision system. During training, machine learning models use ground truth data to learn how to classify images, detect objects, or perform text recognition. For example, in object detection, the model receives images of vehicles with bounding boxes drawn around each car or truck. The model learns to spot these vehicles by comparing its guesses to the ground truth labels.

Validation comes next. Teams use a separate set of ground truth data to check how well the model performs. This step helps them fine-tune the model’s settings and choose the best algorithm. In text recognition, validation data shows if the model can read new words or letters in unseen images. If the model makes mistakes, engineers adjust the model until it matches the ground truth more closely.

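User-level data splits, which keep all images from the same real user (or camera, vehicle, or recording session) in the same split, give a better estimate of how well the model will work in the real world. Because near-identical images from one source cannot appear in both the training and validation sets, the validation score is not inflated by the model recognizing familiar scenes. This method respects the natural groupings in the ground truth data and shows whether the model generalizes to new users.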
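One way to implement a user-level split is to hash each group id so that every sample from the same user, vehicle, or session lands on the same side. This is a sketch under that assumption; the function names and the 20% validation fraction are illustrative, and libraries such as scikit-learn provide ready-made group-aware splitters (for example `GroupShuffleSplit`).

```python
import hashlib


def split_for_group(group_id, val_fraction=0.2):
    """Assign a whole group (user, vehicle, session) to 'train' or 'val' deterministically."""
    digest = hashlib.sha256(group_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # first 32 bits of the hash, scaled to [0, 1]
    return "val" if bucket < val_fraction else "train"


def group_split(samples, val_fraction=0.2):
    """Split (sample_id, group_id) pairs so that no group appears in both splits."""
    train, val = [], []
    for sample_id, group_id in samples:
        target = val if split_for_group(group_id, val_fraction) == "val" else train
        target.append(sample_id)
    return train, val


# Hypothetical dataset: 70 images from 7 users
samples = [(f"img_{i:03d}", f"user_{i % 7}") for i in range(70)]
train_ids, val_ids = group_split(samples)
```

Hashing keeps the assignment stable across runs and as new data arrives, but the validation share is only approximately 20%, because it depends on how many groups there are and how many samples each one holds.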

Performance metrics such as accuracy, precision, recall, and F1 score all depend on ground truth. Teams compare the model’s predictions to the ground truth labels to see how well the model learns. For example, in advanced driver-assistance systems, the model must spot vehicles, pedestrians, and road signs. The ground truth data shows exactly where each object is, so the model can learn to make safe decisions. This process supports safety and reliability in real-world driving.

The table below shows how ground truth supports different machine vision tasks:

| Task | Ground Truth Example | Model Output Compared To… |
|---|---|---|
| Object Detection | Bounding boxes around vehicles | Predicted boxes |
| Image Segmentation | Pixel masks for road and sidewalk | Predicted masks |
| Text Recognition | Word locations in street signs | Predicted text locations |
| Driver Monitoring | Head pose and gaze direction | Predicted driver attention |

High-quality training data and validation data, both with accurate ground truth, directly affect how well the model works. When teams use well-structured ground truth data, they see better baseline predictions and improved validation results. This link between ground truth and model performance is clear in many machine learning projects.

Testing and Calibration

Testing and calibration are the final steps before a ground truth machine vision system goes into production. Teams use a new set of ground truth data, called test data, to measure how well the model works on images it has never seen before. This step checks if the model can generalize to new vehicles, road scenes, or text recognition tasks.

Calibration makes sure the model’s confidence scores match real-world outcomes. For example, if a model says it is 90% sure a vehicle is present, it should be correct about 90% of the time. In manufacturing, companies like Philips Consumer Lifestyle BV have shown that using ground truth for testing and calibration can reduce labeling effort by 3-4% without losing quality. They use calibration plots and metrics to check if the model’s predictions match the ground truth. Even when teams use new calibration methods, ground truth remains the gold standard for checking model quality.
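Calibration plots are often summarized with expected calibration error (ECE): bin predictions by confidence, then average the gap between mean confidence and accuracy in each bin, weighted by bin size. The sketch below assumes equal-width bins and a per-prediction `correct` flag derived from ground truth. The source does not say which calibration metric the manufacturer used, so this is one common choice rather than their method.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE over equal-width confidence bins.

    confidences: model confidence per prediction, in [0, 1]
    correct: matching booleans, True where the prediction agrees with ground truth
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 falls in the top bin
        bins[index].append((conf, ok))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += len(members) / n * abs(mean_conf - accuracy)
    return ece


well_calibrated = expected_calibration_error([0.9] * 10, [True] * 9 + [False])
overconfident = expected_calibration_error([0.9] * 10, [True] * 6 + [False] * 4)
```

A model that predicts "vehicle present" at 0.9 confidence ten times and is right nine times scores an ECE of 0; if it is right only six times, ECE rises to 0.3, which signals overconfidence.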

Ground truth data acts as the trusted reference for all performance checks. Teams calculate metrics like precision, recall, and root mean squared error by comparing predictions to ground truth. They also monitor for drift: changes in the incoming images, or in the relationship between images and labels, that cause the model's predictions to move away from the ground truth over time. When this happens, teams retrain the model using fresh ground truth data to keep accuracy high.
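Root mean squared error and a drift statistic can both be computed directly from predictions and ground truth. As a sketch, the code below pairs RMSE with the population stability index (PSI), one common choice for comparing a reference distribution of model scores with a recent one. The bin edges, the sample scores, and the often-quoted alert level of about 0.25 are illustrative rules of thumb, not values from the source.

```python
import bisect
import math


def rmse(predictions, ground_truth):
    """Root mean squared error between numeric predictions and ground-truth values."""
    squared = [(p - t) ** 2 for p, t in zip(predictions, ground_truth)]
    return math.sqrt(sum(squared) / len(squared))


def population_stability_index(reference, current, edges, eps=1e-6):
    """PSI between a reference and a current sample of model scores over fixed bin edges."""
    def proportions(values):
        counts = [0] * (len(edges) - 1)
        for v in values:
            i = bisect.bisect_right(edges, v) - 1
            if i == len(edges) - 1 and v == edges[-1]:
                i -= 1  # include the right-most edge in the last bin
            if 0 <= i < len(counts):  # values outside the edges are ignored
                counts[i] += 1
        total = sum(counts) or 1
        return [max(c / total, eps) for c in counts]  # eps avoids log(0)

    ref, cur = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
last_month = [0.1, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9, 0.95]  # hypothetical confidence scores
this_week = [0.1, 0.15, 0.2, 0.3, 0.35, 0.4, 0.6, 0.9]
psi = population_stability_index(last_month, this_week, edges)
```

In this made-up example the scores have shifted toward low confidence and PSI comes out at roughly 0.69, well above the 0.25 rule of thumb, which would prompt a check against fresh ground truth.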

In ADAS features such as lane keeping and collision warning, ground truth data helps verify that the system can spot vehicles and road hazards in all conditions. Driver monitoring systems use ground truth to check whether the model can track where the driver is looking. This improves safety by helping the system work for every driver.

Supervised machine learning depends on ground truth at every stage. From training to testing, ground truth data guides the model, checks its progress, and keeps it reliable in real-world tasks.

Ground Truth Data Collection

Sources and Methods

Ground truth data comes from many sources in machine vision. Cameras, lidar, and radar are the most common sensors. Cameras capture color and texture, which makes them useful for object classification and scene understanding, but they can struggle with depth and harsh weather. Lidar creates high-resolution 3D maps by measuring distances with laser pulses. Radar detects range and speed and keeps working in rain or fog. Test vehicles often carry all three sensors, so developers can compare sensor outputs and align them with ground truth data in real-world environments. Fusing data from these sensors improves accuracy and reliability, especially in advanced driver-assistance systems (ADAS).
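A simple way to see why fusion helps is inverse-variance weighting, the textbook rule for combining independent, unbiased measurements of the same quantity. The sketch below assumes independent Gaussian sensor errors and uses made-up distance readings and standard deviations; real ADAS fusion stacks typically use Kalman filters or learned models rather than this single formula.

```python
def fuse_estimates(estimates):
    """Inverse-variance weighted fusion of independent measurements of one quantity.

    estimates: list of (value, std_dev) pairs, e.g. distance to a car from each sensor.
    Returns (fused_value, fused_std_dev).
    """
    weights = [1.0 / sd ** 2 for _, sd in estimates]  # more precise sensors weigh more
    total = sum(weights)
    fused = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return fused, (1.0 / total) ** 0.5


# Hypothetical distance readings in meters: (value, standard deviation)
readings = [(25.0, 2.0), (24.2, 0.1), (24.5, 0.5)]  # camera, lidar, radar
fused, fused_sd = fuse_estimates(readings)
```

The fused standard deviation (about 0.098 m) is lower than that of the best single sensor (0.1 m), and the estimate leans toward the most precise reading, the lidar.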

Researchers use several methods to collect ground truth data:

- Synthetic datasets created with computer graphics

- Real-world datasets gathered from test vehicles

- Automated annotation using AI tools

- Manual annotation by trained experts

- Combined approaches that mix human and machine input

Key journals such as IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI) and frameworks such as the Mikolajczyk and Schmid methodology guide best practices for obtaining ground truth.

Labeling and Annotation

Labeling and annotation turn raw sensor data into usable ground truth. Annotators draw boxes, mark regions, or label points on images from cameras, lidar, and radar. Advanced annotation tools like Amazon SageMaker Ground Truth, Keylabs, and SuperAnnotate help speed up this process. These tools use AI to suggest labels, which humans then check and correct. Research from MIT and Google shows that even small errors in labeling can lower model accuracy by several percent. Clear guidelines, expert training, and quality checks improve annotation reliability. Industries such as healthcare and autonomous vehicles benefit from these advanced practices, which lead to better model performance.

Tip: Combining human expertise with AI-assisted tools reduces errors and increases the speed of labeling large datasets.
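The AI-assisted workflow described above can be sketched as a triage step: the model's suggested labels are split by confidence so that annotators spot-check the confident ones and fully review the rest, least certain first. The dictionary keys and the 0.95 threshold are hypothetical; tools such as SageMaker Ground Truth implement their own automated labeling logic, which this sketch does not reproduce.

```python
def triage_prelabels(prelabels, accept_threshold=0.95):
    """Split AI-suggested labels into a spot-check queue and a full-review queue.

    prelabels: list of dicts with hypothetical keys 'item_id', 'label', 'confidence'.
    """
    spot_check = [p for p in prelabels if p["confidence"] >= accept_threshold]
    full_review = [p for p in prelabels if p["confidence"] < accept_threshold]
    full_review.sort(key=lambda p: p["confidence"])  # least certain suggestions first
    return spot_check, full_review


suggestions = [
    {"item_id": "frame_01", "label": "car", "confidence": 0.99},
    {"item_id": "frame_02", "label": "truck", "confidence": 0.62},
    {"item_id": "frame_03", "label": "pedestrian", "confidence": 0.81},
]
spot_check, full_review = triage_prelabels(suggestions)
```

Raising the threshold sends more items to full review and lowers the risk of accepting a wrong suggestion; lowering it saves annotator time at the cost of more reliance on spot checks.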

Challenges

Obtaining ground truth data presents several challenges. Subjectivity can affect how annotators label images, especially when objects are unclear or overlap. Consistency is hard to maintain across large teams or over time. Scalability becomes an issue as datasets grow to millions of images from cameras, lidar, and radar. Quantitative studies highlight problems like sampling bias, data loss, and disagreement between human and automated labels. Selecting the right evaluation metrics also matters. For example, some metrics may not match expert judgment in medical imaging or agriculture. Careful sampling strategies and regular quality checks help address these issues, but obtaining ground truth remains a complex task.

Quality in Ground Truth Machine Vision System

Labeling Guidelines

Clear labeling guidelines help teams create reliable ground truth for machine vision. Teams write detailed instructions with examples for each label type. These instructions explain how to mark objects such as vehicles, or words in text recognition tasks. Annotators follow these steps to reduce mistakes and keep results consistent. Regular training and feedback sessions help annotators understand updates and avoid repeating errors. Teams often use double-checking, where a second person reviews each label. They also measure agreement between annotators using metrics like Cohen’s Kappa. High agreement suggests that the guidelines are clear and the labels are consistent, which makes the ground truth data more trustworthy.
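Cohen’s Kappa corrects raw agreement for the agreement two annotators would reach by chance: κ = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the chance agreement implied by each annotator's label frequencies. A minimal pure-Python sketch with made-up labels:

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    n = len(labels_a)
    observed = sum(1 for a, b in zip(labels_a, labels_b) if a == b) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same class if each labels at random
    # with their own observed class frequencies
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        # Both used one identical class everywhere, so kappa is undefined;
        # this sketch returns 1.0 (other tools may return NaN instead)
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["car", "car", "truck", "car", "truck", "car", "car", "truck", "car", "car"]
annotator_2 = ["car", "car", "truck", "truck", "truck", "car", "car", "car", "car", "car"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

The two annotators agree on 8 of 10 images, but because both label most images "car", chance agreement is already 0.58, so κ ≈ 0.52.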

Tip: Update guidelines when new errors appear. This keeps the ground truth machine vision system accurate as tasks change.

Human-Machine Collaboration

Combining human skill with machine assistance improves labeling speed and accuracy. Human-in-the-loop systems let experts review and fix labels suggested by AI tools. This teamwork helps catch errors and improve the quality of ground truth data. Real-time collaboration allows teams to correct mistakes quickly, especially in complex images with vehicles or text recognition challenges. Active learning strategies use AI to pick the most informative samples for humans to label. Hybrid methods, like pseudo-labeling, mix human judgment with automated suggestions. These approaches have been reported to reach accuracy above 77% on small datasets, with balanced precision and recall.
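Active learning is often implemented with uncertainty sampling: send humans the unlabeled images on which the current model is least confident. The sketch below uses prediction entropy as the uncertainty score, which is one of several common choices (margin and least-confidence scores are others); the item ids and probabilities are made up.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """Uncertainty sampling: ids of the `budget` unlabeled items with the highest entropy.

    predictions: dict mapping item id -> class probabilities from the current model.
    """
    ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    return ranked[:budget]


unlabeled = {
    "img_a": [0.98, 0.01, 0.01],  # model is confident
    "img_b": [0.40, 0.35, 0.25],  # model is torn between classes
    "img_c": [0.60, 0.30, 0.10],
}
to_label = select_for_labeling(unlabeled, budget=2)
```

With a budget of two, annotators receive `img_b` and `img_c`; the confident `img_a` can wait or be pseudo-labeled.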

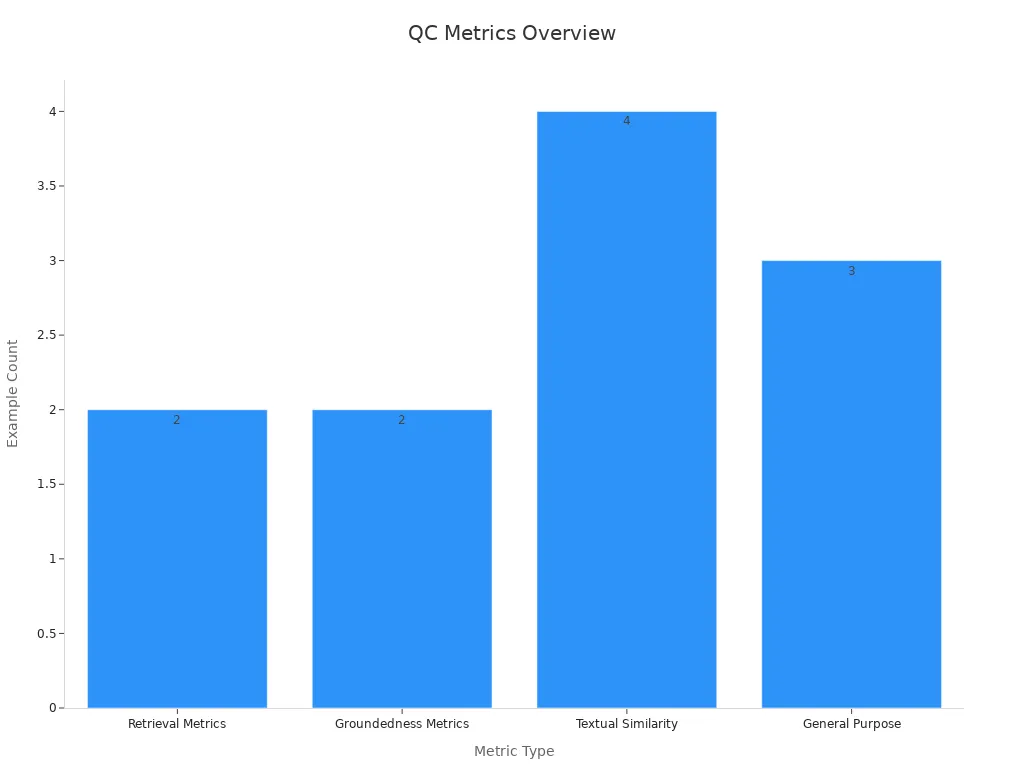

Verification and Control

Strong verification and control processes keep ground truth data reliable. Teams use both preventive and reactive steps. Preventive steps include hiring skilled annotators, following strict procedures, and using automation tools to catch errors early. Reactive steps involve checking for mistakes, giving feedback, and updating processes. Teams set benchmarks and use quality metrics to track progress. They measure data quality, model accuracy, and fairness to spot problems. Regular audits and layered reviews help maintain high standards. Some organizations reach accuracy levels of 98-99.99% by combining human checks with automated controls.

| Metric Category | Metrics / Benchmarks | Purpose / Relevance to Ground Truth Machine Vision Systems |
|---|---|---|
| Data Quality | Percentage of missing values, type mismatches, range violations | Ensures input data integrity, critical for reliable ground truth and model input data quality |
| Model Quality | Accuracy, precision, recall, F1-score (classification); MAE, MSE (regression) | Measures predictive performance, essential for validating ground truth alignment and model correctness |
| Performance by Segment | Evaluation across cohorts or slices (e.g., customer groups, locations) | Detects variations in model performance that may indicate ground truth or data issues |
| Proxy Metrics | Heuristics when ground truth is delayed (e.g., share of unclicked recommendations) | Provides early signals of model degradation when true labels are unavailable |
| Drift Detection | Input drift (feature distribution changes), output drift (prediction distribution changes) | Monitors shifts in data or model behavior that can degrade ground truth relevance and model accuracy |
| Fairness and Bias | Predictive parity, equalized odds, statistical parity | Ensures models do not discriminate, maintaining fairness and reliability in ground truth datasets and model outputs |
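The automated preventive checks and the data-quality metrics listed in this section (missing values, type mismatches, range violations) can be sketched for bounding-box labels as follows. The label dictionary keys and the class list are hypothetical assumptions, not a specific tool's export schema.

```python
REQUIRED_KEYS = ("class", "x_min", "y_min", "x_max", "y_max")


def validate_box_labels(labels, image_width, image_height, allowed_classes):
    """Return (label index, problem) pairs for missing fields, type mismatches,
    unknown classes, and boxes that fall outside the image or have zero area."""
    problems = []
    for i, lab in enumerate(labels):
        missing = [k for k in REQUIRED_KEYS if lab.get(k) is None]
        if missing:
            problems.append((i, "missing " + ", ".join(missing)))
            continue
        coords = [lab[k] for k in REQUIRED_KEYS[1:]]
        if not all(isinstance(v, (int, float)) for v in coords):
            problems.append((i, "non-numeric coordinates"))
            continue
        if lab["class"] not in allowed_classes:
            problems.append((i, f"unknown class {lab['class']!r}"))
        x_min, y_min, x_max, y_max = coords
        if not (0 <= x_min < x_max <= image_width and 0 <= y_min < y_max <= image_height):
            problems.append((i, "box outside image or zero area"))
    return problems


labels = [
    {"class": "car", "x_min": 10, "y_min": 20, "x_max": 50, "y_max": 60},      # valid
    {"class": "cat", "x_min": 0, "y_min": 0, "x_max": 5, "y_max": 5},          # unknown class
    {"class": "truck", "x_min": 600, "y_min": 10, "x_max": 700, "y_max": 40},  # wider than 640 px
    {"class": "car", "x_min": "10", "y_min": 0, "x_max": 20, "y_max": 20},     # string coordinate
    {"class": "car", "x_min": 1, "y_min": 1},                                  # missing fields
]
problems = validate_box_labels(labels, image_width=640, image_height=480,
                               allowed_classes={"car", "truck"})
```

Running checks like these before labels reach human reviewers catches mechanical errors cheaply, so audits can focus on judgment calls that automation cannot make.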

Creating golden datasets with diverse and well-checked labels gives teams a strong benchmark. This helps them find weak spots and improve the ground truth machine vision system for real-world use.

Accurate ground truth forms the backbone of machine vision systems. High-quality data helps models detect vehicles and supports safety in real-world tasks. Teams should focus on strong data practices and regular checks.

Next steps for improvement:

- Review labeling guidelines

- Use new tools for annotation

- Study recent research on ground truth

Reliable ground truth leads to better results and safer technology.

FAQ

What is ground truth in machine vision?

Ground truth means the correct answer for each image or video. Experts create these answers by labeling objects, text, or regions. Machine vision systems use ground truth to learn and check their predictions.

Why does ground truth matter for AI models?

Ground truth helps AI models know what is right or wrong. Models compare their guesses to ground truth labels. This process improves accuracy and helps teams measure progress.

How do teams collect ground truth data?

Teams use cameras, lidar, or radar to capture images and other sensor data. They use annotation tools to label objects or text. Sometimes, they combine human work with AI suggestions for faster results.

What challenges do teams face with ground truth?

Teams often face problems like unclear images, different opinions among labelers, and large amounts of data. Consistent guidelines and regular checks help reduce these issues.

Can machines label ground truth data by themselves?

Machines can suggest labels using AI, but humans still review and correct most labels. Human checks keep the data accurate and reliable.
