Artificial intelligence brings a new level of capability to machine vision systems. These systems can now interpret and respond to visual data with little or no human intervention. Machine vision no longer relies only on fixed rules; AI-driven approaches allow systems to learn and adapt. In an industrial setting, the TUNASCAN system achieved over 95% accuracy in sorting tuna by species, showing how machine vision can boost efficiency. Market forecasts point to strong growth for AI-powered machine vision: the global market for machine vision systems could reach $41.74 billion by 2030. With AI, machine vision supports autonomous decisions, improves accuracy, and meets the needs of industries that require fast, reliable inspection.

Key Takeaways

- AI-powered machine vision systems learn from data to detect defects and patterns more accurately than traditional rule-based systems.

- These systems adapt quickly to new products and changing environments, reducing the need for manual updates and improving efficiency.

- Real-time decision-making enabled by AI helps industries like manufacturing and autonomous vehicles respond instantly to visual data.

- Deep learning and advanced algorithms boost accuracy, speed, and resource efficiency, allowing deployment on smaller devices.

- AI-driven machine vision improves quality control, lowers costs, and supports diverse applications across healthcare, logistics, and robotics.

Machine Vision Systems

Traditional Machine Vision

Traditional machine vision uses a combination of cameras, sensors, and lighting to capture images. These systems rely on fixed rules and algorithms to process visual data. Engineers program the system to look for specific patterns or features. For example, a factory might use machine vision to check if a product has the correct shape or color. The cameras take pictures, and the sensors help measure size or position. The system then compares the captured images to a set of standards.

Most traditional machine vision systems use rule-based logic. This means the system follows a list of instructions to decide if an object passes or fails inspection. These instructions do not change unless someone updates the program. The process works well for simple tasks, such as counting items or checking for missing parts. However, traditional machine vision struggles with complex or changing environments.
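Below is a minimal Python sketch of the rule-based logic described above. It assumes the image-processing stage has already reduced each product to a few measured features. The feature names (`width_mm`, `hue`, `missing_parts`) and the tolerance values are hypothetical and serve only to show how fixed pass/fail rules are expressed.

```python
from dataclasses import dataclass

@dataclass
class Measurement:
    """Features already extracted from one product image (hypothetical)."""
    width_mm: float
    hue: float          # dominant hue in degrees, 0-360
    missing_parts: int

# Fixed rules written by an engineer; they change only if someone edits them.
WIDTH_RANGE_MM = (49.5, 50.5)
HUE_RANGE = (20.0, 40.0)

def inspect(m: Measurement) -> tuple[bool, list[str]]:
    """Apply each rule in turn and collect the reasons for failure."""
    failures = []
    if not WIDTH_RANGE_MM[0] <= m.width_mm <= WIDTH_RANGE_MM[1]:
        failures.append("width out of tolerance")
    if not HUE_RANGE[0] <= m.hue <= HUE_RANGE[1]:
        failures.append("wrong color")
    if m.missing_parts > 0:
        failures.append("missing parts")
    return (not failures, failures)

print(inspect(Measurement(50.1, 30.0, 0)))  # (True, [])
print(inspect(Measurement(51.2, 30.0, 1)))  # (False, ['width out of tolerance', 'missing parts'])
```

Any defect that none of the rules describes, such as a scratch, goes unnoticed until an engineer adds a new rule.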

Note: Traditional machine vision systems often require frequent adjustments when products or lighting conditions change. This can slow down production and increase costs.

Limitations of Rule-Based Systems

Rule-based machine vision systems face several challenges. They cannot easily adapt to new products or unexpected changes. If a product has a small defect that the rules do not cover, the system may miss it. These systems also have trouble with overlapping objects or poor lighting. The cameras and sensors collect data, but the system itself cannot learn from past mistakes.

The following metrics are commonly used to evaluate machine vision systems and to compare traditional and AI-powered approaches:

| Metric | Description |
|---|---|
| Accuracy | Proportion of correctly classified objects or correct predictions out of all inspected parts or predictions. |
| Precision | Share of positive predictions that are correct (true positives among all predicted positives). |
| Recall | Ability to identify all actual instances of a class; also called the true positive rate. |
| F1 Score | Harmonic mean of precision and recall, providing a balanced measure of overall performance. |
| Dice-Sørensen Coefficient | Measures overlap between predicted and actual regions; equivalent to the F1 score for binary masks and widely used in medical imaging. |
| Jaccard Index (IoU) | Measures overlap between predicted and actual regions as intersection over union; it penalizes errors more heavily than Dice. |
| Hausdorff Distance | Measures the maximum distance between predicted and actual boundaries, focusing on worst-case errors. |
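The overlap metrics in the table can be computed directly from pixel counts. The sketch below assumes predicted and ground-truth masks are flattened lists of 0/1 values and that boundaries are given as lists of (x, y) points. The function names are illustrative; production code would normally rely on a library such as scikit-learn or SciPy (for example `scipy.spatial.distance.directed_hausdorff`).

```python
import math

def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN for two equal-length binary masks (flattened 0/1 lists)."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    tn = len(pred) - tp - fp - fn
    return tp, fp, fn, tn

def overlap_metrics(pred, truth):
    """Accuracy, precision, recall, F1, Dice, and IoU for one pair of binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same size")
    tp, fp, fn, tn = confusion_counts(pred, truth)
    if tp + fp + fn == 0:
        raise ValueError("both masks are empty; overlap metrics are undefined")
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pred),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "dice": 2 * tp / (2 * tp + fp + fn),
        "iou": tp / (tp + fp + fn),
    }

def hausdorff(points_a, points_b):
    """Symmetric Hausdorff distance between two non-empty sets of (x, y) points."""
    def directed(src, dst):
        # Worst case over src of the distance to the nearest point in dst.
        return max(min(math.dist(p, q) for q in dst) for p in src)
    return max(directed(points_a, points_b), directed(points_b, points_a))

pred = [1, 1, 1, 0, 0, 0]
truth = [1, 1, 0, 1, 0, 0]
print(overlap_metrics(pred, truth))
print(hausdorff([(0, 0), (1, 0)], [(0, 0), (1, 0), (4, 0)]))  # 3.0
```

For binary masks, F1 and Dice reduce to the same quantity, 2·TP / (2·TP + FP + FN), which is why the two names appear in different communities for one measure.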

Traditional machine vision systems often show lower accuracy and precision compared to AI-integrated systems. They cannot adjust to new data or learn from errors. As a result, factories may see more false positives or negatives during inspection. The need for manual updates and limited flexibility makes these systems less effective for modern manufacturing needs.

Artificial Intelligence Machine Vision System

AI Integration in Machine Vision

AI has transformed machine vision by allowing systems to interpret complex visual information with greater accuracy and speed. Traditional machine vision systems depended on fixed rules and manual programming. AI-powered machine vision systems instead use advanced algorithms and machine learning to analyze images, recognize patterns, and make decisions without constant human input.

Modern machine vision systems combine cameras, sensors, and AI-powered software. Cameras capture high-resolution images, while sensors provide additional data about position, movement, or environmental conditions. AI algorithms process this data, enabling the system to identify objects, detect defects, and classify items in real time. These systems adapt to new products or changing environments by learning from new data, which increases flexibility and reduces the need for manual adjustments.

AI integration supports real-time decision-making in many industries. For example, in manufacturing, deep learning-based visual inspection systems can detect subtle defects earlier than manual inspection. Predictive maintenance models use live video feeds to spot early warning signs, reducing downtime and improving efficiency. In healthcare, deep convolutional neural networks (CNNs) have reached expert-level accuracy on some medical imaging tasks, supporting faster and more accurate decisions.

Note: AIoT frameworks combine artificial intelligence with the Internet of Things, connecting cameras and sensors to cloud-based machine vision systems. This setup allows for efficient data sharing and processing across multiple devices.

One reported benchmark, on the EPIC-SOUNDS dataset, illustrates the accuracy and resource trade-offs of AIoT frameworks:

| Dataset / Metric | Accuracy (%) | Resource Consumption (MB) | Notes on Efficiency and Challenges |
|---|---|---|---|
| EPIC-SOUNDS | 33.02 ± 5.62 | 2176 | Baseline accuracy and resource use |
| EPIC-SOUNDS (optimized) | 35.43 ± 6.61 | 936 (↓ 57.0%) | Improved accuracy with reduced resource use |

These results suggest that optimized AI-powered machine vision solutions can increase accuracy while using fewer resources. However, high data diversity and heavy communication demands can limit efficiency, especially when many devices share information.
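The percentages in the benchmark table follow from simple arithmetic on the reported numbers. The snippet below only recomputes them and adds no new data.

```python
# Reported EPIC-SOUNDS figures from the table above.
baseline_acc, baseline_sd = 33.02, 5.62    # accuracy (%) and its reported spread
optimized_acc, optimized_sd = 35.43, 6.61
baseline_mb, optimized_mb = 2176, 936      # resource consumption (MB)

accuracy_gain = optimized_acc - baseline_acc                   # percentage points
memory_cut = 100 * (baseline_mb - optimized_mb) / baseline_mb  # percent

print(f"accuracy gain: {accuracy_gain:.2f} points")   # accuracy gain: 2.41 points
print(f"resource reduction: {memory_cut:.1f}%")       # resource reduction: 57.0%
print("gain smaller than either reported spread:",
      accuracy_gain < min(baseline_sd, optimized_sd))  # ... True
```

The memory saving is large. By contrast, the 2.41-point accuracy gain lies well inside the reported ± spread, so the accuracy improvement is best read as tentative.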

Deep Learning and Computer Vision

Deep learning algorithms have become the backbone of modern computer vision. These algorithms, especially CNNs, automatically extract features from raw images, reducing the need for manual feature engineering. This approach improves detection precision and enables advanced image processing tasks such as segmentation, object recognition, and pattern recognition.

Some real-world examples highlight the impact of deep learning in machine vision:

- Automated brain tumor segmentation from MRI scans speeds up treatment planning and reduces the time needed for doctors to outline tumors.

- Tuberculosis detection from chest X-rays achieves high accuracy, helping doctors identify TB-related abnormalities quickly.

- Bone fracture detection from X-ray images outperforms traditional rule-based systems in accuracy and reliability.

Deep learning models use techniques like transfer learning, data augmentation, and active learning to improve performance even when labeled data is limited. These methods help AI-powered machine vision systems adapt to new tasks and environments.
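Of the techniques named above, data augmentation is the simplest to illustrate. The sketch assumes a grayscale image stored as a nested list of 0-255 integers. It applies a random horizontal flip, a brightness shift, and a random crop, so one labeled image yields many slightly different training samples. Real pipelines usually rely on library transforms, for example in torchvision or Albumentations. Transfer learning and active learning are not shown.

```python
import random

Image = list[list[int]]  # grayscale pixels, 0-255

def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def shift_brightness(img: Image, delta: int) -> Image:
    """Add delta to every pixel, clipping to the valid 0-255 range."""
    return [[min(255, max(0, px + delta)) for px in row] for row in img]

def random_crop(img: Image, height: int, width: int, rng: random.Random) -> Image:
    """Cut a height x width window at a random position."""
    top = rng.randint(0, len(img) - height)
    left = rng.randint(0, len(img[0]) - width)
    return [row[left:left + width] for row in img[top:top + height]]

def augment(img: Image, rng: random.Random, crop: tuple[int, int] = (2, 2)) -> Image:
    """Random flip (p=0.5), brightness shift in [-30, 30], then random crop."""
    out = hflip(img) if rng.random() < 0.5 else img
    out = shift_brightness(out, rng.randint(-30, 30))
    return random_crop(out, crop[0], crop[1], rng)

image = [[10, 20, 30], [40, 50, 60], [70, 80, 250]]
rng = random.Random(0)  # fixed seed so the samples are reproducible
samples = [augment(image, rng) for _ in range(3)]
print(samples)
```

Each sample keeps the original label, so the model sees the same defect or object under varied positions and lighting without any extra labeling work.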

The integration of deep learning in machine vision systems leads to faster, more accurate diagnostics and optimized workflows. In healthcare, surgical assistance systems using deep learning reduce surgery duration by up to 20% and lower complication rates by over 25%. Cancer screening systems powered by AI outperform manual methods in both speed and sensitivity, reducing diagnosis delays.

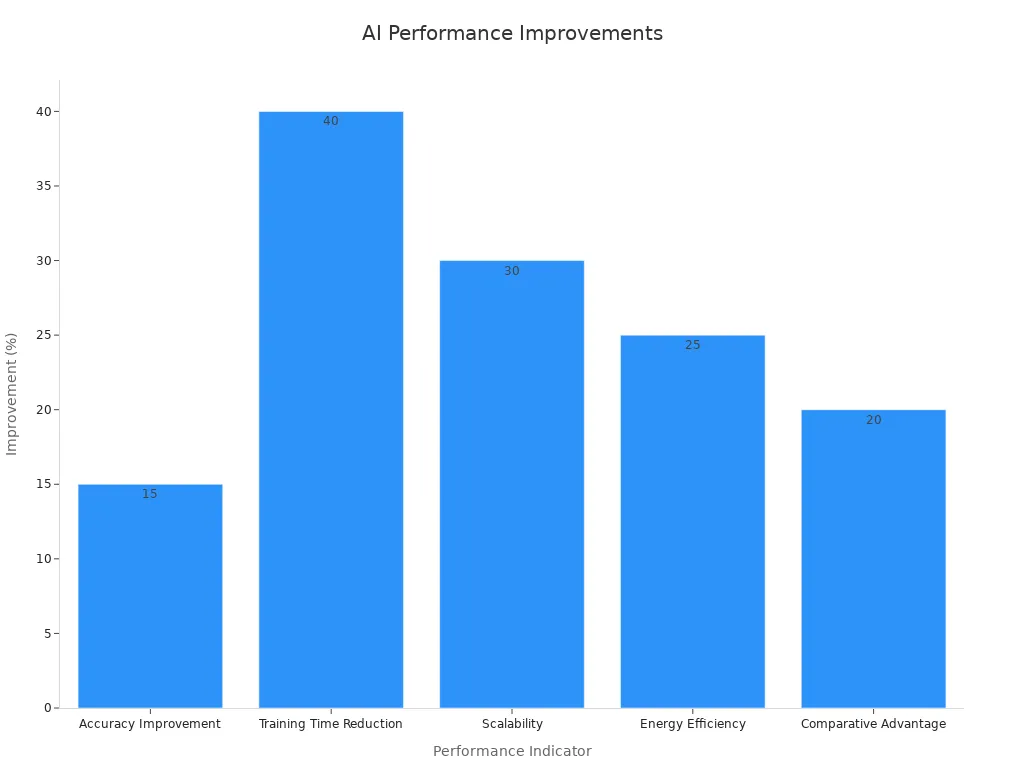

Performance indicators further demonstrate the improvements brought by AI integration:

| Performance Indicator | Description / Impact |
|---|---|
| Inference Time | Reduced inference time improves real-time application suitability. |
| Memory Utilization | Optimized models use less memory, enabling deployment on resource-constrained devices. |
| Computational Requirements | Lower computational demands facilitate scalability and efficiency. |
| Training Time Reduction | Transfer learning and hyperparameter tuning reduce training time and resource consumption. |
| Optimization Techniques | Pruning, quantization, and knowledge distillation reduce model complexity without major accuracy loss. |
| Trade-offs | Balancing speed, accuracy, and resource use is critical for practical deployment. |
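Two of the optimization techniques in the table above can be sketched in a few lines. The code applies magnitude pruning (zeroing the smallest weights) and symmetric int8 quantization (storing weights as 8-bit integers plus one floating-point scale) to a plain list of weights. It is a simplified illustration under those assumptions. Frameworks such as PyTorch and TensorFlow Lite provide their own pruning and quantization tooling, and knowledge distillation is not shown.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]

weights = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]

q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(q, f"max error {max_error:.4f}")
```

Each int8 value needs 1 byte instead of the 4 bytes of a float32, so storage drops about fourfold. The cost is a rounding error of at most half a scale step per weight. Pruned zeros save memory only when stored in a sparse format or skipped by the hardware.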

| Performance Indicator | Improvement / Statistic |
|---|---|
| Accuracy Improvement | Up to 15% increase in accuracy on benchmark datasets due to deeper architectures and advanced training techniques. |
| Training Time Reduction | Training times reduced by up to 40%, enabling faster model development and deployment. |
| Scalability | Scalability improvements of up to 30% when moving from lab to real-world applications. |
| Energy Efficiency | Approximately 25% improvement in energy efficiency through optimized algorithms and hardware accelerators. |
| Comparative Advantage | CNNs outperform traditional models by about 20% in key performance metrics, highlighting AI integration benefits. |

| Method | Performance Metric | Dataset | Result |
|---|---|---|---|
| Transformer-PPO-based RL selective augmentation | AUC score | Classification task | 0.89 |
| Auto-weighted RL method | Accuracy | Breast ultrasound datasets | 95.43% |

In object recognition and pattern recognition, AI-powered machine vision systems achieve high detection accuracy. For example, the RON algorithm reaches 81.3% mean Average Precision (mAP) on the PASCAL VOC2007 dataset. RefineDet, another advanced model, combines the strengths of single-stage and two-stage detectors, improving both accuracy and speed. Backbone networks like VGG-16 and ResNet-101 enhance feature extraction, while neck structures such as feature pyramids improve detection performance.
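The mAP figure quoted for PASCAL VOC2007 averages per-class average precision (AP). VOC2007 used an 11-point interpolated AP: precision is sampled at recall levels 0, 0.1, ..., 1.0, and each level takes the highest precision achieved at that recall or above. The sketch below assumes each detection has already been marked as a true or false positive (in VOC, by IoU ≥ 0.5 with an unmatched ground-truth box). Later VOC editions and COCO use different AP definitions.

```python
def precision_recall_curve(scores, is_tp, num_ground_truth):
    """Walk detections from most to least confident, accumulating precision and recall."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    return recalls, precisions

def eleven_point_ap(recalls, precisions):
    """VOC2007-style AP: mean of interpolated precision at recall 0.0, 0.1, ..., 1.0."""
    total = 0.0
    for step in range(11):
        level = step / 10
        total += max((p for r, p in zip(recalls, precisions) if r >= level), default=0.0)
    return total / 11

def mean_ap(per_class_ap):
    """mAP: unweighted mean of the per-class AP values."""
    return sum(per_class_ap.values()) / len(per_class_ap)

# Four detections of one class, two ground-truth objects (illustrative data).
r, p = precision_recall_curve([0.9, 0.8, 0.7, 0.6], [True, False, True, False], num_ground_truth=2)
ap_car = eleven_point_ap(r, p)
print(round(ap_car, 4))  # 0.8485
print(mean_ap({"car": ap_car, "person": 0.75}))  # "person" AP is a made-up value
```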

AI integration also reduces inference time and memory usage, making it possible to deploy AI-powered machine vision on smaller, energy-efficient devices. These improvements support a wide range of applications, from self-driving cars and robotics to smart cameras and industrial inspection.

Tip: When choosing a machine vision solution, consider the balance between speed, accuracy, and resource use. AI-powered systems offer significant advantages in adaptability and performance, but require careful tuning to match specific application needs.

AI Enhancements in Machine Vision

Accuracy and Adaptability

Machine vision systems have become much more accurate and adaptable with the help of AI. These systems now use advanced algorithms and computer vision techniques to spot tiny defects and complex patterns that older systems often miss. Machine learning allows these systems to learn from new data, so they can keep improving over time. This means they can handle changes in lighting, temperature, or even the types of objects they need to inspect.

AI-powered machine vision works well in many real-world situations. For example, in manufacturing, these systems check products for quality and catch mistakes that humans might overlook. In smart cities, they monitor traffic and help keep roads safe. Environmental scientists use them to study changes in nature, such as tracking pollution or animal movements. These systems can run all day and night without getting tired, which reduces human error and keeps performance steady.

Polygon-based machine vision systems outline objects with polygons that follow their contours rather than with simple rectangular boxes, which captures object boundaries more precisely. This helps them recognize irregular shapes and patterns, making them useful in industries like healthcare, agriculture, and retail. They can also adapt quickly to new tasks and environments.

Note: AI vision systems scale easily from small factories to large operations. They keep working well even as the amount of data grows.

The following table shows how AI-enhanced machine vision improves accuracy and adaptability in different industries:

| Case Study | Industry | Impact on Accuracy and Adaptability |
|---|---|---|
| Zebra Medical Vision | Healthcare | Improved diagnostic accuracy and streamlined workflows, showing high precision in medical imaging. |
| U.S. Postal Service | Mail Sorting | Faster processing and less manual work, adapting to billions of items each year. |
| General OCR | Various | Automated data extraction and better efficiency, proving adaptability across many fields. |

Key metrics help measure these improvements. Intersection over Union (IoU), precision, recall, and F1 score show how well AI models detect and locate objects. Mean Average Precision (mAP) summarizes detection accuracy across object classes in a single score. Minimum Viewing Time (MVT), the shortest time a person needs to view an image to recognize what it shows, provides a human reference point for judging image difficulty and comparing AI recognition with human perception. These benchmarks help track progress and show how much machine vision has improved with AI.
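For object detection, IoU is computed between bounding boxes. A prediction usually counts as correct when its IoU with an unmatched ground-truth box reaches a threshold such as 0.5. The sketch below assumes boxes in `(x1, y1, x2, y2)` corner format and predictions already sorted by confidence. The greedy matching rule is one common convention, not the only one.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1

def box_iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def detection_scores(preds: list[Box], truths: list[Box], threshold: float = 0.5):
    """Greedily match predictions (highest confidence first) to ground truth; return P, R, F1."""
    matched: set[int] = set()
    for p in preds:
        candidates = [(box_iou(p, t), j) for j, t in enumerate(truths) if j not in matched]
        best_iou, best_j = max(candidates, default=(0.0, -1))
        if best_iou >= threshold:
            matched.add(best_j)
    tp = len(matched)
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(truths) if truths else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7, about 0.142857
preds = [(0, 0, 2, 2), (10, 10, 12, 12)]
truths = [(0, 0, 2, 2.2), (5, 5, 7, 7)]
print(detection_scores(preds, truths))  # (0.5, 0.5, 0.5)
```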

Real-Time Decision-Making

AI has made real-time decision-making possible in machine vision. These systems process data quickly and make instant choices based on what they see. For example, in a factory, a machine vision system can spot a faulty product and remove it from the line right away. In self-driving cars, the system recognizes road signs, other vehicles, and people, then decides how to drive safely.

Modern algorithms like YOLO (You Only Look Once) and Faster R-CNN help machine vision systems balance speed and accuracy. YOLO detects objects in a single pass of one neural network over the image, making it fast enough for real-time use. Faster R-CNN uses a region proposal network to find candidate object regions quickly and accurately. These improvements mean that machine vision can now keep up with fast-moving environments.
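Both YOLO and Faster R-CNN typically produce several overlapping candidate boxes for the same object and rely on non-maximum suppression (NMS) to keep only the most confident one. The following is a minimal greedy NMS sketch that assumes `(x1, y1, x2, y2)` boxes. It illustrates the idea rather than reproducing either detector's actual implementation.

```python
def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes kept after greedy NMS, highest score first."""
    remaining = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while remaining:
        best = remaining.pop(0)
        keep.append(best)
        # Drop every lower-scoring box that overlaps the kept box too much.
        remaining = [i for i in remaining if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

Raising `iou_threshold` keeps more overlapping boxes, which can help in crowded scenes. Lowering it suppresses duplicates more aggressively.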

Machine vision systems use real-time data to:

- Detect defects as soon as they appear.

- Guide robots in picking and placing items.

- Monitor traffic and adjust signals instantly.

- Help doctors make quick decisions during surgery.

Tip: Real-time machine vision systems reduce delays and help prevent costly mistakes. They also allow for quick responses to unexpected changes.

AI-powered machine vision systems use computer vision and learning to adapt their decisions as new data comes in. This makes them reliable in dynamic settings, such as busy factories or crowded city streets. They can handle large amounts of data without slowing down, which is important for industries that need fast and accurate recognition.

The following list highlights how different algorithms have improved real-time performance:

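- R-CNN: High accuracy but slow (about 45 seconds per image), not suitable for real-time use.

- Fast R-CNN: Faster processing (about 2 seconds per image, including region proposals) with better accuracy.

- Faster R-CNN: Replaces external region proposals with a region proposal network, reaching roughly 5 frames per second (about 0.2 seconds per image) on a GPU.

- YOLO: Combines speed and accuracy (the original version ran at about 45 frames per second), making it well suited to real-time machine vision tasks.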
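Whether a detector is fast enough for real-time use comes down to simple arithmetic. At a target frame rate f, each image must be processed within 1/f seconds, about 33 ms at 30 frames per second. The sketch converts the per-image times listed above into throughput. The 30 fps target and the 0.02 s latency of the last entry are illustrative assumptions, not measured values.

```python
def throughput_fps(seconds_per_image: float) -> float:
    """Images processed per second at a given per-image latency."""
    return 1.0 / seconds_per_image

def meets_realtime(seconds_per_image: float, target_fps: float = 30.0) -> bool:
    """True if the per-image latency fits within one frame interval."""
    return seconds_per_image <= 1.0 / target_fps

detectors = [
    ("R-CNN", 45.0),       # per-image time quoted in the list above
    ("Fast R-CNN", 2.0),   # per-image time quoted in the list above
    ("hypothetical single-stage detector", 0.02),  # assumed value for illustration
]
for name, latency in detectors:
    print(f"{name}: {throughput_fps(latency):.3f} fps, "
          f"real-time at 30 fps: {meets_realtime(latency)}")
```

At 45 seconds per image, R-CNN takes 1,350 times longer than the 33 ms frame budget allows.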

These advances show that AI has changed machine vision from a slow, rule-based process to a fast, adaptable system. Machine vision now supports real-time recognition and decision-making in many industries, helping people work smarter and safer.

Machine Vision Applications

Manufacturing and Quality Control

Machine vision has transformed manufacturing by making inspection faster and more accurate. Companies now use machine vision to check products for defects and ensure high quality. Bosch Automotive uses AI-powered machine vision to inspect fuel injectors, which reduces defects and improves reliability. Coca-Cola applies machine vision to inspect bottle filling, sealing, and labeling, leading to fewer customer complaints and higher efficiency. Siemens uses machine vision to detect faults in printed circuit boards, speeding up inspection while maintaining quality.

- Ford’s Dearborn plant reduced material costs by 15% after using machine vision to detect defects and optimize processes.

- Caterpillar lowered warranty costs by 22% through design improvements based on machine vision data.

- Johnson Controls reached 99.5% defect detection accuracy and cut inspection time by 70%, saving $6.4 million in one year.

| Metric | Before AI Implementation | After AI Implementation | Description |
|---|---|---|---|
| Annual Defective Units | 5,000 doors | 2,500 doors | Real-time defect detection reduces defects |
| Warranty Claims Processed | 10 per day | 5 per day | Early correction lowers claims |
| Annual Warranty Claim Cost | $1,277,500 | $603,750 | Cost savings from fewer defects |
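The savings in this table can be checked with a few lines of arithmetic. The snippet only recomputes figures already shown. The per-claim cost assumes claims are processed on all 365 days of the year, which the table does not state.

```python
DAYS_PER_YEAR = 365  # assumption: claims are processed every day

before = {"defects": 5000, "claims_per_day": 10, "claim_cost": 1_277_500}
after = {"defects": 2500, "claims_per_day": 5, "claim_cost": 603_750}

def pct_reduction(old: float, new: float) -> float:
    """Percentage drop from old to new."""
    return 100 * (old - new) / old

savings = before["claim_cost"] - after["claim_cost"]
cost_cut_pct = pct_reduction(before["claim_cost"], after["claim_cost"])
defect_cut_pct = pct_reduction(before["defects"], after["defects"])
cost_per_claim = {
    label: d["claim_cost"] / (d["claims_per_day"] * DAYS_PER_YEAR)
    for label, d in (("before", before), ("after", after))
}

print(f"annual savings: ${savings:,}")            # annual savings: $673,750
print(f"cost reduction: {cost_cut_pct:.1f}%")      # cost reduction: 52.7%
print(f"defect reduction: {defect_cut_pct:.1f}%")  # defect reduction: 50.0%
print({k: round(v, 2) for k, v in cost_per_claim.items()})
```

Under the 365-day assumption, the implied cost per claim falls from $350.00 to about $330.82. The table's cost saving therefore slightly exceeds what halving the claim count alone would give.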

Machine vision applications in quality control and inspection help companies reduce waste, improve efficiency, and save money.

Robotics and Autonomous Vehicles

Robotics and autonomous vehicles rely on machine vision for real-time decision-making. In manufacturing, robots with machine vision inspect thousands of items each minute, reducing recalls and improving quality control. Self-driving cars, such as those developed by Tesla and Waymo, use machine vision to detect objects and navigate safely, avoiding collisions and adapting to complex environments. Amazon’s warehouse robots use machine vision to move without colliding, while drones scan inventory for accuracy.

| Application Area | Usage Example | Operational Benefits |
|---|---|---|
| Autonomous Vehicles | Tesla, Waymo | Real-time detection, improved safety |
| Warehouse Automation | Amazon Kiva robots, Walmart drones | Increased efficiency, fewer errors |
| Healthcare Robotics | Da Vinci Surgical System | Enhanced precision, reduced human error |

Machine vision applications in robotics and autonomous vehicles support automation, adaptability, and safety.

Other Industry Applications

Machine vision applications extend beyond factories and vehicles. In logistics, machine vision tracks inventory, sorts packages, and predicts container layouts, reaching up to 99.4% accuracy. This reduces losses and boosts productivity. In healthcare, machine vision helps doctors diagnose diseases from images. UC San Diego Health used machine vision to detect COVID-19 pneumonia early, leading to faster treatment. Machine vision also supports agriculture, where robots monitor crops and apply herbicides only where needed, improving yields and reducing chemical use.

Note: Machine vision applications continue to grow in industrial automation, healthcare, logistics, and agriculture, driving efficiency and cost savings across many sectors.

AI vs. Traditional Machine Vision

Key Differences

AI-powered machine vision and traditional machine vision systems work in different ways. Traditional systems use fixed rules to inspect objects. Engineers must program every rule, so the system can only find defects it already knows. AI-powered systems learn from examples. They can spot new or unusual defects by recognizing patterns in large sets of images. This makes AI systems more flexible and accurate.
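To contrast learning from examples with hand-written rules, the sketch below trains a logistic regression classifier on a handful of labeled parts. Logistic regression is a deliberately simple stand-in for the deep networks used in practice. The two input features (a scratch score and a brightness deviation, both scaled to 0-1) and the training data are hypothetical. The decision boundary comes from the labels rather than from thresholds an engineer typed in.

```python
import math

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def is_defective(w, b, x, threshold=0.5) -> bool:
    """Classify one feature vector with the learned weights."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= threshold

# Hypothetical labeled examples: (scratch_score, brightness_deviation) -> 1 if defective.
X = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.15), (0.05, 0.3),
     (0.8, 0.7), (0.9, 0.6), (0.7, 0.9), (0.85, 0.85)]
y = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = train_logistic(X, y)
print(is_defective(w, b, (0.12, 0.18)), is_defective(w, b, (0.75, 0.8)))  # False True
```

Adapting to a new product then means adding labeled examples and retraining, rather than rewriting rules by hand.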

A key difference lies in how each system handles changes. Traditional machine vision struggles when lighting or object types change. AI systems adapt better because they learn from new data. AI also processes images faster and can handle more complex tasks. The table below shows how these systems compare:

| Feature | Traditional Machine Vision | AI-Enhanced Machine Vision |
|---|---|---|
| Defect Detection Accuracy | Limited to known defects | Detects diverse and new defects |
| Adaptability | Low | High |
| Data Requirements | No training data needed | Needs large datasets |
| Speed and Repeatability | Consistent but slower | Faster and more consistent |
| Labor Cost | High (manual programming) | Lower (easier training) |

Advantages and Challenges

AI brings many advantages to machine vision. Companies see up to a 50% increase in productivity and up to 90% better defect detection rates. AI systems reduce maintenance costs by up to 40% and cut downtime in half. They also extend equipment life by up to 40%. AI-driven machine vision systems lower error rates to below 1%, while traditional systems may have error rates near 10%.

However, AI systems face challenges. They need large and diverse training datasets. If the data is not good, the system may make mistakes or show bias. Overfitting and performance drift can also affect results. Companies must invest in hardware and training. They must also check for false positives and negatives to keep the system reliable.
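Checking for false positives and negatives over time can be automated. The sketch below assumes that a sample of inspection results is later verified by a person or a downstream test; this feedback source is hypothetical. It flags drift when the rolling error rate over the most recent window exceeds a baseline plus a tolerance. The 1% baseline echoes the error rate quoted above. The window size and tolerance are arbitrary choices for illustration.

```python
from collections import deque

class DriftMonitor:
    """Rolling check of verified inspection outcomes against a baseline error rate."""

    def __init__(self, baseline_error: float, window: int = 200, tolerance: float = 0.02):
        self.baseline_error = baseline_error
        self.tolerance = tolerance
        self.results = deque(maxlen=window)  # (predicted_defect, actual_defect) pairs

    def record(self, predicted_defect: bool, actual_defect: bool) -> None:
        self.results.append((predicted_defect, actual_defect))

    def counts(self) -> tuple[int, int]:
        """False positives and false negatives in the current window."""
        fp = sum(1 for p, a in self.results if p and not a)
        fn = sum(1 for p, a in self.results if a and not p)
        return fp, fn

    def error_rate(self) -> float:
        if not self.results:
            return 0.0
        fp, fn = self.counts()
        return (fp + fn) / len(self.results)

    def drifted(self) -> bool:
        """Only judge once the window is full, to avoid alarms on tiny samples."""
        full = len(self.results) == self.results.maxlen
        return full and self.error_rate() > self.baseline_error + self.tolerance

monitor = DriftMonitor(baseline_error=0.01, window=100, tolerance=0.02)
for _ in range(100):
    monitor.record(predicted_defect=False, actual_defect=False)  # correct "pass" decisions
print(monitor.error_rate(), monitor.drifted())  # 0.0 False
for _ in range(5):
    monitor.record(predicted_defect=True, actual_defect=False)   # false positives
print(monitor.counts(), monitor.drifted())  # (5, 0) True
```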

Tip: AI-powered machine vision works best when companies use high-quality data and monitor system performance over time.

Artificial intelligence is transforming machine vision systems by enabling machines to see, learn, and act with high precision. AI-driven vision boosts quality control, speeds up production, and adapts quickly to new tasks.

- AI systems detect defects beyond human ability, automate inspections, and save costs.

- Edge computing, 3D vision, and digital twins drive real-time, accurate results.

- Studies show AI-powered vision achieves up to 95% accuracy, outpacing traditional methods.

Machine vision adoption is set to rise by 37% in two years. Pilot projects and new technologies show strong potential for even greater impact in the future.

FAQ

What is the main benefit of using AI in machine vision systems?

AI helps machine vision systems find patterns and defects that older systems miss. These systems learn from new data and improve over time. This leads to higher accuracy and better results in many industries.

How does AI-powered machine vision handle new or changing products?

AI-powered systems learn from examples. When they see new products or changes, they adjust their models. This helps them keep working well, even when things change on the production line.

Can AI machine vision systems work in real time?

Yes, AI machine vision systems process images quickly. They make instant decisions, which helps in fast-moving environments like factories or self-driving cars.

What industries use AI-powered machine vision?

Many industries use AI-powered machine vision. These include manufacturing, healthcare, logistics, agriculture, and robotics. Each industry uses these systems to improve quality, safety, and efficiency.

Do AI machine vision systems need a lot of data?

AI systems work best with large, high-quality datasets. More data helps the system learn better and make fewer mistakes. Companies often collect and label images to train these systems.
