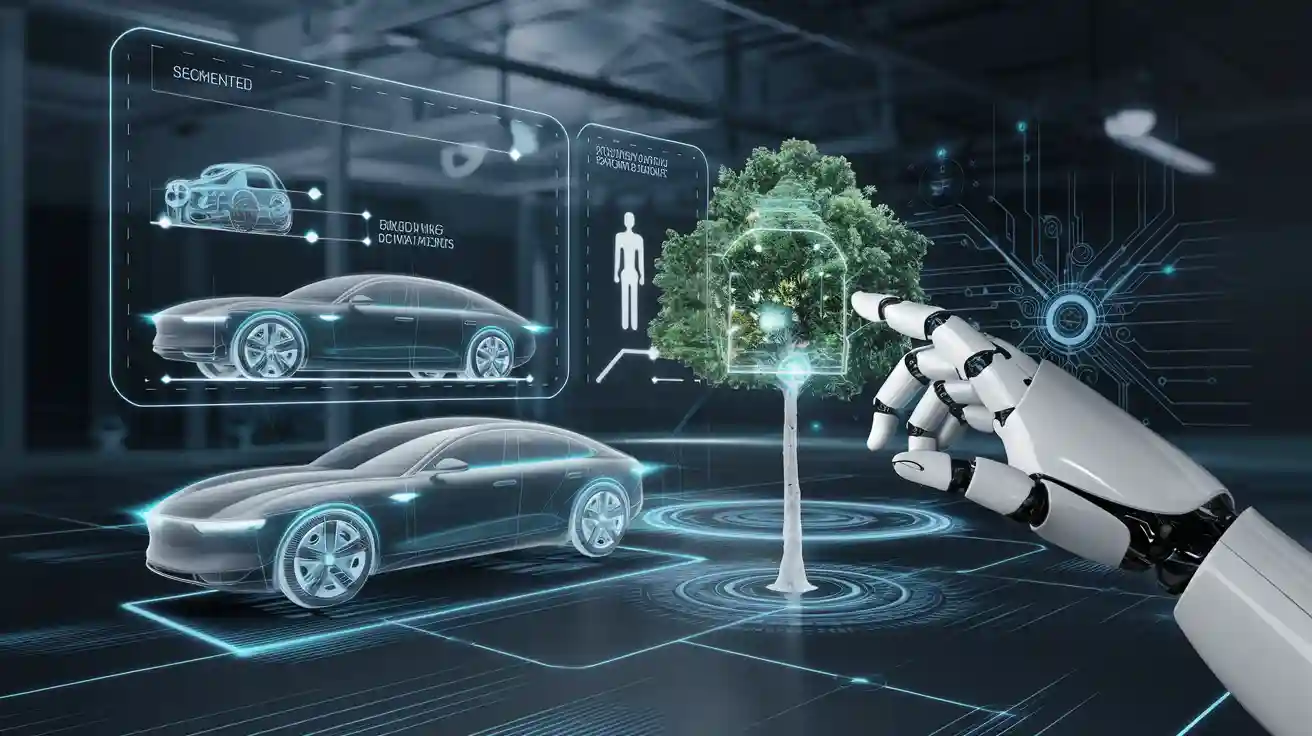

Instance segmentation changes how machine vision systems see a scene. It provides pixel-level precision, letting a system identify each individual object in an image. Masks can also raise recognition accuracy: on the COCO dataset, for example, one reported score improved from 40.2 to 41.0 when masks were used. In autonomous vehicles, instance segmentation helps detect pedestrians and road signs for safer navigation. In healthcare, it isolates regions such as tumors, improving diagnostic accuracy and treatment planning. These capabilities make instance segmentation indispensable for technologies that need a detailed understanding of their surroundings.

Key Takeaways

- Instance segmentation finds the exact pixels that belong to each object in an image. This helps machines perform better in tasks such as self-driving and medical scan analysis.

- Deep learning models such as Mask R-CNN detect objects and outline their exact shapes. This approach works well even in busy or complex images.

- Instance segmentation is important in healthcare, robotics, and retail. It helps doctors isolate regions of interest, lets robots pick up and move objects, and tracks items in stock.

- Real-time tasks rely on optimized models and specialized hardware. Techniques such as pruning and quantization shrink models so they run fast enough for time-critical jobs.

- Emerging approaches such as transformer models and self-supervised learning will make instance segmentation faster and more capable, keeping machine vision growing and improving.

Understanding Instance Segmentation

What is instance segmentation?

Instance segmentation is a computer vision technique that identifies and separates individual objects within an image at the pixel level. Unlike object detection, which only provides bounding boxes, or semantic segmentation, which labels pixels without distinguishing between instances, instance segmentation combines the strengths of both. It assigns unique labels to each object, ensuring precise differentiation even when objects overlap.

Key characteristics:

- Combines object detection and semantic segmentation.

- Distinguishes individual objects, even in crowded scenes.

- Operates at the pixel level for high accuracy.

Deep learning has revolutionized instance segmentation. Algorithms like Mask R-CNN lead the way by using a two-step process: proposing regions of interest and generating masks for each detected object. This approach ensures detailed and accurate segmentation, making it a cornerstone of modern vision systems.

How does instance segmentation work?

Instance segmentation relies on advanced algorithms and architectures to achieve its precision. These models analyze images in multiple stages, ensuring both detection and segmentation of objects. Here’s how it typically works:

- Region Proposal: The model identifies potential areas where objects might exist. For example, Mask R-CNN uses a region proposal network to pinpoint these areas.

- Feature Extraction: The system extracts features from the proposed regions to understand object characteristics.

- Mask Generation: A mask is created for each detected object, outlining its exact shape at the pixel level.
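Region proposal typically produces many overlapping candidate boxes for the same object, and two-stage detectors such as Mask R-CNN commonly thin them out with non-maximum suppression (NMS) before later stages run. The plain-Python sketch below assumes boxes given as `(x1, y1, x2, y2)` tuples with one score each; `box_iou` and `nms` are illustrative names, not a library API.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns indices of the kept boxes."""
    # Visit candidates from highest to lowest score.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap an already-kept box too much.
        if all(box_iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


proposals = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 150)]
scores = [0.9, 0.8, 0.7]
print(nms(proposals, scores))  # [0, 2]
```

The second proposal overlaps the first with an IoU of about 0.82, so it is suppressed; the distant third box survives.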

Modern advancements have introduced innovative methods to enhance efficiency and accuracy:

- Sparse Proposal Network minimizes redundant calculations, speeding up the process.

- Mask2Former uses mask attention mechanisms for better representation.

- CondInst adapts to varying object characteristics with dynamic convolution kernels.

- YOLACT splits the task into two parallel subtasks, generating a set of prototype masks and predicting per-instance mask coefficients, which enables real-time segmentation.
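YOLACT’s two-branch idea can be shown with toy numbers: one branch produces k prototype masks shared by the whole image, the other predicts k coefficients per instance, and each instance mask is the sigmoid of their linear combination, thresholded to a binary mask. The sizes, values, and 0.5 threshold below are illustrative assumptions, and `assemble_mask` is a hypothetical helper; the real model also crops each assembled mask to its predicted box.

```python
import math


def assemble_mask(prototypes, coefficients, threshold=0.5):
    """Combine k prototype masks (k x H x W) with k per-instance coefficients.

    Returns a binary H x W mask: sigmoid(sum_k c_k * P_k) > threshold.
    """
    height, width = len(prototypes[0]), len(prototypes[0][0])
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            logit = sum(c * p[y][x] for c, p in zip(coefficients, prototypes))
            prob = 1.0 / (1.0 + math.exp(-logit))
            row.append(1 if prob > threshold else 0)
        mask.append(row)
    return mask


# Two toy 2x3 prototypes: one responds on the left, one on the right.
prototypes = [
    [[2, 2, -2], [2, 2, -2]],
    [[-2, -2, 2], [-2, -2, 2]],
]
left_object = assemble_mask(prototypes, [1.0, 0.0])
right_object = assemble_mask(prototypes, [0.0, 1.0])
print(left_object)   # [[1, 1, 0], [1, 1, 0]]
print(right_object)  # [[0, 0, 1], [0, 0, 1]]
```

Because the prototypes are computed once per image, each extra instance only costs a short coefficient vector, which is what makes this design fast.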

These techniques ensure that instance segmentation models can handle complex scenarios, such as overlapping objects or cluttered backgrounds, with remarkable precision.

Why is it essential for machine vision systems?

Instance segmentation plays a pivotal role in advancing machine vision systems. Its ability to distinguish individual objects with pixel-level accuracy makes it indispensable in various applications:

- Autonomous Driving: Detects pedestrians, vehicles, and road signs, ensuring safer navigation.

- Medical Imaging: Identifies tumors, organs, or other regions of interest, aiding in diagnostics and treatment planning.

- Robotics: Enables robots to recognize and manipulate objects in dynamic environments.

- Augmented Reality: Enhances user experiences by accurately overlaying virtual objects onto real-world scenes.

Quantitative studies highlight its impact. For instance, experiments on datasets like MS COCO and Cityscapes demonstrate significant improvements in distinguishing individual objects, even in challenging scenarios. Additionally, deep learning models like Mask R-CNN show measurable gains in Intersection over Union (IoU) scores, underscoring their effectiveness.

Instance segmentation transforms how machines perceive and interact with the world. By providing unparalleled precision, it empowers vision systems to operate in complex, real-world environments with confidence.

Comparing Instance Segmentation with Related Concepts

Instance segmentation vs. object detection

Instance segmentation and object detection differ in how much they reveal about each object. Object detection locates objects with bounding boxes but provides no detailed shape or pixel-level precision. Instance segmentation goes further by outlining the exact shape of each object, giving machines a far more precise picture of where each object begins and ends.
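To see what a bounding box discards, the sketch below derives the tightest box from a binary instance mask and compares the two areas. The L-shaped mask is a made-up example, `mask_to_box` is an illustrative name, and box coordinates use inclusive pixel indices.

```python
def mask_to_box(mask):
    """Tightest (x_min, y_min, x_max, y_max) box around the 1-pixels, inclusive.

    Assumes the mask contains at least one foreground pixel.
    """
    ys = [y for y, row in enumerate(mask) for v in row if v]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    return min(xs), min(ys), max(xs), max(ys)


# An L-shaped object: a detector would only report its bounding box.
mask = [
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 1, 1],
]
x0, y0, x1, y1 = mask_to_box(mask)
box_area = (x1 - x0 + 1) * (y1 - y0 + 1)
mask_area = sum(map(sum, mask))
print((x0, y0, x1, y1), box_area, mask_area)  # (0, 0, 3, 3) 16 7
```

The box covers 16 pixels while the object occupies only 7, so more than half of the box is background that a detector cannot tell apart from the object.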

For example, DI-MaskDINO is reported to improve detection by +1.2 box AP and segmentation by +0.9 mask AP on COCO and BDD100K. Similarly, Frustum Voxnet V2 improves detection performance by 11% over its predecessor, Frustum Voxnet V1, while also adding segmentation capabilities. These results suggest that models which learn pixel-level masks can strengthen detection as well, which matters in scenarios that require detailed object recognition.

| Model | Reported improvement | Dataset |
|---|---|---|
| Frustum Voxnet V2 | +11% detection (vs. Frustum Voxnet V1) | RGB-D images |
| DI-MaskDINO | +1.2 box AP, +0.9 mask AP | COCO, BDD100K |

Instance segmentation vs. semantic segmentation

Semantic segmentation assigns pixel-level labels to an image but doesn’t differentiate between individual objects. For instance, if multiple cars appear in an image, semantic segmentation labels all car pixels as “car” without distinguishing between them. Instance segmentation, however, identifies each car as a separate entity, providing object-level identifiers.
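A naive way to turn semantic output into instances is to split each class mask into connected regions. The sketch below, a hypothetical `label_components` helper using 4-connectivity and breadth-first search, separates the two cars in a toy mask. It would, however, merge any cars whose pixels touch, which is exactly the case learned instance segmentation models are designed to handle.

```python
from collections import deque


def label_components(mask):
    """Assign an instance id (1, 2, ...) to each 4-connected region of 1s."""
    height, width = len(mask), len(mask[0])
    labels = [[0] * width for _ in range(height)]
    next_id = 0
    for sy in range(height):
        for sx in range(width):
            if mask[sy][sx] and not labels[sy][sx]:
                # Start a new instance and flood-fill its connected pixels.
                next_id += 1
                labels[sy][sx] = next_id
                queue = deque([(sy, sx)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < height and 0 <= nx < width
                                and mask[ny][nx] and not labels[ny][nx]):
                            labels[ny][nx] = next_id
                            queue.append((ny, nx))
    return labels, next_id


# Semantic output: every "car" pixel is 1, with no notion of which car.
car_pixels = [
    [1, 1, 0, 0, 1],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 1],
]
instances, count = label_components(car_pixels)
print(count)      # 2
print(instances)  # [[1, 1, 0, 0, 2], [1, 1, 0, 0, 2], [0, 0, 0, 0, 2]]
```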

Their evaluation metrics differ as well. Semantic segmentation is usually scored with IoU, pixel-level accuracy, and mean accuracy, while instance segmentation is scored mainly with Average Precision (AP) computed on masks. Panoptic Quality (PQ), the standard metric for panoptic segmentation, also rewards correctly separated instances. These object-level metrics reflect the needs of applications like robotics and autonomous driving, where distinguishing individual objects is crucial.

- Metrics for Semantic Segmentation: IoU, pixel-level accuracy, mean accuracy.

- Metrics for Instance Segmentation: Average Precision (AP) on masks; Panoptic Quality (PQ) when instances are evaluated as part of panoptic segmentation.

- Key Differences: Semantic segmentation labels pixels, while instance segmentation identifies objects with confidence scores.
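IoU, listed above as a semantic segmentation metric, is also what instance metrics use to decide whether a predicted mask matches a ground-truth object. A minimal pure-Python sketch, assuming two same-sized binary masks stored as nested lists (`mask_iou` is an illustrative name, not a library function):

```python
def mask_iou(pred, truth):
    """IoU = |pred AND truth| / |pred OR truth| for two same-sized binary masks."""
    inter = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += bool(p and t)
            union += bool(p or t)
    # Convention assumed here: two empty masks score 0.0.
    return inter / union if union else 0.0


pred = [[1, 1, 0], [1, 1, 0]]
truth = [[0, 1, 1], [0, 1, 1]]
print(round(mask_iou(pred, truth), 3))  # 0.333
```

The masks share 2 pixels and together cover 6, so the IoU is 2/6.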

The role of panoptic segmentation in vision systems

Panoptic segmentation combines the strengths of semantic and instance segmentation. It labels all pixels in an image while distinguishing individual objects. This hybrid approach proves valuable in complex environments where both pixel-level and object-level understanding are necessary.

For example, in traffic management, panoptic segmentation identifies road signs and vehicles while labeling the road surface. This dual capability enhances machine vision systems, enabling them to interpret scenes comprehensively. By bridging the gap between semantic and instance segmentation, panoptic segmentation ensures vision systems operate effectively in diverse scenarios.
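Panoptic Quality (PQ), the standard panoptic metric, matches a predicted segment to a ground-truth segment of the same class when their IoU exceeds 0.5; because panoptic segments do not overlap, each segment can take part in at most one such match. PQ for a class is the summed IoU of the matches divided by |TP| + ½|FP| + ½|FN|, which factors into segmentation quality (mean matched IoU) times recognition quality (an F1-style score). The sketch below handles one class from a precomputed, invented IoU table and ignores the void-pixel rules of the full metric; `panoptic_quality` is a hypothetical name.

```python
def panoptic_quality(iou_table, match_threshold=0.5):
    """Return (PQ, SQ, RQ) for one class.

    iou_table[i][j] is the IoU between predicted segment i and ground-truth
    segment j. Assumes non-overlapping segments, so matches are unique.
    """
    num_pred = len(iou_table)
    num_gt = len(iou_table[0]) if iou_table else 0
    matched = [(i, j) for i in range(num_pred) for j in range(num_gt)
               if iou_table[i][j] > match_threshold]
    tp = len(matched)
    fp = num_pred - tp  # predictions without a match
    fn = num_gt - tp    # ground-truth segments that were missed
    denom = tp + 0.5 * fp + 0.5 * fn
    if denom == 0:
        return 0.0, 0.0, 0.0
    sq = sum(iou_table[i][j] for i, j in matched) / tp if tp else 0.0
    rq = tp / denom
    return sq * rq, sq, rq


table = [
    [0.8, 0.1],  # prediction 0 matches ground truth 0
    [0.0, 0.6],  # prediction 1 matches ground truth 1
    [0.2, 0.0],  # prediction 2 is a false positive
]
print([round(v, 3) for v in panoptic_quality(table)])  # [0.56, 0.7, 0.8]
```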

Applications of Instance Segmentation in Machine Vision Systems

Autonomous driving and traffic management

Instance segmentation plays a critical role in autonomous driving by enabling vehicles to perceive their surroundings with exceptional precision. It identifies and classifies objects like pedestrians, vehicles, and traffic signs at the pixel level, ensuring safer navigation through complex environments. This capability allows autonomous systems to make informed decisions, such as stopping for pedestrians or avoiding obstacles.

Key benefits:

- Accurate identification of vehicles and pedestrians.

- Enhanced detection of traffic signs and road markings.

- Improved tracking of moving objects in dynamic scenarios.

Recent advancements highlight its effectiveness in traffic management. For example, methods like YOLO-World and BoT-SORT have demonstrated their ability to monitor traffic flow by identifying and tracking vehicles and pedestrians. These systems perform well in crowded urban areas, where traditional object detection struggles to separate overlapping objects. By leveraging instance segmentation, you can support smoother traffic operations and reduce the risk of accidents.

Medical imaging and diagnostics

In medical imaging, instance segmentation revolutionizes diagnostics by isolating specific regions of interest, such as tumors or organs, with unparalleled accuracy. This technology enhances the precision of diagnostic tools, enabling healthcare professionals to personalize treatment plans and improve patient outcomes.

Clinical advancements:

- MedSAM, a foundation model for universal medical image segmentation, has been trained on over 1.5 million image-mask pairs. It covers 10 imaging modalities and more than 30 cancer types.

- Comprehensive evaluations on 86 internal and 60 external validation tasks demonstrate its robustness and accuracy compared to task-specific models.

By segmenting medical images at the pixel level, you can identify subtle abnormalities that might be missed by traditional methods. This capability is particularly valuable in detecting early-stage cancers or monitoring disease progression. MedSAM’s versatility across diverse imaging modalities ensures its applicability in various medical fields, from radiology to pathology.

Robotics and object manipulation

Instance segmentation empowers robots to interact with their environment by recognizing and manipulating objects with precision. It enables robots to distinguish individual objects, even in cluttered or overlapping scenarios, which is essential for tasks like assembly, sorting, and navigation.

Empirical studies quantify its impact on robotics performance. For example, the UOIS-SAM model demonstrates significant improvements in overlap and boundary F-measures, enhancing object manipulation accuracy:

| Model variant | Overlap F-measure improvement | Boundary F-measure improvement |
|---|---|---|
| UOIS-SAM with foreground prediction | 13% | 4% |
| UOIS-SAM with heatmap-guided sampling | 10% | 10% |
| Complete UOIS-SAM | 40% (approx.) | 40% (approx.) |

These advancements enable robots to perform complex tasks with greater efficiency. For instance, object-centric representations improve prediction and manipulation capabilities, allowing robots to adapt to dynamic environments. Whether in manufacturing or service industries, instance segmentation ensures robots can handle diverse objects with precision and reliability.

Retail, e-commerce, and inventory management

Instance segmentation transforms how you manage retail, e-commerce, and inventory systems. It enables you to identify individual objects on shelves, in warehouses, or within product catalogs with pixel-level precision. This capability ensures accurate tracking, categorization, and monitoring of items, reducing errors and improving efficiency.

- Inventory Management: You can use segmentation to automate stock counting and detect misplaced items. For example, cameras equipped with segmentation models can scan shelves and identify products that need replenishment. This eliminates manual checks and speeds up operations.

- E-commerce Platforms: Instance segmentation enhances product recognition in online catalogs. It helps you differentiate between similar-looking items, ensuring customers find the exact product they need. This technology also improves search algorithms by providing detailed object data.

- Retail Analytics: By analyzing customer behavior, segmentation helps you optimize store layouts. It tracks how customers interact with products, identifying popular items and areas that need improvement.
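Automated stock counting can start from something as simple as tallying confident instances per product class. The sketch assumes each detection is a `(label, score)` pair, uses a hypothetical 0.5 score cutoff and made-up shelf targets, and leaves out the mapping from instances to shelf positions that a real system would need. `count_instances` and `restock_list` are illustrative names.

```python
from collections import Counter


def count_instances(detections, min_score=0.5):
    """Count detected instances per class, ignoring low-confidence ones."""
    return Counter(label for label, score in detections if score >= min_score)


def restock_list(counts, targets):
    """Return how many units of each product are missing versus its target."""
    return {item: need - counts[item]
            for item, need in targets.items() if counts[item] < need}


shelf = [("cereal", 0.94), ("cereal", 0.88), ("soda", 0.91), ("soda", 0.31)]
counts = count_instances(shelf)
print(restock_list(counts, {"cereal": 2, "soda": 3}))  # {'soda': 2}
```

Because each product instance is segmented separately, two adjacent boxes of cereal count as two items rather than one merged region.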

Recent advancements in segmentation models, such as Mask R-CNN and YOLACT, make these applications more accessible. These models handle overlapping objects and cluttered environments with ease, ensuring accurate results even in challenging scenarios. For example, in a warehouse setting, segmentation can distinguish between stacked boxes and individual items, streamlining logistics.

Tip: Implementing instance segmentation in your retail or e-commerce system can reduce operational costs and improve customer satisfaction. It ensures precise object detection and tracking, making your processes more efficient.

Other applications: Augmented reality, agriculture, and surveillance

Instance segmentation extends its benefits to diverse fields like augmented reality, agriculture, and surveillance. Its ability to identify and separate objects at the pixel level makes it a versatile tool for solving real-world challenges.

- Augmented Reality (AR): Segmentation enhances AR experiences by accurately overlaying virtual objects onto real-world scenes. For example, it allows you to place virtual furniture in your living room or try on clothes virtually. By distinguishing individual objects, segmentation ensures seamless integration of virtual elements into your environment.

- Agriculture: In farming, segmentation helps you monitor crops and detect diseases. It identifies individual plants, enabling you to assess their health and growth. For instance, drones equipped with segmentation models can scan fields and pinpoint areas that need attention, improving yield and reducing waste.

- Surveillance: Segmentation improves security systems by identifying and tracking objects in real-time. It distinguishes between people, vehicles, and other entities, ensuring accurate monitoring. This capability is particularly useful in crowded areas, where traditional object detection struggles to differentiate between overlapping objects.

Innovative models like Mask2Former and CondInst have further enhanced segmentation capabilities in these fields. They provide faster and more accurate results, making it easier for you to adopt this technology in your operations. For example, in surveillance, segmentation can identify suspicious activities by analyzing object movements and interactions.

Note: Whether you’re enhancing AR applications, optimizing farming practices, or improving security systems, instance segmentation offers the precision and reliability you need to succeed.

Technical Workings of an Instance Segmentation Model

The role of Mask R-CNN in instance segmentation

Mask R-CNN plays a pivotal role in advancing instance segmentation models. It combines object detection and segmentation mask generation into a single framework, enabling precise identification of individual objects. The model operates in two stages: first, it proposes regions of interest, and second, it generates segmentation masks for each detected object. This dual approach ensures high accuracy in complex scenarios.
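In the original Mask R-CNN design, the mask branch predicts a small fixed-size probability mask for each region of interest (28×28 in the paper’s FPN head), which is then resized to the region’s box and binarized at 0.5. The sketch below shows only that final step, using nearest-neighbour resizing for simplicity; `paste_mask` is a hypothetical helper, and practical implementations typically interpolate more smoothly (for example, bilinearly).

```python
def paste_mask(probs, box, image_height, image_width, threshold=0.5):
    """Resize an m x m probability mask to `box` and paste it into an image.

    box is (x1, y1, x2, y2) in integer pixels, exclusive at x2/y2, and must
    have positive width and height. Uses nearest-neighbour resizing, then
    binarizes at `threshold`.
    """
    m = len(probs)
    x1, y1, x2, y2 = box
    box_w, box_h = x2 - x1, y2 - y1
    canvas = [[0] * image_width for _ in range(image_height)]
    for y in range(max(y1, 0), min(y2, image_height)):
        for x in range(max(x1, 0), min(x2, image_width)):
            # Map the image pixel back to the nearest cell of the m x m grid.
            my = min((y - y1) * m // box_h, m - 1)
            mx = min((x - x1) * m // box_w, m - 1)
            canvas[y][x] = 1 if probs[my][mx] >= threshold else 0
    return canvas


probs = [[0.9, 0.2], [0.7, 0.6]]  # a toy 2x2 mask instead of 28x28
result = paste_mask(probs, box=(1, 1, 5, 3), image_height=4, image_width=6)
for row in result:
    print(row)
# [0, 0, 0, 0, 0, 0]
# [0, 1, 1, 0, 0, 0]
# [0, 1, 1, 1, 1, 0]
# [0, 0, 0, 0, 0, 0]
```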

Reported results illustrate Mask R-CNN’s effectiveness. In one experiment:

- Training loss decreased to 0.16, showing that the model fit the training data well.

- Validation loss reached 0.25, indicating reasonable generalization to unseen images.

- Precision, recall, and intersection over union (IoU) were used to validate its segmentation accuracy.

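A comparison of measurement errors against YOLOv8 puts these results in context. The table reports mean absolute error (MAE; lower is better): the two models are nearly tied on width, while YOLOv8 is more accurate on length and area.

| Measurement | Mask R-CNN MAE | YOLOv8 MAE |
|---|---|---|
| Width (pixels) | 1.83979 | 1.83972 |
| Length (pixels) | 8.72383 | 6.19958 |
| Area (pixels²) | 168.5477 | 152.9066 |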

Mask R-CNN’s ability to generate accurate segmentation masks makes it indispensable for applications requiring detailed object recognition, such as autonomous driving and medical imaging.
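Errors like those in the MAE table come from measuring each predicted mask, measuring its ground truth, and averaging the absolute differences. The sketch below assumes width and length are a mask’s horizontal and vertical pixel extents and area is its pixel count; the study behind the table may define these measurements differently, and `measure` and `mean_absolute_error` are illustrative names.

```python
def measure(mask):
    """Return (width, length, area) in pixels for a non-empty binary mask.

    Assumed definitions: width = horizontal extent, length = vertical
    extent, area = number of foreground pixels.
    """
    xs = [x for row in mask for x, v in enumerate(row) if v]
    ys = [y for y, row in enumerate(mask) if any(row)]
    return max(xs) - min(xs) + 1, max(ys) - min(ys) + 1, len(xs)


def mean_absolute_error(predicted, actual):
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


# Two toy objects: predicted masks and their ground-truth masks.
pred_masks = [[[1, 1, 1], [1, 1, 1]], [[1], [1], [1]]]
true_masks = [[[1, 1, 0], [1, 1, 0]], [[1], [1]]]
preds = [measure(m) for m in pred_masks]
truths = [measure(m) for m in true_masks]
for name, k in (("width", 0), ("length", 1), ("area", 2)):
    print(name, mean_absolute_error([p[k] for p in preds], [t[k] for t in truths]))
# width 0.5
# length 0.5
# area 1.5
```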

Transformer-based approaches and their impact

Transformer-based approaches have revolutionized instance segmentation models by introducing self-attention mechanisms. These methods excel at capturing complex relationships between pixels, enabling models to focus on relevant spatial and contextual information. Transformers improve segmentation accuracy by addressing challenges like scattered target regions and significant shape variations.

Key benefits of transformer-based methods include:

- Modeling long-distance dependencies between pixels for global context.

- Capturing semantic relationships, enhancing performance on challenging datasets.

- Effectively handling medical image segmentation tasks, where precision is critical.
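The self-attention behind these benefits can be written in a few lines: every pixel (or patch) feature is compared with every other one, so each position can draw on context from anywhere in the image. The sketch below is single-head scaled dot-product attention with the learned query, key, and value projections omitted for brevity, a simplifying assumption since real transformer layers learn separate projection matrices. `self_attention` is an illustrative name.

```python
import math


def self_attention(features):
    """Single-head scaled dot-product self-attention without learned projections.

    features: list of N vectors (one per pixel or patch), each of length d.
    Returns N vectors, each a softmax-weighted mix of all input vectors.
    """
    d = len(features[0])
    outputs = []
    for query in features:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
                  for key in features]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        outputs.append([sum(w * value[i] for w, value in zip(weights, features))
                        for i in range(d)])
    return outputs


# Two similar "object" pixels and one different "background" pixel.
pixels = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
out = self_attention(pixels)
print([round(v, 2) for v in out[0]])  # [0.8, 0.2]
```

The first pixel attends mostly to itself and its look-alike, while still mixing in some context from the dissimilar pixel; with n positions, this all-pairs comparison is also why attention cost grows quadratically.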

Transformers have gained popularity due to their ability to deliver superior results across diverse applications. Their impact extends to tasks like autonomous driving and robotics, where accurate segmentation masks are essential for reliable decision-making.

Dataset requirements for training instance segmentation models

Training instance segmentation models requires high-quality datasets with detailed annotations. These datasets provide the foundation for learning object boundaries, types, and relationships. Popular benchmarks include:

| Dataset | Description | Use case |
|---|---|---|
| COCO | A large collection of images annotated with object boundaries and categories. | General object detection and segmentation. |
| Open Images | A very large image collection with bounding-box and segmentation annotations. | Training on diverse object categories. |
| Cityscapes | Urban street scenes with pixel-level semantic and instance annotations. | Autonomous driving applications. |

These datasets ensure models can generalize across diverse environments. For instance, COCO supports general object detection, while Cityscapes focuses on urban scenarios. Using robust datasets helps you train instance segmentation models that perform well in real-world applications.
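Annotations also need compact storage. COCO, for instance, records object masks as polygons or, for crowd regions, as run-length encoding (RLE). The sketch below is assumed to follow COCO’s uncompressed RLE convention: pixels are read in column-major order, and the counts alternate between runs of 0s and 1s, always starting with a (possibly empty) run of 0s. Treat the details as an assumption and use the official `pycocotools` package in practice; `rle_encode` and `rle_decode` are illustrative names.

```python
def rle_encode(mask):
    """Uncompressed run-length encoding of a 0/1 mask in column-major order."""
    height, width = len(mask), len(mask[0])
    flat = [mask[y][x] for x in range(width) for y in range(height)]
    counts, current, run = [], 0, 0  # the first run always counts 0s
    for value in flat:
        if value == current:
            run += 1
        else:
            counts.append(run)
            current, run = value, 1
    counts.append(run)
    return {"size": [height, width], "counts": counts}


def rle_decode(rle):
    """Invert rle_encode back to a nested-list mask."""
    height, width = rle["size"]
    flat, value = [], 0
    for count in rle["counts"]:
        flat.extend([value] * count)
        value = 1 - value
    # Column-major: pixel (y, x) sits at index x * height + y.
    return [[flat[x * height + y] for x in range(width)] for y in range(height)]


mask = [[0, 1], [0, 1], [1, 1]]
print(rle_encode(mask))  # {'size': [3, 2], 'counts': [2, 4]}
```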

Tip: Selecting the right dataset is crucial for achieving accurate instance segmentation inference. Ensure the dataset aligns with your application’s requirements to maximize model performance.

Evaluation metrics for instance segmentation models

When evaluating instance segmentation models, you need to focus on metrics that measure both detection and segmentation accuracy. These metrics help you understand how well a model identifies objects and outlines their shapes at the pixel level.

- Average Precision (AP): This is the most common metric for evaluating instance segmentation models. AP summarizes the precision-recall curve obtained when predicted masks are matched to ground-truth masks at a given Intersection over Union (IoU) threshold; the COCO benchmark averages it over thresholds from 0.50 to 0.95 in steps of 0.05. A higher AP score means the model finds objects more reliably and generates more accurate masks.

- Intersection over Union (IoU): IoU measures the overlap between a predicted mask and its ground-truth mask, calculated as the area of their intersection divided by the area of their union. Values closer to 1 indicate better segmentation accuracy.

- Panoptic Quality (PQ): This metric multiplies segmentation quality (the average IoU of matched segments) by recognition quality (an F1-style detection score) to give a single score. It evaluates how well a model segments every region of an image while separating individual instances, which makes it particularly useful when both semantic and instance segmentation are required.

- Boundary F-measure: This metric assesses how accurately the model predicts object boundaries. It is especially important in applications like medical imaging, where precise boundary detection can affect diagnosis and treatment.
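To make the AP definition concrete, the sketch below computes AP for one class at a single IoU threshold: predictions are sorted by confidence, each is greedily matched to the best still-unmatched ground-truth object, and precision is integrated over recall with all-point interpolation. It is a simplification that assumes precomputed IoUs and at least one ground-truth object; COCO’s official evaluation uses 101-point interpolation and averages over several IoU thresholds, so its numbers differ slightly. `average_precision` is an illustrative name.

```python
def average_precision(scores, iou_table, num_gt, iou_threshold=0.5):
    """AP for one class at one IoU threshold (assumes num_gt > 0).

    scores[i]: confidence of prediction i.
    iou_table[i][j]: IoU between prediction i and ground-truth object j.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    matched_gt, tp_flags = set(), []
    for i in order:
        # Match to the best still-unmatched ground truth above the threshold.
        best_j, best_iou = None, iou_threshold
        for j in range(num_gt):
            if j not in matched_gt and iou_table[i][j] >= best_iou:
                best_j, best_iou = j, iou_table[i][j]
        if best_j is not None:
            matched_gt.add(best_j)
        tp_flags.append(best_j is not None)

    # Precision and recall after each prediction, in score order.
    precisions, recalls, tp = [], [], 0
    for k, is_tp in enumerate(tp_flags, start=1):
        tp += is_tp
        precisions.append(tp / k)
        recalls.append(tp / num_gt)

    # All-point interpolation: make precision non-increasing from the right,
    # then sum precision times each recall increment.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += p * (r - prev_recall)
        prev_recall = r
    return ap


scores = [0.9, 0.8, 0.6]
iou_table = [[0.7, 0.0], [0.6, 0.1], [0.0, 0.8]]
print(round(average_precision(scores, iou_table, num_gt=2), 3))  # 0.833
```

The second prediction overlaps an object that is already matched, so it counts as a false positive and lowers precision at that point in the ranking.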

Tip: Always choose metrics that align with your application’s goals. For example, if you work on autonomous driving, prioritize metrics like AP and IoU to ensure accurate object detection and segmentation.

By using these metrics, you can effectively evaluate the performance of instance segmentation models and identify areas for improvement.

Challenges and Future Directions

Computational complexity and efficiency

Instance segmentation models often face challenges related to computational complexity, because analyzing images at the pixel level requires significant processing power. Real-time applications like autonomous driving demand high frame rates and low latency, and many current models struggle to meet these requirements. In reported experiments, GLEE-Lite processes only 1.25 frames per second (FPS), with latency exceeding 800 milliseconds. In contrast, TROY-VIS achieves a latency of 40 milliseconds, a 20× reduction.
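The speed figures are consistent with each other. Assuming frames are processed one at a time, per-frame latency is the reciprocal of throughput:

```latex
\begin{aligned}
t_{\text{frame}} &= \frac{1}{\text{FPS}}, &
t_{\text{GLEE-Lite}} &= \frac{1}{1.25\ \text{s}^{-1}} = 0.8\ \text{s} = 800\ \text{ms},\\
\text{speed-up} &= \frac{800\ \text{ms}}{40\ \text{ms}} = 20\times, &
\text{FPS}_{\text{TROY-VIS}} &\approx \frac{1}{0.040\ \text{s}} = 25.
\end{aligned}
```

Under the same assumption, a 40 ms latency corresponds to roughly 25 FPS, close to typical video frame rates.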

To address these challenges, you can explore lightweight architectures and hardware acceleration techniques. These advancements aim to reduce computational demands while maintaining segmentation accuracy. By optimizing processing speed, you ensure that vision systems can operate effectively in real-time scenarios.

The need for large, annotated datasets

Training instance segmentation models requires extensive datasets with detailed annotations. These datasets provide the foundation for accurate object recognition and segmentation. Deep learning methods, in particular, rely on large amounts of annotated data to achieve high performance. A study revealed that accuracy did not saturate even after training with over 1.6 million cell instances. This highlights the importance of robust datasets for improving segmentation accuracy.

However, creating these datasets is a labor-intensive process. Traditional manual annotation methods are inefficient and prone to errors. For example, generating high-precision farm maps requires detailed annotations, which are challenging to produce manually. To overcome this, you can leverage automated annotation tools and crowdsourcing platforms. These approaches streamline the dataset creation process, ensuring high-quality annotations for training segmentation models.


Generalization across diverse environments

Instance segmentation models must generalize across diverse environments to remain effective. Environmental variations, such as lighting, scale, and object types, pose significant challenges. A study on plant phenotyping demonstrated the importance of generalization. Using models like SOLOv2 and YOLOv11, researchers achieved an IoU of 0.593 on the HP Dataset. These models adapted to new plant varieties without requiring extensive annotated datasets, showcasing strong generalization capabilities.

To improve generalization, you should focus on training models with diverse datasets. Incorporating data from various conditions ensures that segmentation models perform well in real-world scenarios. Additionally, zero-shot learning techniques can enhance adaptability, allowing models to handle unseen environments effectively.

| Aspect | Details |
|---|---|
| Study focus | Zero-shot instance segmentation for plant phenotyping across varied environmental conditions. |
| Environmental conditions | Variations in lighting, planting methods, scales, viewing angles, and plant types were assessed. |
| Key findings | The proposed framework adapts to new plant varieties without requiring extensive annotated datasets, showing strong generalization across diverse conditions. |

By addressing these challenges, you can ensure that segmentation models remain robust and reliable, even in complex and dynamic environments.

Real-time processing and latency challenges

Real-time image segmentation demands high-speed processing to analyze images and generate results instantly. This requirement becomes critical in applications like autonomous driving, where every millisecond counts. You need a system that can process data quickly without compromising accuracy. However, achieving this balance presents significant challenges.

One major hurdle is the computational load. Instance segmentation models analyze images at the pixel level, which requires substantial processing power. For example, traditional models like Mask R-CNN often struggle to deliver real-time performance due to their complex architectures. High latency can lead to delays, making these models unsuitable for time-sensitive tasks.

Another challenge involves hardware limitations. Many devices, especially edge systems like drones or mobile robots, lack the computational resources to run advanced segmentation models. This limitation forces you to rely on lightweight architectures or specialized hardware accelerators like GPUs or TPUs.

To overcome these issues, researchers have developed innovative solutions. Techniques like model pruning and quantization reduce the size of segmentation models, enabling faster inference. Additionally, frameworks like TensorRT optimize models for deployment on resource-constrained devices. These advancements ensure that real-time systems can operate efficiently without sacrificing segmentation accuracy.
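Pruning and quantization can be illustrated on a plain list of weights. The sketch shows two common variants, magnitude pruning (zeroing the smallest-magnitude weights) and symmetric per-tensor int8 quantization with a single scale factor. These particular choices are assumptions for illustration, the function names are hypothetical, and toolchains such as TensorRT apply their own calibrated schemes.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:n_prune]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [scale * v for v in q]


weights = [0.82, -0.05, 0.31, -0.64, 0.02, 0.5]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale = quantize_int8(pruned)
print(pruned)  # [0.82, 0.0, 0.0, -0.64, 0.0, 0.5]
print(q)       # [127, 0, 0, -99, 0, 77]
```

Storing `q` as 8-bit integers instead of 32-bit floats cuts weight memory roughly fourfold, at the cost of a rounding error of at most half a scale step per weight. Zeroed weights only save compute when the runtime or hardware can exploit sparsity.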

Tip: If you aim to implement real-time segmentation, consider using optimized models and hardware accelerators. These tools can help you achieve the speed and precision required for your application.

Future advancements in instance segmentation technology

The future of instance segmentation technology looks promising, with several advancements on the horizon. Researchers are exploring ways to enhance model efficiency, accuracy, and adaptability to meet the growing demands of real-world applications.

One exciting development is the integration of transformer-based architectures. These models excel at capturing global context, improving segmentation performance in complex scenarios. For instance, transformers can handle diverse datasets with varying object types and environmental conditions, making them ideal for applications like medical imaging and robotics.

Another area of focus is self-supervised learning. This approach reduces the dependency on large, annotated datasets by allowing models to learn from unlabeled data. You can expect this innovation to lower the cost and time required for training segmentation models.

Real-time processing will also see significant improvements. Emerging techniques like neural architecture search (NAS) automate the design of efficient models, optimizing them for speed and accuracy. Additionally, advancements in hardware, such as AI-specific chips, will further enhance the capabilities of real-time systems.

Note: Staying updated on these advancements can help you leverage the latest technologies in your projects. By adopting cutting-edge methods, you can ensure your segmentation models remain competitive and effective.

Instance segmentation transforms how you interact with machine vision systems by delivering pixel-level precision. Its applications, from autonomous driving to healthcare, drive innovation across industries. For example, in medical imaging, methods like Dilated ResFCN excel in polyp segmentation, achieving high Dice coefficients and low Hausdorff distances. These results highlight its reliability in critical tasks. While challenges like computational demands persist, advancements in models and techniques continue to expand possibilities. As vision systems evolve, you can expect instance segmentation to remain a cornerstone, shaping the future of technology with its unparalleled precision and adaptability.

FAQ

What is the difference between instance segmentation and object detection?

Instance segmentation identifies the exact shape of objects at the pixel level, while object detection only provides bounding boxes around objects. For example, instance segmentation can outline a car’s precise edges, whereas object detection just draws a rectangle around it.

Can instance segmentation work in real-time applications?

Yes, but it depends on the model and hardware. Lightweight models like YOLACT and optimized frameworks like TensorRT enable real-time performance. These tools reduce latency, making instance segmentation suitable for tasks like autonomous driving and robotics.

Why do instance segmentation models need large datasets?

Large datasets provide diverse examples for training, helping models recognize objects in different environments. For instance, datasets like COCO and Cityscapes improve accuracy by offering annotated images with varied lighting, angles, and object types.

How does instance segmentation improve medical imaging?

Instance segmentation isolates specific regions, such as tumors or organs, with pixel-level precision. This helps doctors detect abnormalities early and plan treatments more effectively. Models like MedSAM excel in medical imaging by handling diverse modalities and conditions.

What hardware is best for running instance segmentation models?

High-performance GPUs or TPUs are ideal for running instance segmentation models. These accelerators handle the computational demands of pixel-level analysis. For edge devices, lightweight models and hardware optimizations ensure efficient performance.

Tip: Choose hardware based on your application’s speed and accuracy requirements.
